
Stats For Finance2

Uploaded by Rahul Gattani

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Stats For Finance2

Uploaded by

Rahul GattaniCopyright:

Available Formats

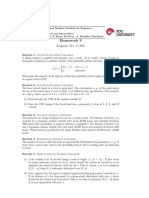

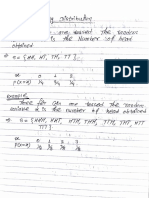

Statistics for Finance

1. Lecture 2: Some Basic Distributions.

We start by extending the notions of skewness and kurtosis to random variables.

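Let X be a random variable with mean $\mu$ and variance $\sigma^2$. The skewness of X is defined as

$$E\left[\left(\frac{X-\mu}{\sigma}\right)^3\right]$$

and the kurtosis as

$$E\left[\left(\frac{X-\mu}{\sigma}\right)^4\right].$$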
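As a minimal sketch (standard-library Python), the two definitions become plug-in estimates when $\mu$ and $\sigma$ are replaced by the sample mean and the sample standard deviation; here we assume the divisor n for the standard deviation, one of several conventions. The function name `skewness_kurtosis` is ours, for illustration only.

```python
import math
import random


def skewness_kurtosis(xs):
    """Plug-in estimates of E[((X - mu)/sigma)^3] and E[((X - mu)/sigma)^4].

    mu and sigma are replaced by the sample mean and the (divisor n)
    sample standard deviation of xs.
    """
    n = len(xs)
    mu = sum(xs) / n
    sigma = math.sqrt(sum((x - mu) ** 2 for x in xs) / n)
    z = [(x - mu) / sigma for x in xs]
    skew = sum(v ** 3 for v in z) / n
    kurt = sum(v ** 4 for v in z) / n
    return skew, kurt


rng = random.Random(0)
normal_sample = [rng.gauss(0.0, 1.0) for _ in range(100_000)]
skew, kurt = skewness_kurtosis(normal_sample)
print(f"skewness ~ {skew:.3f}, kurtosis ~ {kurt:.3f}")  # near 0 and 3 for N(0, 1)
```

For a normal sample the estimates should be close to 0 and 3 respectively.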

The following lemma will be useful. It tells us how to derive the pdf of a function of a random variable whose pdf is known.

Lemma 1. Let X be a continuous random variable with pdf $f_X(x)$, and let g be a differentiable and strictly monotonic function. Then $Y = g(X)$ has the pdf

$$f_Y(y) = f_X\big(g^{-1}(y)\big)\left|\frac{d}{dy}g^{-1}(y)\right|$$

for y such that $y = g(x)$ for some x. Otherwise $f_Y(y) = 0$.

Proof. The proof is an easy application of the change of variables and is left as an

exercise.
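A quick numerical sanity check of Lemma 1, assuming the illustrative choice $g(x) = e^x$ and $X \sim N(0, 1)$: integrating the transformed density over $(0, 2]$ should reproduce $P(X \le \log 2)$. The helper names and the midpoint-rule integrator are our own choices, not part of the notes.

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of N(mu, sigma^2)."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / math.sqrt(2 * math.pi * sigma ** 2)


def transformed_pdf(y, f_x, g_inv, g_inv_prime):
    """Lemma 1: f_Y(y) = f_X(g^{-1}(y)) * |d/dy g^{-1}(y)| for Y = g(X)."""
    return f_x(g_inv(y)) * abs(g_inv_prime(y))


def f_y(y):
    # Y = exp(X) with X ~ N(0, 1): g^{-1}(y) = log y and d/dy g^{-1}(y) = 1/y.
    return transformed_pdf(y, normal_pdf, math.log, lambda t: 1.0 / t)


def midpoint_integral(f, a, b, n=100_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (k + 0.5) * h) for k in range(n))


# P(Y <= 2) from the transformed density versus P(X <= log 2) from the normal cdf.
p_from_lemma = midpoint_integral(f_y, 0.0, 2.0)
p_direct = 0.5 * (1.0 + math.erf(math.log(2.0) / math.sqrt(2.0)))
print(p_from_lemma, p_direct)
```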

The following is also a well-known fact.

Proposition 1. Let $X_1, X_2, \dots, X_n$ be independent random variables. Then

$$\mathrm{Var}(X_1 + \cdots + X_n) = \mathrm{Var}(X_1) + \cdots + \mathrm{Var}(X_n).$$
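Proposition 1 can be illustrated by simulation. The sketch below sums three independent variables with known variances (the three distributions are arbitrary illustrative choices) and compares the sample variance of the sum with the sum of the variances. Note that Python's `random.expovariate` takes the rate, i.e. one over the mean.

```python
import math
import random


def sample_variance(xs):
    """Unbiased sample variance (divisor n - 1)."""
    m = math.fsum(xs) / len(xs)
    return math.fsum((x - m) ** 2 for x in xs) / (len(xs) - 1)


rng = random.Random(1)
n_draws = 100_000
# Independent X1 ~ N(0, 2^2), X2 ~ Uniform(0, 1), X3 ~ exponential with mean 3.
x1 = [rng.gauss(0.0, 2.0) for _ in range(n_draws)]
x2 = [rng.random() for _ in range(n_draws)]
x3 = [rng.expovariate(1.0 / 3.0) for _ in range(n_draws)]
sums = [a + b + c for a, b, c in zip(x1, x2, x3)]

exact = 4.0 + 1.0 / 12.0 + 9.0  # Var(X1) + Var(X2) + Var(X3)
print(sample_variance(sums), exact)
```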

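Some important notions are the characteristic function and the moment generating function of a random variable X. The characteristic function is defined as

$$\phi(t) = E\left[e^{itX}\right], \quad t \in \mathbb{R},$$

and the moment generating function, or Laplace transform, is defined as

$$M(t) = E\left[e^{tX}\right], \quad t \in \mathbb{R}.$$

The importance of these quantities is that they uniquely determine the corresponding distribution: the characteristic function always exists and determines the distribution, and so does the moment generating function whenever it is finite in a neighbourhood of $t = 0$.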


1.1. Normal Distribution.

Normal distributions are probably the most fundamental ones. The reason for this lies in the Central Limit Theorem, which states that if $X_1, X_2, \dots$ are independent and identically distributed random variables with mean zero and variance one, then

$$\frac{X_1 + \cdots + X_n}{\sqrt{n}}$$

converges in distribution to a standard normal distribution.
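A small simulation of the Central Limit Theorem, assuming i.i.d. summands uniform on $(-\sqrt{3}, \sqrt{3})$ (mean zero, variance one): the empirical distribution function of $(X_1 + \cdots + X_n)/\sqrt{n}$ for n = 30 is compared with the N(0, 1) distribution function, written via `math.erf`. Sample sizes and the uniform choice are illustrative.

```python
import math
import random


def standardized_sum(rng, n):
    """(X_1 + ... + X_n)/sqrt(n) for i.i.d. X_i ~ Uniform(-sqrt(3), sqrt(3))."""
    a = math.sqrt(3.0)
    return sum(rng.uniform(-a, a) for _ in range(n)) / math.sqrt(n)


def std_normal_cdf(x):
    """N(0, 1) distribution function expressed through the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


rng = random.Random(2)
n, reps = 30, 20_000
draws = [standardized_sum(rng, n) for _ in range(reps)]
for x in (-1.0, 0.0, 1.0, 2.0):
    empirical = sum(d <= x for d in draws) / reps
    print(f"x={x:+.1f}  empirical={empirical:.4f}  N(0,1) cdf={std_normal_cdf(x):.4f}")
```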

The standard normal distribution, often denoted by N(0, 1), is the distribution with probability density function (pdf)

$$f(x) = \frac{1}{\sqrt{2\pi}} e^{-x^2/2}.$$

The normal distribution with mean $\mu$ and variance $\sigma^2$, often denoted by $N(\mu, \sigma^2)$, has pdf

$$f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}.$$

Let

$$F(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{x} e^{-y^2/2}\, dy$$

be the cumulative distribution function of a N(0, 1) distribution. The q-quantile of the N(0, 1) distribution is $F^{-1}(q)$. The $(1-\alpha)$-quantile of the N(0, 1) distribution is denoted by $z_\alpha$. We will see later on that $z_\alpha$ is widely used for confidence intervals.
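Numerically, $z_\alpha = F^{-1}(1-\alpha)$ can be obtained from `statistics.NormalDist.inv_cdf` in Python's standard library (Python 3.8 or later). The helper `z` below is a hypothetical convenience wrapper, not a library function.

```python
from statistics import NormalDist

std_normal = NormalDist(mu=0.0, sigma=1.0)


def z(alpha):
    """z_alpha: the (1 - alpha)-quantile F^{-1}(1 - alpha) of N(0, 1)."""
    return std_normal.inv_cdf(1.0 - alpha)


for alpha in (0.10, 0.05, 0.025, 0.01):
    print(f"z_{alpha} = {z(alpha):.4f}")  # 1.2816, 1.6449, 1.9600, 2.3263
```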

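The characteristic function of a random variable X with $N(\mu, \sigma^2)$ distribution is

$$E\left[e^{itX}\right] = \int_{-\infty}^{\infty} e^{itx}\, \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}\, dx = e^{i\mu t - \frac{\sigma^2 t^2}{2}}.$$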
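The closed form can be checked by Monte Carlo: average $e^{itX}$ over a simulated $N(\mu, \sigma^2)$ sample and compare with $e^{i\mu t - \sigma^2 t^2/2}$. The parameter values and the helper names below are arbitrary illustrative choices.

```python
import cmath
import random


def normal_char_fn(t, mu, sigma):
    """Closed form E[exp(itX)] = exp(i*mu*t - sigma^2 t^2 / 2) for X ~ N(mu, sigma^2)."""
    return cmath.exp(1j * mu * t - 0.5 * sigma ** 2 * t ** 2)


def empirical_char_fn(t, xs):
    """Monte Carlo estimate of E[exp(itX)] from a sample xs."""
    return sum(cmath.exp(1j * t * x) for x in xs) / len(xs)


rng = random.Random(3)
mu, sigma = 0.5, 1.5
xs = [rng.gauss(mu, sigma) for _ in range(100_000)]
for t in (0.0, 0.5, 1.0):
    print(t, empirical_char_fn(t, xs), normal_char_fn(t, mu, sigma))
```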

1.2. Lognormal Distribution.

Consider a $N(\mu, \sigma^2)$ random variable Z; then the random variable $X = \exp(Z)$ is said to have a lognormal distribution. In other words, X is lognormal if its logarithm $\log X$ has a normal distribution. It is easy to see (for instance from Lemma 1 with $g(z) = e^z$) that the pdf of the lognormal distribution associated to a $N(\mu, \sigma^2)$ distribution is

$$f(x) = \frac{1}{x\sqrt{2\pi\sigma^2}} e^{-\frac{(\log x - \mu)^2}{2\sigma^2}}, \quad x > 0.$$

The median of the above distribution is $\exp(\mu)$, while its mean is $\exp(\mu + \sigma^2/2)$. The mean is larger than the median, which indicates that the lognormal distribution is right skewed. In fact, the larger the variance $\sigma^2$ of the associated normal distribution, the more skewed the lognormal distribution is.
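A simulation sketch, with illustrative parameter values, comparing the sample median and sample mean of $e^Z$, $Z \sim N(\mu, \sigma^2)$, with $\exp(\mu)$ and $\exp(\mu + \sigma^2/2)$ (it uses `statistics.fmean`, available from Python 3.8).

```python
import math
import random
import statistics

rng = random.Random(4)
mu, sigma = 0.1, 0.8  # illustrative parameters of the underlying normal
xs = [math.exp(rng.gauss(mu, sigma)) for _ in range(200_000)]

sample_median = statistics.median(xs)
sample_mean = statistics.fmean(xs)
print(sample_median, math.exp(mu))                 # median ~ exp(mu)
print(sample_mean, math.exp(mu + sigma ** 2 / 2))  # mean ~ exp(mu + sigma^2/2), above the median
```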


Lognormal distributions are particularly important in mathematical finance, as they appear in the modelling of returns via geometric Brownian motion. We sketch this relation below. For a more detailed discussion, see Ruppert, Statistics and Finance: An Introduction, pp. 75-83.

The net return of an asset measures the change in the price of the asset, expressed as a fraction of the initial price. For example, if $P_t$ is the price of the asset at time t, then the net return at time t is defined as

$$R_t = \frac{P_t}{P_{t-1}} - 1 = \frac{P_t - P_{t-1}}{P_{t-1}}.$$

The revenue from holding an asset is

$$\text{revenue} = \text{initial investment} \times \text{net return}.$$

The simple gross return is

$$\frac{P_t}{P_{t-1}} = R_t + 1.$$

The gross return over a period of k units of time is

$$1 + R_t(k) = \frac{P_t}{P_{t-k}} = \frac{P_t}{P_{t-1}} \cdot \frac{P_{t-1}}{P_{t-2}} \cdots \frac{P_{t-k+1}}{P_{t-k}} = (1 + R_t)(1 + R_{t-1}) \cdots (1 + R_{t-k+1}).$$
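With a hypothetical price path (the numbers are made up for illustration), the k-period gross return $P_t/P_{t-k}$ coincides with the product of the one-period gross returns, since the intermediate prices cancel. `math.prod` requires Python 3.8 or later.

```python
import math

# Hypothetical price path P_0, ..., P_5 (illustrative numbers only).
prices = [100.0, 102.0, 99.0, 104.0, 107.0, 105.0]


def net_return(prices, t):
    """R_t = P_t / P_{t-1} - 1."""
    return prices[t] / prices[t - 1] - 1.0


def gross_return_k(prices, t, k):
    """1 + R_t(k) = P_t / P_{t-k}."""
    return prices[t] / prices[t - k]


t, k = 5, 4
# (1 + R_t)(1 + R_{t-1}) ... (1 + R_{t-k+1})
product = math.prod(1.0 + net_return(prices, s) for s in range(t - k + 1, t + 1))
print(gross_return_k(prices, t, k), product)  # equal up to rounding
```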

Often it is easier to work with log returns (also known as continuously compounded returns). These are

$$r_t = \log(1 + R_t) = \log\frac{P_t}{P_{t-1}}.$$

Taking logarithms in the formula for the k-period gross return above, the log return over a period of k units of time is

$$r_t(k) = \log\left(1 + R_t(k)\right) = r_t + \cdots + r_{t-k+1}.$$
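On a hypothetical price path (illustrative numbers), adding the one-period log returns gives the k-period log return $\log(P_t/P_{t-k})$; this additivity is what makes log returns convenient to work with.

```python
import math

prices = [100.0, 102.0, 99.0, 104.0, 107.0, 105.0]  # hypothetical price path


def log_return(prices, t):
    """r_t = log(1 + R_t) = log(P_t / P_{t-1})."""
    return math.log(prices[t] / prices[t - 1])


t, k = 5, 4
r_k = sum(log_return(prices, s) for s in range(t - k + 1, t + 1))  # r_t + ... + r_{t-k+1}
print(r_k, math.log(prices[t] / prices[t - k]))  # the k-period log return, computed both ways
```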

A very common assumption in finance is that the log returns at different times are independent and identically distributed.

By the definition of the log return, the price of the asset at time t is given by the formula

$$P_t = P_0 \exp\left(r_t + \cdots + r_1\right).$$

If the distribution of each $r_i$ is $N(\mu, \sigma^2)$, then, since a sum of independent normal random variables is normal and means and variances add (Proposition 1), the distribution of the sum in the above exponential is $N(t\mu, t\sigma^2)$. Therefore the price of the asset at time t has a lognormal distribution.
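A Monte Carlo sketch of this argument, assuming i.i.d. $N(\mu, \sigma^2)$ log returns with hypothetical parameter values: $\log(P_t/P_0)$ should have mean close to $t\mu$ and variance close to $t\sigma^2$, i.e. $P_t$ is lognormal.

```python
import math
import random
import statistics

rng = random.Random(5)
p0, mu, sigma, t = 100.0, 0.001, 0.02, 50  # hypothetical initial price and log-return parameters
n_paths = 10_000


def simulate_price(rng):
    """P_t = P_0 * exp(r_1 + ... + r_t) with i.i.d. r_i ~ N(mu, sigma^2)."""
    return p0 * math.exp(sum(rng.gauss(mu, sigma) for _ in range(t)))


log_growth = [math.log(simulate_price(rng) / p0) for _ in range(n_paths)]
print(statistics.fmean(log_growth), t * mu)             # ~ t * mu
print(statistics.variance(log_growth), t * sigma ** 2)  # ~ t * sigma^2
```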

Later on, you will see that if the time increments are taken to be infinitesimal, the sum in the above exponential approaches a Brownian motion with drift, and the price of the asset then follows an exponential (geometric) Brownian motion.

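1.3. Exponential, Laplace, Gamma.

The exponential distribution with scale parameter $\theta > 0$, often denoted by $\mathrm{Exp}(\theta)$, has pdf

$$\frac{e^{-x/\theta}}{\theta}, \quad x > 0,$$

with mean $\theta$ and standard deviation $\theta$. The Laplace distribution with mean $\mu$ and scale parameter $\theta$ has pdf

$$\frac{e^{-|x-\mu|/\theta}}{2\theta}, \quad x \in \mathbb{R}.$$

The standard deviation of the Laplace distribution is $\sqrt{2}\,\theta$.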
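A simulation sketch checking these moments. Python's `random.expovariate` expects the rate $1/\theta$, and the Laplace variable is sampled here as $\mu + E_1 - E_2$ with $E_1, E_2$ independent $\mathrm{Exp}(\theta)$, a standard representation we chose as the sampler; parameter values are illustrative.

```python
import math
import random

rng = random.Random(6)
theta, mu, n = 2.0, 1.0, 200_000  # illustrative scale, Laplace mean and sample size


def mean_std(xs):
    """Sample mean and sample standard deviation (divisor n - 1)."""
    m = math.fsum(xs) / len(xs)
    return m, math.sqrt(math.fsum((x - m) ** 2 for x in xs) / (len(xs) - 1))


# Exp(theta) with scale theta; random.expovariate expects the rate 1/theta.
exp_sample = [rng.expovariate(1.0 / theta) for _ in range(n)]

# Laplace(mu, theta) sampled as mu + E1 - E2 with E1, E2 independent Exp(theta).
laplace_sample = [mu + rng.expovariate(1.0 / theta) - rng.expovariate(1.0 / theta) for _ in range(n)]

print(mean_std(exp_sample))      # both entries close to theta
print(mean_std(laplace_sample))  # close to (mu, sqrt(2) * theta)
```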

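The Gamma distribution with scale parameter $\theta$ and shape parameter $\alpha$ has pdf

$$\frac{\theta^{-\alpha}}{\Gamma(\alpha)}\, x^{\alpha-1} e^{-x/\theta}, \quad x > 0,$$

with the normalisation

$$\Gamma(\alpha) = \int_0^\infty u^{\alpha-1} e^{-u}\, du, \quad \alpha > 0,$$

which is the so-called gamma function. Notice that when $\alpha = 1$ one recovers the exponential distribution with scale parameter $\theta$.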
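A minimal sketch of the Gamma pdf, computed on the log scale with `math.lgamma` to avoid overflow: it checks numerically that the density integrates to one, that $\alpha = 1$ gives the exponential density, and that `math.gamma` satisfies $\Gamma(n+1) = n!$. Parameter values and the midpoint integrator are illustrative choices.

```python
import math


def gamma_pdf(x, alpha, theta):
    """Gamma density with shape alpha and scale theta, evaluated on the log scale."""
    if x <= 0.0:
        return 0.0
    log_pdf = (alpha - 1.0) * math.log(x) - x / theta - math.lgamma(alpha) - alpha * math.log(theta)
    return math.exp(log_pdf)


def exp_pdf(x, theta):
    """Exp(theta) density with scale theta."""
    return math.exp(-x / theta) / theta if x > 0.0 else 0.0


def midpoint_integral(f, a, b, m=100_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / m
    return h * sum(f(a + (k + 0.5) * h) for k in range(m))


# The density integrates to (almost exactly) one; the tail beyond 80 is negligible here.
mass = midpoint_integral(lambda x: gamma_pdf(x, 2.5, 1.5), 0.0, 80.0)
print(mass)
# Shape alpha = 1 recovers the exponential density with the same scale.
print(gamma_pdf(0.7, 1.0, 2.0), exp_pdf(0.7, 2.0))
# math.gamma implements Gamma(alpha); for integers, Gamma(n + 1) = n!.
print(math.gamma(5.0), math.factorial(4))
```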


Proposition 2. Consider two independent random variables $X_1, X_2$, gamma distributed with shape parameters $\alpha_1, \alpha_2$ respectively and scale parameters both equal to $\theta$. Then the distribution of $X_1 + X_2$ is gamma with shape parameter $\alpha_1 + \alpha_2$ and scale parameter $\theta$.

The proof uses the following lemma.

Lemma 2. If $X_1, X_2$ are independent random variables with continuous probability density functions $f_{X_1}(x)$ and $f_{X_2}(x)$, then the pdf of $X_1 + X_2$ is

$$f_{X_1+X_2}(x) = \int_{-\infty}^{\infty} f_{X_1}(x-y)\, f_{X_2}(y)\, dy.$$

Proof. Formally, let $f_{X_1+X_2}(x) = P(X_1 + X_2 = x)$. Strictly speaking, the right-hand side is zero in the case of continuous random variables; we think of it, though, as $P(X_1 + X_2 \approx x)$, that is, as a probability per unit length. We then have

$$\begin{aligned}
P(X_1 + X_2 = x) &= \int P(X_1 + X_2 = x,\, X_2 = y)\, dy \\
&= \int P(X_1 = x - y,\, X_2 = y)\, dy \\
&= \int P(X_1 = x - y)\, P(X_2 = y)\, dy \\
&= \int f_{X_1}(x - y)\, f_{X_2}(y)\, dy,
\end{aligned}$$

where in the third equality we used the independence of $X_1, X_2$.
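Lemma 2 can be checked numerically. Assuming, for illustration, $X_1 \sim \mathrm{Exp}(1)$ and $X_2 \sim \mathrm{Exp}(2)$ (scale parameters), a direct calculation of the convolution integral gives $f_{X_1+X_2}(x) = e^{-x/2} - e^{-x}$ for $x > 0$, which the midpoint-rule evaluation below should reproduce. The helper names are ours.

```python
import math


def exp_pdf(x, theta):
    """Exp(theta) density with scale parameter theta."""
    return math.exp(-x / theta) / theta if x > 0.0 else 0.0


def convolution_pdf(x, f1, f2, lo, hi, m=20_000):
    """Midpoint-rule approximation of the integral of f1(x - y) * f2(y) over y in [lo, hi]."""
    h = (hi - lo) / m
    total = 0.0
    for k in range(m):
        y = lo + (k + 0.5) * h
        total += f1(x - y) * f2(y)
    return h * total


def f1(u):
    return exp_pdf(u, 1.0)


def f2(u):
    return exp_pdf(u, 2.0)


for x in (0.5, 1.0, 3.0):
    # Both densities vanish on (-inf, 0], so only y in [0, x] contributes.
    approx = convolution_pdf(x, f1, f2, 0.0, x)
    exact = math.exp(-x / 2.0) - math.exp(-x)
    print(x, approx, exact)
```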

We are now ready for the proof of Proposition 2.


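Proof. For simplicity we will assume that $\theta = 1$. The general case follows along the same lines. Based on the previous lemma we have, for $x > 0$,

$$\begin{aligned}
f_{X_1+X_2}(x) &= \int_{-\infty}^{\infty} f_{X_1}(x-y)\, f_{X_2}(y)\, dy \\
&= \int_0^x \frac{(x-y)^{\alpha_1-1}}{\Gamma(\alpha_1)} e^{-(x-y)}\, \frac{y^{\alpha_2-1}}{\Gamma(\alpha_2)} e^{-y}\, dy \\
&= \frac{x^{\alpha_1+\alpha_2-1}}{\Gamma(\alpha_1)\Gamma(\alpha_2)}\, e^{-x} \int_0^1 (1-y)^{\alpha_1-1} y^{\alpha_2-1}\, dy \\
&= \frac{x^{\alpha_1+\alpha_2-1}}{\Gamma(\alpha_1+\alpha_2)}\, e^{-x}.
\end{aligned}$$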

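The second equality uses that both densities vanish for negative arguments, the third follows from the change of variables $y \mapsto xy$, and the last from the well-known property of Gamma functions that

$$\int_0^1 (1-x)^{\alpha_1-1} x^{\alpha_2-1}\, dx = \frac{\Gamma(\alpha_1)\Gamma(\alpha_2)}{\Gamma(\alpha_1+\alpha_2)}.$$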
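A Monte Carlo sketch of Proposition 2, with illustrative shapes $\alpha_1 = 0.7$, $\alpha_2 = 1.8$ and scale $\theta = 2$: the empirical distribution function of $X_1 + X_2$ at one point is compared with the Gamma$(\alpha_1 + \alpha_2, \theta)$ distribution function obtained by integrating the pdf. Python's `random.gammavariate(alpha, beta)` uses shape `alpha` and scale `beta`. The Beta-integral identity from the proof is also checked, for smooth exponents chosen by us.

```python
import math
import random

rng = random.Random(7)
a1, a2, theta, n = 0.7, 1.8, 2.0, 200_000


def gamma_pdf(x, alpha, theta):
    """Gamma density with shape alpha and scale theta."""
    if x <= 0.0:
        return 0.0
    return math.exp((alpha - 1.0) * math.log(x) - x / theta - math.lgamma(alpha) - alpha * math.log(theta))


def midpoint_integral(f, a, b, m=100_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / m
    return h * sum(f(a + (k + 0.5) * h) for k in range(m))


# random.gammavariate(alpha, beta) samples shape alpha, scale beta.
sums = [rng.gammavariate(a1, theta) + rng.gammavariate(a2, theta) for _ in range(n)]

x = 4.0
empirical_cdf = sum(s <= x for s in sums) / n
gamma_cdf = midpoint_integral(lambda u: gamma_pdf(u, a1 + a2, theta), 0.0, x)
print(empirical_cdf, gamma_cdf)  # X1 + X2 should be Gamma(a1 + a2, theta)

# Beta-integral identity used in the proof, for smooth exponents b1, b2.
b1, b2 = 1.5, 2.5
lhs = midpoint_integral(lambda u: (1.0 - u) ** (b1 - 1.0) * u ** (b2 - 1.0), 0.0, 1.0)
rhs = math.gamma(b1) * math.gamma(b2) / math.gamma(b1 + b2)
print(lhs, rhs)
```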

1.4. $\chi^2$ Distribution.

If X is a N(0, 1) random variable, then the distribution of $X^2$ is called the $\chi^2$ distribution with 1 degree of freedom. Often we denote the $\chi^2$ distribution with one degree of freedom by $\chi^2_1$.

It follows easily from Lemma 1 (applied to $|X|$ and $g(x) = x^2$ on $(0, \infty)$, using the symmetry of the normal density) that the $\chi^2_1$ distribution is actually a Gamma distribution with shape and scale parameters 1/2 and 2, respectively. (Check this!)

If now $U_1, U_2, \dots, U_n$ are independent random variables with $\chi^2_1$ distributions, then the distribution of the sum $U_1 + \cdots + U_n$ is called the $\chi^2$ distribution with n degrees of freedom and is denoted by $\chi^2_n$.

Since $\chi^2_1$ is a Gamma(1/2, 2) distribution, we know from Proposition 2 (applied repeatedly) that the $\chi^2_n$ distribution is actually the Gamma distribution with shape parameter $n/2$ and scale parameter 2.
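A simulation sketch comparing the two descriptions of $\chi^2_n$ (here n = 5, an arbitrary choice): sums of squares of independent N(0, 1) variables, and direct draws from Gamma(n/2, scale 2) via `random.gammavariate`. Both should have mean n and variance 2n, and matching empirical distribution functions.

```python
import math
import random

rng = random.Random(8)
dof, reps = 5, 100_000

# chi^2_n as a sum of n independent chi^2_1 = Z^2 variables ...
chi2 = [sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(dof)) for _ in range(reps)]
# ... and as Gamma(shape n/2, scale 2), sampled directly.
gam = [rng.gammavariate(dof / 2.0, 2.0) for _ in range(reps)]


def mean_var(xs):
    """Sample mean and unbiased sample variance."""
    m = math.fsum(xs) / len(xs)
    return m, math.fsum((x - m) ** 2 for x in xs) / (len(xs) - 1)


print(mean_var(chi2), mean_var(gam))  # both ~ (n, 2n) = (5, 10)
for x in (2.0, 5.0, 9.0):
    print(x, sum(c <= x for c in chi2) / reps, sum(g <= x for g in gam) / reps)
```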

The $\chi^2$ distribution is important because it arises when the variance of a random variable is estimated from the sample variance measured in a sampling process. To see the relevance, compare with the definition of the sample variance given in Definition 4 of Lecture 1.

1.5. t-Distribution.

The t-distribution is important when we want to derive confidence intervals (we will study this later on) for certain parameters of interest when the (population) variance of the underlying distribution is not known. In that case we need a sampling estimate of the (population) variance, which is how the t-distribution is related to the $\chi^2$ distribution.

Let us proceed with the definition of the t-distribution. If $Z \sim N(0, 1)$ and $U \sim \chi^2_n$ are independent, then the distribution of $Z/\sqrt{U/n}$ is called the t-distribution with n degrees of freedom, often denoted by $t_n$.

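The pdf of the t-distribution with n degrees of freedom is given by

$$\frac{\Gamma\left(\frac{n+1}{2}\right)}{\sqrt{n\pi}\,\Gamma\left(\frac{n}{2}\right)}\left(1 + \frac{t^2}{n}\right)^{-(n+1)/2}, \quad t \in \mathbb{R}.$$

To prove this we will need the following lemma.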

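Lemma 3. Let X, Y be two continuous random variables with joint pdf $f_{X,Y}(x, y)$. Then the pdf of the quotient $Z = Y/X$ is given by

$$f_Z(z) = \int_{-\infty}^{\infty} |x|\, f_{X,Y}(x, xz)\, dx.$$

Proof. For $x > 0$ the event $\{Y/X < z\}$ is $\{Y < zx\}$, while for $x < 0$ it is $\{Y > zx\}$. Hence

$$P\left(\frac{Y}{X} < z\right) = \int_0^\infty \int_{-\infty}^{zx} f_{X,Y}(x, y)\, dy\, dx + \int_{-\infty}^0 \int_{zx}^{\infty} f_{X,Y}(x, y)\, dy\, dx.$$

Differentiating both sides with respect to z we get

$$f_{Y/X}(z) = \int_0^\infty x\, f_{X,Y}(x, xz)\, dx - \int_{-\infty}^0 x\, f_{X,Y}(x, xz)\, dx,$$

and the result follows, since $-x = |x|$ for $x < 0$.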
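As an illustration of Lemma 3 (our choice of example), take X and Y independent N(0, 1). Then $\int |x| f_{X,Y}(x, xz)\,dx = \frac{1}{\pi(1+z^2)}$, the Cauchy density; the sketch below evaluates the integral numerically with a midpoint rule truncated to $[-12, 12]$.

```python
import math


def std_normal_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def independent_normals(x, y):
    """Joint pdf of independent X, Y ~ N(0, 1)."""
    return std_normal_pdf(x) * std_normal_pdf(y)


def quotient_pdf(z, joint_pdf, lo=-12.0, hi=12.0, m=48_000):
    """Lemma 3: f_{Y/X}(z) = integral of |x| f_{X,Y}(x, x z) dx, by the midpoint rule on [lo, hi]."""
    h = (hi - lo) / m
    total = 0.0
    for k in range(m):
        x = lo + (k + 0.5) * h
        total += abs(x) * joint_pdf(x, x * z)
    return h * total


for z in (0.0, 1.0, 3.0):
    cauchy = 1.0 / (math.pi * (1.0 + z * z))
    print(z, quotient_pdf(z, independent_normals), cauchy)
```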

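The rest of the derivation of the pdf of the t-distribution (combining Lemma 3 with Lemma 1 applied to $\sqrt{U/n}$) is left as an exercise.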
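A simulation sketch comparing the stated $t_n$ pdf with the definition $Z/\sqrt{U/n}$, for the illustrative choice n = 4. Here $U \sim \chi^2_n$ is drawn as Gamma(n/2, scale 2) with `random.gammavariate`, using the result of Section 1.4; the helper names are ours.

```python
import math
import random


def t_pdf(t, n):
    """t_n density, with the Gamma-function constant computed via math.lgamma for stability."""
    log_c = math.lgamma((n + 1) / 2.0) - math.lgamma(n / 2.0) - 0.5 * math.log(n * math.pi)
    return math.exp(log_c - (n + 1) / 2.0 * math.log1p(t * t / n))


def midpoint_integral(f, a, b, m=100_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / m
    return h * sum(f(a + (k + 0.5) * h) for k in range(m))


rng = random.Random(9)
n, reps = 4, 100_000
# T = Z / sqrt(U / n), with Z ~ N(0, 1) and an independent U ~ chi^2_n = Gamma(n/2, scale 2).
samples = [rng.gauss(0.0, 1.0) / math.sqrt(rng.gammavariate(n / 2.0, 2.0) / n) for _ in range(reps)]

x = 1.5
empirical = sum(abs(s) <= x for s in samples) / reps
from_pdf = midpoint_integral(lambda u: t_pdf(u, n), -x, x)
print(empirical, from_pdf)  # two estimates of P(|T| <= 1.5)
```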

As the number of degrees of freedom n tends to infinity, the $t_n$ distribution approaches the standard normal distribution. To see this one needs the fact that

$$\left(1 + \frac{x^2}{n}\right)^{-(n+1)/2} \approx e^{-\frac{n+1}{2n}x^2} \to e^{-x^2/2}$$

and the asymptotics of the Gamma function

$$\Gamma(n+1) \approx \sqrt{2\pi n}\; n^n e^{-n}.$$

Recall that when n is an integer $\Gamma(n+1) = n!$, so the above is just Stirling's formula; but it also holds when n is not an integer.
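A numerical sketch of both facts: the maximal gap between the $t_n$ and N(0, 1) densities on $[-5, 5]$ shrinks as n grows, and the ratio $\Gamma(x+1)/(\sqrt{2\pi x}\,x^x e^{-x})$ approaches 1 also for non-integer x. The grid and the values of n and x are illustrative.

```python
import math


def t_pdf(t, n):
    """t_n density, constant computed via math.lgamma."""
    log_c = math.lgamma((n + 1) / 2.0) - math.lgamma(n / 2.0) - 0.5 * math.log(n * math.pi)
    return math.exp(log_c - (n + 1) / 2.0 * math.log1p(t * t / n))


def std_normal_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


grid = [i / 100.0 for i in range(-500, 501)]  # the interval [-5, 5]
gaps = {n: max(abs(t_pdf(x, n) - std_normal_pdf(x)) for x in grid) for n in (1, 5, 30, 300)}
for n, gap in gaps.items():
    print(f"n={n:4d}: max |t_n pdf - N(0,1) pdf| on [-5, 5] = {gap:.5f}")


def stirling_ratio(x):
    """Gamma(x + 1) / (sqrt(2 pi x) x^x e^{-x}), computed on the log scale."""
    log_ratio = math.lgamma(x + 1.0) - (0.5 * math.log(2.0 * math.pi * x) + x * math.log(x) - x)
    return math.exp(log_ratio)


for x in (2.5, 10.5, 100.5):
    print(x, stirling_ratio(x))  # tends to 1, also for non-integer x
```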

1.6. F-Distribution.

If U and V are independent with $U \sim \chi^2_{n_1}$ and $V \sim \chi^2_{n_2}$, then the distribution of

$$W = \frac{U/n_1}{V/n_2}$$

is called the F-distribution with $n_1, n_2$ degrees of freedom. F-distributions are used in regression analysis. The pdf of the F-distribution is given by

$$\frac{\Gamma\left(\frac{n_1+n_2}{2}\right)}{\Gamma\left(\frac{n_1}{2}\right)\Gamma\left(\frac{n_2}{2}\right)}\left(\frac{n_1}{n_2}\right)^{n_1/2} x^{n_1/2-1}\left(1 + \frac{n_1}{n_2}x\right)^{-(n_1+n_2)/2}, \quad x > 0.$$

The proof is similar to the derivation of the pdf of the t-distribution and is therefore left as an exercise.
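A simulation sketch of the F pdf for the illustrative choice $n_1 = 4$, $n_2 = 10$: the empirical distribution function of $W = (U/n_1)/(V/n_2)$ at one point is compared with the integral of the stated pdf. The $\chi^2$ variables are drawn as Gamma(n/2, scale 2), as in Section 1.4; helper names are ours.

```python
import math
import random


def f_pdf(x, n1, n2):
    """F(n1, n2) density, evaluated on the log scale."""
    if x <= 0.0:
        return 0.0
    log_pdf = (
        math.lgamma((n1 + n2) / 2.0) - math.lgamma(n1 / 2.0) - math.lgamma(n2 / 2.0)
        + (n1 / 2.0) * math.log(n1 / n2)
        + (n1 / 2.0 - 1.0) * math.log(x)
        - ((n1 + n2) / 2.0) * math.log1p(n1 * x / n2)
    )
    return math.exp(log_pdf)


def midpoint_integral(f, a, b, m=100_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / m
    return h * sum(f(a + (k + 0.5) * h) for k in range(m))


rng = random.Random(10)
n1, n2, reps = 4, 10, 100_000
# W = (U / n1) / (V / n2) with independent U ~ chi^2_{n1}, V ~ chi^2_{n2}.
ws = [(rng.gammavariate(n1 / 2.0, 2.0) / n1) / (rng.gammavariate(n2 / 2.0, 2.0) / n2) for _ in range(reps)]

x = 2.0
empirical_cdf = sum(w <= x for w in ws) / reps
pdf_cdf = midpoint_integral(lambda u: f_pdf(u, n1, n2), 0.0, x)
print(empirical_cdf, pdf_cdf)  # two estimates of P(W <= 2)
```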


1.7. Heavy-Tailed Distributions.

Distributions with higher tail probabilities than a normal distribution with the same mean and variance are called heavy-tailed. In other words, a distribution F with mean zero and variance one is heavy (right) tailed if

$$\frac{1 - F(x)}{x^{-1} e^{-x^2/2}/\sqrt{2\pi}} \gg 1, \quad x \to +\infty,$$

where the denominator is the asymptotic size of the right tail probability of the N(0, 1) distribution. A similar statement holds for the left tail. A heavy-tailed distribution can also be detected from high kurtosis (why?).

A heavy-tailed distribution is more prone to extreme values, often called outliers. In finance applications one is especially concerned with heavy-tailed returns, since the possibility of an extreme negative value can deplete the capital reserves of a firm.

For example, the t-distribution is heavy tailed, since its density is proportional to

$$\frac{1}{\left(1 + x^2/n\right)^{(n+1)/2}} \sim n^{(n+1)/2}\,|x|^{-(n+1)} \gg e^{-x^2/2}$$

for large x.
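A numerical sketch of this comparison for the illustrative case n = 4: the ratio of the $t_4$ density to the N(0, 1) density explodes as x grows, while the normal tail divided by $x^{-1}e^{-x^2/2}/\sqrt{2\pi}$ tends to 1, which is why that expression serves as the reference in the definition above. $t_4$ is not rescaled to unit variance here; rescaling changes only constants, not the polynomial-versus-Gaussian decay.

```python
import math

N_DOF = 4  # degrees of freedom of the illustrative t distribution


def t_pdf(t, n):
    """t_n density, constant computed via math.lgamma."""
    log_c = math.lgamma((n + 1) / 2.0) - math.lgamma(n / 2.0) - 0.5 * math.log(n * math.pi)
    return math.exp(log_c - (n + 1) / 2.0 * math.log1p(t * t / n))


def std_normal_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def density_ratio(x, n=N_DOF):
    """t_n density divided by the N(0, 1) density at x."""
    return t_pdf(x, n) / std_normal_pdf(x)


def normal_tail_ratio(x):
    """(1 - F(x)) for N(0, 1), divided by x^{-1} e^{-x^2/2} / sqrt(2 pi)."""
    tail = 0.5 * math.erfc(x / math.sqrt(2.0))
    return tail / (math.exp(-x * x / 2.0) / (x * math.sqrt(2.0 * math.pi)))


for x in (2.0, 4.0, 6.0, 8.0):
    print(f"x={x}: t_4/normal density ratio = {density_ratio(x):.3g}, normal tail ratio = {normal_tail_ratio(x):.4f}")
```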

A particular class of heavy-tailed distributions are the Pareto distributions, or simply power-law distributions. These are distributions with pdf

$$\frac{L(x)}{|x|^{\alpha}}.$$

Here L(x) is a slowly varying function, that is, a function with the property that, for any constant $c > 0$,

$$\frac{L(cx)}{L(x)} \to 1, \quad x \to \infty.$$

Examples of slowly varying functions are $\log x$ and $\exp\left((\log x)^{\beta}\right)$ for $\beta < 1$. In the Pareto distribution $\alpha > 1$, or $\alpha = 1$ if L(x) decays sufficiently fast.
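A small numerical illustration of slow variation, with the arbitrary choices $c = 10$ and $\beta = 0.5$: the ratio $L(cx)/L(x)$ drifts towards 1 for $\log x$ and $\exp((\log x)^{1/2})$ (slowly, in the second case), whereas for the power $x^{1/2}$ it stays at $\sqrt{10}$, so $x^{1/2}$ is not slowly varying.

```python
import math

C = 10.0  # the constant c in L(cx)/L(x); an arbitrary choice
xs = [1e2, 1e4, 1e8, 1e16, 1e100]


def log_l(x):
    return math.log(x)


def exp_power_l(x, beta=0.5):
    """exp((log x)^beta); beta = 0.5 is an arbitrary illustrative value below 1."""
    return math.exp(math.log(x) ** beta)


def power(x):
    """x^{1/2}, which is NOT slowly varying: the ratio stays at c^{1/2}."""
    return math.sqrt(x)


def ratios(L):
    """L(c x) / L(x) along the points in xs."""
    return [L(C * x) / L(x) for x in xs]


for name, L in (("log x", log_l), ("exp((log x)^0.5)", exp_power_l), ("x^0.5", power)):
    print(name, [round(r, 4) for r in ratios(L)])
```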

1.8. Multivariate Normal Distributions.

The random vector $(X_1, X_2, \dots, X_n) \in \mathbb{R}^n$ is said to have a multivariate normal distribution if for every constant vector $(c_1, c_2, \dots, c_n) \in \mathbb{R}^n$, the distribution of $c_1 X_1 + \cdots + c_n X_n$ is normal.

Multivariate normal distributions facilitate the modelling of portfolios. A portfolio is a weighted average of assets, with weights that sum up to one. The weights specify what fraction of the total investment is allocated to each asset.
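A sketch for a hypothetical two-asset portfolio (all numbers are made up): if the asset returns are multivariate normal with mean vector $(\mu_1, \mu_2)$ and covariance matrix G, the portfolio return $w_1 X_1 + w_2 X_2$ is normal with mean $w_1\mu_1 + w_2\mu_2$ and variance $\sum_{i,j} w_i G_{ij} w_j$. The Monte Carlo check draws the returns through a hand-written 2x2 Cholesky factor of G, our choice of sampler.

```python
import math
import random

# Hypothetical two-asset example: mean returns and covariance matrix G.
means = [0.01, 0.02]
G = [[0.04, 0.006],
     [0.006, 0.09]]
weights = [0.6, 0.4]  # fractions of the total investment; they sum to one

# Portfolio return w1 X1 + w2 X2 is normal with mean <w, mu> and variance w^T G w.
port_mean = sum(w * m for w, m in zip(weights, means))
port_var = sum(weights[i] * G[i][j] * weights[j] for i in range(2) for j in range(2))

# Monte Carlo check: sample (X1, X2) via the lower-triangular Cholesky factor of G.
l11 = math.sqrt(G[0][0])
l21 = G[1][0] / l11
l22 = math.sqrt(G[1][1] - l21 ** 2)
rng = random.Random(11)
draws = []
for _ in range(200_000):
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    x1 = means[0] + l11 * z1
    x2 = means[1] + l21 * z1 + l22 * z2
    draws.append(weights[0] * x1 + weights[1] * x2)

mc_mean = math.fsum(draws) / len(draws)
mc_var = math.fsum((d - mc_mean) ** 2 for d in draws) / (len(draws) - 1)
print(port_mean, mc_mean)
print(port_var, mc_var)
```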

As with one-dimensional normal distributions, the multivariate normal distribution is determined by the mean vector $(\mu_1, \dots, \mu_n)$, with $\mu_i = E[X_i]$, and the covariance matrix, that is, the matrix $G = (G_{ij})$ with entries

$$G_{ij} = E[X_i X_j] - \mu_i \mu_j.$$

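For simplicity let us assume that $\mu_i = 0$ for $i = 1, 2, \dots, n$, and that G is invertible. Then the multivariate normal density function is given by

$$\frac{1}{(2\pi)^{n/2}(\det G)^{1/2}} \exp\left(-\frac{1}{2}\langle x, G^{-1}x\rangle\right), \quad x \in \mathbb{R}^n,$$

where $G^{-1}$ is the inverse of G, $\det G$ the determinant of G, and $\langle \cdot, \cdot \rangle$ denotes the inner product in $\mathbb{R}^n$; that is, if $x, y \in \mathbb{R}^n$, then $\langle x, y \rangle = \sum_{i=1}^n x_i y_i$.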
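A minimal sketch of the density formula for n = 2, with the 2x2 inverse and determinant written out by hand (the function name and the example matrices are illustrative). With a diagonal G the density factorises into one-dimensional normal densities, and with a correlated G a Riemann sum over a large square should be close to one.

```python
import math


def mvn_pdf_2d(x, G):
    """Zero-mean bivariate normal density exp(-<x, G^{-1} x>/2) / ((2 pi)^{n/2} (det G)^{1/2}), n = 2."""
    (a, b), (c, d) = G
    det = a * d - b * c
    if det <= 0.0 or a <= 0.0:
        raise ValueError("G must be positive definite")
    inv = [[d / det, -b / det], [-c / det, a / det]]
    quad = sum(x[i] * inv[i][j] * x[j] for i in range(2) for j in range(2))  # <x, G^{-1} x>
    return math.exp(-0.5 * quad) / (2.0 * math.pi * math.sqrt(det))  # (2 pi)^{n/2} = 2 pi for n = 2


def normal_pdf(x, sigma):
    """One-dimensional N(0, sigma^2) density."""
    return math.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


# With a diagonal G the density factorises into one-dimensional normal densities.
G_diag = [[4.0, 0.0], [0.0, 0.25]]
print(mvn_pdf_2d([1.0, -0.3], G_diag), normal_pdf(1.0, 2.0) * normal_pdf(-0.3, 0.5))

# With correlation, a midpoint Riemann sum over [-8, 8]^2 is close to one.
G_corr = [[1.0, 0.8], [0.8, 1.0]]
h = 0.05
grid = [-8.0 + (k + 0.5) * h for k in range(320)]
total = h * h * sum(mvn_pdf_2d([u, v], G_corr) for u in grid for v in grid)
print(total)
```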

1.9. Exercises.

1. (Lognormal Distributions) Compute the moments of a lognormal random variable $X = e^Z$, with $Z \sim N(\mu, \sigma^2)$. In particular, compute its mean, standard deviation, skewness and kurtosis.

2. (Exponentials and Poissons) Exponential distributions often arise in the study of arrivals, queueing, etc., modelling the time between arrivals. Let T be the time of the first arrival in a system and suppose it has an exponential distribution with scale parameter 1. Compute $P(T > t + s \mid T > s)$.

Suppose that the number of arrivals in a system is a Poisson process with parameter $\lambda$. That is,

$$P\left(\#\{\text{arrivals before time } t\} = k\right) = e^{-\lambda t}\,\frac{(\lambda t)^k}{k!},$$

and arrivals in disjoint time intervals are independent.

What is the distribution of the interarrival times?

3. Compute the moments of a gamma distribution with shape parameter $\alpha$ and scale parameter 1.

4. Prove Lemma 1.

5. Prove that the $\chi^2_1$ distribution is a Gamma distribution with shape parameter 1/2 and scale parameter 2 (equivalently, rate parameter 1/2).

6. Show that a heavy-tailed distribution has high kurtosis.

7. Derive the pdf of the t-distribution.

8. Compute the kurtosis of (a) N(0, 1), (b) an exponential distribution with scale parameter one.

9. (Mixture models) Let $X_1 \sim N(0, \sigma_1^2)$ and $X_2 \sim N(0, \sigma_2^2)$ be two independent normal random variables. Let also Y be another independent random variable with a Bernoulli distribution, that is, $P(Y = 1) = p$ and $P(Y = 0) = 1 - p$ for some $0 < p < 1$.

A. What are the mean and the variance of $Z = Y X_1 + (1 - Y) X_2$?

B. Are the tails of its distribution heavier or lighter when compared to a normal distribution with the same mean and variance? If so, for what values of p? Also give an intuitive explanation of your mathematical derivation.

Use some statistical software to draw the distribution of the mixture model for some values of the parameter p, and compare it (especially the tails) with that of the corresponding normal.
