Hebbian Learning

Uploaded by

Piyush Bansal

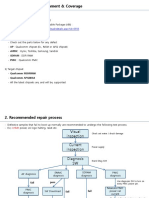

Next Assignment

Train a counter-propagation network to compute the 7-segment coder and its inverse.

You may use the code in /cs/cs152/book:

counter.c

readme

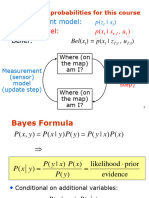

ART1 Demo

Increasing vigilance causes the network to be more selective, to introduce a new prototype when the fit is not good.

Try different patterns.

Hebbian Learning

Hebb's Postulate

"When an axon of cell A is near enough to excite a cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A's efficiency, as one of the cells firing B, is increased."

D. O. Hebb, 1949

[Figure: cell A connected to cell B.]

In other words, when a weight contributes to firing a neuron, the weight is increased. (If the neuron doesn't fire, then it is not.)

Colloquial Corollaries

Use it or lose it.

Colloquial Corollaries?

Absence makes the heart grow fonder.

Generalized Hebb Rule

When a weight contributes to firing a neuron, the weight is increased.

When a weight acts to inhibit the firing of a neuron, the weight is decreased.

Flavors of Hebbian Learning

Unsupervised: weights are strengthened by the actual response to a stimulus.

Supervised: weights are strengthened by the desired response.

Unsupervised Hebbian Learning (aka Associative Learning)

Simple Associative Network

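$$a = \mathrm{hardlim}(wp + b) = \mathrm{hardlim}(wp - 0.5)$$

Input:

$$p = \begin{cases} 1, & \text{stimulus} \\ 0, & \text{no stimulus} \end{cases}$$

Output:

$$a = \begin{cases} 1, & \text{response} \\ 0, & \text{no response} \end{cases}$$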

Banana Associator

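Unconditioned Stimulus:

$$p^0 = \begin{cases} 1, & \text{shape detected} \\ 0, & \text{shape not detected} \end{cases}$$

Conditioned Stimulus:

$$p = \begin{cases} 1, & \text{smell detected} \\ 0, & \text{smell not detected} \end{cases}$$

Didn't Pavlov anticipate this?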

Banana Associator Demo

can be toggled

Unsupervised Hebb Rule

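$$w_{ij}(q) = w_{ij}(q-1) + \alpha\, a_i(q)\, p_j(q)$$

Here $a_i(q)$ is the actual response and $p_j(q)$ is the input.

Vector Form:

$$\mathbf{W}(q) = \mathbf{W}(q-1) + \alpha\, \mathbf{a}(q)\, \mathbf{p}^T(q)$$

Training Sequence:

$$\mathbf{p}(1),\ \mathbf{p}(2),\ \ldots,\ \mathbf{p}(Q)$$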

Learning Banana Smell

Initial Weights:

$$w^0 = 1, \quad w(0) = 0$$

Here $w^0$ weights the unconditioned input (shape) and $w$ weights the conditioned input (smell).

Training Sequence:

$$\{p^0(1) = 0,\ p(1) = 1\},\ \{p^0(2) = 1,\ p(2) = 1\},\ \ldots$$

With $\alpha = 1$:

$$w(q) = w(q-1) + a(q)\, p(q)$$

First Iteration (sight fails, smell present):

$$a(1) = \mathrm{hardlim}(w^0 p^0(1) + w(0)\, p(1) - 0.5) = \mathrm{hardlim}(1 \cdot 0 + 0 \cdot 1 - 0.5) = 0 \quad \text{(no banana)}$$

$$w(1) = w(0) + a(1)\, p(1) = 0 + 0 \cdot 1 = 0$$

Example

Second Iteration (sight works, smell present):

$$a(2) = \mathrm{hardlim}(w^0 p^0(2) + w(1)\, p(2) - 0.5) = \mathrm{hardlim}(1 \cdot 1 + 0 \cdot 1 - 0.5) = 1 \quad \text{(banana)}$$

$$w(2) = w(1) + a(2)\, p(2) = 0 + 1 \cdot 1 = 1$$

Third Iteration (sight fails, smell present):

$$a(3) = \mathrm{hardlim}(w^0 p^0(3) + w(2)\, p(3) - 0.5) = \mathrm{hardlim}(1 \cdot 0 + 1 \cdot 1 - 0.5) = 1 \quad \text{(banana)}$$

$$w(3) = w(2) + a(3)\, p(3) = 1 + 1 \cdot 1 = 2$$

Banana will now be detected if either sensor works.
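The three iterations above can be replayed in a few lines of Python. This is a minimal sketch, not code from the slides: the names `hardlim` and `train_banana` are made up for illustration, and the bias of -0.5, the fixed weight of 1 on the shape input, and the learning rate of 1 are taken from the worked example.

```python
def hardlim(n):
    """Hard-limit transfer function: 1 if n >= 0, otherwise 0."""
    return 1 if n >= 0 else 0


def train_banana(sequence, w0=1.0, w=0.0, alpha=1.0, bias=-0.5):
    """Apply the unsupervised Hebb rule to a sequence of (p0, p) pairs.

    p0 is the unconditioned (shape) input with fixed weight w0.
    p is the conditioned (smell) input with learned weight w.
    Returns a list of (response, weight) after each presentation.
    """
    history = []
    for p0, p in sequence:
        a = hardlim(w0 * p0 + w * p + bias)  # actual response
        w = w + alpha * a * p                # Hebb update on the smell weight
        history.append((a, w))
    return history


# Sight fails, sight works, sight fails; smell is present every time.
banana_history = train_banana([(0, 1), (1, 1), (0, 1)])
print(banana_history)  # [(0, 0.0), (1, 1.0), (1, 2.0)]
```

After the second presentation the smell weight alone exceeds the 0.5 threshold, which is why the third response is 1 even though the shape sensor fails.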

Problems with Hebb Rule

Weights can become arbitrarily large.

There is no mechanism for weights to decrease.

Hebb Rule with Decay

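$$\mathbf{W}(q) = \mathbf{W}(q-1) + \alpha\, \mathbf{a}(q)\, \mathbf{p}^T(q) - \gamma\, \mathbf{W}(q-1)$$

$$\mathbf{W}(q) = (1-\gamma)\, \mathbf{W}(q-1) + \alpha\, \mathbf{a}(q)\, \mathbf{p}^T(q)$$

This keeps the weight matrix from growing without bound, which can be demonstrated by setting both $a_i$ and $p_j$ to 1:

$$w_{ij}^{max} = (1-\gamma)\, w_{ij}^{max} + \alpha\, a_i\, p_j$$

$$w_{ij}^{max} = (1-\gamma)\, w_{ij}^{max} + \alpha$$

$$w_{ij}^{max} = \frac{\alpha}{\gamma}$$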
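The bound $\alpha/\gamma$ can be checked numerically by iterating the decay rule with the response and the input both held at 1. The sketch below is illustrative only; the function name `hebb_decay_limit`, the step count, and the sample values $\alpha = 1$, $\gamma = 0.1$ are assumptions chosen for the demonstration.

```python
def hebb_decay_limit(alpha, gamma, steps=200, w=0.0):
    """Iterate w <- (1 - gamma) * w + alpha, i.e. the decay rule with a_i = p_j = 1."""
    for _ in range(steps):
        w = (1.0 - gamma) * w + alpha
    return w


w_limit = hebb_decay_limit(alpha=1.0, gamma=0.1)
print(w_limit)    # approaches alpha / gamma
print(1.0 / 0.1)  # the predicted bound
```

The iterate climbs toward the fixed point of the recursion and never passes it, so the weight stays bounded no matter how often the stimulus is repeated.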

Banana Associator with Decay

Example: Banana Associator, with $\gamma = 0.1$ and $\alpha = 1$

First Iteration (sight fails, smell present):

$$a(1) = \mathrm{hardlim}(w^0 p^0(1) + w(0)\, p(1) - 0.5) = \mathrm{hardlim}(1 \cdot 0 + 0 \cdot 1 - 0.5) = 0 \quad \text{(no banana)}$$

$$w(1) = w(0) + a(1)\, p(1) - 0.1\, w(0) = 0 + 0 \cdot 1 - 0.1(0) = 0$$

Second Iteration (sight works, smell present):

$$a(2) = \mathrm{hardlim}(w^0 p^0(2) + w(1)\, p(2) - 0.5) = \mathrm{hardlim}(1 \cdot 1 + 0 \cdot 1 - 0.5) = 1 \quad \text{(banana)}$$

$$w(2) = w(1) + a(2)\, p(2) - 0.1\, w(1) = 0 + 1 \cdot 1 - 0.1(0) = 1$$

Example

Third Iteration (sight fails, smell present):

$$a(3) = \mathrm{hardlim}(w^0 p^0(3) + w(2)\, p(3) - 0.5) = \mathrm{hardlim}(1 \cdot 0 + 1 \cdot 1 - 0.5) = 1 \quad \text{(banana)}$$

$$w(3) = w(2) + a(3)\, p(3) - 0.1\, w(2) = 1 + 1 \cdot 1 - 0.1(1) = 1.9$$
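The same three presentations can be replayed with the decay term included. This is a sketch under the example's stated values (decay rate 0.1, learning rate 1, bias -0.5); the function names are hypothetical.

```python
def hardlim(n):
    """Hard-limit transfer function: 1 if n >= 0, otherwise 0."""
    return 1 if n >= 0 else 0


def train_banana_decay(sequence, w0=1.0, w=0.0, alpha=1.0, gamma=0.1, bias=-0.5):
    """Hebb rule with decay on the smell weight; returns the weight after each step."""
    weights = []
    for p0, p in sequence:
        a = hardlim(w0 * p0 + w * p + bias)
        # The decay term uses the previous weight w(q-1), as in the matrix rule.
        w = w + alpha * a * p - gamma * w
        weights.append(w)
    return weights


decay_weights = train_banana_decay([(0, 1), (1, 1), (0, 1)])
print(decay_weights)  # weights after iterations 1, 2 and 3
```

The third weight is 1.9 rather than 2 because a tenth of the previous weight is subtracted on every update.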

General Decay Demo

[Figure: demo panels labeled "no decay" and "larger decay"; the limiting weight is $w_{ij}^{max} = \alpha / \gamma$.]

Problem of Hebb with Decay

Associations will be lost if stimuli are not occasionally presented.

If $a_i = 0$, then

$$w_{ij}(q) = (1-\gamma)\, w_{ij}(q-1)$$

If $\gamma = 0.1$, this becomes

$$w_{ij}(q) = (0.9)\, w_{ij}(q-1)$$

Therefore the weight decays by 10% at each iteration where there is no stimulus.

[Figure: weight decay plot; horizontal axis 0 to 30, vertical axis 0 to 3.]
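The forgetting effect is easy to reproduce: with no response, each iteration multiplies the weight by $1 - \gamma$. In the sketch below the starting weight of 3.0 and the 30 iterations are assumed values for illustration, and `decay_without_stimulus` is a hypothetical helper name.

```python
def decay_without_stimulus(w, gamma=0.1, steps=30):
    """Return the weight trace when a_i = 0 at every step: w <- (1 - gamma) * w."""
    trace = [w]
    for _ in range(steps):
        w = (1.0 - gamma) * w
        trace.append(w)
    return trace


forget_trace = decay_without_stimulus(w=3.0)  # assumed starting weight
print(forget_trace[1])   # weight after one silent iteration (10% smaller)
print(forget_trace[-1])  # weight after 30 silent iterations
```

Because the decay is geometric, the association fades quickly unless the stimulus is presented again.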

Solution to Hebb Decay Problem

Don't decay weights when there is no stimulus.

We have seen rules like this (Instar).

Instar (Recognition Network)

Instar Operation

$$a = \mathrm{hardlim}(\mathbf{W}\mathbf{p} + b) = \mathrm{hardlim}({}_{1}\mathbf{w}^{T}\mathbf{p} + b)$$

The instar will be active when

$${}_{1}\mathbf{w}^{T}\mathbf{p} \ge -b$$

or

$${}_{1}\mathbf{w}^{T}\mathbf{p} = \|{}_{1}\mathbf{w}\|\, \|\mathbf{p}\| \cos\theta \ge -b$$

For normalized vectors, the largest inner product occurs when the angle between the weight vector and the input vector is zero, that is, when the input vector is equal to the weight vector.

The rows of a weight matrix represent patterns to be recognized.

Vector Recognition

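If we set

$$b = -\|{}_{1}\mathbf{w}\|\, \|\mathbf{p}\|$$

the instar will only be active when $\theta = 0$.

If we set

$$b > -\|{}_{1}\mathbf{w}\|\, \|\mathbf{p}\|$$

the instar will be active for a range of angles.

As $b$ is increased, more patterns (over a wider range of $\theta$) will activate the instar.

[Figure: region of input vectors around ${}_{1}\mathbf{w}$ that activate the instar.]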

Instar Rule

$$w_{ij}(q) = w_{ij}(q-1) + \alpha\, a_i(q)\, p_j(q)$$

Hebb with Decay

Modify so that learning and forgetting will only occur when the neuron is active (Instar Rule):

$$w_{ij}(q) = w_{ij}(q-1) + \alpha\, a_i(q)\, p_j(q) - \gamma\, a_i(q)\, w_{ij}(q-1)$$

or, with the decay rate $\gamma$ set equal to the learning rate $\alpha$:

$$w_{ij}(q) = w_{ij}(q-1) + \alpha\, a_i(q)\, \big(p_j(q) - w_{ij}(q-1)\big)$$

Vector Form:

$${}_{i}\mathbf{w}(q) = {}_{i}\mathbf{w}(q-1) + \alpha\, a_i(q)\, \big(\mathbf{p}(q) - {}_{i}\mathbf{w}(q-1)\big)$$

Graphical Representation

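For the case where the instar is active ($a_i = 1$):

$${}_{i}\mathbf{w}(q) = {}_{i}\mathbf{w}(q-1) + \alpha\, \big(\mathbf{p}(q) - {}_{i}\mathbf{w}(q-1)\big)$$

or

$${}_{i}\mathbf{w}(q) = (1-\alpha)\, {}_{i}\mathbf{w}(q-1) + \alpha\, \mathbf{p}(q)$$

For the case where the instar is inactive ($a_i = 0$):

$${}_{i}\mathbf{w}(q) = {}_{i}\mathbf{w}(q-1)$$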
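The two cases above follow directly from the vector form of the instar rule, as the following NumPy sketch shows. The helper name `instar_update`, the input vector, and the learning rate of 0.5 are illustrative assumptions, not values from the slides.

```python
import numpy as np


def instar_update(w, p, a, alpha):
    """Instar rule for one weight row: w(q) = w(q-1) + alpha * a * (p - w(q-1))."""
    w = np.asarray(w, dtype=float)
    p = np.asarray(p, dtype=float)
    return w + alpha * a * (p - w)


w_prev = np.array([0.0, 0.0])
p_in = np.array([1.0, -1.0])  # hypothetical input vector

w_active = instar_update(w_prev, p_in, a=1, alpha=0.5)    # moves halfway toward p
w_inactive = instar_update(w_prev, p_in, a=0, alpha=0.5)  # unchanged
print(w_active, w_inactive)
```

With $\alpha = 1$ an active instar copies the input in a single step; smaller values move the weight vector part of the way along the line joining it to the input.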

Instar Demo

[Figure: instar demo showing the weight vector, the input vector, and the weight change $\Delta\mathbf{W}$.]

Outstar (Recall Network)

Outstar Operation

Suppose we want the outstar to recall a certain pattern $\mathbf{a}^*$ whenever the input $p = 1$ is presented to the network. Let

$$\mathbf{W} = \mathbf{a}^*$$

Then, when $p = 1$,

$$\mathbf{a} = \mathrm{satlins}(\mathbf{W}p) = \mathrm{satlins}(\mathbf{a}^* \cdot 1) = \mathbf{a}^*$$

and the pattern is correctly recalled.

The columns of a weight matrix represent patterns to be recalled.

Outstar Rule

$$w_{ij}(q) = w_{ij}(q-1) + \alpha\, a_i(q)\, p_j(q) - \gamma\, p_j(q)\, w_{ij}(q-1)$$

For the instar rule we made the weight decay term of the Hebb rule proportional to the output of the network.

For the outstar rule we make the weight decay term proportional to the input of the network.

If we make the decay rate $\gamma$ equal to the learning rate $\alpha$,

$$w_{ij}(q) = w_{ij}(q-1) + \alpha\, \big(a_i(q) - w_{ij}(q-1)\big)\, p_j(q)$$

Vector Form:

$$\mathbf{w}_j(q) = \mathbf{w}_j(q-1) + \alpha\, \big(\mathbf{a}(q) - \mathbf{w}_j(q-1)\big)\, p_j(q)$$

Example - Pineapple Recall

Definitions

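$$\mathbf{a} = \mathrm{satlins}(\mathbf{W}^0 \mathbf{p}^0 + \mathbf{W} p)$$

$$\mathbf{W}^0 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$$

$$\mathbf{p}^0 = \begin{bmatrix} \text{shape} \\ \text{texture} \\ \text{weight} \end{bmatrix}$$

$$p = \begin{cases} 1, & \text{if a pineapple can be seen} \\ 0, & \text{otherwise} \end{cases}$$

$$\mathbf{p}^{pineapple} = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}$$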

Outstar Demo

Iteration 1

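Training sequence, with $\alpha = 1$:

$$\left\{ \mathbf{p}^0(1) = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix},\ p(1) = 1 \right\},\ \left\{ \mathbf{p}^0(2) = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix},\ p(2) = 1 \right\},\ \ldots$$

$$\mathbf{a}(1) = \mathrm{satlins}\left( \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} + \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} 1 \right) = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} \quad \text{(no response)}$$

$$\mathbf{w}_1(1) = \mathbf{w}_1(0) + \big(\mathbf{a}(1) - \mathbf{w}_1(0)\big)\, p(1) = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} + \left( \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} - \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} \right) 1 = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}$$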

Convergence

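$$\mathbf{a}(2) = \mathrm{satlins}\left( \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix} + \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} 1 \right) = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix} \quad \text{(measurements given)}$$

$$\mathbf{w}_1(2) = \mathbf{w}_1(1) + \big(\mathbf{a}(2) - \mathbf{w}_1(1)\big)\, p(2) = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} + \left( \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix} - \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} \right) 1 = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}$$

$$\mathbf{a}(3) = \mathrm{satlins}\left( \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} + \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix} 1 \right) = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix} \quad \text{(measurements recalled)}$$

$$\mathbf{w}_1(3) = \mathbf{w}_1(2) + \big(\mathbf{a}(3) - \mathbf{w}_1(2)\big)\, p(3) = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix} + \left( \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix} - \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix} \right) 1 = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}$$

Once the weights equal the measurement vector, further presentations leave them unchanged, so the rule has converged.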
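The pineapple recall iterations can be traced with a short NumPy sketch of the outstar rule. The helper names `satlins` and `outstar_step` are hypothetical, `satlins` is implemented here as clipping to the range [-1, 1], and the measurement vector uses the values as they appear in this document.

```python
import numpy as np


def satlins(n):
    """Symmetric saturating linear transfer function: clip to [-1, 1]."""
    return np.clip(n, -1.0, 1.0)


def outstar_step(W0, p0, w1, p, alpha=1.0):
    """One presentation: compute the output, then apply the outstar rule to column w1."""
    a = satlins(W0 @ p0 + w1 * p)
    w1_new = w1 + alpha * (a - w1) * p
    return a, w1_new


W0 = np.eye(3)
pineapple = np.array([1.0, 1.0, 1.0])  # measurement vector as shown in this document
w1 = np.zeros(3)

# Measurements absent, measurements given, measurements absent; pineapple seen each time.
sequence = [(np.zeros(3), 1.0), (pineapple, 1.0), (np.zeros(3), 1.0)]
outputs = []
for p0, p in sequence:
    a, w1 = outstar_step(W0, p0, w1, p)
    outputs.append(a)

print(outputs[2])  # recalled measurements on the third presentation
print(w1)          # learned column of W
```

With a learning rate of 1 the column is learned in a single presentation, so the third output reproduces the measurements from sight alone.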

Supervised Hebbian Learning

Linear Associator

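$$\mathbf{a} = \mathbf{W}\mathbf{p}$$

$$a_i = \sum_{j=1}^{R} w_{ij}\, p_j$$

Training Set:

$$\{\mathbf{p}_1, \mathbf{t}_1\},\ \{\mathbf{p}_2, \mathbf{t}_2\},\ \ldots,\ \{\mathbf{p}_Q, \mathbf{t}_Q\}$$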

Hebb Rule

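$$w_{ij}^{new} = w_{ij}^{old} + \alpha\, f_i(a_{iq})\, g_j(p_{jq})$$

Here $f_i(a_{iq})$ is the postsynaptic signal and $g_j(p_{jq})$ is the presynaptic signal.

Simplified Form (actual output $a_{iq}$, input pattern $p_{jq}$):

$$w_{ij}^{new} = w_{ij}^{old} + \alpha\, a_{iq}\, p_{jq}$$

Supervised Form (desired output $t_{iq}$):

$$w_{ij}^{new} = w_{ij}^{old} + t_{iq}\, p_{jq}$$

Matrix Form:

$$\mathbf{W}^{new} = \mathbf{W}^{old} + \mathbf{t}_q\, \mathbf{p}_q^T$$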

Batch Operation

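With zero initial weights:

$$\mathbf{W} = \mathbf{t}_1 \mathbf{p}_1^T + \mathbf{t}_2 \mathbf{p}_2^T + \cdots + \mathbf{t}_Q \mathbf{p}_Q^T = \sum_{q=1}^{Q} \mathbf{t}_q\, \mathbf{p}_q^T$$

Matrix Form:

$$\mathbf{W} = \begin{bmatrix} \mathbf{t}_1 & \mathbf{t}_2 & \cdots & \mathbf{t}_Q \end{bmatrix} \begin{bmatrix} \mathbf{p}_1^T \\ \mathbf{p}_2^T \\ \vdots \\ \mathbf{p}_Q^T \end{bmatrix} = \mathbf{T}\mathbf{P}^T$$

$$\mathbf{T} = \begin{bmatrix} \mathbf{t}_1 & \mathbf{t}_2 & \cdots & \mathbf{t}_Q \end{bmatrix}, \qquad \mathbf{P} = \begin{bmatrix} \mathbf{p}_1 & \mathbf{p}_2 & \cdots & \mathbf{p}_Q \end{bmatrix}$$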

Performance Analysis

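$$\mathbf{a} = \mathbf{W}\mathbf{p}_k = \left( \sum_{q=1}^{Q} \mathbf{t}_q\, \mathbf{p}_q^T \right) \mathbf{p}_k = \sum_{q=1}^{Q} \mathbf{t}_q\, (\mathbf{p}_q^T \mathbf{p}_k)$$

Case I, input patterns are orthonormal (orthogonal and of unit length):

$$\mathbf{p}_q^T \mathbf{p}_k = \begin{cases} 1, & q = k \\ 0, & q \ne k \end{cases}$$

Therefore the network output equals the target:

$$\mathbf{a} = \mathbf{W}\mathbf{p}_k = \mathbf{t}_k$$

Case II, input patterns are normalized, but not orthogonal:

$$\mathbf{a} = \mathbf{W}\mathbf{p}_k = \mathbf{t}_k + \sum_{q \ne k} \mathbf{t}_q\, (\mathbf{p}_q^T \mathbf{p}_k)$$

The sum over $q \ne k$ is the error term.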
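Case I can be verified numerically: when the columns of $\mathbf{P}$ are orthonormal, the batch Hebb weights reproduce every target exactly. The prototypes and targets below are hypothetical values chosen for illustration, and `hebb_weights` is an assumed helper name.

```python
import numpy as np


def hebb_weights(P, T):
    """Batch supervised Hebb rule with zero initial weights: W = T P^T."""
    return T @ P.T


# Hypothetical orthonormal prototypes (columns of P) and targets (columns of T).
P = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [0.0, 0.0]])
T = np.array([[1.0, -1.0]])

W = hebb_weights(P, T)
recall = W @ P  # column k is the network output for prototype k
print(W)
print(recall)
```

If the columns of `P` were normalized but not orthogonal, `recall` would differ from `T` by the cross terms in Case II.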

Example

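Banana and Apple:

$$\mathbf{p}_1 = \begin{bmatrix} -1 \\ 1 \\ -1 \end{bmatrix} \ \text{(banana)}, \qquad \mathbf{p}_2 = \begin{bmatrix} 1 \\ 1 \\ -1 \end{bmatrix} \ \text{(apple)}$$

Normalized Prototype Patterns:

$$\left\{ \mathbf{p}_1 = \begin{bmatrix} -0.5774 \\ 0.5774 \\ -0.5774 \end{bmatrix},\ \mathbf{t}_1 = \begin{bmatrix} -1 \end{bmatrix} \right\}, \qquad \left\{ \mathbf{p}_2 = \begin{bmatrix} 0.5774 \\ 0.5774 \\ -0.5774 \end{bmatrix},\ \mathbf{t}_2 = \begin{bmatrix} 1 \end{bmatrix} \right\}$$

Weight Matrix (Hebb Rule):

$$\mathbf{W} = \mathbf{T}\mathbf{P}^T = \begin{bmatrix} -1 & 1 \end{bmatrix} \begin{bmatrix} -0.5774 & 0.5774 & -0.5774 \\ 0.5774 & 0.5774 & -0.5774 \end{bmatrix} = \begin{bmatrix} 1.1548 & 0 & 0 \end{bmatrix}$$

Tests:

Banana:

$$\mathbf{W}\mathbf{p}_1 = \begin{bmatrix} 1.1548 & 0 & 0 \end{bmatrix} \begin{bmatrix} -0.5774 \\ 0.5774 \\ -0.5774 \end{bmatrix} = \begin{bmatrix} -0.6668 \end{bmatrix}$$

Apple:

$$\mathbf{W}\mathbf{p}_2 = \begin{bmatrix} 1.1548 & 0 & 0 \end{bmatrix} \begin{bmatrix} 0.5774 \\ 0.5774 \\ -0.5774 \end{bmatrix} = \begin{bmatrix} 0.6668 \end{bmatrix}$$

The outputs have the correct sign but not the target magnitude, because the prototypes are normalized but not orthogonal.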
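The banana and apple test can be reproduced with NumPy. This sketch assumes the sign pattern banana $= [-1, 1, -1]$, apple $= [1, 1, -1]$ with targets $-1$ and $1$; that pattern is consistent with the weight matrix $[1.1548\ 0\ 0]$ given in the example but is an assumption here. Exact arithmetic gives $2/\sqrt{3} \approx 1.1547$ and $2/3 \approx 0.6667$, which differ from the four-digit figures in the example only by rounding.

```python
import numpy as np

# Assumed prototype signs; each vector is normalized to unit length.
p1 = np.array([-1.0, 1.0, -1.0]) / np.sqrt(3)  # banana
p2 = np.array([1.0, 1.0, -1.0]) / np.sqrt(3)   # apple

P = np.column_stack([p1, p2])
T = np.array([[-1.0, 1.0]])  # targets t1 = -1 (banana), t2 = 1 (apple)

W = T @ P.T  # Hebb rule with zero initial weights
a1 = W @ p1  # response to banana
a2 = W @ p2  # response to apple
print(W, a1, a2)
```

The inner product of the two prototypes is 1/3, and that cross term is what pulls each output away from its target.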

Pseudoinverse Rule - (1)

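Performance Index (mean-squared error):

$$F(\mathbf{W}) = \sum_{q=1}^{Q} \|\mathbf{t}_q - \mathbf{W}\mathbf{p}_q\|^2$$

The goal is

$$\mathbf{W}\mathbf{p}_q = \mathbf{t}_q, \qquad q = 1, 2, \ldots, Q$$

Matrix Form:

$$\mathbf{W}\mathbf{P} = \mathbf{T}$$

$$\mathbf{T} = \begin{bmatrix} \mathbf{t}_1 & \mathbf{t}_2 & \cdots & \mathbf{t}_Q \end{bmatrix}, \qquad \mathbf{P} = \begin{bmatrix} \mathbf{p}_1 & \mathbf{p}_2 & \cdots & \mathbf{p}_Q \end{bmatrix}$$

$$F(\mathbf{W}) = \|\mathbf{T} - \mathbf{W}\mathbf{P}\|^2 = \|\mathbf{E}\|^2$$

$$\|\mathbf{E}\|^2 = \sum_{i} \sum_{j} e_{ij}^2$$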

Pseudoinverse Rule - (2)

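Minimize:

$$F(\mathbf{W}) = \|\mathbf{T} - \mathbf{W}\mathbf{P}\|^2 = \|\mathbf{E}\|^2$$

If an inverse exists for $\mathbf{P}$, $F(\mathbf{W})$ can be made zero:

$$\mathbf{W}\mathbf{P} = \mathbf{T} \quad \Rightarrow \quad \mathbf{W} = \mathbf{T}\mathbf{P}^{-1}$$

When an inverse does not exist, $F(\mathbf{W})$ can be minimized using the pseudoinverse:

$$\mathbf{W} = \mathbf{T}\mathbf{P}^{+}$$

$$\mathbf{P}^{+} = (\mathbf{P}^T \mathbf{P})^{-1} \mathbf{P}^T$$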

Relationship to the Hebb Rule

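Hebb Rule:

$$\mathbf{W} = \mathbf{T}\mathbf{P}^T$$

Pseudoinverse Rule:

$$\mathbf{W} = \mathbf{T}\mathbf{P}^{+}, \qquad \mathbf{P}^{+} = (\mathbf{P}^T \mathbf{P})^{-1} \mathbf{P}^T$$

If the prototype patterns are orthonormal:

$$\mathbf{P}^T \mathbf{P} = \mathbf{I}$$

$$\mathbf{P}^{+} = (\mathbf{P}^T \mathbf{P})^{-1} \mathbf{P}^T = \mathbf{P}^T$$

so the two rules give the same weights.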

Example

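$$\left\{ \mathbf{p}_1 = \begin{bmatrix} -1 \\ 1 \\ -1 \end{bmatrix},\ \mathbf{t}_1 = \begin{bmatrix} -1 \end{bmatrix} \right\}, \qquad \left\{ \mathbf{p}_2 = \begin{bmatrix} 1 \\ 1 \\ -1 \end{bmatrix},\ \mathbf{t}_2 = \begin{bmatrix} 1 \end{bmatrix} \right\}$$

$$\mathbf{W} = \mathbf{T}\mathbf{P}^{+} = \begin{bmatrix} -1 & 1 \end{bmatrix} \left( \begin{bmatrix} -1 & 1 \\ 1 & 1 \\ -1 & -1 \end{bmatrix} \right)^{+}$$

$$\mathbf{P}^{+} = (\mathbf{P}^T \mathbf{P})^{-1} \mathbf{P}^T = \begin{bmatrix} 3 & 1 \\ 1 & 3 \end{bmatrix}^{-1} \begin{bmatrix} -1 & 1 & -1 \\ 1 & 1 & -1 \end{bmatrix} = \begin{bmatrix} -0.5 & 0.25 & -0.25 \\ 0.5 & 0.25 & -0.25 \end{bmatrix}$$

$$\mathbf{W} = \mathbf{T}\mathbf{P}^{+} = \begin{bmatrix} -1 & 1 \end{bmatrix} \begin{bmatrix} -0.5 & 0.25 & -0.25 \\ 0.5 & 0.25 & -0.25 \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 \end{bmatrix}$$

$$\mathbf{W}\mathbf{p}_1 = \begin{bmatrix} 1 & 0 & 0 \end{bmatrix} \begin{bmatrix} -1 \\ 1 \\ -1 \end{bmatrix} = \begin{bmatrix} -1 \end{bmatrix}, \qquad \mathbf{W}\mathbf{p}_2 = \begin{bmatrix} 1 & 0 & 0 \end{bmatrix} \begin{bmatrix} 1 \\ 1 \\ -1 \end{bmatrix} = \begin{bmatrix} 1 \end{bmatrix}$$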
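The pseudoinverse example can be checked with NumPy. The sketch assumes the sign pattern $\mathbf{p}_1 = [-1, 1, -1]$, $\mathbf{p}_2 = [1, 1, -1]$, $t_1 = -1$, $t_2 = 1$, which is consistent with $\mathbf{P}^T\mathbf{P}$ having 3 on the diagonal and 1 off it, and with $\mathbf{W} = [1\ 0\ 0]$. It computes $\mathbf{P}^{+}$ from the formula and compares it with `numpy.linalg.pinv`.

```python
import numpy as np

# Columns of P are the (unnormalized) prototypes; signs are an assumption.
P = np.array([[-1.0, 1.0],
              [1.0, 1.0],
              [-1.0, -1.0]])
T = np.array([[-1.0, 1.0]])

gram = P.T @ P                     # P^T P
P_plus = np.linalg.inv(gram) @ P.T  # (P^T P)^{-1} P^T
W = T @ P_plus                     # pseudoinverse rule

print(gram)
print(P_plus)
print(W)
print(W @ P)  # network outputs for both prototypes
```

Unlike the Hebb rule on the same non-orthogonal patterns, the pseudoinverse rule reproduces both targets exactly.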

Autoassociative Memory

$$\mathbf{p}_1 = \begin{bmatrix} -1 & 1 & 1 & 1 & 1 & -1 & 1 & -1 & -1 & -1 & -1 & 1 & 1 & -1 & \cdots & 1 & -1 \end{bmatrix}^T$$

$$\mathbf{W} = \mathbf{p}_1 \mathbf{p}_1^T + \mathbf{p}_2 \mathbf{p}_2^T + \mathbf{p}_3 \mathbf{p}_3^T$$

Tests

50% Occluded

67% Occluded

Noisy Patterns (7 pixels)

Supervised Hebbian Demo

Spectrum of Hebbian Learning

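Basic Supervised Rule:

$$\mathbf{W}^{new} = \mathbf{W}^{old} + \mathbf{t}_q\, \mathbf{p}_q^T$$

Supervised with Learning Rate:

$$\mathbf{W}^{new} = \mathbf{W}^{old} + \alpha\, \mathbf{t}_q\, \mathbf{p}_q^T$$

Smoothing:

$$\mathbf{W}^{new} = \mathbf{W}^{old} + \alpha\, \mathbf{t}_q\, \mathbf{p}_q^T - \gamma\, \mathbf{W}^{old} = (1-\gamma)\, \mathbf{W}^{old} + \alpha\, \mathbf{t}_q\, \mathbf{p}_q^T$$

Delta Rule (target $\mathbf{t}_q$ minus actual $\mathbf{a}_q$):

$$\mathbf{W}^{new} = \mathbf{W}^{old} + \alpha\, (\mathbf{t}_q - \mathbf{a}_q)\, \mathbf{p}_q^T$$

Unsupervised:

$$\mathbf{W}^{new} = \mathbf{W}^{old} + \alpha\, \mathbf{a}_q\, \mathbf{p}_q^T$$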
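The rules in this spectrum differ only in which signal multiplies the input pattern and whether a decay term is present. The NumPy sketch below writes each one as a single update function. The function names are hypothetical, and the actual output is assumed to come from a linear layer, $\mathbf{a}_q = \mathbf{W}^{old}\mathbf{p}_q$, which the slides do not specify for this summary.

```python
import numpy as np


def supervised_hebb(W, t, p, alpha=1.0):
    """W_new = W_old + alpha * t p^T (alpha = 1 gives the basic supervised rule)."""
    return W + alpha * np.outer(t, p)


def smoothing(W, t, p, alpha, gamma):
    """W_new = (1 - gamma) * W_old + alpha * t p^T."""
    return (1.0 - gamma) * W + alpha * np.outer(t, p)


def delta_rule(W, t, p, alpha):
    """W_new = W_old + alpha * (t - a) p^T, with a = W_old p (assumed linear output)."""
    a = W @ p
    return W + alpha * np.outer(t - a, p)


def unsupervised_hebb(W, p, alpha):
    """W_new = W_old + alpha * a p^T, with a = W_old p (assumed linear output)."""
    a = W @ p
    return W + alpha * np.outer(a, p)


# Hypothetical single-neuron example with a two-element input.
p = np.array([1.0, 0.0])
t = np.array([1.0])
W_basic = supervised_hebb(np.zeros((1, 2)), t, p)
W_smooth = smoothing(W_basic, t, p, alpha=1.0, gamma=0.1)
W_delta = delta_rule(np.zeros((1, 2)), t, p, alpha=0.5)
W_unsup = unsupervised_hebb(W_basic, p, alpha=1.0)
print(W_basic, W_smooth, W_delta, W_unsup)
```

Only the delta rule stops changing the weights once the output matches the target; the Hebbian variants keep adding to them on every presentation.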
