01PE M1000e12

Uploaded by Ryan Belicov

PowerEdge M1000e Administration and Configuration

A blade server is a server chassis housing multiple thin, modular electronic circuit boards, known as server blades. Each blade is a server in its own right, often dedicated to a single application. The blades are literally servers on a card, containing processors, memory, integrated network controllers, an optional Fibre Channel host bus adapter (HBA), and other input/output (I/O) ports. Blade servers allow more processing power in less rack space, simplifying cabling and reducing power consumption.

The PowerEdge M1000e solution is designed for the corporate, public, and small/medium business (SMB) markets, for customers that require high performance, high availability, and manageability in the most rack-dense form factor.

Blade servers are implemented in:

- Virtualization environments
- SAN applications (Exchange, database)
- High-performance cluster and grid environments
- Front-end applications (web apps, Citrix, terminal services)
- File sharing access
- Web page serving and caching
- SSL encryption of web communication
- Audio and video streaming

Like most clustering applications, blade servers can also be managed to include load balancing and failover capabilities.

The PowerEdge M1000e Modular Server Enclosure solution is a fully modular blade enclosure optimized for use with all Dell M-series blades. The PowerEdge M1000e supports server modules; network, storage, and cluster interconnect modules (switches and pass-through modules); a high-performance, highly available passive midplane that connects server modules to the infrastructure components; power supplies; fans; an integrated KVM (iKVM); and Chassis Management Controllers (CMC). The PowerEdge M1000e uses redundant and hot-pluggable components throughout to provide maximum uptime. These technologies are packed into a highly available, rack-dense package that integrates into standard Dell or third-party 1000 mm deep racks.

Dell provides complete, scale-on-demand switch designs. With additional I/O slots and switch options, you have the flexibility you need to meet increasing demands for I/O consumption. In addition, Dell's FlexIO modular switch technology lets you easily scale to provide additional uplink and stacking functionality, with no need to waste your current investment on a rip-and-replace upgrade.

- Flexibility and scalability to minimize total cost of ownership (TCO).
- Best for environments needing to consolidate computing resources to maximize efficiency.
- Ultra-dense servers that are easy to deploy and manage, while minimizing energy and cooling consumption.

Note: The PowerEdge M1000e chassis has been created as a replacement for the current 1855/1955 chassis unit. The existing 8G/9G blades will not fit or run in the 10G chassis, nor will the 10G blades fit or run in the 8G/9G chassis.

It is all about efficiency. Built from the ground up, the M1000e delivers one of the most energy-efficient, flexible, and manageable blade server products on the market. The M1000e is designed to support future generations of blade technologies regardless of processor or chipset architecture. Built on Dell's Energy Smart technology, the M1000e can help customers increase capacity, lower operating costs, and deliver better performance per watt. The key areas of interest are power delivery and power management.

Shared power takes advantage of the large number of resources in the modular server, distributing power across the system without the excess margin required in dedicated rack-mount servers and switches. The PowerEdge M1000e introduces an advanced power budgeting feature, controlled by the CMC and negotiated in conjunction with the Integrated Dell Remote Access Controller (iDRAC) on every server module. Before any server module powers up, its iDRAC performs a power budget inventory for the module, based on its configuration of CPUs, memory, I/O, and local storage. Once this number is generated, the iDRAC communicates the power budget to the CMC, which confirms the availability of power at the system level, based on a total chassis power inventory that includes power supplies, iKVM, I/O modules, fans, and server modules. Because the CMC controls when every modular system element powers on, it can set power policies at the system level.

In coordination with the CMC, iDRAC hardware constantly monitors actual power consumption at each server module. This power measurement is used locally by the server module to ensure that its instantaneous power consumption never exceeds the budgeted amount. While the system administrator may never notice these features in action, they enable more aggressive utilization of the shared system power resources.
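The power-up handshake described above (the iDRAC computes a budget, the CMC checks it against the chassis inventory) can be pictured with a small sketch. This is only an illustration under stated assumptions: the class and function names, the per-component wattages, and the chassis capacity are all hypothetical and are not taken from Dell firmware.

```python
from dataclasses import dataclass, field


@dataclass
class ChassisPowerBudget:
    """Hypothetical model of the CMC's chassis-level power inventory."""
    capacity_watts: int        # assumed usable power from the supplies
    infrastructure_watts: int  # assumed draw of fans, iKVM, I/O modules, CMCs
    granted: dict = field(default_factory=dict)  # slot -> budgeted watts

    def available_watts(self) -> int:
        """Power still unallocated after infrastructure and granted budgets."""
        return (self.capacity_watts
                - self.infrastructure_watts
                - sum(self.granted.values()))

    def request_power_on(self, slot: int, budget_watts: int) -> bool:
        """Grant a blade's requested budget only if the chassis can cover it."""
        if budget_watts <= self.available_watts():
            self.granted[slot] = budget_watts
            return True
        return False


def blade_budget(cpus: int, dimms: int, mezz_cards: int, disks: int) -> int:
    """Illustrative iDRAC-side inventory; every wattage here is made up."""
    return cpus * 95 + dimms * 8 + mezz_cards * 15 + disks * 10 + 40


cmc = ChassisPowerBudget(capacity_watts=2000, infrastructure_watts=600)
request = blade_budget(cpus=2, dimms=8, mezz_cards=2, disks=2)
first = cmc.request_power_on(slot=1, budget_watts=request)
```

In this sketch a blade that asks for more than the remaining budget is simply refused power-on, which mirrors the idea that the CMC decides when each module may power up.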
With these features, the system is no longer flying blind with regard to power consumption, and there is no danger of exceeding the available power capacity, which could otherwise trigger the power supplies' overcurrent protection.

The cooling strategy for the PowerEdge M1000e supports a low-impedance, high-efficiency design. Lower airflow impedance allows the system to draw air through at a lower operating pressure than competitive systems. The lower backpressure reduces the fan power needed to meet the system's airflow requirements, which translates directly into power and cost savings.

This high-efficiency design philosophy also extends to the layout of the subsystems within the PowerEdge M1000e. The server modules, I/O modules, and power supplies are incorporated into the system with independent airflow paths. This isolates these components from preheated air, reducing the airflow each module requires. This hardware design is coupled with a thermal cooling algorithm that incorporates the following:

- Server module thermal monitoring by the iDRAC
- I/O module thermal health monitors
- CMC monitoring and fan control (throttling)

Dell's PowerEdge M1000e modular server enclosure delivers major enhancements in management features. Each subsystem has been reviewed and adjusted to optimize efficiency, minimize the impact on existing management tools and processes, and provide future growth opportunities for standards-based management. The M1000e helps reduce the cost and complexity of managing computing resources, so you can focus on growing your business or managing your organization, with features such as:

- Centralized CMC modules that provide redundant, secure access paths for IT administrators to manage multiple enclosures and blades from a single interface.
- One of the only blade solutions with an integrated KVM switch, enabling easy setup and deployment and seamless integration into an existing KVM infrastructure.
- Dynamic and granular power management, so you can set power thresholds to help ensure your blades operate within your specific power envelope.
- Real-time reporting of enclosure and blade power consumption, as well as the ability to prioritize blade slots for power, giving you optimal control over power resources.

Power is no longer just about power delivery; it is also about power management. Dynamic power management provides the capability to set high and low power thresholds to ensure blades operate within your power envelope. The PowerEdge M1000e adds a number of advanced power management features: some operate transparently to the user, while others require only a one-time selection of the desired operating mode. The system administrator sets a priority for each server module. The priority works in conjunction with the CMC power budgeting and iDRAC power monitoring to ensure that the lowest-priority blades are the first to enter any power optimization mode, should conditions warrant the activation of this feature.
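The priority rule in the last paragraph (lowest-priority blades enter a power optimization mode first) can be sketched as a simple ordering problem. The function name, the tuple layout, the wattages, and the convention that a larger number means a lower priority are assumptions made for illustration; they are not taken from the CMC documentation.

```python
def blades_to_throttle(blades, shortfall_watts):
    """Pick blades to place in a reduced-power mode, lowest priority first.

    blades: list of (slot, priority, reclaimable_watts) tuples. In this
    sketch a larger priority number means a LOWER priority (an assumption).
    Returns the selected slots in the order they would be throttled.
    """
    selected = []
    reclaimed = 0
    # Sort so the lowest-priority blades (largest number) come first.
    for slot, _priority, reclaimable in sorted(
            blades, key=lambda b: b[1], reverse=True):
        if reclaimed >= shortfall_watts:
            break
        selected.append(slot)
        reclaimed += reclaimable
    return selected


# Hypothetical chassis: slot 1 is the most important blade.
example = [(1, 1, 60), (2, 5, 50), (3, 9, 40), (4, 9, 30)]
order = blades_to_throttle(example, shortfall_watts=80)
```

With these made-up numbers, the two priority-9 blades are selected first and the priority-5 blade is added only because the first two do not reclaim enough power; the priority-1 blade is left untouched.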

Server blade modules are accessible from the front of the PowerEdge M1000e enclosure. Up to sixteen half-height or eight full-height server modules (or a mixture of the two blade types) are supported. At the bottom of the enclosure is a flip-out, multiple-angle LCD panel for local systems management configuration, system information, and status. The front of the enclosure also contains two USB connections for a USB keyboard and mouse only (no USB flash or hard disk drives can be connected), a video connection, and the system power button. The front control panel's USB and video ports work only when the iKVM module is installed, as the iKVM provides the capability to switch the KVM between the blades.

Not visibly obvious, but important nonetheless, are the fresh air plenums at both the top and bottom of the chassis. The bottom fresh air plenum provides non-preheated air to the power supplies. The top fresh air plenum provides non-preheated air to the CMC, iKVM, and I/O modules. Any empty blade server slots should have filler modules installed to maintain proper airflow through the enclosure.

The high-speed midplane is completely passive, with no hidden stacking midplanes or interposers with active components. The midplane provides connectivity for I/O fabric networking, storage, and interprocess communications. Broad management capabilities include private Ethernet, serial, USB, and low-level management connectivity between the CMC, iKVM switch, and server modules. Finally, the midplane has a unique design in that it uses female connectors instead of male connectors: if pins are bent, only the affected module needs to be replaced, not the midplane.

System Control Panel Features:

- System control panel with LCD panel, two USB keyboard/mouse connections, and one video crash cart connection.
- The system power button turns the system on and off. Press to turn on the system. Press and hold for 10 seconds to turn off the system.

Caution: The system power button controls power to all of the blades and I/O modules in the enclosure.

The back panel of the M1000e enclosure supports:

- Up to six I/O modules for three redundant fabrics. Available switches include Dell and Cisco 1Gb/10Gb Ethernet with modular bays, Dell 10Gb Ethernet with modular bays, Dell Ethernet pass-through, Brocade 4Gb Fibre Channel, Brocade 8Gb Fibre Channel, Fibre Channel pass-through, and Mellanox DDR and QDR InfiniBand.
- One or two (redundant) CMC modules, which provide high-performance, Ethernet-based management connectivity.
- An optional iKVM module.
- A choice of three or six hot-pluggable power supplies, with thorough power management capabilities, including shared power delivery so that the full capacity of the power supplies is available to all modules.
- Nine N+1 redundant fan modules, which come standard.

All back panel modules are hot-pluggable.

The CMC provides multiple systems management functions for your modular server, including the M1000e enclosure's network and security settings, I/O module and iDRAC network settings, and power redundancy and power ceiling settings.

The optional Avocent iKVM analog switch module provides connections for a keyboard, video (monitor), and mouse. The iKVM can also be accessed from the front of the enclosure, providing front or rear panel KVM functionality, but not both at the same time. For enhanced security, front panel access can be disabled using the CMC's interface. You can use the iKVM to access the CMC.

On previous blade systems (1855/1955), chassis management and monitoring was done using a DRAC installed directly in the chassis, which offered connectivity to the blades one at a time. The PowerEdge M1000e blade server solution instead uses a CMC to manage and monitor the chassis, and each server module has its own onboard iDRAC chip. The iDRAC offers features in line with the DRAC 5 and allows remote control through a virtual console. On previous models of the blade systems this was provided by the KVM; the iKVM is now offered only as an option, because many customers do not access the blade servers locally.

The M1000e server solution offers a holistic management solution designed to fit into any customer data center. It features:

- Dual redundant Chassis Management Controllers (CMC)
  - Powerful management for the entire enclosure
  - Includes real-time power management and monitoring; flexible security; and status, inventory, and alerting for blades, I/O, and chassis
- iDRAC
  - One per blade, with full DRAC functionality like other Dell servers, including vMedia/KVM
  - Integrates into the CMC or can be used separately
- iKVM
  - Embedded in the chassis for easy KVM infrastructure incorporation, allowing one admin per blade
  - Control panel on the front of the M1000e for crash cart access
- Front LCD
  - Designed for deployment and local status reporting

Management connections transfer health and control traffic throughout the chassis. The system management fabric is architected for 100BaseT Ethernet over differential pairs routed to each module. There are two 100BaseT interfaces between CMCs, one switched and one unswitched. All system management Ethernet is routed for 100 Mbps signaling. Every module has a management network link to each CMC, with redundancy provided at the module level. Failure of any individual link will cause failover to the redundant CMC.

The FlexAddress feature is an optional upgrade introduced in CMC 1.1 that allows server modules to replace the factory-assigned World Wide Name and Media Access Control (WWN/MAC) network IDs with WWN/MAC IDs provided by the chassis. Every server module is assigned unique WWN and MAC IDs as part of the manufacturing process. Before the FlexAddress feature was introduced, if you had to replace one server module with another, the WWN/MAC IDs would change, and Ethernet network management tools and SAN resources would need to be reconfigured to recognize the new server module.

FlexAddress allows the CMC to assign WWN/MAC IDs to a particular slot and override the factory IDs. If the server module is replaced, the slot-based WWN/MAC ID remains the same. This feature eliminates the need to reconfigure Ethernet network management tools and SAN resources for a new server module. Additionally, the override only occurs when a server module is inserted in a FlexAddress-enabled chassis; no permanent changes are made to the server module. If a server module is moved to a chassis that does not support FlexAddress, the factory-assigned WWN/MAC IDs are used.
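The slot-based override can be illustrated with a short sketch. The function name, the dictionary used as the chassis address pool, and the MAC addresses themselves are hypothetical placeholders, chosen only to show that the address follows the slot rather than the blade.

```python
from typing import Optional


def effective_mac(factory_mac: str, slot: int,
                  chassis_pool: Optional[dict]) -> str:
    """Return the MAC address a blade presents when seated in a slot.

    chassis_pool maps slot -> chassis-assigned MAC when FlexAddress is
    enabled, and is None for a chassis without FlexAddress (assumed model).
    """
    if chassis_pool is not None and slot in chassis_pool:
        return chassis_pool[slot]  # slot-based ID overrides the factory ID
    return factory_mac             # nothing is changed on the blade itself


# Made-up addresses for illustration only.
pool = {3: "02:00:00:00:00:03"}
old_blade = "00:11:22:33:44:55"
new_blade = "00:11:22:33:44:66"

before_swap = effective_mac(old_blade, slot=3, chassis_pool=pool)
after_swap = effective_mac(new_blade, slot=3, chassis_pool=pool)
moved_away = effective_mac(new_blade, slot=3, chassis_pool=None)
```

Here `before_swap` and `after_swap` are the same address, so network and SAN tooling would see no change after the blade is replaced, while `moved_away` falls back to the blade's factory-assigned address.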

Features

- Lock the World Wide Name (WWN) of the Fibre Channel controller and the Media Access Control (MAC) address of the Ethernet and iSCSI controller to a blade slot, instead of to the blade's hardware
- Service or replace a blade or I/O mezzanine card and maintain all address mapping to Ethernet and storage fabrics
- Easy and highly reliable booting from Ethernet or Fibre Channel based Storage Area Networks (SANs)
- The MAC, WWN, and iSCSI addresses in the chassis never change
- Fast and efficient integration into existing network infrastructure
- FlexAddress is simple and easy to implement
- The FlexAddress SD card comes with a unique pool of MAC/WWN addresses and can be enabled on only one enclosure at a time, until disabled
- Works with all I/O modules, including Cisco, Brocade, and Dell PowerConnect switches as well as pass-through modules

Benefits

- Easily replace blades without network management effort
- Ease of management
- An almost no-touch blade replacement
- Fewer future address name headaches
- No need to learn a new management tool
- Low cost versus a switch-based solution
- Simple and quick to deploy
- No need for the user to configure
- No risk of duplicates on your network or SAN
- Choice is independent of switch or pass-through module

Each M-series server module connects to traditional network topologies, including Ethernet, Fibre Channel, and InfiniBand. The M1000e enclosure uses three layers of I/O fabric to connect the server module with the I/O module via the midplane. Up to six hot-swappable I/O modules can be installed within the enclosure. The I/O modules include Fibre Channel switch and pass-through modules, InfiniBand switches, and 1 GbE and 10 GbE Ethernet switch and pass-through modules.

The six I/O slots are classified as Fabrics A, B, and C. Each fabric contains two slots, numbered 1 and 2, resulting in A1 and A2, B1 and B2, and C1 and C2. The numbers 1 and 2 correspond to the ports on the server-side I/O cards (LOM or mezzanine cards).

Fabric A connects to the hardwired LAN-on-Motherboard (LOM) interface. Currently, only Ethernet pass-through or switch modules may be installed in Fabric A. Fabrics B and C are 1 to 10 Gb/s dual-port, quad-lane redundant fabrics that allow higher-bandwidth I/O technologies and can support Ethernet, InfiniBand, and Fibre Channel modules. Fabrics B and C can be used independently of each other; for example, 1 GbE, 10 GbE, Fibre Channel, or InfiniBand can be installed in Fabric B, and any one of the other types in Fabric C.

To communicate with an I/O module in the Fabric B or C slots, a blade must have a matching mezzanine card installed in the Fabric B or C mezzanine card location. GbE I/O modules that would be used in Fabric A may also be installed in the Fabric B or C slots, provided a matching GbE mezzanine card is installed in that same fabric.

In summary, the only mandate is that Fabric A is always a GbE LOM. Fabrics B and C are similar in design to each other, and each is populated by installing an optional mezzanine card in the corresponding Fabric B or C mezzanine slot on the motherboard. There is no interdependency among the three fabrics: the choice for one fabric does not restrict, limit, or depend on the choice for any other fabric.
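The slot naming above lends itself to a small lookup sketch. It assumes a blade with dual-port cards, where port 1 of a card reaches slot 1 of its fabric and port 2 reaches slot 2; the names `FABRIC_CARD`, `io_slot`, and `blade_connections` are hypothetical and exist only for this illustration.

```python
# Which server-side card feeds each fabric, as described in the text.
FABRIC_CARD = {"A": "LOM", "B": "Mezzanine B", "C": "Mezzanine C"}


def io_slot(fabric: str, port: int) -> str:
    """Return the rear I/O slot (e.g. 'B2') reached by a card port."""
    if fabric not in FABRIC_CARD:
        raise ValueError(f"unknown fabric: {fabric!r}")
    if port not in (1, 2):
        raise ValueError(f"port must be 1 or 2, got {port!r}")
    return f"{fabric}{port}"


def blade_connections() -> dict:
    """Map every card port on a dual-port blade to its I/O slot."""
    return {
        f"{card} port {port}": io_slot(fabric, port)
        for fabric, card in FABRIC_CARD.items()
        for port in (1, 2)
    }


connections = blade_connections()
```

For example, `connections["LOM port 1"]` is `"A1"` and `connections["Mezzanine C port 2"]` is `"C2"`, which matches the rule that the slot number follows the port number on the server-side card.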

To understand the PowerEdge M1000e architecture, it is necessary to first define four key terms: fabric, lane, link, and port.

A fabric is defined as a method of encoding, transporting, and synchronizing data between devices. Examples of fabrics are Gigabit Ethernet (GE), Fibre Channel (FC), and InfiniBand (IB). Fabrics are carried inside the PowerEdge M1000e system, between server modules and I/O modules, through the midplane. They are also carried to the outside world through the physical copper or optical interfaces on the I/O modules.

A lane is defined as a single fabric data transport path between I/O end devices. In modern high-speed serial interfaces, each lane consists of one transmit and one receive differential pair. In practice, a single lane is four wires in a cable or four copper traces on a printed circuit board: a transmit positive signal, a transmit negative signal, a receive positive signal, and a receive negative signal. Differential pair signaling provides improved noise immunity for these high-speed lanes. Fabric standards use various terms when referring to lanes: PCIe calls this a lane, InfiniBand calls it a physical lane, and Fibre Channel and Ethernet call it a link.

A link is defined here as a collection of multiple fabric lanes used to form a single communication transport path between I/O end devices. Examples are two-, four-, and eight-lane PCIe, or four-lane 10GBASE-KX4. PCIe, InfiniBand, and Ethernet call this a link. The distinction between lane and link is made here to prevent confusion over Ethernet's use of the term link for both single-lane and multiple-lane fabric transports. Some fabrics, such as Fibre Channel, do not define links, as they simply run multiple lanes as individual transports for increased bandwidth. A link as defined here provides synchronization across the multiple lanes, so they effectively act together as a single transport.

A port is defined as the physical I/O end interface of a device to a link. A port can have single or multiple lanes of fabric I/O connected to it.

The PowerEdge M1000e system management hardware and software includes Fabric Consistency Checking, which prevents the accidental activation of any misconfigured fabric device on a server module. Because mezzanine to I/O module connectivity is hardwired yet fully flexible, a user could inadvertently hot-plug a server module with the wrong mezzanine card into the system. For instance, if Fibre Channel I/O modules are located in the Fabric C I/O slots, then all server modules must have either no mezzanine card in Fabric C or only Fibre Channel cards in Fabric C. If a GE mezzanine card is in a Mezzanine C slot, the system automatically detects this misconfiguration and alerts the user to the error. No damage occurs to the system, and the user can reconfigure the faulted module.
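The Fabric Consistency Checking rule can be expressed as a small comparison. This is a sketch of the rule as stated in the text, not of the CMC's actual implementation; the function names and the short type labels ("GE", "FC") are assumptions, and treating an empty I/O slot as non-conflicting is also an assumption.

```python
from typing import Optional


def fabric_consistent(iom_type: Optional[str],
                      mezz_type: Optional[str]) -> bool:
    """True when a mezzanine card may be enabled against a fabric's IOM.

    None means nothing is installed. An empty mezzanine slot is always
    acceptable; an empty I/O slot is assumed not to conflict.
    """
    if mezz_type is None or iom_type is None:
        return True
    return mezz_type == iom_type


def misconfigured_fabrics(ioms: dict, mezz: dict) -> list:
    """Return the fabrics (B, C) on which a blade's mezzanine card mismatches."""
    return [
        fabric for fabric in ("B", "C")
        if not fabric_consistent(ioms.get(fabric), mezz.get(fabric))
    ]


# The example from the text: FC modules in Fabric C, GE card in Mezzanine C.
chassis_ioms = {"B": "GE", "C": "FC"}
faults = misconfigured_fabrics(chassis_ioms, {"B": "GE", "C": "GE"})
```

In this sketch the blade from the text's example is flagged on Fabric C only, which corresponds to the system alerting the user rather than activating the mismatched device.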

The iDRAC on each server module calculates the amount of airflow required at the individual server module level and sends a request to the CMC. This request is based on temperature conditions on the server module, as well as passive requirements due to hardware configuration. Concurrently, each I/O module (IOM) can send a request to the CMC to increase or decrease cooling to the I/O subsystem. The CMC interprets these requests and controls the fans as required to maintain server and I/O module airflow at optimal levels.

Fans are loud when running at full speed, and they rarely need to do so. If the fans remain at full speed, check that all components are operating properly. The CMC automatically raises and lowers the fan speed to a setting that is appropriate to keep all modules cool. If a single fan is removed while the enclosure is in Standby mode, all fans are set to 50% speed; if the enclosure is powered on, removal of a single fan is treated like a failure (nothing happens). Reinstalling the fan causes the rest of the fans to settle back to a quieter state. Whenever communication with the CMC or iDRAC is lost, such as during a firmware update, the fan speed increases and creates more noise.
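The request-and-aggregate behavior described above can be sketched as follows. This is a deliberate simplification: the function name, the percentage scale, the 30% floor, and the choice to go to full speed when communication is lost are all assumptions (the text only says the fan speed increases), and the real algorithm is not documented here.

```python
def fan_speed_percent(blade_requests, iom_requests,
                      comms_ok=True, floor=30):
    """Pick one fan speed that satisfies the most demanding cooling request.

    blade_requests / iom_requests: requested speeds in percent, as sent by
    each iDRAC and each I/O module (hypothetical units).
    comms_ok: False models lost CMC/iDRAC communication, for which this
    sketch fails safe to full speed (an assumption).
    """
    if not comms_ok:
        return 100
    demands = list(blade_requests) + list(iom_requests)
    # Never drop below the floor, never exceed 100%.
    return min(100, max([floor] + demands))


normal = fan_speed_percent([40, 55], [35])
idle = fan_speed_percent([], [])
during_update = fan_speed_percent([40], [], comms_ok=False)
```

Taking the maximum of all requests reflects the idea that the shared fans must keep the hottest module cool, which is why one busy blade can raise the noise level for the whole enclosure.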

Flexible and scalable, the PowerEdge M1000e is designed to support future generations of blade technologies regardless of processor or chipset architecture. The M1000e has these advantages:

- The M1000e blade enclosure helps reduce the cost and complexity of managing computing resources with some of the most innovative, most effective, easiest-to-use management features in the industry.
- Speed and ease of deployment: Each 1U server takes approximately 15 minutes on average to rack, not including cabling. The M1000e enclosure can be racked in approximately the same amount of time; each blade then takes seconds to physically install, and cable management is reduced. When you need to add blade servers, they slide right in.
- High density: In 40U of rack space, customers can install 64 blades (four M1000e enclosures with 16 slots each) versus 40 1U servers.
- The M1000e is a leader in power efficiency, built on innovative Dell Energy Smart technology.
- The M1000e is the only solution that supports mixing full-height and half-height blades in adjacent slots within the same chassis without limitations or caveats.
- Redundant Chassis Management Controllers (CMCs) provide a powerful systems management tool, giving comprehensive access to component status, inventory, alerts, and management.
- Dell FlexIO modular switch technology lets you easily scale to provide additional uplink and stacking functionality, giving you the flexibility and scalability for today's rapidly evolving networking landscape without replacing your current environment.
- FlexAddress technology ties Media Access Control (MAC) and World Wide Name (WWN) addresses to blade slots, not to servers or switch ports, so reconfiguring your setup is as simple as sliding a blade out of a slot and replacing it with another.
- The M1000e's passive midplane design keeps critical active components on individual blades or as hot-swappable shared components within the chassis, improving reliability and serviceability.

PowerEdge M1000e Administration and Configuration

16

PowerEdge M1000e Administration and Configuration

17

PowerEdge M1000e Administration and Configuration

18

You might also like

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- 01 - PE M1000eDocument18 pages01 - PE M1000eRyan BelicovNo ratings yet

- EqualLogic - Configuration - Guide EXAMPLES CISCO DELL 54xxDocument37 pagesEqualLogic - Configuration - Guide EXAMPLES CISCO DELL 54xxOndra BajerNo ratings yet

- Monolithic Thermal Design White PaperDocument17 pagesMonolithic Thermal Design White PaperRyan BelicovNo ratings yet

- 0166 Dell Intel Reckoner 050609 HRDocument2 pages0166 Dell Intel Reckoner 050609 HRRyan Belicov100% (1)

- Monolithic Thermal Design White PaperDocument17 pagesMonolithic Thermal Design White PaperRyan BelicovNo ratings yet

- 1 Poweredge M1000E - Administration and ConfigurationDocument4 pages1 Poweredge M1000E - Administration and ConfigurationRyan BelicovNo ratings yet

- 02 - Installation & FRUsDocument18 pages02 - Installation & FRUsRyan BelicovNo ratings yet

- Solve Your It Energy Crisis: With An Energy Smart Solution From DellDocument5 pagesSolve Your It Energy Crisis: With An Energy Smart Solution From DellRyan BelicovNo ratings yet

- Independent Study - Bake Off With Dell Winning Against HP For Server Management SimplificationDocument22 pagesIndependent Study - Bake Off With Dell Winning Against HP For Server Management SimplificationRyan BelicovNo ratings yet

- Dedup Whitepaper 4AA1-9796ENWDocument14 pagesDedup Whitepaper 4AA1-9796ENWRyan BelicovNo ratings yet

- 00a Training Cover-Agenda-ToC Partner1Document8 pages00a Training Cover-Agenda-ToC Partner1Ryan BelicovNo ratings yet

- SentinelLogManager Day2 5 POCsDocument8 pagesSentinelLogManager Day2 5 POCsRyan BelicovNo ratings yet

- Adi - Installing and Configuring SCE 2010Document20 pagesAdi - Installing and Configuring SCE 2010Ryan BelicovNo ratings yet

- HP Storageworks D2D Backup System: HP D2D 4004 HP D2D 4009 Start HereDocument2 pagesHP Storageworks D2D Backup System: HP D2D 4004 HP D2D 4009 Start HereRyan BelicovNo ratings yet

- Integration With HP DataProtector c02747484Document34 pagesIntegration With HP DataProtector c02747484Ryan BelicovNo ratings yet

- Integration With NetBackup c02747485Document38 pagesIntegration With NetBackup c02747485Ryan BelicovNo ratings yet

- Integration With CommVault Simpana c02747478Document52 pagesIntegration With CommVault Simpana c02747478Ryan BelicovNo ratings yet

- 19Document16 pages19Ryan BelicovNo ratings yet

- Dedup and Replication Solution Guide VLS and D2D c01729131Document138 pagesDedup and Replication Solution Guide VLS and D2D c01729131Ryan BelicovNo ratings yet

- Exam Preparation Guide HP2-Z12 Servicing HP Networking ProductsDocument5 pagesExam Preparation Guide HP2-Z12 Servicing HP Networking ProductsRyan BelicovNo ratings yet

- APS NetworkingDocument2 pagesAPS NetworkingChristian Antonio HøttNo ratings yet

- Lefthand BundlesDocument13 pagesLefthand BundlesRyan BelicovNo ratings yet

- 16Document20 pages16Ryan BelicovNo ratings yet

- ContentDocument176 pagesContentRyan BelicovNo ratings yet

- 12Document24 pages12Ryan BelicovNo ratings yet

- 20Document42 pages20Ryan BelicovNo ratings yet

- 7Document78 pages7Ryan BelicovNo ratings yet

- 23Document64 pages23Ryan BelicovNo ratings yet

- 24Document96 pages24Ryan BelicovNo ratings yet

- 22Document30 pages22Ryan BelicovNo ratings yet

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (894)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Huawei E6000 Blade Server Brochure 02Document2 pagesHuawei E6000 Blade Server Brochure 02Williams RochaNo ratings yet

- B SeriesDocument71 pagesB SeriesprdelongNo ratings yet

- Check Point Utm 1 BrochureDocument28 pagesCheck Point Utm 1 BrochureManu Balakrishna PillaiNo ratings yet

- Case Study: Installing The Eucalyptus Community Cloud (ECC) : Physical Hardware LayoutDocument6 pagesCase Study: Installing The Eucalyptus Community Cloud (ECC) : Physical Hardware LayoutReinaldo CanovasNo ratings yet

- Understanding key decision makers and business processes in the data centerDocument141 pagesUnderstanding key decision makers and business processes in the data centerKalPurushNo ratings yet

- XD-Integration With VMware Vsphere PDFDocument22 pagesXD-Integration With VMware Vsphere PDFFausto Martin Vicente MoralesNo ratings yet

- Implementing Cisco UCS Solutions Sample ChapterDocument44 pagesImplementing Cisco UCS Solutions Sample ChapterPackt PublishingNo ratings yet

- Data Sheet c78-618603Document29 pagesData Sheet c78-618603Arjun RamNo ratings yet

- HP Virtual Connect Family DatasheetDocument12 pagesHP Virtual Connect Family DatasheetOliver AcostaNo ratings yet

- Dell Poweredge M1000e System Configuration GuideDocument48 pagesDell Poweredge M1000e System Configuration GuideasdfNo ratings yet

- Data Sheet 5010 NexusDocument22 pagesData Sheet 5010 NexusnaveenvarmainNo ratings yet

- Firmware Upgrade of Sun Blade 6000 CMM Module Through CLI Mode Using Tftpd32Document7 pagesFirmware Upgrade of Sun Blade 6000 CMM Module Through CLI Mode Using Tftpd32deb.bhandari5617No ratings yet

- 14 Enterprise ComputingDocument51 pages14 Enterprise ComputingTaufik Ute Hidayat Qeeran100% (2)

- HP Architechtural DiagramDocument71 pagesHP Architechtural Diagrampranav_023No ratings yet

- Ibm Manual Blade Center PDFDocument264 pagesIbm Manual Blade Center PDFsebastianspinNo ratings yet

- Huawei Esight Server Device Manager White Paper PDFDocument20 pagesHuawei Esight Server Device Manager White Paper PDFMarco SquinziNo ratings yet

- Configure Cisco Imm For Flashstack and Deploy Red Hat Enterprise LinuxDocument125 pagesConfigure Cisco Imm For Flashstack and Deploy Red Hat Enterprise Linuxsalist3kNo ratings yet

- FusionCloud Desktop Solution Engineering Installation Preparation Guide For CustomerDocument71 pagesFusionCloud Desktop Solution Engineering Installation Preparation Guide For CustomerRogério FerreiraNo ratings yet

- PROGNOSIS IP Telephony Manager Key Features With CUCM Appliances v2 - 0Document32 pagesPROGNOSIS IP Telephony Manager Key Features With CUCM Appliances v2 - 0chaitanya dhakaNo ratings yet

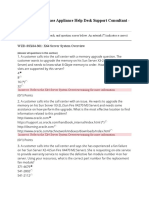

- Oracle Database Appliance Help Desk Support Consultant - Online AssessmentDocument35 pagesOracle Database Appliance Help Desk Support Consultant - Online AssessmentashisNo ratings yet

- Advantech Full DatasheetDocument234 pagesAdvantech Full DatasheetE TNo ratings yet

- 5060 MGC-8 Hardware GuideDocument124 pages5060 MGC-8 Hardware GuideBen Duong100% (1)

- Hitachi White Paper Compute Blade 2000Document15 pagesHitachi White Paper Compute Blade 2000onNo ratings yet

- Manage Virtual MachinesDocument22 pagesManage Virtual Machinessanjay dubeyNo ratings yet

- Cisco UCS 5108 Server Chassis Hardware Installation GuideDocument78 pagesCisco UCS 5108 Server Chassis Hardware Installation GuidemicjosisaNo ratings yet

- Cisco Intersight Management For UCS X-Series Lab v1: Americas HeadquartersDocument62 pagesCisco Intersight Management For UCS X-Series Lab v1: Americas HeadquartersAndyNo ratings yet

- Technical Sales LENU319EMDocument239 pagesTechnical Sales LENU319EMHibaNo ratings yet

- Brocade Adapters v3.1.0.0 Admin GuideDocument324 pagesBrocade Adapters v3.1.0.0 Admin GuideSundaresan SwaminathanNo ratings yet

- SAP HP ProLiant MasterDocument46 pagesSAP HP ProLiant MasterShah MietyNo ratings yet

- Huawei E9000 Hardware SolutionDocument5 pagesHuawei E9000 Hardware SolutionwessNo ratings yet