Hadoop Multi Users

Uploaded by sairamgopal


Need for multiple users

In Hadoop, we run different tasks and store data in HDFS.

If several users run tasks under the same user account, it becomes difficult to trace jobs and to track the tasks or defects attributable to each user.

The other issue is security.

If everyone shares the same user account, all users have the same privileges: each can access everyone else's data and can modify, execute, or delete it.

This is a very serious issue.

To address it, we need to create multiple user accounts.

Benefits of creating multiple users

1) A user cannot modify the directories or files of other users.

2) Other users cannot add new files to a user's directory.

3) Other users cannot run any tasks (MapReduce etc.) on a user's files.

In short, the data is safe and is accessible only to the assigned user and the superuser.

Steps for setting up multiple user accounts

To add a new user capable of performing Hadoop operations, follow these steps.

Step 1

Creating a New User

For Ubuntu

sudo adduser --ingroup <groupname> <username>

For RedHat variants

useradd -g <groupname> <username>

passwd <username>

Then enter the user details and password.
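
As a rough illustration of Step 1, the sketch below prints the creation command for either distribution family and skips accounts that already exist. It is a dry run only: nothing is created. The function names (user_create_cmd, plan_user) and the example names hadoop and hduser2 are assumptions made for illustration, not part of the original steps.

```shell
#!/bin/sh
# Dry-run sketch: prints the commands for Step 1 instead of running them.
# "hadoop" and "hduser2" below are hypothetical example names.

# Print the user-creation command(s) for the given distro family.
# $1 = distro family ("debian" or "redhat"), $2 = group, $3 = user
user_create_cmd() {
  case "$1" in
    debian) echo "sudo adduser --ingroup $2 $3" ;;
    redhat) echo "useradd -g $2 $3"
            echo "passwd $3" ;;
    *)      echo "unknown distro family: $1" >&2; return 1 ;;
  esac
}

# Print the commands only if the account does not exist yet.
plan_user() {
  if id "$3" >/dev/null 2>&1; then
    echo "# user $3 already exists, nothing to do"
  else
    user_create_cmd "$1" "$2" "$3"
  fi
}

plan_user debian hadoop hduser2
```

Printing the commands first makes it easy to review them before running them by hand with the required privileges.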

Step 2

Next, change the permissions of the directory in HDFS where Hadoop stores its temporary data.

Open the core-site.xml file.

Find the value of hadoop.tmp.dir.

In my core-site.xml, it is /app/hadoop/tmp. In the following steps, I will use /app/hadoop/tmp as the directory for storing Hadoop data (i.e. the value of hadoop.tmp.dir).
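
The lookup of hadoop.tmp.dir can also be scripted. The following is a minimal sketch, assuming the name and value elements each sit on their own line, as in a typical hand-edited core-site.xml. The helper get_conf_value is a hypothetical name, and the demo runs against a throwaway file rather than a real configuration.

```shell
#!/bin/sh
# Sketch: read one property value out of a Hadoop-style XML config file.
# Assumes <name> and <value> each sit on a single line; this is not a
# general XML parser.

# $1 = path to the config file, $2 = property name
get_conf_value() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1 }
    found && match($0, /<value>[^<]*<\/value>/) {
      # Strip the 7-character <value> and 8-character </value> tags.
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$1"
}

# Demo against a throwaway copy so no real configuration is touched.
conf="$(mktemp)"
cat > "$conf" <<'EOF'
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/app/hadoop/tmp</value>
  </property>
</configuration>
EOF

get_conf_value "$conf" hadoop.tmp.dir   # prints /app/hadoop/tmp
rm -f "$conf"
```

On a real node, the first argument would be the path to the cluster's own core-site.xml, which varies by installation.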

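Then, from the superuser account, run the following command. Mode 1777 lets every user write to the staging directory, while the sticky bit prevents users from deleting one another's files.

hadoop fs -chmod -R 1777 /app/hadoop/tmp/mapred/staging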

Step 3

The next step is to give write permission to our user group on hadoop.tmp.dir (here /app/hadoop/tmp; open core-site.xml to get the path for hadoop.tmp.dir). This should be done only on the machine (node) where the new user is added.

chmod 777 /app/hadoop/tmp
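
To confirm that the permission change took effect, the mode can be read back after setting it. This is a small sketch, assuming GNU stat (as found on Ubuntu and RedHat variants). The helper set_and_check_mode is a hypothetical name, and the demo uses a scratch directory standing in for /app/hadoop/tmp.

```shell
#!/bin/sh
# Sketch: apply a mode and confirm it took effect.
# Uses GNU stat (-c '%a'), which is an assumption about the platform.

# $1 = directory, $2 = octal mode; returns non-zero if the mode does not stick
set_and_check_mode() {
  chmod "$2" "$1" || return 1
  actual="$(stat -c '%a' "$1")" || return 1
  [ "$actual" = "$2" ]
}

# Demo on a scratch directory standing in for /app/hadoop/tmp.
scratch="$(mktemp -d)"
if set_and_check_mode "$scratch" 777; then
  echo "mode is 777"
fi
rmdir "$scratch"
```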

Step 4

The next step is to create a directory structure in HDFS for the new user.

From the superuser account, create the directory.

Eg: hadoop fs -mkdir /user/username/

Step 5

At this point we still cannot run MapReduce programs as the new user, because the newly created directory structure is owned by the superuser. So change the ownership of the newly created directory in HDFS to the new user.

hadoop fs -chown -R username:groupname <directory to access in HDFS>

Eg: hadoop fs -chown -R username:groupname /user/username/
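
Steps 4 and 5 always go together, so they can be sketched as one helper. The version below is a dry run that only prints the two HDFS commands for a given user and group. The function name plan_hdfs_home and the example names hduser2 and hadoop are assumptions for illustration.

```shell
#!/bin/sh
# Dry-run sketch of Steps 4 and 5: prints the HDFS commands, runs nothing.
# "hduser2" and "hadoop" are hypothetical example names.

# $1 = user name, $2 = group name
plan_hdfs_home() {
  home="/user/$1"
  echo "hadoop fs -mkdir $home"
  echo "hadoop fs -chown -R $1:$2 $home"
}

plan_hdfs_home hduser2 hadoop
```

After reviewing the printed commands, the superuser can run them as they are. On Hadoop 2 and later, -mkdir needs the -p option if the parent directory /user does not exist yet.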

Step 6

Log in as the new user and run Hadoop jobs.

su username

You might also like

- Windows Powers Hell 2Document20 pagesWindows Powers Hell 2sairamgopalNo ratings yet

- Aditya Hrudayam in KannadaDocument4 pagesAditya Hrudayam in Kannadahiremathvijay83% (6)

- Recovering Namenode From SecondarynamenodeDocument1 pageRecovering Namenode From SecondarynamenodesairamgopalNo ratings yet

- Application For The Issue of Original Degree: Hallticket No: 09X21A0409Document1 pageApplication For The Issue of Original Degree: Hallticket No: 09X21A0409sairamgopalNo ratings yet

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Visualizing DataDocument51 pagesVisualizing DataMichael WelkerNo ratings yet

- Dump StateDocument10 pagesDump StateElvira Nicoll Heredia MenesesNo ratings yet

- Review Question BankDocument12 pagesReview Question BankVõ Minh ThànhNo ratings yet

- How To Get Data Into DropDown List From Database in JSPDocument3 pagesHow To Get Data Into DropDown List From Database in JSPYanno Dwi AnandaNo ratings yet

- AS400 Interview Questions For AllDocument272 pagesAS400 Interview Questions For Allsharuk100% (2)

- SAS Interview QuestionsDocument33 pagesSAS Interview Questionsapi-384083286% (7)

- Java Codelab Solutions - Section 2.2.3Document1 pageJava Codelab Solutions - Section 2.2.3thetechbossNo ratings yet

- Chapter 12: Example 1: Inventory ABC AnalysisDocument1 pageChapter 12: Example 1: Inventory ABC Analysiscovipanama covidNo ratings yet

- Etherchannel Configuration PDFDocument2 pagesEtherchannel Configuration PDFKaushikNo ratings yet

- Enhanced Autofs Administrator'S Guide: Hp-Ux 11I V1Document88 pagesEnhanced Autofs Administrator'S Guide: Hp-Ux 11I V1netfinityfrNo ratings yet

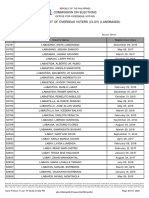

- JEDDAH - CERTIFIED LIST OF OVERSEAS VOTERS CLOV LABASANO To POBAR PDFDocument900 pagesJEDDAH - CERTIFIED LIST OF OVERSEAS VOTERS CLOV LABASANO To POBAR PDFJo SNo ratings yet

- Paramount Maths Volume-1 InHindi PDFDocument238 pagesParamount Maths Volume-1 InHindi PDFpao cnd100% (1)

- L8CN: Network Layer: Logical AddressingDocument23 pagesL8CN: Network Layer: Logical AddressingAhmad ShdifatNo ratings yet

- Database Programming With PL/SQL 4-2: Practice Activities: Conditional Control: Case StatementsDocument5 pagesDatabase Programming With PL/SQL 4-2: Practice Activities: Conditional Control: Case StatementsАбзал КалдыбековNo ratings yet

- Tuning Guide: Ibm Security QradarDocument40 pagesTuning Guide: Ibm Security Qradarsecua369No ratings yet

- Vehicle Management System DBMS Project: AbstractDocument4 pagesVehicle Management System DBMS Project: Abstractmadan kumarNo ratings yet

- Patriot 200 Product Sheet 256GBDocument1 pagePatriot 200 Product Sheet 256GBAdam NugrahaNo ratings yet

- EpicorUserExpCust UserGuide 905700 Part1of3Document124 pagesEpicorUserExpCust UserGuide 905700 Part1of3ஜெயப்பிரகாஷ் பிரபாகரன்No ratings yet

- Walter Fischer: DVB-T Technology and Overview On Mobile TVDocument204 pagesWalter Fischer: DVB-T Technology and Overview On Mobile TVTiano Tiano Sitorus100% (1)

- DreamHome SQLDocument4 pagesDreamHome SQLCLOUDYO NADINo ratings yet

- Project 3Document18 pagesProject 3api-515961562No ratings yet

- Oracle Apps Technical Online TrainingDocument7 pagesOracle Apps Technical Online TrainingImran KhanNo ratings yet

- 7 Data File HandlingDocument40 pages7 Data File HandlingSatayNo ratings yet

- XML Based Attacks: Daniel TomescuDocument25 pagesXML Based Attacks: Daniel TomescudevilNo ratings yet

- Design - Cisco Virtualization Solution For EMC VSPEX With Microsoft Hyper-V 2012 For 50 VMDocument126 pagesDesign - Cisco Virtualization Solution For EMC VSPEX With Microsoft Hyper-V 2012 For 50 VMkinan_kazuki104No ratings yet

- Kendriya Vidyalaya Sangathan, Chennai Region Practice Test 2020 - 21 Class XiiDocument9 pagesKendriya Vidyalaya Sangathan, Chennai Region Practice Test 2020 - 21 Class XiiRamanKaurNo ratings yet

- Networks6thF U1Document156 pagesNetworks6thF U1Marcelo MonteiroNo ratings yet

- DISM Image Management Command PDFDocument10 pagesDISM Image Management Command PDFHack da SilvaNo ratings yet

- Naidu Experiance ResumeDocument3 pagesNaidu Experiance ResumeGvgnaidu GoliviNo ratings yet

- Structure Query LanguageDocument24 pagesStructure Query LanguageRavi singhNo ratings yet