Professional Documents

Culture Documents

Netronome SDN NFV Whitepaper 11-13

Uploaded by

vutuanhvtaCopyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Netronome SDN NFV Whitepaper 11-13

Uploaded by

vutuanhvtaCopyright:

Available Formats

Software-Defined Network

Functions Virtualization

(SDN & NFV)

This paper discusses two key networking trends: SDN and NFV. It shows how the two

are not only complementary, but, when deployed together, are synergistic. Whereas NFV

focuses on network platform virtualization, SDN is focused on network virtualization.

When deploying NFV platforms, the number of end points can grow exponentially

due to the nature of the many virtual machines (VMs) being spawned. SDN can be used to

provide the connectivity between these VM-based end points in a dynamic way.

Additionally, since NFV focuses on higher-layer (L4-L7) devices (e.g., network security

devices and gateways), there is a recognized need to extend SDN-OpenFlow protocol to

move beyond the current L2-L4 control and into the higher layers.

NFV: A Cross-operators Initiative

Network operators aiming at reducing their

TCO while accelerating new service deploy-

ment, have been working on a new initiative

termed Network Functions Virtualization, or

NFV. The goal is, for example, instead of

procuring a proprietary network appliance,

the operator can deploy applications and

services that can run on top of virtual

machines (VMs) in a Commercial Of The

Shelf (COTS) server architecture platform.

Such applications are referred to as VNFs, or

virtual network functions, and can be dynam-

ically chained to form a multi-service platform.

NFV Value Proposition

The operators goal is to reduce Capex/Opex,

and allow for faster services deployment,

greater fexibility and diferentiation, while

having a low barrier to entry introducing new

services for additional revenue.

In trying to capitalize on the virtualization

gain achieved by the IT industry, NFV targets

many network equipment applications, from

residential gateways at the low-end to a

high-performance frewall at the high-end,

that can run on a range of industry-standard

hardware, all of which require processing

trafc at all 7-layers of the OSI protocol stack.

ETSI ISG NFV

NFV, part of ETSI, is an industry specifcations

group (ISG) aiming at fostering faster inter-

operable implementations, for a broad

number of use cases, rather than necessarily

spawning new standards activities.

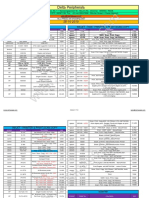

Figure 1 below highlights the three func-

tional working groups within the ISG NFV

efort. The management and orchestration

(MANO) WG is responsible for the manage-

ment and orchestration of network services

This paper examines the

architecture model of both

SDN and NFV, discussing

possible integration models,

and addressing deployment

scenarios to extend SDN-

OpenFlow to support in-line

(in the forwarding plane)

L4-L7 networking services

to better manage NFV

platforms.

The paper concludes by

highlighting Netronomes

strategy, value proposition

and product portfolio in SDN

and NFV

Figure 1. High-level NFV

framework. Three functional

working groups: MANO,

SWA and INF.

Source: ISG Architecture and

Framework document.

VNF

Virtual

Compute

Virtual

Network

Virtual

Storage

Virtualization Layer

Compute Network Storage

VNF VNF VNF VNF

Software Architecture (SWA)

Virtual Network Functions (VNFs)

NFV

Management

and

Orchestration

(MANO)

Hardware Resources

NFV Infrastructure (INF)

APPLICATION

LAYER

CONTROL

LAYER

INFRASTRUCTURE

LAYER

Applications Network Services

Network Controller

SDN Control Software

Southbound API

Northbound APIs

Network Device Network Device

Network Device

Network Device Network Device

NETRONOME WHITEPAPER: Software-Defned Network Functions Virtualization (NFV) 2

on NFV infrastructure. The software architecture WG (SWA) is

responsible for the decomposition of the VNF into functional

blocks and their requirements. The infrastructure WG (INF)

is responsible for specifying the requirements of three

domains: Compute (include storage), hypervisor and network.

Emergence of SDN

In the meantime, an orthogonal new concept has been gaining

ground in the data center and WAN. Software-defned network-

ing, or SDN, is about separating the control plane from the

data plane, making the latter simple and fast, dealing mostly

with the lower layers (L2-L4). The interface between the two is

referred to as the southbound interface. The Open Networking

Foundation (ONF) has defned the OpenFlow protocol as the

standard southbound interface between the SDN controller

and the OpenFlow switch.

The SDN controller has a birds-eye view of the whole

network and can dynamically steer the trafc based on

statistics gathered from the network. In addition, third-party

applications, management and orchestration functions can run

north of the SDN controller. In SDN, these applications are

network-aware, and can communicate their requirements

policies to the network via the SDN controller, resulting in

dynamically changing the network topology, for example.

At the same time, the application can monitor network state

and adapt accordingly.

ONFs SDN-OpenFlow

Figure 2 depicts the ONF-OpenFlow architecture model. In this

architecture, the control layer is the reference point. ONF has

defned a standard OpenFlow protocol, south of the controller.

Business applications and networking services run north of the

controller over a northbound interface, or NBI. The latter is only

now starting to be standardized by ONF.

The infrastructure layer is where all the OpenFlow switches

reside. Their main function is to forward data plane trafc

based on match-action rules defned by the SDN controller.

These switches are equivalent to switches and routers in todays

legacy networks.

SDN-OpenFlow Supports L4-L7

for Best Synergy with NFV

Todays limited deployment of SDN-OpenFlow networks calls

for higher-layer networking services to be north of the SDN

controller. Although this model works for some higher-layer

services, such as DDoS attack prevention, it falls short for many

other networking services, such as Firewall, load balancing

and IPS/IDS.

In order to meet network scaling, low-latency and high-per-

formance, some networking services need to be inserted in the

data path or forwarding plane. Some of the challenges include:

1. Not every service can be run satisfactorily north of the

controller due to performance, latency and scalability issues.

2. While some can, many other services cant and need to be

run inside an enhanced OF switch. Examples of such services

that need to reside in the switch include Intrusion Prevention

(IPS) and SSL Virtual Private Networks (VPNs).

3. In addition, legacy L4-L7 devices, such as load balancers and

frewalls, can reside in the infrastructure layer (forwarding

plane) where the controller can steer trafc based on some

criteria to such legacy devices.

4. The above can be satisfed by extending the OF protocol,

or devising a new protocol focused on L4-L7 while maintain-

ing state information, but this will be up to the ONFs

technical WGs to decide.

Adding L4-L7 Intelligence in SDN-OpenFlow

Figure 3 depicts some of the deployment models to allow

SDN-OpenFlow networks to efectively support L4-L7 services.

There are many deployment models geared toward adding

L4-L7 support in SDN-OpenFlow networks (see Figure 3):

1. Running as applications on the controller

Controller programs SDN switch on per-fow basis

2. Standalone network appliance

Trafc directed to appliance either based on static policy or

dynamically driven by controller, or simply in-line with trafc

E.g., Firewall, Load balancer

Figure 2. ONFs SDN reference architecture Figure 3. Adding L4-L7 support in SDN-OpenFlow networks.

APPLICATION

LAYER

CONTROL

LAYER

INFRASTRUCTURE

LAYER

Network Device Network Device

Network Device

Intelligent Switch

with Layer 4-7

Layer 4-7

Appliance

Applications Layer 4-7 Services

Network Controller

SDN Control Software

Southbound API

Northbound APIs

1

2

3

NETRONOME WHITEPAPER: Software-Defned Network Functions Virtualization (NFV) 3

3. Full Layer 4-7 network services running on an intelligent

switch

Intelligent switch becomes Layer 2-7 device

E.g., IPS/IDS

Note: Any network device in the infrastructure layer,

including network security devices and gateways, are referred

to as switches.

Combining NFV and SDN

Table 1 depicts how SDN & NFV are complementary. SDN can

be used to interconnect the many VM-based end points in

NFV platfroms.

Figure 4 depicts a typical deployment scenario in the data

center. The SDN fabric focus is on fast L2 / L3 forwarding, while

the application servers, appliances and gateways are intelli-

gent edge devices, optionally architected using NFV concepts

(i.e., COTS server architecture).

Figure 4. This fgure depicts the synergy between SDN and NFV in the

data center.

Table 1. SDN and NFV are complementary and synergistic.

Figure 5. NFV reference architecture framework depicts interfaces between the various

functional blocks. (Source: ISG NFV architecture and framework document.)

From an architectural model perspec-

tive, SDN can be integrated into the

NFV architecture framework model

shown in Figure 5. The orchestration

and the management applications

can themselves be VNFs running on

one or more VMs. In such cases, the

OpenFlow protocol is part of the Nf-Vi

interface; or it can be part of the Vn-Nf

interface.

NFV and SDN can be viewed

as synergistic and complementary.

When deployed together, network

equipment can be built using virtual-

ized COTS server architecture, while

the overall network is controlled

through SDN. The resulting network

of NFV equipment can be dynamically

reconfgured, based on applications

and trafc patterns. In addition, the

SDN controller can steer trafc from

one network equipment to another if

required. An example can be a legacy

frewall that is dynamically inserted

in the trafc path when network

monitoring statistics show abnormal-

ity in the trafc.

Architecture Implementation

SDN

aSoftware-based

architecture

aAbout network

virtualization

aNetwork-aware

applications

aFast OpenFlow switches

(L2-L4)

aCentralized controller with

birds-eye view of the network

aMiddleboxes and gateways

(L4-L7)

NFV

aAbout network

equipment

virtualization

aUses COTS server

architecture

aSDN to control

VM connectivity

aVirtualized appliances (L4-L7

middleboxes and gateways)

aNetwork equipment

implemented using server

architecture

aOfoad /acceleration,

when needed

NFV is about virtualizing appliances.

SDN is about virtualizing the network.

APP

NFV

APP

NFV

GW

NFV

OpenFlow

Controller

APP

APP

APP APP

NFV

NFV NFV

Application

Servers

Security

Middlebox

Gateway

Server

NFV NFV

SDN Fabric

Virtual

Computing

Virtual

Storage

Virtualization Layer

Hardware Resources

Virtual

Network

VNF 1

NFVI

VI-Ha

VNF 2 VNF 3

OSS/BSS

Vf-Vi

Orchestrator

NFV Management

and Orchestration

VNF

Manager(s)

Virtualized

Infrastructure

Manager(s)

Service, VNF and

Infrastructure Description

Computing

Hardware

Storage

Hardware

Network

Hardware

EMS 1 EMS 2 EMS 3

Execution reference points Other reference points Main NFV reference points

Vn-Nf

Figure 5. ETSI ISG NFV reference framework architecture diagram.

NETRONOME WHITEPAPER: Software-Defned Network Functions Virtualization (NFV) 4

Service Chaining: Example of SDN-NFV

Working Together

With NFV, the server platform hosts the various network func-

tions (applications and services) on its VMs. Some VNFs need a

single VM, while others may require multiple VMs to meet

their performance target. Allocating a number of VMs to a

specifc VNF is best done dynamically through a management

and orchestration entity that can be either local or remote.

In addition, the resulting large number of VM-based end points

will need to be interconnected in a dynamic fashion based on

network topology and network trafc.

One advantage of SDN-based NFV is simplifying the ability

to implement service chaining of multiple VNFs on the same

platform. The orchestration layer can dynamically defne a series

of services to be implemented on the virtualized platform based

on intelligent information gathered from the network. An exam-

ple of a chain includes a frewall followed by a load balancer,

which, in turn, is followed by a WAN optimization function.

Netronome Strategy, Architecture and Products

Netronome ofers integrated solutions for NFV & SDN, adhering

to the Open COTS server architecture. Today, the Netronome

architecture uses a three-tier processing architecture (Figure 6)

based on two diferent processing chips. When migrating to

the new NFP-6xxx Flow Processor, which combines both

packet and fow processing on one-chip, this architecture will

be reduced to only two chips.

Tier 1

Application / Control Plane Processing

Deep Packet Inspection / Application ID

Content Inspection, Behavioral Heuristics

Forensics, PCRE, Analytics

Tier 2 & 3: Packet & Flow Processing

Packet Classifcation / Filtering

L2-L4 Packet Classifcation

Stateful Flow Processing

Cryptography / PKI

Flow-based, Dynamic Load Balancing

L2 Switching, L3 Routing, NAPT

IPsec / SSL VPNs

SSL Inspection

4x100, 12x40, 48x10 GigE I/O

The Netronome FlowNIC

Netronomes fow processor-based NICs,

or FlowNICs (Figures 7a, 7b), tightly

coupled to multi-socket (up to four) x86

CPUs over a standard PCIe3 interface,

provides an ideal component to extend

a COTS server architecture to support

both SDN and NFV.

With 216 programmable packet

and fow processing cores, it caters to

over 400 Gbps of networking I/O, while

relaying 200 Gbps to up to four x86

devices. The result is one universal

hardware design that can meet not only

DPI and network analytics applications,

but also frewall and load balancing

applications.

Figure 7a. The

intellignet FlowNIC

is an ofoad/

acceleration card

to multi-socket

multi-core x86

Figure 7b. Netronomes NFP-6xxx contains 216 programmable packet

and fow processing cores, supporting multi-100s Gbps on both the

network I/O side and the x86 side.

FlowNIC

VM

VM

M VM

MM VM

VM

VM

M VM

MM VM

VM

VM

M VM

MM VM

VM

VM

M VM

MM VM

PCIe3 4x8

Multicore

CPUs

x86

x86

x86

x86

Optics

20x10G

4x40G

2x100G

Optics

G

G

VM

VM

M VM

MM VM

VM

VM

M VM

MM VM

VM

VM

M VM

MM VM

VM

VM

M VM

MM VM

VM

VM

VMM

M VM

M

MMMM

M VM

VM

VM

VM

VM

VMM

M VM

M

MMMM

M VM

VM

VM

VM

VM

VM M

M VM

M

MMMM

M VM

VM

VM

VM

VM

VM M

M VM

M

MMMM

M VM

Netronome Flow Processing

Application and Control Plane Processing

vNIC0 vNIC1 vNIC255

x86 CPU

Load Balancing

L2-L7 Flow

Processing

L2-L4 Packet

Processing

200Gbps L2-L7

Flow Processing per NFP

Network Modules with Integrated Bypass

8x10 GigE 8x10 GigE 2x100 GigE 2x100 GigE 4x40 GigE 4x40 GigE

Figure 6.

A three-tier processing

architecture is ideal

for high-performance

workloads.

NFV Platform and SDN Gateways

The software-defned NFV platform (Figure 8a) is an open

platform for hosting various virtual network functions (VNFs)

as standalone or in a service chain confguration. The platform

integrates multi-core x86 with Netronome ofoad and acceler-

ation card(s) and software. The integrated system can be fully

virtualized.

The NFV platform can be used in the following applications:

1. NFV / Middlebox platform

2. Host various applications, such as fre wall, IPS/IDS and load

balancing (VNF as a Service)

3. As a NFV Infrastructure as a Service (IaaS)

4. Accelerated Open vSwitch platform

The SDN gateway is an integrated hardware / software

solution focusing on the gateway function between a private or

public cloud and the WAN. It is also ideal for interconnecting

geographically disparate enterprises over the WAN.

As shown in Figure 8b, the SDN Gateway reference platform

can be used as:

1. Multi-tenant DC to MPLS WAN, under SDN OpenFlow 1.3

control

2. Enterprise-to-enterprise connectivity over the WAN

under SDN OpenFlow 1.3 control

3. Accelerated (OpenVSwitch) OVS with OVSDB and OF-Confg

support

NETRONOME WHITEPAPER: Software-Defned Network Functions Virtualization (NFV) 5

MPLS WAN

SDN OpenFlow

Controller

O

p

e

n

F

l

o

w

1

.

3

.

x

O

p

e

n

F

l

o

w

1

.

3

.

x

OF-Config,

OVSDB

OpenFlow Gateway

Multi-tenant

Private DC

Public DC

OF-Config,

OVSDB

Orchestrator

Figure 8a. The 1U reference design platform combines hosted

VNF applications under SDN OpenFlow 1.3 control.

Challenges Ahead

The latest industry eforts in drafting NFV requirements, and

its strategy to solicit Proof of Concepts, or PoCs, will trigger

multi-vendor interoperable implementations aligning with

specifc operator use cases. It remains to be seen how SDN

can be integrated in such PoCs and how these can meet the

challenges in scalability, low-latency and reliability. In any

case, SDN-OpenFlow needs to better address control of inline

L4-L7 devices, as these will be based on NFV implementations.

Netronome has operations in:

USA (Santa Clara [HQ], Pittsburgh & Boston), UK (Cambridge), Malaysia (Penang), South Africa (Centurion) and China (Shenzhen, Hong Kong)

info@netronome.com 877.638.7629 netronome.com

Netronome is a registered trademark, and the Netronome Logo and The Flow Processing Companyare trademarks of Netronome Systems, Inc.

All other trademarks are the property of their respective owners. 2013 Netronome Systems, Inc. All rights reserved. Specifcations are subject to change without notice. (11-13)

Summary

SDN and NFV are two key infection points addressing

complementary goals: NFV is about network equipment

virtualization, while SDN is about network virtualization.

Netronome is capitalizing on both key networking trends by

ofering turnkey reference platforms addressing both SDN

and NFV simultaneously, while meeting the latest OpenFlow

protocol and NFV infrastructure domain requirements.

Figure 8b. Intelligent gateway connecting multi-tenant data center

to data center over a legacy MPLS WAN.

You might also like

- ONAP Orchestration Architecture Use Cases WP Feb 2018Document11 pagesONAP Orchestration Architecture Use Cases WP Feb 2018radhia saidaneNo ratings yet

- MIPI DPI Specification v2Document34 pagesMIPI DPI Specification v2Dileep ChanduNo ratings yet

- Software Defined Networking (SDN) - a definitive guideFrom EverandSoftware Defined Networking (SDN) - a definitive guideRating: 2 out of 5 stars2/5 (2)

- Electronics Manual CNCDocument219 pagesElectronics Manual CNCVojkan Milenovic100% (4)

- Penetration Testing in Wireless Networks: Shree Krishna LamichhaneDocument51 pagesPenetration Testing in Wireless Networks: Shree Krishna LamichhanedondegNo ratings yet

- Logitech Z 5500 Service Manual: Read/DownloadDocument2 pagesLogitech Z 5500 Service Manual: Read/DownloadMy Backup17% (6)

- Software-Defined Networks: A Systems ApproachFrom EverandSoftware-Defined Networks: A Systems ApproachRating: 5 out of 5 stars5/5 (1)

- Telco Cloud - 02. Introduction To NFV - Network Function VirtualizationDocument10 pagesTelco Cloud - 02. Introduction To NFV - Network Function Virtualizationvikas_sh81No ratings yet

- An Introduction To Software Defined NetworkingDocument9 pagesAn Introduction To Software Defined NetworkingPhyo May ThetNo ratings yet

- Software Defined Network and Network Functions VirtualizationDocument16 pagesSoftware Defined Network and Network Functions VirtualizationSaksbjørn Ihle100% (1)

- Chapter 1 DBMSDocument32 pagesChapter 1 DBMSAishwarya Pandey100% (1)

- Introduction To NFVDocument48 pagesIntroduction To NFVMohamed Abdel MonemNo ratings yet

- CH 29 Multimedia Multiple Choice Questions Ans Answers PDFDocument9 pagesCH 29 Multimedia Multiple Choice Questions Ans Answers PDFAnam SafaviNo ratings yet

- Chapter 5 SDN Control PlaneDocument22 pagesChapter 5 SDN Control Planerhouma rhouma100% (1)

- OpenStack Foundation NFV ReportDocument24 pagesOpenStack Foundation NFV ReportChristian Tipantuña100% (1)

- Telco Cloud - 03. Introduction To SDN - Software Defined NetworkDocument8 pagesTelco Cloud - 03. Introduction To SDN - Software Defined Networkvikas_sh81No ratings yet

- SDN Controller Mechanisms for Flexible NetworkingDocument9 pagesSDN Controller Mechanisms for Flexible NetworkingSamNo ratings yet

- SDN-OpenFlow Position Statement v2 - May 2012Document10 pagesSDN-OpenFlow Position Statement v2 - May 2012SnowInnovatorNo ratings yet

- How To Install OVS On Pi PDFDocument8 pagesHow To Install OVS On Pi PDFSeyhaSunNo ratings yet

- SB SDN NVF Solution PDFDocument12 pagesSB SDN NVF Solution PDFabdollahseyedi2933No ratings yet

- Network Virtualization and SDN/NFV - 2 DaysDocument1 pageNetwork Virtualization and SDN/NFV - 2 DaysArmen AyvazyanNo ratings yet

- Survey of Software-Defined Networks and Network Function Virtualization (NFV)Document6 pagesSurvey of Software-Defined Networks and Network Function Virtualization (NFV)Nayera AhmedNo ratings yet

- WP Software Defined NetworkingDocument8 pagesWP Software Defined Networkingmore_nerdyNo ratings yet

- Accelerating NFV Delivery With OpenstackDocument24 pagesAccelerating NFV Delivery With OpenstackAnonymous SmYjg7gNo ratings yet

- Ashton Metzler Associates Ten Things To Look For in An SDN ControllerDocument14 pagesAshton Metzler Associates Ten Things To Look For in An SDN ControllerLeon HuangNo ratings yet

- Software-Defined Networking: A SurveyDocument17 pagesSoftware-Defined Networking: A SurveyVilaVelha CustomizeNo ratings yet

- D C: Partitioning OSPF Networks With SDN: Ivide and OnquerDocument8 pagesD C: Partitioning OSPF Networks With SDN: Ivide and OnquerEng AbdelRhmanNo ratings yet

- FINAL ChapterDocument38 pagesFINAL ChapterMinaz MinnuNo ratings yet

- 4th UnitDocument8 pages4th UnitCh Shanthi PriyaNo ratings yet

- Towards Carrier Grade SDNsDocument20 pagesTowards Carrier Grade SDNs史梦晨No ratings yet

- An Intelligent Framework For Vehicular Ad Hoc Networks Using SDN ArchitectureDocument5 pagesAn Intelligent Framework For Vehicular Ad Hoc Networks Using SDN ArchitectureflorianNo ratings yet

- Future Internet: Software-Defined Networking Using Openflow: Protocols, Applications and Architectural Design ChoicesDocument35 pagesFuture Internet: Software-Defined Networking Using Openflow: Protocols, Applications and Architectural Design ChoicesIrbaz KhanNo ratings yet

- Ethanol: SDN for 802.11 Wireless NetworksDocument9 pagesEthanol: SDN for 802.11 Wireless NetworksnyenyedNo ratings yet

- Samson BADocument9 pagesSamson BASamNo ratings yet

- Design and Implementation of Dynamic Load Balancer On Openflow Enabled SdnsDocument10 pagesDesign and Implementation of Dynamic Load Balancer On Openflow Enabled SdnsIOSRJEN : hard copy, certificates, Call for Papers 2013, publishing of journalNo ratings yet

- Analysis of Network Function Virtualization and Software Defined VirtualizationDocument5 pagesAnalysis of Network Function Virtualization and Software Defined Virtualizationsaskia putri NabilaNo ratings yet

- SDN Network Management with Huawei SolutionsDocument7 pagesSDN Network Management with Huawei SolutionsdarnitNo ratings yet

- Course Title:-Advanced Computer Networking Group Presentation On NFV FunctionalityDocument18 pagesCourse Title:-Advanced Computer Networking Group Presentation On NFV FunctionalityRoha CbcNo ratings yet

- SDN-MaterialDocument5 pagesSDN-MaterialTØXÏC DHRUVNo ratings yet

- Assignment: Software Defined Networking (SDN)Document12 pagesAssignment: Software Defined Networking (SDN)Rosemelyne WartdeNo ratings yet

- Automation Configuration of Networks by Using Software Defined Networking (SDN)Document6 pagesAutomation Configuration of Networks by Using Software Defined Networking (SDN)nassmahNo ratings yet

- Y Performance Evaluation of Software Defined Networking Controllers A Comparative StudyDocument12 pagesY Performance Evaluation of Software Defined Networking Controllers A Comparative StudyTuan Nguyen NgocNo ratings yet

- SDNDocument11 pagesSDNForever ForeverNo ratings yet

- SDN: Management and Orchestration Considerations: White PaperDocument13 pagesSDN: Management and Orchestration Considerations: White PaperTaha AlhatmiNo ratings yet

- What Is Software Defined Network (SDN) ? AnsDocument52 pagesWhat Is Software Defined Network (SDN) ? AnsAriful HaqueNo ratings yet

- Network Function VirtualisationDocument11 pagesNetwork Function VirtualisationDestinNo ratings yet

- SDNand NFVintegrationin Openstack CloudDocument7 pagesSDNand NFVintegrationin Openstack Cloudyekoyesew100% (1)

- Modern Networking Requirements and SDN ArchitectureDocument32 pagesModern Networking Requirements and SDN ArchitecturekavodamNo ratings yet

- NFVDocument4 pagesNFVAnonymous uG4WhXbSeUNo ratings yet

- Current Industry Trends: Open TranscriptDocument8 pagesCurrent Industry Trends: Open Transcriptasdf01220No ratings yet

- What is SDN? Software Defined Networking explainedDocument8 pagesWhat is SDN? Software Defined Networking explainedAvneesh PalNo ratings yet

- Demystifying NFV in Carrier NetworksDocument8 pagesDemystifying NFV in Carrier NetworksGabriel DarkNo ratings yet

- Constraint programming for flexible SFC deployment in NFV/SDN networksDocument10 pagesConstraint programming for flexible SFC deployment in NFV/SDN networksMario DMNo ratings yet

- CN TheoryDocument17 pagesCN TheoryJaya Ragha Charan Reddy GaddamNo ratings yet

- SDNDocument14 pagesSDNSara Nieves Matheu GarciaNo ratings yet

- Document 2Document8 pagesDocument 2Gassara IslemNo ratings yet

- Galluccio 2015Document9 pagesGalluccio 2015dark musNo ratings yet

- Network Functions Virtualization: by William Stallings Etwork Functions Virtualization (NFV) Originated From DisDocument13 pagesNetwork Functions Virtualization: by William Stallings Etwork Functions Virtualization (NFV) Originated From DisDomenico 'Nico' TaddeiNo ratings yet

- Access Control NFV Enforcement at The Ehu-Openflow Enabled FacilityDocument3 pagesAccess Control NFV Enforcement at The Ehu-Openflow Enabled FacilityEdmond NurellariNo ratings yet

- Open-Source Network Optimization Software in The Open SDN/NFV Transport EcosystemDocument14 pagesOpen-Source Network Optimization Software in The Open SDN/NFV Transport EcosystemAldo Setyawan JayaNo ratings yet

- SDN Mobile RANDocument6 pagesSDN Mobile RANAbr Setar DejenNo ratings yet

- Kreutz Et Al.: Software-Defined Networking: A Comprehensive SurveyDocument19 pagesKreutz Et Al.: Software-Defined Networking: A Comprehensive SurveyKimberley Rodriguez LopezNo ratings yet

- 08b - NFVDocument36 pages08b - NFVturturkeykey24No ratings yet

- Implementing NDN Using SDN: A Review of Methods and ApplicationsDocument10 pagesImplementing NDN Using SDN: A Review of Methods and ApplicationsVilaVelha CustomizeNo ratings yet

- 04 Client Servertechnology BasicDocument42 pages04 Client Servertechnology BasicS M Shamim শামীমNo ratings yet

- Network Function VirtualizationDocument9 pagesNetwork Function VirtualizationFateh AlimNo ratings yet

- VHDL Examples for Odd Parity, Pulse, and Priority CircuitsDocument55 pagesVHDL Examples for Odd Parity, Pulse, and Priority Circuitssaketh rNo ratings yet

- AWS Certified DevOps Engineer Professional Exam BlueprintDocument3 pagesAWS Certified DevOps Engineer Professional Exam BlueprintVikas SawnaniNo ratings yet

- Terminal IDEDocument1 pageTerminal IDErenjivedaNo ratings yet

- PrintersDocument5 pagesPrintersnithinnikilNo ratings yet

- Ase Sag 1 PDFDocument376 pagesAse Sag 1 PDFZack HastleNo ratings yet

- hw2 AnsDocument5 pageshw2 AnsJIBRAN AHMEDNo ratings yet

- Opennebula Instal StepsDocument23 pagesOpennebula Instal StepsKarthikeyan PurusothamanNo ratings yet

- Tridium: Open Integrated SolutionsDocument16 pagesTridium: Open Integrated SolutionsAsier ErrastiNo ratings yet

- Electronics A to D D to A Converters GuideDocument51 pagesElectronics A to D D to A Converters GuidesoftshimNo ratings yet

- DBAN TutorialDocument5 pagesDBAN TutorialChristian CastañedaNo ratings yet

- GoToMeeting Organizer QuickRef GuideDocument5 pagesGoToMeeting Organizer QuickRef GuideWin Der LomeNo ratings yet

- Game Resource Generator Guide-1Document6 pagesGame Resource Generator Guide-1Reev B Ulla0% (2)

- Sis 5582Document222 pagesSis 5582Luis HerreraNo ratings yet

- Ult Studio Config 71Document18 pagesUlt Studio Config 71JoséGuedesNo ratings yet

- UPC-F12C-ULT3 Web 20190315Document3 pagesUPC-F12C-ULT3 Web 20190315Tran DuyNo ratings yet

- 8051 Microcontroller Based RFID Car Parking SystemDocument4 pages8051 Microcontroller Based RFID Car Parking SystemRahul AwariNo ratings yet

- Zentyal As A Gateway - The Perfect SetupDocument8 pagesZentyal As A Gateway - The Perfect SetupmatNo ratings yet

- Java-Dll Week 4Document2 pagesJava-Dll Week 4Rhexel ReyesNo ratings yet

- Home Theatre System: HT-DDW870Document60 pagesHome Theatre System: HT-DDW870felipeecorreiaNo ratings yet

- Quick Start Guide - Barco - Encore E2Document2 pagesQuick Start Guide - Barco - Encore E2OnceUponAThingNo ratings yet

- nanoPEB-SIO V1Document10 pagesnanoPEB-SIO V1Robert NilssonNo ratings yet

- Installation Procedure For Linux: $sudo LsusbDocument2 pagesInstallation Procedure For Linux: $sudo LsusbRai Kashif JahangirNo ratings yet

- Product Data Sheet 6ES7214-1AD23-0XB0Document6 pagesProduct Data Sheet 6ES7214-1AD23-0XB0MancamiaicuruNo ratings yet

- IBM Director Planning, Installation, and Configuration GuideDocument448 pagesIBM Director Planning, Installation, and Configuration GuideshektaNo ratings yet