Process Management, Threads, Process Scheduling - Operating Systems

Uploaded by Mukesh

Chapters 3 & 5

Process Concept & Process Scheduling

OPERATING SYSTEMS

Prescribed Text Book: Operating System Principles, Seventh Edition, by Abraham Silberschatz, Peter Baer Galvin and Greg Gagne

Aslesha L. Akkineni, Assistant Professor, CSE, VNR VJIET


PROCESS MANAGEMENT

Present-day computer systems allow multiple programs to be loaded into memory and executed concurrently. A process is a program in execution, and it is the unit of work in a modern time-sharing system. A system is therefore a collection of processes: operating system processes executing system code and user processes executing user code. By switching the CPU between processes, the operating system can make the computer more productive.

Overview

A batch system executes jobs, whereas a time-shared system has user programs or tasks. Even on a single-user system, a user may be able to run several programs at one time: a word processor, a web browser and an e-mail package. All of these are called processes.

The Process

A process is a program in execution. It is more than the program code, which is sometimes known as the text section. It also includes the current activity, as represented by the value of the program counter and the contents of the processor's registers. A process generally also includes the process stack, which contains temporary data, and a data section, which contains global variables. A process may also include a heap, which is memory that is dynamically allocated during process run time.

A program by itself is not a process. A program is a passive entity, such as a file containing a list of instructions stored on disk (called an executable file), whereas a process is an active entity with a program counter specifying the next instruction to execute and a set of associated resources. A program becomes a process when an executable file is loaded into memory. Although two processes may be associated with the same program, they are considered two separate execution sequences.

Process State

As a process executes, it changes state. The state of a process is defined in part by the current activity of that process. Each process may be in one of the following states:


New: The process is being created.
Running: Instructions are being executed.
Waiting: The process is waiting for some event to occur.
Ready: The process is waiting to be assigned to the processor.
Terminated: The process has finished execution.

Process Control Block

Each process is represented in the operating system by a process control block (PCB), also called a task control block. It contains many pieces of information associated with a specific process, including:

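Process state: The state may be new, ready, running, waiting, halted and so on.
Program counter: The counter indicates the address of the next instruction to be executed for this process.
CPU registers: The registers vary in number and type depending on the computer architecture. Along with the program counter, this state must be saved when an interrupt occurs so that the process can be continued correctly afterward.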


CPU scheduling information: This includes the process priority, pointers to scheduling queues and other scheduling parameters.
Memory management information: This may include the values of the base and limit registers, among other items.
Accounting information: This includes the amount of CPU and real time used, time limits and so on.
I/O status information: This includes the list of I/O devices allocated to the process and so on.

Threads

The process model described so far assumes that a process is a program that performs a single thread of execution. A single thread of control allows the process to perform only one task at a time.

Process Scheduling

The objective of multiprogramming is to have some process running at all times, to maximize CPU utilization. The objective of time sharing is to switch the CPU among processes so frequently that users can interact with each program while it is running. To meet these objectives, the process scheduler selects an available process for execution on the CPU. On a single-processor system, there will never be more than one running process.


Scheduling Queues

As processes enter the system, they are put into the job queue, which consists of all processes in the system. The processes that are residing in main memory and are ready and waiting to execute are kept on a list called the ready queue. This queue is generally stored as a linked list. A ready queue header contains pointers to the first and final PCBs in the list. Each PCB includes a pointer field that points to the next PCB in the ready queue.

When a process is allocated the CPU, it executes for a while and eventually quits, is interrupted or waits for the occurrence of a particular event such as the completion of an I/O request. The list of processes waiting for a particular I/O device is called a device queue. Each device has its own device queue.
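The linked-list layout of the ready queue can be sketched in a few lines of Python. This is only an illustration: the PCB class, its fields and the ReadyQueue method names are hypothetical and carry far less information than a real PCB.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PCB:
    """Hypothetical, stripped-down process control block."""
    pid: int
    state: str = "ready"
    next: Optional["PCB"] = None  # pointer field to the next PCB in the queue


class ReadyQueue:
    """Singly linked list of PCBs; the header keeps head and tail pointers."""

    def __init__(self) -> None:
        self.head: Optional[PCB] = None
        self.tail: Optional[PCB] = None

    def enqueue(self, pcb: PCB) -> None:
        """Link a PCB onto the tail of the queue."""
        pcb.next = None
        pcb.state = "ready"
        if self.tail is None:
            self.head = pcb          # queue was empty
        else:
            self.tail.next = pcb
        self.tail = pcb

    def dequeue(self) -> Optional[PCB]:
        """Unlink and return the PCB at the head, or None if the queue is empty."""
        pcb = self.head
        if pcb is None:
            return None
        self.head = pcb.next
        if self.head is None:
            self.tail = None         # queue became empty
        pcb.next = None
        return pcb

    def pids(self) -> list:
        """Follow the next pointers and collect pids, head first."""
        out, node = [], self.head
        while node is not None:
            out.append(node.pid)
            node = node.next
        return out
```

Keeping both a head and a tail pointer in the header makes adding at the tail and removing at the head constant-time operations.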

A common representation for process scheduling is a queuing diagram. Each rectangular box represents a queue. Two types of queues are present: the ready queue and a set of device queues. Circles represent the resources that serve the queues, and the arrows indicate the flow of processes in the system.


A new process is initially put in the ready queue. It waits there until it is selected for execution, or dispatched. Once the process is allocated the CPU and is executing, one of the following events might occur:
a) The process could issue an I/O request and then be placed in an I/O queue.
b) The process could create a new subprocess and wait for the subprocess's termination.
c) The process could be removed forcibly from the CPU as a result of an interrupt and be put back in the ready queue.

Schedulers

A process migrates among the various scheduling queues throughout its lifetime. For scheduling purposes, the OS must select processes from these queues, and this selection is carried out by the appropriate scheduler.

In a batch system, more processes are submitted than can be executed immediately. These processes are spooled to a mass storage device (disk), where they are kept for later execution. The long-term scheduler, or job scheduler, selects processes from this pool and loads them into memory for execution. The short-term scheduler, or CPU scheduler, selects from among the processes that are ready to execute and allocates the CPU to one of them.

The primary distinction between these two schedulers lies in the frequency of execution: the short-term scheduler must select a new process for the CPU frequently, whereas the long-term scheduler executes much less often. The long-term scheduler controls the degree of multiprogramming (the number of processes in memory).

Most processes can be described as either I/O bound or CPU bound. An I/O-bound process spends more of its time doing I/O than doing computations. A CPU-bound process generates I/O requests infrequently, using more of its time doing computations. The long-term scheduler must select a good mix of I/O-bound and CPU-bound processes; a system with the best performance will have a combination of both.

Some operating systems, such as time-sharing systems, may introduce an additional, intermediate level of scheduling. The idea behind a medium-term scheduler is that sometimes it can be advantageous to remove processes from memory and thus reduce the degree of multiprogramming. Later, the process can be reintroduced into memory and its execution can be continued where it left off. This scheme is called swapping: the process is swapped out and is later swapped in by the medium-term scheduler.


Context switch

Interrupts cause the OS to change a CPU from its current task and to run a kernel routine. When an interrupt occurs, the system needs to save the current context of the process running on the CPU so that it can restore that context when its processing is done, essentially suspending the process and then resuming it. The context is represented in the PCB of the process; it includes the values of the CPU registers, the process state and memory management information. In general, we perform a state save of the current state of the CPU and then a state restore to resume operations.

Switching the CPU to another process requires performing a state save of the current process and a state restore of a different process. This task is known as a context switch. The kernel saves the context of the old process in its PCB and loads the saved context of the new process scheduled to run. Context switch time is pure overhead, because the system does no useful work while switching. Its speed varies from machine to machine, depending on the memory speed, the number of registers that must be copied and the existence of special instructions. Context switch times are highly dependent on hardware support.
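The state save and state restore can be pictured with a toy model. The sketch below is an assumption-laden illustration, not how any kernel does it: the CPU and PCB classes are hypothetical, the register file is a plain dictionary with three made-up registers, and the outgoing process is always marked ready (as after a timer interrupt) rather than waiting.

```python
from dataclasses import dataclass, field


@dataclass
class PCB:
    """Hypothetical PCB holding only the saved register context and a state."""
    pid: int
    state: str = "ready"
    registers: dict = field(default_factory=lambda: {"pc": 0, "sp": 0, "acc": 0})


class CPU:
    """Toy CPU: a register file plus the PCB of whatever is running."""

    def __init__(self) -> None:
        self.registers = {"pc": 0, "sp": 0, "acc": 0}
        self.current = None

    def context_switch(self, new: PCB) -> None:
        old = self.current
        if old is not None:
            # State save: copy the live registers into the old process's PCB.
            old.registers = dict(self.registers)
            old.state = "ready"  # assumed: preempted, not blocked on I/O
        # State restore: load the saved context of the incoming process.
        self.registers = dict(new.registers)
        new.state = "running"
        self.current = new
```

Nothing in context_switch advances either process, which is the sense in which the switch is pure overhead.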


PROCESS SCHEDULING

A process is an executing program with a single thread of control. Most modern operating systems provide features enabling a process to contain multiple threads of control. A thread is a basic unit of CPU utilization; it comprises a thread ID, a program counter, a register set and a stack. It shares with other threads belonging to the same process its code section, data section and other operating system resources such as open files and signals. A traditional, or heavyweight, process has a single thread of control.

Benefits of multithreaded programming are:
Responsiveness: Multithreading an interactive application may allow a program to continue running even if part of it is blocked or is performing a lengthy operation, thereby increasing responsiveness to the user.
Resource sharing: Threads share the memory and the resources of the process to which they belong. The benefit of sharing code and data is that it allows an application to have several different threads of activity within the same address space.
Economy: Allocating memory and resources for process creation is costly. Because threads share the resources of the process to which they belong, it is more economical to create and context switch threads.


Utilization of multiprocessor architecture: The benefits of multithreading can be greatly increased in a multiprocessor architecture, where threads may be running in parallel on different processors. Multithreading on a multi-CPU machine increases concurrency.

User threads are supported above the kernel and are managed without kernel support, whereas kernel threads are supported and managed directly by the operating system.

CPU scheduling is the basis of multiprogrammed operating systems. By switching the CPU among processes, the OS can make the computer more productive. On operating systems that support threads, it is kernel-level threads, not processes, that are scheduled by the operating system. However, the terms process scheduling and thread scheduling are often used interchangeably.

In a single-processor system, only one process can run at a time; others must wait until the CPU is free and can be rescheduled. The objective of multiprogramming is to have some process running at all times, to maximize CPU utilization. Under multiprogramming, several processes are kept in memory at one time. When one process has to wait, the OS takes the CPU away from that process and gives the CPU to another process. As the CPU is one of the primary computer resources, its scheduling is central to operating system design.

CPU I/O burst cycle: Process execution consists of a cycle of CPU execution and I/O wait. Processes alternate between these two states. Process execution begins with a CPU burst, followed by an I/O burst, then another CPU burst and so on. The final CPU burst ends with a system request to terminate execution.


An I/O bound program has many short CPU bursts. A CPU bound program might have a few long CPU bursts.

CPU scheduler

Whenever the CPU becomes idle, the operating system must select one of the processes in the ready queue to be executed. The selection process is carried out by the short term scheduler or CPU scheduler. The scheduler selects a process from the processes in memory that are ready to execute and allocates the CPU to that process. The ready queue is not necessarily a first in first out queue. A ready queue can be implemented as a FIFO queue, a priority queue, a tree or an unordered linked list. All the processes in the ready queue are lined up waiting for a chance to run on the CPU. The records in the queue are process control blocks of the processes.

Pre-emptive scheduling

CPU scheduling decisions may take place under the following four conditions:
a) When a process switches from the running state to the waiting state
b) When a process switches from the running state to the ready state
c) When a process switches from the waiting state to the ready state
d) When a process terminates

When scheduling takes place only under conditions a) and d), the scheduling scheme is non-preemptive or cooperative; otherwise it is preemptive. Under non-preemptive scheduling, once the CPU has been allocated to a process, the process keeps the CPU until it releases it either by terminating or by switching to the waiting state. Preemptive scheduling incurs a cost associated with access to shared data: a process may be preempted while it is updating data that another process then reads in an inconsistent state. Preemption also affects the design of the operating system kernel.

Dispatcher

The dispatcher is the module that gives control of the CPU to the process selected by the short-term scheduler. This function involves:
a) Switching context
b) Switching to user mode
c) Jumping to the proper location in the user program to restart that program


The dispatcher should be as fast as possible, since it is invoked during every process switch. The time it takes for the dispatcher to stop one process and start another running is called the dispatch latency.

Scheduling criteria

Different CPU scheduling algorithms have different properties. Criteria for comparing CPU scheduling algorithms include:
a) CPU utilization: Keep the CPU as busy as possible.
b) Throughput: One measure of work is the number of processes that are completed per time unit, called throughput.
c) Turnaround time: The interval from the time of submission of a process to the time of completion is the turnaround time. Turnaround time is the sum of the periods spent waiting to get into memory, waiting in the ready queue, executing on the CPU and doing I/O.
d) Waiting time: The sum of the periods spent waiting in the ready queue.
e) Response time: The time from the submission of a request until the first response is produced. It is the time it takes to start responding, not the time it takes to output the response.

It is desirable to maximize CPU utilization and throughput and to minimize turnaround time, waiting time and response time.

Scheduling algorithms

First Come First Serve: This is the simplest CPU scheduling algorithm. The process that requests the CPU first is allocated the CPU first. The implementation of FCFS is managed with a FIFO queue. When a process enters the ready queue, its PCB is linked onto the tail of the queue. When the CPU is free, it is allocated to the process at the head of the queue. The running process is then removed from the queue. The average waiting time under FCFS is often quite long. The FCFS scheduling algorithm is non pre-emptive.


Once the CPU has been allocated to a process, that process keeps the CPU until it releases the CPU either by terminating or by requesting I/O. FCFS is simple and fair, but its performance is often poor, and the average queuing time may be long.
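A minimal FCFS simulation makes the waiting and turnaround definitions concrete. The workload here is hypothetical (four single-burst processes arriving at times 0, 1, 2, 3 with bursts 8, 4, 9, 5), and the Process tuple and function names are made up for the illustration.

```python
from collections import namedtuple

Process = namedtuple("Process", "name arrival burst")


def fcfs(processes):
    """Return {name: (start, completion)} under non-preemptive FCFS."""
    clock = 0
    schedule = {}
    # sorted() is stable, so processes arriving together keep their input order.
    for p in sorted(processes, key=lambda p: p.arrival):
        start = max(clock, p.arrival)  # the CPU may idle until the process arrives
        clock = start + p.burst
        schedule[p.name] = (start, clock)
    return schedule


def averages(processes, schedule):
    """Average waiting and turnaround time for a single-burst workload."""
    n = len(processes)
    # With one burst and no preemption, ready-queue time is start - arrival.
    waiting = sum(schedule[p.name][0] - p.arrival for p in processes) / n
    turnaround = sum(schedule[p.name][1] - p.arrival for p in processes) / n
    return waiting, turnaround


# Hypothetical workload, chosen only for illustration.
workload = [Process("P1", 0, 8), Process("P2", 1, 4),
            Process("P3", 2, 9), Process("P4", 3, 5)]
schedule = fcfs(workload)
avg_wait, avg_turnaround = averages(workload, schedule)
print(schedule)
print(avg_wait, avg_turnaround)
```

For this workload the processes complete at times 8, 12, 21 and 26. The mean of completion minus arrival is 61/4 = 15.25, while the mean time spent in the ready queue alone is 35/4 = 8.75.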

Average wait = ((8 - 0) + (12 - 1) + (21 - 2) + (26 - 3)) / 4 = 61/4 = 15.25

Shortest Job First: This algorithm associates with each process the length of the process's next CPU burst. When the CPU is available, it is assigned to the process that has the smallest next CPU burst. If the next CPU bursts of two processes are the same, FCFS scheduling is used to break the tie. The length of the next burst can be predicted from previous bursts:

$$t(n+1) = w \cdot t(n) + (1 - w) \cdot T(n)$$

Here:
t(n+1) is the time of the next burst.
t(n) is the time of the current burst.
T(n) is the average of all previous bursts.
w is a weighting factor emphasizing current or previous bursts.
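The prediction formula can be applied burst by burst. The sketch below assumes the usual exponential-average reading, in which each prediction is carried forward as the T(n) of the next step; the function name, the default w = 0.5 and the initial guess of 10 are assumptions made for the example.

```python
def predict_next_burst(history, w=0.5, initial_guess=10.0):
    """Fold t(n+1) = w*t(n) + (1-w)*T(n) over a list of measured bursts.

    history: measured CPU burst lengths, oldest first.
    initial_guess: assumed starting value for T before any burst is seen.
    """
    estimate = initial_guess
    for measured in history:  # measured plays the role of t(n)
        estimate = w * measured + (1 - w) * estimate
    return estimate


# Measured bursts 6, 4, 6, 4, 13, 13, 13 with w = 0.5 and a first guess of 10
# give successive predictions 8, 6, 6, 5, 9, 11, 12.
print(predict_next_burst([6, 4, 6, 4, 13, 13, 13]))
```

With w = 1 only the most recent burst matters, and with w = 0 the measured history is ignored entirely.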


The SJF algorithm is optimal in that it gives the minimum average waiting time for a given set of processes. The real difficulty with SJF is knowing the length of the next CPU request. SJF scheduling is used frequently in long-term scheduling. It cannot be implemented exactly at the level of short-term scheduling, as there is no way to know the length of the next CPU burst; the length can only be predicted.

The SJF algorithm can be either preemptive or non-preemptive. This choice arises when a new process arrives at the ready queue while a previous process is still executing. The next CPU burst of the newly arrived process may be shorter than what is left of the currently executing process. A preemptive SJF algorithm will preempt the currently executing process, whereas a non-preemptive SJF algorithm will allow the currently running process to finish its CPU burst. Preemptive SJF is sometimes called shortest-remaining-time-first scheduling.

Priority

SJF is a special case of the general priority scheduling algorithm. A priority is associated with each process, and the CPU is allocated to the process with the highest priority. Equal-priority processes are scheduled in FCFS order. An SJF algorithm is simply a priority algorithm where the priority is the inverse of the predicted next CPU burst: the larger the CPU burst, the lower the priority.

Priorities are generally indicated by some fixed range of numbers, such as 0 to 7. Priorities can be defined either internally or externally. Internally defined priorities use some measurable quantity to compute the priority of a process. External priorities are set by criteria outside the operating system.

Priority scheduling can be either preemptive or non-preemptive. When a process arrives at the ready queue, its priority is compared with the priority of the currently running process. A preemptive priority scheduling algorithm will preempt the CPU if the priority of the newly arrived process is higher than the priority of the currently running process. A non-preemptive priority scheduling algorithm will simply put the new process at the head of the ready queue.

The major problem with priority scheduling algorithms is indefinite blocking, or starvation. A process that is ready to run but waiting for the CPU can be considered blocked. A priority scheduling algorithm can leave some low-priority processes waiting indefinitely. A solution to the problem of indefinite blockage of low-priority processes is aging. Aging is a technique of gradually increasing the priority of processes that wait in the system for a long time.
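The relationship between priority scheduling and SJF can be sketched with one non-preemptive scheduler that takes the priority rule as a parameter. Everything here is illustrative: the tuple layout, the function names and the convention that a smaller number means a higher priority are assumptions, and aging is not modeled.

```python
def priority_schedule(processes, priority_of):
    """Non-preemptive priority scheduling.

    processes: list of (name, arrival, burst, priority) tuples.
    priority_of: maps a process tuple to a number; smaller means higher
                 priority (an assumed convention).
    Returns the order in which the processes are given the CPU.
    """
    pending = sorted(processes, key=lambda p: p[1])  # arrival order, stable
    clock, order = 0, []
    while pending:
        ready = [p for p in pending if p[1] <= clock]
        if not ready:
            clock = pending[0][1]  # CPU idles until the next arrival
            continue
        # min() returns the first minimal item, so ties fall back to FCFS.
        chosen = min(ready, key=priority_of)
        pending.remove(chosen)
        clock += chosen[2]         # run the whole burst, no preemption
        order.append(chosen[0])
    return order


def by_priority(p):
    return p[3]  # externally assigned priority number


def by_burst(p):
    return p[2]  # SJF: a shorter next burst means a higher priority


# Hypothetical workload: (name, arrival, burst, priority)
jobs = [("P1", 0, 6, 3), ("P2", 0, 8, 1), ("P3", 0, 7, 4), ("P4", 0, 3, 2)]
print(priority_schedule(jobs, by_priority))
print(priority_schedule(jobs, by_burst))
```

Swapping by_priority for by_burst is the only change needed to turn the priority scheduler into non-preemptive SJF.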


Round Robin

The round robin (RR) scheduling algorithm is designed for time-sharing systems. It is similar to FCFS scheduling, but preemption is added to switch between processes. A small unit of time, called a time quantum or time slice, is defined. A time quantum is generally from 10 to 100 milliseconds. The ready queue is treated as a circular queue. The CPU scheduler goes around the ready queue, allocating the CPU to each process for a time interval of up to 1 time quantum.

To implement RR scheduling, the ready queue is kept as a FIFO queue. New processes are added to the tail of the ready queue. The CPU scheduler picks the first process from the ready queue, sets the timer to interrupt after 1 time quantum and dispatches the process. One of two things will then happen. The process may have a CPU burst of less than 1 time quantum, in which case the process itself releases the CPU voluntarily and the scheduler proceeds to the next process in the ready queue. Otherwise, if the CPU burst of the currently running process is longer than 1 time quantum, the timer will go off and will cause an interrupt to the operating system. A context switch will be executed and the process will be put at the tail of the ready queue. The CPU scheduler will then select the next process in the ready queue.

In the RR scheduling algorithm, no process is allocated the CPU for more than 1 time quantum in a row (unless it is the only runnable process). If a process's CPU burst exceeds 1 time quantum, that process is preempted and is put back in the ready queue. Thus this algorithm is preemptive.

The performance of the RR algorithm depends heavily on the size of the time quantum. If the time quantum is extremely large, the RR policy is the same as the FCFS policy. If the time quantum is extremely small, the RR approach is called processor sharing.
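The circular treatment of the ready queue can be sketched with a double-ended queue. This is a simplified illustration: all processes are assumed to arrive at time 0, context switch time is ignored, and the function name and workload are hypothetical.

```python
from collections import deque


def round_robin(bursts, quantum):
    """Simulate RR for processes that all arrive at time 0.

    bursts: dict mapping name -> CPU burst length (dict order = queue order).
    Returns (timeline, completion): timeline is a list of (name, start, end)
    slices and completion maps each name to its finishing time.
    """
    queue = deque(bursts.items())
    clock, timeline, completion = 0, [], {}
    while queue:
        name, remaining = queue.popleft()  # head of the ready queue
        run = min(quantum, remaining)      # at most one quantum per turn
        timeline.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            queue.append((name, remaining - run))  # preempted: back to the tail
        else:
            completion[name] = clock       # burst finished within the quantum
    return timeline, completion


# Hypothetical workload with a quantum of 4 time units.
timeline, completion = round_robin({"P1": 24, "P2": 3, "P3": 3}, quantum=4)
print(timeline)
print(completion)
```

Calling the same function with a quantum larger than every burst (for example 100) yields one slice per process in arrival order, which is the FCFS schedule.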


Algorithm evaluation

Selection of an algorithm is difficult. The first problem is defining the criteria to be used in selecting an algorithm. Criteria are often defined in terms of CPU utilization, response time or throughput. The criteria may include several measures, such as:
a) Maximizing CPU utilization under the constraint that the maximum response time is 1 second.
b) Maximizing throughput such that turnaround time is linearly proportional to total execution time.

Various evaluation methods can be used:

I. Deterministic modeling

One major class of evaluation methods is analytic evaluation. Analytic evaluation uses the given algorithm and the system workload to produce a formula or number that evaluates the performance of the algorithm for that workload. One type of analytic evaluation is deterministic modeling. This method takes a particular predetermined workload and defines the performance of each algorithm for that workload. Deterministic modeling is simple and fast. It returns exact numbers, allowing for comparison of algorithms. The main uses of deterministic modeling are in describing scheduling algorithms and providing examples.

II. Queuing models

On many systems, the processes that run vary from day to day, so there is no static set of processes to use for deterministic modeling. However, the distribution of CPU and I/O bursts can be determined. These distributions can be measured and then approximated. The result is a mathematical formula describing the probability of a particular CPU burst. The computer system is described as a network of servers. Each server has a queue of waiting processes. The CPU is a server with its ready queue, as is the I/O system with its device queues. Knowing the arrival rates and service rates, we can compute utilization, average queue length, average wait time and so on. This area of study is called queuing network analysis.


Let n be the average queue length, let W be the average waiting time in the queue and let λ be the average arrival rate for new processes in the queue. During the time W that a process waits, λ × W new processes are expected to arrive in the queue. If the system is in a steady state, then the number of processes leaving the queue must be equal to the number of processes that arrive. Thus,

$$n = \lambda \times W$$

This equation, known as Little's formula, is useful because it is valid for any scheduling algorithm and arrival distribution. Queuing analysis can be useful in comparing scheduling algorithms.
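Little's formula can be used to compute any one of the three quantities when the other two are known. As a numeric illustration (the figures are invented for the example), suppose that on average λ = 7 processes arrive every second and that there are normally n = 14 processes in the queue. The average waiting time per process is then:

$$W = \frac{n}{\lambda} = \frac{14}{7} = 2 \text{ seconds}$$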

III. Simulations

Running simulations involves programming a model of the computer system. Software data structures represent the major components of the system. The data to drive the simulation can be generated in several ways. The most common method uses a random number generator, which is programmed to generate processes, CPU burst times, arrivals, departures and so on according to probability distributions. The distributions can be defined mathematically (uniform, exponential, Poisson) or empirically. If a distribution is to be defined empirically, measurements of the actual system under study are taken. The results define the distribution of events in the real system; this distribution can then be used to drive the simulation. A frequency distribution indicates only how many instances of each event occur; it does not indicate anything about their order of occurrence. To correct this problem, we use trace tapes.

A trace tape is created by monitoring the real system and recording the sequence of actual events. This sequence is then used to drive the simulation. Trace tapes provide an excellent way to compare two algorithms on the same set of real inputs. Simulations can be expensive, requiring hours of computer time. Trace tapes can require large amounts of storage space.
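A distribution-driven simulation, as opposed to a trace-driven one, can be sketched in a few lines. The model below is deliberately tiny and rests on stated assumptions: a single CPU scheduled FCFS, one burst per process, and exponentially distributed inter-arrival times and burst lengths. The function name and parameters are hypothetical.

```python
import random


def simulate_fcfs(n_processes, mean_interarrival, mean_burst, seed=0):
    """Distribution-driven simulation of a single FCFS CPU.

    Inter-arrival times and CPU bursts are drawn from exponential
    distributions (an assumed choice). Returns the average waiting time.
    """
    rng = random.Random(seed)  # seeded so that runs are repeatable
    cpu_free_at = 0.0          # time at which the CPU next becomes free
    arrival = 0.0
    total_wait = 0.0
    for _ in range(n_processes):
        # expovariate takes a rate, which is 1 / mean.
        arrival += rng.expovariate(1.0 / mean_interarrival)
        burst = rng.expovariate(1.0 / mean_burst)
        start = max(cpu_free_at, arrival)  # wait if the CPU is still busy
        total_wait += start - arrival
        cpu_free_at = start + burst
    return total_wait / n_processes


light = simulate_fcfs(1000, mean_interarrival=10.0, mean_burst=2.0)
heavy = simulate_fcfs(1000, mean_interarrival=10.0, mean_burst=8.0)
print(light, heavy)
```

Because both runs use the same seed, they see the same arrival pattern with proportionally longer bursts in the second run, so the comparison isolates the effect of load on the average wait.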


IV. Implementation

The only completely accurate way to evaluate a scheduling algorithm is to code it up, put it in the operating system and see how it works. This approach puts the actual algorithm in the real system for evaluation under real operating conditions. The major difficulty with this approach is high cost. Another difficulty is that the environment in which the algorithm is used will change. The most flexible scheduling algorithms are those that can be altered by the system managers or by the users so that they can be tuned for a specific application or set of applications. Another approach is to use APIs that modify the priority of a process or thread.

Thread Scheduling

Thread is a basic unit of CPU utilization. Support for threads may be provided either at the user level for user threads or by the kernel for kernel threads. User threads are supported above the kernel and are managed without kernel support whereas kernel threads are supported and managed directly by the operating system.

Three common ways of establishing a relationship between user-level and kernel-level threads are:

Many to one model - Maps many user-level threads to one kernel thread. Thread management is done by the thread library in user space, so it is efficient, but the entire process will block if a thread makes a blocking system call. As only one thread can access the kernel at a time, multiple threads are unable to run in parallel on multiprocessors.

One to one model - Maps each user thread to a kernel thread. It provides more concurrency than the many to one model by allowing another thread to run when a thread makes a blocking system call; it also allows multiple threads to run in parallel on multiprocessors. The disadvantage is that creating a user thread requires creating the corresponding kernel thread.

Many to many model - Multiplexes many user level threads to a smaller or equal number of kernel level threads. The number of kernel threads may be specific to either a particular application or a particular machine. Developers can create as many user threads as necessary and the corresponding kernel threads can run in parallel on a multi processor. Also, when a thread performs a blocking system call, the kernel can schedule another thread for execution.


On operating systems that support threads, it is kernel level threads that are being scheduled by operating system. User level threads are managed by thread library. To run on a CPU, user level threads must be mapped to an associated kernel level thread although this mapping may be indirect and may use a light weight process.

Contention scope: One major difference between user-level and kernel-level threads lies in how they are scheduled. On systems implementing the many-to-one and many-to-many models, the thread library schedules user-level threads to run on an available lightweight process (LWP), a scheme known as process contention scope (PCS), since competition for the CPU takes place among threads belonging to the same process. To decide which kernel thread to schedule onto a CPU, the kernel uses system contention scope (SCS). PCS is done according to priority: the scheduler selects the runnable thread with the highest priority to run. PCS will preempt the thread currently running in favor of a higher-priority thread.

A thread library provides the programmer an API for creating and managing threads. Two primary ways of implementing a thread library are:

a) Provide a library entirely in user space with no kernel support. All code and data structures for the library exist in user space.

b) Implement a kernel-level library supported directly by the operating system. Code and data structures for the library exist in kernel space.

Three main thread libraries in use today are:
POSIX threads (Pthreads) - may be provided as either a user-level or kernel-level library
Win32 - kernel-level library available on Windows systems
Java - allows thread creation and management directly in Java programs

Pthreads refers to the POSIX standard defining an API for thread creation and synchronization. This is a specification for thread behavior, not an implementation. Numerous systems implement the Pthreads specification. For more information about Pthreads, please refer to these websites:
https://computing.llnl.gov/tutorials/pthreads/
http://linux.die.net/man/7/pthreads

Pthread scheduling: The POSIX Pthread API allows specifying either PCS or SCS during thread creation. Pthreads identifies the following contention scope values:
PTHREAD_SCOPE_PROCESS schedules threads using PCS scheduling
PTHREAD_SCOPE_SYSTEM schedules threads using SCS scheduling

On systems implementing the many-to-many model, the PTHREAD_SCOPE_PROCESS policy schedules user-level threads onto available LWPs. The number of LWPs is maintained by the thread library using scheduler activations. The PTHREAD_SCOPE_SYSTEM scheduling policy will create and bind an LWP for each user-level thread, using the one-to-one policy.

The Pthread API provides the following two functions for setting and getting the contention scope policy:
pthread_attr_setscope(pthread_attr_t *attr, int scope)
pthread_attr_getscope(pthread_attr_t *attr, int *scope)

The first parameter for both functions contains a pointer to the attribute set for the thread. The second parameter for the pthread_attr_setscope() function is passed either the PTHREAD_SCOPE_SYSTEM or the PTHREAD_SCOPE_PROCESS value, indicating how the contention scope is to be set. In the case of pthread_attr_getscope(), the second parameter contains a pointer to an int value that is set to the current value of the contention scope. If an error occurs, each of these functions returns a nonzero value.



- Study of Unix CommandsDocument22 pagesStudy of Unix CommandsChetana KestikarNo ratings yet

- Unix Systems Programming and Compiler Design Laboratory - 10CSL67Document33 pagesUnix Systems Programming and Compiler Design Laboratory - 10CSL67Vivek Ranjan100% (1)

- Multithreading in JavaDocument33 pagesMultithreading in JavaShashwat NepalNo ratings yet

- Os 101 NotesDocument102 pagesOs 101 Notesvg0No ratings yet

- 2 Marks QuestionsDocument30 pages2 Marks QuestionsVictor LfpNo ratings yet

- Multithreading: The Java Thread ModelDocument15 pagesMultithreading: The Java Thread ModelMasterNo ratings yet

- Unix Process ManagementDocument9 pagesUnix Process ManagementJim HeffernanNo ratings yet

- CS1253 2,16marksDocument24 pagesCS1253 2,16marksRevathi RevaNo ratings yet

- Operating System Exercises - Chapter 3 SolDocument2 pagesOperating System Exercises - Chapter 3 Solevilanubhav67% (3)

- IT-403 Analysis and Design of AlgorithmDocument19 pagesIT-403 Analysis and Design of AlgorithmRashi ShrivastavaNo ratings yet

- Data Mining Introduction and Knowledge Discovery ProcessDocument38 pagesData Mining Introduction and Knowledge Discovery Processchakrika100% (1)

- Introduction To Design and Analysis of AlgorithmsDocument50 pagesIntroduction To Design and Analysis of AlgorithmsMarlon TugweteNo ratings yet

- Java 2marks Questions With AnswerDocument19 pagesJava 2marks Questions With AnswerGeetha SupriyaNo ratings yet

- Cse-V-Operating Systems (10CS53) - Notes PDFDocument150 pagesCse-V-Operating Systems (10CS53) - Notes PDFVani BalamuraliNo ratings yet

- Memory ManagementDocument10 pagesMemory ManagementSaroj MisraNo ratings yet

- Operating System Ii Memory Management Memory Management Is A Form of Resource Management AppliedDocument23 pagesOperating System Ii Memory Management Memory Management Is A Form of Resource Management AppliedSALIHU ABDULGANIYU100% (1)

- Chap-2 (C )Document15 pagesChap-2 (C )gobinathNo ratings yet

- Operating System Lab - ManualDocument70 pagesOperating System Lab - ManualKumar ArikrishnanNo ratings yet

- B.tech CS S8 High Performance Computing Module Notes Module 1Document19 pagesB.tech CS S8 High Performance Computing Module Notes Module 1Jisha Shaji100% (1)

- Operating System FundamentalsDocument6 pagesOperating System FundamentalsGuruKPO100% (2)

- CS 1304-MICROPROCESSORS 1. What Is Microprocessor? Give The Power SupplyDocument34 pagesCS 1304-MICROPROCESSORS 1. What Is Microprocessor? Give The Power Supplychituuu100% (2)

- NOTESDocument156 pagesNOTESVenkat PappuNo ratings yet

- CIA-I B.Tech. IV Sem Theory of Automata and Formal LanguagesDocument2 pagesCIA-I B.Tech. IV Sem Theory of Automata and Formal LanguagesvikNo ratings yet

- CS333 Midterm Exam from Alexandria UniversityDocument4 pagesCS333 Midterm Exam from Alexandria UniversityRofaelEmil100% (2)

- Multithreading in Java: Understanding Threads, Priorities, SynchronizationDocument25 pagesMultithreading in Java: Understanding Threads, Priorities, SynchronizationAbhishek PandeyNo ratings yet

- Compiler Design Short NotesDocument133 pagesCompiler Design Short NotesSaroswat RoyNo ratings yet

- Chapter-1 Background and Some Basic Commands: 1) Explain Salient Features of UNIX System? Explain All BrieflyDocument30 pagesChapter-1 Background and Some Basic Commands: 1) Explain Salient Features of UNIX System? Explain All BrieflyLoli BhaiNo ratings yet

- Usp Lab ManualDocument35 pagesUsp Lab ManualReshma BJNo ratings yet

- CamScanner document scansDocument63 pagesCamScanner document scansBkdNo ratings yet

- Operating System NotesDocument63 pagesOperating System NotesmogirejudNo ratings yet

- Computer Organization and ArchitectureDocument6 pagesComputer Organization and Architectureakbisoi1No ratings yet

- Client Server Architecture A Complete Guide - 2020 EditionFrom EverandClient Server Architecture A Complete Guide - 2020 EditionNo ratings yet

- UNIX Programming: UNIX Processes, Memory Management, Process Communication, Networking, and Shell ScriptingFrom EverandUNIX Programming: UNIX Processes, Memory Management, Process Communication, Networking, and Shell ScriptingNo ratings yet

- Protocols and Reference Models - CCNAv7-1Document41 pagesProtocols and Reference Models - CCNAv7-1MukeshNo ratings yet

- Basic Router and Switch Configuration - CCNAv7 Module 2Document36 pagesBasic Router and Switch Configuration - CCNAv7 Module 2MukeshNo ratings yet

- Introduction To Networks - CCNAv7 Module-1Document42 pagesIntroduction To Networks - CCNAv7 Module-1Mukesh0% (1)

- Transport Layer - MukeshDocument54 pagesTransport Layer - MukeshMukeshNo ratings yet

- Unicast Routing Protocols - RIP, OSPF and BGPDocument48 pagesUnicast Routing Protocols - RIP, OSPF and BGPMukesh100% (1)

- Deadlocks in Operating SystemsDocument37 pagesDeadlocks in Operating SystemsMukeshNo ratings yet

- Cisco Introduction To Cyber Security Chap-1Document9 pagesCisco Introduction To Cyber Security Chap-1Mukesh80% (5)

- Quality of ServiceDocument40 pagesQuality of ServiceMukeshNo ratings yet

- Cisco Introduction To Cyber Security Chap-4Document9 pagesCisco Introduction To Cyber Security Chap-4MukeshNo ratings yet

- Cisco Introduction To Cyber Security Chap-2Document9 pagesCisco Introduction To Cyber Security Chap-2MukeshNo ratings yet

- Multimedia Data and ProtocolsDocument59 pagesMultimedia Data and ProtocolsMukesh100% (1)

- Next Generation IP - IPv6Document46 pagesNext Generation IP - IPv6Mukesh100% (1)

- Bluetooth, IEEE 802.11, WIMAXDocument30 pagesBluetooth, IEEE 802.11, WIMAXMukesh71% (7)

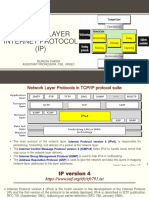

- Network Layer - IP - MukeshDocument19 pagesNetwork Layer - IP - MukeshMukesh100% (2)

- Multicasting and Multicast ProtocolsDocument50 pagesMulticasting and Multicast ProtocolsMukeshNo ratings yet

- Operating Systems - DeadlocksDocument14 pagesOperating Systems - DeadlocksMukesh100% (3)

- Unicast Routing - MukeshDocument29 pagesUnicast Routing - MukeshMukeshNo ratings yet

- Introduction To SecurityDocument37 pagesIntroduction To SecurityMukeshNo ratings yet

- Operating Systems: Files Concept & Implementing File SystemsDocument27 pagesOperating Systems: Files Concept & Implementing File SystemsMukesh88% (48)

- Introduction To Networks, Reference ModelsDocument38 pagesIntroduction To Networks, Reference ModelsMukesh100% (2)

- OS Process Synchronization, DeadlocksDocument30 pagesOS Process Synchronization, DeadlocksMukesh100% (4)

- Classsical Encryption Techniques MukeshDocument13 pagesClasssical Encryption Techniques MukeshMukeshNo ratings yet

- Assymetric Cryptography, Kerberos, X.509 CertificatesDocument34 pagesAssymetric Cryptography, Kerberos, X.509 CertificatesMukesh80% (5)

- MVC & Struts NotesDocument6 pagesMVC & Struts NotesMukesh100% (1)

- Trusted Systems, Firewalls, Intrusion Detection SystemsDocument18 pagesTrusted Systems, Firewalls, Intrusion Detection SystemsMukesh100% (34)

- MC (Unit-7) MANET'sDocument23 pagesMC (Unit-7) MANET'sMukesh93% (42)

- Symmetric Encryption, DES, AES, MAC, Hash Algorithms, HMACDocument76 pagesSymmetric Encryption, DES, AES, MAC, Hash Algorithms, HMACMukesh86% (7)

- JSP NotesDocument35 pagesJSP NotesMukesh71% (14)

- Database Issues in Mobile ComputingDocument20 pagesDatabase Issues in Mobile ComputingMukesh90% (51)

- Servlets NotesDocument18 pagesServlets NotesMukesh100% (8)

- Slide Bab 7 Proses Desain UI Step 8 9-VREDocument85 pagesSlide Bab 7 Proses Desain UI Step 8 9-VREM Khoiru WafiqNo ratings yet

- Lab2 Solution PDFDocument2 pagesLab2 Solution PDFKunal RanjanNo ratings yet

- PDFDocument214 pagesPDFKumari YehwaNo ratings yet

- Wi-Fi Certified Interoperatibility CertificateDocument1 pageWi-Fi Certified Interoperatibility CertificatelisandroNo ratings yet

- Ece ChecklistDocument2 pagesEce ChecklistAlfred Loue OrquiaNo ratings yet

- Web Programming - SyllabusDocument3 pagesWeb Programming - SyllabusAkshuNo ratings yet

- Calculus HandbookDocument198 pagesCalculus HandbookMuneeb Sami100% (1)

- P0903BSG Niko-Sem: N-Channel Logic Level Enhancement Mode Field Effect TransistorDocument5 pagesP0903BSG Niko-Sem: N-Channel Logic Level Enhancement Mode Field Effect TransistorAndika WicaksanaNo ratings yet

- CTRPF AR Cheat Code DatabaseDocument180 pagesCTRPF AR Cheat Code DatabaseIndraSwacharyaNo ratings yet

- SOP Overview Presentation 1 21 11Document52 pagesSOP Overview Presentation 1 21 11yvanmmNo ratings yet

- Serial Code Number Tinta L800Document5 pagesSerial Code Number Tinta L800awawNo ratings yet

- Optimal Control System Company Profile PDFDocument9 pagesOptimal Control System Company Profile PDFkarna patelNo ratings yet

- Organizational Maturity LevelsDocument1 pageOrganizational Maturity LevelsArmand LiviuNo ratings yet

- Techknowlogia Journal 2002 Jan MacDocument93 pagesTechknowlogia Journal 2002 Jan MacMazlan ZulkiflyNo ratings yet

- Sample Resume for Software Testing EngineerDocument3 pagesSample Resume for Software Testing EngineermartinNo ratings yet

- ICT - Minimum Learning Competencies - Grade 9 and 10Document8 pagesICT - Minimum Learning Competencies - Grade 9 and 10kassahunNo ratings yet

- Universiti Malaysia Sarawak Pre-TranscriptDocument2 pagesUniversiti Malaysia Sarawak Pre-Transcriptsalhin bin bolkeriNo ratings yet

- The Complete Digital Workflow in Interdisciplinary Dentistry.Document17 pagesThe Complete Digital Workflow in Interdisciplinary Dentistry.floressam2000No ratings yet

- Yacht Devices NMEA 2000 Wi-Fi GatewayDocument44 pagesYacht Devices NMEA 2000 Wi-Fi GatewayAlexander GorlachNo ratings yet

- MARS Release History and NotesDocument12 pagesMARS Release History and NotesNabil AlzeqriNo ratings yet

- ITEC3116-SNAL-Lecture 16 - Linux Server ConfigurationDocument24 pagesITEC3116-SNAL-Lecture 16 - Linux Server ConfigurationHassnain IjazNo ratings yet

- AutopilotDocument76 pagesAutopilotAnthony Steve LomilloNo ratings yet

- A7III-A7RIII Custom-Settings and Support eBook-V5 PDFDocument203 pagesA7III-A7RIII Custom-Settings and Support eBook-V5 PDFMiguel Garrido Muñoz100% (1)

- CMOS Means Complementary MOS: NMOS and PMOS Working Together in A CircuitDocument8 pagesCMOS Means Complementary MOS: NMOS and PMOS Working Together in A Circuitsuresht196No ratings yet

- Histograms Answers MMEDocument5 pagesHistograms Answers MMEEffNo ratings yet

- SVC Training Document VKSDocument51 pagesSVC Training Document VKSTien Dat TranNo ratings yet

- US23-0920-02 UniSlide Install Manual Rev D (12!16!2010)Document72 pagesUS23-0920-02 UniSlide Install Manual Rev D (12!16!2010)sq9memNo ratings yet

- Training Devices and Addressing ......................................................................... 1-2Document12 pagesTraining Devices and Addressing ......................................................................... 1-2Julian David Arevalo GarciaNo ratings yet

- Micron 8GB DDR4 Memory Module SpecsDocument2 pagesMicron 8GB DDR4 Memory Module SpecsAli Ensar YavaşNo ratings yet

- 93E 15-80 KVA Guide SpecificationDocument11 pages93E 15-80 KVA Guide SpecificationHafiz SafwanNo ratings yet