MSIT 32 Software Quality and Testing 1

SOFTWARE QUALITY & TESTING

(MSIT - 32)

: Contributing Author :

Dr. B.N. Subraya

Infosys Technologies Ltd.,

Mysore

PDF created with pdfFactory Pro trial version www.pdffactory.com


Contents

Chapter 1

INTRODUCTION TO SOFTWARE TESTING

1.1 Learning Objectives

1.2 Introduction

1.3 What is Testing?

1.4 Approaches to Testing

1.5 Importance of Testing

1.6 Hurdles in Testing

1.7 Testing Fundamentals

Chapter 2

SOFTWARE QUALITY ASSURANCE

2.1 Learning Objectives

2.2 Introduction

2.3 Quality Concepts

2.4 Quality of Design

2.5 Quality of Conformance

2.6 Quality Control (QC)

2.7 Quality Assurance (QA)

2.8 Software Quality Assurance (SQA)

2.9 Formal Technical Reviews (FTR)

2.10 Statistical Quality Assurance

2.11 Software Reliability

2.12 The SQA Plan


Chapter 3

PROGRAM INSPECTIONS, WALKTHROUGHS AND REVIEWS

QUALITY ASSURANCE

3.1 Learning Objectives

3.2 Introduction

3.3 Inspections and Walkthroughs

3.4 Code Inspections

3.5 An Error Checklist for Inspections

3.6 Walkthroughs

Chapter 4

TEST CASE DESIGN

4.1 Learning Objectives

4.2 Introduction

4.3 White Box Testing

4.4 Basis Path Testing

4.5 Control Structure Testing

4.6 Black Box Testing

4.7 Static Program Analysis

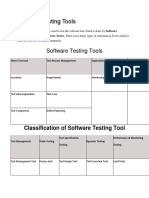

4.8 Automated Testing Tools

Chapter 5

TESTING FOR SPECIALIZED ENVIRONMENTS

5.1 Learning Objectives

5.2 Introduction

5.3 Testing GUIs

5.4 Testing of Client/Server Architectures

5.5 Testing Documentation and Help Facilities

Chapter 6

SOFTWARE TESTING STRATEGIES

6.1 Learning Objectives

6.2 Introduction

6.3 A Strategic Approach to Software Testing

6.4 Verification and Validation

6.5 Organizing for Software Testing

6.6 A Software Testing Strategy

6.7 Strategic Issues

6.8 Unit Testing


6.9 Integration Testing

6.10 Validation Testing

6.11 System Testing

6.12 Summary

Chapter 7

TESTING OF WEB BASED APPLICATIONS

7.1 Introduction

7.2 Testing of Web Based Applications: Technical Peculiarities

7.3 Testing of Static Web Based Applications

7.4 Testing of Dynamic Web Based Applications

7.5 Future Challenges

Chapter 8

TEST PROCESS MODEL

8.0 Need for Test Process Model

8.1 Test Process Cluster

Chapter 9

TEST METRICS

9.0 Introduction

9.1 Overview of the Role and Use of Metrics

9.2 Primitive Metrics and Computed Metrics

9.3 Metrics Typically Used within the Testing Process

9.4 Defect Detection Effectiveness Percentage (DDE)

9.5 Setting up and Administering a Metrics Program


Chapter 1

Introduction to Software Testing

1.1 LEARNING OBJECTIVES

You will learn about:

• What is Software Testing?

• Need for Software Testing

• Various approaches to Software Testing

• What is the defect distribution?

• Software Testing Fundamentals

1.2 INTRODUCTION

Software testing is a critical element of software quality assurance and represents the ultimate process for ensuring the correctness of the product. A quality product enhances customer confidence in using it and thereby improves the business economics. In other words, a good quality product means zero defects, which in turn derives from a better quality process in testing.

The definition of testing is not well understood. People often use a totally incorrect definition of the word testing, and this is the primary cause of poor program testing. Examples of such definitions are statements like “Testing is the process of demonstrating that errors are not present”, “The purpose of testing is to show that a program performs its intended functions correctly”, and “Testing is the process of establishing confidence that a program does what it is supposed to do”.

Testing the product means adding value to it, which means raising the quality or reliability of the program. Raising the reliability of the product means finding and removing errors. Hence one should not test a product to show that it works; rather, one should start with the assumption that the program contains errors and then test the program to find as many of them as possible. Thus a more appropriate definition is:

Testing is the process of executing a program with the intent of finding errors.

Purpose of Testing

• To show that the software works: this is known as demonstration-oriented testing.

• To show that the software doesn’t work: this is known as destruction-oriented testing.

• To reduce the risk of the software not working to an acceptable level: this is known as evaluation-oriented testing.

Need for Testing

Defects can exist in software because it is developed by human beings, who can make mistakes during development. However, it is the primary duty of a software vendor to ensure that the software delivered does not have defects and that the customer’s day-to-day operations are not affected. This can be achieved by rigorously testing the software. The most common origins of software bugs are:

• Poor understanding of requirements and incomplete requirements

• Unrealistic schedules

• Fast changes in requirements

• Too many assumptions and complacency

The major computer system failures listed below give ample evidence that testing is an important activity in the software quality process.

• In April of 1999, a software bug caused the failure of a $1.2 billion military satellite launch, the costliest unmanned accident in the history of Cape Canaveral launches. The failure was the latest in a string of launch failures, triggering a complete military and industry review of U.S. space launch programs, including software integration and testing processes. Congressional oversight hearings were requested.

• On June 4, 1996, the first flight of the European Space Agency’s new Ariane 5 rocket failed shortly after launch, resulting in an estimated uninsured loss of half a billion dollars. It was reportedly due to the lack of exception handling for an error in a conversion from a 64-bit floating-point number to a 16-bit signed integer.

• In January of 2001 newspapers reported that a major European railroad was hit by the aftereffects of the Y2K bug. The company found that many of its newer trains would not run due to their inability to recognize the date ‘31/12/2000’; the trains were started by altering the control system’s date settings.

• In April of 1998 a major U.S. data communications network failed for 24 hours, crippling a large part of some U.S. credit card transaction authorization systems as well as other large U.S. bank, retail, and government data systems. The cause was eventually traced to a software bug.

• The computer system of a major online U.S. stock trading service failed during trading hours several times over a period of days in February of 1999, according to nationwide news reports. The problem was reportedly due to bugs in a software upgrade intended to speed up online trade confirmations.

• In November of 1997 the stock of a major health industry company dropped 60% due to reports of failures in computer billing systems, problems with a large database conversion, and inadequate software testing. It was reported that more than $100,000,000 in receivables had to be written off and that multi-million dollar fines were levied on the company by government agencies.

• Software bugs caused the bank accounts of 823 customers of a major U.S. bank to be credited with $924,844,208.32 each in May of 1996, according to newspaper reports. The American Bankers Association claimed it was the largest such error in banking history. A bank spokesman said the programming errors were corrected and all funds were recovered.

All the above incidents reiterate the importance of thoroughly testing software applications and products before they are put into production. They also illustrate that rectifying a defect during development costs much less than rectifying it in production.
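The Ariane 5 incident listed above involved a narrowing conversion to a 16-bit signed integer whose failure was not handled. The following is a minimal Python sketch of the general idea only; it does not reproduce the flight software, and the function names and the choice of clamping as the recovery action are assumptions made for illustration.

```python
INT16_MIN, INT16_MAX = -32768, 32767


def to_int16_unchecked(value: float) -> int:
    """Truncate, then keep only the low 16 bits (silent two's-complement wrap)."""
    return (int(value) - INT16_MIN) % 65536 + INT16_MIN


def to_int16_checked(value: float) -> int:
    """Truncate and return the value only if it fits in a signed 16-bit integer."""
    truncated = int(value)
    if not INT16_MIN <= truncated <= INT16_MAX:
        raise OverflowError(f"{value} does not fit in a signed 16-bit integer")
    return truncated


def to_int16_saturating(value: float) -> int:
    """Handle the overflow explicitly by clamping to the nearest representable value."""
    try:
        return to_int16_checked(value)
    except OverflowError:
        return INT16_MAX if value > 0 else INT16_MIN
```

A test that feeds in a value just outside the 16-bit range, such as 40000.0, exercises exactly the case that a demonstration with typical inputs would miss.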

1.3 WHAT IS TESTING?

• “Testing is an activity in which a system or component is executed under specified conditions; the results are observed and recorded and an evaluation is made of some aspect of the system or component” - IEEE

• Executing a system or component is known as dynamic testing.

• Review, inspection and verification of documents (requirements, design documents, test plans, etc.), code and other software work products is known as static testing.

• Static testing is found to be the most effective and efficient way of testing.

• Successful testing of software demands both dynamic and static testing.

• Measurements show that a defect discovered during design that costs $1 to rectify at that stage will cost $1,000 to repair in production. This clearly points to the advantage of early testing.

• Testing should start with small measurable units of code, gradually progress towards testing integrated components of the application, and finally be completed with testing at the application level.

• Testing verifies the system against its stated and implied requirements, i.e., is it doing what it is supposed to do? It should also check that the system is not doing what it is not supposed to do, whether it takes care of boundary conditions, how the system performs in a production-like environment, and how fast and consistently the system responds when data volumes are high.
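The last point mentions boundary conditions. As a minimal sketch, assuming a hypothetical unit under test that accepts whole percentages from 0 to 100, the values worth checking are those just outside, on, and just inside each limit. The function names here are illustrative and do not come from the text.

```python
def is_valid_percentage(value: int) -> bool:
    """Hypothetical unit under test: accept whole percentages from 0 to 100."""
    return 0 <= value <= 100


def boundary_values(low: int, high: int) -> list[int]:
    """Return the values just outside, on, and just inside each boundary."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def run_boundary_checks() -> list[tuple[int, bool]]:
    """Pair each boundary value with the result the unit under test gives for it."""
    return [(v, is_valid_percentage(v)) for v in boundary_values(0, 100)]
```

Only the two values outside the range, -1 and 101, should be rejected; an off-by-one mistake in the comparison would show up as a wrong result for 0, 100, or one of their neighbours.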

Reasons for Software Bugs

The following are common reasons for software bugs:

• Miscommunication or no communication - as to the specifics of what an application should or shouldn’t do (the application’s requirements).

• Software complexity - the complexity of current software applications can be difficult to comprehend for anyone without experience in modern-day software development. Windows-type interfaces, client-server and distributed applications, data communications, enormous relational databases, and the sheer size of applications have all contributed to the exponential growth in software/system complexity. The use of object-oriented techniques can also complicate rather than simplify a project unless it is well engineered.

• Programming errors - programmers, like anyone else, can make mistakes.

• Changing requirements - the customer may not understand the effects of changes, or may understand and request them anyway: redesign, rescheduling of engineers, effects on other projects, work already completed that may have to be redone or thrown out, hardware requirements that may be affected, etc. If there are many minor changes or any major changes, known and unknown dependencies among parts of the project are likely to interact and cause problems, and the complexity of keeping track of changes may result in errors. The enthusiasm of the engineering staff may be affected. In some fast-changing business environments, continuously modified requirements may be a fact of life. In this case, management must understand the resulting risks, and QA and test engineers must adapt and plan for continuous extensive testing to keep the inevitable bugs from running out of control.

• Time pressures - scheduling of software projects is difficult at best, often requiring a lot of guesswork. When deadlines loom and the crunch comes, mistakes will be made.

• Poorly documented code - it’s tough to maintain and modify code that is badly written or poorly documented; the result is bugs. In many organizations management provides no incentive for programmers to document their code or write clear, understandable code. In fact, it’s usually the opposite: they get points mostly for quickly turning out code, and there’s job security if nobody else can understand it (‘if it was hard to write, it should be hard to read’).

• Software development tools - visual tools, class libraries, compilers, scripting tools, etc. often introduce their own bugs or are poorly documented, resulting in added bugs.

1.4 APPROACHES TO TESTING

Many approaches have been defined in the literature. The importance of any approach depends on the type of system being tested. Some of the approaches are given below:

Debugging-oriented:

This approach identifies errors while debugging the program. It makes no distinction between testing and debugging.

Demonstration-oriented:

The purpose of testing is to show that the software works. Most of the time, the software is demonstrated in its normal sequence or flow, so not all branches may be tested. This approach mainly serves to satisfy the customer and adds no value to the program.

Destruction-oriented:

The purpose of testing is to show that the software doesn’t work. It is a sadistic process, which explains why most people find it difficult. Designing test cases that break the program is also difficult.

Evaluation-oriented:

The purpose of testing is to reduce the perceived risk of the software not working to an acceptable value.

Prevention-oriented:

Testing is viewed as a mental discipline that results in low-risk software. It is always better to forecast possible errors and rectify them early.

In general, program testing is more properly viewed as the destructive process of trying to find the errors (whose presence is assumed) in a program. A successful test case is one that furthers progress in this direction by causing the program to fail. Of course, one eventually wants to use program testing to establish some degree of confidence that a program does what it is supposed to do and does not do what it is not supposed to do, but this purpose is best achieved by a diligent exploration for errors.
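The contrast between a demonstration-oriented and a destruction-oriented test can be sketched in a few lines of Python. The `average` function and both test functions are hypothetical examples written for this illustration, under the assumption that the unit under test was never designed for empty input.

```python
def average(values):
    """Hypothetical unit under test: mean of a list (fails on an empty list)."""
    return sum(values) / len(values)


def demonstration_test() -> bool:
    """Show the function working on a typical input; reveals nothing new."""
    return average([2, 4, 6]) == 4


def destructive_test() -> bool:
    """Probe an input chosen to break the function; True means an error was found."""
    try:
        average([])
    except ZeroDivisionError:
        return True
    return False
```

Both functions return True, but only the second is a successful test in the sense used above, because it causes the program to fail and so exposes a defect.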

1.5 IMPORTANCE OF TESTING

Testing cannot be eliminated from the life cycle, as the end product must be bug free and reliable. Testing is important because:

• Testing is a critical element of software quality assurance.

• Post-release removal of defects is the most expensive.

• A significant portion of life cycle effort is expended on testing.

In a typical service-oriented project, about 20-40% of project effort is spent on testing. The share is much higher in the case of “human-rated” software.

For example, at Microsoft the tester to developer ratio is 1:1, whereas at the NASA shuttle development center (SEI Level 5) the ratio is 7:1. This shows how integral testing is to quality assurance.

1.6 HURDLES IN TESTING

As in many other development activities, testing is not free from hurdles. Some of the hurdles normally encountered are:

• Testing is usually a late activity in the project life cycle.

• There is no “concrete” output, so the value added is difficult to measure.

• Historical data is lacking.

• The importance of testing receives relatively little recognition.

• Testing is politically damaging, as it challenges the developer.

• Delivery commitments leave little time for testing.

• There is too much optimism that the software always works correctly.

Defect Distribution

In a typical project life cycle, testing is a late activity. When the product is tested, the defects found may have many causes: a programming error, a defect in design, or a defect introduced at any other stage of the life cycle. The overall defect distribution is shown in Fig 1.1.


[Pie chart of defect origin: Requirements 56%, Design 27%, Code 7%, Other 10%]

Fig 1.1: Software Defect Distribution

1.7 TESTING FUNDAMENTALS

Before understanding the process of testing software, it is necessary to learn the basic principles of testing.

1.7.1 Testing Objectives

• “Testing is a process of executing a program with the intent of finding an error.

• A good test is one that has a high probability of finding an as yet undiscovered error.

• A successful test is one that uncovers an as yet undiscovered error.”

The objective is to design tests that systematically uncover different classes of errors and do so with a minimum amount of time and effort.

Secondary benefits include:

• Demonstrating that software functions appear to be working according to specification.

• Demonstrating that performance requirements appear to have been met.

• Data collected during testing provides a good indication of software reliability and some indication of software quality.

Testing cannot show the absence of defects; it can only show that software defects are present.

1.7.2 Test Information Flow

A typical test information flow is shown in Fig 1.2.

Fig 1.2: Test information flow in a typical software test life cycle

In the above figure:

• The software configuration includes a Software Requirements Specification, a Design Specification, and source code.

• The test configuration includes a test plan and procedures, test cases, and testing tools.

• The time needed to debug the code is difficult to predict, and debugging is therefore difficult to schedule.

1.7.3 Test Case Design

Some of the points to be noted during the test case design are:

• Test case design can be as difficult as the initial design of the product.

• Tests can check whether a component conforms to its specification - Black Box Testing.

• Tests can check whether a component conforms to its design - White Box Testing.

• Testing cannot prove correctness, as not all execution paths can be tested.

Consider the example shown in Fig 1.3.

Fig 1.3

A program with the structure illustrated above (fewer than 100 lines of Pascal code) has about 100,000,000,000,000 possible paths. Testing these at a rate of 1000 tests per second would take about 3170 years. This shows that exhaustive testing of software is not possible.
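The figure of 3170 years follows directly from the two numbers given. A minimal sketch of the arithmetic, assuming a 365-day year (the function name is illustrative):

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumes a non-leap year


def years_to_test_all_paths(paths: int, tests_per_second: int) -> float:
    """Time in years to run one test per path at the given rate."""
    return paths / tests_per_second / SECONDS_PER_YEAR


# 10**14 paths at 1000 tests per second is 10**11 seconds of testing.
years = years_to_test_all_paths(10**14, 1000)
```

Dividing 10^11 seconds by 31,536,000 seconds per year gives a little under 3171 years, which matches the figure quoted above.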

QUESTIONS

1. What is software testing? Explain the purpose of testing.

2. Explain the origin of the defect distribution in a typical software development life cycle.

_________


Chapter 2

Software Quality Assurance

2.1 LEARNING OBJECTIVES

You will learn about:

• Basic principles of Software Quality

• Software Quality Assurance and SQA activities

• Software Reliability

2.2 INTRODUCTION

Quality is defined as “a characteristic or attribute of something”. As an attribute of an item, quality refers to measurable characteristics: things we are able to compare to known standards, such as length, color, electrical properties, malleability, and so on. However, software, largely an intellectual entity, is more challenging to characterize than physical objects.

Quality of design refers to the characteristics that designers specify for an item. The grade of materials, tolerances, and performance specifications all contribute to the quality of design.

Quality of conformance is the degree to which the design specifications are followed during manufacturing. The greater the degree of conformance, the higher the level of quality of conformance.


Software Quality Assurance encompasses

• A quality management approach

• Effective software engineering technology

• Formal technical reviews

• A multi-tiered testing strategy

• Control of software documentation and changes made to it

• A procedure to assure compliance with software development standards

• Measurement and reporting mechanisms

Software quality is achieved as shown in figure 2.1:

[Diagram: Quality at the centre, surrounded by Software Engineering Methods, Formal Technical Review, Measurement, Standards, SCM & SQA, and Testing]

Figure 2.1: Achieving Software Quality

2.3 QUALITY CONCEPTS

What are quality concepts?

• Quality

• Quality control

• Quality assurance

• Cost of quality


The American Heritage Dictionary defines quality as “a characteristic or attribute of something”. As an attribute of an item, quality refers to measurable characteristics: things we are able to compare to known standards, such as length, color, electrical properties, malleability, and so on. However, software, largely an intellectual entity, is more challenging to characterize than physical objects.

Nevertheless, measures of a program’s characteristics do exist. These properties include:

1. Cyclomatic complexity

2. Cohesion

3. Number of function points

4. Lines of code
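As an illustration of the first measure in the list, cyclomatic complexity can be computed from a program’s control-flow graph using McCabe’s formula V(G) = E - N + 2P, where E is the number of edges, N the number of nodes, and P the number of connected components. The sketch below is a minimal example; the edge-list representation and the node names are assumptions made for illustration.

```python
def cyclomatic_complexity(edges, components=1):
    """Compute V(G) = E - N + 2P for a control-flow graph given as (from, to) pairs."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * components


# Control-flow graph of a single if/else statement (hypothetical node names).
IF_ELSE_EDGES = [
    ("entry", "then"),
    ("entry", "else"),
    ("then", "exit"),
    ("else", "exit"),
]

complexity = cyclomatic_complexity(IF_ELSE_EDGES)
```

For the if/else graph there are 4 edges and 4 nodes, so V(G) = 4 - 4 + 2 = 2, which corresponds to the two independent paths through the statement.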

When we examine an item based on its measurable characteristics, two kinds of quality may be encountered:

• Quality of design

• Quality of conformance

2.4 QUALITY OF DESIGN

Quality of design refers to the characteristics that designers specify for an item. The grade of materials, tolerances, and performance specifications all contribute to quality of design. As higher-grade materials are used and tighter tolerances and greater levels of performance are specified, the design quality of a product increases, provided the product is manufactured according to specifications.

2.5 QUALITY OF CONFORMANCE

Quality of conformance is the degree to which the design specifications are followed during manufacturing. Again, the greater the degree of conformance, the higher the level of quality of conformance.

In software development, quality of design encompasses requirements, specifications and design of the system.

Quality of conformance is an issue focused primarily on implementation. If the implementation follows the design and the resulting system meets its requirements and performance goals, conformance quality is high.


2.6 QUALITY CONTROL (QC)

QC is the series of inspections, reviews, and tests used throughout the development cycle to ensure that each work product meets the requirements placed upon it. QC includes a feedback loop to the process that created the work product. The combination of measurement and feedback allows us to tune the process when the work products created fail to meet their specifications. This approach views QC as part of the manufacturing process. QC activities may be fully automated, entirely manual, or a combination of automated tools and human interaction. An essential concept of QC is that all work products have defined and measurable specifications to which we may compare the outputs of each process. The feedback loop is essential to minimize the defects produced.

2.7 QUALITY ASSURANCE (QA)

QA consists of the auditing and reporting functions of management. The goal of quality assurance is to provide management with the data necessary to be informed about product quality, thereby gaining insight and confidence that product quality is meeting its goals. Of course, if the data provided through QA identify problems, it is management’s responsibility to address the problems and apply the necessary resources to resolve quality issues.

2.7.1 Cost of Quality

Cost of quality includes all costs incurred in the pursuit of quality or in performing quality-related activities. Cost of quality studies are conducted to provide a baseline for the current cost of quality, to identify opportunities for reducing the cost of quality, and to provide a normalized basis of comparison. The basis of normalization is usually money. Once we have normalized quality costs on a money basis, we have the necessary data to evaluate where the opportunities lie to improve our process. Furthermore, we can evaluate the effect of changes in money-based terms.

Quality costs may be divided into costs associated with:

• Prevention

• Appraisal

• Failure

Prevention costs include:

• Quality planning

• Formal technical reviews

• Test equipment

• Training

Appraisal costs include activities to gain insight into product condition the “first time through” each process.

Examples of appraisal costs include:

• In-process and inter-process inspection

• Equipment calibration and maintenance

• Testing

Failure costs are costs that would disappear if no defects appeared before shipping a product to the customer. Failure costs may be subdivided into internal and external failure costs.

Internal failure costs are costs incurred when we detect an error in our product prior to shipment. Internal failure costs include:

• Rework

• Repair

• Failure mode analysis

External failure costs are the costs associated with defects found after the product has been shipped to the customer.

Examples of external failure costs are:

1. Complaint Resolution

2. Product return and replacement

3. Helpline support

4. Warranty work
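The cost categories above can be tallied on a money basis, as the text suggests. The sketch below is a minimal illustration: the mapping follows the lists in this section, while the ledger entries and their amounts are hypothetical figures invented for the example, not data from any study.

```python
from collections import defaultdict

# Category for each activity named in the lists above.
CATEGORY_OF = {
    "quality planning": "prevention",
    "formal technical review": "prevention",
    "test equipment": "prevention",
    "training": "prevention",
    "inspection": "appraisal",
    "equipment calibration and maintenance": "appraisal",
    "testing": "appraisal",
    "rework": "internal failure",
    "repair": "internal failure",
    "failure mode analysis": "internal failure",
    "complaint resolution": "external failure",
    "product return and replacement": "external failure",
    "helpline support": "external failure",
    "warranty work": "external failure",
}


def cost_of_quality(entries):
    """Sum (activity, cost) pairs into totals per cost-of-quality category."""
    totals = defaultdict(float)
    for activity, cost in entries:
        totals[CATEGORY_OF[activity]] += cost
    return dict(totals)


# Hypothetical ledger, for illustration only.
SAMPLE_LEDGER = [
    ("training", 500.0),
    ("testing", 1200.0),
    ("rework", 300.0),
    ("warranty work", 900.0),
    ("quality planning", 250.0),
]

totals = cost_of_quality(SAMPLE_LEDGER)
```

Comparing the totals per category over time is one way to see whether spending on prevention and appraisal is reducing failure costs.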

2.8 SOFTWARE QUALITY ASSURANCE (SQA)

Quality is defined as conformance to explicitly stated functional and performance requirements, explicitly documented development standards, and implicit characteristics that are expected of all professionally developed software.


The above definition emphasizes three important points.

1. Software requirements are the foundation from which quality is measured. Lack of conformance to requirements is lack of quality.

2. Specified standards define a set of development criteria that guide the manner in which software is engineered. If the criteria are not followed, lack of quality will almost surely result.

3. There is a set of implicit requirements that often goes unmentioned (e.g. the desire for good maintainability). If software conforms to its explicit requirements but fails to meet implicit requirements, software quality is questionable.

2.8.1 Background Issues

QA is an essential activity for any business that produces products to be used by others.

The SQA group serves as the customer’s in-house representative. That is, the people who perform SQA must look at the software from the customer’s point of view.

The SQA group attempts to answer the questions below and hence ensure the quality of the software:

1. Has software development been conducted according to pre-established standards?

2. Have technical disciplines properly performed their role as part of the SQA activity?

SQA Activities

SQA Plan is interpreted as shown in Fig 2.2

SQA comprises a variety of tasks associated with two different constituencies:

1. The software engineers, who do technical work such as

l Performing quality assurance by applying technical methods

l Conducting formal technical reviews

l Performing well-planned software testing

2. The SQA group, which has responsibility for

l Quality assurance planning and oversight

l Record keeping

l Analysis and reporting

QA activities performed by the SE team and the SQA group are governed by a plan that identifies the following:

l Evaluations to be performed.

l Audits and reviews to be performed.

l Standards that are applicable to the project.

l Procedures for error reporting and tracking

l Documents to be produced by the SQA group

l Amount of feedback provided to software project team.

[Figure 2.2: Software Quality Assurance Plan. Diagram labels: SQA Planning Team Activities, Software Engineers Activities, SQA Plan.]

The activities performed by the SQA group and the SE team are as follows:

l Prepare an SQA plan for a project.

l Participate in the development of the project’s software description.

l Review software engineering activities to verify compliance with the defined software process.

l Audit designated software work products to verify compliance with those defined as part of the software process.

l Ensure that deviations in software work and work products are documented and handled according to a documented procedure.

l Record any noncompliance and report it to senior management.

2.8.2 Software Reviews

Software reviews are a “filter” for the software engineering process. That is, reviews are applied at

various points during software development and serve to uncover errors that can then be removed.

Software reviews serve to “purify” the software work products that occur as a result of analysis, design,

and coding.

Any review is a way of using the diversity of a group of people to:

1. Point out needed improvements in the product of a single person or a team;

2. Confirm those parts of a product in which improvement is either not desired or not needed.

3. Achieve technical work of more uniform, or at least more predictable, quality than can be achieved without reviews, in order to make technical work more manageable.

There are many different types of reviews that can be conducted as part of software engineering, such as

1. An informal meeting in which technical problems are discussed.

2. A formal presentation of software design to an audience of customers, management, and technical staff.

3. Formal technical review is the most effective filter from a quality assurance standpoint. Conducted

by software engineers for software engineers, the FTR is an effective means of improving

software quality.

2.8.3 Cost impact of Software Defects

To illustrate the cost impact of early error detection, we consider a series of relative costs that are based on actual cost data collected for large software projects.

Assume that an error uncovered during design will cost 1.0 monetary unit to correct. Relative to this

cost, the same error uncovered just before testing commences will cost 6.5 units; during testing 15 units;

and after release, between 60 and 100 units.
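The relative costs above can be turned into a small calculation. The Python sketch below is illustrative only: the function name `correction_cost`, the phase keys, and the sample error counts are assumptions made for this example. Only the cost ratios (1.0, 6.5, 15, and 60 to 100) come from the text.

```python
# Relative cost to correct one error, keyed by the phase in which it is found.
# The ratios are taken from the text; the key names are hypothetical.
RELATIVE_COST = {
    "design": (1.0, 1.0),
    "before_testing": (6.5, 6.5),
    "during_testing": (15.0, 15.0),
    "after_release": (60.0, 100.0),  # the text gives a range, so keep both ends
}


def correction_cost(errors_by_phase):
    """Return (low, high) total correction cost in relative monetary units."""
    low = high = 0.0
    for phase, count in errors_by_phase.items():
        unit_low, unit_high = RELATIVE_COST[phase]
        low += count * unit_low
        high += count * unit_high
    return low, high


# Hypothetical example: the same ten errors, found early versus found late.
found_early = correction_cost({"design": 10})
found_late = correction_cost({"after_release": 10})
print("found during design:", found_early)
print("found after release:", found_late)
```

Under these assumptions, ten errors corrected during design cost 10 units in total, while the same ten errors corrected after release cost between 600 and 1000 units.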

2.8.4 Defect Amplification and Removal

A defect amplification model can be used to illustrate the generation and detection of errors during

preliminary design, detail design, and coding steps of the software engineering process. The model is

illustrated schematically in Figure 2.3.

A box represents a software development step. During the step, errors may be inadvertently generated. Review may fail to uncover newly generated errors and errors from previous steps, resulting in some number of errors that are passed through. In some cases, errors passed through from previous steps are amplified (amplification factor, x) by current work. The box subdivisions represent each of these characteristics and the percent efficiency for detecting errors, a function of the thoroughness of the review.

[Figure 2.3: Defect Amplification Model. A development step is drawn as a box with two parts. Defects: errors passed through, amplified errors (1:x), and newly generated errors. Detection: percent efficiency for error detection. Input: errors from the previous step. Output: errors passed to the next step.]

Figure 2.4 illustrates a hypothetical example of defect amplification for a software development process in which no reviews are conducted. As shown in the figure, each test step is assumed to uncover and correct fifty percent of all incoming errors without introducing new errors (an optimistic assumption). Ten preliminary design errors are amplified to 94 errors before testing commences. Twelve latent defects are released to the field. Figure 2.5 considers the same conditions except that design and code reviews are conducted as part of each development step. In this case, ten initial preliminary design errors are amplified to 24 errors before testing commences. Only three latent defects exist. By recalling the relative costs associated with the discovery and correction of errors, overall costs (with and without review for our hypothetical example) can be established.

To conduct reviews, a developer must expend time and effort, and the development organization must spend money. However, the results of the preceding example leave little doubt that we have encountered a “pay now or pay much more later” syndrome.

Formal technical reviews (for design and other technical activities) provide a demonstrable cost benefit

and they should be conducted.
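The arithmetic behind a single box of the defect amplification model can be sketched in a few lines of Python. This is a minimal sketch, not the author's method: the function names `errors_out` and `run_tests` are hypothetical, and the input values for the coding step (5 errors passed through, 10 errors amplified by x = 3, 25 new errors, 60% detection efficiency) are assumptions chosen to be consistent with the totals quoted in the text for the case where reviews are conducted (24 errors before testing, 3 latent defects).

```python
def errors_out(passed_through, amplified, x, new_errors, detection_efficiency):
    """Errors leaving one development step.

    passed_through: incoming errors carried over unchanged
    amplified: incoming errors that are each amplified by the factor x
    new_errors: errors newly generated in this step
    detection_efficiency: fraction (0.0 to 1.0) of errors the review removes
    """
    total = passed_through + amplified * x + new_errors
    return total * (1.0 - detection_efficiency)


def run_tests(errors_in, test_efficiencies):
    """Apply successive test steps that introduce no new errors."""
    remaining = errors_in
    for efficiency in test_efficiencies:
        remaining *= (1.0 - efficiency)
    return remaining


# Assumed coding step with reviews: 5 passed through, 10 amplified 1:3, 25 new.
before_testing = errors_out(5, 10, 3, 25, 0.60)

# Integration, validation, and system test each remove fifty percent.
latent = run_tests(before_testing, [0.5, 0.5, 0.5])

print("errors before testing:", round(before_testing))
print("latent errors released:", round(latent))
```

Setting `detection_efficiency` to zero in the design steps models the no-review case, which is what lets errors accumulate before testing begins.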

[Figure 2.4: Defect Amplification - No Reviews. Steps shown: Preliminary Design, Detail Design, Code/Unit Test, Integration Test, Validation Test, System Test, Latent Errors.]

[Figure 2.5: Defect Amplification - Reviews Conducted. Steps shown: Preliminary Design, Detail Design, Code/Unit Test, Integration Test, Validation Test, System Test, Latent Errors.]

2.9 FORMAL TECHNICAL REVIEWS (FTR)

FTR is a SQA activity that is performed by software engineers.

Objectives of the FTR are

l To uncover errors in function, logic, or implementation for any representation of the software.

l To verify that software under review meets its requirements.

l To ensure that software has been represented according to predefined standards

l To achieve software that is developed in a uniform manner

l To make projects more manageable

In addition, the FTR serves as a training ground, enabling junior engineers to observe different approaches to software analysis, design, and implementation. The FTR also serves to promote backup and continuity because a number of people become familiar with parts of the software that they may not have otherwise seen.

The FTR is actually a class of reviews that includes walkthroughs, inspections, round-robin reviews, and other small group technical assessments of software. Each FTR is conducted as a meeting and will be successful only if it is properly planned, controlled, and attended.

Types of Formal Technical Review

While the focus of this research is on the individual evaluation aspects of reviews, for context several

other FTR techniques are discussed as well. Among the most common forms of FTR are the following:

1. Desk Checking, or reading over a program by hand while sitting at one’s desk, is the oldest

software review technique [Adrion et al. 1982]. Strictly speaking, desk checking is not a form of

FTR since it does not involve a formal process or a group. Moreover, desk checking is generally

perceived as ineffective and unproductive due to (a) its lack of discipline and (b) the general

ineffectiveness of people in detecting their own errors. To correct for the second problem,

programmers often swap programs and check each other’s work. Since desk checking is an

individual process not involving group dynamics, research in this area would be relevant but

none applicable to the current research was found.

It should be noted that Humphrey [1995] has developed a review method, called Personal

Review (PR), which is similar to desk checking. In PR, each programmer examines his own

products to find as many defects as possible utilizing a disciplined process in conjunction with

Humphrey’s Personal Software Process (PSP) to improve his own work. The review strategy

includes the use of checklists to guide the review process, review metrics to improve the process,

and defect causal analysis to prevent the same defects from recurring in the future. The approach

taken in developing the Personal Review process is an engineering one; no reference is made in

Humphrey [1995] to cognitive theory.

2. Peer Rating is a technique in which anonymous programs are evaluated in terms of their

overall quality, maintainability, extensibility, usability and clarity by selected programmers who

have similar backgrounds [Myers 1979]. Shneiderman [1980] suggests that peer ratings of

programs are productive, enjoyable, and non-threatening experiences. The technique is often

referred to as Peer Reviews [Shneiderman 1980], but some authors use the term peer reviews

for generic review methods involving peers [Paulk et al 1993; Humphrey 1989].

3. Walkthroughs are presentation reviews in which a review participant, usually the software

author, narrates a description of the software and the other members of the review group

provide feedback throughout the presentation [Freedman and Weinberg 1990; Gilb and Graham

1993]. It should be noted that the term “walkthrough” has been used in the literature variously.

Some authors unite it with “structured” and treat it as a disciplined, formal review process

[Myers 1979; Yourdon 1989; Adrion et al. 1982]. However, the literature generally describes

walkthrough as an undisciplined process without advance preparation on the part of reviewers

and with the meeting focus on education of participants [Fagan 1976].

4. Round-robin Review is an evaluation process in which a copy of the review materials is made

available and routed to each participant; the reviewers then write their comments/questions

concerning the materials and pass the materials with comments to another reviewer and to the

moderator or author eventually [Hart 1982].

5. Inspection was developed by Fagan [1976, 1986] as a well-planned and well-defined group

review process to detect software defects – defect repair occurs outside the scope of the

process. The original Fagan Inspection (FI) is the most cited review method in the literature and

is the source for a variety of similar inspection techniques [Tjahjono 1996]. Among the FI-

derived techniques are Active Design Review [Parnas and Weiss 1987], Phased Inspection

[Knight and Myers 1993], N-Fold Inspection [Schneider et al. 1992], and FTArm [Tjahjono

1996]. Unlike the review techniques previously discussed, inspection is often used to control the

quality and productivity of the development process.

A Fagan Inspection consists of six well-defined phases:

i. Planning. Participants are selected and the materials to be reviewed are prepared and checked

for review suitability.

ii. Overview. The author educates the participants about the review materials through a presentation.

iii. Preparation. The participants learn the materials individually.

iv. Meeting. The reader (a participant other than the author) narrates or paraphrases the review

materials statement by statement, and the other participants raise issues and questions. Questions

continue on a point only until an error is recognized or the item is deemed correct.

v. Rework. The author fixes the defects identified in the meeting.

vi. Follow-up. The “corrected” products are reinspected.

Practitioner Evaluation is primarily associated with the Preparation phase.

In addition to classification by technique-type, FTR may also be classified on other dimensions, including

the following:

A. Small vs. Large Team Reviews. Siy [1996] classifies reviews into those conducted by small

(1-4 reviewers) [Bisant and Lyle 1996] and large (more than 4 reviewers) [Fagan 1976, 1986]

teams. If each reviewer depends on different expertise and experiences, a large team should

allow a wider variety of defects to be detected and thus better coverage. However, a large team

requires more effort due to more individuals inspecting the artifact, generally involves greater

scheduling problems [Ballman and Votta 1994], and may make it more difficult for all participants

to participate fully.

B. No vs. Single vs. Multiple Session Reviews. The traditional Fagan Inspection provided for one

session to inspect the software artifact, with the possibility of a follow-up session to inspect

corrections. However, variants have been suggested.

Humphrey [1989] comments that three-quarters of the errors found in well-run inspections are

found during preparation. Based on an economic analysis of a series of inspections at AT&T,

Votta [1993] argues that inspection meetings are generally not economic and should be replaced

with depositions, where the author and (optionally) the moderator meet separately with inspectors

to collect their results.

On the other hand, some authors [Knight and Myers 1993; Schneider et al. 1992] have argued

for multiple sessions, conducted either in series or parallel. Gilb and Graham [1993] do not use

multiple inspection sessions but add a root cause analysis session immediately after the inspection

meeting.

C. Nonsystematic vs. Systematic Defect-Detection Technique Reviews. The most frequently

used detection methods (ad hoc and checklist) rely on nonsystematic techniques, and reviewer

responsibilities are general and not differentiated for single session reviews [Siy 1996]. However,

some methods employ more prescriptive techniques, such as questionnaires [Parnas and Weiss

1987] and correctness proofs [Britcher 1988].

D. Single Site vs. Multiple Site Reviews. The traditional FTR techniques have assumed that the

group-meeting component would occur face-to-face at a single site. However, with improved

telecommunications, and especially with computer support (see item F below), it has become

increasingly feasible to conduct even the group meeting from multiple sites.

E. Synchronous vs. Asynchronous Reviews. The traditional FTR techniques have also assumed

that the group meeting component would occur in real-time; i.e., synchronously. However, some

newer techniques that eliminate the group meeting or are based on computer support utilize

asynchronous reviews.

F. Manual vs. Computer-supported Reviews. In recent years, several computer supported review

systems have been developed [Brothers et al. 1990; Johnson and Tjahjono 1993; Gintell et al.

1993; Mashayekhi et al 1994]. The type of support varies from simple augmentation of the

manual practices [Brothers et al. 1990; Gintell et al. 1993] to totally new review methods [Johnson

and Tjahjono 1993].

Economic Analyses of Formal Technical Review

Wheeler et al. [1996], after reviewing a number of studies that support the economic benefit of FTR,

conclude that inspections reduce the number of defects throughout development, cause defects to be

found earlier in the development process where they are less expensive to correct, and uncover defects

that would be difficult or impossible to discover by testing. They also note “these benefits are not without

their costs, however. Inspections require an investment of approximately 15 percent of the total development

cost early in the process [p. 11].”

In discussing overall economic effects, Wheeler et al. cite Fagan [1986] to the effect that investment

in inspections has been reported to yield a 25-to-35 percent overall increase in productivity. They also

reproduce a graphical analysis from Boehm [1987] that indicates inspections reduce total development

cost by approximately 30%.

The Wheeler et al. [1996] analysis does not specify the relative value of Practitioner Evaluation to

FTR, but two recent economic analyses provide indications.

l Votta [1993]. After analyzing data collected from 13 traditional inspections conducted at AT&T,

Votta reports that the approximately 4% increase in faults found at collection meetings (synergy)

does not economically justify the development delays caused by the need to schedule meetings

and the additional developer time associated with the actual meetings. He also argues that it is

not cost-effective to use the collection meeting to reduce the number of items incorrectly identified

as defective prior to the meeting (“false positives”). Based on these findings, he concludes that

almost all inspection meetings requiring all reviewers to be present should be replaced with

Depositions, which are three person meetings with only the author, moderator, and one reviewer

present.

l Siy [1996]. In his analysis of the factors driving inspection costs and benefits, Siy reports that

changes in FTR structural elements, such as group size, number of sessions, and coordination of

multiple sessions, were largely ineffective in improving the effectiveness of inspections. Instead,

inputs into the process (reviewers and code units) accounted for more outcome variation than

structural factors. He concludes by stating “better techniques by which reviewers detect

defects, not better process structures, are the key to improving inspection effectiveness

[Abstract, p. 2].” (emphasis added)

Votta’s analysis effectively attributes most of the economic benefit of FTR to PE, and Siy’s explicitly

states that better PE techniques “are the key to improving inspection effectiveness.” These findings, if

supported by additional research, would further support the contention that a better understanding of

Practitioner Evaluation is necessary.

Psychological Aspects of FTR

Work on the psychological aspects of FTR can be categorized into four groups.

1. Egoless Programming. Gerald Weinberg [1971] began the examination of psychological issues

associated with software review in his work on egoless programming. According to Weinberg,

programmers are often reluctant to allow their programs to be read by other programmers

because the programs are often considered to be an extension of the self and errors discovered

in the programs to be a challenge to one’s self-image. Two implications of this theory are as

follows:

i. The ability of a programmer to find errors in his own work tends to be impaired since he

tends to justify his own actions, and it is therefore more effective to have other people check

his work.

ii. Each programmer should detach himself from his own work. The work should be considered

a public property where other people can freely criticize, and thus, improve its quality; otherwise,

one tends to become defensive, and reluctant to expose one’s own failures.

These two concepts have led to the justification of FTR groups, as well as the establishment

of independent quality assurance groups that specialize in finding software defects in many

software organizations [Humphrey 1989].

2. Role of Management. Another psychological aspect of FTR that has been examined is the

recording of data and its dissemination to management. According to Dobbins [1987], this must

be done in such a way that individual programmers will not feel intimidated or threatened.

3. Positive Psychological Impacts. Hart [1982] observes that reviews can make one more careful

in writing programs (e.g., double checking code) in anticipation of having to present or share the

programs with other participants. Thus, errors are often eliminated even before the actual review

sessions.

4. Group Process. Most FTR methods are implemented using small groups. Therefore, several

key issues from small group theory apply to FTR, such as groupthink (tendency to suppress

dissent in the interests of group harmony), group deviants (influence by minority), and domination

of the group by a single member. Other key issues include social facilitation (presence of others

boosts one’s performance) and social loafing (one member free rides on the group’s effort)

[Myers 1990]. The issue of moderator domination in inspections is also documented in the

literature [Tjahjono 1996].

Perhaps the most interesting research from the perspective of the current study is that of Sauer

et al. [2000]. This research is unusual in that it has an explicit theoretical basis and outlines a

behaviorally motivated program of research into the effectiveness of software development

technical reviews. The finding that most of the variation in effectiveness of software development

technical reviews is the result of variations in expertise among the participants provides additional

motivation for developing a solid understanding of Formal Technical Review at the individual

level.

It should be noted that all of this work, while based on psychological theory, does not address the issue

of how practitioners actually evaluate software artifacts.

2.9.1 The Review Meeting

The focus of the FTR is on a work product, that is, a component of the software.

At the end of the review, all attendees of the FTR must decide whether to

1. Accept the work product without further modification.

2. Reject the work product due to severe errors (once corrected, another review must be performed).

3. Accept the work product provisionally (minor errors have been encountered and must be corrected, but no additional review will be required).

Once the decision is made, all FTR attendees complete a sign-off indicating their participation in the

review and their concurrence with the review team findings.

2.9.2 Review reporting and record keeping

The review summary report is typically a single-page form. It becomes part of the project historical record and may be distributed to the project leader and other interested parties. The review issues list serves two purposes.

1. To identify problem areas within the product

2. To serve as an action item checklist that guides the producer as corrections are made.

An issues list is normally attached to the summary report.

It is important to establish a follow-up procedure to ensure that items on the issues list have been properly corrected. Unless this is done, it is possible that issues raised can “fall between the cracks”. One approach is to assign responsibility for follow-up to the review leader. A more formal approach assigns responsibility to an independent SQA group.

2.9.3 Review Guidelines

The following represents a minimum set of guidelines for formal technical reviews

l Review the product, not the producer

l Set an agenda and maintain it

l Limit debate and rebuttal

l Enunciate problem areas but don’t attempt to solve every problem noted

l Take written notes

l Limit the number of participants and insist upon advance preparation

l Develop a checklist for each work product that is likely to be reviewed

l Allocate resources and time schedule for FTRs.

l Conduct meaningful training for all reviewers

l Review your earlier reviews

2.10 STATISTICAL QUALITY ASSURANCE

Statistical quality assurance reflects a growing trend throughout industry to become more quantitative

about quality. For software, statistical quality assurance implies the following steps

l Information about software defects is collected and categorized

l An attempt is made to trace each defect to its underlying cause

l Using the Pareto principle (80% of the defects can be traced to 20% of all possible causes), isolate the 20% (the “vital few”)

l Once the vital few causes have been identified, move to correct the problems that have caused

the defects.

This relatively simple concept represents an important step toward the creation of an adaptive software

engineering process in which changes are made to improve those elements of the process that introduce

errors. To illustrate the process, assume that a software development organization collects information on

defects for a period of one year. Some errors are uncovered as software is being developed. Other

defects are encountered after the software has been released to its end user.

Although hundreds of errors are uncovered, all can be traced to one of the following causes.

q Incomplete or Erroneous Specification (IES)

q Misinterpretation of Customer Communication (MCC)

q Intentional Deviation from Specification (IDS)

q Violation of Programming Standards ( VPS )

q Error in Data Representation (EDR)

q Inconsistent Module Interface (IMI)

q Error in Design Logic (EDL)

q Incomplete or Erroneous Testing (IET)

q Inaccurate or Incomplete Documentation (IID)

q Error in Programming Language Translation of design (PLT)

q Ambiguous or inconsistent Human-Computer Interface (HCI)

q Miscellaneous (MIS)

To apply statistical SQA, Table 2.1 is built. Once the vital few causes are determined, the software

development organization can begin corrective action.

After analysis, design, coding, testing, and release, the following data are gathered.

Ei = The total number of errors uncovered during the ith step in the software engineering process

Si = The number of serious errors

Mi = The number of moderate errors

Ti = The number of minor errors

PS = Size of the product (LOC, design statements, pages of documentation) at the ith step

Ws, Wm, Wt = Weighting factors for serious, moderate, and minor (trivial) errors, where the recommended values are Ws = 10, Wm = 3, Wt = 1.

The weighting factors for each phase should become larger as development progresses. This rewards

an organization that finds errors early.

At each step in the software engineering process, a phase index, PIi, is computed:

PIi = Ws (Si/Ei) + Wm (Mi/Ei) + Wt (Ti/Ei)

The error index, EI, is computed by calculating the cumulative effect of each PIi, weighting errors encountered later in the software engineering process more heavily than those encountered earlier:

EI = Σ (i x PIi) / PS

= (PI1 + 2PI2 + 3PI3 + ... + iPIi) / PS

The error index can be used in conjunction with the information collected in Table 2.1 to develop an overall indication of improvement in software quality.
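The phase index and error index formulas can be checked with a short Python sketch. The function names, the sample error counts, and the product size below are hypothetical and serve only as a worked example. The sketch also assumes that Ei equals Si + Mi + Ti, and that a step with no errors contributes a phase index of zero; the text states neither explicitly.

```python
def phase_index(serious, moderate, minor, ws=10, wm=3, wt=1):
    """PIi = Ws (Si/Ei) + Wm (Mi/Ei) + Wt (Ti/Ei), with Ei = Si + Mi + Ti."""
    total = serious + moderate + minor  # Ei (assumed to be the sum of the three)
    if total == 0:
        return 0.0  # assumption: a step with no errors contributes nothing
    return ws * serious / total + wm * moderate / total + wt * minor / total


def error_index(phases, product_size):
    """EI = sum(i * PIi) / PS, where phases is a list of (Si, Mi, Ti) tuples."""
    if product_size <= 0:
        raise ValueError("product_size must be positive")
    weighted = sum(
        step * phase_index(s, m, t)
        for step, (s, m, t) in enumerate(phases, start=1)
    )
    return weighted / product_size


# Hypothetical data: two steps, each with 10% serious, 30% moderate, 60% minor.
phases = [(1, 3, 6), (2, 6, 12)]
print("PI for step 1:", phase_index(*phases[0]))
print("EI:", error_index(phases, product_size=1000))
```

In this example both steps have a phase index of about 2.5, so EI is about (1 x 2.5 + 2 x 2.5) / 1000 = 0.0075. The second step counts twice as heavily as the first, which is how the index penalizes errors found late.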

Table 2.1: DATA COLLECTION FOR STATISTICAL SQA

          Total        Serious      Moderate     Minor
Error     No.    %     No.    %     No.    %     No.    %
IES       205   22      34   27      68   18     103   24
MCC       156   17      12    9      68   18      76   17
IDS        48    5       1    1      24    6      23    5
VPS        25    3       0    0      15    4      10    2
EDR       130   14      26   20      68   18      36    8
IMI        58    6       9    7      18    5      31    7
EDL        45    5      14   11      12    3      19    4
IET        95   10      12    9      35    9      48   11
IID        36    4       2    2      20    5      14    3
PLT        60    6      15   12      19    5      26    6
HCI        28    3       3    2      17    4       8    2
MIS        56    6       0    0      15    4      41    9
TOTALS    942  100     128  100     379  100     435  100
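The "vital few" causes can be isolated from the Total column of the table with a simple ranking. The Python sketch below is one possible way to do this, not a procedure prescribed by the text: the function name `vital_few` and the 50% cumulative cutoff are assumptions chosen for illustration, while the counts are copied from the table.

```python
# Total errors per cause, copied from the Total "No." column of the table.
DEFECT_TOTALS = {
    "IES": 205, "MCC": 156, "IDS": 48, "VPS": 25,
    "EDR": 130, "IMI": 58, "EDL": 45, "IET": 95,
    "IID": 36, "PLT": 60, "HCI": 28, "MIS": 56,
}


def vital_few(counts, threshold=0.5):
    """Return the largest causes whose cumulative share first reaches threshold."""
    total = sum(counts.values())
    if total == 0:
        return []
    # Rank causes from most to fewest errors.
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    selected, running = [], 0
    for cause, count in ranked:
        selected.append(cause)
        running += count
        if running / total >= threshold:
            break
    return selected


causes = vital_few(DEFECT_TOTALS)
share = sum(DEFECT_TOTALS[c] for c in causes) / sum(DEFECT_TOTALS.values())
print(causes, f"{share:.0%}")
```

With the totals from the table, the three largest causes (IES, MCC, and EDR) account for 491 of the 942 errors, roughly 52%, so corrective action would start with specifications, customer communication, and data representation.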

2.11 SOFTWARE RELIABILITY

Software reliability, unlike many other quality factors, can be measured directly and estimated using historical and developmental data. Software reliability is defined in statistical terms as the “probability of failure-free operation of a computer program in a specified environment for a specified time”. To illustrate, program x is estimated to have a reliability of 0.96 over 8 elapsed processing hours. In other words, if program x were to be executed 100 times and required 8 hours of elapsed processing time, it is likely to operate correctly 96 times out of 100.

2.11.1 Measures of Reliability and Availability

In a computer-based system, a simple measure of reliability is Mean Time Between Failure

(MTBF), where

MTBF = MTTF+MTTR

The acronyms MTTF and MTTR stand for Mean Time To Failure and Mean Time To Repair, respectively.

In addition to a reliability measure, we must develop a measure of availability. Software availability is the

probability that a program is operating according to requirements at a given point in time and is defined as:

Availability = MTTF / (MTTF+MTTR) x100%

The MTBF reliability measure is equally sensitive to MTTF and MTTR. The availability measure is somewhat more sensitive to MTTR, an indirect measure of the maintainability of the software.
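The two measures are simple enough to compute directly. The following Python sketch uses hypothetical function names and sample values (an MTTF of 98 hours and an MTTR of 2 hours); only the two formulas come from the text.

```python
def mtbf(mttf, mttr):
    """Mean Time Between Failure: MTBF = MTTF + MTTR."""
    return mttf + mttr


def availability(mttf, mttr):
    """Availability = MTTF / (MTTF + MTTR) x 100%."""
    cycle = mttf + mttr
    if cycle <= 0:
        raise ValueError("MTTF + MTTR must be positive")
    return mttf / cycle * 100.0


# Hypothetical system: runs 98 hours between failures, takes 2 hours to repair.
print("MTBF (hours):", mtbf(98, 2))
print("Availability (%):", round(availability(98, 2), 2))

# Halving the repair time raises availability even though MTTF is unchanged.
print("Availability with MTTR = 1 (%):", round(availability(98, 1), 2))
```

Under these assumed values the system has an MTBF of 100 hours and is available about 98% of the time. Reducing MTTR from 2 hours to 1 hour raises availability to about 99%, which illustrates why availability reflects maintainability.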

2.11.2 Software Safety and Hazard Analysis

Software safety and hazard analysis are SQA activities that focus on the identification and assessment of potential hazards that may impact software negatively and cause an entire system to fail. If hazards can be identified early in the software engineering process, software design features can be specified that will either eliminate or control potential hazards.

A modeling and analysis process is conducted as part of safety. Initially hazards are identified and

categorized by criticality and risk.

Once hazards are identified and analyzed, safety-related requirements can be specified for the software; i.e., the specification can contain a list of undesirable events and the desired system responses to these events. The role of software in managing undesirable events is then indicated.

Although software reliability and software safety are closely related to one another, it is important to

understand the subtle difference between them. Software reliability uses statistical analysis to determine the likelihood that a software failure will occur. However, the occurrence of a failure does not necessarily result in a hazard or mishap. Software safety examines the ways in which failures result in conditions that can lead to a mishap. That is, failures are not considered in a vacuum but are evaluated in the context of an entire computer-based system.

2.12 THE SQA PLAN

The SQA plan provides a road map for instituting software quality assurance. Developed by the SQA group and the project team, the plan serves as a template for SQA activities that are instituted for each software project.

The SQA plan, as defined in ANSI/IEEE Standards 730-1984 and 983-1986, is outlined below.

I. Purpose of Plan

II. References

III. Management

1. Organization

2. Tasks

3. Responsibilities

IV. Documentation

1. Purpose

2. Required software engineering documents

3. Other Documents

V. Standards, Practices and conventions

1. Purpose

2. Conventions

VI. Reviews and Audits

1. Purpose

2. Review requirements

a. Software requirements review

b. Design reviews

c. Software V & V reviews

d. Functional Audits

e. Physical Audit

f. In-process Audits

g. Management reviews

VII. Test

VIII. Problem reporting and corrective action

IX. Tools, techniques and methodologies

X. Code Control

XI. Media Control

XII. Supplier Control

XIII. Record Collection, Maintenance, and retention

XIV. Training

XV. Risk Management.

2.12.1 The ISO Approach to Quality Assurance System

ISO 9000 describes the elements of a quality assurance system in general terms. These elements include the

organizational structure, procedures, processes, and resources needed to implement quality planning, quality

control, quality assurance, and quality improvement. However, ISO 9000 does not describe how an

organization should implement these quality system elements.

Consequently, the challenge lies in designing and implementing a quality assurance system that meets

the standard and fits the company’s products, services, and culture.

2.12.2 The ISO 9001 standard

ISO 9001 is the quality assurance standard that applies to software engineering. The standard contains

20 requirements that must be present for an effective quality assurance system. Because the ISO 9001

standard is applicable in all engineering disciplines, a special set of ISO guidelines has been developed to

help interpret the standard for use in the software process.

The 20 requirements delineated by ISO 9001 address the following topics:

1. Management responsibility

2. Quality system

3. Contract review

4. Design control

5. Document and data control

6. Purchasing

7. Control of customer supplied product

8. Product identification and traceability

9. Process control

10. Inspection and testing

11. Control of inspection, measuring, and test equipment

12. Inspection and test status

13. Control of nonconforming product

14. Corrective and preventive action

15. Handling, storage, packing, preservation, and delivery

16. Control of quality records

17. Internal quality audits

18. Training

19. Servicing

20. Statistical techniques

In order for a software organization to become registered to ISO 9001, it must establish policies and

procedures to address each of the requirements noted above and then be able to demonstrate that these

policies and procedures are being followed.
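
The two-part condition above (a documented policy for every requirement, plus evidence that it is followed) can be sketched as a simple gap check. This is only an illustrative sketch: the `registration_gaps` function, the `status` layout, and the sample self-assessment are assumptions made for this example and are not part of ISO 9001 or of any registrar's procedure.

```python
# The 20 ISO 9001 topics listed above.
REQUIREMENTS = [
    "Management responsibility",
    "Quality system",
    "Contract review",
    "Design control",
    "Document and data control",
    "Purchasing",
    "Control of customer supplied product",
    "Product identification and traceability",
    "Process control",
    "Inspection and testing",
    "Control of inspection, measuring, and test equipment",
    "Inspection and test status",
    "Control of nonconforming product",
    "Corrective and preventive action",
    "Handling, storage, packing, preservation, and delivery",
    "Control of quality records",
    "Internal quality audits",
    "Training",
    "Servicing",
    "Statistical techniques",
]


def registration_gaps(status):
    """Return the requirements that are not yet both documented and followed.

    `status` maps a requirement name to a (policy_documented, evidence_of_use)
    pair of booleans; a requirement missing from `status` counts as a gap.
    """
    gaps = []
    for requirement in REQUIREMENTS:
        documented, followed = status.get(requirement, (False, False))
        if not (documented and followed):
            gaps.append(requirement)
    return gaps


# Hypothetical self-assessment: everything in place except two topics.
status = {name: (True, True) for name in REQUIREMENTS}
status["Training"] = (True, False)    # policy written, no evidence of use
del status["Statistical techniques"]  # not addressed at all

print(registration_gaps(status))
```

An empty result would mean that every requirement has both a policy and evidence that the policy is followed.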

2.12.3 Capability Maturity Model (CMM)

The Capability Maturity Model for Software (also known as the CMM and SW-CMM) has been used by

many organizations to identify best practices that help them increase the maturity of their processes.

It was developed by the software development community along with the Software Engineering Institute

and Carnegie Mellon University, under the direction of the U.S. Department of Defense.

It is applicable to software companies of any size. Its five levels, shown in Fig 2.6, provide a simple

means to assess a company’s software development maturity and determine the key practices it could

adopt to move up to the next level of maturity.

5. Optimizing: Continuous process improvement through quantitative feedback and new approaches

4. Managed: Controlled process

3. Defined: Organizational level thinking

2. Repeatable: Project level thinking

1. Initial: Ad hoc and chaotic process

Fig 2.6: The software capability maturity model is used to assess a software company’s maturity at software

development

Level 1: Initial. The software development processes at this level are ad hoc and often chaotic.

There are no general practices for planning, monitoring or controlling the process. The test process is just

as ad hoc as the rest of the process.

Level 2: Repeatable. This maturity level is best described as project level thinking. Basic project

management processes are in place to track the cost, schedule, functionality and quality of the project.

Basic software testing practices, such as test plans and test cases, are used.

Level 3: Defined. Organizational, not just project specific, thinking comes into play at this level.

Common management and engineering activities are standardized and documented. These standards are

adapted and approved for use in different projects. Test documents and plans are reviewed and approved

before testing begins.

Level 4: Managed. At this maturity level, the organization’s process is under statistical control. Product

quality is specified quantitatively beforehand and the software isn’t released until that goal is met.

Level 5: Optimizing. This level is called optimizing because the process continuously improves from Level 4.

New technologies and processes are attempted, the results are measured, and both incremental and

revolutionary changes are instituted to achieve even better quality levels.
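
The Level 4 rule described above, that product quality is specified quantitatively beforehand and the software is not released until the goal is met, can be sketched as a release gate. The metric names, the thresholds, and the `QualityGoal` class below are hypothetical and chosen only for illustration; the CMM itself does not prescribe particular metrics or limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualityGoal:
    """A quantitative goal fixed before development starts (hypothetical)."""
    metric: str
    limit: float
    higher_is_better: bool

    def met_by(self, value):
        """True if the measured value satisfies this goal."""
        if self.higher_is_better:
            return value >= self.limit
        return value <= self.limit


def unmet_goals(goals, measured):
    """Names of goals that are unmeasured or whose measured value misses the limit."""
    return [
        g.metric
        for g in goals
        if g.metric not in measured or not g.met_by(measured[g.metric])
    ]


def may_release(goals, measured):
    """The release is allowed only when every goal is met."""
    return not unmet_goals(goals, measured)


# Hypothetical goals agreed on before the project begins.
goals = [
    QualityGoal("defects_per_kloc", limit=0.5, higher_is_better=False),
    QualityGoal("test_pass_rate", limit=0.98, higher_is_better=True),
]

print(may_release(goals, {"defects_per_kloc": 0.8, "test_pass_rate": 0.99}))
print(may_release(goals, {"defects_per_kloc": 0.4, "test_pass_rate": 0.99}))
```

Treating an unmeasured metric as an unmet goal is a deliberate choice in this sketch: a goal that has not been measured cannot be shown to have been met.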

Perspective on CMM ratings: During 1997-2001, 1018 organizations were assessed. Of those,

27% were rated at Level 1, 39% at 2, 23% at 3, 6% at 4, and 5% at 5. (For ratings during the period

1992-96, 62% were at Level 1, 23% at 2, 13% at 3, 2% at 4, and 0.4% at 5.) The median size of

organizations was 100 software engineering/maintenance personnel; 32% of organizations were U.S.

federal contractors or agencies. For those rated at Level 1, the most problematic key process area

was Software Quality Assurance.
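
The shift between the two assessment periods can be summarized with a little arithmetic. The sketch below only reuses the percentages quoted above; the dictionary layout and the function name are illustrative assumptions. Note that the 1992-96 figures as quoted sum to 100.4%, presumably because of rounding.

```python
# Percentage of assessed organizations at each CMM level, as quoted above.
RATINGS = {
    "1992-96": {1: 62.0, 2: 23.0, 3: 13.0, 4: 2.0, 5: 0.4},
    "1997-2001": {1: 27.0, 2: 39.0, 3: 23.0, 4: 6.0, 5: 5.0},
}


def share_at_or_above(period, level):
    """Total percentage of organizations rated at `level` or higher in `period`."""
    return sum(pct for lvl, pct in RATINGS[period].items() if lvl >= level)


for period in RATINGS:
    print(period, "Level 3 or above:", round(share_at_or_above(period, 3), 1), "%")
```

With these figures, the share of assessed organizations at Level 3 or above rose from 15.4% to 34%, while the share at Level 1 fell from 62% to 27%.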

QUESTIONS

1. Quality and reliability are related concepts, but are fundamentally different in a number of ways. Discuss them.

2. Can a program be correct and still not be reliable? Explain.

3. Can a program be correct and still not exhibit good quality? Explain.

4. Explain in more detail the review technique adopted in Quality Assurance.

Chapter 3

Program Inspections, Walkthroughs and Reviews

3.1 LEARNING OBJECTIVES

You will learn about

• What static testing is and its importance in software testing

• Guidelines to be followed during static testing

• Process involved in inspections and walkthroughs

• Various checklists to be followed while handling errors in software testing

• Review techniques

3.2 INTRODUCTION

The majority of the programming community worked under the assumptions that programs are written

solely for machine execution and are not intended to be read by people, and that the only way to test a

program is by executing it on a machine. Weinberg made a convincing case for why programs should be read

by people, and indicated that this could be an effective error detection process.

Experience has shown that “human testing” techniques are quite effective in finding errors, so much

so that one or more of these should be employed in every programming project. The methods discussed in

this chapter are intended to be applied between the time that the program is coded and the time that

computer-based testing begins. These methods are important for two reasons:

• It is generally recognized that the earlier errors are found, the lower the costs of correcting

the errors and the higher the probability of correcting the errors correctly.

• Programmers seem to experience a psychological change when computer-based testing

commences.

3.3 INSPECTIONS AND WALKTHROUGHS

Code inspections and walkthroughs are the two primary “human testing” methods. Both involve the

reading or visual inspection of a program by a team of people, and both involve some preparatory

work by the participants. Normally this is done through a meeting, typically known as a “meeting of the

minds”, a conference held by the participants. The objective of the meeting is to find errors, but not to find

solutions to the errors (i.e. to test but not to debug).

What is the process involved in inspection and walkthroughs?

The process is performed by a group of people (three or four), only one of whom is the author of the

program. Hence the program is essentially being tested by people other than the author, which is in

consonance with the testing principle stating that an individual is usually ineffective in testing his or her

own program. Inspections and walkthroughs are far more effective than desk checking (the process

of a programmer reading his/her own program before testing it) because people other than the program’s

author are involved in the process. These processes also appear to result in lower debugging (error

correction) costs, since, when they find an error, the precise nature of the error is usually located. Also,

they expose a batch of errors, thus allowing the errors to be corrected later en masse. Computer-based

testing, on the other hand, normally exposes only a symptom of the error and errors are usually detected

and corrected one by one.

Some Observations:

• Experience with these methods has found them to be effective in finding from 30% to 70% of

the logic design and coding errors in typical programs. They are not, however, effective in

detecting “high-level” design errors, such as errors made in the requirements analysis process.

• Human processes find only the “easy” errors (those that would be trivial to find with

computer-based testing) and the difficult, obscure, or tricky errors can only be found by computer-based

testing.

• Inspections/walkthroughs and computer-based testing are complementary; error-detection

efficiency will suffer if one or the other is not present.

• These processes are invaluable for testing modifications to programs. Because modifying an