Kernel Based Nonlinear Weighted Least Squares Regression

Antoni Wibowo and Mohamad Ishak Desa

Abstract: In this paper, we consider a regression model with heteroscedastic errors, for which the predictions of ordinary least squares (OLS) based regression can be inappropriate. Weighted least squares (WLS) is widely used to handle the heteroscedastic model by transforming the original model into a new model that satisfies the homoscedasticity assumption. However, WLS yields a linear prediction and offers no guarantee against the negative effects of multicollinearity. We therefore propose a method that overcomes these difficulties by hybridizing WLS regression with the kernel method. We use WLS to handle the heteroscedastic errors and the kernel method to obtain a nonlinear model and to eliminate the negative effects of multicollinearity in this regression model. We then compare the performance of the proposed method with that of WLS regression; the proposed method gives better results.

Index Terms: Kernel principal component regression, kernel trick, multicollinearity, heteroscedasticity, nonlinear regression, weighted least squares.

1 INTRODUCTION

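Let us consider a heteroscedastic regression model as follows:

$$Y = X\beta + \varepsilon, \tag{1}$$

$$E(\varepsilon) = 0, \qquad \mathrm{Var}(\varepsilon) = \sigma_1^2 V,$$

where $Y = (Y_1\ Y_2\ \ldots\ Y_N)^T$, $X = (1_N\ \tilde{X})$, $\tilde{X} = (x_1\ x_2\ \ldots\ x_N)^T$, $x_i = (x_{i1}\ x_{i2}\ \ldots\ x_{ip})^T \in R^p$, $\beta = (\beta_0\ \beta_1\ \ldots\ \beta_p)^T$, $\varepsilon = (\varepsilon_1\ \varepsilon_2\ \ldots\ \varepsilon_N)^T$, and $V = \mathrm{diag}(1/w_1, 1/w_2, \ldots, 1/w_N)$, with $w_i$ and $\sigma_1^2$ positive real numbers and $i = 1, 2, \ldots, N$. The weight $w_i$ is estimated using the data $Y$ and $X$; see for example [2], [6] and [7]. The sizes of $x_i$, $Y$, $\tilde{X}$, $X$, $\beta$ and $\varepsilon$ are $p \times 1$, $N \times 1$, $N \times p$, $N \times (p+1)$, $(p+1) \times 1$ and $N \times 1$, respectively, where $1_N$ is an $N \times 1$ vector with all elements equal to one and $R$ is the set of real numbers. The vector $x_i^T$ denotes the transpose of the vector $x_i$.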

An implication of the assumption $\mathrm{Var}(\varepsilon) = \sigma_1^2 V$ is that the ordinary least squares (OLS) estimator $\hat{\beta} = (X^T X)^{-1} X^T Y$ can be inappropriate, and it is not the best linear unbiased estimator (BLUE) of $\beta$; see for example [1] and [8]. That is, among all the linear unbiased estimators, OLS does not provide the estimate with the smallest variance. Depending on the nature of the heteroscedasticity, significance tests can be too high or too low, which implies that the significance test has low power. These difficulties are usually handled by transforming model (1) into a new model that satisfies the homoscedasticity assumption; this is called the weighted least squares (WLS) method. This method also yields a linear prediction model; however, the WLS solution is preferred to the OLS solution [1].

Furthermore, we say that multicollinearity exists in the regressor matrix $X$ if $X^T X$ is a nearly singular matrix, i.e., if some eigenvalues of $X^T X$ are close to zero. If multicollinearity exists in $X$, then $\mathrm{Var}(\hat{\beta}_j)$ can be a large number and, under the assumption that $\varepsilon_i$ is normally distributed, the tests for inferences on $\beta_j$ ($j = 0, 1, \ldots, p$) have low power and the confidence intervals can be large [15]. Therefore, it will be difficult to decide whether a variable $x_j$ makes a significant contribution to the regression. These implications are known as the effects of multicollinearity.

We can use principal component regression (PCR) to eliminate the effects of multicollinearity. However, PCR yields linear prediction models, which limits its applications since most real problems are nonlinear. In recent years, [3], [4], [9], [10], [11] and [16] have proposed kernel principal component regression (KPCR) to overcome this linearity and to avoid the negative effects of multicollinearity. KPCR was constructed based on kernel principal component analysis (KPCA), which is performed by mapping an original input space into a higher-dimensional feature space, and on the homoscedasticity assumption; see [12] and [13] for the details of KPCA. Therefore, KPCR can be inappropriate for regression analysis when the homoscedasticity assumption is not satisfied.

- Antoni Wibowo is with the Faculty of Computer Science and Information Systems, Universiti Teknologi Malaysia, 81310 UTM Johor Bahru, Johor, Malaysia.

- Mohamad Ishak Desa is with the Faculty of Computer Science and Information Systems, Universiti Teknologi Malaysia, 81310 UTM Johor Bahru, Johor, Malaysia.

JOURNAL OF COMPUTING, VOLUME 3, ISSUE 11, NOVEMBER 2011, ISSN 2151-9617

HTTPS://SITES.GOOGLE.COM/SITE/JOURNALOFCOMPUTING

WWW.JOURNALOFCOMPUTING.ORG 56

Under this circumstance, we propose a nonlinear method which can eliminate the effects of multicollinearity and accommodate the heteroscedasticity assumption. In this method, the original data set is transformed into a higher-dimensional feature space, and a multiple linear regression with the heteroscedasticity assumption is created in this space. Then, we apply the WLS method to this linear regression to obtain a multiple linear regression with the homoscedasticity assumption. Furthermore, we use a trick to obtain an explicit homoscedastic multiple linear regression and perform a procedure similar to PCR in this feature space to obtain a nonlinear prediction and to eliminate the effects of multicollinearity. We refer to the proposed technique as the weighted least squares KPCR (WLS KPCR).

The rest of the manuscript is organized as follows. In Section 2, we present the theories and methods of WLS regression, the kernel trick, WLS KPCR and the WLS KPCR algorithm. In Section 3, we compare the performance of WLS regression and WLS KPCR. Then, we give conclusions in Section 4.

2 THEORIES AND METHODS

2.1 Weighted Least Squares Regression

The WLS method in linear regression is performed as follows. First, we define $L = \mathrm{diag}(1/\sqrt{w_1}, 1/\sqrt{w_2}, \ldots, 1/\sqrt{w_N})$, which implies that $L^T = L$, $L L^T = V$ and $L^{-1} = \mathrm{diag}(\sqrt{w_1}, \sqrt{w_2}, \ldots, \sqrt{w_N})$. It is evident that

$$L^{-1} Y = L^{-1} X \beta + L^{-1} \varepsilon. \tag{2}$$

Let $Y_1 = L^{-1} Y$, $X_1 = L^{-1} X$ and $\varepsilon_1 = L^{-1} \varepsilon$. It is easy to verify that $E(\varepsilon_1) = 0$ and $\mathrm{Var}(\varepsilon_1) = \sigma_1^2 I_N$, and model (1) becomes

$$Y_1 = X_1 \beta + \varepsilon_1, \tag{3}$$

$$E(\varepsilon_1) = 0, \qquad \mathrm{Var}(\varepsilon_1) = \sigma_1^2 I_N.$$

We can see that the error $\varepsilon_1$ in model (3) satisfies the homoscedasticity assumption. To obtain the estimator of $\beta$ in model (3) we solve

$$\min_{\beta}\ (Y_1 - X_1 \beta)^T (Y_1 - X_1 \beta) \tag{4}$$

with respect to $\beta$. Let $\hat{\beta}$ be the solution to problem (4), which satisfies the least squares normal equations

$$(X_1^T X_1) \hat{\beta} = X_1^T Y_1. \tag{5}$$

It is evident that if the column vectors of $X$ are linearly independent, then the column vectors of $X_1$ are also linearly independent. Hence, $X_1^T X_1 = X^T V^{-1} X$ is invertible and we obtain

$$\hat{\beta} = (X_1^T X_1)^{-1} X_1^T Y_1 = (X^T V^{-1} X)^{-1} X^T V^{-1} Y, \tag{6}$$

which is called the WLS estimator of $\beta$.
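The transformation route to the WLS estimator can be sketched numerically. The following is a minimal illustration in Python with NumPy, not the authors' implementation: the function name `wls_fit` and the toy data are hypothetical, and the weights are assumed to be known already. It scales each row of $X$ and $y$ by $\sqrt{w_i}$ (the diagonal of $L^{-1}$), solves the ordinary least squares problem on the transformed data, and compares the result with the closed form $(X^T V^{-1} X)^{-1} X^T V^{-1} y$.

```python
import numpy as np

def wls_fit(X_tilde, y, w):
    """WLS coefficients via the transformed model; w holds weights w_i > 0."""
    y = np.asarray(y, dtype=float)
    X_tilde = np.asarray(X_tilde, dtype=float).reshape(len(y), -1)
    sqrt_w = np.sqrt(np.asarray(w, dtype=float))      # diagonal of L^{-1}
    X = np.column_stack([np.ones(len(y)), X_tilde])   # X = (1_N, X~)
    X1 = sqrt_w[:, None] * X                          # X_1 = L^{-1} X
    y1 = sqrt_w * y                                   # y_1 = L^{-1} y
    # Ordinary least squares on the transformed (homoscedastic) model
    beta, *_ = np.linalg.lstsq(X1, y1, rcond=None)
    return beta

# Hypothetical toy data, for illustration only
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.3])
w = np.array([1.0, 0.5, 0.25, 0.2, 0.1])

beta_wls = wls_fit(x, y, w)

# Closed form (X^T V^{-1} X)^{-1} X^T V^{-1} y with V^{-1} = diag(w)
X = np.column_stack([np.ones_like(x), x])
V_inv = np.diag(w)
beta_closed = np.linalg.solve(X.T @ V_inv @ X, X.T @ V_inv @ y)
```

Both routes should agree up to floating-point error; the least squares solver is used here instead of an explicit inverse because it tends to be more stable when $X_1^T X_1$ is poorly conditioned.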

Let $y = (y_1\ y_2\ \ldots\ y_N)^T \in R^N$ be the observed data corresponding to $Y$ and $\hat{\beta}^{*} = (\hat{\beta}_0^{*}\ \hat{\beta}_1^{*}\ \ldots\ \hat{\beta}_p^{*})^T \in R^{p+1}$ be the value of $\hat{\beta}$ when $Y$ in Eq. (6) is replaced by $y$. The fitted value of $y$, say $\hat{y}$, is given by

$$\hat{y} = (\hat{y}_1\ \hat{y}_2\ \ldots\ \hat{y}_N)^T = X \hat{\beta}^{*}, \tag{7}$$

and the prediction of the WLS-based linear regression is given by

$$f_1(x) = \hat{\beta}_0^{*} + \sum_{j=1}^{p} \hat{\beta}_j^{*} x_j, \tag{8}$$

where $f_1$ is a function from $R^p$ into $R$. We should notice that the elements of the matrix $X$ in model (1) can be chosen such that multicollinearity is not present in $X$. Unfortunately, the eigenvalues of $X^T X$ are not equal to the eigenvalues of $X_1^T X_1$, which implies that there is no guarantee that multicollinearity does not exist in $X_1$. The presence of multicollinearity in $X_1$ can seriously deteriorate the prediction of WLS regression.
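To make the last remark concrete, the sketch below compares the smallest-to-largest eigenvalue ratio of $X^T X$ with that of $X_1^T X_1$, where $X_1$ is $X$ with each row scaled by $\sqrt{w_i}$. It is an illustration only: the helper name `eigenvalue_ratio`, the regressor values and the weights are all hypothetical.

```python
import numpy as np

def eigenvalue_ratio(M):
    """Smallest-to-largest eigenvalue ratio of the Gram matrix M^T M."""
    eig = np.linalg.eigvalsh(M.T @ M)   # eigenvalues in ascending order
    return eig[0] / eig[-1]

# Hypothetical regressor and weights, for illustration only
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
w = 1.0 / x**2

X = np.column_stack([np.ones_like(x), x])   # X = (1_N, x)
X1 = np.sqrt(w)[:, None] * X                # X_1 = L^{-1} X

ratio_X = eigenvalue_ratio(X)
ratio_X1 = eigenvalue_ratio(X1)
print(f"ratio for X^T X:     {ratio_X:.4e}")
print(f"ratio for X_1^T X_1: {ratio_X1:.4e}")
```

The two ratios generally differ, so a well-conditioned $X$ does not by itself guarantee a well-conditioned $X_1$; the conditioning has to be checked after the weights are applied.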

2.2 Heteroscedastic Regression Model in Feature Space

The procedure for constructing WLS KPCR is similar to the WLS regression procedure explained in Subsection 2.1. First, we transform our data set into a Euclidean space of higher dimension by a function. The important point here is that the function is not explicitly defined. Then, we construct a heteroscedastic linear regression model in this space and use the WLS procedure to obtain a homoscedastic linear regression model. We then perform a trick to obtain the explicit homoscedastic regression and use a procedure similar to PCR to eliminate the effects of multicollinearity in this regression model.

Assume we have a function $\psi: R^p \to F$, where $F$ is called the feature space, which is a Euclidean space of higher dimension than $p$, say $p_F$. Then, we define

$$\Psi = (\psi(x_1)\ \ldots\ \psi(x_N))^T, \qquad C = \frac{1}{N} \Psi^T \Psi, \qquad K = \Psi \Psi^T,$$

where the sizes of $\Psi$, $C$ and $K$ are $N \times p_F$, $p_F \times p_F$ and $N \times N$, respectively. We assume that $\sum_{i=1}^{N} \psi(x_i) = 0$. If $F$ is infinite-dimensional, we consider the linear operator $\psi(x_i)\psi(x_i)^T$ instead of the matrix $C$ [13].

The heteroscedastic regression model in the feature space is given by

$$Y_0 = \Psi \gamma + \varepsilon_2, \tag{9}$$

$$E(\varepsilon_2) = 0, \qquad \mathrm{Var}(\varepsilon_2) = \sigma_2^2 \tilde{V},$$

where $\gamma = (\gamma_1\ \gamma_2\ \ldots\ \gamma_{p_F})^T$ is a vector of regression coefficients in the feature space, $\varepsilon_2$ is a vector of random errors, $Y_0 = (I_N - \frac{1}{N} 1_N 1_N^T) Y$ and $\tilde{V} = \mathrm{diag}(1/\tilde{w}_1, 1/\tilde{w}_2, \ldots, 1/\tilde{w}_N)$, where $\tilde{w}_i$ and $\sigma_2^2$ are positive real numbers for $i = 1, 2, \ldots, N$. The weight $\tilde{w}_i$ is estimated using the data $y_0 = (I_N - \frac{1}{N} 1_N 1_N^T) y$ and $X$. We notice that we cannot use the generalized inverse matrix to obtain the estimator of $\gamma$ since $\Psi$ is not known explicitly.

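Then, we define $\tilde{L} = \mathrm{diag}(1/\sqrt{\tilde{w}_1}, 1/\sqrt{\tilde{w}_2}, \ldots, 1/\sqrt{\tilde{w}_N})$, which implies $\tilde{L}^T = \tilde{L}$, $\tilde{L}\tilde{L}^T = \tilde{V}$ and $\tilde{L}^{-1} = \mathrm{diag}(\sqrt{\tilde{w}_1}, \sqrt{\tilde{w}_2}, \ldots, \sqrt{\tilde{w}_N})$, and obtain

$$Z_0 = \Phi \gamma + \tilde{\varepsilon}_2, \tag{10}$$

$$E(\tilde{\varepsilon}_2) = 0, \qquad \mathrm{Var}(\tilde{\varepsilon}_2) = \sigma_2^2 I_N,$$

where $Z_0 = \tilde{L}^{-1} Y_0$, $\Phi = \tilde{L}^{-1} \Psi$ and $\tilde{\varepsilon}_2 = \tilde{L}^{-1} \varepsilon_2$. Furthermore, we define two matrices $\tilde{K} = \Phi \Phi^T = \tilde{L}^{-1} K \tilde{L}^{-1}$ and $\tilde{C} = \frac{1}{N} \Phi^T \Phi$. The eigenvalues and eigenvectors of the matrices $\tilde{C}$ and $\tilde{K}$ are related by the following theorem.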

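Theorem 1. [16] Suppose $\lambda \neq 0$ and $a \in F \setminus \{0\}$, and let $\tilde{\psi}(x_i)^T$ denote the $i$th row of $\Phi$. The following statements are equivalent:

1. $\lambda$ and $a$ satisfy $\lambda a = \tilde{C} a$.

2. $\lambda$ and $a$ satisfy $\lambda N \tilde{K} b = \tilde{K}^2 b$ and $a = \sum_{i=1}^{N} b_i \tilde{\psi}(x_i)$, for some $b = (b_1\ b_2\ \ldots\ b_N)^T \in R^N \setminus \{0\}$.

3. $\lambda$ and $a$ satisfy $\lambda N \tilde{b} = \tilde{K} \tilde{b}$ and $a = \sum_{i=1}^{N} \tilde{b}_i \tilde{\psi}(x_i)$, for some $\tilde{b} = (\tilde{b}_1\ \tilde{b}_2\ \ldots\ \tilde{b}_N)^T \in R^N \setminus \{0\}$.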

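Let $\tilde{p}_F$ be the rank of $\Phi$, where $\tilde{p}_F \le \min(N, p_F)$. Since the rank of $\Phi$ is equal to the rank of $\tilde{K}$ and the rank of $\Phi^T \Phi$, the rank of $\tilde{K}$ and the rank of $\Phi^T \Phi$ are equal to $\tilde{p}_F$. We should notice that $\tilde{K}$ is symmetric and positive semidefinite, which implies that the eigenvalues of $\tilde{K}$ are nonnegative real numbers. Let

$$\tilde{\lambda}_1 \ge \tilde{\lambda}_2 \ge \cdots \ge \tilde{\lambda}_r \ge \tilde{\lambda}_{r+1} \ge \cdots \ge \tilde{\lambda}_{\tilde{p}_F} > \tilde{\lambda}_{\tilde{p}_F + 1} = \cdots = \tilde{\lambda}_N = 0$$

be the eigenvalues of $\tilde{K}$, $B = (b_1\ b_2\ \ldots\ b_N)$ be the matrix of the corresponding normalized eigenvectors $b_i$ ($i = 1, 2, \ldots, N$) of $\tilde{K}$, $\alpha_l = b_l / \sqrt{\tilde{\lambda}_l}$ and $a_l = \Phi^T \alpha_l$ for $l = 1, 2, \ldots, \tilde{p}_F$.

Then, according to Theorem 1, we obtain the following eigenvalue and eigenvector relations:

$$\Phi^T \Phi\, a_l = \tilde{\lambda}_l a_l \quad \text{for } l = 1, 2, \ldots, \tilde{p}_F,$$

$$a_i^T a_j = \begin{cases} 1 & \text{if } i = j, \\ 0 & \text{otherwise,} \end{cases} \quad \text{for } i, j = 1, 2, \ldots, \tilde{p}_F.$$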
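Both relations can be checked directly from the definitions. The short derivation below is a sketch that assumes the notation $\Phi = \tilde{L}^{-1}\Psi$ for the weighted feature matrix, $\tilde{K} = \Phi\Phi^T$, $\tilde{K} b_l = \tilde{\lambda}_l b_l$ with orthonormal $b_l$, $\alpha_l = b_l/\sqrt{\tilde{\lambda}_l}$ and $a_l = \Phi^T \alpha_l$; it uses no other facts.

```latex
\begin{aligned}
\Phi^{T}\Phi\, a_l
  &= \Phi^{T}\Phi\,\Phi^{T}\alpha_l
   = \Phi^{T}\tilde{K}\alpha_l
   = \tilde{\lambda}_l^{-1/2}\,\Phi^{T}\tilde{K} b_l
   = \tilde{\lambda}_l\,\Phi^{T}\alpha_l
   = \tilde{\lambda}_l\, a_l, \\[4pt]
a_i^{T} a_j
  &= \alpha_i^{T}\Phi\,\Phi^{T}\alpha_j
   = \frac{b_i^{T}\tilde{K} b_j}{\sqrt{\tilde{\lambda}_i \tilde{\lambda}_j}}
   = \frac{\tilde{\lambda}_j\, b_i^{T} b_j}{\sqrt{\tilde{\lambda}_i \tilde{\lambda}_j}}
   = \delta_{ij}.
\end{aligned}
```

Here $\delta_{ij}$ is the Kronecker delta. The scaling by $1/\sqrt{\tilde{\lambda}_l}$ is what turns the unit-norm eigenvectors of $\tilde{K}$ into unit-norm eigenvectors of $\Phi^T\Phi$.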

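Since the rank of $\Phi^T \Phi$ is equal to $\tilde{p}_F$, the remaining $(p_F - \tilde{p}_F)$ eigenvalues of $\Phi^T \Phi$ are zero. Let $\tilde{\lambda}_k$ ($k = \tilde{p}_F + 1, \tilde{p}_F + 2, \ldots, p_F$) be the zero eigenvalues of $\Phi^T \Phi$ and $a_k$ be the normalized eigenvectors of $\Phi^T \Phi$ corresponding to $\tilde{\lambda}_k$. Then, we define $A = (a_1\ a_2\ \ldots\ a_{p_F})$, which is an orthogonal matrix. It is not difficult to verify that

$$A^T \Phi^T \Phi A = D,$$

where

$$D = \begin{pmatrix} D_{(\tilde{p}_F)} & O \\ O & O \end{pmatrix}, \qquad D_{(\tilde{p}_F)} = \begin{pmatrix} \tilde{\lambda}_1 & & 0 \\ & \ddots & \\ 0 & & \tilde{\lambda}_{\tilde{p}_F} \end{pmatrix},$$

and $O$ is a zero matrix.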

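Since $A A^T = I_{p_F}$, we can rewrite the model (10) as

$$Z_0 = U \vartheta + \tilde{\varepsilon}_2, \tag{11}$$

$$E(\tilde{\varepsilon}_2) = 0, \qquad \mathrm{Var}(\tilde{\varepsilon}_2) = \sigma_2^2 I_N,$$

where $U = \Phi A$ and $\vartheta = A^T \gamma$. Let

$$U = (U_{(\tilde{p}_F)}\ \ U_{(p_F - \tilde{p}_F)}) \quad \text{and} \quad \vartheta = (\vartheta_{(\tilde{p}_F)}^T\ \ \vartheta_{(p_F - \tilde{p}_F)}^T)^T,$$

where the sizes of $U_{(\tilde{p}_F)}$, $U_{(p_F - \tilde{p}_F)}$, $\vartheta_{(\tilde{p}_F)}$ and $\vartheta_{(p_F - \tilde{p}_F)}$ are $N \times \tilde{p}_F$, $N \times (p_F - \tilde{p}_F)$, $\tilde{p}_F \times 1$ and $(p_F - \tilde{p}_F) \times 1$, respectively. The model (11) can be written as

$$Z_0 = U_{(\tilde{p}_F)} \vartheta_{(\tilde{p}_F)} + U_{(p_F - \tilde{p}_F)} \vartheta_{(p_F - \tilde{p}_F)} + \tilde{\varepsilon}_2, \tag{12}$$

$$E(\tilde{\varepsilon}_2) = 0, \qquad \mathrm{Var}(\tilde{\varepsilon}_2) = \sigma_2^2 I_N.$$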

Since $D = A^T \Phi^T \Phi A = U^T U$, we obtain

$$U_{(\tilde{p}_F)}^T U_{(\tilde{p}_F)} = D_{(\tilde{p}_F)}, \qquad U_{(p_F - \tilde{p}_F)}^T U_{(p_F - \tilde{p}_F)} = O, \qquad U_{(\tilde{p}_F)}^T U_{(p_F - \tilde{p}_F)} = O.$$

Since $(U_{(p_F - \tilde{p}_F)} \vartheta_{(p_F - \tilde{p}_F)})^T (U_{(p_F - \tilde{p}_F)} \vartheta_{(p_F - \tilde{p}_F)})$ is equal to zero, we see that $U_{(p_F - \tilde{p}_F)} \vartheta_{(p_F - \tilde{p}_F)}$ is equal to $0$. Consequently, the model (12) simplifies to

$$Z_0 = U_{(\tilde{p}_F)} \vartheta_{(\tilde{p}_F)} + \tilde{\varepsilon}_2, \tag{13}$$

$$E(\tilde{\varepsilon}_2) = 0, \qquad \mathrm{Var}(\tilde{\varepsilon}_2) = \sigma_2^2 I_N.$$

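Let us assume that $\tilde{\lambda}_{r+1}, \tilde{\lambda}_{r+2}, \ldots, \tilde{\lambda}_{\tilde{p}_F}$ are close to zero and define

$$U_{(\tilde{p}_F)} = (U_{(r)}\ \ U_{(\tilde{p}_F - r)}), \qquad \vartheta_{(\tilde{p}_F)} = (\vartheta_{(r)}^T\ \ \vartheta_{(\tilde{p}_F - r)}^T)^T$$

and

$$D_{(\tilde{p}_F)} = \begin{pmatrix} D_{(r)} & O \\ O & D_{(\tilde{p}_F - r)} \end{pmatrix},$$

with

$$D_{(r)} = \begin{pmatrix} \tilde{\lambda}_1 & & 0 \\ & \ddots & \\ 0 & & \tilde{\lambda}_r \end{pmatrix}, \qquad D_{(\tilde{p}_F - r)} = \begin{pmatrix} \tilde{\lambda}_{r+1} & & 0 \\ & \ddots & \\ 0 & & \tilde{\lambda}_{\tilde{p}_F} \end{pmatrix},$$

where the sizes of $U_{(r)}$, $U_{(\tilde{p}_F - r)}$, $\vartheta_{(r)}$ and $\vartheta_{(\tilde{p}_F - r)}$ are $N \times r$, $N \times (\tilde{p}_F - r)$, $r \times 1$ and $(\tilde{p}_F - r) \times 1$, respectively. The model (13) can now be written as

$$Z_0 = U_{(r)} \vartheta_{(r)} + U_{(\tilde{p}_F - r)} \vartheta_{(\tilde{p}_F - r)} + \tilde{\varepsilon}_2, \tag{14}$$

$$E(\tilde{\varepsilon}_2) = 0, \qquad \mathrm{Var}(\tilde{\varepsilon}_2) = \sigma_2^2 I_N.$$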

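It is evident that the estimator of $\vartheta_{(\tilde{p}_F - r)}$, say $\hat{\vartheta}_{(\tilde{p}_F - r)} = (\hat{\vartheta}_{r+1}\ \hat{\vartheta}_{r+2}\ \ldots\ \hat{\vartheta}_{\tilde{p}_F})^T$, is given by

$$\hat{\vartheta}_{(\tilde{p}_F - r)} = (U_{(\tilde{p}_F - r)}^T U_{(\tilde{p}_F - r)})^{-1} U_{(\tilde{p}_F - r)}^T Z_0 = D_{(\tilde{p}_F - r)}^{-1} U_{(\tilde{p}_F - r)}^T Z_0, \tag{15}$$

and the variance of $\hat{\vartheta}_j$ ($j = r+1, \ldots, \tilde{p}_F$) is the corresponding diagonal element of $\sigma_2^2 D_{(\tilde{p}_F - r)}^{-1}$, that is,

$$\mathrm{Var}(\hat{\vartheta}_j) = \frac{\sigma_2^2}{\tilde{\lambda}_j}. \tag{16}$$

Since $\tilde{\lambda}_{r+1}, \tilde{\lambda}_{r+2}, \ldots, \tilde{\lambda}_{\tilde{p}_F}$ are close to zero, the diagonal elements of $D_{(\tilde{p}_F - r)}^{-1}$, and hence the variances of $\hat{\vartheta}_j$ ($j = r+1, \ldots, \tilde{p}_F$), will be very large numbers. Thus, we encounter the ill effects of multicollinearity in the model (14). To avoid the effects of multicollinearity we drop the term $U_{(\tilde{p}_F - r)} \vartheta_{(\tilde{p}_F - r)}$, as in [15], and obtain

$$Z_0 = U_{(r)} \vartheta_{(r)} + \tilde{\varepsilon}, \tag{17}$$

where $\tilde{\varepsilon}$ is a random vector influenced by dropping $U_{(\tilde{p}_F - r)} \vartheta_{(\tilde{p}_F - r)}$ from the model (14). Dropping this term is the usual way to handle the effects of multicollinearity in PCR. We can use the ratio $\tilde{\lambda}_l / \tilde{\lambda}_1$ ($l = 1, 2, \ldots, \tilde{p}_F$) to detect the presence of multicollinearity in $U_{(\tilde{p}_F)}$. If $\tilde{\lambda}_l / \tilde{\lambda}_1$ is smaller than, say, $1/1000$, then we consider that multicollinearity exists in $U_{(\tilde{p}_F)}$ [6].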

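Note that $U_{(r)}^T U_{(r)} = D_{(r)}$, which is invertible. Hence, the estimator of $\vartheta_{(r)}$, say $\hat{\vartheta}_{(r)}$, is given by

$$\hat{\vartheta}_{(r)} = (U_{(r)}^T U_{(r)})^{-1} U_{(r)}^T Z_0. \tag{18}$$

Let $z_0 = \tilde{L}^{-1} (I_N - \frac{1}{N} 1_N 1_N^T) y$ be the observed data corresponding to $Z_0$ and $\hat{\vartheta}_{(r)}^{*} \in R^r$ be the value of $\hat{\vartheta}_{(r)}$ when $Z_0$ is replaced by $z_0$ in Eq. (18). Hence

$$\hat{\vartheta}_{(r)}^{*} = (U_{(r)}^T U_{(r)})^{-1} U_{(r)}^T z_0 = D_{(r)}^{-1} U_{(r)}^T z_0. \tag{19}$$

Since

$$U = (U_{(r)}\ \ U_{(\tilde{p}_F - r)}\ \ U_{(p_F - \tilde{p}_F)}) = (\Phi A_{(r)}\ \ \Phi A_{(\tilde{p}_F - r)}\ \ \Phi A_{(p_F - \tilde{p}_F)}),$$

we obtain $U_{(r)} = \Phi A_{(r)}$. We also see that $A_{(r)} = \Phi^T (\alpha_1\ \alpha_2\ \ldots\ \alpha_r)$. Hence,

$$U_{(r)} = \Phi \Phi^T \Gamma_{(r)} = \tilde{K} \Gamma_{(r)}, \tag{20}$$

where $\Gamma_{(r)} = (\alpha_1\ \alpha_2\ \ldots\ \alpha_r)$. Up to this point, however, we do not yet know $U_{(r)}$ explicitly.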
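The identity behind Eq. (20) can be checked numerically when an explicit weighted feature matrix is available. The sketch below is an illustration under stated assumptions: `Phi` is a small random matrix standing in for the weighted feature matrix $\tilde{L}^{-1}\Psi$ (which is normally not available), and all variable names are hypothetical. It builds $\tilde{K} = \Phi\Phi^T$, forms the columns $\alpha_l = b_l/\sqrt{\tilde{\lambda}_l}$, and compares $\tilde{K}\Gamma_{(r)}$ with $\Phi A_{(r)}$.

```python
import numpy as np

rng = np.random.default_rng(0)
N, p_F, r = 8, 5, 3

# Stand-in for the weighted feature matrix (normally unknown)
Phi = rng.normal(size=(N, p_F))
K_tilde = Phi @ Phi.T                       # K~ = Phi Phi^T

lam, B = np.linalg.eigh(K_tilde)            # ascending eigenvalues
lam, B = lam[::-1], B[:, ::-1]              # reorder to descending

Gamma_r = B[:, :r] / np.sqrt(lam[:r])       # columns alpha_l = b_l / sqrt(lambda_l)

U_kernel = K_tilde @ Gamma_r                # Eq. (20): needs K~ only
A_r = Phi.T @ Gamma_r                       # a_l = Phi^T alpha_l
U_explicit = Phi @ A_r                      # U_(r) = Phi A_(r)

D_r = np.diag(lam[:r])
```

In this sketch `U_kernel` and `U_explicit` coincide, `A_r` has orthonormal columns, and `U_kernel.T @ U_kernel` reproduces `D_r`, which is why the regression can be carried out with the $N \times N$ matrix $\tilde{K}$ alone.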

2.3 Kernel Trick

We use a trick, called the kernel trick, to obtain the regression estimators of Eq. (19). Let us consider the following theorem:

Theorem 2. ([5], [12]) For any symmetric, continuous and positive semidefinite function $\kappa: R^p \times R^p \to R$, there exists a function $\psi: R^p \to F$ such that

$$\kappa(x, y) = \psi(x)^T \psi(y).$$

By Theorem 2, if we choose a continuous, symmetric and positive semidefinite function $\kappa: R^p \times R^p \to R$, then there exists $\psi: R^p \to F$ such that $\kappa(x_i, x_j) = \psi(x_i)^T \psi(x_j)$. The function $\kappa$ is called the kernel function. Instead of choosing $\psi$ explicitly, we choose a kernel $\kappa$ and employ the corresponding function as $\psi$.

Let $K_{ij} = \kappa(x_i, x_j)$. Hence, we obtain

$$K = \begin{pmatrix} K_{11} & \cdots & K_{1N} \\ \vdots & \ddots & \vdots \\ K_{N1} & \cdots & K_{NN} \end{pmatrix}.$$

It is evident that $K$ is now known explicitly. This implies that $\tilde{K} = \tilde{L}^{-1} K \tilde{L}^{-1}$, $\Gamma_{(r)}$ and $U_{(r)}$ are also known explicitly.
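As an illustration of how the kernel makes these matrices explicit, the sketch below builds the Gram matrix for a Gaussian kernel of the form $\exp(-\|x - y\|^2/\varrho)$, the kernel family used later in the case study, and then applies the weights. The function names `gaussian_gram` and `weighted_gram`, the parameter name `rho`, the sample points and the weights are hypothetical choices for this example.

```python
import numpy as np

def gaussian_gram(X, rho):
    """Gram matrix K_ij = exp(-||x_i - x_j||^2 / rho) for the rows of X."""
    X = np.asarray(X, dtype=float)
    X = X.reshape(len(X), -1)
    sq = np.sum(X**2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    d2 = np.maximum(d2, 0.0)          # guard against tiny negative round-off
    return np.exp(-d2 / rho)

def weighted_gram(K, w):
    """K~ = L~^{-1} K L~^{-1}, where L~^{-1} = diag(sqrt(w_i))."""
    s = np.sqrt(np.asarray(w, dtype=float))
    return s[:, None] * K * s[None, :]

# Hypothetical inputs and weights, for illustration only
X = np.array([[0.0], [1.0], [3.0]])
K = gaussian_gram(X, rho=0.5)
K_tilde = weighted_gram(K, w=[1.0, 4.0, 0.25])
```

Scaling rows and columns elementwise avoids forming the diagonal matrix explicitly; the result is the same as multiplying by $\tilde{L}^{-1}$ on both sides.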

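2.4 WLS KPCR

Now, let us consider model (17) again. Since we now know $\tilde{K} = \tilde{L}^{-1} K \tilde{L}^{-1}$, $\Gamma_{(r)}$ and $U_{(r)}$ explicitly, we can obtain the regression coefficients of this model. Then, the fitted value of $z_0$ in the transformed scale, say $\hat{z}_0$, is given by

$$\hat{z}_0 = (\hat{z}_{01}\ \hat{z}_{02}\ \ldots\ \hat{z}_{0N})^T = \tilde{K} \Gamma_{(r)} \hat{\vartheta}_{(r)}^{*}, \tag{21}$$

and the residual between $z_0$ and $\hat{z}_0$ is given by

$$e_2 = (e_{21}\ e_{22}\ \ldots\ e_{2N})^T = z_0 - \hat{z}_0. \tag{22}$$

The fitted value of $y$ in the original scale, say $\hat{y}$, is given by

$$\hat{y} = (\hat{y}_1\ \hat{y}_2\ \ldots\ \hat{y}_N)^T = \bar{y} 1_N + K \tilde{L}^{-1} \Gamma_{(r)} \hat{\vartheta}_{(r)}^{*}, \tag{23}$$

and the WLS KPCR prediction is given by

$$g(x) = \bar{y} + \sum_{i=1}^{N} c_i\, \kappa(x, x_i), \tag{24}$$

where $\bar{y} = \frac{1}{N} 1_N^T y$, $g$ is a function from $R^p$ into $R$ and $(c_1\ c_2\ \ldots\ c_N)^T = \tilde{L}^{-1} \Gamma_{(r)} \hat{\vartheta}_{(r)}^{*}$. The number $r$ is called the retained number of nonlinear PCs for the WLS KPCR.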

2.5 WLS KPCR Algorithm

We summarize the procedure in Subsections 2.2-2.4 to obtain the WLS KPCR prediction as follows:

1. Given $(y_i, x_{i1}, x_{i2}, \ldots, x_{ip})$, $i = 1, 2, \ldots, N$.

2. Calculate $\bar{y} = \frac{1}{N} 1_N^T y$ and $y_o = (I_N - \frac{1}{N} 1_N 1_N^T) y$.

3. Estimate $\tilde{V}$ and find $\tilde{L}$.

4. Calculate $z_o = \tilde{L}^{-1} y_o$.

5. Choose a kernel $\kappa: R^p \times R^p \to R$.

6. Construct $K_{ij} = \kappa(x_i, x_j)$, $K = (K_{ij})$ and $\tilde{K} = \tilde{L}^{-1} K \tilde{L}^{-1}$.

7. Diagonalize $\tilde{K}$. Let $\tilde{\lambda}_1 \ge \tilde{\lambda}_2 \ge \cdots \ge \tilde{\lambda}_r \ge \cdots \ge \tilde{\lambda}_{\tilde{p}_F} > \tilde{\lambda}_{\tilde{p}_F + 1} = \cdots = \tilde{\lambda}_N = 0$ be the eigenvalues of $\tilde{K}$ and $b_1, b_2, \ldots, b_N$ be the corresponding normalized eigenvectors of $\tilde{K}$.

8. Detect multicollinearity in $\tilde{K}$. Let $r$ be the retained number of nonlinear PCs such that $r = \max\{ s : \tilde{\lambda}_s / \tilde{\lambda}_1 \ge 1/1000 \}$.

9. Construct $\alpha_l = b_l / \sqrt{\tilde{\lambda}_l}$ for $l = 1, 2, \ldots, r$ and $\Gamma_{(r)} = (\alpha_1\ \alpha_2\ \ldots\ \alpha_r)$.

10. Calculate $U_{(r)} = \tilde{K} \Gamma_{(r)}$, $\hat{\vartheta}_{(r)}^{*} = D_{(r)}^{-1} U_{(r)}^T z_o$ and $c = (c_1\ c_2\ \ldots\ c_N)^T = \tilde{L}^{-1} \Gamma_{(r)} \hat{\vartheta}_{(r)}^{*}$.

11. Given a vector $x \in R^p$, the WLS KPCR prediction is given by $g(x) = \bar{y} + \sum_{i=1}^{N} c_i\, \kappa(x, x_i)$.

Note that the above algorithm works under the assumption $\sum_{i=1}^{N} \psi(x_i) = 0$. When $\sum_{i=1}^{N} \psi(x_i) \neq 0$, we replace $K$ by $K_N = K - EK - KE + EKE$ in Step 6, where $E$ is the $N \times N$ matrix with all elements equal to $1/N$, and work with $K_N$ in the subsequent steps.
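The eleven steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation. It assumes that the weights are already estimated and passed in as `w`, that the kernel is the Gaussian kernel $\exp(-\|x - y\|^2/\varrho)$ with parameter `rho`, and that the zero-mean feature assumption holds, so the centering replacement $K_N$ is not applied. The function names, the dictionary layout and the toy data are hypothetical.

```python
import numpy as np

def gaussian_kernel(A, B, rho):
    """kappa(a, b) = exp(-||a - b||^2 / rho) for all row pairs of A and B."""
    d2 = np.sum(A**2, 1)[:, None] + np.sum(B**2, 1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(d2, 0.0) / rho)

def wls_kpcr_fit(X, y, w, rho, tol=1e-3):
    """Steps 1-10 of the algorithm; w holds already estimated weights > 0."""
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float).reshape(len(y), -1)
    s = np.sqrt(np.asarray(w, dtype=float))   # diagonal of L~^{-1}
    y_bar = y.mean()                           # step 2
    z0 = s * (y - y_bar)                       # step 4: z_o = L~^{-1} y_o
    K = gaussian_kernel(X, X, rho)             # steps 5-6
    K_tilde = s[:, None] * K * s[None, :]      # K~ = L~^{-1} K L~^{-1}
    lam, B = np.linalg.eigh(K_tilde)           # step 7 (ascending order)
    lam, B = lam[::-1], B[:, ::-1]             # reorder to descending
    r = int(np.sum(lam / lam[0] >= tol))       # step 8: ratio rule
    Gamma = B[:, :r] / np.sqrt(lam[:r])        # step 9
    U = K_tilde @ Gamma                        # step 10: U_(r)
    theta = (U.T @ z0) / lam[:r]               # D_(r)^{-1} U_(r)^T z_o
    c = s * (Gamma @ theta)                    # c = L~^{-1} Gamma theta
    return {"X": X, "c": c, "y_bar": y_bar, "rho": rho, "r": r}

def wls_kpcr_predict(model, X_new):
    """Step 11: g(x) = y_bar + sum_i c_i kappa(x, x_i)."""
    X_new = np.asarray(X_new, dtype=float).reshape(-1, model["X"].shape[1])
    K_new = gaussian_kernel(X_new, model["X"], model["rho"])
    return model["y_bar"] + K_new @ model["c"]

# Hypothetical toy data with unit weights, for illustration only
x_toy = np.linspace(0.0, 1.0, 20)
y_toy = np.sin(2.0 * np.pi * x_toy)
model = wls_kpcr_fit(x_toy, y_toy, w=np.ones(20), rho=0.1)
y_fit = wls_kpcr_predict(model, x_toy)
```

With unit weights the in-sample fit is the orthogonal projection of the centered response onto the retained eigenvectors, so its residual norm cannot exceed that of the constant predictor $\bar{y}$. For real data the weights would come from a variance model, and the threshold `tol` mirrors the $1/1000$ rule in Step 8.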

3 CASE STUDY

In our case study, we use the Gaussian kernel $\kappa(x, y) = \exp(-\|x - y\|^2 / \varrho)$, where $\varrho$ is the parameter of the kernel. As mentioned before, there are several methods to estimate the weight $w_i$; see for example [2], [6], [7] and [14]. We use the method based on replication to estimate the weight $w_i$ as follows. First, we arrange the data $x_i$ in order of increasing $y_i$ and make some groups, say $M$ ($< N$) groups, of the ordered data. Let the $k$th group, $k = 1, 2, \ldots, M$, contain $\{(y'_{ik}, x'_{ik})\}$ for some $i = 1, 2, \ldots, N$, where $y'_{ik} \in \{y_1, y_2, \ldots, y_N\}$ and $x'_{ik} \in \{x_1, x_2, \ldots, x_N\}$. Let $\bar{x}'_k$ and $s_k^2$ be the average of $\{x'_{ik}\}$ and the variance of $\{y'_{ik}\}$, respectively. Then we make the prediction from the set $\{(\bar{x}'_k, s_k^2)\}$, say $f_2(x) = c_o + c_1 x$, where $f_2$ is a function from $R$ into $R$ and $c_o, c_1 \in R$. Furthermore, we calculate the estimated variance of $y_i$ by using the predictor $f_2(x_i)$. The weight $w_i$ is chosen as the inverse of the estimated variance of $y_i$. A similar procedure is performed to obtain the weights of WLS KPCR; we only replace $y_i$ by $y_{oi}$, where $y_{oi}$ is the $i$th element of $y_o$.
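The replication-based weighting can be sketched as follows. This is an illustration under assumptions, not the authors' code: the function name `replication_weights` and the toy data are hypothetical, the groups are taken as nearly equal-sized consecutive blocks of the ordered data, the within-group variance uses the sample variance (divisor $n - 1$), and the straight-line fit uses ordinary least squares. None of these details is fixed by the text.

```python
import numpy as np

def replication_weights(x, y, M):
    """Weights 1 / f_2(x_i), with f_2 fitted to (group mean of x, group variance of y)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    order = np.argsort(y)                     # arrange data by increasing y
    groups = np.array_split(order, M)         # M consecutive groups (assumed split)
    x_bar = np.array([x[g].mean() for g in groups])
    s2 = np.array([y[g].var(ddof=1) for g in groups])
    c1, c0 = np.polyfit(x_bar, s2, 1)         # f_2(x) = c0 + c1 * x
    var_hat = c0 + c1 * x                     # estimated variance of each y_i
    if np.any(var_hat <= 0.0):
        raise ValueError("fitted variance is not positive; try another M or model")
    return 1.0 / var_hat

# Hypothetical data whose spread grows with x, for illustration only
x_demo = np.arange(1.0, 13.0)
y_demo = 10.0 * x_demo + x_demo * np.array([1.0, -1.0] * 6)
w_demo = replication_weights(x_demo, y_demo, M=3)
```

A straight-line variance model can produce non-positive values outside the range of the group means, which is why the sketch raises an error instead of returning unusable weights.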

We use the average monthly income from food sales ($y$) and the corresponding annual advertising expenses ($x$) for 30 restaurants [6]. The data are given in Table 1 and are called the training data. We add noise to the response values of the training data, generated as normally distributed random noise with zero mean and standard deviation $\sigma_1 \in [0, 1]$. Afterward, we use some of the data to test the prediction by WLS-based linear regression and WLS KPCR, and call them the testing data. We also add noise to the response values of the testing data, generated as normally distributed random noise with zero mean and standard deviation $\sigma_2$, where $\sigma_2 \in [0, 1]$. For the sake of comparison, we set $\sigma_1$ and $\sigma_2$ equal to 0.25 and 0.5, respectively. Then, we generated 1000 sets of both training and testing data to test the performance of the WLS-based regression and WLS KPCR.
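Generating the noisy replicate sets can be sketched as below. The function name `noisy_replicates`, the seed, and the use of only the first three responses of Table 1 are choices made for this example; the text does not state in which units the noise was added, so the scale here is an assumption.

```python
import numpy as np

def noisy_replicates(y, sigma, n_sets, rng):
    """Return an (n_sets, N) array: y plus independent N(0, sigma^2) noise per set."""
    y = np.asarray(y, dtype=float)
    noise = rng.normal(loc=0.0, scale=sigma, size=(n_sets, y.size))
    return y[None, :] + noise

rng = np.random.default_rng(2011)               # arbitrary seed for reproducibility
y_train = np.array([81.464, 72.661, 72.344])    # first three responses in Table 1
train_sets = noisy_replicates(y_train, sigma=0.25, n_sets=1000, rng=rng)
```

Each row of `train_sets` is one noisy copy of the responses; a model would be fitted to each row and the resulting errors averaged over the 1000 rows.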

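Table 1: The restaurant food sales data ($y_i \times 100$)

| $i$ | $x_i$ | $y_i$ ($\times 100$) | $i$ | $x_i$ | $y_i$ ($\times 100$) | $i$ | $x_i$ | $y_i$ ($\times 100$) |
|---|---|---|---|---|---|---|---|---|
| 1 | 3.00 | 81.464 | 11 | 9.00 | 131.434 | 21 | 15.050 | 178.187 |
| 2 | 3.150 | 72.661 | 12 | 11.345 | 140.564 | 22 | 178.187 | 185.304 |
| 3 | 3.085 | 72.344 | 13 | 12.275 | 151.352 | 23 | 15.150 | 155.931 |
| 4 | 5.225 | 90.743 | 14 | 12.400 | 146.426 | 24 | 16.800 | 172.579 |
| 5 | 5.350 | 98.588 | 15 | 12.525 | 130.963 | 25 | 16.500 | 188.851 |
| 6 | 6.090 | 96.507 | 16 | 12.310 | 144.630 | 26 | 17.830 | 192.424 |
| 7 | 8.925 | 126.574 | 17 | 13.700 | 147.041 | 27 | 19.500 | 203.112 |
| 8 | 9.015 | 114.133 | 18 | 15.000 | 179.021 | 28 | 19.200 | 192.482 |
| 9 | 8.885 | 115.814 | 19 | 15.175 | 166.200 | 29 | 19.000 | 218.715 |
| 10 | 8.950 | 123.181 | 20 | 14.995 | 180.732 | 30 | 19.350 | 214.317 |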

Let

i

e and

i

y betheresidualandthepredictionofOLS

method, respectively. Itis well known that the plot ofthe

residual

i

e anditscorresponding

i

y isusefultocheckthe

assumption of constant variance. Our choice of WLS

solutionisalsobasedonthepatternofresiduals.Theplot

of

i

e and

i

y

isshowninFigure1(a)inwhichthevariation

of the residuals increases significantly as the prediction

values increase. Hence, this plot indicates violation of the

assumption of constant variance. Consequently, the

ordinary least squares fit inappropriate and the estimator

of OLS is not the best linear unbiased estimator (BLUE).

Fortheshakeofcomparison,thevaluesofMarechosento

betwo,fourandfive.ForinstancewhenM=2,itmeans

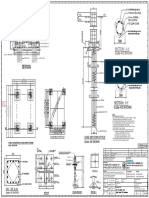

(a)

(b)

Figure 1: Plot of residual and its corresponding predicted

valuefortrainingdata:(a)OLSbasedlinearregression,(b)

WLSKPCR.

that the ordered data is divided into two groups where

each group contains 50 percent of the ordered data. The

plotofresidual

2i

e anditscorrespondingpredictionvalue

oi

z withM=2and=0.5isshowninFigure1(b).Figure

JOURNAL OF COMPUTING, VOLUME 3, ISSUE 11, NOVEMBER 2011, ISSN 2151-9617

HTTPS://SITES.GOOGLE.COM/SITE/JOURNALOFCOMPUTING

WWW.JOURNALOFCOMPUTING.ORG 61

1(b) shows a residual plot with no systematic pattern

around zero. It seems that the assumption of constant

variance is satisfied for the data associated with this

residual plot. We notice that the eigenvalues of X

1

X are

5.2012e+03 and 4.3800 and the eigenvalues of X

1

1

X

1

are

1.05717e03and6.4251e07.Theratiooftheeigenvaluesof

X

1

X and X

1

1

X

1

are 4.3800/5.2012e+03 = 8.4228e04 and

6.4251e07/1.05717e03=6.0779e04, respectively. Hence,

multicollinearity exists in the regression matrices X and

X

1

. It implies that the prediction of OLS based linear

regression and the prediction of WLS regression can be

inappropriatetobeused.

We also notice that the estimator of WLS KPCR is

BLUE for regression model with heteroscedastic errors

andprovides the estimate with the smallest variance. The

comparisonofthetwomethodsisshowninTable2where

thevaluesinthetableareaverages ofRMSEfor1000sets

of the training data and the testing data in the original

scale.FromTable2,weseethattheRMSEsofWLSKPCR

are smaller compared to RMSEs of WLS regression. For

these data, the choice = 0.05 yields better results than

othervaluesofsandWLSKPCRreducesRMSEofWLS

regressiondowntomorethan50percent.

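Table 2: RMSE of WLS regression and WLS KPCR for the restaurant food sales data.

| Data | Method | RMSE ($M = 2$) | RMSE ($M = 4$) | RMSE ($M = 5$) |
|---|---|---|---|---|
| Training | WLS regression | 870.3370 | 870.0094 | 869.9483 |
| Training | WLS KPCR ($\varrho = 0.05$, $r = 23$) | 387.4919 | 387.4247 | 387.4118 |
| Training | WLS KPCR ($\varrho = 0.1$, $r = 21$) | 430.6168 | 430.5629 | 430.5506 |
| Training | WLS KPCR ($\varrho = 0.5$, $r = 18$) | 601.4776 | 601.4338 | 601.4254 |
| Training | WLS KPCR ($\varrho = 1$, $r = 17$) | 624.2837 | 624.2512 | 624.2440 |
| Testing | WLS regression | 835.0128 | 834.8192 | 835.2938 |
| Testing | WLS KPCR ($\varrho = 0.05$, $r = 23$) | 398.7448 | 398.7926 | 398.7979 |
| Testing | WLS KPCR ($\varrho = 0.1$, $r = 21$) | 463.4775 | 463.4887 | 463.4777 |
| Testing | WLS KPCR ($\varrho = 0.5$, $r = 18$) | 689.8822 | 689.9098 | 689.8971 |
| Testing | WLS KPCR ($\varrho = 1$, $r = 17$) | 721.3939 | 721.3949 | 721.3855 |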
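The size of the improvement can be read off Table 2 with a short calculation. The sketch below defines RMSE in the usual way (the helper name `rmse` is an assumption, since the text does not give a formula) and computes the relative reduction for the $M = 2$ column, comparing WLS regression with the WLS KPCR row that has the smallest RMSE.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error between two equally long sequences."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

# Values copied from Table 2, column M = 2
rmse_wls = {"training": 870.3370, "testing": 835.0128}
rmse_kpcr = {"training": 387.4919, "testing": 398.7448}

# Relative reduction: 1 - RMSE(WLS KPCR) / RMSE(WLS regression)
reduction = {k: 1.0 - rmse_kpcr[k] / rmse_wls[k] for k in rmse_wls}
for name, value in reduction.items():
    print(f"{name}: {100.0 * value:.1f}% lower RMSE")
```

Both reductions exceed one half, consistent with the statement that the best WLS KPCR setting lowers the RMSE of WLS regression by more than 50 percent.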

4 CONCLUSIONS

WLS is a technique for regression models with non-constant variances. However, this technique yields a linear prediction and offers no guarantee that it can handle the negative effects of multicollinearity. In this paper, we proposed WLS KPCR for use in a heteroscedastic regression model and to overcome those limitations. We should notice that WLS KPCR yields a nonlinear prediction and can avoid the effects of multicollinearity in a heteroscedastic regression model. The estimate of the WLS KPCR regression coefficients is the best linear unbiased estimator, where the proof of the unbiasedness of the WLS KPCR estimator follows the proof of the unbiasedness of the PCR estimator (see [15]). In our case study, WLS KPCR outperforms WLS regression in a heteroscedastic model.

ACKNOWLEDGMENT

The authors sincerely thank Universiti Teknologi Malaysia and the Ministry of Higher Education (MOHE) Malaysia for the Research University Grant (RUG) number Q.J130000.7128.02J88. In addition, we thank the Research Management Center (RMC) UTM for supporting this research project.

REFERENCES

[1] S. Chatterjee and A. S. Hadi. Regression Analysis by Example, Fourth Edition. John Wiley and Sons, 2006.

[2] J. J. Faraway. Linear Models with R. Chapman and Hall/CRC, 2005.

[3] L. Hoegaerts, J. A. K. Suykens, J. Vandewalle, and B. De Moor. Subset based least squares subspace in reproducing kernel Hilbert space. Neurocomputing, pages 293-323, 2005.

[4] A. M. Jade, B. Srikanth, B. D. Kulkarni, J. P. Jog, and L. Priya. Feature extraction and denoising using kernel PCA. Chemical Engineering Science, 58:4441-4448, 2003.

[5] H. Q. Minh, P. Niyogi, and Y. Yao. Mercer's theorem, feature maps, and smoothing. Lecture Notes in Computer Science, Springer Berlin, 4005/2006, 2009.

[6] D. C. Montgomery, E. A. Peck, and G. G. Vining. Introduction to Linear Regression Analysis. Wiley-Interscience, 2006.

[7] N. R. Draper and H. Smith. Applied Regression Analysis. John Wiley and Sons, 1998.

[8] J. O. Rawlings, S. G. Pantula, and D. A. Dickey. Applied Regression Analysis: A Research Tool. Springer, 1998.

[9] R. Rosipal, M. Girolami, L. J. Trejo, and A. Cichocki. Kernel PCA for feature extraction and denoising in nonlinear regression. Neural Computing and Applications, pages 231-243, 2001.

[10] R. Rosipal and L. J. Trejo. Kernel partial least squares regression in reproducing kernel Hilbert space. Journal of Machine Learning Research, 2:97-123, 2002.

[11] R. Rosipal, L. J. Trejo, and A. Cichocki. Kernel principal component regression with EM approach to nonlinear principal component extraction. Technical Report, University of Paisley, UK, 2001.

[12] B. Scholkopf, A. Smola, and K.-R. Muller. Nonlinear component analysis as a kernel eigenvalue problem. Neural Computation, 10:1299-1319, 1998.

[13] B. Scholkopf and A. J. Smola. Learning with Kernels. The MIT Press, 2002.

[14] G. A. F. Seber and A. J. Lee. Linear Regression Analysis. John Wiley and Sons, Inc., 2003.

[15] M. S. Srivastava. Methods of Multivariate Statistics. John Wiley and Sons, Inc., 2002.

[16] A. Wibowo and Y. Yamamoto. A note on kernel principal component regression. To appear in Computational Mathematics and Modeling.

Antoni Wibowo is currently working as a senior lecturer in the Faculty of Computer Science and Information Systems at UTM. He received a B.Sc. in Math Engineering from the University of Sebelas Maret (UNS), Indonesia, and an M.Sc. in Computer Science from the University of Indonesia. He also received an M.Eng. and a Dr.Eng. in System and Information Engineering from the University of Tsukuba, Japan. His interests are in the fields of computational intelligence, machine learning, operations research and data analysis.

Mohamad Ishak Desa is a professor in the Faculty of Computer Science and Information Systems at UTM. He received a B.Sc. in Mathematics from UKM in Malaysia, along with an advanced diploma in system analysis from Aston University. He received an M.A. in Mathematics from the University of Illinois, and then a PhD in operations research from Salford University in the UK. He is currently the Head of the Operation Business Intelligence Research Group at UTM. His interests are operations research, optimization, logistics and supply chain.

JOURNAL OF COMPUTING, VOLUME 3, ISSUE 11, NOVEMBER 2011, ISSN 2151-9617

HTTPS://SITES.GOOGLE.COM/SITE/JOURNALOFCOMPUTING

WWW.JOURNALOFCOMPUTING.ORG 63

You might also like

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)
