IEEE TRANSACTIONS ON INFORMATION FORENSICS AND SECURITY 1

Low-complexity iris coding and recognition

based on directionlets

Vladan Velisavljević, Member, IEEE,

Abstract

A novel iris recognition method is presented. In the method, the iris features are extracted using the oriented

separable wavelet transforms (directionlets) and they are compared in terms of a weighted Hamming distance. The

feature extraction and comparison are shift-, size- and rotation-invariant to the location of iris in the acquired image.

The generated iris code is binary, whose length is fixed (and therefore commensurable), independent of the iris image,

and comparatively short. The novel method shows a good performance when applied to a large database of irises

and provides reliable identification and verification. At the same time, it preserves conceptual and computational

simplicity and allows for a quick analysis and comparison of iris samples.

Index Terms

Biometrics, directional transform, directional vanishing moments, feature extraction, iris recognition, personal

identification, wavelet transform.

I. INTRODUCTION

Biometrics usually refers to recognizing and identifying individuals using their various physiological, physical or

behavioral characteristics and traits [1]. This emerging science has recently become very popular with the increasing

need for higher security levels in personal authentication and identification systems. Such systems are deployed

in various applications, such as border-crossing or door-access control, but also in managing the access rights to

digital media content [2].

Among many biometric methods, iris recognition is an active topic because of its reliability and the easy, non-invasive
acquisition of biometric data. The iris, an annular region of the human eye between the black pupil and the white sclera
(see Fig. 1), has a very complex structure that is unique for each individual and each eye. As reported in [3]–[5],

the randomness of the human iris texture is ensured by the individual differences in development of the anatomic

structure of eyes in the pre-natal period. Furthermore, the iris texture is stable over the life-span and is externally

visible, which makes the process of identification easy and quick. All these properties allow for a convenient

application of iris recognition methods in biometric security systems.

V. Velisavljević is with Deutsche Telekom Laboratories, Ernst-Reuter-Platz 7, 10587 Berlin, Germany, e-mail: vladan.velisavljevic@telekom.de.

April 15, 2009 DRAFT

Fig. 1. Iris is an annular region of human eye between the black pupil and the white sclera. It has a complex structure unique for each eye and

each individual. The randomness of this structure ensures a reliable personal authentication and identification. Several examples of iris images

are taken from the database CASIA-IrisV3-Lamp [6].

Fig. 2. A common iris recognition system consists of three phases: (a) iris region localization, (b) feature extraction and encoding and (c)

feature comparison. The task of the system is to encode the captured iris image and to allow for a reliable pairwise comparison of the encoded

iris images denying impostors and accepting genuine subjects.

The main tasks of the iris recognition system are to provide a compact representation of the iris image (i.e. to

encode the captured iris image) and to allow for a reliable pairwise comparison of the encoded iris images denying

impostors and accepting genuine subjects. A common iris recognition system consists of three phases: (a) image

acquisition and iris region localization, (b) feature extraction and encoding and (c) feature comparison, as shown

in Fig. 2. Notice that some authors group these phases differently (e.g. in [7]), but the objectives and the order of
steps are substantially the same. Many researchers regard the second phase (b) as the most challenging part of the
system.

After the pioneering conjecture by Flom and Safir [3] that iris patterns could serve like fingerprints for biometric

personal identification, several solutions to the problem of iris recognition have been proposed. One of the most

remarkable is the work published by Daugman in [4] and also in a recent development [8], [9]. In this work, iris

regions are precisely localized using active contours and, then, the binary iris code is extracted from the phase

of the coefficients obtained by the complex oriented multiscale two-dimensional (2D) Gabor filtering. Finally, two

iris codes are compared using a normalized Hamming distance. The initial method was further developed and

successfully implemented in several real-time applications resulting in more than 200 billion accurate pairwise iris

comparisons, as reported in [9].

In the work of Wildes [5], the Hough transform [10], [11] is applied along circular contours to an edge map

obtained by a gradient-based edge detector [12] to localize the iris. Then, the iris texture is decomposed using a

Laplacian pyramid and represented by a set of coefficients ordered in feature vectors. Finally, to compare two iris

images, an image registration technique is used to align them and the corresponding feature vectors are compared

using a normalized correlation. However, this non-trivial image registration technique significantly increases the

computational complexity of the entire method. Moreover, the corresponding iris feature vectors are long and

consist of real numbers, which makes the binary representation inefficient.

The method proposed in [13] substantially reduces the computational complexity of iris encoding by applying the

one-dimensional (1D) wavelet transform (WT) to concentric circles taken from iris images and by generating the

feature vectors using the zero-crossing representation of the circular wavelet coefficients [14]. However, such feature

vectors consist of unbounded real numbers inconvenient for binary encoding. Similarly, the approach explained in

[15] is also based on the zero-crossing representation of the wavelet coefficients, whereas, the method presented

in [16] exploits a set of 1D WTs applied along the angular direction to a normalized iris image. In the latter,

the feature vectors contain descriptions of neighbor extremum points in the transform coefficients and, thus, have

variable lengths. The same authors exploited the circularly symmetric 2D Gabor filters to obtain a commensurable

iris code [17]. However, the code consists of an array of real numbers, that is, means and absolute deviations of

the coefficients within small blocks, and the corresponding binary representation is long. Moreover, in all these

methods [13], [15]–[17], the processed coefficients are obtained by interpolation at non-integer coordinates from

the original image pixels. Such an interpolation increases the overall computational and conceptual complexity of

the methods.

The importance of capturing directional (radial and angular) information in iris images has been also noticed and

exploited in [18], [19], where a directional filter-bank is applied to a band-pass filtered iris image to extract feature

vectors. These feature vectors are compared using the Euclidean and Hamming distance, respectively, to identify

or verify subjects.

Furthermore, several other iris recognition methods proposed in [20]–[25] are based on different transforms and

designed for specific applications. The influence of different image compression ratios and sampling schemes on the
iris recognition performance has been analyzed in [26] and [27]. A quality measure of iris images with respect to

the feature extraction efficiency is presented in [28], whereas ordinal measures are used in iris comparison [29].

Finally, several improvements of the standard iris recognition methods have been proposed in [30]–[33], where

either the process of iris acquisition, localization and segmentation has been addressed or the iris image has been

enhanced using an eyelash removal algorithm.

The novel iris recognition method explained in this paper is based on directionlets. These oriented separable 2D

WTs have been proven in [34] to provide a sparse representation of elongated and oriented features in images along

different directions, like edges or contours. Furthermore, they have been shown to improve the performance of the

wavelet-based image compression methods [35]. At the same time, and very importantly, directionlets retain the

separability and conceptual and computational simplicity of the standard 2D WT. This property is the main
difference between directionlets and some other directional filter-banks, like Gabor filters.

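[Figure 3 labels: generator matrices $M_\Lambda = \begin{bmatrix} 1 & 1 \\ -1 & 1 \end{bmatrix}$ and $M_{\Lambda'} = \begin{bmatrix} 2 & 2 \\ -1 & 1 \end{bmatrix}$, with shift vectors $s_0 = [0 \;\; 0]$ and $s_1 = [0 \;\; 1]$.]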

Fig. 3. An example of construction of directionlets based on integer lattices for pair of transform directions (45◦ , 135◦ ).

For these reasons, directionlets are used in phase (b) of the novel method to allow for an efficient characterization
of the directional features in irises along both radial and angular directions. Like most of the previous iris

recognition approaches, this method is shift-, size- and rotation-invariant to the iris image, that is, the results of

coding and comparison do not depend on the relative position, size and angular shift of the irises. Furthermore, the

method aims at the following additional goals: (1) to provide a short binary code of the iris features, whose length

is fixed, commensurable, and independent of the iris image, (2) to allow for a scalable accuracy of recognition

(adapted to the size of the iris database) and (3) to preserve computational efficiency in each phase of the method.

The new method is capable of outperforming several other iris recognition methods in terms of iris recognition

performance, while providing an iris code of a comparable or even shorter length, as explained in the continuation.

The rest of the paper is organized as follows. A brief review of the construction of directionlets is given in

Section II. The novel method is explained in detail in Section III. The iris recognition is applied to an iris database

and the results are presented and compared to the performance of several other methods in Section IV. Finally, a

conclusion is given in Section V.

II. DIRECTIONLETS

The construction of directionlets has been explained in detail in [34] and an application to image compression

in [35]. Here, only a brief review of the basic ideas is given.

Directionlets are constructed as separable 2D basis functions of the so-called skewed asymmetric WT. These

transforms make use of the two concepts: asymmetry and directionality. Asymmetry is obtained by an unbalanced

iteration of transform steps along two transform directions, that is, the transform is applied more along one than

along the other direction. Directionality is a result of the construction along two skewed transform directions (not

necessarily horizontal or vertical) across integer lattices. An example of the construction of directionlets is shown

in Fig. 3 for directions 45◦ and 135◦ , whereas two examples of the basis functions are illustrated in Fig. 4 (b) and

(c) using an asymmetric frequency decomposition, as shown in Fig. 4 (a).

Fig. 4. Directionlets allow for an asymmetric iteration of the filtering and subsampling operations applied along two different directions, not

necessarily horizontal or vertical. (a) An asymmetric decomposition in frequency for two iterations. The basis functions obtained from the (b)

Haar and (c) biorthogonal "9-7" [36] 1D wavelet filter-bank. Notice that the basis functions have directional vanishing moments along the two

chosen directions, which allows for a more efficient representation of oriented elongated features, like edges or contours.

Filtering using the corresponding high-pass (HP) wavelet filters along any pair of directions imposes directional

vanishing moments (DVM). This property of directionlets allows for efficient capturing of oriented features and a

sparser representation of natural images than the representation provided by the standard WT.

For the same reason, directionlets are used for capturing and representing oriented features of iris images. However,

even though the originally proposed transform in [34] and [35] is critically sampled (the filtering operations are

followed by subsampling), an oversampled version obtained by removing the subsampling operations is used in the

implementation in this paper. Such a construction results in a shift-invariant transform with a preserved number of

coefficients in each subband.

Notice that, because of separability, directionlets inherit the computational simplicity and the simplicity of purely

1D filter design from the standard 2D WT. This fact is also exploited in the iris recognition method to keep the

overall computational complexity low.

III. IRIS RECOGNITION USING DIRECTIONLETS

As mentioned in the Introduction and shown in Fig. 2, the iris recognition method consists of three phases: (a)
iris region localization, (b) feature extraction and encoding and (c) feature comparison. In the novel method, the iris
localization is adopted from Daugman [4] with a few modifications. The novel feature extraction method is based on
directionlets. Finally, the feature comparison is computed as the best (minimal) Hamming distance over the relative
angular shifts between two iris codes. All the phases are explained next in detail.

A. Region Localization

The annular iris region is bounded by two borders: the inner border (with the pupil) and the outer border (with

the sclera). In [4], these two borders are approximated by non-concentric circular shapes, whose parameters are

estimated by maximization of the radial derivative of circular integrals over the iris image smoothed at the scale σ.

The same approach is adopted here and six parameters are extracted as a result of the iris region localization:

the coordinates of the pupil and sclera centers (x0 , y0 ) and (x1 , y1 ), respectively, and the corresponding radii r0

and r1 . The circular integral over the contour C(xc , yc , r) is computed from the image z(x, y) as

$$I(x_c, y_c, r) = \frac{1}{2\pi r} \int_{C(x_c, y_c, r)} z\left[x(r, \theta), y(r, \theta)\right] \, d\theta, \tag{1}$$

where $x(r, \theta) = x_c + r \cos\theta$ and $y(r, \theta) = y_c + r \sin\theta$ are expressed in a polar coordinate system. The six parameters
are found as

$$(x_c^*, y_c^*, r^*) = \arg\max_{(r, x_c, y_c)} \; G_\sigma(r) * \frac{\partial}{\partial r} I(x_c, y_c, r), \tag{2}$$

where $G_\sigma(r)$ is a Gaussian smoothing function of scale σ. First, the pupil circle parameters (x0, y0, r0) are estimated
using (2) and integrating over the entire circle, that is, along the contour C(xc, yc, r) = {(x(r, θ), y(r, θ)) | 0 ≤ θ < 2π}.
Then, since the eyelids and eyelashes are likely to occlude the upper and lower parts of the sclera, the
parameters (x1, y1, r1) are obtained using only the left and right 90°-cones, that is,
C = {(x(r, θ), y(r, θ)) | (−π/4 ≤ θ < π/4) ∪ (3π/4 ≤ θ < 5π/4)}. To make the maximization in (2) practical, the continuous
integration and differentiation are discretized and a gradient ascent search on a multigrid is applied, as in [4].
Following [22], an initial estimate of the pupil center (x0, y0) is obtained as the center of gravity of the binarized
iris image.

Notice that the iris region localization is shift- and size-invariant to the iris, that is, the parameter estimates are

adapted to the iris borders in the image.
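
The localization step can be illustrated with a minimal sketch, which is not the author's implementation. It assumes a grayscale image stored as a NumPy array indexed `img[y, x]`, replaces the contour integral (1) by the mean intensity over sampled contour points, and replaces the gradient ascent on a multigrid by an exhaustive search over a caller-supplied list of candidate centers and radii. The function names and the constants `FULL_CIRCLE` and `SIDE_CONES` are hypothetical.

```python
import numpy as np

def circular_mean(img, xc, yc, r, thetas):
    """Mean intensity on the sampled contour: a discrete stand-in for integral (1)."""
    x = np.rint(xc + r * np.cos(thetas)).astype(int)
    y = np.rint(yc + r * np.sin(thetas)).astype(int)
    inside = (x >= 0) & (x < img.shape[1]) & (y >= 0) & (y < img.shape[0])
    return float(img[y[inside], x[inside]].mean()) if inside.any() else 0.0

def gaussian_kernel(sigma):
    """Normalized 1D Gaussian G_sigma, truncated at three standard deviations."""
    half = max(1, int(round(3 * sigma)))
    t = np.arange(-half, half + 1)
    g = np.exp(-0.5 * (t / sigma) ** 2)
    return g / g.sum()

def localize_circle(img, centers, radii, thetas, sigma=1.0):
    """Exhaustive version of (2): maximize the smoothed radial derivative of the contour mean."""
    radii = np.asarray(radii, dtype=float)
    g = gaussian_kernel(sigma)
    best, best_score = None, -np.inf
    for xc, yc in centers:
        profile = np.array([circular_mean(img, xc, yc, r, thetas) for r in radii])
        # Radial derivative followed by Gaussian smoothing along r.
        score = np.convolve(np.gradient(profile, radii), g, mode="same")
        i = int(np.argmax(score))
        if score[i] > best_score:
            best, best_score = (xc, yc, float(radii[i])), score[i]
    return best

# Contours used in the text: the full circle for the pupil border and
# the left and right 90-degree cones for the sclera border.
FULL_CIRCLE = np.linspace(0.0, 2.0 * np.pi, 64, endpoint=False)
SIDE_CONES = np.concatenate([
    np.linspace(-np.pi / 4, np.pi / 4, 32, endpoint=False),
    np.linspace(3 * np.pi / 4, 5 * np.pi / 4, 32, endpoint=False),
])
```

In this sketch the pupil border would be searched with `FULL_CIRCLE` and the sclera border with `SIDE_CONES`; the list of radii should be longer than the Gaussian kernel so that the smoothed profile keeps its length.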

B. Feature Extraction

The iris region is analyzed using directionlets and the corresponding binary code is generated with a predetermined

and fixed length. The extraction algorithm consists of the three parts: (i) filtering (or transforming) the original iris

image using oriented filters based on the 9-7 wavelet filter-bank [36], (ii) sampling the corresponding wavelet

coefficients at specified sampling coordinates and (iii) generating a binary code, as explained next.

(i) Filtering (transforming) iris image

Two classes of processing using the 1D 9-7 wavelet filter-bank are combined in this phase: smoothing and

directional filtering. The smoothing consists of iterated steps of low-pass (LP) filtering applied along the

horizontal and vertical directions. The resulting coefficients are equivalent to the LP subbands of the standard

multiscale undecimated 2D WT. In turn, the directional filtering consists of only one step of HP filtering along

a direction α ∈ {0◦ , 90◦ , 45◦ , 135◦ }. To reduce the influence of noise in the original image on the obtained

transform coefficients, the directional filtering is applied only to the third and fourth scale of the smoothing

multiscale decomposition. Thus, such a transform results in eight directional subbands denoted as dα,s (x, y),

where the scale s ∈ {3, 4}. The equivalent filters for each dα,s (x, y) are given by

$$H_{\alpha,s}(z_1, z_2) = \prod_{i=0}^{s-1} H_0\left(z_1^{2^i}\right) \cdot H_0\left(z_2^{2^i}\right) \cdot H_1\left(\mathbf{z}^{\mathbf{v}_\alpha}\right), \tag{3}$$

[Figure 5 panels: (a) s = 3, α = 0°; (b) s = 3, α = 90°; (c) s = 3, α = 45°; (d) s = 3, α = 135°; (e) s = 4, α = 0°; (f) s = 4, α = 90°; (g) s = 4, α = 45°; (h) s = 4, α = 135°.]

Fig. 5. The impulse and frequency responses of eight equivalent filters $H_{\alpha,s}(z_1, z_2)$ given by (3) used to obtain the corresponding directional
subbands $d_{\alpha,s}(x, y)$, where α ∈ {0°, 90°, 45°, 135°} and s ∈ {3, 4}. First, smoothing is applied as a multiscale standard undecimated 2D
WT with 4 scales along the horizontal and vertical directions. Then, a set of directional HP filterings along the four directions is applied to the
third and fourth scale (s = 3, 4). The impulse and frequency responses are shown for all the combinations of the parameters α and s. Notice
that each filter is a band-pass with an approximate frequency band $(\pi/2, \pi)/2^s$ and with a DVM imposed along the direction α.

where $H_0(z)$ and $H_1(z)$ are the 1D LP and HP filters of the 9-7 filter-bank, the vector $\mathbf{v}_\alpha$ takes values from
the set {(1, 0), (0, 1), (1, 1), (−1, 1)} corresponding to the directions α ∈ {0°, 90°, 45°, 135°}, respectively,
and $\mathbf{z}^{\mathbf{v}_\alpha} = (z_1, z_2)^{(v_1, v_2)} = z_1^{v_1} \cdot z_2^{v_2}$ (the notation is adopted from [37]). The corresponding impulse and
frequency responses of the equivalent filters are shown in Fig. 5. Notice that the equivalent filters in (3) are
separable and, thus, the required number of operations in the filtering process is reduced. Notice also that
each filter is band-pass with an approximate frequency band $(\pi/2, \pi)/2^s$ and with a DVM along the direction
α. An example of the directional subbands is shown in Fig. 6 for the iris image given in Fig. 1(e). These
subbands are sampled in the next phase to generate feature vectors.
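
The structure of the filtering in (3) can be sketched as follows. This is an illustration under stated assumptions rather than the paper's implementation: the Haar pair stands in for the 9-7 filter-bank [36], boundaries are handled circularly with `np.roll`, and the array is indexed `img[y, x]` so that axis 1 corresponds to z1 and axis 0 to z2. The names `filter_along` and `directional_subband` are hypothetical, and the mapping of the angle α to an on-screen orientation depends on the image axis convention.

```python
import numpy as np

# Haar analysis pair used as a stand-in; the paper uses the 9-7 filter-bank [36].
H0 = np.array([0.5, 0.5])    # low-pass
H1 = np.array([0.5, -0.5])   # high-pass with one vanishing moment

# Direction vectors v_alpha = (v1, v2) for alpha in degrees.
DIRECTIONS = {0: (1, 0), 90: (0, 1), 45: (1, 1), 135: (-1, 1)}

def filter_along(img, taps, v, step=1):
    """Circular 1D filtering along the integer direction v, with taps spaced `step` apart."""
    out = np.zeros_like(img, dtype=float)
    for k, h in enumerate(taps):
        # Axis 0 is y (z2) and axis 1 is x (z1); shift by k * step * v.
        out += h * np.roll(img, shift=(k * step * v[1], k * step * v[0]), axis=(0, 1))
    return out

def directional_subband(img, alpha, s):
    """Undecimated subband d_{alpha,s}: s smoothing steps per axis, then one HP step."""
    a = np.asarray(img, dtype=float)
    for i in range(s):
        a = filter_along(a, H0, (1, 0), step=2 ** i)   # H0(z1^(2^i))
        a = filter_along(a, H0, (0, 1), step=2 ** i)   # H0(z2^(2^i))
    return filter_along(a, H1, DIRECTIONS[alpha])       # H1(z^(v_alpha))
```

Because no subsampling is applied, every subband keeps the size of the input image. The directional vanishing moment shows up as a zero response whenever the image is constant along the chosen direction.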

(ii) Sampling wavelet coefficients

The directional subbands dα,s are sampled so that the retained coefficients capture the iris features oriented

along both radial and angular directions. Owing to a probable light reflection close to the pupil and sclera

and occlusion by eyelids and eyelashes in the upper part of the iris, the coefficients in these regions are not

used. That is, the coefficients are sampled only within the annulus with radius r such that

$$r_0 + \delta_r/5 \le r \le r_{\max} - \delta_r/5,$$

where $\delta_r = r_{\max} - r_0$. The maximal radius $r_{\max}$ depends on the angle θ as

$$r_{\max} = \sqrt{r_1^2 - d^2 \cdot \sin^2(\theta - \theta_d)} - d \cdot \cos(\theta - \theta_d),$$

where $d = \sqrt{(x_0 - x_1)^2 + (y_0 - y_1)^2}$ and $\theta_d = \arctan[(y_0 - y_1)/(x_0 - x_1)]$ are the relative distance and
angle between the pupil and sclera centers, respectively (see Fig. 7). This annulus is segmented into four
60°-wide regions centered at the angles K = {0°, 180°, 45°, 135°}, respectively, as shown in Fig. 8 (and
also marked in Fig. 6). Notice that these regions do not overlap with the upper 120°-cone of the iris.

[Figure 6 panels: (a) s = 3, α = 0°; (b) s = 3, α = 90°; (c) s = 3, α = 45°; (d) s = 3, α = 135°; (e) s = 4, α = 0°; (f) s = 4, α = 90°; (g) s = 4, α = 45°; (h) s = 4, α = 135°.]

Fig. 6. The directional subbands $d_{\alpha,s}$ are obtained by filtering the iris image given in Fig. 1 (e) using the equivalent filters (3) shown in Fig.
5 at two scales s = 3, 4 and with DVM along a direction α ∈ {0°, 90°, 45°, 135°}. Only the marked regions of each subband are used in
the sampling to capture the radial and angular information. The regions are chosen so that they avoid the upper part of the iris that is likely
occluded by eyelids and eyelashes.

The sampled coefficients are grouped into 16 clusters denoted as Wk,s,o (i, j) and indexed by the region

k ∈ K, the scale s ∈ {3, 4} and the transform orientation o ∈ {rad, ang}. The sampling process is defined

by Wk,s,o (i, j) = dα,s (x(i, j), y(i, j)), where the index α is chosen according to Table I. The sampling

coordinates are given in a polar coordinate system as x(i, j) = [x0 + ri cos θj ] and y(i, j) = [y0 + ri sin θj ]

and they are rounded to the nearest integer to avoid interpolation. Here,

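$$\theta_j = \theta_k^* + 60^\circ \cdot \frac{j}{J_{s,o} - 1}, \quad j = 0, \ldots, J_{s,o} - 1,$$

$$r_i = r_0 + \delta_r \left( \frac{1}{5} + \frac{3}{5} \cdot \frac{i}{I_{s,o} - 1} \right), \quad i = 0, \ldots, I_{s,o} - 1.$$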

The border angle $\theta_k^* = k - 30^\circ$, whereas the parameters $I_{s,o}$ and $J_{s,o}$ determine the number of samples
along the radial and angular axes, respectively. Even though $I_{s,o}$ and $J_{s,o}$ can be chosen arbitrarily, in the
experiments in Section IV, good results are achieved following the relations

$$\begin{aligned} I_{3,o} &= 2 \cdot I_{4,o}, & J_{3,o} &= 2 \cdot J_{4,o}, \\ I_{s,\mathrm{ang}} &= 2 \cdot I_{s,\mathrm{rad}}, & J_{s,\mathrm{rad}} &= 2 \cdot J_{s,\mathrm{ang}} \end{aligned} \tag{4}$$

because of the correlation of the coefficients generated by the dyadic WT. Furthermore, owing to the resolution
of the iris images used in the experiments, we use

$$J_{s,\mathrm{ang}} = 2 \cdot I_{s,\mathrm{rad}}. \tag{5}$$

[Figure 7 labels: pupil center (x0, y0) with radius r0, sclera center (x1, y1) with radius r1, distance d, angle θd and maximal radius rmax(θ); θ = 0 points along the x axis and θ = π/2 along the y axis.]

Fig. 7. The pupil and sclera borders are represented by two non-concentric circles. The distance between the two centers is denoted as d and
the corresponding angle θd.

TABLE I
A CHOICE OF THE ANGLE α OF DVM FOR ALL COMBINATIONS OF k ∈ {0°, 180°, 45°, 135°} AND o ∈ {rad, ang} TO CAPTURE PROPERLY
THE RADIAL AND ANGULAR INFORMATION IN IRIS.

k    0°     0°     180°   180°   45°    45°    135°   135°
o    rad    ang    rad    ang    rad    ang    rad    ang
α    90°    0°     90°    0°     135°   45°    45°    135°
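
The sampling coordinates defined above can be computed as in the following sketch. It assumes the six localization parameters are already known and that I and J are at least 2. Two choices are assumptions of the sketch, not statements from the text: `np.arctan2` is used instead of the plain arctangent so that the angle θd stays well defined in every quadrant and when x0 = x1, and `np.rint` is used for the rounding to the nearest integer. The function names are hypothetical.

```python
import numpy as np

def r_max(theta, x0, y0, x1, y1, r1):
    """Distance from the pupil center to the sclera circle along the angle theta (radians)."""
    d = np.hypot(x0 - x1, y0 - y1)
    theta_d = np.arctan2(y0 - y1, x0 - x1)
    return np.sqrt(r1 ** 2 - d ** 2 * np.sin(theta - theta_d) ** 2) - d * np.cos(theta - theta_d)

def sampling_coordinates(k_deg, I, J, x0, y0, r0, x1, y1, r1):
    """Integer pixel coordinates x(i, j), y(i, j) for the 60-degree region centered at k_deg."""
    # theta_j sweeps from k - 30 degrees to k + 30 degrees in J steps.
    theta = np.deg2rad(k_deg - 30.0 + 60.0 * np.arange(J) / (J - 1))
    # delta_r = r_max - r0 is evaluated separately for every angle.
    delta = r_max(theta, x0, y0, x1, y1, r1) - r0
    # r_i covers the middle three fifths of the annulus.
    frac = 1.0 / 5.0 + (3.0 / 5.0) * np.arange(I) / (I - 1)
    r = r0 + frac[:, None] * delta[None, :]
    x = np.rint(x0 + r * np.cos(theta)[None, :]).astype(int)
    y = np.rint(y0 + r * np.sin(theta)[None, :]).astype(int)
    return x, y
```

A cluster is then read directly from a subband stored as `d[y, x]`, for example `W = d[y, x]`, so that no interpolation is needed.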

(iii) Generating binary code

The binary code consists of signs of the retained coefficients $W_{k,s,o}(i, j)$, that is, for each retained value, the
corresponding bit is determined by

$$b_{k,s,o}(i, j) = \begin{cases} 1, & W_{k,s,o}(i, j) \ge 0 \\ 0, & W_{k,s,o}(i, j) < 0 \end{cases}. \tag{6}$$

Fig. 8. The directional subbands dα,s are sampled so that the retained coefficients capture the iris features oriented along both radial and

angular directions. To avoid an influence of light reflection, the coefficients are not sampled in the area next to the pupil and sclera. Thus, only

the coefficients in the annulus r0 + δr /5 ≤ r ≤ rmax − δr /5 are retained. This area is segmented into 4 regions that have angular width 60◦

and that are centered at the angles 0◦ , 180◦ , 45◦ and 135◦ , respectively. In this way, the retained coefficients do not belong to the upper areas

of the iris that are likely occluded by eyelids and eyelashes. The sampled coefficients are grouped into 16 clusters denoted as Wk,s,o (i, j) and

indexed by the region k ∈ {0◦ , 180◦ , 45◦ , 135◦ }, the scale s ∈ {3, 4} and the transform orientation o ∈ {rad, ang}. The sampling process

is defined by Wk,s,o (i, j) = dα,s (x(i, j), y(i, j)), where the index α is chosen according to Table I.

The resulting binary code contains all the bits from (6) for k ∈ K, s ∈ {3, 4} and o ∈ {rad, ang} concatenated
sequentially. The length of the code is given by

$$L = \sum_{k,s,o} I_{s,o} \cdot J_{s,o} = 4 \cdot \sum_{s,o} I_{s,o} \cdot J_{s,o}. \tag{7}$$

Notice that the length L depends neither on the content nor on the resolution of the iris image and, thus,
the binary code is commensurable. Assuming the parameters $I_{s,o}$ and $J_{s,o}$ are chosen as in (4) and (5), the
length in (7) is equal to $L = 160 \cdot n^2$, where $n = I_{4,\mathrm{rad}}$. Thus, only a single parameter n is used to scale
the code length and to balance between the code precision and iris comparison reliability on one hand and
computational complexity on the other hand. Such a code is easily stored and compared using fast binary
operations, and it is reliable enough to ensure a trustworthy identification, as explained in the continuation.
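
The code length can be checked numerically with a short sketch that applies relations (4) and (5) to a given n = I4,rad and evaluates (7). The helper names `grid_sizes`, `code_length` and `binarize` are hypothetical and introduced only for illustration; `binarize` is a direct transcription of the sign rule (6).

```python
import numpy as np

def grid_sizes(n):
    """(I, J) for every (scale, orientation) pair, following (4) and (5) with n = I_{4,rad}."""
    i4_rad = n
    j4_ang = 2 * i4_rad          # relation (5)
    i4_ang = 2 * i4_rad          # relation (4): I_{s,ang} = 2 I_{s,rad}
    j4_rad = 2 * j4_ang          # relation (4): J_{s,rad} = 2 J_{s,ang}
    sizes = {(4, "rad"): (i4_rad, j4_rad), (4, "ang"): (i4_ang, j4_ang)}
    for o in ("rad", "ang"):
        i4, j4 = sizes[(4, o)]
        sizes[(3, o)] = (2 * i4, 2 * j4)   # relation (4): scale 3 doubles both counts
    return sizes

def code_length(n, regions=4):
    """Code length L from (7): the same grid sizes are used in each of the regions."""
    return regions * sum(i * j for i, j in grid_sizes(n).values())

def binarize(w):
    """Sign rule (6): bit 1 for non-negative coefficients, bit 0 otherwise."""
    return (np.asarray(w) >= 0).astype(np.uint8)
```

For n = 2 the grids are 2×8 and 4×4 at scale 4 and 4×16 and 8×8 at scale 3, which gives 4 · (16 + 16 + 64 + 64) = 640 bits, in agreement with L = 160 · n².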

C. Feature Comparison

The task of the phase (c) is to calculate a weighted Hamming distance score between two binary codes generated

in the phase (b) with equal parameters Is,o and Js,o . The distance score is computed across the region k, scale

s and orientation o, where a weighting factor ws is used to compensate for a smaller number of samples at the

smoother scale s = 4, due to (4). Hence, w3 = 1 and w4 = 4.

To ensure rotation invariance, the distance score is computed for different relative angles of the two iris images,
which corresponds to an angular shift in the binary code and in the captured iris features. Then, the resulting distance
score D(s, o) for a particular scale s and orientation o is equal to the minimal score computed across all allowed
shifts. More precisely, given two iris codes $b^{(1)}_{k,s,o}(i, j)$ and $b^{(2)}_{k,s,o}(i, j)$, the distance score $D_{12}(s, o)$ is computed as

$$D_{12}(s, o) = \min_{-\frac{1}{2} J_{s,o} \le c \le \frac{1}{2} J_{s,o}} \; \sum_{k \in K} \; \sum_{i=0}^{I_{s,o} - 1} \; \sum_{j = \frac{1}{4} J_{s,o} + \lceil c/2 \rceil}^{\frac{3}{4} J_{s,o} + \lceil c/2 \rceil - 1} \left( b^{(1)}_{k,s,o}(i, j) \oplus b^{(2)}_{k,s,o}(i, j - c) \right). \tag{8}$$

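The total distance score is given by

$$D_{12} = \frac{1}{B} \sum_{s} \sum_{o} w_s \cdot D_{12}(s, o), \tag{9}$$

where

$$B = 2 \cdot 4 \cdot \sum_{o} I_{3,o} \cdot J_{3,o} / 2 = 4 \cdot \sum_{o} I_{3,o} \cdot J_{3,o}.$$

Assuming (4) and (5), $\sum_{o} I_{3,o} \cdot J_{3,o} = 32 \cdot n^2$ with $n = I_{4,\mathrm{rad}}$, and it follows that $B = 128 \cdot n^2 = \frac{4}{5} L$.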

Notice that the maximal relative angle between two iris images that can be compensated using (8) corresponds
to an angular shift of $\pm \frac{1}{2} J_{s,o}$ samples, that is, to ±30°. Notice also that the distance score (9) is normalized so that
$0 \le D_{12} \le 1$. Moreover, the smaller $D_{12}$ is, the more similar the iris images are. In case of self-distance, where
an iris image is compared to itself, $D_{11} = 0$.

IV. EXPERIMENTAL RESULTS

The novel iris recognition method is applied to the iris database CASIA-IrisV3-Lamp [6], which contains 16213
iris images at the resolution 480×640 pixels taken by an infra-red camera from 819 different subjects. The method
is compared to three other algorithms, Daugman [4], Ma et al. with WT [16] and Ma et al. with a modified
Gabor filtering [17], in terms of recognition performance and code length.

The coding process is performed with the scaling parameter n = 2, 3, . . . , 8 and the iris codes are generated

using (6). The resulting length of the code according to (7) is shown in the upper part of Table II and compared

to the other corresponding lengths.

In the comparison process, the distance scores (9) are computed for all pairs of iris codes. The comparison

is applied within two modes: identification (one-to-many) and verification (one-to-one). In identification, one iris

sample per class is chosen as representative. Then, every other sample is compared to the list of representatives and

it is assigned an identity of the representative with the minimal distance score. The performance of identification

is measured in terms of correct recognition rate (CRR) that equals a ratio between a number of correct identity

assignments and the total number of tests.
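
The identification measure can be written compactly as in the sketch below. It assumes that the distance scores between every probe sample and every class representative have already been collected in a matrix `dist[p, g]`; the function name and argument names are hypothetical.

```python
import numpy as np

def correct_recognition_rate(dist, probe_labels, gallery_labels):
    """CRR: fraction of probes whose nearest representative carries the correct identity."""
    nearest = np.argmin(np.asarray(dist), axis=1)          # closest representative per probe
    predicted = np.asarray(gallery_labels)[nearest]
    return float(np.mean(predicted == np.asarray(probe_labels)))
```

For instance, with three probes of which two are assigned to the correct representative, the function returns 2/3.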

In verification, the irises are pair-wise matched, that is, each corresponding distance score D12 is compared to
a threshold T. As is common for binary decision problems in the machine learning literature (e.g. [38]), the four
possible outcomes of the matching are denoted as: (1) true-positive (TP) - D12 ≤ T for two irises from the same
class, (2) false-positive (FP) - D12 ≤ T for two irises from different classes, (3) true-negative (TN) - D12 > T for
two irises from different classes and (4) false-negative (FN) - D12 > T for two irises from the same class (see Fig.
9). These four outcomes are counted for the entire database (all pairs of irises) for the threshold T sweeping from
small (conservative threshold) to high (liberal threshold) values. Then, the performance of verification is evaluated
using a receiver operating characteristic (ROC) curve obtained by plotting the false non-match rate (FNMR), defined
as FN/(TP+FN), versus the false match rate (FMR), defined as FP/(TN+FP). Two parameters are extracted from
the ROC curve to evaluate the performance: the area under the 100%-FNMR curve (A-z) and the equal error rate
(EER), that is, the rate where FMR=FNMR.

TABLE II
THE LENGTH OF THE IRIS CODE FOR THE NOVEL METHOD WITH DIFFERENT VALUES OF THE PARAMETER n AND FOR THE THREE OTHER
METHODS.

Method                  L
Directionlets, n=2      640 bits
Directionlets, n=3      1440 bits
Directionlets, n=4      2560 bits
Directionlets, n=5      4000 bits
Directionlets, n=6      5760 bits
Directionlets, n=7      7840 bits
Directionlets, n=8      10240 bits
Daugman [4]             2048 bits
Ma (wavelets) [16]      10240 bits
Ma (Gabor) [17]         1536 real numbers

                        D12 ≤ T    D12 > T
same class              TP         FN
different classes       FP         TN

Fig. 9. Four outcomes are possible in matching two iris samples with the distance score D12 and the matching threshold T: true-positive
(TP), false-positive (FP), true-negative (TN) and false-negative (FN) matching. Two iris samples from a same class can be correctly matched -
TP, or wrongly mismatched - FN. Similarly, two iris samples from different classes can be correctly rejected - TN, or wrongly matched - FP.
The process of verification is evaluated using a ROC curve, where the false non-match rate, defined as FN/(TP+FN), is plotted versus the false
match rate, defined as FP/(TN+FP), for different values of T.
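
The verification rates can be computed from two lists of distance scores, one for pairs from the same class and one for pairs from different classes, as in the sketch below. The function names are hypothetical, and the EER is approximated at the sampled threshold where FMR and FNMR are closest, which is an assumption of the sketch since the text does not state how the crossing point is located.

```python
import numpy as np

def error_rates(genuine, impostor, thresholds):
    """FMR and FNMR for every threshold T, with a match declared when D12 <= T."""
    genuine = np.asarray(genuine, dtype=float)[:, None]
    impostor = np.asarray(impostor, dtype=float)[:, None]
    t = np.asarray(thresholds, dtype=float)[None, :]
    fnmr = np.mean(genuine > t, axis=0)     # FN / (TP + FN)
    fmr = np.mean(impostor <= t, axis=0)    # FP / (TN + FP)
    return fmr, fnmr

def equal_error_rate(genuine, impostor, thresholds):
    """Approximate EER and the threshold at which FMR and FNMR are closest."""
    fmr, fnmr = error_rates(genuine, impostor, thresholds)
    i = int(np.argmin(np.abs(fmr - fnmr)))
    return 0.5 * (fmr[i] + fnmr[i]), float(np.asarray(thresholds)[i])
```

Plotting `fnmr` against `fmr` over the swept thresholds gives the ROC curve in the form used here.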

The novel method is compared to the other three methods within both the identification and verification modes.

It should be emphasized that the comparative performance statistics reported here are based on the author’s own

implementations of the alternative algorithms, not their original implementations. The resulting ROC curves are

shown in Fig. 10, whereas the numeric parameters CRR, A-z and EER are listed in Table III. Notice that the

performance of the novel method is comparable to or even slightly better than the performance of the other methods,

DRAFT April 15, 2009

VELISAVLJEVIĆ: LOW-COMPLEXITY IRIS CODING AND RECOGNITION BASED ON DIRECTIONLETS 13

FNMR (%)

25 Daugman

7

Ma (WT)

8 6 5 4 3 2

20 Ma (Gabor), d1 , d2 , d3

Dir-lets, n = 2, 3, · · · , 8

15

d3 FMR=FNMR

d2

10

d1

5

EER

0 −2 −1 0

10 10 FMR 10

Fig. 10. ROC curves for the iris recognition method based on directionlets with n = 2, 3, ..., 8 (shown in blue) applied to the CASIA-IrisV3-Lamp database. The curves are compared with those of several other methods: Daugman [4] (red), Ma with WT [16] (green) and Ma with Gabor filtering [17] (magenta) with three distance measures. The performance is compared in terms of EER, as listed in Table III. The novel method is comparable to or even slightly better than the other methods.

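TABLE III
NUMERIC RESULTS OF IRIS IDENTIFICATION AND VERIFICATION EXPRESSED IN TERMS OF CRR, A-z AND EER.

| Method | CRR | A-z | EER |
|---|---|---|---|
| Directionlets, n = 2 | 81.7% | 0.963 | 8.386% |
| Directionlets, n = 3 | 89.5% | 0.972 | 6.482% |
| Directionlets, n = 4 | 91.6% | 0.981 | 5.929% |
| Directionlets, n = 5 | 92.3% | 0.982 | 5.439% |
| Directionlets, n = 6 | 93.7% | 0.984 | 4.957% |
| Directionlets, n = 7 | 94.4% | 0.988 | 4.589% |
| Directionlets, n = 8 | 94.7% | 0.989 | 4.124% |
| Daugman [4] | 91.2% | 0.978 | 6.187% |
| Ma (wavelets) [16] | 89.7% | 0.946 | 7.055% |
| Ma (Gabor) [17], d1 | 90.9% | 0.974 | 5.526% |
| Ma (Gabor) [17], d2 | 90.7% | 0.972 | 5.941% |
| Ma (Gabor) [17], d3 | 90.7% | 0.967 | 5.698% |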

depending on the parameter n. Moreover, the length of the binary code is comparatively short.

To show the rotation-invariance introduced in the feature comparison algorithm (Section III-C), the iris images in the CASIA database are rotated by random angles in the range ±30°. Then, the entire process (including iris localization, feature extraction and comparison) is repeated and the results are listed in Table IV. Notice that all


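TABLE IV
NUMERIC RESULTS OF IRIS IDENTIFICATION AND VERIFICATION FOR ROTATED IMAGES.

| Method | CRR | A-z | EER |
|---|---|---|---|
| Directionlets, n = 2 | 78.5% | 0.962 | 8.506% |
| Directionlets, n = 3 | 88.3% | 0.972 | 6.660% |
| Directionlets, n = 4 | 90.9% | 0.977 | 5.954% |
| Directionlets, n = 5 | 91.7% | 0.981 | 5.459% |
| Directionlets, n = 6 | 93.1% | 0.984 | 5.025% |
| Directionlets, n = 7 | 93.9% | 0.986 | 4.723% |
| Directionlets, n = 8 | 94.3% | 0.987 | 4.321% |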

the numeric parameters, CRR, A-z and EER, are only slightly changed as compared to the non-rotated case shown

in Table III.

The novel method retains computational simplicity in all phases. This complexity is analyzed next in detail.

A. Computational Complexity

The computational complexity of all phases explained in Section III is noticeably affected by two main factors:

the maximization (2) of the integro-differential operator and the transform with the filters (3). The processes of

sampling wavelet coefficients and of generating and comparing binary codes have a negligible impact on the overall

complexity.

The maximization (2) used to localize the iris has already been optimized in the original implementation [4]

and reported to take around 25ms when applied to 640×480 images and processed on a 3GHz PC. Here, a non-

optimized C source code is executed on a 2.4GHz PC with the images at the same resolution and the computational

time amounts to 80ms per image.

The transform implemented in phase (b) is separable and, thus, has a reduced complexity. The number of operations per step of the transform is given by L1 · NM for LP filtering and L2 · NM for HP filtering, where L1 and L2 are the lengths of the 1D LP and HP filters, respectively (for the 9-7 filter-bank, L1 = 9 and L2 = 7). Since four directional filtering operations are applied to the third and fourth level of the multiscale decomposition, the total number of operations is determined by (8L1 + 8L2) · NM. However, since only the transform coefficients around the sampling locations are actually required, the total number of operations is reduced in practice and depends on the scaling parameter n. The execution time of non-optimized C source code on the same 2.4GHz processor varies from 50 to 70ms for different values of n.
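As a worked instance of the bound (8L1 + 8L2) · NM, the following sketch evaluates it for the 9-7 filter-bank. It assumes, for illustration only, that N × M corresponds to the 640×480 image resolution mentioned above; the function name and parameter names are hypothetical.

```python
def transform_operations(n_rows, n_cols, lp_len=9, hp_len=7, filterings_per_type=8):
    """Upper bound (8*L1 + 8*L2) * N * M on the number of filtering operations.

    lp_len, hp_len      -- lengths L1 and L2 of the 1D LP and HP filters
    filterings_per_type -- the factor 8 in the bound quoted in the text
    Assumption: n_rows * n_cols plays the role of N * M.
    """
    per_sample = filterings_per_type * lp_len + filterings_per_type * hp_len
    return per_sample * n_rows * n_cols


# 9-7 filter-bank on a 640x480 image: 128 operations per sample.
print(transform_operations(480, 640))  # 39321600
```

This is only the upper bound; as noted in the text, the count is lower in practice because only the coefficients around the sampling locations are computed.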

Finally, sampling the transform coefficients, generating the binary code by (6) and computing the weighted

Hamming distance by (9) require significantly smaller execution time (less than 1ms in total).

V. CONCLUSION

The novel iris recognition method proposed in this paper is based on directionlets, separable wavelet transforms

with directional vanishing moments. The method is shift-, size- and rotation-invariant to the iris images. In the


method, the annular iris region is first localized in the acquired image and, then, the region is transformed using

the smoothing and directional filtering along a set of directions. The resulting transform coefficients are sampled

so that the oriented (both radial and angular) features in irises are captured and encoded, which is essential for

efficient iris recognition. The generated code is binary and commensurable, and its length is fixed and independent of the image. The iris images are compared in the identification and verification modes and the novel method is shown to outperform some other iris recognition methods, while providing even shorter iris codes. At the same time, the computational complexity of the method remains low in all phases.

ACKNOWLEDGMENT

Portions of the research in this paper use the CASIA-IrisV3 database collected by the Chinese Academy of

Sciences, Institute of Automation (CASIA) [6]. The author thanks this group for providing access to the

database.

REFERENCES

[1] A. K. Jain, R. Bolle, and S. Pankanti, Eds., Biometrics: personal identification in networked society. Norwell, MA: Kluwer, 1999.

[2] A. K. Jain, A. Ross, and S. Pankanti, “Biometrics: a tool for information security,” IEEE Trans. Inform. Forensics and Security, vol. 1,

no. 2, pp. 125–143, Jun. 2006.

[3] L. Flom and A. Safir, “Iris recognition system,” U.S. Patent 4 641 349, Feb. 3, 1987.

[4] J. Daugman, “High confidence visual recognition of persons by a test of statistical independence,” IEEE Trans. Pattern Anal. Machine

Intell., vol. 15, no. 11, pp. 1148–1161, Nov. 1993.

[5] R. P. Wildes, “Iris recognition: an emerging biometric technology,” Proc. IEEE, vol. 85, no. 9, pp. 1348–1363, Sep. 1997.

[6] “CASIA-IrisV3-Lamp,” Chinese Academy of Sciences Institute of Automation (CASIA), Beijing, China. [Online]. Available:

http://www.cbsr.ia.ac.cn/IrisDatabase

[7] N. A. Schmid, M. V. Ketkar, H. Singh, and B. Cukic, “Performance analysis of iris-based identification system at the matching score

level,” IEEE Trans. Inform. Forensics and Security, vol. 1, no. 2, pp. 154–168, Jun. 2006.

[8] J. Daugman, “Statistical richness of visual phase information: update on recognizing persons by iris patterns,” Int. Journal on Computer

Vision, vol. 45, no. 1, pp. 25–38, 2001.

[9] ——, “Probing the uniqueness and randomness of IrisCodes: results from 200 billion iris pair comparisons,” Proc. IEEE, vol. 94, no. 11,

pp. 1927–1935, Nov. 2006.

[10] P. V. C. Hough, “Method and means for recognizing complex patterns,” U.S. Patent 3 069 654, Dec. 18, 1962.

[11] J. Illingworth and J. Kittler, “A survey of the Hough transform,” Computer Vision, Graphics and Image Processing, vol. 44, no. 1, pp.

87–116, Oct. 1988.

[12] D. H. Ballard and C. M. Brown, Computer Vision. Englewood Cliffs, NJ: Prentice-Hall, 1982.

[13] W. W. Boles and B. Boashash, “A human identification technique using images of the iris and wavelet transform,” IEEE Trans. Signal

Processing, vol. 46, no. 4, pp. 1185–1188, Apr. 1998.

[14] S. Mallat, “Zero-crossings of a wavelet transform,” IEEE Trans. Inform. Theory, vol. 37, no. 4, pp. 1019–1033, Jul. 1991.

[15] C. Sanchez-Avila, R. Sanchez-Reillo, and D. de Martin-Roche, “Iris-based biometric recognition using dyadic wavelet transform,” IEEE

Aerospace and Electronic Systems Magazine, vol. 17, no. 10, pp. 3–6, Oct. 2002.

[16] L. Ma, T. Tan, Y. Wang, and D. Zhang, “Efficient iris recognition by characterizing key local variations,” IEEE Trans. Image Processing,

vol. 13, no. 6, pp. 739–750, Jun. 2004.

[17] ——, “Personal identification based on iris texture analysis,” IEEE Trans. Pattern Anal. Machine Intell., vol. 25, no. 12, pp. 1519–1533,

Dec. 2003.


[18] C.-H. Park, J.-J. Lee, M. J. T. Smith, and K.-H. Park, “Iris-based personal authentication using a normalized directional energy feature,”

in Proc. 4th Int. Conf. Audio- and Video-Based Biometric Person Authentication (AVBPA 2003), Guildford, UK, Jun. 2003, pp. 224–232.

[19] C.-H. Park, J.-J. Lee, S.-K. Oh, Y.-C. Song, D.-H. Choi, and K.-H. Park, “Iris feature extraction and matching based on multiscale and

directional image representation,” in Scale-Space 2003, Lecture Notes in Computer Science 2695, Germany, 2003, pp. 576–583.

[20] D. Schonberg and D. Kirovski, “EyeCerts,” IEEE Trans. Inform. Forensics and Security, vol. 1, no. 2, pp. 144–153, Jun. 2006.

[21] K. Miyazawa, K. Ito, T. Aoki, K. Kobayashi, and A. Katsumata, “An iris recognition system using phase-based image matching,” in Proc.

IEEE International Conference on Image Proc. (ICIP 2006), Atlanta, GA, Oct. 2006, pp. 325–328.

[22] K. Miyazawa, K. Ito, T. Aoki, K. Kobayashi, and H. Nakajima, “An effective approach for iris recognition using phase-based image

matching,” IEEE Trans. Pattern Anal. Machine Intell., vol. 30, no. 10, pp. 1741–1756, Oct. 2008.

[23] J.-C. Lee, P. S. Huang, C.-S. Chiang, T.-M. Tu, and C.-P. Chang, “An empirical mode decomposition approach for iris recognition,” in

Proc. IEEE International Conference on Image Proc. (ICIP 2006), Atlanta, GA, Oct. 2006, pp. 289–292.

[24] D. M. Monro, S. Rakshit, and D. Zhang, “DCT-based iris recognition,” IEEE Trans. Pattern Anal. Machine Intell., vol. 29, no. 4, pp.

586–595, Apr. 2007.

[25] J. Thornton, M. Savvides, and B. V. K. V. Kumar, “A Bayesian approach to deformed pattern matching of iris images,” IEEE Trans.

Pattern Anal. Machine Intell., vol. 29, no. 4, pp. 596–606, Apr. 2007.

[26] J. Daugman and C. Downing, “Effect of severe image compression on iris recognition performance,” IEEE Trans. Inform. Forensics and

Security, vol. 3, no. 1, pp. 52–61, Mar. 2008.

[27] S. Rakshit and D. M. Monro, “An evaluation of image sampling and compression for human iris recognition,” IEEE Trans. Inform.

Forensics and Security, vol. 2, no. 3, pp. 605–612, Sep. 2007.

[28] C. Belcher and Y. Du, “A selective feature information approach for iris image-quality measure,” IEEE Trans. Inform. Forensics and

Security, vol. 3, no. 3, pp. 572–577, Sep. 2008.

[29] Z. Sun and T. Tan, “Ordinal measures for iris recognition,” IEEE Trans. Pattern Anal. Machine Intell., to appear in 2009.

[30] K. R. Park, “New automated iris image acquisition method,” Applied Optics, vol. 44, no. 5, pp. 713–734, Feb. 2005.

[31] L. R. Kennell, R. W. Ives, and R. M. Gaunt, “Binary morphology and local statistics applied to iris segmentation for recognition,” in Proc.

IEEE International Conference on Image Proc. (ICIP 2006), Atlanta, GA, Oct. 2006, pp. 293–296.

[32] D. Zhang, D. M. Monro, and S. Rakshit, “Eyelash removal method for human iris recognition,” in Proc. IEEE International Conference

on Image Proc. (ICIP 2006), Atlanta, GA, Oct. 2006, pp. 285–288.

[33] Z. He, T. Tan, Z. Sun, and X. Qiu, “Towards accurate and fast iris segmentation for iris biometrics,” IEEE Trans. Pattern Anal. Machine

Intell., to appear in 2009.

[34] V. Velisavljević, B. Beferull-Lozano, M. Vetterli, and P. L. Dragotti, “Directionlets: anisotropic multidirectional representation with separable

filtering,” IEEE Trans. Image Processing, vol. 15, no. 7, pp. 1916–1933, Jul. 2006.

[35] V. Velisavljević, B. Beferull-Lozano, and M. Vetterli, “Space-frequency quantization for image compression with directionlets,” IEEE

Trans. Image Processing, vol. 16, no. 7, pp. 1761–1773, Jul. 2007.

[36] M. Antonini, M. Barlaud, P. Mathieu, and I. Daubechies, “Image coding using wavelet transform,” IEEE Trans. Image Processing, vol. 1,

no. 2, pp. 205–220, Apr. 1992.

[37] E. Viscito and J. P. Allebach, “The analysis and design of multidimensional FIR perfect reconstruction filter banks for arbitrary sampling

lattices,” IEEE Trans. Circuits Syst., pp. 29–41, Jan. 1991.

[38] J. Davis and M. Goadrich, “The relationship between precision-recall and ROC curves,” in Proc. of the 23rd ACM Int. Conf. on Machine

Learning, vol. 148, Pittsburgh, PA, 2006, pp. 233–240.

DRAFT April 15, 2009

You might also like

- Efficient IRIS Recognition Through Improvement of Feature Extraction and subset SelectionDocument10 pagesEfficient IRIS Recognition Through Improvement of Feature Extraction and subset SelectionJasir CpNo ratings yet

- Enhance Iris Segmentation Method For Person Recognition Based On Image Processing TechniquesDocument10 pagesEnhance Iris Segmentation Method For Person Recognition Based On Image Processing TechniquesTELKOMNIKANo ratings yet

- A Hybrid Method For Iris SegmentationDocument5 pagesA Hybrid Method For Iris SegmentationJournal of ComputingNo ratings yet

- Iris Recognition System: A Survey: Pallavi Tiwari, Mr. Pratyush TripathiDocument4 pagesIris Recognition System: A Survey: Pallavi Tiwari, Mr. Pratyush Tripathianil kasotNo ratings yet

- International Journal of Computational Engineering Research (IJCER)Document8 pagesInternational Journal of Computational Engineering Research (IJCER)International Journal of computational Engineering research (IJCER)No ratings yet

- Fusion of Gabor Filter and Steerable Pyramid To Improve Iris Recognition SystemDocument9 pagesFusion of Gabor Filter and Steerable Pyramid To Improve Iris Recognition SystemIAES IJAINo ratings yet

- A Robust Iris Segmentation Scheme Based On Improved U-NetDocument8 pagesA Robust Iris Segmentation Scheme Based On Improved U-NetpedroNo ratings yet

- 1st Paper On Objective - 1Document13 pages1st Paper On Objective - 1DIVYA C DNo ratings yet

- Iris Recognition: Introduction & Basic MethodologyDocument7 pagesIris Recognition: Introduction & Basic MethodologyAnonymous 1yCAFMNo ratings yet

- Iris Based Authentication ReportDocument5 pagesIris Based Authentication ReportDragan PetkanovNo ratings yet

- Localización Confiable Del Iris Utilizando La Transformada de Hough, La Bisección de Histograma y La ExcentricidadDocument12 pagesLocalización Confiable Del Iris Utilizando La Transformada de Hough, La Bisección de Histograma y La ExcentricidadkimalikrNo ratings yet

- Human Iris Recognition Techniques Using Wavelet TransformDocument8 pagesHuman Iris Recognition Techniques Using Wavelet Transformkeerthana_ic14No ratings yet

- Iris Based Authentication System: R.Shanthi, B.DineshDocument6 pagesIris Based Authentication System: R.Shanthi, B.DineshIOSRJEN : hard copy, certificates, Call for Papers 2013, publishing of journalNo ratings yet

- Iris Scanning ReportDocument17 pagesIris Scanning ReporthunnbajajNo ratings yet

- Ijet V3i4p17Document5 pagesIjet V3i4p17International Journal of Engineering and TechniquesNo ratings yet

- Literature Review: Iris Segmentation Approaches For Iris Recognition SystemsDocument4 pagesLiterature Review: Iris Segmentation Approaches For Iris Recognition SystemsInternational Journal of computational Engineering research (IJCER)No ratings yet

- A Study of Various Soft Computing Techniques For Iris RecognitionDocument5 pagesA Study of Various Soft Computing Techniques For Iris RecognitionInternational Organization of Scientific Research (IOSR)No ratings yet

- Implementation of Reliable Open SourceDocument6 pagesImplementation of Reliable Open Sourcenilesh_092No ratings yet

- New IC PaperDocument150 pagesNew IC PaperRaja Ramesh DNo ratings yet

- Performance of Hasty and Consistent Multi Spectral Iris Segmentation Using Deep LearningDocument5 pagesPerformance of Hasty and Consistent Multi Spectral Iris Segmentation Using Deep LearningEditor IJTSRDNo ratings yet

- Efficient Iris Recognition by Characterizing Key Local VariationsDocument12 pagesEfficient Iris Recognition by Characterizing Key Local VariationsVishnu P RameshNo ratings yet

- Iris Recognition Phase Base AokiDocument16 pagesIris Recognition Phase Base AokiAlex WongNo ratings yet

- ijaerv13n10_194Document8 pagesijaerv13n10_194taehyung kimNo ratings yet

- Mpginmc 1079Document7 pagesMpginmc 1079Duong DangNo ratings yet

- An Efficient Technique For Iris Recocognition Based On Eni FeaturesDocument6 pagesAn Efficient Technique For Iris Recocognition Based On Eni FeaturesKampa LavanyaNo ratings yet

- An Approach For Secure Identification and Authentication For Biometrics Using IrisDocument4 pagesAn Approach For Secure Identification and Authentication For Biometrics Using IrisJohnny BlazeNo ratings yet

- Iris Recognition System With Frequency Domain Features Optimized With PCA and SVM ClassifierDocument9 pagesIris Recognition System With Frequency Domain Features Optimized With PCA and SVM ClassifierElprogrammadorNo ratings yet

- Periocular-Assisted Multi-Feature Collaboration For Dynamic Iris RecognitionDocument14 pagesPeriocular-Assisted Multi-Feature Collaboration For Dynamic Iris RecognitionsheelaNo ratings yet

- Iris Recognition: Existing Methods and Open Issues: NtroductionDocument6 pagesIris Recognition: Existing Methods and Open Issues: NtroductionDhimas Arief DharmawanNo ratings yet

- An Efficient Iris Segmentation Approach To Develop An Iris Recognition SystemDocument6 pagesAn Efficient Iris Segmentation Approach To Develop An Iris Recognition Systemhhakim32No ratings yet

- Optik: Hafiz Tayyab Mustafa, Jie Yang, Hamza Mustafa, Masoumeh ZareapoorDocument13 pagesOptik: Hafiz Tayyab Mustafa, Jie Yang, Hamza Mustafa, Masoumeh Zareapoorsamaneh keshavarziNo ratings yet

- Toward Accurate and Fast Iris Segmentation For Iris BiometricsDocument15 pagesToward Accurate and Fast Iris Segmentation For Iris BiometricsNithiya SNo ratings yet

- A Review On How Iris Recognition WorksDocument11 pagesA Review On How Iris Recognition WorksSathya KalaivananNo ratings yet

- 2010 - Beining Et Al - China - Finger-Vein Authentication Based On Wide Line Detector and Pattern NormalizationDocument4 pages2010 - Beining Et Al - China - Finger-Vein Authentication Based On Wide Line Detector and Pattern Normalizationsamuel limongNo ratings yet

- Fcrar2004 IrisDocument7 pagesFcrar2004 IrisPrashant PhanseNo ratings yet

- An Effective Segmentation Technique For Noisy Iris ImagesDocument8 pagesAn Effective Segmentation Technique For Noisy Iris ImagesInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- An Atm With EyeDocument24 pagesAn Atm With EyeunweltedNo ratings yet

- Eye Recognition with Mixed Convolutional and Residual NetworkDocument1 pageEye Recognition with Mixed Convolutional and Residual NetworkKathir VelNo ratings yet

- Iris Recognition Using MATLABDocument9 pagesIris Recognition Using MATLABmanojmuthyalaNo ratings yet

- Iris RecognitionDocument14 pagesIris RecognitionAnaNo ratings yet

- Biometric Identification Using Matching Algorithm MethodDocument5 pagesBiometric Identification Using Matching Algorithm MethodInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- 73 1470546684 - 07-08-2016 PDFDocument3 pages73 1470546684 - 07-08-2016 PDFEditor IJRITCCNo ratings yet

- An Embedded Real-Time Finger-Vein Recognition SystemDocument6 pagesAn Embedded Real-Time Finger-Vein Recognition SystemArun GopinathNo ratings yet

- Deep-PRWIS: Periocular Recognition Without The Iris and Sclera Using Deep Learning FrameworksDocument9 pagesDeep-PRWIS: Periocular Recognition Without The Iris and Sclera Using Deep Learning FrameworksragouNo ratings yet

- 2nd Objective PaperDocument5 pages2nd Objective PaperDIVYA C DNo ratings yet

- A Literature Review On Iris Segmentation Techniques For Iris Recognition SystemsDocument5 pagesA Literature Review On Iris Segmentation Techniques For Iris Recognition SystemsInternational Organization of Scientific Research (IOSR)No ratings yet

- Pupil detection and feature extraction algorithm for efficient Iris recognitionDocument12 pagesPupil detection and feature extraction algorithm for efficient Iris recognitionaliov mezherNo ratings yet

- Iris Pattern Recognition Using Morlet Wavelet MethodDocument5 pagesIris Pattern Recognition Using Morlet Wavelet MethodViji VasanNo ratings yet

- Change Detection of Pulmonary Embolism Using Isomeric Cluster and Computer VisionDocument12 pagesChange Detection of Pulmonary Embolism Using Isomeric Cluster and Computer VisionIAES IJAINo ratings yet

- IJIVP Vol 12 Iss 2 Paper 8 2610 2614Document5 pagesIJIVP Vol 12 Iss 2 Paper 8 2610 2614Nirwana SeptianiNo ratings yet

- Facial Recognition Using Multi Edge Detection and Distance MeasureDocument13 pagesFacial Recognition Using Multi Edge Detection and Distance MeasureIAES IJAINo ratings yet

- Iris Recognition Using Curvelet Transform Based On Principal Component Analysis and Linear Discriminant AnalysisDocument7 pagesIris Recognition Using Curvelet Transform Based On Principal Component Analysis and Linear Discriminant AnalysisTushar MukherjeeNo ratings yet

- 20th European Signal Processing Conference (EUSIPCO 2012) Bucharest, Romania, August 27 - 31, 2012Document5 pages20th European Signal Processing Conference (EUSIPCO 2012) Bucharest, Romania, August 27 - 31, 2012Jothibasu MarappanNo ratings yet

- Using 2D Haar Wavelet Transform For Iris Feature Extraction: 2010 Asia-Pacific Conference On Information TheoryDocument5 pagesUsing 2D Haar Wavelet Transform For Iris Feature Extraction: 2010 Asia-Pacific Conference On Information TheoryAswiniSamantrayNo ratings yet

- Feature Extraction of An Iris For PatternRecognitionDocument7 pagesFeature Extraction of An Iris For PatternRecognitionJournal of Computer Science and EngineeringNo ratings yet

- Deep Learning Based Eye Gaze Tracking For Automotive Applications An Auto-Keras ApproachDocument4 pagesDeep Learning Based Eye Gaze Tracking For Automotive Applications An Auto-Keras ApproachVibhor ChaubeyNo ratings yet

- S R SDocument7 pagesS R Sfaizan_keNo ratings yet

- 3Document14 pages3amk2009No ratings yet

- Review On Iris Recognition Research Directions - A Brief StudyDocument11 pagesReview On Iris Recognition Research Directions - A Brief StudyIJRASETPublicationsNo ratings yet

- Lec 2Document80 pagesLec 2Kearrake KuranNo ratings yet

- Specman e BasicsDocument27 pagesSpecman e BasicsReetika BishnoiNo ratings yet

- UVM Interview QuestionsDocument12 pagesUVM Interview QuestionsReetika BishnoiNo ratings yet

- Xapp884 PRBS GeneratorChecker PDFDocument8 pagesXapp884 PRBS GeneratorChecker PDFReetika BishnoiNo ratings yet

- Specman e LRM LatestDocument1,658 pagesSpecman e LRM LatestReetika BishnoiNo ratings yet

- Specman e LRM LatestDocument1,658 pagesSpecman e LRM LatestReetika BishnoiNo ratings yet

- E LanguageDocument4 pagesE LanguageReetika BishnoiNo ratings yet

- System Verilog ClassesDocument11 pagesSystem Verilog ClassesReetika BishnoiNo ratings yet

- CiscoDocument531 pagesCiscoReetika BishnoiNo ratings yet

- C++ TutorialDocument231 pagesC++ TutorialAnonymous 61AC6bQ100% (3)

- P 1Document3 pagesP 1Reetika BishnoiNo ratings yet

- Interfacing With MicrocontrollerDocument44 pagesInterfacing With MicrocontrollerPradeep PoojaryNo ratings yet

- Iris Scan (Iris Recognization)Document10 pagesIris Scan (Iris Recognization)sayyanNo ratings yet

- Keya PandeyDocument15 pagesKeya Pandeykeya pandeyNo ratings yet

- FT Goblin Full SizeDocument7 pagesFT Goblin Full SizeDeakon Frost100% (1)

- Lorilie Muring ResumeDocument1 pageLorilie Muring ResumeEzekiel Jake Del MundoNo ratings yet

- Model:: Powered by CUMMINSDocument4 pagesModel:: Powered by CUMMINSСергейNo ratings yet

- AnkitDocument24 pagesAnkitAnkit MalhotraNo ratings yet

- Week 3 SEED in Role ActivityDocument2 pagesWeek 3 SEED in Role ActivityPrince DenhaagNo ratings yet

- People vs. Ulip, G.R. No. L-3455Document1 pagePeople vs. Ulip, G.R. No. L-3455Grace GomezNo ratings yet

- Circular 09/2014 (ISM) : SubjectDocument7 pagesCircular 09/2014 (ISM) : SubjectDenise AhrendNo ratings yet

- ABBBADocument151 pagesABBBAJeremy MaraveNo ratings yet

- Compilation of CasesDocument121 pagesCompilation of CasesMabelle ArellanoNo ratings yet

- 4.5.1 Forestry LawsDocument31 pages4.5.1 Forestry LawsMark OrtolaNo ratings yet

- MSBI Installation GuideDocument25 pagesMSBI Installation GuideAmit SharmaNo ratings yet

- 158 Oesmer Vs Paraisa DevDocument1 page158 Oesmer Vs Paraisa DevRobelle Rizon100% (1)

- An4856 Stevalisa172v2 2 KW Fully Digital Ac DC Power Supply Dsmps Evaluation Board StmicroelectronicsDocument74 pagesAn4856 Stevalisa172v2 2 KW Fully Digital Ac DC Power Supply Dsmps Evaluation Board StmicroelectronicsStefano SalaNo ratings yet

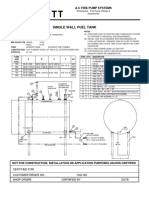

- Single Wall Fuel Tank: FP 2.7 A-C Fire Pump SystemsDocument1 pageSingle Wall Fuel Tank: FP 2.7 A-C Fire Pump Systemsricardo cardosoNo ratings yet

- Sav 5446Document21 pagesSav 5446Michael100% (2)

- Supplier Quality Requirement Form (SSQRF) : Inspection NotificationDocument1 pageSupplier Quality Requirement Form (SSQRF) : Inspection Notificationsonnu151No ratings yet

- Ebook The Managers Guide To Effective Feedback by ImpraiseDocument30 pagesEbook The Managers Guide To Effective Feedback by ImpraiseDebarkaChakrabortyNo ratings yet

- C.C++ - Assignment - Problem ListDocument7 pagesC.C++ - Assignment - Problem ListKaushik ChauhanNo ratings yet

- SAP ORC Opportunities PDFDocument1 pageSAP ORC Opportunities PDFdevil_3565No ratings yet

- Tutorial 5 HExDocument16 pagesTutorial 5 HExishita.brahmbhattNo ratings yet

- CadLink Flyer 369044 937 Rev 00Document2 pagesCadLink Flyer 369044 937 Rev 00ShanaHNo ratings yet

- Applicants at Huye Campus SiteDocument4 pagesApplicants at Huye Campus SiteHIRWA Cyuzuzo CedricNo ratings yet

- Software EngineeringDocument3 pagesSoftware EngineeringImtiyaz BashaNo ratings yet

- Aptio ™ Text Setup Environment (TSE) User ManualDocument42 pagesAptio ™ Text Setup Environment (TSE) User Manualdhirender karkiNo ratings yet

- How To Make Money in The Stock MarketDocument40 pagesHow To Make Money in The Stock Markettcb66050% (2)

- Death Without A SuccessorDocument2 pagesDeath Without A Successorilmanman16No ratings yet

- APM Terminals Safety Policy SummaryDocument1 pageAPM Terminals Safety Policy SummaryVaviNo ratings yet

- 7th Kannada Science 01Document160 pages7th Kannada Science 01Edit O Pics StatusNo ratings yet

- Terms and Condition PDFDocument2 pagesTerms and Condition PDFSeanmarie CabralesNo ratings yet