VALIDATION, VERIFICATION, AND TESTING PLAN CHECKLIST

GUIDELINES FOR THE VALIDATION, VERIFICATION, AND TESTING PLAN CHECKLIST:

This checklist is provided as part of the evaluation process for the Validation, Verification, and Testing Plan. The checklist assists designated reviewers in determining whether specifications meet criteria established in HUD’s System Development Methodology (SDM). The objective of the evaluation is to determine whether the document complies with HUD development methodology requirements.

Attached to this document is the DOCUMENT REVIEW CHECKLIST. Its purpose is to assure that documents achieve the highest standards relative to format, consistency, completeness, quality, and presentation.

Submissions must include the following three documents, and must be presented in the following order: (First) Document Review Checklist, (Second) the Validation, Verification, and Testing Plan Checklist, and (Third) the Validation, Verification, and Testing Plan.

Document authors are required to complete the two columns indicated as “AUTHOR X-REFERENCE Page #/Section #” and “AUTHOR COMMENTS” before the submission. Do NOT complete the last two columns marked as “COMPLY” and “REVIEWER COMMENTS” since these are for the designated reviewers.

Document reviewers will consult the HUD SDM and the SDM templates when reviewing the documents and completing the reviewer’s portions of this checklist.

AUTHOR REFERENCE (Project Identifier):

Designated Reviewers: Start Date: Completed Date: Area Reviewed: Comments:

1:

2:

3:

4:

Summary Reviewer:

12 April 2002 Peer Review Page i

The Validation, Verification, and Testing Plan provides guidance for management and technical efforts throughout the test period. It establishes a comprehensive plan to communicate the nature and extent of testing necessary for a thorough evaluation of the system. This plan is used to coordinate the orderly scheduling of events by providing equipment specifications and organizational requirements, the test methodology to be employed, a list of the test materials to be delivered, and a schedule for user (tester) orientation and participation. Finally, it provides a written record of the required inputs, execution instructions, and expected results of the system test.

TABLE OF CONTENTS

1.0 General Information
1.1 Purpose
1.2 Scope
1.3 System Overview
1.4 Project References
1.5 Acronyms and Abbreviations
1.6 Points of Contact
1.6.1 Information
1.6.2 Coordination

2.0 Test Evaluation
2.1 Requirements Traceability Matrix
2.2 Test Evaluation Criteria
2.3 User System Acceptance Criteria

3.0 Testing Schedule
3.1 Overall Test Schedule
3.2 Security
*3.x [Testing Location Identifier]
3.x.1 Milestone Chart
3.x.2 Equipment Requirements
3.x.3 Software Requirements
3.x.4 Personnel Requirements
3.x.5 Deliverable Materials
3.x.6 Testing Tools
3.x.7 Site Supplied Materials

4.0 Testing Characteristics
4.1 Testing Conditions
4.2 Extent of Testing
4.3 Data Recording
4.4 Testing Constraints
4.5 Test Progression
4.6 Test Evaluation
4.6.1 Test Data Criteria
4.6.1.1 Tolerance
4.6.1.2 System Breaks
4.6.2 Test Data Reduction

5.0 Test Description
*5.x [Test Identifier]
5.x.1 System Functions
5.x.2 Test/Function Relationships
5.x.3 Means of Control
5.x.4 Test Data
5.x.4.1 Input Data
5.x.4.2 Input Commands
5.x.4.3 Output Data
5.x.4.4 Output Notification
5.x.5 Test Procedures
5.x.5.1 Procedures
5.x.5.2 Setup
5.x.5.3 Initialization
5.x.5.4 Preparation
5.x.5.5 Termination

* Each testing location and test identifier should be under a separate header. Generate new sections and subsections as necessary for each testing location from 3.3 through 3.x, and for each test from 5.1 through 5.x.

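To be completed by Author: AUTHOR X-REFERENCE (Page #/Section #), AUTHOR COMMENTS
To be completed by Reviewer: COMPLY (Y/N), REVIEWER COMMENTS

REQUIREMENT | AUTHOR X-REFERENCE (Page #/Section #) | AUTHOR COMMENTS | COMPLY (Y/N) | REVIEWER COMMENTS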

1.0 GENERAL INFORMATION

1.1 Purpose: Describe the purpose of the Validation, Verification, and Testing Plan.

1.2 Scope: Describe the scope of the Validation, Verification, and Testing Plan as it relates to the project.

1.3 System Overview: Provide a brief system overview description as a point of reference for the remainder of the document, including responsible organization, system name or title, system code, system category, operational status, and system environment or special conditions.

1.4 Project References: Provide a list of the references that were used in preparation of this document.

1.5 Acronyms and Abbreviations: Provide a list of the acronyms and abbreviations used in this document and the meaning of each.

1.6 Points of Contact:

1.6.1 Information: Provide a list of the points of organizational contact (POCs) that may be needed by the document user for informational and troubleshooting purposes.

1.6.2 Coordination: Provide a list of organizations that require coordination between the project and its specific support function. Include a schedule for coordination activities.

2.0 TEST EVALUATION

2.1 Requirements Traceability Matrix: Prepare a functions/test matrix that lists all application functions on one axis and cross-reference them to all tests included in the test plan.

2.2 Test Evaluation Criteria: Decide the specific criteria that each segment of the system/subsystem must meet.

2.3 User System Acceptance Criteria: Describe the minimum function and performance criteria that must be met for the system to be accepted as “fit for use” by the user or sponsoring organization.
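
As an illustration of the functions/test matrix requested in item 2.1, the following is a minimal sketch and not part of the HUD SDM. The function names, test identifiers, and helper names (`build_traceability_matrix`, `untested_functions`) are hypothetical. It cross-references each application function against each test and flags functions that no test covers.

```python
from typing import Dict, List, Set


def build_traceability_matrix(
    functions: List[str], tests: Dict[str, Set[str]]
) -> Dict[str, Dict[str, bool]]:
    """Return matrix[function][test_id] = True when the test exercises the function."""
    return {
        fn: {test_id: fn in covered for test_id, covered in tests.items()}
        for fn in functions
    }


def untested_functions(matrix: Dict[str, Dict[str, bool]]) -> List[str]:
    """List the functions that are not cross-referenced to any test."""
    return [fn for fn, row in matrix.items() if not any(row.values())]


# Hypothetical application functions and the functions each test exercises.
functions = ["login", "submit_form", "print_report"]
tests = {
    "5.1": {"login"},
    "5.2": {"login", "submit_form"},
}

matrix = build_traceability_matrix(functions, tests)
gaps = untested_functions(matrix)
print("Functions with no test:", gaps)
```

A reviewer could use the list of gaps as a quick completeness check: an empty list suggests every listed function is cross-referenced to at least one test.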

3.0 TESTING SCHEDULE

3.1 Overall Test Schedule: Prepare a testing schedule to reflect the unit, integration, and system acceptance tests and the time duration of each. This schedule should reflect the personnel involved in the test effort and the site location.

3.2 Security: Prepare a list of requirements necessary to ensure the integrity of the testing procedures, data, and site. Any special security considerations (e.g., passwords, classifications, security or monitoring software, or computer room badges) should be described in detail.

3.x [Testing Location Identifier]: (Each testing location in this section should be under a separate header. Generate new subsections as necessary for each location from 3.3 through 3.x.) Identify the location at which the testing will be conducted, and the organizations participating in the test. List the tests to be performed at this location.

3.x.1 Milestone Chart: Provide a chart to depict the activities and events, as well as give consideration to all tests scheduled for this location.

3.x.2 Equipment Requirements: Provide a chart or listing of the period of usage and quantity required of each item of equipment employed throughout the test period in which the system is to be tested.

3.x.3 Software Requirements: Identify any software required in support of the testing when it is not a part of the system being tested.

3.x.4 Personnel Requirements: Provide a listing of the personnel necessary to perform the test.

3.x.5 Deliverable Materials: Itemize all materials that will be delivered as part of the system test, to include the quantity and full identification.

3.x.6 Testing Tools: Identify the testing tools to be used during the preparation for and execution of the test.

3.x.7 Site Supplied Materials: Describe any materials required to perform the test that need to be supplied at the test site.

4.0 TESTING CHARACTERISTICS

4.1 Testing Conditions: Indicate whether the testing will use the normal input and database or whether some special test input is to be used.

4.2 Extent of Testing: Indicate the extent of the testing to be employed. If limited testing is to be employed, present test requirements either as a percentage of some well-defined total quantity or as a number of samples of discrete operating conditions or values. Indicate the rationale for adopting limited testing.

4.3 Data Recording: Indicate data recording requirements for the testing process, including data not normally recorded during system operation.

4.4 Testing Constraints: Indicate the anticipated limitations imposed on the testing because of system or test conditions (timing, interfaces, equipment, personnel).

4.5 Test Progression: Include an explanation concerning the manner in which progression is made from one test to another so that the cycle or activity for each test is completely performed.

4.6 Test Evaluation:

4.6.1 Test Data Criteria: Describe the rules by which test results will be evaluated.

4.6.1.1 Tolerance: Discuss the range over which a data output value or a system performance parameter can vary and still be considered acceptable.

4.6.1.2 System Breaks: Specify the maximum number of interrupts, halts, or other system breaks that may occur because of non-test conditions.

4.6.2 Test Data Reduction: Describe the technique to be used for manipulation of the raw test data into a form suitable for evaluation, if applicable.
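
The tolerance and system-break criteria in items 4.6.1.1 and 4.6.1.2 can be written down as an explicit pass/fail rule. The sketch below is illustrative only. The `Criteria` class, its field names, and the sample values are assumptions, and tolerance is treated as an absolute deviation, which a real plan might define differently (for example, as a percentage).

```python
from dataclasses import dataclass


@dataclass
class Criteria:
    expected: float    # expected output value or performance parameter
    tolerance: float   # allowed absolute deviation from expected (assumed form)
    max_breaks: int    # maximum system breaks allowed from non-test conditions


def evaluate(observed: float, breaks: int, criteria: Criteria) -> bool:
    """Pass only if the value is within tolerance and breaks stay within the limit."""
    within_tolerance = abs(observed - criteria.expected) <= criteria.tolerance
    return within_tolerance and breaks <= criteria.max_breaks


# Hypothetical criterion: response time of 2.0 s, +/- 0.25 s, at most 2 breaks.
response_time = Criteria(expected=2.0, tolerance=0.25, max_breaks=2)

print(evaluate(2.1, 1, response_time))  # within tolerance, breaks within limit
print(evaluate(2.5, 0, response_time))  # value outside tolerance
print(evaluate(2.0, 3, response_time))  # too many system breaks
```

Stating the rule this way makes it straightforward for a reviewer to confirm that each criterion in the plan has a numeric range and a break limit attached.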

5.0 TEST DESCRIPTION

5.x [Test Identifier]: (Each test in this section should be under a separate header. Generate new subsections as necessary for each test from 5.1 through 5.x.) Describe the test to be performed.

5.x.1 System Functions: Provide a detailed list of the system and communications functions to be tested.

5.x.2 Test/Function Relationships: Provide a list of the tests that constitute the overall test activity. Include a test/function matrix summarizing the overall allocation of the system tests to the functions.

5.x.3 Means of Control: Indicate whether the test is to be controlled by manual, semiautomatic, or automatic means.

5.x.4 Test Data: Identify any security considerations in each of the following subsections.

5.x.4.1 Input Data: Describe the manner in which input data are controlled in order to test the system with a minimum number of data types and values, exercise the system with a range of bona fide data types and values that test for overload, saturation, and other "worst case" effects, and exercise the system with bogus data and values that test for rejection of irregular input.

5.x.4.2 Input Commands: Describe steps used to control initialization of the test; to halt or interrupt the test; to repeat unsuccessful or incomplete tests; to alternate modes of operation as required by the test; and to terminate the test.

5.x.4.3 Output Data: Identify the media and location of the data produced by the tests.

5.x.4.4 Output Notification: Describe the manner in which output notifications (messages output by the system concerning status or limitations on internal performance) are controlled.
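
The three kinds of input data named in item 5.x.4.1 (a minimum set, bona fide values that probe "worst case" effects, and bogus values that should be rejected) can be sketched for a single numeric field. This is a hedged example, not an SDM requirement. The field range, the helper names `input_data_sets` and `accepts`, and the use of boundary values as a stand-in for worst-case data are all assumptions.

```python
def input_data_sets(low: int, high: int) -> dict:
    """Build three illustrative input sets for an integer field valid in [low, high]."""
    return {
        # Minimum set: one representative valid value.
        "minimum": [(low + high) // 2],
        # Bona fide values at and next to the limits (assumed proxy for worst case).
        "worst_case": [low, low + 1, high - 1, high],
        # Bogus values the system is expected to reject.
        "bogus": [low - 1, high + 1, None, "not-a-number"],
    }


def accepts(value, low: int, high: int) -> bool:
    """Hypothetical validator standing in for the system under test."""
    is_int = isinstance(value, int) and not isinstance(value, bool)
    return is_int and low <= value <= high


sets = input_data_sets(1, 100)
valid_inputs = sets["minimum"] + sets["worst_case"]

print(all(accepts(v, 1, 100) for v in valid_inputs))   # valid data accepted
print(any(accepts(v, 1, 100) for v in sets["bogus"]))  # bogus data accepted?
```

In a real plan the bogus set would be driven by the system's documented input rules rather than by a generic list like the one above.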

5.x.5 Test Procedures:

5.x.5.1 Procedures: Describe the step-by-step procedures to perform each test.

5.x.5.2 Setup: Describe or refer to standard operating procedures that describe the activities associated with setup of the computer facilities to conduct the test, including all routine machine activities.

5.x.5.3 Initialization: Itemize, in test sequence order, the activities associated with establishing the testing conditions, starting with the equipment in the setup condition.

5.x.5.4 Preparation: Describe, in sequence, any special operations.

5.x.5.5 Termination: Itemize, in test sequence order, the activities associated with termination of the test.

12 April 2002 Peer Review Page 7

You might also like

- Unit and Integration Test PlanDocument8 pagesUnit and Integration Test PlanSmartNo ratings yet

- Guidelines For The Maintenance Manual ChecklistDocument8 pagesGuidelines For The Maintenance Manual ChecklistNawshard Mohammed HaniffaNo ratings yet

- Software Test Plan Template 2013Document10 pagesSoftware Test Plan Template 2013Salma EyadiNo ratings yet

- Test PlanDocument8 pagesTest PlanMeenakshiNo ratings yet

- Project Setup Checklist TestDocument3 pagesProject Setup Checklist Testmukesh1104No ratings yet

- Software Test Plan TemplateDocument10 pagesSoftware Test Plan TemplateBrook AyalnehNo ratings yet

- EVoting Test PlanDocument27 pagesEVoting Test Planchobiipiggy26No ratings yet

- Test Plan V 2.0Document15 pagesTest Plan V 2.0balaji_lvNo ratings yet

- TestingDocument83 pagesTestingapi-3856328No ratings yet

- Software Test Plan (STP) Template: Q:/Irm/Private/Initiati/Qa/Qaplan/TestplanDocument14 pagesSoftware Test Plan (STP) Template: Q:/Irm/Private/Initiati/Qa/Qaplan/TestplanSwapnil PatrikarNo ratings yet

- Performance Test ReportDocument44 pagesPerformance Test ReportDaniel TenkerNo ratings yet

- SW Test MatDocument143 pagesSW Test MatBest BuddyNo ratings yet

- Software Testing Module4Document12 pagesSoftware Testing Module4Raven KnightNo ratings yet

- Project Test Plan - IPADocument5 pagesProject Test Plan - IPAobada_shNo ratings yet

- SQE AssignmentDocument25 pagesSQE AssignmentSaifullah KhalidNo ratings yet

- System Testing TemplateDocument12 pagesSystem Testing TemplateSE ProjectNo ratings yet

- IT Project MNGTDocument66 pagesIT Project MNGTAbhijat ShrivastavaNo ratings yet

- Qwbfhrsjmfmrnrsnrnrllgjukttgktfkn: 1.1 About The Company 1.2 Problem Statement 1.3 Proposed Solution 1.4 DeliverablesDocument6 pagesQwbfhrsjmfmrnrsnrnrllgjukttgktfkn: 1.1 About The Company 1.2 Problem Statement 1.3 Proposed Solution 1.4 DeliverablesHarjot SinghNo ratings yet

- SC 1.2 - Specific Criteria For Accreditation in The Field of Chemical TestingDocument20 pagesSC 1.2 - Specific Criteria For Accreditation in The Field of Chemical TestingNOOR AZHIM BIN AB MALEK (DOA)No ratings yet

- Quality Assurance Plan ChecklistDocument8 pagesQuality Assurance Plan ChecklistJanell Parkhurst, PMP100% (8)

- Assignment 3 - Test PlanDocument57 pagesAssignment 3 - Test Planapi-540028293No ratings yet

- Test Strategy OverviewDocument28 pagesTest Strategy OverviewTuyen DinhNo ratings yet

- Standard Operating Procedure (SOP) : Meta-Xceed, Inc. September 17, 2002Document23 pagesStandard Operating Procedure (SOP) : Meta-Xceed, Inc. September 17, 2002Ramakrishna.SNo ratings yet

- ICT Testing Guide - L1Document12 pagesICT Testing Guide - L1Arun ChughaNo ratings yet

- Test SpecificationDocument2 pagesTest SpecificationYathestha SiddhNo ratings yet

- IEC62304 Template Software Development Plan V1 0Document11 pagesIEC62304 Template Software Development Plan V1 0劉雅淇No ratings yet

- Test PlanDocument26 pagesTest Planflyspirit99No ratings yet

- A (1) NoraDocument10 pagesA (1) Norametuhsamuel123456789No ratings yet

- Software Testing: Govt. of Karnataka, Department of Technical EducationDocument9 pagesSoftware Testing: Govt. of Karnataka, Department of Technical EducationnaveeNo ratings yet

- Testing Process Flow 0.03Document8 pagesTesting Process Flow 0.03elangovan100% (3)

- Quality Assurance Plan - Assignment 2Document23 pagesQuality Assurance Plan - Assignment 2MohammadFahadKhanNo ratings yet

- CTFL Trainig Session PlanDocument5 pagesCTFL Trainig Session PlanFuad HassanNo ratings yet

- Chapter III2Document9 pagesChapter III2ivy mae floresNo ratings yet

- Perf Testing FundamentalsDocument2 pagesPerf Testing FundamentalsRavindu HimeshaNo ratings yet

- Testing Plan: by Bora Çakmaz Alper ÇÖMEN Berkay ERGİNDocument13 pagesTesting Plan: by Bora Çakmaz Alper ÇÖMEN Berkay ERGİNKrysalisNo ratings yet

- Made For Science Quanser Rotary Servo Base Unit CoursewareStud LabVIEWDocument90 pagesMade For Science Quanser Rotary Servo Base Unit CoursewareStud LabVIEWHelmut PintoNo ratings yet

- The Testing Process: - Test Case DesignDocument17 pagesThe Testing Process: - Test Case DesignRohit KumarNo ratings yet

- Unit 4 Software Testing: Structure Page NosDocument18 pagesUnit 4 Software Testing: Structure Page NosAshish SemwalNo ratings yet

- SQA AssignmentDocument6 pagesSQA AssignmentAngel ChocoNo ratings yet

- Master Test PlanDocument9 pagesMaster Test PlansomerandomhedgehogNo ratings yet

- Aurmtf002 R1Document5 pagesAurmtf002 R1shumaiyl9No ratings yet

- ICT Testing Guide - LDocument11 pagesICT Testing Guide - LArun ChughaNo ratings yet

- Testing in System Integration ProjectDocument23 pagesTesting in System Integration ProjectMelissa Miller100% (3)

- Test Plan - Camino LeafDocument31 pagesTest Plan - Camino Leafapi-480230170No ratings yet

- IEC62304 Template Traceabaility Matrix V1 0Document7 pagesIEC62304 Template Traceabaility Matrix V1 0劉雅淇No ratings yet

- Test - Plan GuideDocument3 pagesTest - Plan GuideTerryNo ratings yet

- Amazon PlanningDocument18 pagesAmazon PlanningMohan RajendaranNo ratings yet

- IEC62304 Template Software Test Report V1 0Document6 pagesIEC62304 Template Software Test Report V1 0劉雅淇No ratings yet

- MSSE SOFTWARE - MergedDocument18 pagesMSSE SOFTWARE - MergedVeeranan SenniahNo ratings yet

- Library Management System Test PlanDocument14 pagesLibrary Management System Test PlanChun Chun0% (2)

- Test Plan TodoistDocument7 pagesTest Plan Todoistppp gggNo ratings yet

- Spicejet - Test PlanDocument13 pagesSpicejet - Test PlanNarendra GowdaNo ratings yet

- Project xxxx Test StrategyDocument25 pagesProject xxxx Test StrategyAbhishek GuptaNo ratings yet

- Test Plan TemplateDocument11 pagesTest Plan Templateapi-3806986100% (8)

- Project Name: "New Arrivals": FNA Test PlanDocument6 pagesProject Name: "New Arrivals": FNA Test PlanNusrat HasanNo ratings yet

- Testing Chapter 9Document55 pagesTesting Chapter 9Malik JubranNo ratings yet

- Structured Software Testing: The Discipline of DiscoveringFrom EverandStructured Software Testing: The Discipline of DiscoveringNo ratings yet

- Testing UMTS: Assuring Conformance and Quality of UMTS User EquipmentFrom EverandTesting UMTS: Assuring Conformance and Quality of UMTS User EquipmentNo ratings yet

- Test Management Plan Ver 9 - v2Document12 pagesTest Management Plan Ver 9 - v2api-3738664No ratings yet

- SyllabusadvancedDocument42 pagesSyllabusadvancedapi-3733726No ratings yet

- PMP DetalDocument5 pagesPMP Detalapi-3738664No ratings yet

- TestBench PCDocument19 pagesTestBench PCapi-3738664No ratings yet

- Mobile Testing PracticalDocument11 pagesMobile Testing Practicalapi-3738664100% (1)

- Silk Presentation 2Document27 pagesSilk Presentation 2api-3738664No ratings yet

- Silk Presentation 1Document28 pagesSilk Presentation 1api-3738664No ratings yet

- RFT RRDocument6 pagesRFT RRapi-3738664No ratings yet

- Advancedsyllabusv07 12july07 BetaDocument111 pagesAdvancedsyllabusv07 12july07 Betaapi-3738664No ratings yet

- TestBench PC ManualDocument500 pagesTestBench PC Manualapi-3738664No ratings yet

- Mobile Testing TheoryDocument67 pagesMobile Testing Theoryapi-3738664No ratings yet

- Mobile Test Case FormatDocument3 pagesMobile Test Case Formatapi-3738664No ratings yet

- Silk PresentationDocument55 pagesSilk Presentationapi-3738664No ratings yet

- Presentation On Test PlanDocument25 pagesPresentation On Test Planapi-3738664No ratings yet

- Silk Presentation 2Document27 pagesSilk Presentation 2api-3738664No ratings yet

- TestDrive GoldDocument78 pagesTestDrive Goldapi-3738664No ratings yet

- Foundation Certificate in Software Testing Practice ExamDocument11 pagesFoundation Certificate in Software Testing Practice Examapi-3738664No ratings yet

- ESPIRE OM FMT G116 Training TemplateDocument8 pagesESPIRE OM FMT G116 Training Templateapi-3738664No ratings yet

- Iseb Foundation Software Testing Exam Question Answers - 02Document11 pagesIseb Foundation Software Testing Exam Question Answers - 02namratavohra18No ratings yet

- ISEB Condensed Notes ChangingDocument69 pagesISEB Condensed Notes ChangingVenkata Suresh Babu ChitturiNo ratings yet

- Istqb Test PaperDocument8 pagesIstqb Test Papermadhumohan100% (3)

- Istqb Test 3Document7 pagesIstqb Test 3api-3738664No ratings yet

- ISTQB MockupDocument12 pagesISTQB MockupKapildevNo ratings yet

- Istqb Test 2Document7 pagesIstqb Test 2api-3738664No ratings yet

- Chapter 4-Static TestingDocument11 pagesChapter 4-Static Testingapi-3738664No ratings yet

- Chapter 5Document10 pagesChapter 5api-3738664No ratings yet

- ISEB Foundation Course in Software Testing PRACTICE EXAM (45mins-30 Questions)Document5 pagesISEB Foundation Course in Software Testing PRACTICE EXAM (45mins-30 Questions)Irfan100% (2)

- Chapter 6Document4 pagesChapter 6api-3738664No ratings yet

- Chapter 2Document15 pagesChapter 2api-3738664No ratings yet

- Chapter 3Document7 pagesChapter 3api-3738664No ratings yet

- Do Not Go GentleDocument5 pagesDo Not Go Gentlealmeidaai820% (1)

- Basic Physics II - Course OverviewDocument2 pagesBasic Physics II - Course OverviewNimesh SinglaNo ratings yet

- Business Process Re-Engineering: Angelito C. Descalzo, CpaDocument28 pagesBusiness Process Re-Engineering: Angelito C. Descalzo, CpaJason Ronald B. GrabilloNo ratings yet

- Unified Field Theory - WikipediaDocument24 pagesUnified Field Theory - WikipediaSaw MyatNo ratings yet

- Metaverse in Healthcare-FADICDocument8 pagesMetaverse in Healthcare-FADICPollo MachoNo ratings yet

- Language Assistant in ColombiaDocument2 pagesLanguage Assistant in ColombiaAndres CastañedaNo ratings yet

- 2015 International Qualifications UCA RecognitionDocument103 pages2015 International Qualifications UCA RecognitionRodica Ioana BandilaNo ratings yet

- HTTPDocument40 pagesHTTPDherick RaleighNo ratings yet

- Application of Leadership TheoriesDocument24 pagesApplication of Leadership TheoriesTine WojiNo ratings yet

- Review of Decree of RegistrationDocument21 pagesReview of Decree of RegistrationJustine Dawn Garcia Santos-TimpacNo ratings yet

- The Ecology of School PDFDocument37 pagesThe Ecology of School PDFSravyaSreeNo ratings yet

- Motion Filed in Lori Vallow Daybell Murder CaseDocument23 pagesMotion Filed in Lori Vallow Daybell Murder CaseLarryDCurtis100% (1)

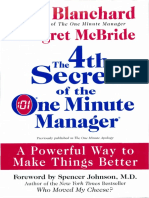

- The 4th Secret of The One Minute ManagerDocument147 pagesThe 4th Secret of The One Minute ManagerReyes CristobalNo ratings yet

- INFOSEM Final ReportDocument40 pagesINFOSEM Final ReportManasa BanothNo ratings yet

- Lit Exam 2nd QuarterDocument4 pagesLit Exam 2nd Quarterjoel Torres100% (2)

- Assignment Histogram and Frequency DistributionDocument6 pagesAssignment Histogram and Frequency DistributionFitria Rakhmawati RakhmawatiNo ratings yet

- Στιχοι απο εμεναDocument30 pagesΣτιχοι απο εμεναVassos Serghiou SrNo ratings yet

- Permutations & Combinations Practice ProblemsDocument8 pagesPermutations & Combinations Practice Problemsvijaya DeokarNo ratings yet

- Chapter 14 ECON NOTESDocument12 pagesChapter 14 ECON NOTESMarkNo ratings yet

- Woodward FieserDocument7 pagesWoodward FieserUtkarsh GomberNo ratings yet

- Qimen Grup2Document64 pagesQimen Grup2lazuli29100% (3)

- Anatomy of PharynxDocument31 pagesAnatomy of PharynxMuhammad KamilNo ratings yet

- Remedial 2 Syllabus 2019Document11 pagesRemedial 2 Syllabus 2019Drew DalapuNo ratings yet

- Sociology of Behavioral ScienceDocument164 pagesSociology of Behavioral ScienceNAZMUSH SAKIBNo ratings yet

- Literary Criticism ExamDocument1 pageLiterary Criticism ExamSusan MigueNo ratings yet

- 2125 Things We Can No Longer Do During An RPGDocument51 pages2125 Things We Can No Longer Do During An RPGPećanac Bogdan100% (2)

- Virl 1655 Sandbox July v1Document16 pagesVirl 1655 Sandbox July v1PrasannaNo ratings yet

- Social Emotional Lesson Plan 1Document6 pagesSocial Emotional Lesson Plan 1api-282229828No ratings yet

- Seminar Report on 21st Century TeachingDocument4 pagesSeminar Report on 21st Century Teachingeunica_dolojanNo ratings yet

- Legion of Mary - Some Handbook ReflectionsDocument48 pagesLegion of Mary - Some Handbook Reflectionsivanmarcellinus100% (4)