Memory Systems

This chapter begins the discussion of memory systems with the implementation of a single bit. The architecture of memory chips is then constructed using arrays of bit implementations coupled with familiar digital logic components such as decoders, transmission gates and multiplexors to access groups of bits such as words. Several alternative memory chip architectures are explored and motivated. These chips in turn are used to construct memory systems: systems that are designed to return specific multibit quantities such as bytes and words using memory chips of various sizes. Finally, we conclude with a view of a memory system from a programmer's perspective, which is essentially that of a linear array of storage locations. Our programming view is now based on a thorough understanding of the implementation and leads naturally to the first set of programmer (assembly language) directives that we will explore: directives for data storage.

Static Random Access Memory (SRAM) Cell

The storage of a single bit of information using CMOS SRAM technology is shown in Figure 1. The basic storage component is constructed with a pair of cross coupled inverters. These inverters are embedded in a larger circuit that provides controlled connections to the inverter loop through two n-type switches. When the word line is driven high, the bit lines carry the value of the bit and the value of the complement of the bit stored in the cell.

As we will see in the following sections, the term random refers to the fact that any bit in a RAM array can be accessed directly without having to first read through a sequence of other bits. Static RAM cells are capable of retaining the stored value as long as power is supplied to the circuit. This design is often referred to as a 6T design since 6 transistors are used: two for each inverter and one each for access to the value of the bit and its complement.

Dynamic Random Access Memory (DRAM) Cell

The structure of a single DRAM cell is shown in Figure 2. In this design a bit of information is stored as a charge on the internal capacitor. To read the value stored in the cell the word line is driven high. The n-type transistor is turned on and the charge on the capacitor is sensed on the bit line. This implementation uses one transistor and is therefore often referred to as a 1T design.

The process of reading the value of the bit by discharging the capacitor is a destructive operation. Therefore the bit value must be written back in amplified form following a read operation. Even if the value is not read, physical cells experience leakage of the stored charge over time. Therefore the bit values stored in the cells must periodically be read and written back. This process is referred to as refresh and is performed automatically by special circuits. It is this behavior that leads to the label dynamic.

Now that we have an idea of how individual bits are stored, we can move up to the level of memory chips and study the internal architecture and operation of some standard memory components.

FIGURE 1. Structure of an SRAM cell (labels: bit lines D and its complement, Select (word line))

Architecture of Memory Chips

Now let us consider the architecture and operation of memory chips. The architecture we will discuss is applicable to both SRAM and DRAM based designs. At the core of this architecture is a two-dimensional array of bits where each bit may be implemented as an SRAM or DRAM cell. A single bit in this array can be selected, or addressed, by providing the row and column indices of the location of the cell. The bit value stored in this cell can be read into a buffer from which it can be read off-chip. Let us make this sequence of steps more precise using an example and with reference to Figure 3.

Imagine we have a memory chip that stores 1 Mbits. To access any cell in this chip we must be able to identify this cell. The simplest approach would be to number all of the cells and provide the integer value or number of the cell you wish to read. Such a number is the memory address of the cell, and providing this number is referred to as the process of addressing a cell. How many bits do we need to address 1 Mbits? We have $2^{20} = 1\text{M}$ and therefore we need a 20 bit number, which is referred to as the address of the cell.

However, these memory cells (that store the individual bits) are not stored in a linear array that can be addressed in such a simple manner. The single bit cells are arranged in a two-dimensional array of 1024x1024 cells. All of the cells in a row share the same word line or select signal. Thus when a word line is asserted all of the cells in the row will drive their bit values onto the bit lines. Similarly, all of the cells in a column share the same bit line. Therefore we conclude that at any given time only one cell in a column should be placing a value on this shared bit line. Addressing a row or column now requires 10 bits ($2^{10} = 1024$). While strictly speaking a cell holds a bit value, we will use the terms bit and cell interchangeably.

FIGURE 2. Structure of a DRAM cell (labels: D (bit line), Select (word line), capacitor C, access transistor)

Now when a 20 bit address is provided we may see the following sequence of events. The most significant 10 bits of the address are used as a row address. This address is provided to a 10 bit row decoder. Each of the 1024 outputs of the row decoder is connected to one of the 1024 word lines in the array. The cells in the selected row will drive their bit values onto the corresponding 1024 bit lines. The 1024 bit values are latched into a row buffer that can be graphically thought of as residing at the bottom of the array in Figure 3. Now the 10 bit column address is used to select one of the 1024 bits from this row buffer and provide the value to the chip output. The access of data from the chip is controlled by two signals. The first is the chip select (CS), which enables the chip. The second is a signal that controls whether data is being written to the array (RW=0) or whether data is being read from the array (RW=1). The combination of CS and RW controls the tristate devices on the data signals D. The organization shown in Figure 3 uses bidirectional signals for data into and out of the chip rather than having separate input data signals and output data signals.
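The row and column access sequence described above can be sketched in a few lines of Python. This is only an illustrative model, not a description of any particular chip: the function names `split_address` and `read_bit` are invented for the example, the cell array is modeled as a list of 1024 rows of 1024 bits, and the column address is assumed to be the least significant 10 bits of the 20 bit address.

```python
ROW_BITS = 10   # 2**10 = 1024 word lines
COL_BITS = 10   # 2**10 = 1024 bit lines


def split_address(address):
    """Split a 20-bit cell address into (row, column) indices."""
    if not 0 <= address < (1 << (ROW_BITS + COL_BITS)):
        raise ValueError("address must fit in 20 bits")
    row = address >> COL_BITS                   # most significant 10 bits
    column = address & ((1 << COL_BITS) - 1)    # least significant 10 bits (assumed)
    return row, column


def read_bit(cell_array, address):
    """Model a read: assert one word line, latch the row, select one column."""
    row, column = split_address(address)
    row_buffer = list(cell_array[row])   # all 1024 bits of the selected row
    return row_buffer[column]


if __name__ == "__main__":
    cells = [[0] * 1024 for _ in range(1024)]
    cells[1][3] = 1
    print(split_address(0x00403), read_bit(cells, 0x00403))
```

The row buffer is copied in full before the column is selected, mirroring the way an entire row is latched even though only one bit leaves the chip.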

FIGURE 3. Typical organization of a memory chip (labels: address A, row decode, column decode, I/O, CS, RW, D, word line, bit line, memory cell, memory cell array)

Now imagine that we had four identical memory planes of the kind shown in Figure 3, each plane operating concurrently on the same 20 bit address. The result is 4 bits for every access, and we would have a 4 Mbit chip. Such a chip would be described as a 1Mx4 chip since we have $2^{20}$ addresses and at each address the chip delivers 4 bits. We can conceive of alternative similar data organizations for 1 Mbit chips such as 256Kx4, 1Mx1 and 128Kx8. While the total number of bits within the chip may be the same, the key distinguishing feature is the number of distinct addresses that are supported and the number of bits stored at each address. With a fixed number of total bits on the chip, the number of distinct addresses provided by the chip determines the number of bits at each address.
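The trade-off between the number of addresses and the number of bits per address can be checked with a short calculation. The sketch below is illustrative only; the function name `organization` is an assumption made for this example, and it assumes the number of addresses is a power of two.

```python
def organization(total_bits, bits_per_address):
    """Return (number of addresses, address bits) for a chip organization."""
    if total_bits % bits_per_address != 0:
        raise ValueError("total bits must be a multiple of the data width")
    addresses = total_bits // bits_per_address
    # For a power-of-two number of addresses this equals log2(addresses).
    address_bits = (addresses - 1).bit_length()
    return addresses, address_bits


if __name__ == "__main__":
    MBIT = 1 << 20
    for width in (1, 4, 8):
        addresses, bits = organization(MBIT, width)
        print(f"{addresses // 1024}Kx{width}: {bits} address bits")
```

For a 1 Mbit chip this gives 20 address bits for the 1Mx1 organization, 18 for 256Kx4 and 17 for 128Kx8.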

Building Memory Systems

To design a memory system we will aggregate several memory chips and combinational components.

The design is determined by the types of chips available, for example 1Mx4 or 4Mx1, and the number

of distinct addresses that are to be provided. Solutions are illustrated through the following examples.

Example 1

Imagine we wish to design a 1Mx8 memory system using 1Mx4 memory chips. The total number of bits to be provided by the memory system is 8 Mbits. Each available 1Mx4 chip provides 4 Mbits and thus we will need two chips. Further, we have 1M addresses but need to provide 8 bits at each address, while each chip can only provide 4 bits at each address. We can organize the two chips as shown in Figure 4. One chip provides the least significant 4 bits at each address and the second chip provides the most significant 4 bits at the same address. The 20 bit address is provided to both chips, as are the chip select and read/write control signals.
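As a rough behavioral sketch of Example 1, the following Python models two 1Mx4 chips placed side by side. The class names `Chip1Mx4` and `Memory1Mx8` are invented for illustration, and a dictionary stands in for the cell array so that unwritten locations simply read as 0.

```python
class Chip1Mx4:
    """Toy model of a 1Mx4 chip: 2**20 addresses, 4 bits at each address."""

    ADDRESSES = 1 << 20

    def __init__(self):
        self.cells = {}   # sparse storage; unwritten cells read as 0

    def write(self, address, nibble):
        if not 0 <= address < self.ADDRESSES:
            raise ValueError("address must fit in 20 bits")
        if not 0 <= nibble < 16:
            raise ValueError("data must fit in 4 bits")
        self.cells[address] = nibble

    def read(self, address):
        if not 0 <= address < self.ADDRESSES:
            raise ValueError("address must fit in 20 bits")
        return self.cells.get(address, 0)


class Memory1Mx8:
    """Two 1Mx4 chips that receive the same 20-bit address on every access."""

    def __init__(self):
        self.low = Chip1Mx4()    # supplies D3:D0
        self.high = Chip1Mx4()   # supplies D7:D4

    def write(self, address, byte):
        if not 0 <= byte < 256:
            raise ValueError("data must fit in 8 bits")
        self.low.write(address, byte & 0xF)
        self.high.write(address, byte >> 4)

    def read(self, address):
        return (self.high.read(address) << 4) | self.low.read(address)


if __name__ == "__main__":
    memory = Memory1Mx8()
    memory.write(5, 0xA7)
    print(hex(memory.read(5)), hex(memory.high.read(5)), hex(memory.low.read(5)))
```

Both chips are active on every access; neither needs to know that it holds only half of each byte.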

Example 2

Now let us modify the previous example by asking for a memory system with 2M addresses and four bits at each address, using the same 1Mx4 memory chips. The total number of bits in the memory system is still the same: 8 Mbits. We observe that each chip can provide a 4 bit quantity and 1M addresses. Thus our organization can be as shown in Figure 5. One chip services the first 1M addresses, that is, addresses from 0 to $2^{20}-1$. The second chip provides the data at the remaining addresses, that is, addresses from $2^{20}$ to $2^{21}-1$. However, we need additional control. Exactly one of the two memory chips must be enabled, depending on the value of the address. We can achieve this control by using the most significant bit of the address and a 1:2 decoder. The most significant bit of an address determines which of the two halves of the address range is being accessed. The outputs of the decoder are connected to the individual chip select signals and the corresponding chip will be enabled. The remaining address lines and the read/write control signal are connected to the corresponding inputs of each chip. Note that each chip is still provided with exactly 20 address bits. The memory select signal (Msel) is used as an enable to the memory system.

This approach represents a common theme. We first organize the memory chips to determine how addresses will be serviced. Some bits of the address, as necessary, will be used to determine which set of chips will deliver data at a specific address. These bits of the address are decoded to enable the correct set of memory chips. A few more examples will help clarify this approach.

Example 3

Now we consider a memory system with 4M addresses and four bits at each address, for a total of 16 Mbits. As before, using 1Mx4 chips, we will need four chips. Based on the previous example the most straightforward design is one wherein each chip serves exactly one fourth of the addresses. The first chip will service addresses in the range 0 to $2^{20}-1$. The second chip will serve addresses in the range $2^{20}$ to $2^{21}-1$, and so on. The total address range is 0 to $2^{22}-1$. The remaining 20 bits of address are provided to each chip, as is the common read/write control. A 2:4 decoder operating on the two most significant bits of the address is used to select the chip. As before, Msel is used as a memory system enable by serving as an enable signal for the decoder, which in turn generates the chip select signals. The organization is shown in Figure 6.
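The address decoding used in Examples 2 and 3 can be sketched as a single function. This is a behavioral illustration only: the name `decode`, the active-high chip select convention and the list representation of the decoder outputs are all assumptions made for the example.

```python
CHIP_ADDRESS_BITS = 20   # each 1Mx4 chip receives A19:A0


def decode(address, select_bits, msel=1):
    """Return (chip_select_lines, local_address) for one access.

    select_bits is 1 for the 1:2 decoder of Example 2 and 2 for the
    2:4 decoder of Example 3. Chip selects are modeled as active high.
    """
    n_chips = 1 << select_bits
    if not 0 <= address < (n_chips << CHIP_ADDRESS_BITS):
        raise ValueError("address out of range for this memory system")
    chip = address >> CHIP_ADDRESS_BITS                 # most significant bits
    local = address & ((1 << CHIP_ADDRESS_BITS) - 1)    # A19:A0, sent to every chip
    selects = [1 if (msel and i == chip) else 0 for i in range(n_chips)]
    return selects, local


if __name__ == "__main__":
    print(decode(0x100000, 1))           # first address served by the second chip
    print(decode(0x3FFFFF, 2))           # last address of the 4Mx4 system
    print(decode(0x200005, 2, msel=0))   # memory system disabled
```

With Msel deasserted no chip select is raised, which matches the use of Msel as the decoder enable.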

FIGURE 4. A 1Mx8 memory system (labels: two 1Mx4 chips, A19:A0, CS, RW, D0 to D3 from one chip, D4 to D7 from the other)

Note that only one chip is active at any given time. However we may have organizations where this is

not necessarily the case as in the next example.

Example 4

In this example we are interested in a memory system that will provide 2M addresses with 8 bits at each

address. Using the same 1Mx4 chips we need at least two chips to provide an 8 bit quantity at any given

address. We also need four chips to provide the necessary total of 16 Mbits (2M addresses and 8 bits at

each address). These chips are organized in pairs as shown in Figure 7. The first two chips provide four

bits each for the first 1M addresses. The second pair of chips does so similarly for the second 1 M

addresses. A decoder uses the most significant bit of the address to determine which of the two pairs of

chips will be selected for any specific address.
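The four examples follow one pattern: chips are arranged in a grid whose rows divide the address range and whose columns divide the data width. The sketch below computes that grid; the function name `chip_grid` is an assumption made for the example, and it assumes the number of rows is a power of two.

```python
def chip_grid(system_addresses, system_width, chip_addresses, chip_width):
    """Return (rows, columns, select_bits) for building a memory system.

    rows        -- groups of chips, each group serving one slice of addresses
    columns     -- chips accessed together to supply the full data width
    select_bits -- most significant address bits fed to the decoder
    """
    if system_addresses % chip_addresses or system_width % chip_width:
        raise ValueError("system size must be a multiple of the chip size")
    rows = system_addresses // chip_addresses
    columns = system_width // chip_width
    select_bits = (rows - 1).bit_length()   # log2(rows) for power-of-two rows
    return rows, columns, select_bits


if __name__ == "__main__":
    M = 1 << 20
    for name, addresses, width in [("1Mx8", M, 8), ("2Mx4", 2 * M, 4),
                                   ("4Mx4", 4 * M, 4), ("2Mx8", 2 * M, 8)]:
        rows, columns, select_bits = chip_grid(addresses, width, M, 4)
        print(name, rows * columns, "chips,", select_bits, "decoded address bits")
```

For the 2Mx8 system of Example 4 this yields two rows of two chips and one decoded address bit, in agreement with the pairs of chips described in the text.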

The preceding examples have illustrated several common ways of constructing memory systems of a given word width using memory chips that provide multibit quantities. While this is the view that memory system designers may have, programmers certainly do not think of memory in these terms. When we write programs our view of memory is quite a bit more abstract. Programming tools such as assemblers, linkers and loaders hide the details of the preceding examples from the application programmers. The most common and arguably simplest logical model of memory is that of a linear array of storage locations. This view, along with programmer directives to utilize this view, is discussed next.

FIGURE 5. A 2Mx4 memory system (labels: 1:2 decoder, Msel, A20, A19:A0, CS, RW, D0 to D3)

A Logical/Programmers View of Memory

From the previous section we have seen that memory systems can be designed such that a read from a memory address returns the value stored at that address. As a user or programmer we are interested in having available a sequence of memory addresses at which we can store values. From this perspective we really do not care how this particular sequence of addresses is realized, that is, what memory chip types are used and how address bits are decoded. There is a logical view of memory that programmers and compilers have, while the physical implementation is the realm of the memory systems designer. This section describes a logical view of memory.

FIGURE 6. A 4Mx4 memory system (labels: 2:4 decoder, Msel, A21:A20, A19:A0, CS, RW, D0 to D3)

From the construction of a memory system we know that memory is viewed as a sequence of addresses, and at each address or location is stored a value. In digital systems information is stored in binary form. Therefore the first question we ask is how many bits can be stored at each memory address? We have seen examples of how to construct memory systems that return 4 or 8 bit values for each address, and systems that return 16 or 32 bit values can be built in the same way. A memory system designed to store 8-bit numbers at each address is referred to as byte addressed memory. Similarly, one that is designed to return a 32-bit word from each address is referred to as word addressed memory. We must be careful, however, to remember that many modern microprocessors are 64-bit machines, for which word addressed memory implies accessing 64-bit quantities. It is quite common for microprocessor systems to provide byte addressed memory even though the word size may be 32 or 64 bits. Therefore we will consistently use a byte addressed memory in our examples, which can be viewed as shown in Figure 8.

The contents of each memory location in the figure is an 8-bit value that is shown in hexadecimal notation. The address of each memory location is shown adjacent to the location. Addresses are assumed to be 32-bit values and are also shown in hexadecimal notation. Note the distinction between addresses and the values stored at those addresses! You should keep in mind that in other models you may see each address return 16, 32 or 64 bits rather than 8 bits.

FIGURE 7. A 2Mx8 memory system (labels: 1:2 decoder, Msel, A20, A19:A0, CS, RW, D0 to D3, D4 to D7)

We can think of several reasons why memory should be addressed in bytes. Images are organized as arrays of pixels, which in black and white images can often be stored as 8-bit values. ASCII characters (a 7-bit code) are conventionally stored one per byte, so storage of character strings typically uses a sequence of byte locations. However, the majority of modern high performance processors internally operate on 32 and 64 bits and therefore will be storing and retrieving 32 and 64 bit quantities. Considering that we have a byte addressed memory, what are the issues if our microprocessor performs accesses to 32-bit words?

The first issue is how these words are stored. For example, consider the need to store the 32-bit quantity 0x4400667a at address 0x10010000. The address refers to a single byte in memory; however, we wish to store 4 bytes at this location. The straightforward solution is to use the 4 bytes starting at address 0x10010000. After storage the memory will appear as shown in Figure 9. Note the most significant byte of the word is stored at memory location 0x10010000 and the least significant byte of the word is stored at memory location 0x10010003. This type of storage convention is referred to as big endian, since the big end, or most significant byte, of the word is stored first. We could just as easily have stored the bytes of the word in memory in the reverse order, that is, the contents of memory location 0x10010000 would have been 0x7a, which is the least significant byte or little end of the word. Not surprisingly this storage convention is referred to as little endian. Different microprocessor vendors adopt one convention or the other in the way in which words are stored. For example, Intel x86 architectures are little endian, while Sun SPARC and the PowerPC-based Apple architectures are big endian. As you might imagine, this places a bit of a burden on communication software that transfers data between machines that use different storage conventions, since the order of bytes within each word must be reversed. In general, unless stated otherwise, the little endian storage convention will be used.
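The two storage conventions can be reproduced with Python's built-in `int.to_bytes`. The helper name `store_word` is invented for this illustration; it simply returns a mapping from byte address to stored byte for the example word and address used above.

```python
def store_word(value, base_address, byteorder):
    """Return {address: byte} for a 32-bit word stored at base_address."""
    data = value.to_bytes(4, byteorder=byteorder)
    return {base_address + i: byte for i, byte in enumerate(data)}


if __name__ == "__main__":
    for order in ("big", "little"):
        layout = store_word(0x4400667A, 0x10010000, order)
        print(order, [f"{addr:#010x}: {byte:#04x}" for addr, byte in layout.items()])
```

Reading the four bytes back with `int.from_bytes` and the same byte order recovers the original word; using the opposite order yields the byte-reversed value, which is exactly the problem communication software has to handle.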

FIGURE 8. A logical view of byte addressed memory

    memory address    memory contents
    0x10010000        0x00
    0x10010001        0x33
    0x10010002        0x00
    0x10010003        0x18
    0x10010004        0x22
    0x10010005        0xab
    0x10010006        0xd4
    0x10010007        0x44
    0x10010008        0x2c
    0x10010009        0x22
    0x1001000a        0x01

If the word size is 32 bits, then in a byte addressed memory every fourth address will be the start of a new word. Such addresses are referred to as word boundaries. Alternatively, if the word size is 64 bits, each word comprises 8 bytes, and therefore every eighth byte address will correspond to a word boundary. In general we can think of $2^k$ byte boundaries, that is, addresses that are multiples of $2^k$, for k between 0 and n, where n is the number of bits in the address.
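A boundary check of this kind reduces to simple arithmetic on the address. The following sketch uses two helper names, `is_aligned` and `next_boundary`, that are assumptions made for this example.

```python
def is_aligned(address, k):
    """True if address lies on a 2**k byte boundary."""
    return address % (1 << k) == 0


def next_boundary(address, k):
    """Smallest 2**k byte boundary that is greater than or equal to address."""
    mask = (1 << k) - 1
    return (address + mask) & ~mask


if __name__ == "__main__":
    print(is_aligned(0x10010004, 2))           # word boundary for 32-bit words
    print(is_aligned(0x1001000A, 2))           # not a word boundary
    print(hex(next_boundary(0x1001000A, 2)))   # 0x1001000c
```

With k = 2 the boundaries are the 32-bit word boundaries, and with k = 3 they are the 64-bit word boundaries.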

Assembler Directives and Data Layout

We now have a view of memory as a sequence of addresses and values stored at those addresses. The discussion in this section adopts a programmer's perspective in describing how we use such a logical model of memory. For our purposes we can also consider this to be equivalent to the compiler's view of memory, since the majority of programming in modern machines is performed in high-level languages and it is the compiler that is responsible for data storage and management.

FIGURE 9. Storage of 32-bit words in byte addressed memory (the word 0x4400667a stored in big endian order)

    memory address    memory contents
    0x10010000        0x44
    0x10010001        0x00
    0x10010002        0x66
    0x10010003        0x7a

First a few definitions. In the following examples a word is 32 bits, or four bytes. All addresses are 32 bits and memory is byte addressed. From this perspective, how do we describe the placement of data in memory? One approach might be the following. Imagine we have a robot that reads storage commands and places data in memory. The robot, or loader (an operating system program in real life), starts at some fixed location in memory and continues to follow your commands for storing data. What type of commands might make sense? Consider the following commands or directives. Note the "." in front of each command, which signifies a storage directive. We assume that the loader starts at memory location 0x10010000, although in general it can start anywhere. Finally, little endian storage is assumed. Now consider the following sequence of data directives.

.word 24: This command directs the loader to place the value 24 (or equivalently 0x18) in memory at the current word. Note that a word is 4 bytes. Therefore the value that is actually stored is the 32-bit number 0x00000018. The loader's view of memory is now as follows (assuming little endian storage).

    MEMORY
    0x18
    0x00
    0x00
    0x00
    (loader's pointer)

.byte 6: This command directs the loader to store the value 6 in the next byte in memory. The contents of memory now appear as follows.

    MEMORY
    0x18
    0x00
    0x00
    0x00
    0x06
    (loader's pointer)

.asciiz "Help me!": This directive instructs the loader to store the sequence of characters Help me! in memory. Each character is stored in a byte according to the ASCII code. Remember that spaces between words are characters! The quotation marks are not stored. They are used to delimit the characters. Thus the preceding character string will require 8 bytes of storage. Finally, there is one more character, called the null termination character, that is automatically stored after the string to identify the end of the string, bringing the total storage space to 9 bytes. The memory now appears as follows.

    MEMORY
    0x18
    0x00
    0x00
    0x00
    0x06
    0x48
    0x65
    0x6c
    0x70
    0x20
    0x6d
    0x65
    0x21
    0x00
    (loader's pointer)

.space n: Simply skip the next n bytes.

Consider the example sequence of directives and the resulting storage shown in Figure 10. Assume that the loader starts at address 0x10010000. The .data directive signifies the start of a sequence of data directives. The directives shown lead to the pattern of storage shown in the figure. However, now suppose we wish to follow the last directive with .word 26. This will cause the storage of a word to start at a location in memory which is not a word boundary. This is the issue of memory alignment. We will simplify the memory model here and require that words start on a word boundary. We can enforce this requirement with one last directive, namely .align k. This command forces the loader to move to the next $2^k$ byte boundary. In order to force the loader to move to the next word boundary we simply place the directive .align 2 after the last command. The storage map that results from this new sequence of commands is shown in Figure 11.
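The behavior of the loader described in this section can be imitated with a small Python class. This is a toy model for illustration, not the implementation of any real assembler or loader: the class name `Loader` and its method names are invented here, skipped bytes are modeled as zeros, and little endian storage with a start address of 0x10010000 is assumed as in the text.

```python
class Loader:
    """Toy loader that places data in a byte addressed, little endian memory."""

    def __init__(self, start=0x10010000):
        self.start = start
        self.memory = bytearray()   # memory[i] is the byte at address start + i

    @property
    def pointer(self):
        """Address of the next free byte."""
        return self.start + len(self.memory)

    def word(self, value):          # .word value
        self.memory += value.to_bytes(4, "little")

    def byte(self, value):          # .byte value
        self.memory.append(value)

    def asciiz(self, text):         # .asciiz "text"
        self.memory += text.encode("ascii") + b"\x00"

    def space(self, n):             # .space n
        self.memory += bytes(n)

    def align(self, k):             # .align k
        while self.pointer % (1 << k) != 0:
            self.memory.append(0)


if __name__ == "__main__":
    loader = Loader()
    loader.word(24)
    loader.byte(7)
    loader.asciiz("help")
    loader.align(2)
    loader.word(26)
    for offset in range(0, len(loader.memory), 4):
        row = " ".join(f"{b:#04x}" for b in loader.memory[offset:offset + 4])
        print(f"{loader.start + offset:#010x}: {row}")
```

Without the call to `align(2)` the final word would begin at 0x1001000a, which is not a word boundary; with it, the word begins at 0x1001000c.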

We can now think of programs that are comprised of a sequence of data directives and which are used to store data structures in memory. For example, we can envision a sequence of .word directives that would store the elements of a vector in successive memory locations. Think for a moment about the storage of a two dimensional array such as a matrix. Memory is a linear array of locations. Thus two dimensional data structures must be translated to a linear array for storage purposes. One straightforward approach is to store the elements of the first row in a sequence of memory locations, followed by the elements of the second row, and so on. We could also store the data by columns. Such row-major or column-major ordering of data structures is automatically performed by compilers. Data directives such as those described in this section are used to describe such storage patterns.
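The translation from two dimensional indices to a linear position can be written down directly. In the sketch below the function names are assumptions made for the example, indices start at 0, and each element is assumed to occupy one 4 byte word.

```python
def row_major_offset(i, j, n_cols):
    """Linear position of element (i, j) when rows are stored one after another."""
    return i * n_cols + j


def column_major_offset(i, j, n_rows):
    """Linear position of element (i, j) when columns are stored one after another."""
    return j * n_rows + i


def element_address(base, offset, element_size=4):
    """Byte address of the element at a given linear position."""
    return base + offset * element_size


if __name__ == "__main__":
    matrix = [[1, 2, 3],
              [4, 5, 6]]   # 2 rows, 3 columns
    by_rows = [matrix[i][j] for i in range(2) for j in range(3)]
    by_columns = [matrix[i][j] for j in range(3) for i in range(2)]
    print(by_rows, by_columns)
    print(hex(element_address(0x10010000, row_major_offset(1, 0, 3))))
```

Under row-major ordering the element in row 1, column 0 of this matrix is the fourth word stored, so it begins 12 bytes after the base address.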

FIGURE 10. Data directives and resulting storage pattern

    .data
    .word 24
    .byte 7
    .asciiz "help"

    address       contents (bytes in increasing address order)
    0x10010000    0x18 0x00 0x00 0x00
    0x10010004    0x07 0x68 0x65 0x6c
    0x10010008    0x70 0x00

FIGURE 11. Data directives and resulting storage pattern: a second example

    .data
    .word 24
    .byte 7
    .asciiz "help"
    .align 2
    .word 26

    address       contents (bytes in increasing address order)
    0x10010000    0x18 0x00 0x00 0x00
    0x10010004    0x07 0x68 0x65 0x6c
    0x10010008    0x70 0x00 (two bytes skipped by .align 2)
    0x1001000c    0x1a 0x00 0x00 0x00

You might also like

- Unit - 5 Increasing The Memory SizeDocument14 pagesUnit - 5 Increasing The Memory SizeKuldeep SainiNo ratings yet

- Memory SystemsDocument93 pagesMemory SystemsdeivasigamaniNo ratings yet

- CAO AssignmentDocument44 pagesCAO AssignmentAbhishek Dixit40% (5)

- Unit 4Document61 pagesUnit 4ladukhushi09No ratings yet

- MEMORY SYSTEM OVERVIEWDocument68 pagesMEMORY SYSTEM OVERVIEWshiva100% (1)

- Expanding Memory SizeDocument12 pagesExpanding Memory SizeFelistus KavuuNo ratings yet

- COA Mod4Document71 pagesCOA Mod4Boban MathewsNo ratings yet

- CO - MODULE - 2 (A) - Memory-SystemDocument78 pagesCO - MODULE - 2 (A) - Memory-Systemddscraft123No ratings yet

- 5-4 - Chapter 8 - HW 5 - Exercises Logic_and_Computer_Design_Fundamentals_-_4th_International_Edition(2)Document2 pages5-4 - Chapter 8 - HW 5 - Exercises Logic_and_Computer_Design_Fundamentals_-_4th_International_Edition(2)David SwarcheneggerNo ratings yet

- Lecture-5 Development of Memory ChipDocument82 pagesLecture-5 Development of Memory ChipNaresh Kumar KalisettyNo ratings yet

- Chapter 5-The Memory SystemDocument84 pagesChapter 5-The Memory SystemupparasureshNo ratings yet

- COA Module 4 BEC306CDocument16 pagesCOA Module 4 BEC306Csachinksr007No ratings yet

- Mc9211unit 5 PDFDocument89 pagesMc9211unit 5 PDFsanthoshNo ratings yet

- comexam2مهمDocument28 pagescomexam2مهمHappy JavaNo ratings yet

- Memory BasicsDocument25 pagesMemory Basicsrajivsharma1610No ratings yet

- Microprocessor Microcontroller EXAM 2021 MGDocument11 pagesMicroprocessor Microcontroller EXAM 2021 MGRene EBUNLE AKUPANNo ratings yet

- Computer OrganizationDocument32 pagesComputer Organizationy22cd125No ratings yet

- CO Module3Document39 pagesCO Module3pavanar619No ratings yet

- Computer Basics Study GuideDocument11 pagesComputer Basics Study GuidePrerak DedhiaNo ratings yet

- COA Module4Document35 pagesCOA Module4puse1223No ratings yet

- Dlco Unit 5 PDFDocument24 pagesDlco Unit 5 PDFKarunya MannavaNo ratings yet

- COA AssignmentDocument8 pagesCOA AssignmentVijay SankarNo ratings yet

- Lec2 MemDocument12 pagesLec2 MemparagNo ratings yet

- Lesson 1Document9 pagesLesson 1Marcos VargasNo ratings yet

- Set7 Memory InterfacingDocument32 pagesSet7 Memory Interfacing666667No ratings yet

- Memory Organization CAODocument11 pagesMemory Organization CAOArslan AliNo ratings yet

- DE Unit 5Document54 pagesDE Unit 5sachinsahoo147369No ratings yet

- Unit3 CRRDocument29 pagesUnit3 CRRadddataNo ratings yet

- Understanding Memory SystemsDocument27 pagesUnderstanding Memory SystemsSRUTHI. SNo ratings yet

- 5.5 Connecting Memory Chips To A Computer BusDocument11 pages5.5 Connecting Memory Chips To A Computer BusMohd Zahiruddin ZainonNo ratings yet

- CO UNIT-5 NotesDocument22 pagesCO UNIT-5 NotesAmber HeardNo ratings yet

- Answer For Tutorial 2Document6 pagesAnswer For Tutorial 2AndySetiawanNo ratings yet

- Chapter 5-The Memory SystemDocument80 pagesChapter 5-The Memory SystemPuneet BansalNo ratings yet

- The Memory System: Fundamental ConceptsDocument115 pagesThe Memory System: Fundamental ConceptsRajdeep ChatterjeeNo ratings yet

- Computer architecture, volatile memory and digital logic explainedDocument10 pagesComputer architecture, volatile memory and digital logic explainedKudakwasheNo ratings yet

- Week 5: Assignment SolutionsDocument3 pagesWeek 5: Assignment SolutionsRoshan RajuNo ratings yet

- BT Internal Memory deDocument4 pagesBT Internal Memory dePhạm Tiến AnhNo ratings yet

- Chepter 12Document10 pagesChepter 12To KyNo ratings yet

- TutoriaL IIIT Allahabad On MemoryDocument13 pagesTutoriaL IIIT Allahabad On MemoryishtiyaqNo ratings yet

- BT 0068Document10 pagesBT 0068Mrinal KalitaNo ratings yet

- Cs501 Finalterm Paper 31 July 2013: All Mcqs From My Paper (New + Old)Document7 pagesCs501 Finalterm Paper 31 July 2013: All Mcqs From My Paper (New + Old)Faisal Abbas BastamiNo ratings yet

- Module-4 Memory and Programmable Logic: Read-Only Memory (Rom)Document32 pagesModule-4 Memory and Programmable Logic: Read-Only Memory (Rom)Yogiraj TiwariNo ratings yet

- A 64 Bit Static RAM: Row Selection CircuitDocument7 pagesA 64 Bit Static RAM: Row Selection CircuitChristopher PattersonNo ratings yet

- Memory Address DecodingDocument25 pagesMemory Address DecodingEkansh DwivediNo ratings yet

- Unit 6 Memory OrganizationDocument24 pagesUnit 6 Memory OrganizationAnurag GoelNo ratings yet

- 06 Computer Architecture PDFDocument4 pages06 Computer Architecture PDFgparya009No ratings yet

- COA Assignment: Ques 1) Describe The Principles of Magnetic DiskDocument8 pagesCOA Assignment: Ques 1) Describe The Principles of Magnetic DiskAasthaNo ratings yet

- Lecture 6Document10 pagesLecture 6Elmustafa Sayed Ali AhmedNo ratings yet

- CamDocument29 pagesCamMary BakhoumNo ratings yet

- Computer Architecture and Organization Assignment IIDocument2 pagesComputer Architecture and Organization Assignment IIDEMEKE BEYENENo ratings yet

- Memory Interfacing 8086 Good and UsefulDocument39 pagesMemory Interfacing 8086 Good and UsefulSatish GuptaNo ratings yet

- Pentium CacheDocument5 pagesPentium CacheIsmet BibićNo ratings yet

- Computer OrganizationDocument8 pagesComputer Organizationmeenakshimeenu480No ratings yet

- Architecture: MDCT CoreDocument5 pagesArchitecture: MDCT Coregpa0007No ratings yet

- Memory Unit Bindu AgarwallaDocument62 pagesMemory Unit Bindu AgarwallaYogansh KesharwaniNo ratings yet

- Practical Reverse Engineering: x86, x64, ARM, Windows Kernel, Reversing Tools, and ObfuscationFrom EverandPractical Reverse Engineering: x86, x64, ARM, Windows Kernel, Reversing Tools, and ObfuscationNo ratings yet

- Master System Architecture: Architecture of Consoles: A Practical Analysis, #15From EverandMaster System Architecture: Architecture of Consoles: A Practical Analysis, #15No ratings yet

- Modern Introduction to Object Oriented Programming for Prospective DevelopersFrom EverandModern Introduction to Object Oriented Programming for Prospective DevelopersNo ratings yet

- SNES Architecture: Architecture of Consoles: A Practical Analysis, #4From EverandSNES Architecture: Architecture of Consoles: A Practical Analysis, #4No ratings yet

- 2022 Annual Report-E PDFDocument325 pages2022 Annual Report-E PDFF550VNo ratings yet

- WEG srw01 Smart Relay User Manual 10000445295 6.0x Manual EnglishDocument159 pagesWEG srw01 Smart Relay User Manual 10000445295 6.0x Manual EnglishMichael adu-boahenNo ratings yet

- Intelligent Solar Charge Controller User's Manual Solar 30: Read/DownloadDocument3 pagesIntelligent Solar Charge Controller User's Manual Solar 30: Read/DownloadJandrew Gomez DimapasocNo ratings yet

- M61 Guide en-USDocument2 pagesM61 Guide en-USJuan OlivaNo ratings yet

- Bayes Theorem 2Document14 pagesBayes Theorem 2Muhammad YahyaNo ratings yet

- Introduction To Direct Digital Control Systems: Purpose of This GuideDocument82 pagesIntroduction To Direct Digital Control Systems: Purpose of This GuidekdpmansiNo ratings yet

- Network Theory - Coupled CircuitsDocument6 pagesNetwork Theory - Coupled CircuitsHarsh GajjarNo ratings yet

- Electronics and Communication Engineering Semester 3Document154 pagesElectronics and Communication Engineering Semester 3Vinitha SankerNo ratings yet

- Storz Xenon 300 Light Source - Service ManualDocument75 pagesStorz Xenon 300 Light Source - Service ManualISRAEL MACEDONo ratings yet

- Kioxia Thgbmtg5d1lbail 15nm 4gb E-Mmc Ver5.0 e Rev.1.0 20211007Document30 pagesKioxia Thgbmtg5d1lbail 15nm 4gb E-Mmc Ver5.0 e Rev.1.0 20211007Duc Nguyen TruongNo ratings yet

- Operating Instructions: SR-SAT102 SR-SAT182Document18 pagesOperating Instructions: SR-SAT102 SR-SAT182EmaxxSeverusNo ratings yet

- Model 1540: Digital 3-Axis Fluxgate MagnetometerDocument2 pagesModel 1540: Digital 3-Axis Fluxgate MagnetometerThomas ThomasNo ratings yet

- SF DumpDocument13 pagesSF DumpMJ Aranda beniNo ratings yet

- Tronxy X5 Assemble English User ManualDocument16 pagesTronxy X5 Assemble English User ManualPhil Hall0% (1)

- Transformer Connections: Powerpoint PresentationDocument20 pagesTransformer Connections: Powerpoint PresentationfahiyanNo ratings yet

- Foxess 10,5K G10500 220VDocument2 pagesFoxess 10,5K G10500 220Valex reisNo ratings yet

- CL5 BrochureDocument6 pagesCL5 BrochureWilliamNo ratings yet

- IGCSE - Physics - Lesson Plan 6 - ElectricityDocument3 pagesIGCSE - Physics - Lesson Plan 6 - ElectricityHoracio FerrándizNo ratings yet

- Ecb-Vavs SPDocument5 pagesEcb-Vavs SPRodrigo VerniniNo ratings yet

- Service Manual: PP P PDocument110 pagesService Manual: PP P PEdson LimaNo ratings yet

- Schematic TransmitterDocument1 pageSchematic TransmitterMario Leonel Torres MartinezNo ratings yet

- Invertek P2 Advanced GuideDocument73 pagesInvertek P2 Advanced GuideAri SutejoNo ratings yet

- 1.1 Certificado de Calidad Modulo de Control 4090-9002Document10 pages1.1 Certificado de Calidad Modulo de Control 4090-9002Diana V. Rosales100% (1)

- JKM590 615N 66HL4M BDV F1C1 enDocument2 pagesJKM590 615N 66HL4M BDV F1C1 enLuka KosticNo ratings yet

- Single Family DwellingDocument27 pagesSingle Family Dwellingjayson platinoNo ratings yet

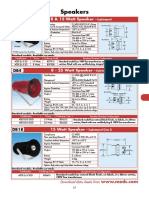

- Explosionproof and Weatherproof SpeakersDocument4 pagesExplosionproof and Weatherproof Speakersparallax1957No ratings yet

- MPPT Arduino ATMega8 Solar Charge Controller Ver01 Schematics PCBDocument3 pagesMPPT Arduino ATMega8 Solar Charge Controller Ver01 Schematics PCBBayu SasongkoNo ratings yet

- To Study The Characteristics of A Common Emitter NPN (Or PNP) Transistor & To Find Out The Values of Current & Voltage GainsDocument11 pagesTo Study The Characteristics of A Common Emitter NPN (Or PNP) Transistor & To Find Out The Values of Current & Voltage GainsAman AhamadNo ratings yet

- MXL User ManualDocument45 pagesMXL User ManualOld Customs Cars ServicesNo ratings yet

- ABU Robocon 2023 RulebookDocument9 pagesABU Robocon 2023 RulebookRelif MarbunNo ratings yet