
A Color Correction System using a Color Compensation Chart

Seok-Han Lee, Tae-Yong Kim, and Jong-Soo Choi

Dept. of Image Engineering, Graduate School of Advanced Imaging Science, Multimedia, and Film, Chung-Ang University, DongJak-Ku, Seoul 156-756, Republic of Korea

{ichthus, tykim, jschoi}@imagelab.cau.ac.kr

Abstract

In this paper, we propose a color correction system using a color compensation chart. The implementation target of the proposed system is the color image captured by a digital imaging device. To estimate the transformation between the colors in the image and the reference colors, the system introduces a color compensation chart designed for this purpose. The color correction process of the proposed system consists of two steps, i.e., the profile creation process and the profile application process. During the profile creation process, the relationships between the captured colors and the reference colors are estimated, and the system creates a color profile in which the estimation result is embedded. During the profile application process, the colors in images captured under the same conditions as the chart image are corrected using the created color profile. To evaluate the performance of the system, we perform experiments under various conditions and compare the results with those of widely used commercial applications.

1. Introduction

With the advance of digital imaging devices such as digital cameras, their ability to represent accurate colors has become an important issue. However, accurate color representation is a difficult problem for digital imaging devices, because color images of unknown objects are captured under various unknown conditions, such as the illumination. Furthermore, the color distributions of captured images also depend strongly on the characteristics of the imaging devices.

In this paper, we propose a color correction system using a color compensation chart. The implementation target of the proposed system is the color image captured by a digital imaging device such as a digital camera or a digital scanner. The proposed system uses a color compensation chart designed for it to estimate the relationship between the colors in the image and the reference colors. The color correction process consists of two steps, i.e., the profile creation process and the profile application process. During the profile creation process, the relationships between the captured colors and the reference colors are estimated, and the system creates a color profile and embeds the estimation result in it. During the profile application process, the colors in images captured under the same conditions as the chart image are corrected using the created color profile.

2006 International Conference on Hybrid Information Technology (ICHIT'06)

0-7695-2674-8/06 $20.00 2006

Fig. 1. The block diagram for the proposed system.

2. System description

We adopted the concepts of the color management system (CMS) and the profile connection space (PCS) to realize the proposed system. Fig. 1 shows the block diagram of the proposed system [2]. First, an image of the color compensation chart is captured, and the relationship between the colors of the patches in the captured chart image and the internal reference colors is estimated. After the estimation, the system creates an ICC color profile and embeds the relationship in the profile. The created profile is then used as the source profile, as in Fig. 1, to correct the colors of other images captured under the same conditions as the chart image.

Fig. 2. The color compensation chart and its gamut. (a) The color compensation chart. (b) Color gamuts considered to determine the patch colors.

Fig. 3. Indices of the patches.
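To make the data flow of Fig. 1 concrete, here is a rough sketch of what "using the created profile as the source profile" amounts to for a single pixel. It anticipates section 4, where the profile turns out to hold three per-channel tone reproduction curves (TRCs) and one 3×3 matrix. The class `MatrixTrcProfile`, the functions `lookup` and `apply_profile`, and the linear interpolation between LUT entries are our own illustrative assumptions, not the authors' implementation. Mapping the resulting PCS XYZ value to an output device would be the job of a destination profile, which is not shown.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class MatrixTrcProfile:
    """Hypothetical in-memory form of a matrix/TRC profile (not an ICC file parser)."""
    trcs: Tuple[Sequence[float], Sequence[float], Sequence[float]]  # sampled TRCs for R, G, B
    matrix: Sequence[Sequence[float]]  # 3x3 matrix from tone-corrected RGB to PCS XYZ


def lookup(lut: Sequence[float], x: float) -> float:
    """Evaluate a 1-D LUT sampled uniformly on [0, 1], interpolating linearly."""
    x = min(max(x, 0.0), 1.0)  # clamp the normalized input
    pos = x * (len(lut) - 1)
    lo = int(math.floor(pos))
    hi = min(lo + 1, len(lut) - 1)
    return lut[lo] + (lut[hi] - lut[lo]) * (pos - lo)


def apply_profile(profile: MatrixTrcProfile, rgb: Sequence[float]) -> Tuple[float, float, float]:
    """Apply the TRCs channel by channel, then the 3x3 matrix."""
    toned = [lookup(lut, c) for lut, c in zip(profile.trcs, rgb)]
    x, y, z = (sum(m * v for m, v in zip(row, toned)) for row in profile.matrix)
    return (x, y, z)


# Usage: identity TRCs and an identity matrix leave a normalized pixel unchanged.
identity = [0.0, 1.0]
p = MatrixTrcProfile(trcs=(identity, identity, identity), matrix=[[1, 0, 0], [0, 1, 0], [0, 0, 1]])
print(apply_profile(p, (0.2, 0.4, 0.6)))  # -> (0.2, 0.4, 0.6)
```

The TRC-then-matrix order mirrors the usual structure of ICC matrix/TRC profiles. It also matches the order the paper later describes for the profile application process.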

3. Color compensation chart for the proposed system

For the proposed system, we designed the color

compensation chart as shown in Fig.2(a). This chart

provides the reference colors for the estimation of the

relationship between the colors in the image and the

actual colors. By the relationship, every color in the

captured image is transformed into the actual color.

Eight patches located in the center area of the chart are

used to create TRCs. The leftmost three patches in the

compensation chart provide the primary colors of RGB.

The colors of the patches were determined based on

the most general colors observed in common

environment and general skin colors of Korean people

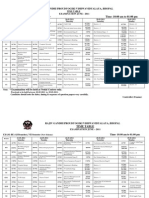

Table.1. Patch colors.

L*

71.18

89.39

70.58

43.35

31.98

71.18

a*

-0.37

6.63

22.04

32.32

19.46

-0.37

b*

-0.37

6.61

11.04

19.83

6.13

-0.37

L*

38.00

87.14

84.24

54.57

41.52

42.49

a*

43.71

1.74

10.57

5.95

5.90

11.50

b*

20.96

27.87

52.94

48.67

24.54

18.55

L*

56.08

88.11

71.28

55.22

36.40

44.94

-12.54

-24.66

-24.62

-13.43

-17.49

-0.29

a* -37.07

b*

15.32

2.66

16.32

8.28

5.23

L*

39.58

95.50

88.70

81.15

62.53

47.85

a*

1.60

0.23

-0.04

-0.78

-0.13

40.08

36.62

b* -35.32

L*

60.05

a* -16.50

0.25

-0.01

-0.28

-0.39

79.51

40.96

33.70

23.12

53.35

0.66

-0.61

0.38

0.14

-16.33

b*

25.63

0.43

-0.40

2.96

-0.25

-5.03

L*

52.33

86.78

77.20

63.91

34.27

83.54

a*

41.92

-4.71

-10.11

-13.44

-10.63

-10.32

b*

-1.53

-0.60

11.32

-7.68

-16.13

-9.65

L*

53.54

88.13

80.36

50.60

29.96

38.07

a*

5.95

1.86

4.88

25.15

12.62

-6.50

b*

77.19

0.59

-7.76

-20.52

-17.88

-15.08

L*

71.18

80.54

74.88

67.73

43.10

71.18

a*

-0.37

9.34

10.64

12.13

24.62

-0.37

b*

-0.37

15.13

18.04

19.17

26.85

-0.37

2006 International Conference on Hybrid Information Technology (ICHIT'06)

0-7695-2674-8/06 $20.00 2006

Fig. 4. Profile creation process.

[6, 7]. The color gamuts of widely used digital cameras

were also considered, because the proposed system is

for the images captured by digital cameras. Fig.2(b)

shows the color gamuts of the compensation chart and

the considered digital cameras. The + marks in

Fig.2(b) denote the patch colors, and they were

adopted to be located in the considered gamuts. Table

1 shows the L*a*b* values of the patches in the chart,

and Fig.3 shows the index of every patch which is used

in the entire process.

4. Color correction algorithm for the proposed system

4.1. Profile creation process.

Fig. 4 shows the block diagram of the color profile creation process. The first step of the profile creation process is the TRC estimation. Next, the colors in the RGB space are converted into the CIEXYZ color space. Then the transformation that maps these colors to the correct colors is estimated. Finally, the results of the estimations are embedded in the ICC profile.

Fig. 5. Results of the TRC estimation process. (a) Result of curve regression: captured, fitted, and reference normalized color values for patches (4, 4) through (3, 1). (b) TRCs for each channel (red, green, blue): normalized output color versus normalized input color.

4.1.1. Estimation of the tone reproduction curves. The eight patches located in the center area of the chart are used for the TRC estimation process (i.e., from (3, 1) to (3, 4) and from (4, 1) to (4, 4) in Fig. 3). For each of the R, G, and B channels, a function that fits the color values of these eight patches in the image to those in the internal reference chart is estimated, and this function is used as the TRC. Because the process is performed independently in each channel, it yields three TRCs. In this paper, we apply curve regression to fit the color values of the eight patches to those of the internal reference chart as follows [11]:

$$g(x) = a_1 f_1(x) + a_2 f_2(x) + a_3 f_3(x) + a_4 f_4(x), \quad 0 \le x \le 1, \qquad f_1(x) = 1,\ f_2(x) = x,\ f_3(x) = \sin(x),\ f_4(x) = \exp(x), \tag{1}$$

where the $a_n$ are undetermined coefficients and $x$ is the color of the patch, normalized to the range 0.0 to 1.0. The coefficients in Eq. (1) are determined to minimize the sum of the squared differences between the fitted color values of the eight patches in the image and those of the internal reference chart:

$$R = \sum_{i=1}^{8} r_i^2 = \sum_{i=1}^{8} \left[ y_i - \sum_{n=1}^{4} a_n f_n(x_i) \right]^2, \tag{2}$$

where $x_i$ and $y_i$ are the normalized values of the $i$-th patch in the captured image and in the internal reference chart, respectively, and $r_i$ is the corresponding residual.

This estimation is performed for each of the R, G, and B channels. Fig. 5 shows the result of the TRC estimation process: Fig. 5(a) is the result of the curve regression, and Fig. 5(b) shows the TRCs for the R, G, and B channels. Regression errors of the patches used for the regression are shown in Table 1. The TRCs are stored in the created ICC profile for the profile application process, as described in section 4.1.4.
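The curve regression of Eqs. (1) and (2) is an ordinary linear least-squares problem in $a_1, \ldots, a_4$, so it can be sketched in a few lines. The version below solves the normal equations with Gaussian elimination. That is our choice for a dependency-free illustration; the paper does not say how it solves the system. The function names and the sample patch values are hypothetical.

```python
import math

# Basis functions of Eq. (1): f1 = 1, f2 = x, f3 = sin(x), f4 = exp(x)
BASIS = (lambda x: 1.0, lambda x: x, math.sin, math.exp)


def solve_linear(a, b):
    """Solve a small dense system a z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * z[c] for c in range(r + 1, n))
        z[r] = (m[r][n] - s) / m[r][r]
    return z


def fit_trc(captured, reference):
    """Coefficients a1..a4 minimizing Eq. (2) for one channel.

    captured, reference: normalized (0..1) values of the gray patches.
    """
    rows = [[f(x) for f in BASIS] for x in captured]  # F[i][n] = f_n(x_i)
    k = len(BASIS)
    ata = [[sum(r[p] * r[q] for r in rows) for q in range(k)] for p in range(k)]
    aty = [sum(r[p] * y for r, y in zip(rows, reference)) for p in range(k)]
    return solve_linear(ata, aty)  # normal equations (F^T F) a = F^T y


def eval_trc(coeffs, x):
    """Evaluate g(x) of Eq. (1)."""
    return sum(a * f(x) for a, f in zip(coeffs, BASIS))


# Hypothetical normalized values for the eight gray patches of one channel
captured = [0.08, 0.17, 0.29, 0.41, 0.55, 0.68, 0.81, 0.92]
reference = [0.05, 0.15, 0.28, 0.42, 0.57, 0.70, 0.83, 0.95]
coeffs = fit_trc(captured, reference)
print([round(eval_trc(coeffs, x), 3) for x in captured])
```

Because 1, x, sin x, and exp x are nearly collinear on [0, 1], the normal equations are moderately ill-conditioned. A QR- or SVD-based solver would be the more robust choice for real data.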

4.1.2. Color space conversion. As described in section 2, the system adopts the PCS and CMS. They were defined by the ICC to provide a reference color space that serves as a common interface for color specification between different devices. Accordingly, every process in the PCS is independent of the kind of device [2]. A device-independent color space such as CIEXYZ or CIELAB is employed as the PCS, and the proposed system uses the XYZ color space. Therefore, the RGB colors of the patches in the image must be converted into the XYZ color space before the transformation is estimated. However, several conditions must be considered for this color space conversion. As defined in the specification ICC.1:2004-10 [2], the PCS is relative to a specific illuminant (i.e., D50). Moreover, the connection between the PCS and the device color space must be considered. Therefore, the following conditions are considered to estimate the color conversion matrix:

1) Reference white of the PCS: The PCS defined in the specification ICC.1:2004-10 is based on the illuminant D50. Therefore, the reference white point in the PCS should be equal to D50 (i.e., X = 0.9642, Y = 1.0, Z = 0.8249).

2) Device color space: We employed sRGB as the device color space. The sRGB space is the standard device color space for most digital imaging and display devices, and it is defined based on the illuminant D65.

3) Chromatic adaptation transformation: Because the illuminant of the device color space differs from that of the PCS, a chromatic adaptation method is required for the color space conversion. We used the linear Bradford model for the chromatic adaptation transformation, as recommended by the specification ICC.1:2004-10 [1, 2].

By conditions 1) and 2), we get the color space conversion matrix that maps a color in the RGB space to the XYZ space as follows:

$$M_{cvt} = \begin{bmatrix} 0.412424 & 0.357579 & 0.180464 \\ 0.212656 & 0.715158 & 0.072186 \\ 0.019332 & 0.119193 & 0.950444 \end{bmatrix}. \tag{3}$$

Table 3. ICC profile tags for the system.

By condition 3), we obtain the chromatic adaptation transformation matrix $M_{adapt}$ as follows:

$$M_{adapt} = \begin{bmatrix} 1.047835 & 0.022897 & -0.050147 \\ 0.029556 & 0.990481 & -0.017056 \\ -0.009238 & 0.015050 & 0.752034 \end{bmatrix}. \tag{4}$$
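A matrix like Eq. (4) comes from the linear Bradford model. It transforms XYZ into Bradford cone responses, scales each response by the ratio between the destination white and the source white, and transforms back: $M_{adapt} = M_{BFD}^{-1}\,\mathrm{diag}(\rho_D/\rho_S, \gamma_D/\gamma_S, \beta_D/\beta_S)\,M_{BFD}$. The sketch below uses the standard published Bradford cone matrix. It assumes the D65 and D50 white values (0.95047, 1.0, 1.08883) and (0.96422, 1.0, 0.82521). The paper does not state which white values it used, so this sketch agrees with Eq. (4) only to about four decimal places.

```python
# Standard linear Bradford cone-response matrix
BRADFORD = [
    [0.8951, 0.2664, -0.1614],
    [-0.7502, 1.7135, 0.0367],
    [0.0389, -0.0685, 1.0296],
]

D65 = [0.95047, 1.0, 1.08883]  # assumed XYZ of the D65 white (source, sRGB)
D50 = [0.96422, 1.0, 0.82521]  # assumed XYZ of the D50 white (destination, PCS)


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(a, v):
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


def bradford_adaptation(src_white, dst_white):
    """Linear Bradford CAT: M^-1 * diag(cone_dst / cone_src) * M."""
    cs = matvec(BRADFORD, src_white)
    cd = matvec(BRADFORD, dst_white)
    scale = [[cd[i] / cs[i] if i == j else 0.0 for j in range(3)] for i in range(3)]
    return matmul(inverse3(BRADFORD), matmul(scale, BRADFORD))


m_adapt = bradford_adaptation(D65, D50)
for row in m_adapt:
    print(["%.6f" % v for v in row])
```

By construction, the resulting matrix maps the source white exactly onto the destination white. That is the property condition 3) relies on.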

From Eq. (3) and Eq. (4), the color space conversion matrix $M_{CVS}$ for the proposed system is obtained as follows:

$$M_{CVS} = M_{adapt} M_{cvt} = \begin{bmatrix} 0.436052 & 0.385082 & 0.143087 \\ 0.222492 & 0.716886 & 0.060621 \\ 0.013929 & 0.097097 & 0.714185 \end{bmatrix}. \tag{5}$$
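The arithmetic of Eq. (5) can be checked directly. The only assumption is the signs of the chromatic adaptation matrix. Its entries at positions (1, 3), (2, 3), and (3, 1) must be negative, as in the standard Bradford D65-to-D50 matrix, and only with those signs does the product reproduce the printed $M_{CVS}$. The sketch also checks condition 1): because each row of $M_{CVS}$ sums to one coordinate of the image of device white (R = G = B = 1), those row sums should be close to D50.

```python
M_CVT = [  # Eq. (3): linear sRGB (D65) -> XYZ
    [0.412424, 0.357579, 0.180464],
    [0.212656, 0.715158, 0.072186],
    [0.019332, 0.119193, 0.950444],
]
M_ADAPT = [  # Eq. (4): Bradford D65 -> D50, with negative off-diagonal terms
    [1.047835, 0.022897, -0.050147],
    [0.029556, 0.990481, -0.017056],
    [-0.009238, 0.015050, 0.752034],
]
M_CVS = [  # Eq. (5) as printed
    [0.436052, 0.385082, 0.143087],
    [0.222492, 0.716886, 0.060621],
    [0.013929, 0.097097, 0.714185],
]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


product = matmul(M_ADAPT, M_CVT)
max_err = max(abs(product[i][j] - M_CVS[i][j]) for i in range(3) for j in range(3))
white_xyz = [sum(row) for row in product]  # XYZ of device white R = G = B = 1
print(max_err, white_xyz)
```

The row sums come out near (0.9642, 1.0, 0.8252). The small difference from the ICC value Z = 0.8249 reflects the white values used when $M_{adapt}$ was derived.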

4.1.3. Correction of the image color in the PCS. The colors of the image are corrected by a linear transformation that maps the colors of the image to the reference colors in the PCS. The transformation is estimated as follows:

$$M_{XYZ} = M_{Crr} M'_{XYZ},$$

$$\begin{bmatrix} X_1 & X_2 & \cdots & X_N \\ Y_1 & Y_2 & \cdots & Y_N \\ Z_1 & Z_2 & \cdots & Z_N \end{bmatrix} = \begin{bmatrix} m_{11} & m_{12} & m_{13} \\ m_{21} & m_{22} & m_{23} \\ m_{31} & m_{32} & m_{33} \end{bmatrix} \begin{bmatrix} X'_1 & X'_2 & \cdots & X'_N \\ Y'_1 & Y'_2 & \cdots & Y'_N \\ Z'_1 & Z'_2 & \cdots & Z'_N \end{bmatrix}, \tag{6}$$

where $X_i$, $Y_i$, and $Z_i$ denote the colors of the reference chart, and $X'_i$, $Y'_i$, and $Z'_i$ are those of the color patches in the chart image. $M_{Crr}$ is the transformation matrix that maps the colors of the image to the reference colors.

Fig. 6. Profile structure.

Tag                      Signature   Data Type
mediaWhitePointTag       wtpt        XYZType
mediaBlackPointTag       bkpt        XYZType
copyrightTag             cprt        multiLocalizedUnicodeType
profileDescriptionTag    desc        multiLocalizedUnicodeType
redMatrixColumnTag       rXYZ        XYZType
greenMatrixColumnTag     gXYZ        XYZType
blueMatrixColumnTag      bXYZ        XYZType
redTRCTag                rTRC        curveType
greenTRCTag              gTRC        curveType
blueTRCTag               bTRC        curveType

Eq. (6) is rearranged so that $M_{Crr}$ can be estimated row by row by SVD (singular value decomposition) as follows:

$$\begin{bmatrix} X_1 \\ X_2 \\ \vdots \\ X_N \end{bmatrix} = \begin{bmatrix} X'_1 & Y'_1 & Z'_1 \\ X'_2 & Y'_2 & Z'_2 \\ \vdots & \vdots & \vdots \\ X'_N & Y'_N & Z'_N \end{bmatrix} \begin{bmatrix} m_{11} \\ m_{12} \\ m_{13} \end{bmatrix}, \tag{7}$$

$$\begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_N \end{bmatrix} = \begin{bmatrix} X'_1 & Y'_1 & Z'_1 \\ X'_2 & Y'_2 & Z'_2 \\ \vdots & \vdots & \vdots \\ X'_N & Y'_N & Z'_N \end{bmatrix} \begin{bmatrix} m_{21} \\ m_{22} \\ m_{23} \end{bmatrix}, \tag{8}$$

$$\begin{bmatrix} Z_1 \\ Z_2 \\ \vdots \\ Z_N \end{bmatrix} = \begin{bmatrix} X'_1 & Y'_1 & Z'_1 \\ X'_2 & Y'_2 & Z'_2 \\ \vdots & \vdots & \vdots \\ X'_N & Y'_N & Z'_N \end{bmatrix} \begin{bmatrix} m_{31} \\ m_{32} \\ m_{33} \end{bmatrix}. \tag{9}$$
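Eqs. (7) to (9) are three overdetermined linear systems that share the same coefficient matrix (rows $X'_i, Y'_i, Z'_i$) and differ only in the right-hand side. The paper solves them with SVD. The sketch below swaps in the normal equations solved by Cramer's rule. This gives the same least-squares solution whenever the captured patch colors span all three dimensions, but it is less robust numerically. A production version could use an SVD-based least-squares routine (for example `numpy.linalg.lstsq`). The function names are hypothetical.

```python
def det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve a 3x3 linear system a z = b by Cramer's rule."""
    d = det3(a)
    out = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        out.append(det3(m) / d)
    return out


def estimate_correction_matrix(captured_xyz, reference_xyz):
    """Least-squares M_Crr with reference ~= M_Crr @ captured (Eqs. 6-9).

    captured_xyz: list of (X', Y', Z') patch colors from the chart image.
    reference_xyz: list of (X, Y, Z) reference colors, in the same order.
    """
    # A^T A is shared by all three systems; A has rows (X'_i, Y'_i, Z'_i)
    ata = [[sum(p[r] * p[c] for p in captured_xyz) for c in range(3)] for r in range(3)]
    rows = []
    for k in range(3):  # k = 0: Eq. (7) for X, 1: Eq. (8) for Y, 2: Eq. (9) for Z
        atb = [sum(p[r] * q[k] for p, q in zip(captured_xyz, reference_xyz)) for r in range(3)]
        rows.append(solve3(ata, atb))  # (m_k1, m_k2, m_k3)
    return rows
```

With noise-free data generated from a known matrix, the estimate reproduces that matrix. With real patch measurements, it returns the matrix that minimizes the squared XYZ error over all N patches.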

4.1.4. ICC color profile created by the system. The estimated relationship for correcting the image color is embedded in the ICC color profile, which must contain all the necessary elements in a single file. Fig. 6 shows the structure of the ICC profile, and Table 3 shows the tags used in the ICC profile created by the system. These tags are defined in the specification ICC.1:2004-10 [2]. The leftmost column gives the tag name, the middle column denotes the signature embedded in the profile to identify the tag, and the rightmost column gives the data type defined in the specification for each tag.
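To make the Data Type column of Table 3 concrete, the following sketch serializes the two tag types that carry the estimation results. It follows the ICC.1:2004-10 layouts as we understand them. An XYZType holds the signature 'XYZ ', four reserved bytes, and big-endian s15Fixed16Number values. A curveType holds 'curv', four reserved bytes, a uInt32 entry count, and big-endian uInt16 entries. The function names are hypothetical. The tag table, 4-byte tag padding, and the profile header are omitted. `sample_trc` follows Eq. (12); we assume that "1024 samples" means N_S = 1023, so that i = 0, ..., N_S yields 1024 entries.

```python
import struct


def s15fixed16(value):
    """Encode a float as an ICC s15Fixed16Number (signed, 16 fractional bits)."""
    return int(round(value * 65536))


def encode_xyz_type(x, y, z):
    """XYZType element: 'XYZ ' signature, 4 reserved zero bytes, one XYZNumber."""
    return struct.pack(">4s4x3i", b"XYZ ", s15fixed16(x), s15fixed16(y), s15fixed16(z))


def encode_curve_type(samples):
    """curveType element: 'curv', 4 reserved bytes, uInt32 count, uInt16 entries."""
    entries = [int(round(min(max(v, 0.0), 1.0) * 65535)) for v in samples]
    header = struct.pack(">4s4xI", b"curv", len(entries))
    return header + struct.pack(">%dH" % len(entries), *entries)


def sample_trc(g, n_entries=1024):
    """Sample g(x) at x_i = i / N_S for i = 0..N_S (Eq. 12), with N_S = n_entries - 1."""
    ns = n_entries - 1
    return [g(i / ns) for i in range(n_entries)]


# Usage: a D50 media white point and an identity TRC
wtpt = encode_xyz_type(0.9642, 1.0, 0.8249)
rtrc = encode_curve_type(sample_trc(lambda x: x))
print(len(wtpt), len(rtrc))  # -> 20 2060
```

The matrix-column tags (rXYZ, gXYZ, bXYZ) would each be one `encode_xyz_type` call on a column of the combined matrix. The three TRC tags would each be one `encode_curve_type` call on a sampled g(x).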

1) mediaWhitePointTag and mediaBlackPointTag: The reference white and the reference black are encoded in these tags, respectively. In the proposed system, the chromatic adaptation transformation is applied to the color space conversion matrix, and the ICC specification prescribes that the mediaWhitePointTag values for display devices be equal to the illuminant D50. Therefore, the mediaWhitePointTag of the proposed system is set to the values of D50. The mediaBlackPointTag is set to the reference color of black (i.e., X = 0, Y = 0, Z = 0).

2) redMatrixColumnTag, greenMatrixColumnTag, and blueMatrixColumnTag: These tags are intended for the transform from the device color space to the PCS. In the proposed system, the color space conversion matrix multiplied by the color correction matrix is embedded in redMatrixColumnTag, greenMatrixColumnTag, and blueMatrixColumnTag as follows:

$$M_T = M_{Crr} M_{CVS} = \begin{bmatrix} t_{11} & t_{12} & t_{13} \\ t_{21} & t_{22} & t_{23} \\ t_{31} & t_{32} & t_{33} \end{bmatrix}, \tag{10}$$

$$\begin{bmatrix} \mathrm{redMatrixColumn}_X \\ \mathrm{redMatrixColumn}_Y \\ \mathrm{redMatrixColumn}_Z \end{bmatrix} = \begin{bmatrix} t_{11} \\ t_{21} \\ t_{31} \end{bmatrix}, \quad \begin{bmatrix} \mathrm{greenMatrixColumn}_X \\ \mathrm{greenMatrixColumn}_Y \\ \mathrm{greenMatrixColumn}_Z \end{bmatrix} = \begin{bmatrix} t_{12} \\ t_{22} \\ t_{32} \end{bmatrix}, \quad \begin{bmatrix} \mathrm{blueMatrixColumn}_X \\ \mathrm{blueMatrixColumn}_Y \\ \mathrm{blueMatrixColumn}_Z \end{bmatrix} = \begin{bmatrix} t_{13} \\ t_{23} \\ t_{33} \end{bmatrix}. \tag{11}$$

3) redTRCTag, greenTRCTag, and blueTRCTag: The TRCs for the R, G, and B channels are stored in redTRCTag, greenTRCTag, and blueTRCTag, respectively. The proposed system employs the curveType data type defined in the ICC specification. For the curveType, a 1-D lookup table (LUT) is established that maps the input color, normalized to the range 0.0 to 1.0, to the output color in the RGB color space as follows:

$$\mathrm{redTRC}[i] = g_R(x_i), \quad \mathrm{greenTRC}[i] = g_G(x_i), \quad \mathrm{blueTRC}[i] = g_B(x_i), \qquad i = 0, 1, 2, \ldots, N_S, \quad x_i = \frac{i}{N_S}. \tag{12}$$

Here, $N_S$ denotes the number of samples of $g(x)$, which is estimated by Eq. (1). In the proposed system, $g(x)$ is sampled at 1024 points in the range 0.0 to 1.0. The subscripts R, G, and B denote the $g(x)$ of the R, G, and B channels, respectively.

4.2. Profile application process

In the profile application process, the image colors are corrected using the relationship embedded in the color profile. Fig. 7 shows the block diagram of the profile application process [2]. First, the tone reproduction curves are applied to the colors of the input image. Then the colors are corrected by the matrix embedded in the created color profile.

Fig. 7. Profile application process.

5. Experimental results

The experiments are performed in two categories. First, the performance of the system is evaluated under various light sources. For this, we use the SpectraLight III by GretagMacbeth, which can produce the CIE standard light sources. The second experiment addresses white balance distortions: we distort the white balance of the captured image using the preset modes of the digital camera used for the experiment.

Table 4. The color differences for the various illumination models.

                  D65    CWF    Horizon   U30    "A"
Original          4.24   8.1    28.4      17.6   21.9
Proposed          5.82   6.91   14.7      9.01   8.51
QP card           4.32   5.38   17.6      7.32   7.92
ProfileMaker 5    3.44   4.42   9.57      6.23   6.88
i1                3.86   4.77   12.4      8.75   9.05

Table 5. Comparison of the color differences for the white balance modes.

                  Sunlight   Shadow   Cloud   Tungsten   Fluorescent
Original          6.94       16.82    9.39    31.2       22.41
Proposed          5.87       6.57     7.59    9.62       9.21
QP card           8.44       8.72     8.57    11.71      11.52
ProfileMaker 5    5.95       6.44     7.68    15.53      14.21
i1                6.13       6.89     7.45    16.29      15.82

Fig. 8. Comparison of the performances of the systems for the illumination models.

The performance of the system is evaluated by the average color difference over all patches. The color difference of each patch is computed as follows:

$$\Delta E^*_{ab} = \left[ (\Delta L^*)^2 + (\Delta a^*)^2 + (\Delta b^*)^2 \right]^{1/2}, \qquad \Delta L^* = L^*_1 - L^*_2, \quad \Delta a^* = a^*_1 - a^*_2, \quad \Delta b^* = b^*_1 - b^*_2, \tag{13}$$

where $L^*_1$, $a^*_1$, and $b^*_1$ represent the captured color, and $L^*_2$, $a^*_2$, and $b^*_2$ denote the internal reference color. The color differences of all patches are averaged, and this average is taken as the color correction error. For each experiment, the performance of the system is compared with those of three commercial applications: ProfileMaker 5 and i1 by GretagMacbeth, and QP Color Kit 1 by QPcard. These systems have their own color charts and their own unique profile formats. The images are captured with a Canon EOS-10D digital camera.
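The error measure of Eq. (13) and its average over patches are simple to compute. Because the system works in XYZ while the evaluation is done in CIELAB, the sketch also includes the standard CIE 1976 XYZ-to-L*a*b* conversion. It uses the D50 PCS white from condition 1) as an assumed reference white. The function names are illustrative, not taken from the paper.

```python
import math

D50_WHITE = (0.9642, 1.0, 0.8249)  # PCS reference white (assumed for the Lab conversion)


def xyz_to_lab(xyz, white=D50_WHITE):
    """CIE 1976 L*a*b* from XYZ, relative to the given reference white."""
    eps = (6 / 29) ** 3

    def f(t):
        return t ** (1 / 3) if t > eps else t / (3 * (6 / 29) ** 2) + 4 / 29

    fx, fy, fz = (f(c / w) for c, w in zip(xyz, white))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def delta_e_ab(lab1, lab2):
    """Eq. (13): Euclidean distance between two L*a*b* colors."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(lab1, lab2)))


def mean_delta_e(pairs):
    """Color correction error: average Delta E*ab over (measured, reference) patch pairs."""
    diffs = [delta_e_ab(a, b) for a, b in pairs]
    return sum(diffs) / len(diffs)


print(delta_e_ab((50.0, 0.0, 0.0), (53.0, 4.0, 0.0)))  # -> 5.0
```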

5.1. Experimental results for the various light sources


Fig. 9. Images for the comparison of the color differences for the white balance distortions.

For the experiments, we consider the CIE standard illumination models generated by the SpectraLight III: D65 (6500 K), CWF (4150 K), Horizon (2300 K), U30 (3100 K), and Standard light A (2856 K). The images are captured under these illumination models, and the color differences are computed in CIELAB space to evaluate the system performance. However, every system considered in the experiments has its own color chart. Therefore, we employ the ColorChecker color chart by GretagMacbeth as the test chart, since it is not used by any of the systems. The experiment is performed as follows. First, the image of each system's native color chart is captured under the illumination model, and each system's color profile for that illumination model is created for the color correction. Then, the image of the test chart of Fig. 10 is captured under the same illumination model as that of each system's native chart image. This test chart image is corrected with the color profile created from the native chart image. Finally, the color correction error of each system for the test chart image is computed and compared.

Fig. 10. The experimental results under the arbitrary white balance modes. (a), (b) Captured and corrected images.

Fig. 11. The experimental results under the arbitrary conditions. (a), (b) Captured and corrected images.

The estimated color differences are shown in Table 4. ProfileMaker 5 gives the lowest color correction error under every illumination model. Nevertheless, the errors of the proposed system are of the same order as those of the other commercial systems. Fig. 8 shows the images of the experimental results.

5.2. Experimental results for the white balance distortions

The white balance of the captured image is distorted with the preset modes of the digital camera. The digital camera used for the experiment can be set to preset white balance values such as 1) Sunlight (5200 K: X = 0.9605, Y = 1.0, Z = 0.8636), 2) Shadow (7000 K: X = 0.9494, Y = 1.0, Z = 1.1597), 3) Cloud (6000 K: X = 0.9523, Y = 1.0, Z = 1.0080), 4) Tungsten (3200 K: X = 1.0, Y = 1.0, Z = 0.2605), and 5) Fluorescent (4000 K: X = 0.9963, Y = 1.0, Z = 0.6095). The experiment is performed in a similar manner to that of section 5.1, except that the white balance setting is distorted instead of the illumination.

Table 5 shows the color differences for the white balance distortions. The error of the proposed system stays between 5.87 and 9.62 across the five presets, so it is not much affected by the white balance distortions. Moreover, it gives the lowest error of all the systems for three of the five presets (Sunlight, Tungsten, and Fluorescent), with the largest margins under Tungsten and Fluorescent. Fig. 9 shows the results for the white balance distortions.

5.3. Experimental results for the visibility

Fig. 10 and Fig. 11 show the experimental results under arbitrary conditions. The purpose of this experiment is to assess the visual quality of the corrected images as presented to users. Fig. 10 presents the results for arbitrary white balance modes, and they show that the color correction performance of the proposed system is not much affected by white balance distortions. Fig. 11(a) shows examples of outdoor images whose colors were distorted by the Fluorescent mode under unknown illuminations. Every color becomes clearer than in the original image, and the color correction performance is also visible in the white color and the green tone reflected on the window in the image. Fig. 11(b) presents other examples, which also confirm the color correction performance of the proposed system.

6. Conclusion

We proposed a color correction system using a color compensation chart designed for it. To correct the colors of the captured image, we employed the concepts of CMS and PCS, and we introduced the ICC color profile to embed the estimation results for the color correction. The colors of images captured under the same conditions as the chart image were corrected with the ICC color profile created by the proposed system. The proposed system shows performance almost equivalent to that of the commercial systems under the various illumination models, and for some white balance distortions, it provides superior performance. These experimental results indicate that the proposed system is feasible for practical use. Through the experiments, we found that the errors may be reduced further by refinement processes. In our future work, such a refinement process will be considered, and a process that compensates for the error caused by non-uniform brightness of the chart image will be included. The experiments were performed with digital cameras; however, the proposed system may also be used for other digital imaging devices such as digital photo scanners. Its modification for other digital imaging devices will also be considered in our future work.

7. Acknowledgments

This work was supported by the Ministry of Education and Human Resource Development under the BK21 project, and financially supported by the Ministry of Education and Human Resource Development (MOE), the Ministry of Commerce, Industry and Energy (MOCIE), and the Ministry of Labor (MOLAB) through the fostering project of the Lab of Excellency.

8. References

[1] Hsien-Che Lee, Introduction to Color Imaging Science, Cambridge University Press, 2005.

[2] Specification ICC.1:2004-10 (Profile version 4.2.0.0), International Color Consortium, 2004.

[3] Hung-Shin Chen and Hiroaki Kotera, "Three-dimensional Gamut Mapping Method Based on the Concept of Image Dependence," Journal of Imaging Science and Technology, Vol. 46, No. 1, pp. 44-52, Jan./Feb. 2002.

[4] B. Pham and G. Pringle, "Color Correction for an Image Sequence," IEEE Computer Graphics and Applications, pp. 38-42, 1995.

[5] Eung-Joo Lee, "Favorite Color Correction for Reference Color," IEEE Transactions on Consumer Electronics, Vol. 44, No. 1, Feb. 1998.

[6] Byung-Tae Ahn, Eun-Bae Moon, and Kyung-Suk Song, "Study of Skin Colors of Korean Women," Proceedings of SPIE, Vol. 4421, pp. 705-708, June 2002.

[7] Jung-Sook Jun, "A Study on the Color Scheme of Urban Landscape Based on Digital Image Color Analysis," Yonsei Graduate School of Human Environmental Sciences, 2002.

[8] H. C. Do, S. I. Chien, K. D. Cho, and H. S. Tae, "Color Reproduction Error Correction for Color Temperature Conversion in PDP-TV," IEEE Transactions on Consumer Electronics, Vol. 49, No. 3, pp. 473-478, Aug. 2003.

[9] D. I. Han, "Real-Time Color Gamut Mapping Method for Digital TV Display Quality Enhancement," IEEE Transactions on Consumer Electronics, Vol. 50, No. 2, pp. 691-699, May 2004.

[10] B. Pham and G. Pringle, "Color Correction for an Image Sequence," IEEE Computer Graphics and Applications, pp. 38-42, 1995.

[11] S. Nakamura, Applied Numerical Methods in C, Prentice Hall, London, 1995.
