Quadratic Teams, Information Economics, and Aggregate Planning Decisions

Author(s): Charles H. Kriebel

Source: Econometrica, Vol. 36, No. 3/4 (Jul. - Oct., 1968), pp. 530-543

Published by: The Econometric Society

Stable URL: http://www.jstor.org/stable/1909521


This content downloaded from 193.226.34.227 on Thu, 05 May 2016 08:59:58 UTC

All use subject to http://about.jstor.org/terms

Econometrica, Vol. 36, No. 3-4 (July-October, 1968)

QUADRATIC TEAMS, INFORMATION ECONOMICS, AND AGGREGATE PLANNING DECISIONS¹

BY CHARLES H. KRIEBEL

The construct of team decision theory developed by J. Marschak and R. Radner provides a useful framework for the conceptualization and evaluation of information within organizations. Quadratic teams concern team decision problems where the organizational objective criterion is represented by a quadratic function of the action and state variables. The purpose of this paper is to relate the analysis of quadratic team decision problems explicitly to recent research on optimal decision rules for aggregate planning, for example, as reported by Holt et al. [5], Theil [9], van de Panne [10], and others. A numerical example is presented based on the well-known microeconomic model of production and employment scheduling in a paint factory.

1. QUADRATIC TEAM DECISION PROBLEMS²

CONSIDER AN ORGANIZATION (or team) composed of $i = 1, 2, \ldots, N$ members who choose actions during each of $t = 1, 2, \ldots, T$ time periods. Let the action of member $i$ in period $t$ be denoted by $a_{it}$, and let $x_{it}$ correspond to a state of the world observed by member $i$ in period $t$. The states $x_{it}$ are random variables, which will be identified by the notation $\tilde{x}_{it}$, and are elements of the space $X$. Suppose the objective function which the organization seeks to optimize is³

(1) $c(a, x) = \lambda_0 - 2a'\lambda(x) + a'Qa$

where $\lambda_0$ is a scalar; $a$ is a (column) vector of team actions with elements $a_{it}$; $\lambda(x)$ is a measurable vector-valued function on the space $X$, which is partitioned to conform with $a$; and $Q$ is a square symmetric matrix with $(NT)^2$ elements, also partitioned to conform with $a$. That is,

$c(a, x) = \lambda_0 - 2 \sum_{t=1}^{T} \sum_{i=1}^{N} a_{it}\,\lambda_{it}(x) + \sum_{t,\tau=1}^{T} \sum_{i,j=1}^{N} a_{it}\, q(ij)_{t\tau}\, a_{j\tau}$

where $q(ij)_{t\tau}$ are elements of the partitioned matrix $Q_{t\tau}$ for $i, j = 1, 2, \ldots, N$; and $Q_{t\tau}$ are ordered matrices from the partitioned $Q$ for $t, \tau = 1, 2, \ldots, T$.
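As a numerical illustration of (1), the following Python sketch evaluates the cost both in matrix form and through the partitioned double sum, and the two should agree. It assumes the action vector is stacked period by period, following the ordering of the tuples used in the text. The function names and all numerical values are hypothetical and chosen only for illustration.

```python
def team_cost(lam0, lam, Q, a):
    """Matrix form of (1): lam0 - 2 a'lam + a'Qa, for one realized lam = lambda(x)."""
    n = len(a)
    linear = sum(a[r] * lam[r] for r in range(n))
    quadratic = sum(a[r] * Q[r][s] * a[s] for r in range(n) for s in range(n))
    return lam0 - 2.0 * linear + quadratic


def flat(i, t, N):
    """Stacked position of member i in period t (both 0-based), period by period."""
    return t * N + i


def team_cost_partitioned(lam0, lam, Q, a, N, T):
    """The same cost written as the double sum over members (i, j) and periods (t, tau)."""
    linear = sum(a[flat(i, t, N)] * lam[flat(i, t, N)]
                 for t in range(T) for i in range(N))
    quadratic = sum(
        a[flat(i, t, N)] * Q[flat(i, t, N)][flat(j, tau, N)] * a[flat(j, tau, N)]
        for t in range(T) for tau in range(T)
        for i in range(N) for j in range(N)
    )
    return lam0 - 2.0 * linear + quadratic


# Hypothetical team: N = 2 members, T = 2 periods, so a has N*T = 4 elements.
N, T = 2, 2
Q = [[4.0, 1.0, 0.5, 0.0],
     [1.0, 3.0, 0.0, 0.5],
     [0.5, 0.0, 4.0, 1.0],
     [0.0, 0.5, 1.0, 3.0]]          # symmetric, as required of Q
lam = [1.0, 2.0, 0.5, 1.5]          # one realization of lambda(x)
a = [0.2, 0.6, 0.1, 0.4]            # an arbitrary (not optimal) team action

cost = team_cost(10.0, lam, Q, a)
cost_partitioned = team_cost_partitioned(10.0, lam, Q, a, N, T)
print(cost, cost_partitioned)
```

The partitioned form is only a re-indexing of the matrix form, so the choice between them is one of convenience: the matrix form suits computation, while the double sum makes the roles of member and period explicit.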

Since the states $x_{it}$ occur randomly, the optimization of (1) necessarily involves the computation of expectations on $c(a, x)$, viz., for the elements of $\lambda(x)$. To consider information explicitly, define an observation on the state $x_{it}$ as $h_{it}(x) = z_{it}$, for $i = 1, 2, \ldots, N$; $t = 1, 2, \ldots, T$; where $\{z_{it}\} = Z$, the space of observations. That is, the information function $h_{it}$ defines a mapping from $x_{it}$ in $X$ into $h_{it}(x)$ in $Z$. More generally, $h(\cdot)$ corresponds to a random function, such that $h_r(x_s) = z_{rs}$ with probability $P(z_r \mid x_s, h_r)$. The $(NT)$-tuple of all information functions in the organization,

$h = ((h_{11}, \ldots, h_{i1}, \ldots, h_{N1}), \ldots, (h_{1T}, \ldots, h_{iT}, \ldots, h_{NT}))$,

is called the information system (or structure), where $h \in H$, and provides an explicit description of the information (observations, forecasts, messages, or the like) that is available to each individual in the organization at each instant of time.

1 This report was prepared as part of the activities of the Management Sciences Research Group, Carnegie-Mellon University, under Contract NONR 760(24), NR 047-048 with the U.S. Office of Naval Research and under a Ford Foundation Grant. Reproduction in whole or in part is permitted for any purpose of the U.S. Government.

2 The basic propositions of team theory are presented in Marschak [3]. The discussion in this and the next section is based on Radner [6] and [7].

3 Single primes correspond to the transpose operation.

To distinguish the computation of expected values in the remaining discussion, the following conventions are employed:⁴ $E_x$ is taken with respect to the marginal joint probability measure on the state space $X$; $E_{x|z,h}$ or $E_{x|z}$ is taken with respect to the conditional joint probability measure on the state space $X$ given the information system $h$ and the observations $z \in Z$; and $E_z$ is taken with respect to the marginal joint probability measure on the observation space $Z$, given $h \in H$, where

$x = ((x_{11}, \ldots, x_{i1}, \ldots, x_{N1}), \ldots, (x_{1T}, \ldots, x_{iT}, \ldots, x_{NT}))$ and

$z = ((z_{11}, \ldots, z_{i1}, \ldots, z_{N1}), \ldots, (z_{1T}, \ldots, z_{iT}, \ldots, z_{NT}))$.

Finally, we define a component decision rule, $\alpha_{it}$, as an explicit procedure which unambiguously specifies a particular terminal action or decision to be selected in response to each item of information available. That is, $\alpha_{it}$ is a real-valued measurable function on $Z$ which defines a mapping from $z_{it}$ in $Z$ into $\alpha_{it}(z)$ in $A$, the action space, for $i = 1, 2, \ldots, N$; $t = 1, 2, \ldots, T$. The collection of all component decision functions for the organization is called the team decision function, $\alpha \in A$, and will be written $\alpha(h(x)) = \alpha(z) = a$.

The function for $c(a, x)$ in (1) is convex if the matrix $Q$ is positive definite. In this case, necessary and sufficient conditions for optimization of the function with respect to team actions direct that the partial derivatives with respect to the components of $a$ are equated to zero.⁵ Let $\partial/\partial a$ denote taking partial derivatives with respect to a column vector. Then

$\partial c(a, x)/\partial a = -2\lambda(x) + 2Qa = 0$

or

(2) $a^* = Q^{-1}\lambda(x)$.
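The first-order condition can be checked numerically. The sketch below solves $Qa = \lambda$ by Gaussian elimination and confirms that the gradient $-2\lambda + 2Qa$ vanishes at the solution. The matrix $Q$ and the vector $\lambda$ are hypothetical values, and the helper names are illustrative only.

```python
def solve(Q, b):
    """Solve Q a = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[r]] for r, row in enumerate(Q)]   # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    a = [0.0] * n
    for r in range(n - 1, -1, -1):                     # back substitution
        a[r] = (M[r][n] - sum(M[r][c] * a[c] for c in range(r + 1, n))) / M[r][r]
    return a


def gradient(Q, lam, a):
    """Gradient of (1) with respect to a: -2*lam + 2*Q*a."""
    n = len(a)
    return [-2.0 * lam[r] + 2.0 * sum(Q[r][s] * a[s] for s in range(n))
            for r in range(n)]


Q = [[2.0, 1.0], [1.0, 3.0]]     # hypothetical positive definite Q
lam = [3.0, 5.0]                 # hypothetical realized lambda(x)

a_star = solve(Q, lam)           # equation (2): a* = Q^{-1} lambda(x)
grad = gradient(Q, lam, a_star)
print(a_star, grad)
```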

Now suppose $\lambda(x)$ can be written

(3) $\lambda(x) = R\tilde{x}$

where $R$ is a square matrix with $(NT)^2$ elements, and $\tilde{x}$ is the column vector of state variables defined above. Then from (2), the best team decision rule given an a priori probability distribution on $\tilde{x}$ is

(4) $E_x \alpha^* = Q^{-1} R\, E_x \tilde{x}$,

or

(5) $E_x \alpha_t^* = \sum_{\tau, s = 1}^{T} Q^{t\tau} R_{\tau s}\, E_x \tilde{x}_s$ for $t = 1, 2, \ldots, T$,

where $Q^{-1}$ and $R$ have been partitioned as $Q$ by actions and time periods, and the submatrices of the partitioning by time periods are identified as $Q^{t\tau}$ and $R_{t\tau}$, respectively. The form of the solution in (5) is particularly useful in distinguishing optimal strategy dynamic decision rules, which are discussed more fully below. (Cf. [9] and [10].)

4 For example, see [8] for further discussion on the relative importance of these distinctions within the Bayesian framework of applied statistical decision theory.

5 A general discussion of quadratic optimization is available in [1].

In [7], Radner derives a set of simultaneous "stationarity" conditions for optimal component decision rules given an information system, under which a team decision rule remains optimal if and only if it cannot be improved by changing any of the individual component rules. Restating Radner's theorem for the partitioned objective function in (1) gives:⁶

THEOREM: Let $E\,\lambda_{it}^2(x) < \infty$, for $i = 1, 2, \ldots, N$; $t = 1, 2, \ldots, T$; then for any information system $h$, the unique (a.e.) best team decision function is the solution of

(6) $q(ii)_{tt}\,\alpha_{it} + \sum_{\tau \ne t} q(ii)_{t\tau}\, E_{x|z}(\alpha_{i\tau} \mid h_{it}) + \sum_{\tau=1}^{T} \sum_{j \ne i} q(ij)_{t\tau}\, E_{x|z}(\alpha_{j\tau} \mid h_{it}) = E_{x|z}(\lambda_{it} \mid h_{it})$

for $i = 1, 2, \ldots, N$; $t = 1, 2, \ldots, T$.
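The conditions in (6) can be read as each component rule being a best response to all the others. A minimal sketch of that idea follows, under the simplifying assumption (not the general case of the theorem) that every member observes $\lambda(x)$ exactly, so the conditional expectations drop out and (6) reduces to $q_{ii} a_i + \sum_{j \ne i} q_{ij} a_j = \lambda_i$. Repeatedly updating one component at a time is then the Gauss-Seidel iteration, which converges for a symmetric positive definite $Q$. The numbers and function name are hypothetical.

```python
def person_by_person(Q, lam, sweeps=200):
    """Update one component action at a time, holding the others fixed.

    Each update solves member i's own stationarity condition
        q_ii * a_i + sum_{j != i} q_ij * a_j = lam_i
    for a_i (Gauss-Seidel iteration on Q a = lam).
    """
    n = len(lam)
    a = [0.0] * n
    for _ in range(sweeps):
        for i in range(n):
            others = sum(Q[i][j] * a[j] for j in range(n) if j != i)
            a[i] = (lam[i] - others) / Q[i][i]
    return a


Q = [[2.0, 1.0], [1.0, 3.0]]     # hypothetical positive definite Q
lam = [3.0, 5.0]                 # hypothetical lambda(x), known to every member

a_pbp = person_by_person(Q, lam)
print(a_pbp)                     # approaches Q^{-1} lam = [0.8, 1.4]
```

At the fixed point no single member can lower the team cost by changing only his own action, which for a positive definite $Q$ coincides with the joint optimum in (2).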

The stationarity conditions in (6) have also been referred to as "person-by-person" optimality requirements which, when taken in conjunction with (2) or (4), specify a procedure for the evaluation of information system alternatives. In this regard, the decision theory constructs of "perfect" and "null" information provide explicit criteria for normative analysis under uncertainty. Let $h^{(\infty)}$ represent a (theoretically) perfect information system, such that $h^{(\infty)}(x) = x$, where $x$ corresponds (a posteriori) to the true states of the world; let $h^{(0)}$ represent a (theoretically) null information system, such that $h^{(0)}(x) = y$, where $y$ is an $(NT)$ vector of constants (independent of $x$). The value of a particular information system, $V(h^{(k)})$, is defined as the net change in the expected payoff of the optimal team decision rule that obtains from implementing the system $h^{(k)}$ in lieu of the null system, $h^{(0)}$.⁷ More specifically, for $c(a, x)$ in (1), an objective cost (or loss) function, the expected value of perfect information is

(7) $V(h^{(\infty)}) = E_x[\lambda'(\tilde{x})\, Q^{-1} \lambda(\tilde{x})] - \lambda'(\bar{x})\, Q^{-1} \lambda(\bar{x})$

where $E_x \lambda(\tilde{x}) = \lambda(\bar{x})$.⁸ The relation in (7) gives an upper bound on performance improvement that can be realized in this case through reductions in information uncertainty.

6 This theorem is proved in [7] and can be interpreted as follows: The computed expected value of the decision rule $\alpha_{it}$ is the action $a_{it}$. Then if the conditions on $\alpha_{it}$ in (6) are met simultaneously by each $a_{it}$ (for $i = 1, 2, \ldots, N$; $t = 1, 2, \ldots, T$), the function $c(a, x)$ is stationary at the optimal value, since $a'\lambda = a'Qa$ in (1).

7 Note that the statement of (1) implies that information costs are independent of the actions, $a$, and hence can be evaluated separately, i.e., simply by subtracting the cost of the system from $V(h^{(k)})$. This assumption would be inappropriate if $Q$ were not stationary over time and, for example, depended upon $x$. The practical consequences of assumed independence between decision and information processing costs are discussed at length in [2].
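When $\lambda(x) = R\tilde{x}$ as in (3), the difference in (7) depends only on the covariance matrix of the states: it equals $\mathrm{tr}(Q^{-1} R \Sigma R')$, where $\Sigma$ is the covariance matrix of $\tilde{x}$. This follows from expanding the quadratic form and is stated here as a worked consequence rather than a formula quoted from the paper. The sketch below compares the trace expression with a direct evaluation of (7) for a hypothetical two-point distribution on each (independent) state; every number and name is an illustrative assumption.

```python
from itertools import product


def solve(Q, b):
    """Solve Q a = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[r]] for r, row in enumerate(Q)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    a = [0.0] * n
    for r in range(n - 1, -1, -1):
        a[r] = (M[r][n] - sum(M[r][c] * a[c] for c in range(r + 1, n))) / M[r][r]
    return a


def matvec(M, v):
    return [sum(M[r][c] * v[c] for c in range(len(v))) for r in range(len(M))]


def quad_form_inv(Q, v):
    """Compute v' Q^{-1} v without forming the inverse explicitly."""
    return sum(vi * wi for vi, wi in zip(v, solve(Q, v)))


def evpi_trace(Q, R, Sigma):
    """tr(Q^{-1} R Sigma R'), the closed form of (7) when lambda(x) = R x."""
    n = len(Q)
    RS = [[sum(R[r][k] * Sigma[k][c] for k in range(n)) for c in range(n)]
          for r in range(n)]
    C = [[sum(RS[r][k] * R[c][k] for k in range(n)) for c in range(n)]
         for r in range(n)]                       # C = R Sigma R'
    return sum(solve(Q, [C[r][k] for r in range(n)])[k] for k in range(n))


def evpi_direct(Q, R, mu, sd):
    """Evaluate (7) exactly for independent states x_i = mu_i +/- sd_i (prob. 1/2 each)."""
    n = len(mu)
    expected = 0.0
    for signs in product((-1.0, 1.0), repeat=n):
        x = [mu[i] + signs[i] * sd[i] for i in range(n)]
        expected += quad_form_inv(Q, matvec(R, x)) / 2 ** n
    return expected - quad_form_inv(Q, matvec(R, mu))


Q = [[2.0, 1.0], [1.0, 3.0]]          # hypothetical
R = [[1.0, 0.5], [0.0, 2.0]]          # hypothetical
mu, sd = [1.0, 2.0], [2.0, 3.0]       # state means and standard deviations
Sigma = [[sd[0] ** 2, 0.0], [0.0, sd[1] ** 2]]

v_trace = evpi_trace(Q, R, Sigma)
v_direct = evpi_direct(Q, R, mu, sd)
print(v_trace, v_direct)
```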

2. ECONOMICS AND INFORMATION SYSTEMS

In [6] Radner analyzes several information systems for quadratic team decision

models. Variants on two of these partially decentralized information system models

are detailed below for the case where the team decision rule is partitioned by

member actions and time periods. In the final section, these models are specialized

to the aggregate production planning problem of Holt, Theil, and others (cf. [5], [9], and [10]), and a numerical example is presented.

Suppose the state of the world is such that each team member observes a random variable $y_{it}$ from the information process which generates $x_{it}$, where $y_{it} = \theta_{it}(x)$ on $X$, and $\theta_{it}(\cdot)$ corresponds to an observational function for member $i$ in period $t$, $\theta_{it} \in h_{it}$. For the physical structure of many organizations it is often reasonable to assume that the observational functions $\theta_i = \{\theta_{i1}, \ldots, \theta_{it}, \ldots, \theta_{iT}\}$ are statistically independent for all $i$. Clearly, this assumption does not mean that the information functions $h_i$ are statistically independent [...] the converse applies generally. Suppose, however, that we initially assume that no communication is permitted between any members of the team. For a particular organization this assumption corresponds to a completely decentralized information system, which we will identify as $h^{(1)}$. Then $h_i^{(1)} = \theta_i$ for all $i$, and the (vector) information functions are also statistically independent. Referring to the theorem in (6), independence implies that

(8) $E(\alpha_j \mid \theta_i) = E(\alpha_j)$ for $j \ne i$.

Thus, the optimal team decision rule under the completely decentralized information system $h^{(1)}$ is given directly by (6) and (2). For example, the optimal strategy dynamic decision rules (cf. [9]) for the first period are

(9) $\alpha_{i1} = \dfrac{1}{q(ii)_{11}} \Big[ E_{x|z}(\lambda_{i1} \mid \theta_{i1}) - \sum_{\tau=2}^{T} q(ii)_{1\tau}\, E_{x|z}(\alpha_{i\tau} \mid \theta_{i1}) - \sum_{\tau=1}^{T} \sum_{j \ne i} q(ij)_{1\tau}\, \bar{a}_{j\tau} \Big]$ for $i = 1, 2, \ldots, N$,

where

(10) $\bar{a}_{jt} = \sum_{\tau=1}^{T} \sum_{k=1}^{N} q(jk)^{t\tau}\, E_{x|z}\,\lambda_{k\tau}(\tilde{x})$ for $j \ne i$; $t = 1, 2, \ldots, T$.

8 If $c(a, x)$ in (1) corresponded to a payoff function, the signs in (7) would be reversed.

Following the previous discussion, the expected value of the completely decentralized information system $h^{(1)}$ gives

(11) $V(h^{(1)}) = \sum_{t=1}^{T} \sum_{i=1}^{N} \dfrac{1}{q(ii)_{tt}} \Big\{ E_z\big[E_{x|z}(\lambda_{it} \mid \boldsymbol{\theta}_{it})\big]^2 - \big[E_z E_{x|z}(\lambda_{it} \mid \boldsymbol{\theta}_{it})\big]^2 \Big\}$

where the notation $\boldsymbol{\theta}_{it} = \{\theta_{i1}, \theta_{i2}, \ldots, \theta_{it}\}$ denotes the $t$-tuple of observations by member $i$ up to and including period $t$, for $t = 1, 2, \ldots, T$. That is, assuming the team objective function in (1) represents operating costs, the relative worth to the organization of employing this system is the reduction in expected costs that is possible by acknowledging the variance in the state variables (or a transformation of these variables) a posteriori. The value of the system is linear in the variance component and hence exhibits constant returns to scale relative to the posterior variance of the linear component in (1). The obvious distinction between $h^{(1)}$ and the null system is that in the latter case no observations are made on the information processes, and hence there is no formal basis on which to revise an a priori distribution on $x$.
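The bracketed term in (11) is the variance, over observations, of each member's conditional expectation of $\lambda_{it}$. A small sketch of the resulting sum follows. To obtain numbers it assumes, purely for illustration, that $\lambda_{it}$ is itself normal with a known prior variance and that the member observes it with independent additive normal noise, in which case the variance of the conditional mean is $\sigma^4 / (\sigma^2 + \nu^2)$. That noise model, the function names, and the values are assumptions and do not come from the paper.

```python
def var_of_conditional_mean(prior_var, noise_var):
    """Variance of E[lambda | observation] when observation = lambda + noise.

    Assumes lambda ~ N(., prior_var) and independent noise ~ N(0, noise_var).
    """
    return prior_var ** 2 / (prior_var + noise_var)


def value_decentralized(q_diag, cond_mean_vars):
    """Sum over components of (1/q(ii)_tt) * Var(E[lambda_it | own observations])."""
    return sum(v / q for q, v in zip(q_diag, cond_mean_vars))


q_diag = [2.0, 4.0]        # hypothetical diagonal elements q(ii)_tt
prior_var = [4.0, 9.0]     # hypothetical prior variances of lambda_it
noise_var = [4.0, 0.0]     # second member observes without error

cond_vars = [var_of_conditional_mean(p, n) for p, n in zip(prior_var, noise_var)]
v_h1 = value_decentralized(q_diag, cond_vars)

# With uninformative observations (very large noise) the value tends to zero,
# which corresponds to the null system.
v_null = value_decentralized(
    q_diag, [var_of_conditional_mean(p, 1e12) for p in prior_var])
print(v_h1, v_null)
```

The example makes the "constant returns to scale" remark concrete: doubling every variance term doubles the value of the system.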

For example, suppose the random state variables $x_{it}$ are independently distributed according to a normal probability law, with density function $f_N(x_{it} \mid \mu_{it}, \sigma_{it}^2)$ for all $i, t$. The joint distribution of the $x_{it}$, which we identify as the random vector $\tilde{x}$, is also normally distributed, according to $f_N(x \mid \mu, \Sigma)$, where the expected value of the random vector is $\mu$ and the covariance matrix is $\Sigma$. That is,

(12) $f_N(x \mid \mu, \Sigma) = (2\pi)^{-NT/2}\, |\Sigma|^{-1/2} \exp\{-\tfrac{1}{2}(x - \mu)'\,\Sigma^{-1}(x - \mu)\}$

for $i = 1, 2, \ldots, N$; $t = 1, 2, \ldots, T$; and $-\infty < x_{it} < \infty$. Now suppose (3) holds, so that

(13) $\lambda(\tilde{x}) = R\tilde{x}$,

where $R$ is the matrix defined above. Then from (12) it follows that the distribution of $\lambda(\tilde{x})$ or, more compactly, $\tilde{\lambda} \equiv \lambda(\tilde{x})$, is also normal, where in particular

(14) $\tilde{\lambda}$ is $f_N(\lambda \mid R\mu, R\Sigma R')$.

Assume further, for computational convenience, that the precision of the process is known exactly, i.e., that $\Sigma$ is in fact the true covariance matrix, but the process mean, say $\mu_\lambda$, is not known with certainty. Then a natural conjugate a priori distribution (cf. [8]) for the random vector $\tilde{\mu}_\lambda$ is the normal probability distribution $f_N(\mu_\lambda \mid m, S)$ with $m = R\mu$ and $S = (R\Sigma R')$. The quantity $S$ as a measure of the precision of the information available on $\mu_\lambda$ can be expressed in units of the process mean precision, say $s$. The information $S$ can be defined in terms of the parameter $n$, for $n \equiv S/s$. Then, using the notation $\check{\ }$ and $\hat{\ }$ to denote prior and posterior parameters, respectively, the prior distribution on $\tilde{\mu}_\lambda$ can be expressed as $f_N(\mu_\lambda \mid \check{m}, s\check{n})$. As the observations $\theta_{it}(x)$ are recorded by the team members, they are comparable to "samples" providing statistics $(m, n)$ on the normal process. In this regard (cf. [8, Chapter 12]), the posterior distribution of $\tilde{\mu}_\lambda$ will also be normal with parameters

(15a) $\hat{m} = \hat{n}^{-1}(\check{n}\check{m} + nm)$,

(15b) $\hat{n} = \check{n} + n$.

The mean and variance of the posterior distribution are therefore

(16a) $E(\tilde{\mu}_\lambda \mid \hat{m}, \hat{n}) = \bar{\mu}_\lambda = \hat{m}$,

(16b) $\mathrm{Var}(\tilde{\mu}_\lambda \mid \hat{m}, \hat{n}) = s\hat{n}$.
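The updating step in (15a) and (15b) is a precision-weighted average of the prior mean and the sample statistic. The scalar sketch below illustrates it. The function name and numbers are hypothetical, and the scalar case is a simplification of the vector-valued setting in the text.

```python
def update_normal_mean(m_prior, n_prior, m_sample, n_sample):
    """Conjugate update for a normal process mean with known process precision.

    n_prior and n_sample are the information parameters of (15b); the
    posterior mean weights each source by its n, as in (15a).
    """
    n_post = n_prior + n_sample
    m_post = (n_prior * m_prior + n_sample * m_sample) / n_post
    return m_post, n_post


# Hypothetical prior (mean 10, worth 2 observations) and a sample of 4 with mean 16.
m_post, n_post = update_normal_mean(10.0, 2.0, 16.0, 4.0)

# Processing the same evidence in two batches gives the same posterior.
m_half, n_half = update_normal_mean(10.0, 2.0, 16.0, 2.0)
m_seq, n_seq = update_normal_mean(m_half, n_half, 16.0, 2.0)
print(m_post, n_post, m_seq, n_seq)
```

Because the update is associative in this sense, a member can revise his forecast period by period as each new observation $\theta_{it}$ arrives rather than reprocessing the whole history.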

Identifying the diagonal elements of the covariance matrix in (16b) as $v_{it}^2$, the result in (11) corresponding to the expected value of the completely decentralized information system $h^{(1)}$ can be rewritten

(17) $V(h^{(1)}) = \sum_{t=1}^{T} \sum_{i=1}^{N} (1/q(ii)_{tt})\, v_{it}^2$.

The second information system model we now wish to examine relaxes the

previous assumption and allows communication of member observations.

One of the most common forms of communication in any organization is the dissemination of "summary information" through published reports. Suppose that each team member communicates some function of his observations, say $d_{it} = \delta_i(z_{it})$, to a centralized agency in the organization, which compiles all such information received and then periodically disseminates this compilation to each member as a report.⁹ We will define this information system as "partially decentralized information reporting" and identify it by the symbol $h^{(2)}$. Then the information function for each member under $h^{(2)}$ is given by $h_{it}^{(2)}(x) = \{\boldsymbol{\theta}_{it}(x), \delta\}$, for $i = 1, 2, \ldots, N$; $t = 1, 2, \ldots, T$; where the collection of reports by the central staff to each member is

$d_t = \{(d_{11}, \ldots, d_{N1}), \ldots, (d_{1t}, \ldots, d_{Nt})\}$, for $t = 1, 2, \ldots, T$,

and

$d_{it} = \delta_i(z_{it}) = \delta_i(\theta_{it}(x))$, for all $i, t$,

given $\delta(\cdot) = (\delta_1(\cdot), \ldots, \delta_i(\cdot), \ldots, \delta_N(\cdot))$; and $\boldsymbol{\theta}_{it}(x) = \{\theta_{i1}, \ldots, \theta_{it}\}$ as above.

Before proceeding to the derivation of the best team decision rule under $h^{(2)}$, we note the following lemma (cf. [6, p. 504]).

LEMMA: Let $A$, $B$, and $C$ be independent random variables and let $b$ be a contraction of $B$, and $c$ be a contraction of $C$; and let $D$ be a real-valued random variable defined by $D = f(A, B, c)$ where $f(\cdot)$ is some measurable function. Then

(18) $E[D \mid A, b, C] = E[D \mid A, b, c]$.

Now suppose we assume, as before, that the observations $\theta_{it}(x)$ are independent for all $j \ne i$; $i, j = 1, 2, \ldots, N$. Applying the lemma in (18) to the stationarity theorem in (6) gives¹⁰

(19) $E_{x|z}(\alpha_{j\tau} \mid h_{it}^{(2)}) = E_{x|z}(\alpha_{j\tau} \mid \delta)$ for $i \ne j$,

and

(20) $E_{x|z}(\alpha_{i\tau} \mid h_{it}^{(2)}) = E_{x|z}(\alpha_{i\tau} \mid \delta)$ for $\tau \ne t$.

Substituting from (19) and (20) into the theorem in (6) we obtain

(21) $q(ii)_{tt}\,\alpha_{it} + \sum_{\tau \ne t} q(ii)_{t\tau}\, E_{x|z}(\alpha_{i\tau} \mid \delta) + \sum_{\tau=1}^{T} \sum_{j \ne i} q(ij)_{t\tau}\, E_{x|z}(\alpha_{j\tau} \mid \delta) = E_{x|z}(\lambda_{it} \mid h_{it}^{(2)})$

for $i = 1, 2, \ldots, N$; $t = 1, 2, \ldots, T$.

9 For example, the functions $\delta_i(\cdot)$ might correspond to a weighted average of the observations $z_{i\tau}$ over $\tau = 1, 2, \ldots, t$.

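For any given set of observations, the right-hand side of (21) can be expressed as

(22) $E[E_{x|z}(\lambda_{it} \mid h_{it}^{(2)}) \mid \delta] = E_{x|z}(\lambda_{it} \mid d_t)$, all $i, t$,

since by direct substitution into the lemma in (18) we have

$f = \lambda_{it}$, $B = \theta_{it}$, $b = \delta_i(\theta_{it}) = d_{it}$,

$A =$ (a constant), $C = \{\delta_j\}_{j \ne i}$, $c = \{d_{jt}\}_{j \ne i}$.

The conditional expectation of (21), for $\delta$ given, is therefore

(23) $\sum_{\tau=1}^{T} q(ii)_{t\tau}\, E_{x|z}(\alpha_{i\tau} \mid \delta) + \sum_{\tau=1}^{T} \sum_{j \ne i} q(ij)_{t\tau}\, E_{x|z}(\alpha_{j\tau} \mid \delta) = E_{x|z}(\lambda_{it} \mid d_t)$

for $i = 1, 2, \ldots, N$; $t = 1, 2, \ldots, T$. Subtracting (23) from (21) gives

(24) $\alpha_{it} = E_{x|z}(\alpha_{it} \mid \delta) + (1/q(ii)_{tt})\,[E_{x|z}(\lambda_{it} \mid h_{it}^{(2)}) - E_{x|z}(\lambda_{it} \mid d_t)]$

for $i = 1, 2, \ldots, N$; $t = 1, 2, \ldots, T$. On the other hand the relation in (23) could be solved directly to obtain

(25) $E_{x|z}(\alpha \mid \delta) = Q^{-1} E_{x|z}(\lambda \mid d)$.

Substitution, by individual components, from (25) into (24) yields the best team decision function under $h^{(2)}$, say $\hat{\alpha}$; where for $i = 1, 2, \ldots, N$ and $t = 1, 2, \ldots, T$

(26) $\hat{\alpha}_{it} = \sum_{\tau=1}^{T} \sum_{k=1}^{N} q(ik)^{t\tau}\, E_{x|z}(\lambda_{k\tau} \mid d_t) + (1/q(ii)_{tt})\,[E_{x|z}(\lambda_{it} \mid h_{it}^{(2)}) - E_{x|z}(\lambda_{it} \mid d_t)]$

and the coefficients $q(ik)^{t\tau}$ are elements from the partitioned $Q^{-1}$, as defined above.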
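The rule in (26) has two parts: a team-wide solution based on the shared report, and a local correction scaled by $1/q(ii)_{tt}$. The sketch below computes both for a stacked index that stands in for the pair $(i, t)$. The two forecast vectors are supplied as given inputs, since how they are produced depends on the probability model; all names and numbers are hypothetical.

```python
def solve(Q, b):
    """Solve Q a = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[r]] for r, row in enumerate(Q)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    a = [0.0] * n
    for r in range(n - 1, -1, -1):
        a[r] = (M[r][n] - sum(M[r][c] * a[c] for c in range(r + 1, n))) / M[r][r]
    return a


def reporting_rule(Q, lam_given_report, lam_given_own):
    """Decision rule of the form (26), one stacked index per (member, period).

    lam_given_report[k]: forecast of lambda_k from the common report d_t.
    lam_given_own[k]:    forecast of lambda_k from the responsible member's own
                         information (report plus own observations).
    """
    shared = solve(Q, lam_given_report)          # Q^{-1} E[lambda | d_t]
    return [shared[k] + (lam_given_own[k] - lam_given_report[k]) / Q[k][k]
            for k in range(len(shared))]


Q = [[2.0, 1.0], [1.0, 3.0]]        # hypothetical
lam_report = [3.0, 5.0]             # forecasts from the report
lam_own = [3.4, 4.4]                # each member's sharper local forecast

alpha_hat = reporting_rule(Q, lam_report, lam_own)
print(alpha_hat)
```

When a member's own forecast agrees with the report, the correction term is zero and the rule collapses to the shared solution $Q^{-1} E(\lambda \mid d_t)$.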

Proceeding as before, the expected value of the "partially decentralized information reporting" system $h^{(2)}$ becomes, upon simplification,

(27) $V(h^{(2)}) = \sum_{t=1}^{T} \sum_{i=1}^{N} (1/q(ii)_{tt})\,[\mathrm{Var}(\lambda_{it} \mid h_{it}^{(2)}) - \mathrm{Var}(\lambda_{it} \mid d_t)] + 2 \sum_{t < \tau} \sum_{i,j} q(ij)^{t\tau}\, \mathrm{Cov}(\lambda_{it}, \lambda_{j\tau} \mid d_t)$

where "Var(·)" and "Cov(·)" correspond to the variance and covariance, respectively.

10 More specifically, for (19) the elements in (18) are: $f = \alpha_{j\tau}$; $A = \{\delta_k\}$ for $k \ne i, j$; $B = \theta_{j\tau}$; $C = \theta_{it}$; $c = \delta_i$; and $D = \alpha_{j\tau}$. For (20) the elements in (18) are: $f = \alpha_{i\tau}$; $A =$ (a constant); $B = (\theta_{i\tau}, \theta_{it}, \delta_i)$; $b = \delta_i$; $C = \{\delta_j\}$, $c = \{d_{jt}\}$ for $j \ne i$; and $D = \alpha_{i\tau}$.

The first summation component in the decision rule of (26) is the counterpart of, say, (4) given (3), where the expectation is over the distribution of states conditioned on the reported observations. The second component in (26) represents an adjustment for the current period ($t$) between the reported observations for the $i$th member and his own observation, where the weighting factor, say $w_{it}$, for the current period conditioned on the report is $w_{it} = q(ii)^{tt} - (1/q(ii)_{tt})$, for all $i, t$.

The expression for $V(h^{(2)})$ in (27) for the a posteriori distribution on the state variables is most easily explained by again continuing the example discussed under the previous system $h^{(1)}$. Assuming (12), (13), and (14) hold, recall the posterior distribution on $\tilde{\mu}_\lambda$ was determined under $h^{(1)}$ on the basis of the individual members' observations only. Under the current system, $h^{(2)}$, communication of observations takes place, and hence the posterior distribution is obtained with respect to the pooled observations for each period, in the form of a report, $d_t$. Following the earlier notation, the covariance matrix of the posterior distribution of $\tilde{\mu}_\lambda$ based on $d_t$, that is comparable to (16b) based on $\boldsymbol{\theta}_{it}$ only, is now

(28) $\mathrm{Var}(\tilde{\mu}_\lambda \mid \hat{m}, \hat{e}) = s(\check{e} + e) = s(\hat{e})$,

where $e$ is the relative precision of the process on the basis of the augmented information. Then identifying the elements of (28) as $\hat{\sigma}(ij)_{t\tau}$, the value of $h^{(2)}$ in (27) can be written as

(29) $V(h^{(2)}) = \sum_{t=1}^{T} \sum_{i=1}^{N} (1/q(ii)_{tt})\,[v_{it}^2 - \hat{\sigma}(ii)_{tt}] + 2 \sum_{t < \tau} \sum_{i,j} q(ij)^{t\tau}\, \hat{\sigma}(ij)_{t\tau}$

where $v_{it}^2$ are the elements in (16b). It is apparent that $V(h^{(2)}) > V(h^{(1)})$, since (17) is contained within (29), and that the system $h^{(2)}$ also exhibits constant returns to scale.

3. AGGREGATE PLANNING SYSTEMS: THE PAINT FACTORY CASE ILLUSTRATION

In [9] Theil presents a general discussion of optimal decision rules for aggregate

planning problems under quadratic preferences (i.e., criterion functions). One

empirical study reported in detail is the well known analysis of production and

employment scheduling in a paint factory by C. Holt, F. Modigliani, J. Muth, and

H. Simon (cf. [5]). We now wish to consider this planning problem within the

framework of the preceding team decision models.


The basic decision problem posed by Holt and his associates was to determine values for production ($P_t$) and work force ($W_t$) levels in each of $t = 1, 2, \ldots, T$ time periods which minimize total variable costs of operations, estimated by the quadratic function

(30) $C(T) = \sum_{t=1}^{T} \{c_{13} + c_1 L_t + c_2 (W_t - W_{t-1} - c_{11})^2 + c_3 (P_t - c_4 L_t)^2 + c_5 P_t - c_6 L_t + c_{12} P_t L_t + c_7 (I_t - c_8 - c_9 S_t)^2\}$

where unit sales in each period ($S_t$) occur randomly; unit inventories ($I_t$) are given by the balancing equation

(31) $I_t = I_{t-1} + P_t - S_t$ for $t = 1, 2, \ldots, T$;

the number of workers available for work ($L_t$) differs from the total payroll work force ($W_t$) by randomly determined absentees ($r_t$) in each period, that is,

(32) $L_t = W_t - r_t$, for $t = 1, 2, \ldots, T$;

and initial inventory ($I_0$) and work force ($W_0$) levels are known conditions.¹¹

To formulate (30) as a team decision problem, consider the team composed of two members, $i = 1, 2$, where each member is responsible for a single decision variable, $P_t$ and $W_t$, respectively, and records observations on one of the independent state variables for sales ($S_t$) and absentees ($r_t$), in $t = 1, 2, \ldots, T$ time periods. That is, following our earlier notation, let $a_{1t} \equiv P_t$ and $a_{2t} \equiv W_t$, and let $x_{1t} \equiv S_t$ and $x_{2t} \equiv r_t$ for $t = 1, 2, \ldots, T$ and $N = 2$. Substituting into (30) for the relations in (31) and (32), it is clear that this cost function is a special case of the general quadratic in (1).¹² Then, given a planning horizon, $T$, an optimal solution to the static decision problem in (30) follows directly from (2). More specifically, estimates for the cost coefficients in (30) provided by the original authors are

(33) $c_1 = 340.0$, $c_4 = 5.67$, $c_7 = 0.0825$,

$c_2 = 64.3$, $c_5 = 51.2$, $c_8 = 320.0$,

$c_3 = 0.2$, $c_6 = 281.0$, $c_9 = c_{11} = c_{12} = c_{13} = 0.0$.
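To make the cost structure concrete, the following sketch evaluates (30) for given production and work force plans, using the balance relations (31) and (32) and the coefficient estimates in (33). The function and variable names, and the plans used in the example, are hypothetical.

```python
# Coefficient estimates from (33).
COEF = dict(c1=340.0, c2=64.3, c3=0.2, c4=5.67, c5=51.2, c6=281.0,
            c7=0.0825, c8=320.0, c9=0.0, c11=0.0, c12=0.0, c13=0.0)


def total_cost(P, W, S, r, I0, W0, c=COEF):
    """Total variable cost (30) over the horizon len(P).

    P, W: planned production and payroll work force per period.
    S, r: realized sales and absentees per period.
    I0, W0: initial inventory and work force.
    """
    cost, I_prev, W_prev = 0.0, I0, W0
    for P_t, W_t, S_t, r_t in zip(P, W, S, r):
        I_t = I_prev + P_t - S_t               # inventory balance (31)
        L_t = W_t - r_t                        # workers available (32)
        cost += (c["c13"] + c["c1"] * L_t
                 + c["c2"] * (W_t - W_prev - c["c11"]) ** 2
                 + c["c3"] * (P_t - c["c4"] * L_t) ** 2
                 + c["c5"] * P_t - c["c6"] * L_t + c["c12"] * P_t * L_t
                 + c["c7"] * (I_t - c["c8"] - c["c9"] * S_t) ** 2)
        I_prev, W_prev = I_t, W_t
    return cost


# One period, production matched to sales and to the available work force.
cost_no_absence = total_cost(P=[567.0], W=[100.0], S=[567.0], r=[0.0],
                             I0=320.0, W0=100.0)
# Same plan with 10 absentees: the overtime term c3*(P - c4*L)^2 becomes active.
cost_absence = total_cost(P=[567.0], W=[100.0], S=[567.0], r=[10.0],
                          I0=320.0, W0=100.0)
print(cost_no_absence, cost_absence)
```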

Given a planning horizon of T=6 time periods, the optimal static decision rules

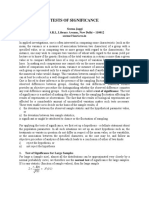

for production and work force levels from (2) are presented in Table I.

Theil and others have shown that the optimal stratcgy for the dynamic decision

problem in (30) is obtained by selecting the decision rule of the first period, updating forecasts of the state variable and initial conditions in each successive time

period on the basis of the most recent information available. For a planning

" In the original analysis presented in [5], no explicit provision was made for absenteeism, so that

L,= W, for all t, in (30) and (32). The modification for absentees is introduced here to permit a simple

interpretation of the team decision model and, as such, is nominally a generalization of the original

problem.

12 See Theil [9, Chapters 3 and 5] (particularly, pages 163-166), for the specific algebraic detail of(1),

given (30). In the interests of brevity this detail is not repeated.

This content downloaded from 193.226.34.227 on Thu, 05 May 2016 08:59:58 UTC

All use subject to http://about.jstor.org/terms

539

Term

TABLEI

P*(optimalrducn)

W*(optimalwrkfce)

OPTIMALSCDENRUFHWKX-PAIGOZ

6-.95301287 4650.219

5-.619032874 .1026

4-3.680719 2 .4078

3-1.58904627 .59

20.485193 70.65

1.83076945 2.03168

6-89.0125374 8

5-39.0281746 93

4-3.80267159 4

31.57064829-

278.501463-9

152.80946-3 7

tConsaWOSI-r,23456

This content downloaded from 193.226.34.227 on Thu, 05 May 2016 08:59:58 UTC

All use subject to http://about.jstor.org/terms

540

CHARLES

H.

KRIEBEL

horizon of six time periods, the optimal strategy dynamic decision rules for pro-

duction and work force levels, t= 1, 2..., are

0.460St - 3.061rt

0.231St+ 1 + 1.309rt+ 1

0. 106S+ 2+ ?0.601rt+ 2

(34.1) P* 152.82 +Et 04S +2 +E0607r,+2 _0.46011 ?j+0.902Wt_j

0.008St + 4 + 0.044rt + 4

- 0.004St + 5-0.022rt + 5

and

0.0106St + 0.0601rt

0.0094St + 1 + 0.0535rt + 1

0.0080St + 2? 0.045 1rt+ 2

(34.2) W* 1.183 + 0.760Wt - 1-0.0106It - 1 + Et .65S+3 0.0366r +3

0.0049St + 4 + 0.0278rt + 4

_0.0030St+5 +0.0168rt+ 5_

where Et[xt+,] corresponds to a forecast of the state variable x in period t+?based on information available at the start of period t. The decision rules in (34)

follow directly.from (33) and (5) for t=1. It is these dynamic rules which will

occupy our attention for the remainder of this discussion. In this regard, the optimal decision rule coefficient values are relatively insensitive to changes in the

planning horizon parameter for 6 < T < oo (cf., [9, Chapter 5]). For computational

convenience, therefore, a six-period planning horizon will be employed in subsequent analysis of the paint factory case.
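A rule of this kind is applied on a rolling basis: in each period the decision maker supplies the latest initial conditions and six-period forecasts, and acts on the first-period result only. The sketch below evaluates the work force rule (34.2). The coefficients are transcribed from that rule; the fourth sales coefficient (0.0065) is only partly legible in the source and is therefore an assumption. The function name and the example inputs are hypothetical.

```python
# Forecast weights from (34.2), for periods t, t+1, ..., t+5.
# The value 0.0065 is partly illegible in the source and is assumed here.
SALES_WEIGHTS = [0.0106, 0.0094, 0.0080, 0.0065, 0.0049, 0.0030]
ABSENTEE_WEIGHTS = [0.0601, 0.0535, 0.0451, 0.0366, 0.0278, 0.0168]


def work_force_rule(W_prev, I_prev, sales_forecast, absentee_forecast):
    """First-period work force decision from (34.2).

    W_prev, I_prev: last period's work force and ending inventory (feedback part).
    sales_forecast, absentee_forecast: six forecasts each (feed-forward part).
    """
    if len(sales_forecast) != 6 or len(absentee_forecast) != 6:
        raise ValueError("the rule uses a six-period planning horizon")
    feed_forward = (sum(w * f for w, f in zip(SALES_WEIGHTS, sales_forecast))
                    + sum(w * f for w, f in zip(ABSENTEE_WEIGHTS, absentee_forecast)))
    return 1.183 + 0.760 * W_prev - 0.0106 * I_prev + feed_forward


# Hypothetical situation: flat sales forecast, no absentees expected.
w_base = work_force_rule(80.0, 300.0, [500.0] * 6, [0.0] * 6)
# Two absentees expected in the current period only.
w_absent = work_force_rule(80.0, 300.0, [500.0] * 6, [2.0] + [0.0] * 5)
print(w_base, w_absent)
```

In this hypothetical example the rule returns a work force close to the previous one, and an expected absence in the current period raises the target payroll slightly, as the positive absentee weights imply.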

As indicated above, the expected value of perfect information criterion provides an upper bound on cost savings that can be realized through reductions in information uncertainty. The perfect information criterion in (7) for the paint factory cost coefficient estimates in (33) gives

(35) $V(h^{(\infty)}) = \sum_{i,j=1}^{2} \sum_{t,\tau=1}^{6} k(ij)_{t\tau}\, \sigma(x_{it} x_{j\tau})$

where the elements $\sigma(x_{it} x_{j\tau})$ are the covariance terms for $S_t$ and $r_t$, $t = 1, 2, \ldots, 6$; and the coefficients $k(ij)_{t\tau} = k(ji)_{\tau t}$ are given in Table II. If we assume that sales and absentees are independently distributed random variables and that the underlying stochastic process generating each variable is stationary over time, (35) simplifies to

(36) $$\begin{aligned} V(h^{(\infty)}) = {} & 1.4384\,\sigma^2(S_t) + 29.1217\,\sigma^2(r_t) \\ & + 2.2856\,\sigma(S_t S_{t+1}) - 6.0818\,\sigma(r_t r_{t+1}) \\ & + 1.6156\,\sigma(S_t S_{t+2}) - 1.1014\,\sigma(r_t r_{t+2}) \\ & + 1.0026\,\sigma(S_t S_{t+3}) + 0.2030\,\sigma(r_t r_{t+3}) \\ & + 0.5120\,\sigma(S_t S_{t+4}) + 0.5428\,\sigma(r_t r_{t+4}) \\ & + 0.0470\,\sigma(S_t S_{t+5}) + 0.2660\,\sigma(r_t r_{t+5}) \end{aligned}$$
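Expression (36) is a weighted sum of the autocovariances of sales and absentees at lags 0 through 5, so it can be evaluated directly once those autocovariances are estimated. The sketch below does this. The weights are transcribed from (36); the signs of the sales terms at lags 1 to 5 are not legible in the source and are assumed positive here. The function name and the example variances are hypothetical.

```python
# Weights from (36), indexed by lag 0..5. Signs of the sales weights at
# lags 1-5 are illegible in the source and assumed positive.
SALES_K = [1.4384, 2.2856, 1.6156, 1.0026, 0.5120, 0.0470]
ABSENTEE_K = [29.1217, -6.0818, -1.1014, 0.2030, 0.5428, 0.2660]


def value_of_perfect_information(sales_autocov, absentee_autocov):
    """Evaluate (36) from autocovariances at lags 0..5 of sales and absentees."""
    if len(sales_autocov) != 6 or len(absentee_autocov) != 6:
        raise ValueError("expected autocovariances for lags 0 through 5")
    return (sum(k * s for k, s in zip(SALES_K, sales_autocov))
            + sum(k * s for k, s in zip(ABSENTEE_K, absentee_autocov)))


# Hypothetical case 1: serially uncorrelated sales (variance 100) and
# absentees (variance 4), so only the lag-0 terms contribute.
v_uncorrelated = value_of_perfect_information([100.0, 0, 0, 0, 0, 0],
                                              [4.0, 0, 0, 0, 0, 0])
# Hypothetical case 2: sales also have a lag-1 autocovariance of 50.
v_correlated = value_of_perfect_information([100.0, 50.0, 0, 0, 0, 0],
                                            [4.0, 0, 0, 0, 0, 0])
print(v_uncorrelated, v_correlated)
```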

TABLE II. Values of $k(ij)_{t\tau}$ for $i, j = 1, 2$ and $t, \tau = 1, 2, \ldots, 6$. [The numerical entries of this table are illegible in the source scan.]

The structure of the matrix $K$ in Table II evolves directly from the $R$ and $Q$ matrices (cf. equation (7)), which in turn depend on the cost coefficients $\{c_7, c_3, c_4, c_5, c_2\}$ and $\{c_7, c_9, c_3, c_4, c_{12}\}$, respectively, in (30). The covariance terms in (35) are those of the underlying stochastic process generating the state variables. (If these covariances are unknown a priori they can be estimated from subjective and/or observational data.) Clearly, covariance as an index of state variable dispersion is a convenient measure of information uncertainty for decision making. In this case, systems which provide more complete information on the state variables exhibit constant returns to scale in the process covariance. More specifically, for production and work force decisions the expected value of increasingly accurate forecasts (conditional expectations) of the state variables is $V(h^{(\infty)})$ in the limit.

In the discussion of information systems for the quadratic team, two particular systems were described in some detail: $h^{(1)}$, a completely decentralized information structure; and $h^{(2)}$, a partially decentralized structure which allowed communication of reports on observations. Following this analysis for the paint factory cost coefficients in (33), the optimal strategy dynamic decision rules under $h^{(1)}$ in (9) are

(37.1) $P_t = E[P_t^* \mid \boldsymbol{\theta}_{1t}]$

and

(37.2) $W_t = E[W_t^* \mid \boldsymbol{\theta}_{2t}]$ for $t = 1, 2, \ldots$.

Similarly, applying system $h^{(2)}$ to the paint factory case, the optimal strategy dynamic rules in (26) are

(38.1) $$\begin{aligned} P_t = E[P_t^* \mid d_t] & + 0.712\,E[(S_t \mid d_t) - (S_t \mid h_{1t}^{(2)})] - 1.632\,E[(r_t \mid d_t) - (r_t \mid h_{1t}^{(2)})] \\ & + 0.594\,E[(S_{t+1} \mid d_t) - (S_{t+1} \mid h_{1t}^{(2)})] + 0.475\,E[(S_{t+2} \mid d_t) - (S_{t+2} \mid h_{1t}^{(2)})] \\ & + 0.356\,E[(S_{t+3} \mid d_t) - (S_{t+3} \mid h_{1t}^{(2)})] + 0.237\,E[(S_{t+4} \mid d_t) - (S_{t+4} \mid h_{1t}^{(2)})] \\ & + 0.119\,E[(S_{t+5} \mid d_t) - (S_{t+5} \mid h_{1t}^{(2)})], \end{aligned}$$

and

(38.2) $W_t = E[W_t^* \mid d_t] + 0.0476\,E[(r_t \mid d_t) - (r_t \mid h_{2t}^{(2)})]$

for $t = 1, 2, \ldots$, where $P_t^*$ and $W_t^*$ are given by the decision rules in (34.1) and (34.2), respectively, and the terms $E(\cdot \mid d_t)$ and $E(\cdot \mid h_{it}^{(2)})$ are forecasts of the state variables based on the report of observations ($d_t$) and each member's knowledge of the reporting system and his own observations ($h_{it}^{(2)}$). Concerning the latter, the optimal strategy dynamic rules in (38) permit adjustment of the forecasts of the local state variables in each case on the basis of improved information.

From the decision rules given in (34), (37), and (38), it is apparent that the economics of introducing a formal description of information systems into the analysis of aggregate planning decision rules is reflected directly in the feed-forward segment of the decision functions and the relative increased accuracy such systems provide for state variable forecasts. In this regard, the team decision model has been a convenient analytical framework for detailing the normative evaluation. Some practical considerations based on this discussion for the design of computer-based decision and information systems in the firm are analyzed in [2].

Carnegie-Mellon University

REFERENCES

[1] BOOT, J. C. G.: Quadratic Programming, North-Holland Publishing Company and Rand McNally and Co., 1964.

[21 KRIEBEL, C. H.: "Information Processing and Programmed Decision Systems," Management

Sciences Research Report No. 69 (April, 1966), Carnegie Institute of Technology, Pittsburgh,

Pa.

[31 MARSCHAK, J.: "Towards an Economic Theory of Organization and Information," Chapter

XIV in R. M. Thrall, C. H. Coombs, and R. L. Davis (eds.), Decision Processes, J. Wiley, 1954.

[4] MARSCHAK, J.: "Problems in Information Economics," Chapter 2 in C. P. Bonini, R. K. Jaedicke, and H. M. Wagner (eds.), Management Controls: New Directions in Basic Research, McGraw-Hill, 1964.

[5] HOLT, C. C., F. MODIGLIANI, J. F. MUTH, AND H. A. SIMON: Planning Production, Inventories, and Work Force, Prentice-Hall, 1960.

[6] RADNER, R.: "The Evaluation of Information in Organizations," pp. 491-533 in J. Neyman (ed.), Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability, University of California Press, Berkeley, 1961.

[7] RADNER, R.: "Team Decision Problems," Annals of Mathematical Statistics (September, 1962), pp. 857-881.

[8] RAIFFA, H., AND R. SCHLAIFER: Applied Statistical Decision Theory, Division of Research, Harvard Business School, Harvard University, 1961.

[9] THEIL, H.: Optimal Decision Rules for Government and Industry, North-Holland Publishing Co. and Rand McNally and Co., 1964.

[10] VAN DE PANNE, C.: "Optimal Strategy Decisions for Dynamic Linear Decision Rules in Feedback Form," Econometrica (April, 1965), pp. 307-320.
