WHITE PAPER

Overcoming mmWave Challenges

with Calibration

The objective of calibration at millimeter wave frequencies is no different than at longer

wavelengths: remove the largest contributor to measurement uncertainty. But the reality is

while the objective is the same, the actual process for achieving the calibration is quite

different.

When testing mmWave devices, you need to be aware of the unique challenges of

calibration at frequencies of 30 to 300 GHz.

Figure 1: Network analyzer with a test set controller and frequency extenders

Find us at www.keysight.com Page 1

Even the best hardware can’t eliminate all the potential errors generated during device

testing. All testing hardware has some degree of innate imprecision. And at mmWave

frequencies, wavelengths are shorter, so relatively small changes to the measurement plane

introduce proportionally larger measurement errors. In other words,

a shift of only a few millimeters can lead to large phase shifts. This tight margin makes it

even more critical for you to minimize these errors with proper calibration.
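
To get a feel for the numbers, here is a minimal Python sketch that converts a shift in the measurement plane into a phase change for a reflection measurement. It assumes an air-filled line (relative permittivity of 1) and counts the extra length twice because the signal travels out and back. The function names are illustrative and not part of any instrument software.

```python
import math

C0 = 299_792_458.0  # speed of light in vacuum, m/s


def wavelength_mm(freq_hz, eps_r=1.0):
    """Wavelength in millimeters in a medium with relative permittivity eps_r."""
    return C0 / (freq_hz * math.sqrt(eps_r)) * 1e3


def reflection_phase_shift_deg(freq_hz, shift_mm, eps_r=1.0):
    """Phase change of a reflection when the reference plane moves by shift_mm.

    The signal crosses the extra length twice (out and back), hence the factor 2.
    """
    return 360.0 * 2.0 * shift_mm / wavelength_mm(freq_hz, eps_r)


for f_ghz in (3, 30, 110):
    f_hz = f_ghz * 1e9
    print(f"{f_ghz:>4} GHz: wavelength = {wavelength_mm(f_hz):6.2f} mm, "
          f"1 mm shift -> {reflection_phase_shift_deg(f_hz, 1.0):6.1f} deg")
```

At 30 GHz the free-space wavelength is about 10 mm, so a 1 mm shift already amounts to roughly 72 degrees of reflection phase.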

You’re likely familiar with standard network analyzer calibration and vector-error-corrected

measurements. This is often a routine part of maximizing the accuracy of your measurements

(by minimizing the innate imprecision of your network analyzer). But, at mmWave

frequencies, there are special calibration considerations to make that are essential for

accurate, repeatable measurements in these high frequency bands. So what changes do

you need to make for working in the mmWave frequency range? How can you ensure you’re

getting the most reliable measurements and avoiding costly test errors?

Your VNA is Only as Good as Its Calibration

VNAs provide high measurement accuracy. But, this accuracy is really only possible when

your VNA is calibrated using a mathematical technique called vector-error-correction. This

type of calibration is different than the yearly calibration done in a cal lab to ensure the

instrument is functioning properly and meeting its published specifications for things like

output power and receiver noise floor.

Calibrating a VNA-based test system helps to remove the largest contributor to measurement

uncertainty: systematic errors. Systematic errors are repeatable, non-random errors that can

be measured and removed mathematically. They come from the network analyzer itself, plus

all of the test cables, adapters, fixtures, and/or probes that are between the analyzer and the

DUT. Error correction removes these systematic errors. A vector-error-corrected VNA

system provides the best picture of the true performance of the device under test, which

means a network analyzer and its measurements are really only as good as its calibration.
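
As a rough illustration, the one-port case can be sketched with the widely used three-term error model, in which the raw reading is e00 + e10e01*G / (1 - e11*G) for an actual reflection coefficient G. The sketch below assumes ideal short, open, and load standards and made-up error terms. It is not the algorithm your VNA firmware runs, only the underlying idea: measure three known standards, solve for the error terms, then correct the DUT reading.

```python
import cmath


def solve_linear(a, b):
    """Solve a*x = b for a small square complex system (Gaussian elimination)."""
    n = len(b)
    m = [[complex(v) for v in row] + [complex(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        # Partial pivoting: bring the largest remaining entry onto the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0j] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def raw_reflection(gamma_actual, e00, e11, e10e01):
    """Forward error model: what an uncorrected analyzer would report."""
    return e00 + e10e01 * gamma_actual / (1 - e11 * gamma_actual)


def solve_error_terms(gamma_known, gamma_raw):
    """Three known standards -> (e00, e11, delta), with delta = e00*e11 - e10e01.

    Uses the linearized model: raw = e00 + known*raw*e11 - known*delta.
    """
    rows = [[1, ga * gm, -ga] for ga, gm in zip(gamma_known, gamma_raw)]
    return solve_linear(rows, gamma_raw)


def correct(gamma_raw, e00, e11, delta):
    """Invert the error model to recover the actual reflection coefficient."""
    return (gamma_raw - e00) / (gamma_raw * e11 - delta)


# Hypothetical systematic errors (directivity, source match, reflection tracking).
E00, E11, E10E01 = 0.05 + 0.02j, 0.10 - 0.05j, 0.9 * cmath.exp(-0.3j)

standards = [-1 + 0j, 1 + 0j, 0j]  # ideal short, open, load (an assumption)
raw_standards = [raw_reflection(g, E00, E11, E10E01) for g in standards]
e00, e11, delta = solve_error_terms(standards, raw_standards)

dut = 0.3 + 0.4j  # illustrative DUT reflection coefficient
raw_dut = raw_reflection(dut, E00, E11, E10E01)
corrected = correct(raw_dut, e00, e11, delta)
print(f"raw       = {raw_dut:.4f}")
print(f"corrected = {corrected:.4f}")
```

Real standards are never ideal, which is why the accuracy of the standard definitions matters as much as the math.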

Without proper calibration you risk measurement uncertainty. In some cases, this uncertainty

may be negligible. But at mmWave frequencies, the wavelengths are short and every small

imperfection is amplified. This uncertainty could easily lead to device failure or lack of

compliance to strict standards. It’s more important than ever that you use proper calibration

techniques for making measurements with your VNA.

New Calibration Challenges

Calibration is critical to minimize the expense of re-test and failed parts and increase the

likelihood of success at these mmWave frequencies. Working with mmWave systems

requires you to achieve a broadband calibration over a wide frequency range, often from 500

MHz all the way up to 125 GHz or higher. But calibrating at such a broad frequency range

comes with a new set of challenges that you likely didn’t experience at lower frequency

ranges.

To effectively calibrate your VNA at mmWave frequencies, you need a load that can offer this

broadband frequency coverage. You can get reasonable accuracy using a well-designed

broadband load. A sliding load, however, is not desirable at millimeter wave. There are other

ways to achieve high accuracy. You might consider using a polynomial model, which is often

used at low frequencies. This type of model describes only inductance and return loss of the

loads. With this implementation, you need three bands: low band, high band, and a type of

broadband sliding load. This is typically enough at frequencies below 30 GHz, and you can

get a solid calibration using this method. But, if we try to apply this to a higher frequency

signal, you’ll notice some issues.
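
For reference, one common polynomial description of a short treats its residual inductance as a cubic in frequency and adds a fixed offset delay. The sketch below assumes that form with a lossless offset and a 50 ohm reference. The coefficient values are invented for illustration and do not describe any real calibration kit.

```python
import cmath
import math

Z0 = 50.0  # reference impedance in ohms (assumed)


def short_inductance(freq_hz, coeffs):
    """Cubic polynomial L(f) = L0 + L1*f + L2*f^2 + L3*f^3, in henries."""
    l0, l1, l2, l3 = coeffs
    return l0 + l1 * freq_hz + l2 * freq_hz**2 + l3 * freq_hz**3


def polynomial_short_gamma(freq_hz, coeffs, offset_delay_s):
    """Reflection coefficient of a short modeled as L(f) behind a lossless offset."""
    omega = 2.0 * math.pi * freq_hz
    z = 1j * omega * short_inductance(freq_hz, coeffs)
    gamma_termination = (z - Z0) / (z + Z0)
    # The offset line is crossed twice, so the phase delay is 2 * omega * delay.
    return gamma_termination * cmath.exp(-2j * omega * offset_delay_s)


# Hypothetical coefficients (H, H/Hz, H/Hz^2, H/Hz^3) and offset delay (s).
EXAMPLE_COEFFS = (2.0e-12, -1.0e-23, 2.0e-34, -1.0e-45)
EXAMPLE_DELAY = 20e-12

for f_ghz in (10, 40, 70):
    gamma = polynomial_short_gamma(f_ghz * 1e9, EXAMPLE_COEFFS, EXAMPLE_DELAY)
    print(f"{f_ghz:>3} GHz: |gamma| = {abs(gamma):.4f}, "
          f"phase = {math.degrees(cmath.phase(gamma)):8.2f} deg")
```

Because only four coefficients and one delay describe the standard, any behavior of the physical device that this smooth curve cannot follow shows up as calibration error.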

The Old Model: Polynomial Calibration

Figure 2 shows a short with three different polynomial models (low band, high band, and

broadband) along with their deviations from the actual physical model. The red trace

represents a low band model, one that is optimized for low band performance. It has a good

load, but potentially limited shorts. Around 40 GHz, this model breaks

down and the error starts to grow.

Figure 2: Low vs high vs broadband load models across a frequency range of 0 to 70 GHz

The blue trace represents just using shorts without any low band load. In this case, with the

multiple shorts, you limit the performance at 40 GHz and above.

However, if you are able to combine a broadband model that takes advantage of the lower

band load of the red trace and the high band offset short corrections of the blue trace, your

result would be something like the green trace.

This demonstrates the new challenge of working at mmWave frequencies. As we get into

these broadband frequencies, we need to eliminate the load. To do this, you need to use

multiple shorts to cover the broad frequency range that you are now working in. A polynomial

model is inadequate at millimeter wave frequencies. A new solution is required.

The New Model: Database Calibration

So, we now know we need to use multiple shorts to cover the broad frequency range we are

working with. But, how do we do this? What does this look like in practice?

What you’re looking for is a calibration kit (Figure 3) that eliminates the need for a broadband

load and implements multiple shorts to cover the frequency range that you are working in. For

example, Keysight has a mechanical, coaxial calibration kit that has a low band load, four

shorts, and an open. This kit is able to cover the low frequencies up to 50 GHz with the load.

And, it uses the offset shorts to provide states on the Smith Chart that represent different

impedance conditions.
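
As a simplified picture of why several offset shorts are useful, the sketch below models each one as an ideal short behind a lossless air line of a given length and reports how far apart their reflection phases sit on the Smith Chart at each frequency. The offset lengths are hypothetical, not those of any Keysight kit.

```python
import cmath
import math

C0 = 299_792_458.0  # speed of light in vacuum, m/s


def offset_short_gamma(freq_hz, offset_m):
    """Ideal short behind a lossless air line: gamma = -exp(-j*4*pi*f*l/c)."""
    return -cmath.exp(-4j * math.pi * freq_hz * offset_m / C0)


def min_phase_separation_deg(freq_hz, offsets_m):
    """Smallest angular gap between any two standards around the Smith Chart edge."""
    phases = sorted(
        math.degrees(cmath.phase(offset_short_gamma(freq_hz, length))) % 360.0
        for length in offsets_m
    )
    gaps = [b - a for a, b in zip(phases, phases[1:])]
    gaps.append(360.0 - phases[-1] + phases[0])  # wrap-around gap
    return min(gaps)


# Hypothetical offset lengths in meters (illustration only).
EXAMPLE_OFFSETS = (0.0, 0.4e-3, 0.9e-3, 1.5e-3)

for f_ghz in (50, 75, 110):
    sep = min_phase_separation_deg(f_ghz * 1e9, EXAMPLE_OFFSETS)
    print(f"{f_ghz:>4} GHz: minimum phase separation = {sep:6.1f} deg")
```

When two standards land close together at some frequency, they give the calibration nearly the same information there, so a kit needs enough distinct offsets to keep the states well separated across the whole band.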

Figure 3: Mechanical calibration kit

This implementation uses a database model instead of a polynomial model. As we saw in the

example in Figure 2, the polynomial model doesn’t work at mmWave frequencies, so we

need a different technique.

The database model is a good fit. It characterizes each device using a specified dataset. This

model uses a Smith Chart with known data of various components across a certain frequency

range. For example, for a source match type measurement, let’s take a look at a high reflect

device. What represents a good short at this frequency? We plot that out, and we use this as

our database calibration model. You can do that for any type of measurement you are

working with: plot out the ideal conditions and use that as a model. This dataset then allows

us to calibrate our system.

This calibration can be enhanced by using a least squares fit method, applied in the

computation of the error correction terms. Essentially, this applies a weighting to ensure the

right standard is used in the calibration error extraction.
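
One simple way to picture this is a weighted least squares solve of the linearized one-port error model when more than three standards are measured. The sketch below is an assumption about the general idea, not Keysight's algorithm: the error terms, the standards, and the weights are all invented, and a lower weight simply means that standard is trusted less at this frequency.

```python
import cmath


def solve_linear(a, b):
    """Solve a*x = b for a small square complex system (Gaussian elimination)."""
    n = len(b)
    m = [[complex(v) for v in row] + [complex(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0j] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def raw_reflection(gamma_actual, e00, e11, e10e01):
    """Forward error model: what an uncorrected analyzer would report."""
    return e00 + e10e01 * gamma_actual / (1 - e11 * gamma_actual)


def fit_error_terms(gamma_known, gamma_raw, weights):
    """Weighted least squares for (e00, e11, delta) from N >= 3 standards.

    Each standard gives one row of: raw = e00 + known*raw*e11 - known*delta.
    The weighted normal equations (A^H W A) x = A^H W b are then solved.
    """
    rows = [[1 + 0j, ga * gm, -ga] for ga, gm in zip(gamma_known, gamma_raw)]
    normal = [[sum(w * row[r].conjugate() * row[c] for w, row in zip(weights, rows))
               for c in range(3)] for r in range(3)]
    rhs = [sum(w * row[r].conjugate() * gm
               for w, row, gm in zip(weights, rows, gamma_raw)) for r in range(3)]
    return solve_linear(normal, rhs)


# Hypothetical error terms and standards: a load, an open, and three offset shorts.
E00, E11, E10E01 = 0.05 + 0.02j, 0.10 - 0.05j, 0.9 * cmath.exp(-0.3j)
known = [0j, 1 + 0j] + [-cmath.exp(-1j * theta) for theta in (0.0, 1.2, 2.4)]
raw = [raw_reflection(g, E00, E11, E10E01) for g in known]
weights = [1.0, 0.5, 1.0, 1.0, 1.0]  # illustrative: the open is trusted less

e00, e11, delta = fit_error_terms(known, raw, weights)
print(f"e00 = {e00:.4f}, e11 = {e11:.4f}, e10e01 = {e00 * e11 - delta:.4f}")
```

With noise-free data the fit returns the same terms for any choice of weights. The weights only matter once the standards disagree, which is when they steer the solution toward the standards that are best known at each frequency.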

The Keysight calibration kit in Figure 3 uses these techniques and allows us to effectively

calibrate our system for mmWave testing. It’s important to realize that calibration kits and

methods that work at lower frequencies simply do not work at these broadband frequencies.

You will need to consider a new set of calibration tools that will optimize the accuracy of your

mmWave test set up.

Types

Understanding the type of calibration that’s required at millimeter wave frequencies is critical,

but it can be complicated by the variety of connector types. There are four main ways to

interface with your device under test at mmWave frequencies: coaxial, on-wafer, waveguide,

and over the air (OTA). Each of these methods requires a different approach to calibration.

We are seeing that coaxial and on-wafer are the most prevalent methods, so for this

discussion we will focus on those methods.

Coaxial Interface

A coaxial connection is the traditional interface option. 1.00 mm connectors are an industry

standard and can operate broadband to 120 GHz, making them a simple choice when

transitioning to testing in higher frequency ranges.

1.00 mm coaxial connections are also regulated with an IEEE standard (IEEE 287-2007),

which comes with two main benefits:

1. Your measurements will be traceable, which is critical for tracking and measuring

uncertainty. Figure 4 shows the uncertainty computed real-time when using a 1.00 mm

compliant connector.

2. You can make standards-compliant measurements. These specific measurements are

often necessary when trying to meet an industry or regulatory specification.

Figure 4: Uncertainty computed real-time when using a 1.00 mm compliant connector

On-Wafer Interface

While coaxial connections are commonplace in most labs, on-wafer measurements are

quickly becoming the most commonly used interface method when doing design and device

characterization of mmWave components. The calibration standards for on-wafer

measurements include similar devices to coaxial interfaces (opens, shorts, and loads), but

they are on a substrate, such as an integrated substrate standard (ISS).

Typically, on-wafer calibrations are made using a SOLT (Short Open Load Thru) technique.

But, you may be familiar with one of the other supported calibration methods: SOLR (Short

Open Load Reciprocal), LRM (Line Reflect Match), LRRM (Line Reflect Reflect Match), and

TRL (Thru Reflect Line).

Figure 5: On-wafer measurement test set-up

Regardless of the specific calibration method used, when testing above 50 GHz, you will

likely use an ISS. You’ll need to thin the ISS to a thickness of 10 mils for testing. As you do this,

you will produce modes that are not well controlled. Having uncontrolled modes can lead to

ground coupling. And, since higher order modes are not stable, they can’t be characterized,

and therefore can’t be corrected. With ground coupling present, a reflection

measurement will show serious distortion above 50 GHz.

With ground coupling and distortion present, your measurements will be misleading. This

margin of error is likely significant at the frequencies you are working in. To get a proper

calibration, you need to use an absorber. An absorber will help minimize substrate higher

order mode generation, giving you a cleaner, more accurate measurement.

Power Calibration

When thinking about testing your mmWave devices, you likely know you need to test your

transceivers and converters using a VNA. You also need to accurately determine

the power applied to the device. For example, if you’re looking at harmonics with an amplifier,

this power measurement is critical to understanding how well the amplifier works.

The Early Power Calibration Model

Early efforts to calibrate for power measurements at mmWave frequencies involved using

multiple sensors to cover the frequency range. Typically, the sensors were broadband

waveguide sensors since coaxial sensors are limited to diode-based detection. This method

uses multiple calibrations to cover such a broad frequency range (often about 50-120 GHz),

and the resulting errors were often too great to yield accurate measurements. There is a lot of room for error with this

method, and it isn’t an ideal solution.

The New Power Calibration Model

A more recent innovation is to use broadband power sensor technology. This thermal-based

technology (thermocouple) makes it easier to calibrate the broadband power. Since it uses a

single connection instead of multiple connections it eliminates a lot of potential error that can

result from hooking up multiple sensors.

You’ll see this new technology implemented in power sensors like the one in Figure 6. This

sensor has a 1.0 mm connector and provides coverage from DC up to 120 GHz. When

calibrating for power measurements, it is important to consider the potential error induced

from the power sensors you select and if this error is negligible or not.

Figure 6: Keysight U8489A power sensor

Conclusion

You need to be able to make accurate, repeatable measurements to avoid design failures and

missed deadlines. Tight margins at mmWave frequencies mean new, more precise calibration

techniques are needed. Understanding how calibration differs in the millimeter wave

frequency band is critical for your success. While test hardware quality is as important as ever,

your test set up is only as good as its calibration. Proper calibration is the first step to a reliable

test setup.

Now that you know some of the key areas to evaluate, consider reevaluating your test set up,

calibration tools and techniques. Learn more about Keysight’s mmWave test sets and calibration

tools:

• N9041B UXA Signal Analyzer

• N5291A 900 Hz to 120 GHz PNA MM-Wave System

• U8489A DC to 120 GHz USB Thermocouple Power Sensor

www.keysight.com

For more information on Keysight Technologies’ products, applications or services,

please contact your local Keysight office. The complete list is available at:

www.keysight.com/find/contactus

This information is subject to change without notice. © Keysight Technologies, 2018, Published in USA, May 17, 2018, 5992-3026EN

You might also like

- Seismic Inversion Techniques for Improved Reservoir CharacterizationDocument10 pagesSeismic Inversion Techniques for Improved Reservoir Characterizationbambus30100% (1)

- Digital Modulations using MatlabFrom EverandDigital Modulations using MatlabRating: 4 out of 5 stars4/5 (6)

- Transformer InsulationDocument14 pagesTransformer InsulationcjtagayloNo ratings yet

- New Japa Retreat NotebookDocument48 pagesNew Japa Retreat NotebookRob ElingsNo ratings yet

- The K FactorDocument11 pagesThe K FactorSOURAV PRASADNo ratings yet

- Focus Group Discussion PDFDocument40 pagesFocus Group Discussion PDFroven desu100% (1)

- Handbook of Microwave Component Measurements: with Advanced VNA TechniquesFrom EverandHandbook of Microwave Component Measurements: with Advanced VNA TechniquesRating: 4 out of 5 stars4/5 (1)

- Horizontal Machining Centers: No.40 Spindle TaperDocument8 pagesHorizontal Machining Centers: No.40 Spindle TaperMax Litvin100% (1)

- S4 - SD - HOTS in Practice - EnglishDocument65 pagesS4 - SD - HOTS in Practice - EnglishIries DanoNo ratings yet

- How Can Distributed Architecture Help Mmwave Network Analysis?Document11 pagesHow Can Distributed Architecture Help Mmwave Network Analysis?MRousstiaNo ratings yet

- Analog vs. Digital Galvanometer Technology - An IntroductionDocument5 pagesAnalog vs. Digital Galvanometer Technology - An IntroductionendonezyalitanilNo ratings yet

- LabviewDocument5 pagesLabviewAmineSlamaNo ratings yet

- Signal Processing: Lap-Luat Nguyen, Roland Gautier, Anthony Fiche, Gilles Burel, Emanuel RadoiDocument19 pagesSignal Processing: Lap-Luat Nguyen, Roland Gautier, Anthony Fiche, Gilles Burel, Emanuel RadoiRidouane OulhiqNo ratings yet

- Getting Back To The Basics of Electrical MeasurementsDocument12 pagesGetting Back To The Basics of Electrical MeasurementsAvinash RaghooNo ratings yet

- De-Embedding Using A VnaDocument6 pagesDe-Embedding Using A VnaA. VillaNo ratings yet

- Webcast Reminder RF & Microwave Component Measurement FundamentalsDocument26 pagesWebcast Reminder RF & Microwave Component Measurement FundamentalspNo ratings yet

- Calibration Techniques For Improved MIMO and Beamsteering CharacterizationDocument12 pagesCalibration Techniques For Improved MIMO and Beamsteering CharacterizationAntonio GeorgeNo ratings yet

- Fi LiDocument17 pagesFi LiFikaduNo ratings yet

- Hyperparameter Tuning For Support Vector Machines - C and Gamma Parameters - by Soner Yıldırım - Towards Data ScienceDocument7 pagesHyperparameter Tuning For Support Vector Machines - C and Gamma Parameters - by Soner Yıldırım - Towards Data ScienceAnalog4 MeNo ratings yet

- New Ways To Measure Torque May Make Your Current Method ObsoleteDocument6 pagesNew Ways To Measure Torque May Make Your Current Method ObsoleteDrake Moses KabbsNo ratings yet

- Affordable Solutions For Testing 28 GHZ 5G Devices With Your 6 GHZ Lab InstrumentationDocument5 pagesAffordable Solutions For Testing 28 GHZ 5G Devices With Your 6 GHZ Lab InstrumentationArun KumarNo ratings yet

- ML_Lec-6Document16 pagesML_Lec-6BHARGAV RAONo ratings yet

- Lab 7.strain Gages With Labview.v2 PDFDocument11 pagesLab 7.strain Gages With Labview.v2 PDFPrasanna KumarNo ratings yet

- Some Fiber Optic Measurement ProceduresDocument6 pagesSome Fiber Optic Measurement ProceduresAmir SalahNo ratings yet

- Techniques For VNA MeasurementsDocument16 pagesTechniques For VNA MeasurementsA. VillaNo ratings yet

- A 95-dB Linear Low-Power Variable Gain AmplifierDocument10 pagesA 95-dB Linear Low-Power Variable Gain Amplifierkavicharan414No ratings yet

- App Note 11410-00270Document8 pagesApp Note 11410-00270tony2x4No ratings yet

- A Compact Ultra-Wideband Load-Pull Measurement SystemDocument6 pagesA Compact Ultra-Wideband Load-Pull Measurement Systemoğuz odabaşıNo ratings yet

- Accurate Power Measurements on Modern Communication SystemsDocument12 pagesAccurate Power Measurements on Modern Communication Systems123vb123No ratings yet

- PublishedPaper 2020-APCSM MachineLearningDocument8 pagesPublishedPaper 2020-APCSM MachineLearningJilmil KalitaNo ratings yet

- Attenuation Measurement White Paper FinalDocument7 pagesAttenuation Measurement White Paper Finalm_agasenNo ratings yet

- The Design of N Bit Quantization Sigma-Delta Analog To Digital ConverterDocument4 pagesThe Design of N Bit Quantization Sigma-Delta Analog To Digital ConverterBhiNo ratings yet

- Relevance of Project in Present Context: E&Ce Dept GndecbDocument7 pagesRelevance of Project in Present Context: E&Ce Dept GndecbVijaya ShreeNo ratings yet

- First Steps in 5G: Overcoming New Radio Device Design Challenges SeriesDocument8 pagesFirst Steps in 5G: Overcoming New Radio Device Design Challenges Seriestruongthang nguyenNo ratings yet

- Advanced Adjustment ConceptsDocument18 pagesAdvanced Adjustment ConceptsAlihuertANo ratings yet

- Application Note 1287-11 - Specifying Calibration Standards and Kits For Agilent Vector Network AnalyzersDocument42 pagesApplication Note 1287-11 - Specifying Calibration Standards and Kits For Agilent Vector Network Analyzerssanjeevsoni64No ratings yet

- I Ee ExploreDocument6 pagesI Ee ExplorebaruaeeeNo ratings yet

- A Generic DMM Test and Calibration Strategy: Peter B. CrispDocument11 pagesA Generic DMM Test and Calibration Strategy: Peter B. CrispRangga K NegaraNo ratings yet

- Curve Correction in Atomic Absorption: Application NoteDocument6 pagesCurve Correction in Atomic Absorption: Application NoteShirle Palomino MercadoNo ratings yet

- ISO6892Document5 pagesISO6892jeridNo ratings yet

- Blog Advanced RF Pa TestingDocument18 pagesBlog Advanced RF Pa Testingthangaraj_icNo ratings yet

- 5989 4840enDocument41 pages5989 4840enEugenCartiNo ratings yet

- 5989 5765enDocument48 pages5989 5765enTiến ĐạtNo ratings yet

- Digicomms Revision Chap1 1Document5 pagesDigicomms Revision Chap1 1Nicko RicardoNo ratings yet

- Optimizing Low-Dwell Sweeps with Vibrator Simulation and QCDocument5 pagesOptimizing Low-Dwell Sweeps with Vibrator Simulation and QCMuhammad FurqanNo ratings yet

- GMSK Modulation BasicsDocument3 pagesGMSK Modulation BasicsLaxman SwamyNo ratings yet

- Help To Select Your Signal Level Meter: To Begin With There Are Three Varieties of MetersDocument3 pagesHelp To Select Your Signal Level Meter: To Begin With There Are Three Varieties of Metersanmol149No ratings yet

- Electroforesis Cuantificación Band - Un Arte o Una CienciaDocument5 pagesElectroforesis Cuantificación Band - Un Arte o Una Ciencialmrc101No ratings yet

- Micro Leveling Tech NoteDocument4 pagesMicro Leveling Tech NotemarcusnicholasNo ratings yet

- The Prize Collecting Steiner Tree Problem: Theory and PracticeDocument10 pagesThe Prize Collecting Steiner Tree Problem: Theory and PracticeJitender KumarNo ratings yet

- Optics and Lasers in Engineering: Junfei Dai, Beiwen Li, Song ZhangDocument7 pagesOptics and Lasers in Engineering: Junfei Dai, Beiwen Li, Song Zhangmamoto2312No ratings yet

- Use of Dithering in ADCDocument12 pagesUse of Dithering in ADCRajeev Ramachandran100% (1)

- GMSK Basics: Signal Using MSK ModulationDocument4 pagesGMSK Basics: Signal Using MSK ModulationsagardongarNo ratings yet

- Digital MultimeterDocument53 pagesDigital MultimeterVincent KorieNo ratings yet

- Gaussian Minimum Shift KeyingDocument4 pagesGaussian Minimum Shift KeyingShubham SinghalNo ratings yet

- Thesis BayerDocument5 pagesThesis Bayercarlamolinafortwayne100% (2)

- Measuring EDGE SignalsDocument3 pagesMeasuring EDGE SignalsKien TranNo ratings yet

- Monitoring Rotating Machinery VibrationsDocument14 pagesMonitoring Rotating Machinery VibrationsSEBINNo ratings yet

- CMW500 - Wireless Device Production Test PDFDocument34 pagesCMW500 - Wireless Device Production Test PDFAlvaro Cea CamposNo ratings yet

- 10step To A Perfect Digital DemodulationDocument16 pages10step To A Perfect Digital Demodulationbayman66No ratings yet

- High Speed Numerical Techniques For Transmission Line Protection S D A PickeringDocument4 pagesHigh Speed Numerical Techniques For Transmission Line Protection S D A Pickeringkvasquez1979No ratings yet

- Q FactorDocument12 pagesQ FactorNida AkNo ratings yet

- High-Performance D/A-Converters: Application to Digital TransceiversFrom EverandHigh-Performance D/A-Converters: Application to Digital TransceiversNo ratings yet

- Software Radio: Sampling Rate Selection, Design and SynchronizationFrom EverandSoftware Radio: Sampling Rate Selection, Design and SynchronizationNo ratings yet

- RF Molex VerticalDocument1 pageRF Molex VerticalMRousstiaNo ratings yet

- Example - Data SheetDocument2 pagesExample - Data SheetMRousstiaNo ratings yet

- DWG No.: Technical specifications for SMA jack end launch connectorDocument1 pageDWG No.: Technical specifications for SMA jack end launch connectorMRousstiaNo ratings yet

- Samtec Connector - MaleDocument1 pageSamtec Connector - MaleMRousstiaNo ratings yet

- SMA - Male - Pi 142 0801 811Document4 pagesSMA - Male - Pi 142 0801 811MRousstiaNo ratings yet

- Hard Gold Plating Vs Soft Gold Plating - Which Is Right For My Application?Document5 pagesHard Gold Plating Vs Soft Gold Plating - Which Is Right For My Application?MRousstiaNo ratings yet

- RF Molex VerticalDocument1 pageRF Molex VerticalMRousstiaNo ratings yet

- EMIFILr Chip Ferrite Bead Data SheetDocument4 pagesEMIFILr Chip Ferrite Bead Data SheetMRousstiaNo ratings yet

- Murata LDocument11 pagesMurata LMRousstiaNo ratings yet

- MT 086Document10 pagesMT 086Muhammad IkhsanNo ratings yet

- RO3200 Laminate Data Sheet RO3203 RO3206 RO3210Document2 pagesRO3200 Laminate Data Sheet RO3203 RO3206 RO3210MRousstiaNo ratings yet

- Creating a 4 Layer PCBDocument9 pagesCreating a 4 Layer PCBMRousstiaNo ratings yet

- Philadelphia 5G Summit AgendaDocument3 pagesPhiladelphia 5G Summit AgendaMRousstiaNo ratings yet

- RO4000 Laminates - Data SheetDocument4 pagesRO4000 Laminates - Data SheetFuggNo ratings yet

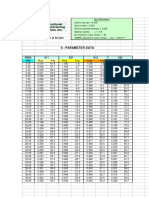

- S - Parameter Data: Ims P/N RCX 0302 PW A T 50 OhmDocument1 pageS - Parameter Data: Ims P/N RCX 0302 PW A T 50 OhmMRousstiaNo ratings yet

- PrberDocument3 pagesPrberMRousstiaNo ratings yet

- RCX Partial Wraps PDFDocument2 pagesRCX Partial Wraps PDFMRousstiaNo ratings yet

- How Do I Reset My Workspace Panels Back To Their Default Positions?Document3 pagesHow Do I Reset My Workspace Panels Back To Their Default Positions?MRousstiaNo ratings yet

- Call FOR Papers: Europe'S Premier Microwave, RF, Wireless and Radar EventDocument11 pagesCall FOR Papers: Europe'S Premier Microwave, RF, Wireless and Radar EventMRousstiaNo ratings yet

- RCX Partial Wraps PDFDocument2 pagesRCX Partial Wraps PDFMRousstiaNo ratings yet

- MFDocument5 pagesMFschoebelNo ratings yet

- 5992 1822enDocument29 pages5992 1822enMRousstiaNo ratings yet

- Don't Sacrifice The Benefits of Millimeter-Wave Equipment: by Missing The BasicsDocument13 pagesDon't Sacrifice The Benefits of Millimeter-Wave Equipment: by Missing The BasicsMRousstiaNo ratings yet

- 5992 2348enDocument7 pages5992 2348enMRousstiaNo ratings yet

- Don't Sacrifice The Benefits of Millimeter-Wave Equipment: by Missing The BasicsDocument13 pagesDon't Sacrifice The Benefits of Millimeter-Wave Equipment: by Missing The BasicsMRousstiaNo ratings yet

- European Microwave Week 2018: "Passion For Microwaves"Document1 pageEuropean Microwave Week 2018: "Passion For Microwaves"MRousstiaNo ratings yet

- Probe Selection GuideDocument29 pagesProbe Selection GuideMRousstiaNo ratings yet

- PGACPDocument4 pagesPGACPMRousstiaNo ratings yet

- Theory Is An Explanation Given To Explain Certain RealitiesDocument7 pagesTheory Is An Explanation Given To Explain Certain Realitiestaizya cNo ratings yet

- Alfa Romeo Giulia Range and Quadrifoglio PricelistDocument15 pagesAlfa Romeo Giulia Range and Quadrifoglio PricelistdanielNo ratings yet

- 09 Chapter TeyyamDocument48 pages09 Chapter TeyyamABNo ratings yet

- Lab Report AcetaminophenDocument5 pagesLab Report Acetaminophenapi-487596846No ratings yet

- PA2 Value and PD2 ValueDocument4 pagesPA2 Value and PD2 Valueguddu1680No ratings yet

- Canopen-Lift Shaft Installation: W+W W+WDocument20 pagesCanopen-Lift Shaft Installation: W+W W+WFERNSNo ratings yet

- Dental System SoftwareDocument4 pagesDental System SoftwareHahaNo ratings yet

- LM385Document14 pagesLM385vandocardosoNo ratings yet

- Degree and Order of ODEDocument7 pagesDegree and Order of ODEadiba adibNo ratings yet

- Joel Werner ResumeDocument2 pagesJoel Werner Resumeapi-546810653No ratings yet

- Preparatory Lights and Perfections: Joseph Smith's Training with the Urim and ThummimDocument9 pagesPreparatory Lights and Perfections: Joseph Smith's Training with the Urim and ThummimslightlyguiltyNo ratings yet

- Science SimulationsDocument4 pagesScience Simulationsgk_gbuNo ratings yet

- Intraoperative Nursing Care GuideDocument12 pagesIntraoperative Nursing Care GuideDarlyn AmplayoNo ratings yet

- Andrew Linklater - The Transformation of Political Community - E H Carr, Critical Theory and International RelationsDocument19 pagesAndrew Linklater - The Transformation of Political Community - E H Carr, Critical Theory and International Relationsmaria luizaNo ratings yet

- ProSteel Connect EditionDocument2 pagesProSteel Connect EditionInfrasys StructuralNo ratings yet

- GEHC DICOM Conformance CentricityRadiologyRA600 V6 1 DCM 1030 001 Rev6 1 1Document73 pagesGEHC DICOM Conformance CentricityRadiologyRA600 V6 1 DCM 1030 001 Rev6 1 1mrzdravko15No ratings yet

- Journal 082013Document100 pagesJournal 082013Javier Farias Vera100% (1)

- Sample of Accident Notification & Investigation ProcedureDocument2 pagesSample of Accident Notification & Investigation Procedurerajendhar100% (1)

- Electronics Foundations - Basic CircuitsDocument20 pagesElectronics Foundations - Basic Circuitsccorp0089No ratings yet

- MacEwan APA 7th Edition Quick Guide - 1Document4 pagesMacEwan APA 7th Edition Quick Guide - 1Lynn PennyNo ratings yet

- Activities/Assessments 2:: Determine The Type of Sampling. (Ex. Simple Random Sampling, Purposive Sampling)Document2 pagesActivities/Assessments 2:: Determine The Type of Sampling. (Ex. Simple Random Sampling, Purposive Sampling)John Philip Echevarria0% (2)

- Current Developments in Testing Item Response Theory (IRT) : Prepared byDocument32 pagesCurrent Developments in Testing Item Response Theory (IRT) : Prepared byMalar VengadesNo ratings yet

- Assessment in Southeast AsiaDocument17 pagesAssessment in Southeast AsiathuckhuyaNo ratings yet

- History shapes Philippine societyDocument4 pagesHistory shapes Philippine societyMarvin GwapoNo ratings yet

- SPSS-TEST Survey QuestionnaireDocument2 pagesSPSS-TEST Survey QuestionnaireAkshay PatelNo ratings yet