FDFDF

Uploaded by akshay patri

Copyright: © All Rights Reserved

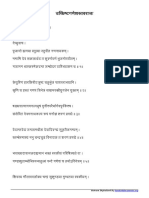

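2. Simple versus Multiple Regression Coefficients. This is based on Baltagi (1987b). Consider the multiple regression

$$Y_i = \alpha + \beta_2 X_{2i} + \beta_3 X_{3i} + u_i, \qquad i = 1, 2, \ldots, n$$

along with the following auxiliary regressions:

$$X_{2i} = \hat{a} + \hat{b} X_{3i} + \hat{\nu}_{2i}$$

$$X_{3i} = \hat{c} + \hat{d} X_{2i} + \hat{\nu}_{3i}$$

In section 3, we showed that $\hat{\beta}_2$, the OLS estimate of $\beta_2$, can be interpreted as a simple regression of $Y$ on the OLS residuals $\hat{\nu}_2$. A similar interpretation can be given to $\hat{\beta}_3$. Kennedy (1981, p. 416) claims that $\hat{\beta}_2$ is not necessarily the same as $\hat{\delta}_2$, the OLS estimate of $\delta_2$ obtained from the regression of $Y$ on $\hat{\nu}_2$, $\hat{\nu}_3$ and a constant, $Y_i = \gamma + \delta_2 \hat{\nu}_{2i} + \delta_3 \hat{\nu}_{3i} + w_i$. Prove this claim by finding a relationship between the $\hat{\beta}$'s and the $\hat{\delta}$'s.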
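A quick numerical check of Kennedy's claim. Assuming $\hat{b}\hat{d} \neq 1$ (no perfect collinearity), a constant, $\hat{\nu}_2$ and $\hat{\nu}_3$ span the same space as a constant, $X_2$ and $X_3$, so both regressions give the same fitted values. Matching coefficients then suggests $\hat{\beta}_2 = \hat{\delta}_2 - \hat{d}\hat{\delta}_3$ and $\hat{\beta}_3 = \hat{\delta}_3 - \hat{b}\hat{\delta}_2$, i.e. $\hat{\delta}_2 = (\hat{\beta}_2 + \hat{d}\hat{\beta}_3)/(1 - \hat{b}\hat{d})$, which equals $\hat{\beta}_2$ only in special cases such as $\hat{d} = 0$. The sketch below checks this on made-up data; the data values and the helper names (`ols2`, `slope`, `demean`) are illustrative assumptions, not part of the original problem.

```python
# Numerical check of the relation between the multiple-regression slopes
# (beta2, beta3) and the slopes (delta2, delta3) from regressing Y on the
# auxiliary-regression residuals nu2, nu3 and a constant.
# The data below are made up purely for illustration.

def mean(v):
    return sum(v) / len(v)

def demean(v):
    m = mean(v)
    return [a - m for a in v]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def slope(y, x):
    """OLS slope of y on x with a constant (computed in deviations from means)."""
    xd, yd = demean(x), demean(y)
    return dot(xd, yd) / dot(xd, xd)

def ols2(y, x2, x3):
    """OLS slopes of y on a constant, x2 and x3 (2x2 normal equations in deviations)."""
    a, b, c = demean(x2), demean(x3), demean(y)
    s22, s33, s23 = dot(a, a), dot(b, b), dot(a, b)
    s2y, s3y = dot(a, c), dot(b, c)
    det = s22 * s33 - s23 ** 2
    return (s33 * s2y - s23 * s3y) / det, (s22 * s3y - s23 * s2y) / det

X2 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
X3 = [2.0, 1.0, 4.0, 3.0, 6.0, 8.0]
Y = [3.1, 3.9, 7.2, 7.8, 11.5, 14.0]

beta2, beta3 = ols2(Y, X2, X3)

# Auxiliary regressions: X2 on X3 (slope b_hat) and X3 on X2 (slope d_hat).
b_hat, d_hat = slope(X2, X3), slope(X3, X2)
nu2 = [x2 - mean(X2) - b_hat * (x3 - mean(X3)) for x2, x3 in zip(X2, X3)]
nu3 = [x3 - mean(X3) - d_hat * (x2 - mean(X2)) for x2, x3 in zip(X2, X3)]

# Regression of Y on a constant, nu2 and nu3.
delta2, delta3 = ols2(Y, nu2, nu3)

print("beta2:", beta2, " delta2:", delta2)
print("(beta2 + d_hat*beta3)/(1 - b_hat*d_hat):", (beta2 + d_hat * beta3) / (1 - b_hat * d_hat))
```

With these data $\hat{d} \neq 0$ and $\hat{\beta}_3 + \hat{b}\hat{\beta}_2 \neq 0$, so $\hat{\delta}_2$ and $\hat{\beta}_2$ differ, as Kennedy claims.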

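15. Consider the simple regression with no constant: $Y_i = \beta X_i + u_i$, $i = 1, 2, \ldots, n$, where $u_i \sim \text{IID}(0, \sigma^2)$ independent of $X_i$. Theil (1971) showed that among all linear estimators in $Y_i$, the minimum mean square estimator for $\beta$, i.e., that which minimizes $E(\tilde{\beta} - \beta)^2$, is given by

$$\tilde{\beta} = \beta^2 \sum_{i=1}^n X_i Y_i \Big/ \left(\beta^2 \sum_{i=1}^n X_i^2 + \sigma^2\right).$$

(a) Show that $E(\tilde{\beta}) = \beta/(1 + c)$, where $c = \sigma^2 / \left(\beta^2 \sum_{i=1}^n X_i^2\right) > 0$.

(b) Conclude that $\text{Bias}(\tilde{\beta}) = E(\tilde{\beta}) - \beta = -[c/(1 + c)]\beta$. Note that this bias is positive (negative) when $\beta$ is negative (positive). This also means that $\tilde{\beta}$ is biased towards zero.

(c) Show that $\text{MSE}(\tilde{\beta}) = E(\tilde{\beta} - \beta)^2 = \sigma^2 / \left[\sum_{i=1}^n X_i^2 + (\sigma^2/\beta^2)\right]$. Conclude that it is smaller than $\text{MSE}(\hat{\beta}_{OLS})$.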
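Because $\tilde{\beta}$ is linear in the $Y_i$, its moments can be computed exactly: writing $\tilde{\beta} = w \sum X_i Y_i$ with $w = \beta^2/(\beta^2 \sum X_i^2 + \sigma^2)$, we get $E(\tilde{\beta}) = w\beta \sum X_i^2$ and $\text{var}(\tilde{\beta}) = w^2 \sigma^2 \sum X_i^2$. The sketch below plugs in illustrative values of $\beta$, $\sigma^2$ and $X_i$ (assumptions, not from the problem) and checks the expressions in (a) to (c) against these exact moments. Note that $\tilde{\beta}$ depends on the unknown $\beta$ and $\sigma^2$, so it is not a feasible estimator in practice.

```python
# Exact moments of Theil's minimum-MSE linear estimator
#   beta_tilde = beta^2 * sum(X*Y) / (beta^2 * sum(X^2) + sigma^2)
# in the no-constant model Y_i = beta * X_i + u_i, u_i ~ IID(0, sigma^2).
# beta, sigma2 and X are illustrative values only.

beta = 2.0
sigma2 = 9.0
X = [0.5, 1.0, 1.5, 2.0, 3.0]

sxx = sum(x * x for x in X)
w = beta ** 2 / (beta ** 2 * sxx + sigma2)  # beta_tilde = w * sum(X_i * Y_i)

# E(sum X_i Y_i) = beta * sxx and var(sum X_i Y_i) = sigma2 * sxx
mean_tilde = w * beta * sxx
var_tilde = w ** 2 * sigma2 * sxx
bias_tilde = mean_tilde - beta
mse_tilde = var_tilde + bias_tilde ** 2  # MSE = variance + bias^2

c = sigma2 / (beta ** 2 * sxx)
mse_ols = sigma2 / sxx  # OLS is unbiased with variance sigma^2 / sum X_i^2

print("E(beta_tilde):", mean_tilde, " beta/(1+c):", beta / (1 + c))
print("Bias:", bias_tilde, " -c/(1+c)*beta:", -c / (1 + c) * beta)
print("MSE:", mse_tilde, " formula:", sigma2 / (sxx + sigma2 / beta ** 2))
print("MSE(OLS):", mse_ols)
```

Since $\sigma^2/\beta^2 > 0$, the denominator in (c) exceeds $\sum X_i^2$, which is why $\text{MSE}(\tilde{\beta})$ comes out below $\sigma^2/\sum X_i^2$.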

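2. For the simple linear regression with heteroskedasticity of the form $E(u_i^2) = \sigma_i^2 = b x_i^2$, where $b > 0$, show that $E\left(s^2 / \sum_{i=1}^n x_i^2\right)$ understates the variance of $\hat{\beta}_{OLS}$, which is

$$\text{var}(\hat{\beta}_{OLS}) = \sum_{i=1}^n x_i^2 \sigma_i^2 \Big/ \left(\sum_{i=1}^n x_i^2\right)^2.$$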
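A numerical sketch of the comparison, assuming (as the variance formula implies) that the model includes a constant and $x_i = X_i - \bar{X}$, with $s^2 = \sum e_i^2/(n-2)$. Under heteroskedasticity the residual sum of squares satisfies $E(\sum e_i^2) = \sum \sigma_i^2 (1 - h_{ii})$, where $h_{ii} = 1/n + x_i^2/\sum x_j^2$ is the leverage of observation $i$. Substituting $\sigma_i^2 = b x_i^2$, the claim reduces to $\left(\sum x_i^2\right)^2 < n \sum x_i^4$, which holds by the Cauchy-Schwarz inequality unless all $x_i^2$ are equal. The values of `X` and `b` below are illustrative assumptions.

```python
# Compare E(s^2 / sum x_i^2), the usual OLS variance estimate, with the true
# variance of the OLS slope when E(u_i^2) = sigma_i^2 = b * x_i^2.
# X and b are illustrative values; x_i = X_i - mean(X) (deviations from the mean).

b = 0.5
X = [1.0, 2.0, 4.0, 5.0, 9.0, 12.0]
n = len(X)

xbar = sum(X) / n
x = [Xi - xbar for Xi in X]
sxx = sum(xi ** 2 for xi in x)
sigma2 = [b * xi ** 2 for xi in x]  # heteroskedastic error variances

# True variance of beta_hat_OLS = sum x_i^2 sigma_i^2 / (sum x_i^2)^2
true_var = sum(xi ** 2 * s for xi, s in zip(x, sigma2)) / sxx ** 2

# E(RSS) = sum sigma_i^2 * (1 - h_ii), with h_ii = 1/n + x_i^2 / sxx
# (diagonal of the hat matrix for a regression on a constant and X).
expected_rss = sum(s * (1 - 1 / n - xi ** 2 / sxx) for xi, s in zip(x, sigma2))
expected_s2 = expected_rss / (n - 2)
naive_var = expected_s2 / sxx  # E(s^2 / sum x_i^2)

print("E(s^2 / sum x^2):", naive_var, " true var:", true_var)
```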
