Matrix Multiplication Algorithms for Parallel Computing

MATRIX MULTIPLICATION

(Part b)

By:

Shahrzad Abedi

Professor: Dr. Haj Seyed Javadi

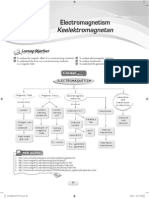

Matrix Multiplication

- SIMD
- MIMD
  - Multiprocessors
  - Multicomputers

Chapter 7: Matrix Multiplication, Parallel Computing: Theory and Practice, Michael J. Quinn

Matrix Multiplication Algorithms for Multiprocessors

[Figure: processors p1, p2, p3, and p4]

Matrix Multiplication Algorithm for a UMA Multiprocessor

[Figure: processors p1, p2, p3, and p4]

Matrix Multiplication Algorithm for a UMA Multiprocessor

[Figure: matrices C, A, and B, with processes p1 and p2]

Example: n = 8, p = 2, so n/p = 4.

Each process must read n/p rows of A and must read every element of B n/p times.

Matrix Multiplication Algorithms for Multiprocessors

Question: Which loop of the sequential matrix multiplication algorithm should be made parallel?

Grain size: the amount of work performed between processor interactions.

Ratio of computation time to communication time: computation time / communication time

Sequential Matrix Multiplication Algorithm
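The algorithm on this slide did not survive as text. A minimal sketch of the usual three-loop sequential algorithm, assuming matrices stored as lists of rows (the function name `matmul_sequential` is illustrative, not taken from the slides), could look like this:

```python
def matmul_sequential(a, b):
    """Multiply an l x m matrix by an m x n matrix with three nested loops."""
    l, m, n = len(a), len(b), len(b[0])
    c = [[0] * n for _ in range(l)]
    for i in range(l):          # i loop: rows of C
        for j in range(n):      # j loop: columns of C
            for k in range(m):  # k loop: accumulate the inner product
                c[i][j] += a[i][k] * b[k][j]
    return c


if __name__ == "__main__":
    print(matmul_sequential([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
    # prints [[19, 22], [43, 50]]
```

The loop names i, j, and k match the ones discussed on the following slides.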

Matrix Multiplication Algorithms for Multiprocessors

Design strategy: if load balancing is not a problem, maximize grain size.

Question: Which loop should be made parallel: i, j, or k?

The k loop has a data dependency: every iteration accumulates into the same element of C.

If the j loop is parallelized, grain size = $O(n^3/(np)) = O(n^2/p)$.

If the i loop is parallelized, grain size = $O(n^3/p)$.

Matrix Multiplication Algorithm for a UMA Multiprocessor

Parallelizing the i loop
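As a rough illustration of parallelizing the i loop, the sketch below gives each of p workers a contiguous chunk of n/p rows of C. The names `multiply_rows` and `matmul_parallel_i` are hypothetical, and a thread pool is used only to show the partitioning. In CPython, threads do not run pure-Python arithmetic in parallel, so this shows the structure rather than real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def multiply_rows(a, b, row_start, row_end):
    """Compute rows row_start..row_end-1 of C = A x B (one worker's share)."""
    m, n = len(b), len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(m)) for j in range(n)]
        for i in range(row_start, row_end)
    ]


def matmul_parallel_i(a, b, p):
    """Split the i loop into p contiguous chunks of n/p rows each."""
    n = len(a)
    assert n % p == 0, "this sketch assumes p divides the number of rows"
    chunk = n // p
    with ThreadPoolExecutor(max_workers=p) as pool:
        futures = [
            pool.submit(multiply_rows, a, b, w * chunk, (w + 1) * chunk)
            for w in range(p)
        ]
        # Waiting for all workers here is the only synchronization point.
        c = []
        for f in futures:
            c.extend(f.result())
    return c
```

Each worker touches only its own rows of C, so no locking is needed while the chunks are computed.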

Matrix Multiplication Algorithm for a UMA Multiprocessor

Each process computes n/p rows, and each row takes $\Theta(n^2)$ time: $(n/p) \times \Theta(n^2) = \Theta(n^3/p)$.

Synchronization overhead: $\Theta(p)$.

Complexity: $\Theta(n^3/p + p)$.

Matrix Multiplication in Loosely Coupled Multiprocessors

Some matrix elements may be much easier to access than others.

It is important to keep as many memory references local as possible.

In the previous UMA algorithm, every process must access n/p rows of matrix A and access every element of B n/p times.

Only a single addition and a single multiplication occur for every element of B fetched. This is not a good ratio!

Implementing this algorithm on NUMA multiprocessors yields poor speedup!

Matrix Multiplication in Loosely Coupled Multiprocessors

Another method must be found to partition the problem.

An attractive method: block matrix multiplication.

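Block Matrix Multiplication

A and B are both n x n matrices, where n = 2k.

A and B can each be thought of as a conglomerate of 4 smaller matrices, each of size k x k.

Given this partitioning of A and B into blocks, C is defined as follows:

$$C = \begin{pmatrix} C_{1,1} & C_{1,2} \\ C_{2,1} & C_{2,2} \end{pmatrix} = \begin{pmatrix} A_{1,1}B_{1,1} + A_{1,2}B_{2,1} & A_{1,1}B_{1,2} + A_{1,2}B_{2,2} \\ A_{2,1}B_{1,1} + A_{2,2}B_{2,1} & A_{2,1}B_{1,2} + A_{2,2}B_{2,2} \end{pmatrix}$$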
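The block formulation can be checked numerically. The sketch below assumes n = 2k and matrices stored as lists of rows. It builds each k x k block of C from two block products and can be compared against the ordinary product. All helper names (`matmul`, `add`, `block`, `block_matmul_2x2`) are illustrative.

```python
def matmul(a, b):
    """Ordinary matrix product, used for the k x k block products."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def add(a, b):
    """Element-wise sum of two equally sized matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def block(mat, bi, bj, k):
    """Return the k x k block in block-row bi, block-column bj (0-based)."""
    return [row[bj * k:(bj + 1) * k] for row in mat[bi * k:(bi + 1) * k]]


def block_matmul_2x2(a, b):
    """Multiply n x n matrices (n = 2k) through their four k x k blocks."""
    k = len(a) // 2
    c = [[0] * (2 * k) for _ in range(2 * k)]
    for bi in range(2):
        for bj in range(2):
            # Block (bi, bj) of C is the sum of two block products.
            cb = add(matmul(block(a, bi, 0, k), block(b, 0, bj, k)),
                     matmul(block(a, bi, 1, k), block(b, 1, bj, k)))
            for r in range(k):
                c[bi * k + r][bj * k:(bj + 1) * k] = cb[r]
    return c
```

For any pair of 4 x 4 integer matrices, `block_matmul_2x2(a, b)` should equal `matmul(a, b)`.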

Block Matrix Multiplication

For example, if there are $p = (n/k)^2$ processes, then matrix multiplication is done by dividing A and B into p blocks of size k x k.

Block Matrix Multiplication

STEP 1: Compute $C_{i,j} = A_{i,1} B_{1,j}$

[Figure: blocks of A and B used by processes P1, P2, P3, and P4 in step 1]

Block Matrix Multiplication

STEP 2: Compute $C_{i,j} = C_{i,j} + A_{i,2} B_{2,j}$

[Figure: blocks of A and B used by processes P1, P2, P3, and P4 in step 2]

Block Matrix Multiplication

Each block multiplication requires $2k^2$ memory fetches, $k^3$ additions, and $k^3$ multiplications.

The number of arithmetic operations per memory access has risen from 2 in the previous algorithm to:

$$\frac{2k^3}{2k^2} = k = \frac{n}{\sqrt{p}}$$

Matrix Multiplication Algorithm for NUMA Multiprocessors

Try to resolve memory contention as much as possible.

Increase the locality of memory references to reduce memory access time.

Design strategy: reduce average memory latency time by increasing locality.

Algorithms for Multicomputers: Row-Column Oriented Algorithm

Partition matrix A into rows and B into columns (n is a power of 2 and we are executing the algorithm on an n-processor hypercube).

One imaginable parallelization:

Parallelize the outer loop (i).

All parallel processes access column 0 of B, then column 1 of B, etc.

This results in a sequence of broadcast steps, each having time complexity $\Theta(\log n)$ on an n-processor hypercube (refer to Chapter 6, p. 170).

In the case of a multiprocessor, too much contention for the same memory bank is called a hot spot.

Row-Column Oriented Algorithm

Design strategy: eliminate contention for shared resources by changing the temporal order of data accesses.

New solution for a multicomputer:

Change the order in which the algorithm computes the elements of each row of C.

Processes are organized as a ring.

After each process has used its current column of B, it fetches the next column of B from its successor on the ring.

Row-Column Oriented Algorithm

We embed a ring in a hypercube with dilation 1 using Gray codes.

Each message can be sent in time $\Theta(1)$.

[Figure: a ring of nodes 0 to 7 embedded in a three-dimensional hypercube]
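One common way to obtain such an embedding is the binary reflected Gray code. The sketch below (function names are illustrative) lists the hypercube node labels in ring order and checks that ring neighbors, including the wraparound pair, differ in exactly one bit. Each ring link therefore maps to a single hypercube link. The slide's figure may order the nodes differently.

```python
def gray_code_ring(d):
    """Return the 2**d hypercube node labels in reflected Gray code order."""
    return [i ^ (i >> 1) for i in range(2 ** d)]


def is_dilation_one(ring):
    """Check that ring neighbors (including last to first) differ in one bit."""
    size = len(ring)
    for pos in range(size):
        diff = ring[pos] ^ ring[(pos + 1) % size]
        if bin(diff).count("1") != 1:
            return False
    return True


if __name__ == "__main__":
    ring = gray_code_ring(3)
    print(ring)                   # prints [0, 1, 3, 2, 6, 7, 5, 4]
    print(is_dilation_one(ring))  # prints True
```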

Row-Column Oriented Algorithm

Example: Use 4 processes to multiply two matrices $A_{4 \times 4}$ and $B_{4 \times 4}$.

[Figures: four successive slides showing processes P1, P2, P3, and P4]

Row-Column Oriented Algorithm

Generalizing the algorithm:

Multiplying l x m and m x n matrices on p processors, where p < l and p < n.

Assume l and n are integer multiples of p.

Every processor begins with l/p rows of A and n/p columns of B and multiplies the (l/p) x m submatrix of A with the m x (n/p) submatrix of B, producing an (l/p) x (n/p) submatrix of the product matrix.

Then every processor passes its piece of B to its successor processor.

After p iterations, each processor has multiplied its piece of A with every piece of B, building an (l/p) x n section of the product matrix.

The total number of computation steps: $(l/p) \cdot m \cdot (n/p) \cdot p = \Theta(lmn/p)$.
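The generalized algorithm can be simulated sequentially to see that every process ends up with its full (l/p) x n section of the product. In the sketch below the ring is modeled by a list `held` that records which column block of B each process currently owns. No real message passing takes place, and the function name is hypothetical.

```python
def ring_row_column_matmul(a, b, p):
    """Simulate p ring processes multiplying an l x m by an m x n matrix."""
    l, m, n = len(a), len(b), len(b[0])
    assert l % p == 0 and n % p == 0, "sketch assumes p divides l and n"
    rows, cols = l // p, n // p
    c = [[0] * n for _ in range(l)]
    # held[q] is the index of the column block of B currently at process q.
    held = list(range(p))
    for _ in range(p):
        for q in range(p):
            col0 = held[q] * cols
            # Process q multiplies its rows of A by the columns of B it holds.
            for i in range(q * rows, (q + 1) * rows):
                for j in range(col0, col0 + cols):
                    c[i][j] = sum(a[i][k] * b[k][j] for k in range(m))
        # Every process passes its piece of B to its successor on the ring,
        # so process q now holds what process q - 1 held.
        held = [held[(q - 1) % p] for q in range(p)]
    return c
```

Because every process works on a different piece of B in each iteration, no two processes need the same columns at the same time.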

Row-Column Oriented Algorithm

Total communication time:

The standard assumption: sending and receiving a message takes the message latency plus the message transmission time multiplied by the number of values sent.

$\lambda$: message latency

$\beta$: message transmission time

Every iteration has communication time: $2(\lambda + m(n/p)\beta)$

Over p iterations, the total communication time is: $2p(\lambda + m(n/p)\beta) = 2(p\lambda + mn\beta)$

Algorithms for Multicomputers: Block-Oriented Algorithm

We want to maximize the number of multiplications performed per iteration.

Multiplying an l x m matrix A by an m x n matrix B (l, m, and n are integer multiples of $\sqrt{p}$, where p is an even power of 2).

Processors are organized as a two-dimensional mesh with wraparound connections.

Give each processor an $(l/\sqrt{p}) \times (m/\sqrt{p})$ subsection of A and an $(m/\sqrt{p}) \times (n/\sqrt{p})$ subsection of B.

Block-Oriented Algorithm

The new matrix multiplication algorithm is a corollary of two results shown earlier:

Block matrix multiplication is performed analogously to scalar matrix multiplication: each occurrence of scalar multiplication is replaced by an occurrence of matrix multiplication.

The algorithm previously used on a two-dimensional mesh of processors relies on a staggering technique. The same staggering technique is used to position the blocks of A and B so that every processor multiplies two submatrices every iteration.

Block-Oriented Algorithm

Phase 1: Staggering the block submatrices of matrix A is done in both directions: left and right.

Phase 1: Staggering the block submatrices of matrix B is done in both directions: up and down.

Block-Oriented Algorithm

From the point of view of the processor that computes $C_{1,2}$:

$$C_{1,2} = A_{1,3} B_{3,2} + A_{1,0} B_{0,2} + A_{1,1} B_{1,2} + A_{1,2} B_{2,2}$$

[Figure: panels (1) to (4)]

Block-Oriented Algorithm

There are $\sqrt{p}$ iterations in which every processor sends and receives a portion of matrices A and B.

Number of computation steps: $\sqrt{p} \cdot (l/\sqrt{p})(m/\sqrt{p})(n/\sqrt{p}) = \Theta(lmn/p)$

The staggering and unstaggering phases take $\sqrt{p}/2$ steps instead of the $\sqrt{p} - 1$ steps in Gentleman's algorithm.

How? With wraparound connections, the blocks can be shifted in both directions.

Total communication steps for transferring an A block or a B block: [formula not recoverable from the extracted text]
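The staggering and shifting pattern can be simulated sequentially. The sketch below models a q x q wraparound mesh (so p = q * q) as nested lists of blocks. It staggers the blocks of A and B once, then performs q multiply-and-shift iterations. This scheme is commonly known as Cannon's algorithm. The helper names are illustrative, and the shifts are plain list re-indexing rather than real messages.

```python
def matmul(a, b):
    """Ordinary matrix product, used for the block products."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def add(a, b):
    """Element-wise sum of two equally sized matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def block(mat, bi, bj, rows, cols):
    """Return the rows x cols block at block position (bi, bj)."""
    return [r[bj * cols:(bj + 1) * cols]
            for r in mat[bi * rows:(bi + 1) * rows]]


def mesh_block_matmul(a, b, q):
    """Simulate a q x q wraparound mesh multiplying A (l x m) by B (m x n)."""
    l, m, n = len(a), len(b), len(b[0])
    assert l % q == 0 and m % q == 0 and n % q == 0
    bl, bm, bn = l // q, m // q, n // q
    # Staggering: block row i of A shifts left by i positions,
    # block column j of B shifts up by j positions.
    pa = [[block(a, i, (j + i) % q, bl, bm) for j in range(q)] for i in range(q)]
    pb = [[block(b, (i + j) % q, j, bm, bn) for j in range(q)] for i in range(q)]
    pc = [[[[0] * bn for _ in range(bl)] for _ in range(q)] for _ in range(q)]
    for _ in range(q):
        for i in range(q):
            for j in range(q):
                pc[i][j] = add(pc[i][j], matmul(pa[i][j], pb[i][j]))
        # Shift A blocks one step left and B blocks one step up, with wraparound.
        pa = [[pa[i][(j + 1) % q] for j in range(q)] for i in range(q)]
        pb = [[pb[(i + 1) % q][j] for j in range(q)] for i in range(q)]
    # Assemble the blocks of C into one matrix.
    c = []
    for i in range(q):
        for r in range(bl):
            row = []
            for j in range(q):
                row.extend(pc[i][j][r])
            c.append(row)
    return c
```

With q = 4 and 0-based block indices, the processor at position (1, 2) multiplies A(1,3) by B(3,2) first, then A(1,0) by B(0,2), A(1,1) by B(1,2), and A(1,2) by B(2,2).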

Block-Oriented Algorithm

Total communication time:

The standard assumption: sending and receiving a message takes the message latency plus the message transmission time multiplied by the number of values sent.

$\lambda$: message latency

$\beta$: message transmission time

The Two Multicomputer Algorithms

Both the block-oriented algorithm and the row-column algorithm have the same number of computation steps: $\Theta(lmn/p)$.

When does the second algorithm require less communication time?

Assume that we are multiplying two n x n matrices, where n is an integer multiple of p.

The Two Multicomputer Algorithms

Thus the block-oriented algorithm is uniformly superior to the row-column algorithm when the number of processors is an even power of 2 greater than or equal to 16.
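The threshold of 16 processors can be explored with a small cost model. The row-column function below follows the per-iteration cost given earlier: latency plus m(n/p) values times the transmission time, doubled, over p iterations, with m = n. The block-oriented formula did not survive in the slides, so `block_comm_time` is an assumption: it charges each of the sqrt(p) iterations for one block of A and one block of B, each of n^2/p values, and ignores the staggering phase. The parameter names `lam` and `beta` stand for the message latency and the per-value transmission time.

```python
import math


def row_column_comm_time(n, p, lam, beta):
    """p iterations, each costing 2 * (lam + n * (n / p) * beta)."""
    return p * 2 * (lam + n * (n / p) * beta)


def block_comm_time(n, p, lam, beta):
    """ASSUMED model: sqrt(p) iterations, each moving one A and one B block."""
    q = math.isqrt(p)
    assert q * q == p, "p must be a perfect square"
    values_per_block = (n / q) ** 2
    per_block = 2 * (lam + values_per_block * beta)
    return q * 2 * per_block


if __name__ == "__main__":
    for p in (4, 16, 64):
        rc = row_column_comm_time(64, p, 1.0, 1.0)
        bl = block_comm_time(64, p, 1.0, 1.0)
        print(p, rc, bl, bl < rc)
```

Under these assumptions the two costs are equal at p = 4, and the block-oriented cost is lower in both the latency term and the transmission term from p = 16 upward.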

Questions?

