55205-mt - Pattern Recognition & Image Processing

Uploaded by SRINIVASA RAO GANTA

Copyright

© Attribution Non-Commercial (BY-NC)

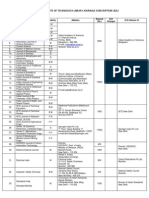

Code No: 55205/MT

NR

M.Tech. – II Semester Regular Examinations, September, 2008

PATTERN RECOGNITION & IMAGE PROCESSING

(Common to Computer Science & Engineering/ Computer Science)

Time: 3 hours Max. Marks: 60

Answer any FIVE questions

All questions carry equal marks

---

1.a) What is the Bayes error probability? Give an example for the two-class case.

b) What is a mixed probability density function?

2. Consider a two-class classification problem with the following Gaussian class-conditional densities:

$P(x \mid \omega_1) \sim N(0, 1)$

$P(x \mid \omega_2) \sim N(1, 2)$

Assume $P(\omega_1) = P(\omega_2)$ and a 0-1 loss function.

(a) Sketch the two densities on the same plot.

(b) Suppose the following training sets $D_1$ and $D_2$ are available from classes $\omega_1$ and $\omega_2$ respectively, to estimate $\mu_1, \mu_2, \sigma_1^2, \sigma_2^2$. Find the maximum likelihood estimates $\hat{\mu}_1, \hat{\mu}_2, \hat{\sigma}_1^2$ and $\hat{\sigma}_2^2$.

$D_1 = \{0.67, 1.19, -1.20, -0.02, -0.16\}$

$D_2 = \{1.00, 0.55, 2.55, -1.65, 1.61\}$
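As an illustration of part (b), the following is a minimal Python sketch of the maximum likelihood estimates for a univariate Gaussian, namely the sample mean and the variance with divisor n rather than n - 1. The helper name `gaussian_mle` is hypothetical, and the sketch assumes the stray period in the first training set is a misprinted comma, so that set is read as five values.

```python
from statistics import fmean


def gaussian_mle(samples):
    """Return (mean, variance) maximum likelihood estimates for a 1-D Gaussian.

    The ML variance divides by n, not n - 1, so it is the biased estimator.
    """
    n = len(samples)
    mu = fmean(samples)
    var = sum((x - mu) ** 2 for x in samples) / n
    return mu, var


# Training sets from the question; the first set is read as five values
# (assumption: the period after -1.20 is a misprinted comma).
D1 = [0.67, 1.19, -1.20, -0.02, -0.16]
D2 = [1.00, 0.55, 2.55, -1.65, 1.61]

mu1, var1 = gaussian_mle(D1)
mu2, var2 = gaussian_mle(D2)

print(f"class 1: mean = {mu1:.3f}, variance = {var1:.3f}")
print(f"class 2: mean = {mu2:.3f}, variance = {var2:.3f}")
```

Under that reading, the estimates come out near the stated parameters of N(0, 1) and N(1, 2), which is a useful sanity check on a hand calculation.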

3. Explain the application of hidden Markov models for isolated speech unit recognition. Give illustrative examples.

4. Write short notes on the following:

(a) Image transforms (b) Contrast stretching (c) Binary images.

5. Consider a two-class classification problem and assume that the classes are equally probable. Let the features $X = (x_1, x_2, \ldots, x_d)$ be binary valued (1 or 0). Let $P_{ij}$ denote the probability that feature $x_i$ takes the value 1 given class $j$. Let the features be conditionally independent for both classes. Finally, assume that $d$ is odd and that $P_{i1} = P > 1/2$ and $P_{i2} = 1 - P$, for all $i$. Show that the optimal Bayes decision rule becomes: decide class one if $x_1 + x_2 + \cdots + x_d > d/2$, and class two otherwise.
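One possible outline of the argument for question 5 is sketched below; it is not an official solution. Because the priors are equal, the Bayes rule compares the class-conditional likelihoods directly. The notation $\Pr(X \mid \omega_j)$ for the likelihood under class $j$ is an assumption introduced here to keep it distinct from the parameter $P$.

```latex
\begin{align*}
\Pr(X \mid \omega_1) &= \prod_{i=1}^{d} P^{x_i} (1-P)^{1-x_i}, \qquad
\Pr(X \mid \omega_2) = \prod_{i=1}^{d} (1-P)^{x_i} P^{1-x_i} \\
\ln \frac{\Pr(X \mid \omega_1)}{\Pr(X \mid \omega_2)}
  &= \sum_{i=1}^{d} \left[ x_i \ln\frac{P}{1-P} + (1-x_i) \ln\frac{1-P}{P} \right] \\
  &= \ln\frac{P}{1-P} \sum_{i=1}^{d} (2x_i - 1)
   = \ln\frac{P}{1-P} \left( 2 \sum_{i=1}^{d} x_i - d \right)
\end{align*}
```

Since $P > 1/2$, the factor $\ln\frac{P}{1-P}$ is positive, so the log-likelihood ratio is positive exactly when $\sum_i x_i > d/2$. Because $d$ is odd, the sum can never equal $d/2$, so no tie arises.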

6.a) Explain the principle of the Laplacian operator for edge detection.

b) Describe the edge relaxation technique.
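As a small illustration of the operator named in part (a), the sketch below applies the common 4-neighbour discrete Laplacian to a toy image with a vertical step edge. The function name `laplacian` and the toy image are assumptions chosen for illustration. Border pixels are simply left at zero, which is one of several possible conventions.

```python
def laplacian(image):
    """Apply the 4-neighbour discrete Laplacian to the interior of a 2-D list.

    Each interior output pixel is (up + down + left + right) - 4 * centre.
    Border pixels are left at 0 for simplicity.
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            out[r][c] = (image[r - 1][c] + image[r + 1][c]
                         + image[r][c - 1] + image[r][c + 1]
                         - 4 * image[r][c])
    return out


# Toy image: dark region (0) on the left, bright region (10) on the right.
image = [[0, 0, 0, 10, 10, 10] for _ in range(5)]

response = laplacian(image)
print(response[2])
```

In the middle row the response is zero in flat regions and changes sign across the step; that zero crossing is what a Laplacian-based detector uses to locate the edge.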

7. Write short notes on the following:

(a) Similarity measures (b) Loss functions (c) Feature selection

8.a) Give a definition and mathematical description of a first-order Markov model.

b) Briefly explain the basic problems of hidden Markov models.

&_&_&_&
