BIOMIMETIC ROBOTICS

This book is written as an initial course in robotics. It is ideal for the study of unmanned aerial or underwater vehicles, a topic on which few books exist. It presents the fundamentals of robotics, from an aerospace perspective, by considering only the field of robot mechanisms. For an aerospace engineer, three-dimensional and parallel mechanisms (flight simulation, unmanned aerial vehicles, and space robotics) take on an added significance. Biomimetic robot mechanisms are fundamental to manipulators and walking, mobile, and flying robots. As a distinguishing feature, this book gives a unified and integrated treatment of biomimetic robot mechanisms. It is ideal preparation for the next robotics module: practical robot-control design. Although the book focuses on principles, computational procedures are also given due importance. Students are encouraged to use computational tools to solve the examples in the exercises. The author has also included some additional topics beyond his course coverage for the enthusiastic reader to explore.

Ranjan Vepa has been with Queen Mary and Westfield College in the Department of Engineering since 1984, after receiving his Ph.D. from Stanford University. Dr. Vepa's research covers, in addition to biomimetic robotics, aspects of the design, analysis, simulation, and implementation of avionics and avionics-related systems, including nonlinear aerospace vehicle kinematics, dynamics, vibration, control, and filtering. He has published numerous journal and conference papers and has contributed to a textbook titled Application of Artificial Intelligence in Process Control. He is active in learning-based design education and the utilization of modern technology in engineering education. Dr. Vepa is a Chartered Engineer, a Member of the Royal Aeronautical Society (MRAeS) London, and a Fellow of the Higher Education Academy.

Biomimetic Robotics

mechanisms and control

Ranjan Vepa

Queen Mary, University of London

CAMBRIDGE UNIVERSITY PRESS

Cambridge, New York, Melbourne, Madrid, Cape Town, Singapore, São Paulo

Cambridge University Press

The Edinburgh Building, Cambridge CB2 8RU, UK

First published in print format

ISBN-13 978-0-521-89594-1

ISBN-13 978-0-511-50691-8

© Ranjan Vepa 2009

2009

Information on this title: www.cambridge.org/9780521895941

This publication is in copyright. Subject to statutory exception and to the

provision of relevant collective licensing agreements, no reproduction of any part

may take place without the written permission of Cambridge University Press.

Cambridge University Press has no responsibility for the persistence or accuracy

of urls for external or third-party internet websites referred to in this publication,

and does not guarantee that any content on such websites is, or will remain,

accurate or appropriate.

Published in the United States of America by Cambridge University Press, New York

www.cambridge.org

eBook (EBL)

hardback

To my father, Narasimha Row, and mother, Annapurna

Contents

Preface
Acronyms

1. The Robot
1.1 Robotics: An Introduction
1.2 Robot-Manipulator Fundamentals and Components
1.3 From Kinematic Pairs to the Kinematics of Mechanisms
1.4 Novel Mechanisms
1.4.1 Rack-and-Pinion Mechanism
1.4.2 Pawl-and-Ratchet Mechanism
1.4.3 Pantograph
1.4.4 Quick-Return Mechanisms
1.4.5 Ackermann Steering Gear
1.4.6 Sun and Planet Epicyclic Gear Train
1.4.7 Universal Joints
1.5 Spatial Mechanisms and Manipulators
1.6 Meet Professor da Vinci the Surgeon, PUMA, and SCARA
1.7 Back to the Future
Exercises

2. Biomimetic Mechanisms
2.1 Introduction
2.2 Principles of Legged Locomotion
2.2.1 Inchworm Locomotion
2.2.2 Walking Machines
2.2.3 Autonomous Footstep Planning
2.3 Imitating Animals
2.3.1 Principles of Bird Flight
2.3.2 Mechanisms Based on Bird Flight
2.3.3 Swimming Like a Fish
2.4 Biomimetic Sensors and Actuators
2.4.1 Action Potentials
2.4.2 Measurement and Control of Cellular Action Potentials
2.4.3 Bionic Limbs: Interfacing Artificial Limbs to Living Cells
2.4.4 Artificial Muscles: Flexible Muscular Motors
2.4.5 Prosthetic Control of Artificial Muscles
2.5 Applications in Computer-Aided Surgery and Manufacture
2.5.1 Steady Hands: Active Tremor Compensation
2.5.2 Design of Scalable Robotic Surgical Devices
2.5.3 Robotic Needle Placement and Two-Hand Suturing
Exercises

3. Homogeneous Transformations and Screw Motions
3.1 General Rigid Motions in Two Dimensions
3.1.1 Instantaneous Centers of Rotation
3.2 Rigid Body Motions in Three Dimensions: Definition of Pose
3.2.1 Homogeneous Coordinates: Transformations of Position and Orientation
3.3 General Motions of Rigid Frames in Three Dimensions: Frames with Pose
3.3.1 The Denavit-Hartenberg Decomposition
3.3.2 Instantaneous Axis of Screw Motion
3.3.3 A Screw from a Twist
Exercises

4. Direct Kinematics of Serial Robot Manipulators
4.1 Definition of Direct or Forward Kinematics
4.2 The Denavit-Hartenberg Convention
4.3 Planar Anthropomorphic Manipulators
4.4 Planar Nonanthropomorphic Manipulators
4.5 Kinematics of Wrists
4.6 Direct Kinematics of Two Industrial Manipulators
Exercises

5. Manipulators with Multiple Postures and Compositions
5.1 Inverse Kinematics of Robot Manipulators
5.1.1 The Nature of Inverse Kinematics: Postures
5.1.2 Some Practical Examples
5.2 Parallel Manipulators: Compositions
5.2.1 Parallel Spatial Manipulators: The Stewart Platform
5.3 Workspace of a Manipulator
Exercises

6. Grasping: Mechanics and Constraints
6.1 Forces and Moments
6.2 Definition of a Wrench
6.3 Mechanics of Gripping
6.4 Transformation of Forces and Moments
6.5 Compliance
6.5.1 Passive and Active Compliance
6.5.2 Constraints: Natural and Artificial
6.5.3 Hybrid Control
Exercises

7. Jacobians
7.1 Differential Motion
7.1.1 Velocity Kinematics
7.1.2 Translational Velocities and Acceleration
7.1.3 Angular Velocities
7.2 Definition of a Screw Vector: Instantaneous Screws
7.2.1 Duality with the Wrench
7.2.2 Transformation of a Compliant Body Wrench
7.3 The Jacobian and the Inverse Jacobian
7.3.1 The Mobility Criterion: Overconstrained Mechanisms
7.3.2 Singularities: Physical Interpretation
7.3.3 Manipulability: Putting Redundant Mechanisms to Work
7.3.4 Computing the Inverse Kinematics: The Lyapunov Approach
Exercises

8. Newtonian, Eulerian, and Lagrangian Dynamics
8.1 Newtonian and Eulerian Mechanics
8.1.1 Kinetics of Screw Motion: The Newton-Euler Equations
8.1.2 Moments of Inertia
8.1.3 Dynamics of a Link's Moment of Inertia
8.1.4 Recursive Form of the Newton-Euler Equations
8.2 Lagrangian Dynamics of Manipulators
8.2.1 Forward and Inverse Dynamics
8.3 The Principle of Virtual Work
Exercises

9. Path Planning, Obstacle Avoidance, and Navigation
9.1 Fundamentals of Trajectory Following
9.1.1 Path Planning: Trajectory Generation
9.1.2 Splines, Bézier Curves, and Bernstein Polynomials
9.2 Dynamic Path Planning
9.3 Obstacle Avoidance
9.4 Inertial Measuring and Principles of Position and Orientation Fixing
9.4.1 Gyro-Free Inertial Measuring Units
9.4.2 Error Dynamics of Position and Orientation
Exercises

10. Hamiltonian Systems and Feedback Linearization
10.1 Dynamical Systems of the Liouville Type
10.1.1 Hamilton's Equations of Motion
10.1.2 Passivity of Hamiltonian Dynamics
10.1.3 Hamilton's Principle
10.2 Contact Transformation
10.2.1 Hamilton-Jacobi Theory
10.2.2 Significance of the Hamiltonian Representations
10.3 Canonical Representations of the Dynamics
10.3.1 Lie Algebras
10.3.2 Feedback Linearization
10.3.3 Partial State-Feedback Linearization
10.3.4 Involutive Transformations
10.4 Applications of Feedback Linearization
10.5 Optimal Control of Hamiltonian and Near-Hamiltonian Systems
10.6 Dynamics of Nonholonomic Systems
10.6.1 The Bicycle
Exercises

11. Robot Control
11.1 Introduction
11.1.1 Adaptive and Model-Based Control
11.1.2 Taxonomies of Control Strategies
11.1.3 Human-Centered Control Methods
11.1.4 Robot-Control Tasks
11.1.5 Robot-Control Implementations
11.1.6 Controller Partitioning and Feedforward
11.1.7 Independent Joint Control
11.2 HAL, Do You Understand JAVA?
11.3 Robot Sensing and Perception
Exercises

12. Biomimetic Motive Propulsion
12.1 Introduction
12.2 Dynamics and Balance of Walking Biped Robots
12.2.1 Dynamic Model for Walking
12.2.2 Dynamic Balance during Walking: The Zero-Moment Point
12.2.3 Half-Model for a Quadruped Robot: Dynamics and Control
12.3 Modeling Bird Flight: Robot Manipulators in Free Flight
12.3.1 Dynamics of a Free-Flying Space Robot
12.3.2 Controlling a Free-Flying Space Robot
12.4 Flapping Propulsion of Aerial Vehicles
12.4.1 Unsteady Aerodynamics of an Aerofoil
12.4.2 Generation of Thrust
12.4.3 Controlled Flapping for Flight Vehicles
12.5 Underwater Propulsion and Its Control
Exercises

Answers to Selected Exercises
Appendix: Attitude and Quaternions
Bibliography
Index

Preface

This book is intended for a first course in a robotics sequence. There are indeed a large number of books in the field of robotics, but for the engineer or student interested in unmanned aerial or underwater vehicles there are very few books. From the point of view of an aerospace engineer (flight simulation, unmanned aerial vehicles, and space robotics) three-dimensional and parallel mechanisms take on an added significance.

The fundamentals of robotics, from an aerospace perspective, can be very well presented by considering just the field of robot mechanisms. Biomimetic robot mechanisms are fundamental to manipulators and to walking, mobile, and flying robots. A distinguishing feature of the book is that a unified and integrated treatment of biomimetic robot mechanisms is offered.

This book is intended to prepare a student for the next logical module in a course on robotics: practical robot-control design. Throughout the book, in every chapter, are introduced the most important and recent developments relevant to the subject matter. Although the book's primary focus is on understanding principles, computational procedures are also given due importance as a matter of course. Students are encouraged to use whatever computational tools they consider appropriate to solve the examples in the exercises.

When writing this text, I included the topics in my course and some more for the enthusiastic reader with the initiative for self-learning.

Thus, Chapter 1 gives an overview of robotics, with a focus on generic robotic mechanisms, whereas Chapter 2 is about biomimetic mechanisms. Chapter 3 draws attention to the integrated representation of displacement and rotation. Chapters 4 and 5 are about direct and inverse kinematics. Chapter 6 is about the mechanics of grasping, and Chapter 7 provides a gentle introduction to Jacobians. Dynamics of mechanisms is introduced in Chapter 8, and path planning and trajectory following are discussed in Chapter 9. Chapter 10 is dedicated to the techniques of transforming the dynamics to simpler forms that are useful for such tasks as control synthesis. Chapter 10 also considers the ever-important problem of the dynamics of nonholonomic systems with the archetypal example of the bicycle.

Chapter 11 on robot control and Chapter 12 on biomimetic motive propulsion are unique elements of this book. Most books dive straight into the mathematics of robot-control synthesis. Here the student is introduced to important concepts in the preceding chapters and is thus better prepared. Additionally, the problem of robot control is inherently different from other applications of control engineering in that it is patently nonlinear. Thus, Chapter 11 introduces the strategies for robot control from a broad application perspective, keeping in mind the real-world and nonideal situations faced on a routine basis. The book concludes with a unique and lively chapter on biomimetic approaches to thrust generation in robots and robot vehicles.

All chapters feature exercises designed to reinforce the chapter content and ensure the student has sufficient background to address the subsequent chapters. The appendix provides a basic introduction to attitude representation, dynamics, and control.

Any general text draws heavily on the work of countless engineers and scientists. It is not possible to include references to the whole body of published work in the field. Yet those books and papers that were consulted in the preparation of the manuscript are referenced, along with selected texts and papers that are appropriate for the reader to obtain more detail and breadth.

I would like to thank my colleagues in the Department of Engineering at Queen Mary, for their support in this endeavor. I would also like to express my special thanks to Peter Gordon, Senior Editor, Aeronautical and Mechanical Engineering, Cambridge University Press, New York, for his enthusiastic support for this project.

I would like to thank my wife, Sudha, for her love, understanding, and patience. Her encouragement was a principal factor that provided the motivation to complete the project. Finally, I must add that my interest in the kinematics of mechanisms was inherited from my late father many years ago. It was, in fact, he who brought to my attention the work of Alexander B. W. Kennedy and of Denavit and Hartenberg, as well as countless other related aspects, a long time ago. To him I owe my deepest debt of gratitude. This book is dedicated to his memory.

R. Vepa

London, UK, 2008

Acronyms

ATP adenosine triphosphate

BCF body or caudal fin

CNS central nervous system

CP caudate and putamen

CVG Coriolis vibratory gyroscope

DCM direction cosine matrix

DH Denavit-Hartenberg

DOF degree of freedom

DTG dynamically tuned gyroscope

DVT deep vein thrombosis

ECG electrocardiogram

EEG electroencephalogram

EMF electromotive force

EMG electromyogram

ENG electroneurogram

FET field-effect transistor

FOG fiber-optic gyroscope

GOD glucose oxidase

GPpe globus pallidus pars externa

IMU inertial measuring unit

INS inertial navigation system

I/O input/output

IRS inertial reference system

ISFET ion-selective field-effect transistor

IUPAC International Union of Pure and Applied Chemistry

LASER light amplification by stimulated emission of radiation

LED light-emitting diode

MPF median or paired fin

MRAC model reference adaptive control

MRI magnetic resonance image

OS operating system

OSI open system interconnection

PD proportional-derivative

PI proportional-integral

PUMA Programmable Universal Machine for Assembly

PVDF poly-vinylidene fluoride trifluoroethylene

QCM quartz crystal microbalance

RF radio frequency

RLG ring-laser gyroscope

ROV remotely operated vehicle

RTOS real-time operating system

SCARA Selectively Compliant Assembly Robot Arm

SMA shape memory alloy

SNpc substantia nigra pars compacta

STN subthalamic nucleus

TCP tool center point

UAV unmanned aerial vehicle

UT Universal Time

VDT virtual device table

VOR vestibulo-ocular reflex

1

The Robot

1.1 Robotics: An Introduction

The concept of a robot as we know it today evolved over many years. In fact, its origins could be traced to ancient Greece well before the time when Archimedes invented the screw pump. Leonardo da Vinci (1452-1519) made far-reaching contributions to the field of robotics with his pioneering research into the brain that led him to make discoveries in neuroanatomy and neurophysiology. He provided physical explanations of how the brain processes visual and other sensory inputs and invented a number of ingenious machines. His flying devices, although not practicable, embodied sound principles of aerodynamics, and a toy built to bring to fruition Leonardo's drawing inspired the Wright brothers in building their own flying machine, which was successfully flown in 1903. The word robot itself seems to have first appeared in 1921 in Karel Čapek's play, Rossum's Universal Robots, and originated from the Slavic languages. In many of these languages the word robot is quite common as it stands for worker. It is derived from the Czech word robota, which implies drudgery. Indeed, robots were conceived as machines capable of repetitive tasks requiring a lower intelligence than that of humans. Yet today robots are thought to be capable of possessing intelligence, and the term is probably inappropriate. Nevertheless it is in use. The term robotics was probably first coined in science fiction work published around the 1950s by Isaac Asimov, who also enunciated his three laws of robotics. It was from Asimov's work that the concept of emulating humans emerged. A typical robot arm based on the anatomy of humans is illustrated in Figure 1.1.

The evolution of a robot has a long and fascinating history, and this is traced in Table 1.1. Apart from the down-to-earth inventors, a number of high-flying thinkers contributed to its development by imagining the unimaginable. Although there were many such philosophers, some of the recent thinkers who contributed to the evolution of robotics are identified in Table 1.2. In fact, the late Wernher von Braun, the legendary head of the U.S. space agency, NASA, used Arthur C. Clarke's book, Exploration of Space (1951), to persuade President John F. Kennedy to commit the United States to going to the Moon. According to Arthur C. Clarke, Cyrano de Bergerac must be credited both for first employing the rocket in space travel and for inventing the ramjet in the 1650s. These inventions, however, became a reality 300 years later.

Figure 1.1. A typical robot arm. Labeled parts: body or capstan (can rotate about a vertical axis); shoulder (can rotate about a horizontal axis); upper arm; elbow (can rotate about a horizontal axis); lower arm; wrist and hand (gripper assembly).

What then is a robot? An industrial robot in its simplest form is a programmable multifunctional manipulator designed to move materials, parts, tools, or specialized devices through a sequence of programmed motions to perform a variety of tasks. Some of the earliest developments in the field of robotics were concerned with carrying out tasks in environments that were either patently dangerous for humans to enter or simply inaccessible. A classic example is the handling of nuclear fuel rods in the environment of a nuclear reactor. Another, the American program to land on the Moon, was supported in no small measure by developments in robotics, particularly the Apollo Lunar Module. It was a remarkable vehicle that allowed the astronauts to descend softly onto the lunar surface, carry out a number of experiments on it, and return to the command and service modules in lunar orbit. Examples of robots today include the Space Shuttle's satellite manipulator, the Martian rover, unmanned aerial vehicles (UAVs), and autonomously operated underwater vehicles for ocean exploration. A broad taxonomy of robots is introduced in Figure 1.2. Although a true robot would be an intelligent, autonomous mobile entity, capable of interacting with its environment in a meaningful, adaptive, and intelligent manner, no such entities exist at the present time.

An intelligent autonomous vehicle does indeed possess a number of capabilities not found in a common machine, however ingenious it may be. Yet such intelligent machines are only evolving at the present time. Considering the impressive range of human achievements in the last century, it is fair to say that the development of these machines may be effected in the not-so-distant future. For our part, the study of industrial robotics is best initiated by considering the simplest of these creatures: the robot manipulator. The manipulator is indeed the basic element in a walking robot, which in turn could be considered to be a primary component of an intelligent autonomous robot.

Table 1.1. Evolution of robots [remotely operated vehicles (ROVs), unmanned aerial vehicles (UAVs)]: The chronology

Year        Invention                      Inventor

The pre-1900 period
200 b.c.e.  Screw pump                     Archimedes
1440 c.e.   Printing press                 Johannes Gutenberg
1589        Knitting machine               William Lee
1636        Micrometer                     William Gascoigne
1642        Calculating machine            Blaise Pascal
1725        Stereotyping                   William Ged
1733        The flying shuttle             John Kay
1765        Steam engine                   James Watt
1783        Parachute                      Louis Lenormand
1783        Hot air balloon                Montgolfier Brothers
1785        Power loom                     Edmund Cartwright
1793        Cotton gin                     Eli Whitney
1800        Lathe                          Henry Maudslay
1804        Steam locomotive               Richard Trevithick
1816        Bicycle                        Karl von Sauerbronn
1823        Digital calculating machine    Charles Babbage
1831        Dynamo                         Michael Faraday
1834        Reaping machine                Cyrus McCormick
1846        Sewing machine                 Elias Howe
1852        Gyroscope                      Leon Foucault
1852        Passenger lift                 Elisha Otis
1858        Washing machine                William Hamilton
1859        Internal combustion engine     Jean-Joseph-Etienne Lenoir
1867        Typewriter                     Christopher Scholes
1868        Motorized bicycle              Michaux Brothers
1876        Carpet sweeper                 Melville Bissell
1885        Motorcycle                     Edward Butler
1885        Motor car engine               Gottlieb Daimler/Karl Benz
1886        Electric fan                   Schuyler Wheeler
1892        Diesel engine                  Rudolf Diesel
1895        Wireless                       Guglielmo Marconi
1898        Submarine                      John P. Holland

The post-1900 period
1901        Vacuum cleaner                 Hubert Booth
1903        Airplane                       Wright Brothers
1911        Combine harvester              Benjamin Holt
1926        Rocket                         Robert H. Goddard
1929        Electronic television          Vladimir Zworykin
1944        Automatic digital computer     Howard Aiken
1949        Transistor                     Team of American engineers
1955        Hovercraft                     Christopher Cockerell
1957        Satellite                      Team of Russian engineers
1959        Microchip                      Team of American engineers
1962        Industrial robot manipulator   George Devol, Unimation
1960s       Automated assembly line        Team of American engineers
1971        Microprocessor                 Team of American engineers
1981        Space Shuttle                  Team of American engineers

Table 1.2. Dreamers, thinkers (eccentrics) who imagined the unimaginable

Year   Thinker/writer                     Contribution
1510   Leonardo da Vinci                  Conceptual painting of a helicopter
1648   Cyrano de Bergerac                 Histoire comique des Estats et Empires de la Lune (book)
1831   Mary Wollstonecraft-Shelley        Frankenstein or, The Modern Prometheus (book)
1864   Jules Verne                        From Earth to the Moon, Journey to the Centre of the Earth, Twenty Thousand Leagues Under the Sea (books)
1901   H. G. Wells                        The Time Machine, The First Men on the Moon (books)
1921   Karel Čapek                        Rossum's Universal Robots (play)
1930   Edgar Rice Burroughs               The Martian Tales (a series of 11 books published between 1911 and 1930)
1945   Arthur C. Clarke                   Concept of geostationary communication satellites
1948   Norbert Wiener                     Cybernetics or Control and Communication in the Animal (book)
1950   Isaac Asimov                       I, Robot (book)
1954   Jules Verne                        Twenty Thousand Leagues Under the Sea (Disney film)
1955   Walt Elias Disney                  Robots for entertainment in the Disneyland theme park
1968   Arthur C. Clarke, Stanley Kubrick  2001: A Space Odyssey (book, film), HAL (computer)
1977   George Lucas                       Star Wars (films), R2D2, C3P0 (robots)

Figure 1.2. Taxonomy of robots. Diagram labels: Machine; Manipulator; Mobile Robot; ROV; Unmanned Autonomous Vehicle; Intelligent Autonomous Vehicle; Community of UAVs. Distinguishing capabilities: Dexterity; Vision & Mobility; Spatial Awareness; Autonomy & Adaptability; Communication & Interaction; Interdependence & Coordination.

Table 1.3. Types of links: nullary, unary, binary, ternary, quaternary, and N-ary.

1.2 Robot-Manipulator Fundamentals and Components

Manipulation involves not only the motion, location, orientation, and geometry of a real physical object but also the procedures for changing these attributes as well as the physical design of a mechanical system that can effectively perform the manipulation functions. A robot manipulator is essentially a kinematic system that is an assemblage of a number of kinematic components. Although these components could in general be flexible, we shall restrict our attention to rigid components. The basic rigid-component elements are referred to as kinematic links or simply links. The basic interconnections between these links are referred to as kinematic joints or simply joints.

The simplest of kinematic arrangements is made up of two links. Thus we may consider two rigid bodies or links, one of which is free to move in three-dimensional space. Six independent parameters are required for completely specifying the motion of the free body in space, relative to the fixed body. The relative motion itself may be uniquely expressed in terms of the relative position of the center of gravity of the body in Cartesian space in the surge (forward and backward), sway (sideward), and heave (up and down) together with the orientation of the body. The orientation of the body is defined by its attitude and specified as a sequence of three successive rotations about the surge, sway, and heave directions in terms of a roll, a pitch, and a yaw angle, respectively. The six independent parameters or degrees of freedom (DOFs), as they are referred to by dynamicists, of the rigid body are the displacements of the center of gravity of the rigid body in the surge, sway, and heave directions and the sequence of roll, pitch, and yaw rotations about these directions.
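The six parameters can be made concrete by packing them into a single 4 x 4 homogeneous transformation, the representation taken up in Chapter 3. The following is a minimal sketch only: the function names are hypothetical, and it assumes that roll, pitch, and yaw are applied in that order about the fixed surge (x), sway (y), and heave (z) axes, so that the rotation matrix is Rz(yaw) Ry(pitch) Rx(roll). Other rotation conventions are equally valid and give different matrices.

```python
import math

def mat_mul(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def rotation_from_rpy(roll, pitch, yaw):
    """Rotation matrix Rz(yaw) * Ry(pitch) * Rx(roll) (assumed convention)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    rx = [[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]]
    ry = [[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]]
    rz = [[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]]
    return mat_mul(rz, mat_mul(ry, rx))

def pose_matrix(surge, sway, heave, roll, pitch, yaw):
    """4x4 homogeneous transform built from the six DOFs of a free rigid body."""
    r = rotation_from_rpy(roll, pitch, yaw)
    t = [surge, sway, heave]
    return [r[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def transform_point(pose, p):
    """Map a point given in the moving body's frame into the fixed frame."""
    x, y, z = p
    return tuple(pose[i][0] * x + pose[i][1] * y + pose[i][2] * z + pose[i][3]
                 for i in range(3))

# A body displaced by (1, 2, 3) and yawed by 90 degrees.
pose = pose_matrix(1.0, 2.0, 3.0, 0.0, 0.0, math.pi / 2)
point_in_fixed_frame = transform_point(pose, (1.0, 0.0, 0.0))
```

Three of the six arguments fix where the body is and three fix how it is oriented; removing any one of them leaves some relative motion that cannot be described.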

We may classify the links on the basis of the number of joints used to interconnect them to each other, as illustrated in Table 1.3. Thus a nullary link is a rigid body that is not connected to any other link, and a unary link is one that is connected to just one other link by a single joint. A binary link is one that is connected to two other links by two distinct and separate joints. In a similar manner we define a ternary link as one that is connected to three other links at three distinct points on it by separate joints and a quaternary link as one that is connected to four other links at four distinct points on it by separate joints. In general, an N-ary link has N independent joints associated with it. Each link could be of arbitrary shape and the joints not only located at arbitrary points but also of arbitrary shape.

A joint itself is then defined as a connection between links that allows certain relative motion between links. At the outset such joints as welds, rigid connections, or bonded joints are excluded. Joints may then be classified, irrespective of their nature, based on the number of links associated with each. A binary joint connects two links together, ternary joints connect three links together, and in general an N-ary joint connects N links together.

In defining an N-ary link we associate N joints with it. It is sometimes more convenient to define an N-ary link as one that is connected to N other links. The two definitions are equivalent when every joint is a binary joint. A kinematic system is then a collection of kinematic links connected together by an associated set of kinematic joints. A typical robot manipulator is such an assemblage or kinematic chain, transmitting a definite motion. When one element of such a kinematic chain is fixed, the kinematic chain is a mechanism. From the point of view of robot manipulators, the objective is to investigate and synthesize kinematic chains to produce mechanisms that can transmit, constrain, or control various relative motions. This is the notion of kinematic structure.
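The classification of links and joints above lends itself to a simple bookkeeping exercise. The sketch below is illustrative only: the data layout (a joint stored as a tuple of the link names it connects) and the function names are assumptions made for this example. It counts the joints on each link to name the link type and counts the links on each joint to give the joint order.

```python
from collections import Counter

LINK_TYPES = {0: "nullary", 1: "unary", 2: "binary", 3: "ternary", 4: "quaternary"}

def classify_links(links, joints):
    """Name each link by the number of joints associated with it."""
    joint_count = Counter()
    for joint in joints:
        for link in set(joint):
            joint_count[link] += 1
    return {link: LINK_TYPES.get(joint_count[link], f"{joint_count[link]}-ary")
            for link in links}

def joint_order(joint):
    """Number of links a joint connects (2 for a binary joint)."""
    return len(set(joint))

# A serial arm: base, upper arm, lower arm, hand joined end to end.
serial_links = ["base", "upper arm", "lower arm", "hand"]
serial_joints = [("base", "upper arm"), ("upper arm", "lower arm"),
                 ("lower arm", "hand")]
serial_types = classify_links(serial_links, serial_joints)

# The four-link closed chain: every link carries exactly two binary joints.
loop_links = ["ground", "crank", "coupler", "follower"]
loop_joints = [("ground", "crank"), ("crank", "coupler"),
               ("coupler", "follower"), ("follower", "ground")]
loop_types = classify_links(loop_links, loop_joints)
```

In the serial arm the two end links come out as unary and the intermediate links as binary, whereas in the closed chain every link is binary. Because all joints in both examples are binary, counting joints per link and counting neighbouring links give the same answer, as noted in the text.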

The kinematic structure of a robot manipulator must satisfy two essential requirements:

1. First, it must have the ability to displace objects in three dimensions, operate within a finite workspace, have a reasonable amount of dexterity in avoiding obstacles, be able to reach into confined spaces and thus be able to arbitrarily alter an object's position and orientation from one to another;

2. Second, once positioned, the manipulator must have the ability to grasp with optimum force arbitrary or custom objects and hold them stationary irrespective of the other forces and moments they are subjected to.

Considering the first of these requirements, the primary design problem reduces to one of kinematic design. In general, to operate in three-dimensional space, a kinematic mechanism must possess at least six DOFs in order to function without any constraints. The DOFs are removed as one applies kinematic constraints to the assemblage.
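How constraints remove DOFs can be illustrated with the standard Kutzbach-Gruebler count, which is not stated in this section and is introduced here only as an assumption for illustration: each moving link contributes 6 DOFs in space (3 in the plane), and a joint that permits f relative freedoms removes 6 - f (or 3 - f) of them. The function name below is hypothetical, and the count is known to fail for overconstrained mechanisms with special geometry.

```python
def mobility(num_links, joint_freedoms, spatial=True):
    """Kutzbach-Gruebler DOF count (a sketch; ignores special geometries).

    num_links      -- number of links, including the fixed link
    joint_freedoms -- relative freedoms permitted by each joint (1 for a revolute)
    spatial        -- True for motion in space, False for planar motion
    """
    dof_per_body = 6 if spatial else 3
    free = dof_per_body * (num_links - 1)          # moving links before constraints
    removed = sum(dof_per_body - f for f in joint_freedoms)
    return free - removed

free_body = mobility(2, [])                        # one body free relative to another
four_bar = mobility(4, [1, 1, 1, 1], spatial=False)
six_joint_arm = mobility(7, [1] * 6)               # serial arm with six revolute joints
```

Under these assumptions the unconstrained body retains all six DOFs, the planar four-link closed chain with revolute joints retains one, and a serial arm needs six single-freedom joints to recover the six DOFs required for unconstrained operation in space.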

To understand the kinematic design problem, the synthesis of a component system in a constrained kinematic mechanism so that it has a finite number of relative DOFs may first be considered. To ensure that the complete sets of feasible designs are finite in number, we need to consider the constraints that must be imposed. First, we consider the number of connected planar systems that can be put together from purely topological considerations. Considering four different links, we may identify two open-loop arrangements, two single-loop arrangements, and two multiloop arrangements, as shown in Table 1.4. Also shown is a graph-theoretic representation of the kinematic structure in the form of an interchange graph in which a link in the physical mechanism is modeled as a node and a physical binary joint as an edge. One of the open-loop arrangements has three branches, and one of the closed-loop arrangements includes a branch in addition to the loop. Only one of these arrangements has all its links connected serially, whereas another has all links connected in a single loop. The former is a classic serial manipulator structure shown in Figure 1.3, which we will consider extensively in later sections. Also shown in the figure is an end-effector in the form of wrist and gripper. Without the wrist the manipulator would at most have the capability to approach a certain object from a single angle. The wrist gives the manipulator the mechanical flexibility to perform real tasks. Yet there are still a number of other limitations as the range of movement of each joint is limited. As a result, the workspace, which is the space of positions and orientations reachable by the robot's end-effector, is still severely limited. But within this limited workspace it is the wrist that gives the end-effector the capability to point toward an arbitrary direction. The most common types of wrists are quite analogous to the human wrist, with three or more spatial (nonplanar) DOFs. The latter single-loop chain is the classic four-link kinematic chain, and when one of its links is fixed, one obtains the well-known four-bar mechanism shown in Figure 1.4. Link OA is the driving link or the crank, and link AB is the coupler link or coupler.

Table 1.4. Four-link kinematic systems and their interchange graphs. Columns: four-link kinematic system; interchange graph.

Figure 1.3. A typical robot serial manipulator including an end-effector (wrist-and-gripper assembly).

The path traced by a point on the coupler link AB is referred to as a coupler curve.
Generally, moving the point to different locations on the coupler would generate
different coupler curves. A basic task is to be able to predict the geometry of the
coupler curve generated by the point on the coupler. Coupler curves can be surprisingly
complex. The corresponding design problem consists of synthesizing a mechanism for
generating a specific coupler curve (for example, the shape of an aerofoil or a ship's
hull). In the generic case, a coupler curve of a four-bar mechanism is an algebraic curve
of degree six. Different shapes of coupler curves that can arise from four-bar and other
mechanisms have been cataloged by several authors. It is interesting to observe that
there exist at least two other four-bar mechanisms that can generate the same coupler
curve as the one generated by a given four-bar mechanism.
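The task of predicting a coupler curve can be sketched numerically. The following is a minimal illustration, not the author's procedure: it assumes the fixed pivots O and O' lie on the x axis a distance d apart, and the function names, the link-length symbols a, b, c, d, and the coupler-point offsets (u, v) are all introduced here for illustration only.

```python
import math

def four_bar_pose(theta, a, b, c, d, u, v, branch=1):
    """Return (A, B, P) for crank angle theta, or None if the linkage cannot close.

    a, b, c, d: lengths of crank OA, coupler AB, follower O'B and fixed link OO'
    (hypothetical symbols). (u, v) locates the coupler point P in a frame
    attached to the coupler, with u measured along AB and v normal to it.
    """
    ax, ay = a * math.cos(theta), a * math.sin(theta)   # crank pin A, with O at the origin
    ex, ey = d - ax, -ay                                # vector from A to the fixed pivot O'
    e = math.hypot(ex, ey)
    if e == 0 or e > b + c or e < abs(b - c):
        return None                                     # circles about A and O' do not meet
    x = (b * b - c * c + e * e) / (2 * e)               # distance from A along AO'
    h = math.sqrt(max(b * b - x * x, 0.0))              # offset normal to AO'
    tx, ty = ex / e, ey / e
    bx = ax + x * tx - branch * h * ty                  # joint B (one of two assembly modes)
    by = ay + x * ty + branch * h * tx
    cx, cy = (bx - ax) / b, (by - ay) / b               # unit vector along the coupler AB
    px = ax + u * cx - v * cy
    py = ay + u * cy + v * cx
    return (ax, ay), (bx, by), (px, py)

def coupler_curve(a, b, c, d, u, v, steps=72):
    """Sample the coupler curve over one crank revolution."""
    poses = (four_bar_pose(2 * math.pi * k / steps, a, b, c, d, u, v)
             for k in range(steps))
    return [pose[2] for pose in poses if pose is not None]
```

Calling `coupler_curve(1.0, 3.0, 2.5, 3.0, 1.5, 1.0)` samples the curve for one assumed crank-rocker geometry. Changing (u, v) moves the tracing point on the coupler and, as the text notes, generally produces a different curve.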

Figure 1.4. A four-bar linkage, with an example of a coupler curve generated by a point
on the coupler (labeled points: O, A, B, O').

Table 1.5. (a) Kinematic chains with L links and J joints (shaded links are ternary
links); (b) kinematic mechanisms derived from the chains in (a). Labels appearing in the
table: Watt (1), Watt (2), Stephenson (1), Stephenson (2), Stephenson (3).

The basic four-link kinematic chain and the chains made more complex by the further
addition of links are illustrated in Table 1.5(a). To transmit motion, a practical
mechanism may be obtained by fixing one of the links in a kinematic chain. Thus from an
n-link chain one may derive as many as n mechanisms. Some of the mechanisms derived may
be identical to each other in a kinematic sense. Such mechanisms are said to be
isomorphic. To find the number of distinct mechanisms that may be derived from a
kinematic chain, it is essential that isomorphic mechanisms be counted just once. The
mechanisms in Table 1.5(b) are obtained by fixing one of the links in the kinematic
chains in Table 1.5(a).

Table 1.6. A seven-link kinematic chain and its velocity graphs (panels (a) to (d)).

Graph-theoretic representations of kinematic mechanisms are used to detect isomorphic
mechanisms. Although these representations are by no means unique, they are useful in the
analysis of the kinematic geometry of a mechanism. One method, for example, is based on
clearly incorporating the effect of fixing a link in the corresponding graph
representation of the mechanisms. Thus one obtains the graphical pattern by substituting
a fixed link in a kinematic chain by a direct pin connection. Such substituted pin
connections are indicated with an additional rectangle overlaid on the pin. The resulting
diagram is known as a velocity graph. Thus the problem of detecting isomorphic mechanisms
is reduced to one of detecting isomorphisms in the graph-theoretic representations.

A graph is characterized by a certain number of vertices or nodes, a certain

number of edges, the adjacency matrix, with entries of ones and zeros, and the dis-

tance matrix with integer entries. The matrices are both square matrices with one

row and one column for each node. A nonzero element of the former, the adjacency

matrix, indicates that the vertices corresponding to the row and column numbers are

connected. It records information about every edge except when there are parallel

edges. An element of the latter, the distance matrix, indicates the minimum number

of edges between the vertices corresponding to the row and column numbers. The

distance matrix may be computed from the adjacency matrix. Thus two graphs may
be considered to be isomorphic, provided the numbers of nodes and edges are equal and the
adjacency matrices are identical after a suitable relabeling of the nodes.
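As a small illustration of these definitions, the sketch below computes a distance matrix from an adjacency matrix by breadth-first search and tests two small graphs for isomorphism by trying every relabeling of the nodes. The function names and the example matrices are assumptions made here; the brute-force search is only practical for the handful of links considered in this chapter and is not a method prescribed by the text.

```python
from collections import deque
from itertools import permutations

def distance_matrix(adj):
    """Minimum number of edges between every pair of nodes (None if unreachable)."""
    n = len(adj)
    dist = [[None] * n for _ in range(n)]
    for s in range(n):
        dist[s][s] = 0
        queue = deque([s])
        while queue:                       # breadth-first search from node s
            i = queue.popleft()
            for j in range(n):
                if adj[i][j] and dist[s][j] is None:
                    dist[s][j] = dist[s][i] + 1
                    queue.append(j)
    return dist

def are_isomorphic(adj_a, adj_b):
    """True if some relabeling of the nodes maps adj_a onto adj_b."""
    n = len(adj_a)
    if n != len(adj_b) or sum(map(sum, adj_a)) != sum(map(sum, adj_b)):
        return False                       # node or edge counts differ
    return any(
        all(adj_a[i][j] == adj_b[p[i]][p[j]] for i in range(n) for j in range(n))
        for p in permutations(range(n))
    )

# Interchange graphs of two four-link systems: links are nodes, binary joints are edges.
single_loop = [[0, 1, 0, 1], [1, 0, 1, 0], [0, 1, 0, 1], [1, 0, 1, 0]]
serial_chain = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
relabeled_loop = [[0, 0, 1, 1], [0, 0, 1, 1], [1, 1, 0, 0], [1, 1, 0, 0]]
```

Here `are_isomorphic(single_loop, relabeled_loop)` holds even though the two matrices differ entry by entry, whereas the serial chain is rejected at once because its edge count differs.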

Considering, for example, the seven-link chain in Table 1.6(a), there are seven possible
theoretical mechanisms one could associate with it. However, considering the velocity
graphs, there are only three possible nonisomorphic velocity graphs. The three
nonisomorphic velocity graphs are also shown in Table 1.6, thus indicating that only
three nonisomorphic mechanisms may be derived from the seven-link kinematic chain in
Table 1.6(a).

Table 1.7. Some of the mechanisms formed when a link is replaced with a slider.

Often one may fix an alternative link in the same kinematic chain and thus obtain a
different nonisomorphic mechanism. This process is referred to as the process of
kinematic inversion. The slider-crank mechanism in Table 1.7 is obtained by the
replacement of one of the links in the four-bar mechanism with a slider. The joint
between the fixed link and the slider is a sliding joint. Alternatively the slider may be
a collar that is pinned to the fixed link and permitted to slide over the coupler. Other
mechanisms obtained by this process of kinematic inversion or by replacing a link with a
slider are shown in Table 1.7.

When a mechanism is designed to have a single relative DOF, its motion must be completely
determined by prescribing a single input variable. For design purposes, it is essential
that all the distinct systems possible and their structures are identified so an optimum
choice may be made. The general solution to the preceding problem results in an infinite
number of possibilities. Yet, when certain restrictions are imposed, the number of
possibilities reduces to a finite set. If one restricts one's attention to planar
kinematic systems with a maximum of eight components or links, so that all components
move in parallel planes, and imposes the following four constraints, the number of
possibilities reduces to only 19 essential distinct systems:

1. all kinematic joints are of the same type (for example a pin joint);

2. the system should be kinematically closed loop so disconnecting any one joint

will not separate the system into two parts;

3. the system should not have a separating component whose removal would

reduce the system to two independent and uncoupled subsystems;

4. no connected subsystem consisting of two or more components should have

zero DOFs (i.e., immobile).

Table 1.8. Number of closed-loop kinematic chains with eight or fewer links

Joints   Binary links   Ternary links   Quaternary links   Chains
4        4              0               0                  1
7        4              2               0                  2
10       4              4               0                  9
10       5              2               1                  5
10       6              0               2                  2

When these conditions, which are not really restrictive from a practical standpoint, are
imposed on a kinematic system, the number of possible systems satisfying all of the
constraints is finite (Table 1.8). A number of properties of these subsystems can be
deduced from their interchange graphs. Moreover, it is possible to synthesize large
spatial kinematic systems by assembling basic modules whose interchange graphs are not
only known but also satisfy certain specific properties.
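The entries of Table 1.8 can be cross-checked with a short calculation. The sketch below is an illustration only: it uses the standard planar mobility count, 3(L - 1) - 2J for L links and J single-DOF joints with one link fixed (commonly called the Gruebler criterion, which this section does not state explicitly), and the fact that each binary joint joins exactly two link ends. The names `TABLE_1_8`, `planar_mobility`, and `check_row` are hypothetical.

```python
# Rows of Table 1.8: (joints, binary links, ternary links, quaternary links, chains)
TABLE_1_8 = [
    (4, 4, 0, 0, 1),
    (7, 4, 2, 0, 2),
    (10, 4, 4, 0, 9),
    (10, 5, 2, 1, 5),
    (10, 6, 0, 2, 2),
]

def planar_mobility(links, joints):
    """Relative DOFs of a planar chain with one link fixed and single-DOF joints."""
    return 3 * (links - 1) - 2 * joints

def check_row(joints, binary, ternary, quaternary):
    """Return (number of links, mobility, whether the joint count is consistent)."""
    links = binary + ternary + quaternary
    joint_ends = 2 * binary + 3 * ternary + 4 * quaternary  # connection points on the links
    return links, planar_mobility(links, joints), joint_ends == 2 * joints

for joints, b, t, q, chains in TABLE_1_8:
    print(joints, check_row(joints, b, t, q), chains)

total_chains = sum(row[4] for row in TABLE_1_8)
```

Every row gives a mobility of one, and the chain counts add up to the 19 distinct systems quoted above.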

1.3 From Kinematic Pairs to the Kinematics of Mechanisms

So far in our consideration of kinematic systems, we have restricted our attention to a
single type of joint, whatever it may be. In practice, however, there are several types
of binary joints of all shapes, sizes, and designs. One of the simplest joints is the
revolute joint, which interconnects the two links in such a way that the free link is
constrained to rotate only about a single axis. Each revolute joint introduces five
constraints on a connecting link and thus permits it one rotational DOF. It is one of
three one-DOF lower kinematic pairs or binary joints, characterized by a surface contact
(identified by the German kinematician Franz Reuleaux in 1876), two of which are
essentially nonrevolute with only a translational DOF. Reuleaux also identified three
other lower kinematic pairs, characterized by surface contact binary joints and
representing basic practical kinematic joints.

Franz Reuleaux, who is regarded as the founder of modern kinematics and as one of the
forerunners of modern design theory of machines, was born in Eschweiler (near Aachen),
Germany, on September 30, 1829. Reuleaux, who taught first at the Swiss Federal
Polytechnic Institute in Zurich and then at the Technische Hochschule at Charlottenburg
near Berlin, wrote a seminal text on theoretical kinematics that was translated into
English in 1876 by another famous kinematician, Professor Alexander B.W. Kennedy of Great
Britain. In the book the notion of a kinematic pair was first defined. When one element
of a mechanism is constrained to move in a definite way by virtue of its connection to
another, then the two form a kinematic pair.

Higher kinematic pairs are characterized by line contact. The ball-and-socket joint, a
spherical pair, is characterized by three rotational DOFs, the planar pair by one
rotational and two translational DOFs, and the cylindrical pair by one translational and
one rotational DOF. The spherical pair introduces three translational constraints on the
motion of the rigid body, thereby implying that only three additional parameters are
required for describing the motion of the body linked to a free body in space by such a
joint. The planar pair also introduces three constraints whereas the cylindrical pair
introduces four constraints. All other lower kinematic pairs introduce five constraints
on the motion of the rigid body so only one additional independent parameter is required
for describing the relative motion of the rigid body linked to another by such a pair.
However, the joints with maximal practical import are the one-DOF binary joints, the
revolute joint with one rotational DOF, and the screw and prismatic joints with one
translational DOF each, which could be actuated by in-parallel rotating (the first two)
and translational motors, respectively. Whereas the revolute permits a rotation only
through an angle and the prismatic permits translation only through a distance, the screw
allows a screw displacement involving a simultaneous coupled translation only through a
distance and rotation through an angle that are related by the pitch of the screw.
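The bookkeeping in the preceding paragraph can be summarized in a few lines. The sketch below is illustrative: it assumes a free rigid body has six DOFs, so a pair permitting f relative DOFs imposes 6 - f constraints, and it assumes the pitch is defined as the axial advance per full turn (other texts define it per radian). The names `LOWER_PAIRS`, `constraints`, and `screw_translation` are introduced here.

```python
import math

# Lower pairs: (rotational freedoms, translational freedoms, independent relative DOFs)
LOWER_PAIRS = {
    "revolute":    (1, 0, 1),
    "prismatic":   (0, 1, 1),
    "screw":       (1, 1, 1),   # rotation and translation are coupled by the pitch
    "cylindrical": (1, 1, 2),
    "spherical":   (3, 0, 3),
    "planar":      (1, 2, 3),
}

def constraints(pair):
    """Number of constraints the pair imposes on a body that is otherwise free in space."""
    return 6 - LOWER_PAIRS[pair][2]

def screw_translation(angle, pitch):
    """Axial travel of a screw pair for a rotation `angle` (radians).

    Assumes `pitch` is the advance per complete revolution.
    """
    return pitch * angle / (2 * math.pi)
```

For example, `constraints("cylindrical")` returns 4 and `constraints("revolute")` returns 5, in agreement with the counts given in the text.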

Inspired by a former professor at Karlsruhe, F. J. Redtenbacher, Franz Reuleaux embarked
on a systematic method to synthesize kinematic mechanisms. To this end, he created in
Berlin a collection of over 800 models of mechanisms based on his book. These were
cataloged and classified by another German engineer, Gustav Voigt. Apart from the lower
and higher kinematic pairs and simple kinematic chains, they include a variety of pump
mechanisms, screw mechanisms, slider-crank mechanisms, escapement mechanisms, cam
mechanisms, chamber and chamber-wheel mechanisms, ratchet mechanisms, planetary gear
trains, jointed couplings, straight-line mechanisms, guide mechanisms, coupling
mechanisms, gyroscopic mechanisms, and a host of other kinematic mechanisms.

An industrial robot manipulator may be modeled as an open-loop articulated chain with
several rigid links connected in series by revolute or prismatic joints, driven by
suitable actuators. Motion of the joints results in the motion of the links that position
and orient the manipulator's end-effector. The kinematics of the mechanism facilitates
the analytical description of the position and orientation of the end-effector with
reference to a fixed reference coordinate system in terms of relations between the joint
coordinates. Two fundamental questions are of interest. First, it is desirable to obtain
the position and orientation of the end-effector in the reference coordinate system in
terms of the joint coordinates. This is known as the direct or forward kinematics of the
manipulator. Second, it is often essential to be able to express the joint coordinates in
terms of the position and orientation of the end-effector in the reference coordinate
system. This is known as inverse kinematics. In most robotic applications it is
relatively easy to find the one, usually the forward kinematics, but it is the other, the
inverse kinematics, that must be known because the task is usually stated in the
reference coordinates and the variables that could be controlled are the joint
coordinates.
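The distinction between the two problems can be made concrete with the simplest possible case. The sketch below assumes a planar arm with two revolute joints and link lengths l1 and l2 (an example chosen here, not one taken from the text); the function names and the `elbow` flag, which selects between the two inverse solutions, are hypothetical.

```python
import math

def forward(q1, q2, l1, l2):
    """Forward kinematics: joint angles -> end-effector position and orientation."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y, q1 + q2

def inverse(x, y, l1, l2, elbow=1):
    """Inverse kinematics: position -> joint angles, or None if out of reach.

    elbow = +1 or -1 picks one of the two solutions that reach the same point.
    """
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1:
        return None                       # target lies outside the workspace
    s2 = elbow * math.sqrt(1 - c2 * c2)
    q2 = math.atan2(s2, c2)
    q1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return q1, q2

x, y, phi = forward(0.3, 0.8, 1.0, 0.7)
q1, q2 = inverse(x, y, 1.0, 0.7, elbow=1)
```

Even in this small case the forward map is a direct evaluation, whereas the inverse map has two solutions inside the workspace and none outside it, which hints at why inverse kinematics is the harder of the two problems.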

1.4 Novel Mechanisms

Although James Watt is credited with the invention of the steam engine, his singular
contribution was not so much the synthesis of a coal-fired steam boiler to drive the
piston in a cylinder by properly operating a set of pressure release valves; rather, it
was the successful application of the slider-crank mechanism to convert the reciprocating
linear motion of the piston to a sustained and continuous rotary motion. It was indeed
one of a novel and practical set of inventions that triggered the industrial revolution
in Europe. Considering Gustav Voigt's collection of kinematic mechanisms, one could
identify a basic set of novel mechanisms that serves as building blocks of other
mechanisms and machines. A sample of these mechanisms is briefly discussed in this
section.

1.4.1 Rack-and-Pinion Mechanism

The rack-and-pinion mechanism illustrated in Figure 1.5 is a common mechanism used to
convert circular motion of the toothed pinion into the linear motion of the rack that is
designed to continuously mate with the pinion.

Figure 1.5. Rack-and-pinion mechanism (labeled parts: rack, pinion).

1.4.2 Pawl-and-Ratchet Mechanism

When rotary motion is not required to be continuous, or when motion is to be permitted in
one direction only while being prevented in the other, the pawl and ratchet in Figure 1.6
is a suitable mechanism. The toothed wheel known as the ratchet may be rotated clockwise
without any difficulty as the finger known as the pawl merely slips over the ratchet.
However, when rotated in the counterclockwise direction, the pawl obstructs the ratchet,
and rotation in this direction is not permitted.

Figure 1.6. Pawl-and-ratchet mechanism (labeled parts: pawl, ratchet).

Figure 1.7. The planograph (labeled points: A, B, C, D, O, Q, P).

1.4.3 Pantograph

The pantograph was used by James Watt in his steam engine to exactly enlarge the

reciprocating motion of the piston in the cylinder. One form of the pantograph, the

planograph, is illustrated in Figure 1.7. The links are pin jointed at A, B, C, and

D. The links AB and DC are parallel to each other, and the links AD and BC are

parallel to each other. The link BA is extended to the fixed pin O. The point Q on

the link AD and the point P on the extension of the link BC both lie on a straight

line that passes through O. It can be shown that under these circumstances the point

P will reproduce the motion of point Q to an enlarged scale; alternatively, point Q

will reproduce the motion of the point P to a reduced scale.
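The scaling property can be checked numerically. The sketch below is an illustration under stated assumptions: the fixed pin O is taken as the origin, `alpha` is the direction of the line through O, A, and B, `beta` is the common direction of the parallel links AD and BC, and P is fixed on the line of BC at a distance (OB/OA) times AQ from B, which is what collinearity of O, Q, and P requires by similar triangles. All symbol and function names are introduced here.

```python
import math

def planograph(alpha, beta, oa, ob, aq):
    """Positions of Q and P for one configuration, with the fixed pin O at the origin.

    alpha: direction of line O-A-B; beta: direction of the parallel links AD and BC.
    oa, ob: distances OA and OB; aq: distance of Q from A along AD.
    """
    k = ob / oa                      # expected magnification OP/OQ
    bp = k * aq                      # fixed location of P on the line of BC
    ux, uy = math.cos(alpha), math.sin(alpha)
    vx, vy = math.cos(beta), math.sin(beta)
    q = (oa * ux + aq * vx, oa * uy + aq * vy)   # Q = A + aq * v
    p = (ob * ux + bp * vx, ob * uy + bp * vy)   # P = B + bp * v
    return q, p, k

# Sweep a few configurations: P should always equal k times Q.
for alpha, beta in [(0.2, 1.1), (0.9, 2.0), (-0.4, 0.7)]:
    q, p, k = planograph(alpha, beta, oa=1.0, ob=2.5, aq=0.6)
    print(q, p, (p[0] / q[0], p[1] / q[1]), k)
```

With OA = 1.0 and OB = 2.5 the point P traces whatever Q traces, enlarged by a factor of 2.5 about O, for every configuration of the linkage.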

1.4.4 Quick-Return Mechanisms

It is often necessary to convert rotary motion into reciprocating motion, and the

slider-crank mechanism, driven by the crank, is commonly used. Moreover, what is

often necessary in practice is for the forward stroke of the reciprocating motion to

be slow and controlled because of the need for the mechanism to do work during

this stroke. On the return stroke no work is done, and therefore it is desirable that it

be completed as quickly as is possible. Thus the reciprocating motion is necessarily

asymmetric.

A suitable mechanism to meet these requirements is the crank and slotted lever
quick-return mechanism. In this mechanism, instead of the connecting rod being driven
directly by the crank pin, it is attached to one end of a slotted link, which in turn is
driven by the crank. In Figure 1.8 the crank pin O rotates at uniform speed about the
center of rotation, C, and as it slides over the link PQ, it causes this link to rock
from side to side about the pivot at P. The connecting rod QR, which is attached to the
rocker PQ at Q, transmits the rocking motion to the slider at R. The forward stroke
begins with OC being perpendicular to PQ, with the slider at O located to the right of C
and rotating counterclockwise. The forward stroke is completed when OC is again
perpendicular to PQ, with the slider at O located to the left of C. During the forward
stroke the slider at O traces the arc of a circle, which in turn subtends an angle
greater than 180° at the center of rotation C. Thus the forward stroke takes a relatively
longer time to complete within one cycle of rotation of the crank pin O, in comparison
with that of the return stroke.

Figure 1.8. The crank and slotted lever quick-return mechanism (labeled points: R, Q, O,
P, C).

Figure 1.9. Whitworth quick-return mechanism (labeled points: R, Q, O, P, C).
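The timing asymmetry of the crank and slotted lever mechanism follows from its geometry alone. The sketch below assumes a crank radius r = OC smaller than the distance d = CP between the crank center and the rocker pivot, so that the slotted link rocks rather than rotates; the two extreme positions then occur where the link is tangent to the crank circle. The function name and argument names are hypothetical.

```python
import math

def quick_return(crank_radius, pivot_distance):
    """Forward and return crank angles (degrees) and their time ratio.

    Assumes uniform crank speed and crank_radius < pivot_distance.
    """
    if not 0 < crank_radius < pivot_distance:
        raise ValueError("the slotted link only rocks when 0 < r < d")
    beta = math.asin(crank_radius / pivot_distance)   # half-angle of the rocker swing
    forward = math.pi + 2 * beta                      # arc on the side away from the pivot
    back = math.pi - 2 * beta                         # arc on the side facing the pivot
    return math.degrees(forward), math.degrees(back), forward / back

print(quick_return(1.0, 2.0))
```

For r/d = 0.5 the forward stroke occupies about 240° of crank rotation and the return stroke about 120°, so the forward stroke takes twice as long as the return.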

Another type of quick-return mechanism is the Whitworth quick-return mechanism, as
illustrated in Figure 1.9. In this mechanism, the crank pin P also slides over a link,
although in this case the link does not merely rock but completes a full rotation about a
center, O, that is different from the center of rotation, C, of the crank. Thus the link
OQ will be slowed down and speeded up alternately as the driving crank exerts first a
large moment and then a small moment about the center O. Thus the quick-return motion is
a direct consequence of the eccentricity between C and O.

1.4.5 Ackermann Steering Gear

The Ackermann steering gear is one of the simplest mechanisms invented for the purposes
of exerting directional control over the motor car; it is illustrated in Figure 1.10.
This steering gear is based on the four-bar mechanism in which the fixed link, AC, and
link KL are unequal but the links AK and LC are equal. In the symmetric state the
quadrilateral AKLC is a trapezium. In this configuration the fixed link, AC, and link KL
are parallel, whereas links AK and LC are inclined at the same angle in magnitude to the
longitudinal axis.

Figure 1.10. The Ackermann steering-gear mechanism (labeled points: A, B, C, D, K, L, P).

Figure 1.11. Sun and planet gear wheels meshing with an internal gear, the annulus
(labeled parts: annulus, Sun, planet, planet carrier).

To steer the car, link CL is turned so the angle it makes to the longitudinal axis
increases while link KL rotates and translates such that the angle that link AK makes to
the longitudinal axis decreases. As a consequence, link AB turns through an angle that is
less than the angle of turn of the link CD. That is, the left front axle turns through a
smaller angle than the right front axle, and consequently the center of rotation is
located at a point to the right of the car and not at infinity. Thus the car is able to
turn smoothly without slipping.
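The requirement that both front wheels turn about a common center can be written as a simple relation between the two steering angles. The sketch below uses the standard condition cot(outer) - cot(inner) = w/L, where w is the distance between the steering pivots (A and C in Figure 1.10) and L is the wheelbase; this relation is not stated in the text, and it describes the ideal that the Ackermann linkage approximates rather than the linkage itself. The names are hypothetical.

```python
import math

def ideal_outer_angle(inner_deg, pivot_spacing, wheelbase):
    """Outer-wheel steering angle (degrees) for slip-free turning.

    Assumes the turning center lies on the extended rear-axle line.
    """
    inner = math.radians(inner_deg)
    cot_outer = 1.0 / math.tan(inner) + pivot_spacing / wheelbase
    return math.degrees(math.atan2(1.0, cot_outer))

inner = 30.0
outer = ideal_outer_angle(inner, pivot_spacing=1.4, wheelbase=2.6)
print(inner, outer)
```

The outer angle is always the smaller of the two, consistent with the observation above that in a right turn the left front axle turns through the smaller angle.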

1.4.6 Sun and Planet Epicyclic Gear Train

Figure 1.11 illustrates a typical epicyclic gear train incorporating the Sun and
planet-type gears. In the figure, the central gear wheel is the Sun, and the gear wheel
meshing with it, which not only rotates about a pin mounted on the carrier link but also
revolves about the central gear wheel in a circular orbit, is the planet. The planet also
meshes with the internal annulus. When the planet carrier is held stationary and not
allowed to rotate, the planet wheel acts as an idler and the gears form a simple gear
train with the Sun wheel rotating the annulus. On the other hand, when the annulus is
held stationary and the Sun wheel is rotated, say, clockwise, the planet wheel is driven
about its axis in a counterclockwise direction while rolling inside the annulus and
carrying the planet carrier in the clockwise direction. Between these two extreme modes
of operation there are an infinite number of other modes in which this epicyclic train
could be operated, depending on the speed of the Sun and the planet carrier. Thus the
concept may be applied to a host of applications in bicycles, aircraft engines,
automobile rear-wheel drives, and a number of machine tools.
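The two extreme modes and everything between them are covered by one relation between the three speeds. The sketch below uses the standard relative-velocity (Willis) relation for a simple Sun, planet, and annulus train, N_s w_s + N_a w_a = (N_s + N_a) w_c, which is not given in the text; tooth numbers and function names are assumptions, and speeds of the same sign denote rotation in the same direction.

```python
def carrier_speed(w_sun, w_annulus, n_sun, n_annulus):
    """Planet-carrier speed from the Sun and annulus speeds and tooth numbers."""
    return (n_sun * w_sun + n_annulus * w_annulus) / (n_sun + n_annulus)

def annulus_speed(w_sun, w_carrier, n_sun, n_annulus):
    """Annulus speed from the Sun and carrier speeds and tooth numbers."""
    return ((n_sun + n_annulus) * w_carrier - n_sun * w_sun) / n_annulus

# Hypothetical train: 20-tooth Sun, 60-tooth annulus, Sun driven at 120 rev/min.
fixed_carrier = annulus_speed(120.0, 0.0, 20, 60)    # simple gear train mode
fixed_annulus = carrier_speed(120.0, 0.0, 20, 60)    # planet rolls inside the annulus
print(fixed_carrier, fixed_annulus)
```

With the carrier held, the annulus turns opposite to the Sun at one third of its speed; with the annulus held, the carrier follows the Sun at one quarter of its speed. Any other combination of Sun and carrier speeds gives an intermediate mode.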

1.4.7 Universal Joints

The universal joint is essentially a binary link comprising two revolute joints, with the
axes of these joints being two skewed, nonintersecting, and nonparallel lines. A
universal joint is used to couple two shafts in different planes. Universal joints have
various forms and are used extensively in robot-manipulator-based equipment. An
elementary universal joint, sometimes called a Hooke's joint (Figure 1.12) after its
inventor Robert Hooke, consists of two U-shaped yokes fastened to the ends of the shafts
to be connected (Figure 1.13). Within these yokes is a cross-shaped part that couples the
yokes together and allows each yoke to bend, or pivot, in relation to the other. With
this arrangement, one shaft can drive the other even when the two shafts are not in
alignment.

Figure 1.12. Illustration of a Hooke's joint.

A variant of the universal joint is a gyroscope-like suspension illustrated in Figure
1.14. This is a two-DOF system in which the twin-gimbaled system takes the form of a
Hooke's joint that couples the wheel to the motor driveshaft. The axis of rotation of the
wheel need not be aligned to the axis of the driveshaft, which could be flexible.

1.5 Spatial Mechanisms and Manipulators

Several kinematicians have analyzed the geometrical arrangements of a number

of in-parallel actuated spatial mechanisms and manipulators recently and estab-

lished the principles of design of such systems. Thus three links linked together by

a Hooke's joint could be supplemented by either two revolute joints, a revolute and

a prismatic joint, or by two prismatic joints, thereby synthesizing a spatial manipu-

lator either with three rotational DOFs and an additional optional translation DOF

or one with two rotational and two translational DOFs. By the late 1960s spatial

manipulators with three rotational DOFs in roll, pitch, and yaw and three transla-

tional DOFs in surge, sway, and heave were used in a range of applications. These

were based on the Stewart platform, which was developed in 1965.

The six-DOF Stewart platform, illustrated in Figures 1.15(a) and 1.15(b), is

also the one parallel kinematic manipulator configuration incorporating multiple

closed loops that has been used in a number of recent motion and suspension sys-

tem designs.

The Stewart platform typically consists of a movable platform connected to a rigid base
through six identically jointed and extensible struts. These struts support the platform
at three locations by six universal joints. They are also attached and supported at three
locations on a fixed rigid base on a ground surface by six additional universal joints.
No two struts are jointed to the platform or to the fixed rigid base at more than one
location. Thus the struts form a kinematic mechanism consisting of a variable
triangulated frame, which controls the position of the platform with six DOFs. Although
there are a number of advantages as all the actuators are essentially linear, the
kinematics and the algorithms for controlling the posture of the platform are essentially
nonlinear.

Figure 1.13. U-shaped yoke and cross pin in a typical transmission system.

Figure 1.14. Gyroscopic gimbal-like suspension system.

Figure 1.15. (a) The Stewart platform and its variants, including the hexapod; (b) a
cable-actuated version of the Stewart platform.

Figure 1.16. (a) A typical cable-mounted and actuated wind-tunnel model; (b) a spatial
manipulator with three revolute and three prismatic actuated joints: Eclipse II.
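The nonlinearity of the Stewart platform kinematics is easy to see in the inverse problem, which asks for the six strut lengths that produce a desired platform posture. The sketch below is a minimal illustration: it assumes a roll-pitch-yaw rotation applied as Rz(yaw) Ry(pitch) Rx(roll), and the anchor-point lists `base_pts` and `plat_pts` are hypothetical inputs rather than any particular platform geometry.

```python
import math

def rotation_matrix(roll, pitch, yaw):
    """Rotation Rz(yaw) * Ry(pitch) * Rx(roll) as a nested list."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def strut_lengths(base_pts, plat_pts, position, roll, pitch, yaw):
    """Length of each strut for a given platform position and orientation.

    base_pts: strut anchor points in the fixed base frame.
    plat_pts: matching anchor points expressed in the moving platform frame.
    """
    rot = rotation_matrix(roll, pitch, yaw)
    lengths = []
    for a, b in zip(base_pts, plat_pts):
        # Platform anchor expressed in the base frame: position + R * b
        world = [position[i] + sum(rot[i][j] * b[j] for j in range(3)) for i in range(3)]
        lengths.append(math.dist(world, a))
    return lengths

hexagon = [(math.cos(k * math.pi / 3), math.sin(k * math.pi / 3), 0.0) for k in range(6)]
print(strut_lengths(hexagon, hexagon, (0.0, 0.0, 1.0), 0.0, 0.0, 0.1))
```

Each length is the norm of a vector that contains sines and cosines of the orientation angles, so although every actuator is linear, the map between posture and strut lengths is not.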

Figure 1.16 illustrates a typical cable-actuated suspension system of an aircraft

model in a wind tunnel and the spatial mechanism being developed for industrial

applications. The latter is the Eclipse II manipulator with six actuated joints, three

of which are double joints (revolute and slider) while the other three are prismatic

joints moving along a fixed, horizontal circular guide ring. The vertical guide ring is

free to rotate about a vertical axis and the platform is mounted on ball joints.

1.6 Meet Professor da Vinci the Surgeon, PUMA, and SCARA

The da Vinci™ manipulator was developed to assist in the operating theater and is
designed to eliminate the tremor in the hands of surgeons. With the state-of-the-art da
Vinci manipulator, the surgeon uses a three-dimensional computer vision system to
manipulate robotic arms. These robotic arms hold special surgical instruments that are
inserted into the abdomen through tiny incisions. The robotic arms can rotate a full
360°, allowing the surgeon to manipulate surgical instruments with greater precision and
flexibility. Furthermore, the robot's pencil-thin arms and delicate grippers may be
inserted through holes as narrow as 8 mm. Two of the arms are used to wield surgical
tools that follow finger and wrist movements made by an operator sitting at a nearby
console. A third arm carrying a miniature camera is also inserted to track the motions of
the surgical tools wielded by the other two.

Figure 1.17. Schematic drawing of the Unimation PUMA 560 manipulator (labeled parts: body
or capstan, which can rotate about a vertical axis; shoulder, which can rotate about a
horizontal axis; upper arm; lower arm; elbow, which can rotate about a horizontal axis;
wrist).

The da Vinci manipulator has been used, among other tasks, to assist in a suc-

cessful kidney transplant. Its primary role was in removing the existing kidney from

the patient's body, and conventional surgery was then used to implant the new

kidney.

Another robot, known as the Penelope Surgical Instrument Server, assisted in

the removal of a benign tumor on the forearm of a patient on June 16, 2005. Pene-

lope is now giving doctors at New York-Presbyterian Hospital an extra hand, as the

doctors might put it.

Penelope is really a robotic arm equipped with voice-recognition technology

that understands commands from doctors and nurses in the operating room. A sur-

geon can ask for any instrument, a scalpel for example, and Penelope then hands it

over.

The Unimation PUMA 560 (PUMA stands for Programmable Universal Machine for Assembly) is a
robot manipulator with six DOFs whose joints are all revolute joints. It is shown in
Figure 1.17 and should be compared with the manipulator illustrated in Figure 1.1. There
are three DOFs in the wrist, and a gearing arrangement couples these three DOFs. The
nature of the coupling plays an important role in the derivation of the kinematics. Thus
the number of joints is not the same as the number of independent actuators. The brief
technical specifications of the PUMA 562, a member of the PUMA 560 family, are given in
Table 1.9.

Table 1.9. Brief technical specifications of the PUMA 562

Category              Item                               Value
General               Axes                               6
                      Drives                             DC motors
                      Control                            Numerical
                      Positional control                 Incremental encoders
                      Coordinates                        Cartesian
                      Configuration                      Revolute
                      Cables                             5 m
Work area             Reach at wrist                     878 mm (between joints 1 and 5)
                      Work volume                        360° working volume in left-arm or
                                                         right-arm configuration
                      Limit joints 1 to 6                320°, 250°, 270°, 280°, 200°, 532°
Load capacity         Nominal payload                    4 kg
                      Permitted load at wrist            4 kg at 127 mm from joint 5 and
                                                         37.6 mm from joint 6
Performance           Repeatability                      762 0.1 mm, 761 0.1 mm
                      Maximum speed                      1.0 m/s
Universal Controller  Programming and language (VAL II)  Teach pendant; Virtual Device
                                                         Table (VDT) using VAL II
                      VAL II processor                   Motorola 68000 32 bit
                      Auxiliary processor                Torque processor 68000
                      Interface                          16 input/output exp 128; Analog I/O;
                                                         Serial I/O
                      Serial interface                   RS232, RS423
                      Memory buffer                      64 Kb, 512 Kb, 1 Mb, 2 Mb
                      Battery buffer                     1 year
Environment           Temperature/humidity               5 to 40 °C (EN 60204-1)
                      Interface suppression              50% at 40 °C, 90% at 20 °C
Power supply          Incorporated                       208, 240, or 380 V
                      Option                             50 to 60 Hz, 0.6 kW, 1 phase;
                                                         clean-room version (class 10)
Weights               Arm                                63 kg
                      Controller                         200 kg

The SCARA (which stands for Selectively Compliant Assembly Robot Arm), shown in Figure
1.18, has three parallel revolute joints that allow it to move and orient within a plane,
and a fourth prismatic joint moves the end-effector normal to the plane. This is an
example of a manipulator that is not fully articulated and is not anthropomorphic.
Rather, the combination of the revolute and prismatic joints allows this manipulator to
operate in a cylindrical workspace. An interesting feature is the fact that only a
minimum number of joints are load bearing, depending on the nature of the application.
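The joint arrangement just described translates directly into a forward-kinematics expression. The sketch below assumes two links of lengths l1 and l2 between the first three revolute axes, a third revolute joint that only reorients the end-effector, and a prismatic displacement d4 measured along the common axis direction; these symbols, the sign convention for d4, and the function name are choices made here for illustration.

```python
import math

def scara_forward(q1, q2, q3, d4, l1, l2):
    """End-effector position (x, y, z) and in-plane orientation phi of a SCARA-type arm."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)   # the first two joints place the wrist
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    phi = q1 + q2 + q3                               # all three revolute joints set orientation
    z = d4                                           # the prismatic joint moves normal to the plane
    return x, y, z, phi

print(scara_forward(math.pi / 2, 0.0, 0.0, 0.1, 0.4, 0.3))
```

Because the in-plane motion and the motion along the axis are decoupled, the reachable positions fill a cylindrical region, in line with the workspace described above.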

This brings us to the end of our broad survey of engineering robotic systems. The survey
is by no means exhaustive, yet is most relevant to current industrial technology.
Robotics plays a key role in current mechatronic systems that are the heart of most
automated engineering systems such as unmanned autonomous vehicles or remotely operated
manipulators operating in a hazardous environment, manipulators designed to assist
surgeons on the operating table by letting them operate with greater precision and
accuracy than ever before, and industrial automated assembly lines for the mass
manufacture of a range of engineering products. We are now in a position to identify four
broad areas that constitute the fascinating field of robotics: mechanisms, control,
perception, and computing. In the following chapters we restrict our attention to robot
mechanisms and their control.

Figure 1.18. Schematic drawing of SCARA (labeled parts: body or capstan, which can rotate
about a vertical axis; axes of rotation of revolute joints; prismatic joint).

1.7 Back to the Future

It is indeed quite difficult to look ahead as there is no real magical crystal ball to
predict the future. Yet there is a prognosis for the future. On one hand, one can predict
that the da Vinci-type manipulator would evolve into a robotic octopus. On the other
hand, it is not difficult to visualize that the development of multifingered dexterous
hands (and legs) and the successful building of walking machines with amphibious
capabilities and with machine vision and tactile sensing are set to revolutionize
robotics. The engineering approach to robotics is a much more pragmatic one, the emphasis
being on the whole of the robot rather than just its computational features.

Several research teams worldwide are involved in the design and development of robot
football players based on concepts associated with autonomous systems. They involve a
number of areas of robotics including sensor fusion, mobile robotics navigation,
nonlinear hybrid feedback control, and coordination. Probably the ultimate test for a
walking robot with multifingered dexterous hands is its ability to play cricket; to play
the game like an accomplished human player with the ability to run across the wickets at
the appropriate speed and with the capacity to grip and spin the ball and participate as
a spin or pace bowler, as a batsman, as a wicket keeper, and as a fielder. One need not
be surprised if such a complete robot is built within the next 50 years.


EXERCISES

1.1. Study the various kinematic mechanisms that were used in engineering indus-

tries in the past 50 years and indicate those that you think are exceptional or novel.

Highlight, with the aid of sketches, all the special features of these mechanisms.

1.2. Conduct a survey of industrial robot manipulators currently in use and identify at least two different

(a) anthropomorphic configurations,

(b) nonanthropomorphic configurations, and

(c) spatial mechanisms

from those discussed in this chapter. Name the salient features of each of these systems and classify these manipulators based on their distinguishing features.

1.3. Study the various grasping and gripping mechanisms currently in use in industrial applications and classify them on the basis of their kinematic geometry.

1.4. It is frequently necessary to constrain a point in a mechanism to move along a straight line without the use of a sliding pair. Mechanisms designed for this purpose are known as straight-line-motion mechanisms.

(a) Discuss your thoughts on how an exact straight-line-motion mechanism may be designed. Based on your personal research, suggest two alternative mechanisms that are able to generate straight-line motion.

(b) Often it is not necessary that the motion be an exact straight line. Discuss two or three alternative mechanisms that may be used as approximate straight-line-motion mechanisms.

(c) Sketch three different forms of straight-line-motion mechanisms based on the four-bar kinematic chain and the slider–crank mechanism.

1.5. You are a member of a team of engineers who have been assigned the task of designing a walking robot, the SPD 408, capable of emulating humans in this respect. Your first task is to establish a kinematically feasible design. Draw up a set of requirements and establish three or four feasible kinematic architectures for a walking robot.

1.6. Isaac Asimov's three laws of robotics are that

(a) a robot may not injure a human being, or, through inaction, allow a human being to come to harm;

(b) a robot must obey orders given to it by human beings except where such orders conflict with the first law; and

(c) a robot must protect its own existence as long as such protection does not conflict with the first or the second law.

Discuss the relevance and adequacy of Asimov's laws, particularly in the context of humans and robots coexisting with each other in the not-too-distant future.

2

Biomimetic Mechanisms

2.1 Introduction

The concept of biomimetic control, i.e., control systems that mimic animals in general, rather than just humans, in the way they exercise control, has led to the definition of a new class of biologically inspired robots that exhibit much greater robustness in performance in unstructured environments than the robots that are currently being built. It is believed that there is a duality between engineering and nature that is based on optimum use of energy or an equivalent scarce resource, particularly in exercising control over actions and over interactions with the immediate environment. Biomimesis is generally based on this concept, and it is believed that by mimicking animals that are most capable of performing certain specialized actions, such as the lobster on the seabed, insects and birds in flight, and cockroaches in locomotion, one could build robots that surpass any other in performance, agility, and dexterity.

A key feature of biomimetic robots is their capacity to adapt to the environment and their ability to learn and react fast. However, a biomimetic robot is not just about learning and adaptation; it also involves novel mechanisms and manipulator structures capable of meeting the enhanced performance requirements. Thus biomimetic robots are being designed to be substantially more compliant and stable than conventionally controlled robots, and they will take advantage of new developments in materials and microsystems technology, as well as developments that have led to a deeper understanding of biological behavior.

Roboticists have a lot to learn from animals. Birds have a superior flying machine with multielement aerofoils capable of controlling the flow around them quite effortlessly. They use a pair of clap-and-fling or flapping wings to execute modes such as flapping translation that effectively compensate for the Wagner effect.¹ The Wagner effect manifests itself as a time delay or transport lag in the growth of lift over an impulsively or suddenly started and accelerated aerofoil.

¹ Sane, S. P. (2003). Review: The aerodynamics of insect flight, J. Exp. Biol. 206, 4191–4208.

To compensate for this effect, it can be shown that increasing the angle of attack of the aerofoil by a rapid high-frequency flapping action can effectively ensure an almost instantaneous growth in the lift developed. The rapid high-frequency flapping has the added advantage of generating a net mean thrust. Thus a bird can effectively take off by adopting a technique based on rapid flapping of its wings. The clap-and-fling mechanism also has a similar consequence.
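
The lag in lift growth known as the Wagner effect can be illustrated numerically. The sketch below is not taken from the text: it assumes a thin aerofoil in incompressible flow and uses the two-exponential curve fit to Wagner's function that is usually attributed to R. T. Jones. The function names and the speed and chord values are hypothetical and serve only as an illustration.

```python
import math


def wagner_function(s):
    """Approximate fraction of steady lift reached after s semi-chords of travel.

    Uses the two-exponential curve fit usually attributed to R. T. Jones
    (an assumption here; the text itself quotes no formula).
    """
    if s < 0:
        raise ValueError("distance travelled must be non-negative")
    return 1.0 - 0.165 * math.exp(-0.0455 * s) - 0.335 * math.exp(-0.3 * s)


def semi_chords_travelled(speed, chord, time):
    """Convert elapsed time to distance travelled in semi-chords: s = 2*U*t/c."""
    return 2.0 * speed * time / chord


if __name__ == "__main__":
    speed, chord = 5.0, 0.1  # hypothetical values, in m/s and m
    for t in (0.0, 0.01, 0.05, 0.2, 1.0):
        s = semi_chords_travelled(speed, chord, t)
        fraction = wagner_function(s)
        print(f"t = {t:5.2f} s  s = {s:6.1f}  lift fraction = {fraction:.3f}")
```

Under this approximation the lift begins at half its steady value immediately after the impulsive start and approaches the full value only after the aerofoil has travelled many semi-chords, which is the delay that rapid flapping is said to overcome.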

Furthermore, birds use visual perception based on the concept of optic flow for flight control. One particular control concept that is gaining importance is biomimetic flight control based on the principles of optic flow, which is a visual displacement flow field that can be used to explain changes in an image sequence. The underlying principle that is used to define optic flow is that the intensity level is constant along the visual motion trajectories. Thus there exists a displacement field around the motion trajectories that is similar in structure to a potential flow field. Optic flow can then be defined as the motion of all the surface elements, in a limited view, as perceived by the visual system. As a body moves through the limited view, the objects and surfaces within the visual frame flow around it. The human visual system can determine its current direction of travel from the movement of these surfaces.
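
The constant-intensity principle can be turned into a simple estimator. If the intensity I(x, y, t) is constant along a motion trajectory, a first-order expansion gives I_x u + I_y v + I_t = 0, where (u, v) is the image velocity. The sketch below is one common least-squares way of solving this constraint, not a method given in the text. It assumes a single uniform shift across a small synthetic image, and every name in it is hypothetical.

```python
import math


def estimate_flow(frame0, frame1):
    """Least-squares estimate of one (u, v) image shift, in pixels per frame.

    Solves I_x*u + I_y*v + I_t = 0 over all interior pixels.
    """
    rows, cols = len(frame0), len(frame0[0])
    sxx = sxy = syy = sxt = syt = 0.0
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            ix = 0.5 * (frame0[r][c + 1] - frame0[r][c - 1])  # spatial gradient in x
            iy = 0.5 * (frame0[r + 1][c] - frame0[r - 1][c])  # spatial gradient in y
            it = frame1[r][c] - frame0[r][c]                  # change between frames
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("image gradients do not constrain the flow")
    # Solve the 2x2 normal equations [sxx sxy; sxy syy][u v]^T = -[sxt syt]^T.
    u = (-sxt * syy + syt * sxy) / det
    v = (-syt * sxx + sxt * sxy) / det
    return u, v


def synthetic_frame(rows, cols, shift_x=0.0, shift_y=0.0):
    """Smooth test pattern displaced by (shift_x, shift_y) pixels."""
    return [[math.sin(0.3 * (c - shift_x)) + math.cos(0.2 * (r - shift_y))
             for c in range(cols)] for r in range(rows)]


if __name__ == "__main__":
    first = synthetic_frame(32, 32)
    second = synthetic_frame(32, 32, shift_x=0.2, shift_y=-0.1)
    u, v = estimate_flow(first, second)
    print(f"estimated flow: u = {u:.3f}, v = {v:.3f} (true shift 0.2, -0.1)")
```

The estimate is only approximate because the constraint is a linearization. It degrades for large shifts, and it fails when the image gradients all point in one direction.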

The term optic flow has been associated with flight and has been used successfully in the design of flight simulators. The initial research on optic flow not only inspired many cognitive psychologists and neurologists² with new ways of understanding how animals perceive motion around them but has also led to an increased understanding of how flying animals maintain stability and perceive the world while flying. Migratory birds use the magnetic field, as a compass does, to fly in the appropriate migratory direction! They seem to know where they want to go by using a magnetic-field map. Light-absorbing molecules present in the retina of a bird's eye help the bird to sense the Earth's magnetic field.

Currently roboticists are exploring ways to use optic flow to provide a robot with visual perception and the associated sensations similar to those of a bird in flight. Although researchers were able to integrate optic-flow-based artificially simulated vision into robotic platforms and to use it to perform simple navigation tasks in real time more than a decade ago, it was only recently that optic-flow sensing was successfully integrated into small autonomously flying aircraft and used to provide them with a basic degree of autonomous flight control. The methodology, which is based on a synergy of techniques that have evolved in computational vision processing and in biologically inspired research into human vision, has led to a better understanding of computer vision that may be implemented. Although computational methods in vision processing have provided a sequence of processes that allows a practical image to be transformed into one of a series of representations, the major focus of computer vision has been the sensation and perception of relevant features in the representations.

² Tammero, L. and Dickinson, M. H. (2002). The influence of visual landscape on the free flight behavior of the fruit fly Drosophila melanogaster, J. Exp. Biol. 205, 327–343.

(a) (b)

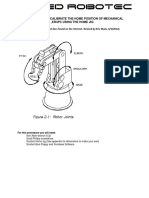

Figure 2.1. (a) Sagittal plane views of biped models of walking,

(b) in a ballistic mode.

2.2 Principles of Legged Locomotion

Interest in locomotion in humans and animals has also spurred the development of new biomimetic mechanisms. Primarily this is due to the realization that human joints are not lower kinematic pairs and that they could be modeled as four-bar mechanisms, and due to the insight gained into the kinematics of walking. As an example of the former, consider the human knee joint between the femur and the tibia: the two bones do not overlap each other but are strapped to each other by two cruciate ligaments that serve as extendable links, so the entire joint functions like a four-bar mechanism. Another example is the foot, which is in fact a serial chain with six links in which the innermost link rolls under the tibia, the lower leg bone, and is also strapped at the other end to the Achilles tendon, so the entire joint functions like a four-bar mechanism. In this case the tendon serves as a spring-loaded link, serving to return the foot to its nominal position when there is no force under it.
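
To make the four-bar idealization of a joint concrete, the sketch below solves the position problem of a generic planar four-bar linkage by intersecting two circles. It is an illustration under stated assumptions rather than a model from the text: all four links are treated as rigid, whereas the text describes the ligaments as extendable, and the link lengths are hypothetical numbers, not anatomical data.

```python
import math


def four_bar_position(a, b, c, d, theta2, open_branch=True):
    """Return (theta3, theta4) in radians for input-link angle theta2.

    a: input link, b: coupler, c: output link, d: fixed link, with the
    fixed pivots at (0, 0) and (d, 0). All angles are measured from the x axis.
    """
    ax, ay = a * math.cos(theta2), a * math.sin(theta2)  # moving pin A
    ex, ey = d - ax, -ay                                 # vector from A to pivot (d, 0)
    e = math.hypot(ex, ey)
    if e == 0.0 or e > b + c or e < abs(b - c):
        raise ValueError("linkage cannot be assembled at this input angle")
    # Pin B lies on a circle of radius b about A and a circle of radius c about (d, 0).
    along = (b * b - c * c + e * e) / (2.0 * e)
    height = math.sqrt(max(b * b - along * along, 0.0))
    sign = 1.0 if open_branch else -1.0
    bx = ax + (along * ex - sign * height * ey) / e
    by = ay + (along * ey + sign * height * ex) / e
    theta3 = math.atan2(by - ay, bx - ax)  # coupler angle
    theta4 = math.atan2(by, bx - d)        # output-link angle
    return theta3, theta4


if __name__ == "__main__":
    a, b, c, d = 1.0, 3.0, 2.5, 3.0  # hypothetical link lengths
    for degrees in (0, 60, 120, 180, 240, 300):
        theta3, theta4 = four_bar_position(a, b, c, d, math.radians(degrees))
        print(f"theta2 = {degrees:3d} deg  theta3 = {math.degrees(theta3):7.2f} deg"
              f"  theta4 = {math.degrees(theta4):7.2f} deg")
```

The `open_branch` flag selects between the two ways in which the same four links can be assembled for a given input angle. A joint model with extendable ligaments would need the link lengths to vary with load, which this sketch does not attempt.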

As for the kinematics of walking, Figure 2.1 illustrates a typical sagittal-plane

(normal to the frontal plane) view of a biped model of a walking robot in ballistic

motion. The ballistic movements in a dynamic walking mode, while maintaining