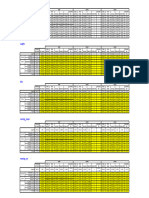

============Training fold 0============

[LightGBM] [Warning] Using sparse features with CUDA is currently not supported.

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Total Bins 4532

[LightGBM] [Info] Number of data points in the train set: 10226754, number of used features: 22

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Start training from score -0.185507

[LightGBM] [Info] Start training from score -4.038790

[LightGBM] [Info] Start training from score -3.751649

[LightGBM] [Info] Start training from score -2.552706

[LightGBM] [Info] Start training from score -3.210648

[LightGBM] [Info] Start training from score -4.609163

[LightGBM] [Info] Start training from score -9.750277

Training until validation scores don't improve for 10 rounds

Early stopping, best iteration is:

[32] valid_0's multi_logloss: 0.0609308

Fold 0 - 62.05 seconds.

Log loss: 0.06113

Acc: 0.98500

wAUC: 0.98832

AUC: 0.89077

AUCs:

[0.98803573 0.99998738 0.99937217 0.99999817 0.9995621 0.83200546 0.41640792]
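The `AUC` line for fold 0 appears to be the unweighted (macro) mean of the seven per-class values printed under `AUCs`. A minimal check, using the numbers copied from the fold 0 output above; the variable names are illustrative and do not come from the original notebook:

```python
# Per-class one-vs-rest AUCs copied from the fold 0 log output.
per_class_auc = [
    0.98803573, 0.99998738, 0.99937217, 0.99999817,
    0.9995621, 0.83200546, 0.41640792,
]

# Unweighted (macro) mean: every class counts equally, however rare it is.
macro_auc = sum(per_class_auc) / len(per_class_auc)

print(f"macro AUC: {macro_auc:.5f}")  # matches the logged "AUC: 0.89077"
```

Because every class carries equal weight here, the weakest class (AUC 0.416) pulls the macro figure well below `wAUC`. The latter is presumably a class-weighted average, but the log does not show the weights, so that reading is an assumption.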

============Training fold 1============

[LightGBM] [Warning] Using sparse features with CUDA is currently not supported.

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Total Bins 4525

[LightGBM] [Info] Number of data points in the train set: 10226754, number of used features: 22

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Start training from score -0.185507

[LightGBM] [Info] Start training from score -4.038790

[LightGBM] [Info] Start training from score -3.751649

[LightGBM] [Info] Start training from score -2.552706

[LightGBM] [Info] Start training from score -3.210648

[LightGBM] [Info] Start training from score -4.609163

[LightGBM] [Info] Start training from score -9.750277

Training until validation scores don't improve for 10 rounds

Early stopping, best iteration is:

[33] valid_0's multi_logloss: 0.061286

Fold 1 - 62.16 seconds.

Log loss: 0.06149

Acc: 0.98492

wAUC: 0.98824

AUC: 0.88832

AUCs:

[0.98795049 0.99984323 0.99936246 0.99999999 0.99959669 0.83094789 0.40052106]

============Training fold 2============

[LightGBM] [Warning] Using sparse features with CUDA is currently not supported.

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Total Bins 4536

[LightGBM] [Info] Number of data points in the train set: 10226754, number of used features: 22

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Start training from score -0.185507

[LightGBM] [Info] Start training from score -4.038790

[LightGBM] [Info] Start training from score -3.751649

[LightGBM] [Info] Start training from score -2.552706

[LightGBM] [Info] Start training from score -3.210648

[LightGBM] [Info] Start training from score -4.609163

[LightGBM] [Info] Start training from score -9.750277

Training until validation scores don't improve for 10 rounds

Early stopping, best iteration is:

[34] valid_0's multi_logloss: 0.0638776

Fold 2 - 61.04 seconds.

Log loss: 0.06417

Acc: 0.98494

wAUC: 0.98792

AUC: 0.88739

AUCs:

[0.98761394 0.9997365 0.99937064 0.99999988 0.99961153 0.82769225 0.39769802]

============Training fold 3============

[LightGBM] [Warning] Using sparse features with CUDA is currently not supported.

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Total Bins 4533

[LightGBM] [Info] Number of data points in the train set: 10226754, number of used features: 22

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Start training from score -0.185507

[LightGBM] [Info] Start training from score -4.038790

[LightGBM] [Info] Start training from score -3.751649

[LightGBM] [Info] Start training from score -2.552706

[LightGBM] [Info] Start training from score -3.210648

[LightGBM] [Info] Start training from score -4.609163

[LightGBM] [Info] Start training from score -9.750277

Training until validation scores don't improve for 10 rounds

Early stopping, best iteration is:

[37] valid_0's multi_logloss: 0.0627725

Fold 3 - 64.10 seconds.

Log loss: 0.06310

Acc: 0.98496

wAUC: 0.98851

AUC: 0.86937

AUCs:

[0.98821866 0.9997862 0.99937726 0.99994208 0.99957627 0.83743435 0.26126467]

============Training fold 4============

[LightGBM] [Warning] Using sparse features with CUDA is currently not supported.

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Total Bins 4537

[LightGBM] [Info] Number of data points in the train set: 10226754, number of used features: 22

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Start training from score -0.185507

[LightGBM] [Info] Start training from score -4.038790

[LightGBM] [Info] Start training from score -3.751653

[LightGBM] [Info] Start training from score -2.552705

[LightGBM] [Info] Start training from score -3.210648

[LightGBM] [Info] Start training from score -4.609163

[LightGBM] [Info] Start training from score -9.750277

Training until validation scores don't improve for 10 rounds

Early stopping, best iteration is:

[34] valid_0's multi_logloss: 0.0620515

Fold 4 - 60.69 seconds.

Log loss: 0.06231

Acc: 0.98488

wAUC: 0.98832

AUC: 0.89466

AUCs:

[0.98801565 0.99996725 0.99939072 0.99999926 0.99958137 0.83337271 0.44230037]

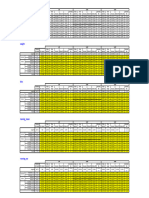

============Training fold 5============

[LightGBM] [Warning] Using sparse features with CUDA is currently not supported.

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Total Bins 4532

[LightGBM] [Info] Number of data points in the train set: 10226754, number of used features: 22

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Start training from score -0.185507

[LightGBM] [Info] Start training from score -4.038795

[LightGBM] [Info] Start training from score -3.751653

[LightGBM] [Info] Start training from score -2.552706

[LightGBM] [Info] Start training from score -3.210645

[LightGBM] [Info] Start training from score -4.609153

[LightGBM] [Info] Start training from score -9.750277

Training until validation scores don't improve for 10 rounds

Early stopping, best iteration is:

[18] valid_0's multi_logloss: 0.0757793

Fold 5 - 47.27 seconds.

Log loss: 0.07600

Acc: 0.98493

wAUC: 0.98778

AUC: 0.91938

AUCs:

[0.98742483 0.9999127 0.99940046 0.99999842 0.9996013 0.82768585 0.62161067]

============Training fold 6============

[LightGBM] [Warning] Using sparse features with CUDA is currently not supported.

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Total Bins 4529

[LightGBM] [Info] Number of data points in the train set: 10226754, number of used features: 22

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Start training from score -0.185507

[LightGBM] [Info] Start training from score -4.038795

[LightGBM] [Info] Start training from score -3.751653

[LightGBM] [Info] Start training from score -2.552706

[LightGBM] [Info] Start training from score -3.210645

[LightGBM] [Info] Start training from score -4.609153

[LightGBM] [Info] Start training from score -9.750277

Training until validation scores don't improve for 10 rounds

[LightGBM] [Warning] No further splits with positive gain, training stopped with 21 leaves.

[LightGBM] [Warning] No further splits with positive gain, training stopped with 12 leaves.

[LightGBM] [Warning] No further splits with positive gain, training stopped with 10 leaves.

[LightGBM] [Warning] No further splits with positive gain, training stopped with 7 leaves.

Early stopping, best iteration is:

[24] valid_0's multi_logloss: 0.0801012

Fold 6 - 53.48 seconds.

Log loss: 0.08089

Acc: 0.98466

wAUC: 0.98707

AUC: 0.86181

AUCs:

[0.98665027 0.99997504 0.99940556 0.99999836 0.99924894 0.82387363 0.22352595]

============Training fold 7============

[LightGBM] [Warning] Using sparse features with CUDA is currently not supported.

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Total Bins 4526

[LightGBM] [Info] Number of data points in the train set: 10226754, number of used features: 22

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Start training from score -0.185507

[LightGBM] [Info] Start training from score -4.038795

[LightGBM] [Info] Start training from score -3.751649

[LightGBM] [Info] Start training from score -2.552706

[LightGBM] [Info] Start training from score -3.210648

[LightGBM] [Info] Start training from score -4.609163

[LightGBM] [Info] Start training from score -9.750277

Training until validation scores don't improve for 10 rounds

Early stopping, best iteration is:

[28] valid_0's multi_logloss: 0.0628917

Fold 7 - 55.97 seconds.

Log loss: 0.06309

Acc: 0.98506

wAUC: 0.98810

AUC: 0.90278

AUCs:

[0.98778311 0.99993493 0.99938826 0.99996616 0.9995973 0.82999908 0.50280719]

============Training fold 8============

[LightGBM] [Warning] Using sparse features with CUDA is currently not supported.

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Total Bins 4524

[LightGBM] [Info] Number of data points in the train set: 10226754, number of used features: 22

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Start training from score -0.185507

[LightGBM] [Info] Start training from score -4.038790

[LightGBM] [Info] Start training from score -3.751649

[LightGBM] [Info] Start training from score -2.552706

[LightGBM] [Info] Start training from score -3.210648

[LightGBM] [Info] Start training from score -4.609163

[LightGBM] [Info] Start training from score -9.751956

Training until validation scores don't improve for 10 rounds

Early stopping, best iteration is:

[18] valid_0's multi_logloss: 0.074628

Fold 8 - 46.82 seconds.

Log loss: 0.07478

Acc: 0.98503

wAUC: 0.98756

AUC: 0.90401

AUCs:

[0.98722262 0.9999002 0.99938259 0.99999925 0.99958826 0.82303089 0.51892483]

============Training fold 9============

[LightGBM] [Warning] Using sparse features with CUDA is currently not supported.

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Total Bins 4535

[LightGBM] [Info] Number of data points in the train set: 10226754, number of used features: 22

[LightGBM] [Warning] Metric multi_logloss is not implemented in cuda version. Fall back to evaluation on CPU.

[LightGBM] [Info] Start training from score -0.185507

[LightGBM] [Info] Start training from score -4.038790

[LightGBM] [Info] Start training from score -3.751649

[LightGBM] [Info] Start training from score -2.552706

[LightGBM] [Info] Start training from score -3.210648

[LightGBM] [Info] Start training from score -4.609163

[LightGBM] [Info] Start training from score -9.751956

Training until validation scores don't improve for 10 rounds

Early stopping, best iteration is:

[31] valid_0's multi_logloss: 0.0635439

Fold 9 - 59.10 seconds.

Log loss: 0.06381

Acc: 0.98470

wAUC: 0.98805

AUC: 0.87902

AUCs:

[0.98776549 0.99993767 0.99933981 0.99998321 0.99942463 0.82848609 0.33818423]
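The seven `Start training from score` values logged in each fold are consistent with the natural log of each class's share of the training rows: exponentiating them gives numbers that sum to about 1. A small sketch of that reading, using the fold 9 values copied from the log above; treating them as log-priors is an interpretation, not something the log states, and the variable names are illustrative:

```python
import math

# "Start training from score" values copied from the fold 9 log output,
# one per class, in the order they were printed.
init_scores = [
    -0.185507, -4.038790, -3.751649, -2.552706,
    -3.210648, -4.609163, -9.751956,
]

# Assumption: each score is log(class share), so exp() recovers the share.
priors = [math.exp(s) for s in init_scores]
total = sum(priors)

for class_id, p in enumerate(priors):
    print(f"class {class_id}: {p:.6f}")
print(f"sum of shares: {total:.6f}")
```

Under this reading the first class accounts for roughly 83% of the training rows and the last for roughly 0.006%. Such a small class may explain why its per-class AUC swings between about 0.22 and 0.62 across folds, although the log alone cannot confirm that.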