
Chapter Four

47 pages

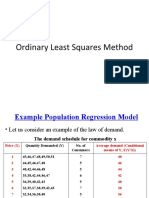

Chapter 4 discusses the assumptions and diagnostics of the Classical Linear Regression Model (CLRM), focusing on the implications of violations such as heteroscedasticity, multicollinearity, and autocorrelation. It outlines methods for detecting these issues, including the Goldfeld-Quandt test for heteroscedasticity and the Durbin-Watson test for autocorrelation, as well as potential solutions like generalized least squares and variable transformation. The chapter emphasizes the importance of ensuring the validity of regression assumptions to maintain accurate coefficient estimates and statistical inferences.

Uploaded by Galataa A Goobanaa

Copyright © All Rights Reserved

