Computer Apparatus Input Device For Three-Dimensional Information (US Patent 5181181)

U.S. patent 5181181: Computer apparatus input device for three-dimensional information. Granted to Glynn on 1993-01-19 (filed 1990-09-27) and assigned to Triton Technologies, Inc. Currently involved in at least 1 patent litigation: Triton Tech of Texas, LLC v. Nintendo of America Inc. et al. (Texas). See http://news.priorsmart.com for more info.

Copyright

© Public Domain

US005181181A

United States Patent [19]

Glynn

[11] Patent Number: 5,181,181

[45] Date of Patent: Jan. 19, 1993

[54] COMPUTER APPARATUS INPUT DEVICE FOR THREE-DIMENSIONAL INFORMATION

[75] Inventor: Brian J. Glynn, Merritt Island, Fla.

[73] Assignee: Triton Technologies, Inc., Burke, Va.

[21] Appl. No.: 588,

[22] Filed: Sep. 27, 1990

[51] Int. Cl.: G01P 7/00; G01P 15/00; G09G 1/0

[52] U.S. Cl.: 364/566; 340/710; 364/709.11

[58] Field of Search: 364/560, 453, 708.11, 565, 566; 340/710, 706; 178/18-20; 73/508

[56] References Cited

U.S. PATENT DOCUMENTS

3,541,541 11/1970 Engelbart

3,892,963 7/1975 Hawley et al.

3,898,445 MacLeod et al.

3,987,685 Opocensky 340/710

4,390,873 6/1983 Kirsch 340/710

4,409,479 10/1983 Sprague et al.

[illegible] 3/1986 Baker et al.

[illegible] 1987 Davison

[illegible] 8/1987 Taniguti et al. 364/560

[illegible] 9/1987 Shinn 340/707

4,754,268 6/1988 Mori 340/710

4,766,423 Ono et al.

4,787,051 11/1988 Olson

[illegible] 3/1989 Ebina et al.

4,835,528 5/1989 Flinchbaugh

4,839,838 LaBiche et al.

4,922,444 5/1990 Baba 364/566

Primary Examiner: Thomas G. Black

Assistant Examiner: Michael Zanelli

Attorney, Agent, or Firm: Burns, Doane, Swecker & Mathis

[57] ABSTRACT

A mouse which senses six degrees of motion arising from movement of the mouse within three dimensions. A hand-held device includes three accelerometers for sensing linear translation along three axes of a Cartesian coordinate system and three angular rate sensors for sensing angular rotation about the three axes. Signals produced by the sensors are processed to permit the acceleration, velocity and relative position and attitude of the device to be conveyed to a computer. Thus, a person may interact with a computer with six degrees of motion in three-dimensional space. Computer interface ports and unique address identification ensure proper communication with the computer regardless of the orientation of the mouse.

21 Claims, 7 Drawing Sheets

[Drawing Sheets 1-7, U.S. Patent 5,181,181, Jan. 19, 1993; figures not reproduced. Sheet 1: FIGS. 1(b) and 1(c). Sheet 2: FIGS. 2(b) and 2(c). Sheets 3 and 4: block diagrams whose legible labels include accelerometers, rate sensors, amplification and processing. Sheet 5: FIGS. 5 and 6, with flows labeled "3D Motion and Position Data" (velocity, position, attitude) and "Instantaneous 3D Information". Sheet 6: FIGS. 7 and 8, with labels "Reset or Hold Conditions", "Attitude and Velocity and Position Vectors" and "3D Motion and Position Data". Sheet 7: FIG. 10, with labels "Operator Button Pushes and Releases", "Push Button Status Detection", "Address", "Reset or Hold Conditions" and "3D Motion and Position Data".]

COMPUTER APPARATUS INPUT DEVICE FOR THREE-DIMENSIONAL INFORMATION

BACKGROUND OF THE INVENTION

The present invention relates to a computer peripheral input device used by an operator to control cursor position or the like. More particularly, the invention is directed to an apparatus and method for inputting position, attitude and motion data to a computer in, for example, terms of three-dimensional spatial coordinates over time.

Most computer systems with which humans must interact include a "cursor" which indicates current position on a computer display. The cursor position may indicate where data may be input, such as in the case of textual information, or where one may manipulate an object which is represented graphically by the computer and depicted on a display. Manipulation of the cursor on a computer display may also be used by an operator to select or change modes of computer operation.

Early means of cursor control were centered around the use of position control keys on the computer keyboard. These control keys were later augmented by other devices such as the light pen, graphics tablet, joystick, and the track ball. Other developments utilized a device called a "mouse" to allow an operator to directly manipulate the cursor position by moving a small, hand-held device across a flat surface.

The first embodiment of the mouse detected rotation of a ball which protrudes from the bottom of the device to control cursor position. As the operator moves the mouse on a two-dimensional surface, sensors in the mouse detect rotation of the ball along two mutually perpendicular axes. Examples of such devices are illustrated in U.S. Pat. Nos. 3,541,541; 3,835,464; 3,892,963; 3,987,685; and 4,390,873. U.S. Pat. No. 4,409,479 discloses a further development which utilizes optical sensing techniques to detect mouse motion without moving parts.

These mouse devices all detect and convey motion within two dimensions. However, with the increased use of computers in the definition, representation, and manipulation of information in terms of three-dimensional space, attempts have been made to devise techniques that allow for the definition of positional coordinates in three dimensions. Advances in computer graphical display and software technology in the area of three-dimensional representation of information have made desirable the capability of an operator to input and manipulate or control not merely three-dimensional position information, but also three-dimensional motion and attitude information. This is particularly true in the case of modeling, simulation, and animation of objects that are represented in either two- or three-dimensional space.

U.S. Pat. No. 3,898,445, issued to MacLeod et al., relates to a means for determining position of a target in three dimensions by measuring the time it takes for a number of light beams to sweep between reference points and comparing that with the time required for the beams to sweep between the reference points and the target.

U.S. Pat. No. 4,766,423, issued to Ono et al., and U.S. Pat. No. 4,812,828, issued to Ebina et al., disclose display and control devices and methods for controlling a cursor in a three-dimensional space by moving the cursor as if to maneuver it by use of joystick and throttle type devices to alter the direction and velocity of a cursor.

U.S. Pat. No. 4,835,528, issued to Flinchbaugh, illustrates a cursor control system which utilizes movement of a mouse device upon a two-dimensional surface that contains logically defined regions within which movement of the mouse is interpreted by the system to mean movement in three-dimensional space.

None of the three-dimensional input devices noted above has the intuitive simplicity that a mouse has for interacting in two dimensions. A three-dimensional input device disclosed in U.S. Pat. No. 4,787,051 to Olson utilizes inertial acceleration sensors to permit an operator to input three-dimensional spatial position. However, the disclosed device does not consider input of either motion or "orientation", henceforth referred to herein as "attitude".

To determine changes in position within a plane, the Olson system senses translation from a first accelerometer. Rotation is obtained from the difference in translational acceleration sensed by the first accelerometer and a second accelerometer. This technique mandates precision mounting of the accelerometer pair, as well as processing to decouple translation from rotation and to determine both the rotational axis and rate. Thus, the device disclosed by Olson et al. requires extensive processing by the computer which may render the device incompatible with lower end computing devices. Additionally, this technique would require highly sensitive accelerometers to obtain low rotational rates.
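As a rough illustration of why a differential accelerometer pair needs high sensitivity, consider a simplified planar case. The geometry here is an assumption made for illustration and is not taken from the Olson patent: two accelerometers a distance d apart, with parallel sensing axes perpendicular to the line joining them, measuring a_1 and a_2 while the body has angular acceleration alpha.

```latex
% Assumed geometry: sensors 1 and 2 separated by d, sensing axes parallel
% to each other and perpendicular to the line joining the sensors.
a_2 - a_1 = \alpha \, d
\quad\Longrightarrow\quad
\alpha = \frac{a_2 - a_1}{d}
```

Under these assumptions, with d = 0.05 m and alpha = 0.1 rad/s^2, the difference a_2 - a_1 is only 0.005 m/s^2, roughly half a thousandth of g, so small rotations produce differences close to the noise floor of the sensors.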

In the Olson device, analog integrator circuits are used for the first stage of integration required to obtain displacement from acceleration. These integrator circuits have limits in dynamic range and require periodic resets to zero to eliminate inherent errors of offset and drift. The Olson device also constrains the use of the remote device to a limited range of orientation with respect to the computer in order to permit proper communication. Furthermore, no provision is made in the Olson system to prevent interference from signals originating from nearby input devices which control nearby computer work stations.

Accordingly, one of the objects of the present invention is to provide a new and improved device that overcomes the aforementioned shortcomings of previous computer input techniques.

Additionally, a primary object of the present invention is to provide a means for an operator to input to a computer information which allows the computer to directly ascertain position, motion and attitude of the input device in terms of three-dimensional spatial coordinates.

It is another object of the present invention to provide a new and improved apparatus and method for controlling movement of a cursor, represented on a computer display in terms of three-dimensional spatial coordinates.

It is yet another object of the present invention to provide an apparatus and method for providing input to a computer of position, motion, and attitude with a device which is intuitively simple to operate by the natural motion of a person, for the purpose of conveying a change in position or attitude, in a particular case, or a change in the state of variables, in the general case.


SUMMARY OF THE INVENTION

In accordance with one embodiment of the present invention, a computer input device apparatus detects and conveys to a computer device positional information within six degrees of motion: linear translation along, and angular rotation about, each of three mutually perpendicular axes of a Cartesian coordinate system. The preferred embodiment of the present invention includes three accelerometers, each mounted with its primary axis oriented to one of three orthogonally oriented, or mutually perpendicular, axes. In this way, each accelerometer independently detects translational acceleration along one of the primary axes of a three-dimensional Cartesian coordinate system. Each accelerometer produces a signal which is directly proportional to the acceleration imparted along its respective primary axis.

Additionally, the preferred embodiment includes three orthogonally oriented rate sensors, each mounted in such a way that each primary axis is parallel or collinearly oriented with each of the respective aforementioned accelerometer primary axes. Each rate sensor independently detects angular rotational rates about an individual axis of the Cartesian coordinate system and produces a signal which is directly proportional to the angular momentum, or angular rotational displacement rate, which is detected as the rate sensor rotates about its respective primary axis.

Additional aspects of the preferred embodiment of the present invention include techniques for producing velocity and position vectors of the input device. These vectors, each consisting of a scalar magnitude and a direction in terms of a three-dimensional Cartesian coordinate system, are continually updated to reflect the instantaneous input device velocity as well as its position and attitude, relative to an arbitrary stationary initial position and attitude.

The velocity vector is determined from the geometric sum of the accelerations integrated once over time, as indicated by signals produced by the three accelerometers. The velocity vector may be expressed in terms of a velocity at which the input device is moving and a direction of motion. The direction of motion can be defined by two angles indicating the direction the device is moving relative to the input device coordinate system.
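The following is a minimal sketch of this step, not the patent's implementation. It assumes evenly spaced samples, simple rectangular-rule integration, and an azimuth/elevation convention for the two angles; the function names, the sample period `dt` and the angle convention are all illustrative choices.

```python
import math

def integrate_once(samples, dt):
    """Rectangular-rule integral of (ax, ay, az) samples taken every dt seconds."""
    vx = vy = vz = 0.0
    for ax, ay, az in samples:
        vx += ax * dt
        vy += ay * dt
        vz += az * dt
    return vx, vy, vz

def to_magnitude_and_angles(x, y, z):
    """Return (magnitude, azimuth, elevation); angles in radians (assumed convention)."""
    magnitude = math.sqrt(x * x + y * y + z * z)   # geometric sum of the components
    azimuth = math.atan2(y, x)                     # angle within the X-Y plane
    elevation = math.atan2(z, math.hypot(x, y))    # angle above the X-Y plane
    return magnitude, azimuth, elevation

# Hypothetical input: 1 m/s^2 along X for ten samples at 100 Hz.
samples = [(1.0, 0.0, 0.0)] * 10
velocity = integrate_once(samples, dt=0.01)
speed, azimuth, elevation = to_magnitude_and_angles(*velocity)
```

Here `speed` comes out close to 0.1 m/s with both angles zero, since all of the motion is along the X axis.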

The relative position vector is determined from the geometric sum of the accelerations integrated twice over time, as indicated by signals produced by the three accelerometers. The relative position vector may be expressed in terms of a linear displacement from the arbitrary initial origin ("zero coordinates") of the input device and a direction, defined by two angles, indicating the position of the device relative to the initial position.
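A hedged sketch of the double integration follows. It assumes the device starts at rest at the zero coordinates, uses a fixed sample period, and updates velocity before position in each step; none of these details are specified in the text, and the names are hypothetical.

```python
import math

def integrate_twice(samples, dt):
    """Integrate (ax, ay, az) samples to velocity, then velocity to position."""
    velocity = [0.0, 0.0, 0.0]   # assumed at rest initially
    position = [0.0, 0.0, 0.0]   # the arbitrary "zero coordinates"
    for accel in samples:
        for axis in range(3):
            velocity[axis] += accel[axis] * dt
            position[axis] += velocity[axis] * dt
    return velocity, position

def displacement(position):
    """Linear displacement from the initial origin."""
    return math.sqrt(sum(component ** 2 for component in position))

# Hypothetical input: 2 m/s^2 along Y for one second, sampled at 100 Hz.
velocity, position = integrate_twice([(0.0, 2.0, 0.0)] * 100, dt=0.01)
distance = displacement(position)
```

With these inputs the computed displacement is about 1.01 m against the analytic value of 1.0 m; the difference is the discretization error of this simple integration rule and shrinks as the sample period decreases.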

The relative attitude may be determined from the rate of change in rotational displacements integrated once over time as indicated by signals produced by the three rate sensors. The relative attitude is expressed in terms of angular displacements from the arbitrary initial attitude ("zero angles"), normalized to a maximum rotation of 360° (2π radians) about each of the three axes of the input device, indicating the attitude of the device relative to the initial attitude.
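One way to picture the attitude computation is the sketch below. It integrates each rate independently and wraps the result into the range 0° to 360°, which is a simplification: treating the three axes independently is an assumption of this example, and the function name and units (degrees per second) are illustrative.

```python
def integrate_attitude(rate_samples, dt, initial=(0.0, 0.0, 0.0)):
    """Integrate (rx, ry, rz) rates in deg/s and wrap each angle into [0, 360)."""
    angles = list(initial)   # the arbitrary "zero angles"
    for rates in rate_samples:
        for axis in range(3):
            angles[axis] = (angles[axis] + rates[axis] * dt) % 360.0
    return tuple(angles)

# Hypothetical input: 90 deg/s about Z for five seconds, sampled at 10 Hz.
attitude = integrate_attitude([(0.0, 0.0, 90.0)] * 50, dt=0.1)
```

The device has turned through 450° in total, which the normalization reports as 90° about Z. A negative rate wraps the other way, so a net rotation of -90° is reported as 270°.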

Additional aspects of the preferred embodiment of the present invention include push-button switches on the input device which may be actuated by the operator to convey to a computer a desire to change an operating state. Examples of operating state changes include providing a new input device initial position or attitude, or indicating a new computer cursor position, or changing a mode of operation of the computer and/or the input device.

Additional aspects of the preferred embodiment of the present invention include a wireless interface between the input device and a computer. The wireless interface permits attributes of the input device motion and push-button actuation to be communicated to a computer in a manner that allows for motion to be unencumbered by an attached cable. Preferably, the interface is bi-directional, allowing the computer to control certain aspects of the input device operational states. In an additional embodiment of the input device, the interface between the device and a computer may be a cable.

BRIEF DESCRIPTION OF THE DRAWINGS

The aforementioned and other objects, features, and advantages of the present invention will be apparent to the skilled artisan from the following detailed description when read in light of the accompanying drawings, wherein:

FIG. 1(a) is a top view of an input device in accordance with the present invention;

FIG. 1(b) is a front view of the input device of FIG. 1(a);

FIG. 1(c) is a side view of the input device of FIG. 1(a);

FIG. 1(d) is a perspective view of the input device of FIG. 1(a);

FIGS. 2(a)-2(c) are cut-away top, front and side views, respectively, of the device of FIG. 1, illustrating the motion sensing assembly and ancillary components area of the input device;

FIG. 3 is a block diagram of the major functional elements of the preferred embodiment of the present invention, including a wireless interface to a computer;

FIG. 4 is a block diagram showing additional detail on major processing elements of the preferred embodiment of the present invention;

FIG. 5 is a process flow diagram illustrating the functions used by the preferred embodiment in processing input device motion, push-button status and external computer interface;

FIG. 6 is a process flow diagram illustrating motion sensing assembly interface functions, and further illustrating details of the sensor interface and data conversion process of FIG. 5;

FIG. 7 is a process flow diagram showing velocity and position calculation functions, and illustrating further details of the three-dimensional computation process of FIG. 5;

FIG. 8 depicts a tangential velocity arising from rotation of the input device;

FIG. 9 depicts translation and rotation from initial coordinates of a coordinate system centered on the input device; and

FIG. 10 is a process flow diagram illustrating the functions used in processing changes in status of push-buttons, and in providing external interface to a computer, and illustrating further details of the external interface process of FIG. 5.


DETAILED DESCRIPTION OF THE PREFERRED EMBODIMENT

Referring to FIGS. 1(a) through 1(d), a hand-held computer input device or "mouse" 1 is illustrated which includes a plurality of interface ports 7-12. As shown, each external face of the mouse 1 is provided with an interface port. Each of these interface ports may provide communication with the computer through known wireless communication techniques, such as infrared or radio techniques. Thus, regardless of the orientation of the mouse 1 with respect to the computer work station, at least one interface port will be directed generally at the computer.

During operation, the interface ports are operated simultaneously to transmit or receive signals. Since at least one of the interface ports generally faces the computer at all times, communication with the computer is permitted regardless of the orientation of the mouse. As will be described below in greater detail, the system may include apparatus for avoiding interference from nearby devices when operated in a relatively crowded environment. Alternatively, communication with the computer may be established through an interface cable 13.

The mouse 1 may also include a plurality of push-buttons 4, 5 and 6 for additional interaction of the operator with the host computer system. The push-buttons may be used, for example, to provide special command signals to the computer. These command signals are transmitted through the interface ports 7-12, or the cable interface 13, to the computer.

Preferably, the push-buttons are positioned on an angled surface of the mouse 1 to allow easy actuation by an operator from at least two different orientations of the mouse device. Alternatively, multiple sets of push-buttons may be provided to permit easy actuation. Individual push-button sets may be arranged, for example, on opposed faces of the mouse 1 and connected in parallel to permit actuation of command signals from any one of the multiple push-button sets. Additionally, push-buttons which detect and convey a range of pressure applied to them, as opposed to merely conveying a change between two states, may be provided in an alternative embodiment.

The push-buttons can be operated to perform a number of functions. For example, actuation of a push-button may command the system to reset the zero reference point from which the relative position and attitude of the mouse 1 is measured. Additionally, the position and attitude attributes of the mouse 1 may be held constant, despite movement, while a push-button is depressed. The push-buttons may also function to indicate a desire by the operator to indicate a point, line, surface, volume or motion in space, or to select a menu item, cursor position or particular attitude.

Numerous other examples of the function of push-buttons 4, 5, and 6 could be given, and although no additional examples are noted here, additional functions of the push-buttons will be apparent to the skilled artisan. Furthermore, additional embodiments of the mouse 1 may include more or fewer than three push-buttons. A motion sensing assembly 2 and an ancillary components area 3 are indicated by phantom dashed lines in FIGS. 1(a)-1(c). In an alternative embodiment of the present invention, components included in the ancillary components area 3, as described herein, may be contained within a separate chassis remote from the mouse 1. In such an embodiment, the mouse 1 would contain only the motion sensing assembly 2.

Turning now to FIGS. 2(a)-2(c), the motion sensing assembly 2 includes conventional rotational rate sensors 14, 15 and 16, and accelerometers 17, 18 and 19 aligned on three orthogonal axes. Rotational rate sensor 14 and accelerometer 17 are aligned on a first axis; rotational rate sensor 15 and accelerometer 18 are aligned on a second axis perpendicular to the first axis; and rotational rate sensor 16 and accelerometer 19 are aligned on a third axis perpendicular to both the first axis and the second axis.

By mounting the rotational rate sensors 14, 15 and 16 and the accelerometers 17, 18 and 19 in the foregoing manner, the motion sensing assembly 2 simultaneously senses all six degrees of motion of the translation along, and rotation about, three mutually perpendicular axes of a Cartesian coordinate system. Translational accelerations are sensed by the accelerometers 17, 18 and 19, which detect acceleration along the X, Y and Z axes, respectively, and produce an analog signal representative of the acceleration. Similarly, rotational angular rates are detected by the rotational rate sensors 14, 15 and 16, which sense rotational rates about the X, Y and Z axes, respectively, and produce an analog signal representative of the rotational rate.

The motion sensing assembly 2 is referred to numerous times below. For simplification, the general term "sensors" shall be used herein to describe the motion sensing assembly 2 or its individual components described above.

FIG. 3 is a system block diagram which depicts major functional elements included in the motion sensing assembly 2 and ancillary components area 3 of the mouse 1 of FIG. 1, and the wireless interface 21 between the mouse transceivers 7-12 and a transceiver 22 associated with a computer 23. Sensor signals from the individual components of the motion sensing assembly 2 are provided to circuits in the ancillary components area 3 which perform processing of the sensor signals. Information regarding the sensor signals is then provided to mouse transceivers 7-12 for communication to the computer transceiver 22. Functions performed within the ancillary components area 3, labeled analog to digital signal processing, numeric integration, and vector processing, will be further described below.

FIG. 4 is a block diagram of the preferred embodiment of the mouse 1 of FIG. 1. Sensor interface circuits 31 provide electrical power to the components of the motion sensing assembly 2 and bias and control circuits to ensure proper operation of the sensor components. Additionally, sensor interface circuits 31 preferably include amplification circuitry for amplifying sensor signals from the motion sensing assembly 2.

Amplified sensor signals are provided to analog-to-digital converter 32 which converts the amplified analog sensor signals into corresponding quantized digital signals. The analog-to-digital converter 32 preferably includes a single channel which multiplexes sequentially through the respective sensor signals. Alternatively, the analog-to-digital converter may include six channels, with individual channels dedicated to individual sensor signals.

The digitized sensor signals from the analog-to-digital converter 32 are stored in a first-in-first-out (FIFO) buffer memory 33 for further processing by processing element 34. The FIFO buffer memory 33 is preferably organized as a single memory stack into which each of the respective digitized sensor signals is stored in sequential order. The FIFO buffer memory 33 may alternatively be organized with six separate memory areas corresponding to the six sensor signals. In other words, a separate FIFO stack is provided for digitized sensor signals from each of the six motion sensing components of the motion sensing assembly 2. By providing the FIFO buffer memory 33, the mouse 1 compensates for varying processing speeds in processing element 34 and eliminates the need for elaborate synchronization schemes controlling the operation between the "front-end" sensor interface 31 and analog-to-digital converter 32, and the "back-end" processing element 34 and computer interface control 36.
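The single-channel arrangement can be sketched in software as a multiplexer that visits the six sensor signals in turn, quantizes each one, and pushes the result into a single FIFO. This is only an illustration: the 12-bit resolution, the 5 V reference, the channel names, the buffer capacity and the drop-oldest overflow policy are all assumptions that do not come from the text.

```python
from collections import deque

# Hypothetical channel order for the three accelerometers and three rate sensors.
SENSOR_CHANNELS = ("accel_x", "accel_y", "accel_z", "rate_x", "rate_y", "rate_z")

def quantize(voltage, v_ref=5.0, bits=12):
    """Map a voltage in [0, v_ref] to an integer code, clamping out-of-range input."""
    full_scale = (1 << bits) - 1
    clamped = min(max(voltage, 0.0), v_ref)
    return round(clamped / v_ref * full_scale)

class SensorFifo:
    """Single first-in-first-out buffer shared by all six sensor signals."""

    def __init__(self, capacity=256):
        # Assumed policy: when full, the oldest sample is discarded.
        self._samples = deque(maxlen=capacity)

    def push(self, channel, code):
        self._samples.append((channel, code))

    def pop(self):
        """Return the oldest (channel, code) pair, or None when empty."""
        return self._samples.popleft() if self._samples else None

    def __len__(self):
        return len(self._samples)

def scan_once(read_analog, fifo):
    """One multiplexer pass: convert each channel in sequence and store it."""
    for channel in SENSOR_CHANNELS:
        fifo.push(channel, quantize(read_analog(channel)))

fifo = SensorFifo()
scan_once(lambda channel: 2.5, fifo)   # stand-in for real analog inputs
```

Because the producer (`scan_once`) and the consumer (`pop`) only meet at the buffer, the consumer can fall behind for a while without losing samples, which is the decoupling the paragraph above attributes to the FIFO.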

The processing element 34 is preferably a conventional microprocessor having a suitably programmed read-only memory. The processing element 34 may also be a suitably programmed conventional digital signal processor. Briefly, the processing element 34 periodically reads and numerically integrates the digitized sensor signals from the FIFO buffer memory 33. Three-dimensional motion, position and attitude values are continually computed in a known manner based on the most recent results of the numerical integration of the sensor data, the current zero position and attitude information, and the current processing configuration established by push-button or computer commands. Information processed by the processing element 34 is stored in dual-ported memory 38 upon completion of processing, by known techniques such as direct memory access. This information may then be sent via interface ports 7-12 to the computer by a computer interface control 36.

Numerical integration is performed continually on the digital acceleration and rate values provided by the sensors. Numeric integration of digital values allows for a significant dynamic range which is limited only by the amount of memory and processing time allocated to the task. In addition to numerical integration, the processing element 34 computes and applies correction values which compensate for the effects of gravity and the translational effects of rotation on the linear sensors, i.e., both gravitational accelerations and tangential velocities. Additionally, by establishing a threshold level for motion signals above which the signals are attributable to operator movements of the mouse 1, the processing element 34 effectively reduces or eliminates errors which might be induced by sensor drift, earth rotational effects and low level noise signals that may be present when an operator is not moving the mouse.
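The thresholding idea can be illustrated with a small sketch. It is an assumption-laden example rather than the patent's method: it treats any sample whose magnitude is below a fixed threshold as zero before integrating, and the threshold value and function names are hypothetical. Gravity and tangential-velocity corrections are not shown.

```python
def apply_threshold(value, threshold):
    """Pass a motion signal through only if its magnitude reaches the threshold."""
    return value if abs(value) >= threshold else 0.0

def integrate_with_threshold(samples, dt, threshold):
    """Integrate a single-axis signal, ignoring sub-threshold drift and noise."""
    total = 0.0
    for sample in samples:
        total += apply_threshold(sample, threshold) * dt
    return total

# Hypothetical signal: low-level noise around two deliberate 0.5 m/s^2 samples.
noisy = [0.01, -0.02, 0.5, 0.5, 0.015]
velocity = integrate_with_threshold(noisy, dt=0.1, threshold=0.05)
```

Only the two 0.5 m/s^2 samples contribute, so the result is close to 0.1 m/s; without the threshold the small noise terms would also accumulate in the integral.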

The processing element 34 responds to external commands by changing the processing configuration of the calculation processes. For example, in one processing mode the sensor value for any axis, or axes, will be ignored upon computation of the three-dimensional motion and position values. These external commands would normally be initiated by an operator actuating one of the three push-button switches 4, 5, or 6. The computer interface control 36 detects actuation of the push-buttons 4-6 and sends corresponding command data to the computer. Upon receipt of the command data, the computer returns command data to the computer interface control 36 which, in turn, provides command data to the processing element 34 to change the processing configuration. Another external command initiated from the push-buttons may result in the processing element 34 clearing all numeric integration accumulators and setting initial condition registers to zero, thereby causing future position and attitude computations to be relative to a new physical location and attitude of the mouse.
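A compact way to picture these processing configurations is a small state object with an ignore-axis set, a hold flag and a reset operation. The class below is a hypothetical sketch: the attribute names are invented for illustration, and the choice to keep integrating velocity while position is held is an assumption, since the text does not say how hold interacts with the accumulators.

```python
class MotionState:
    """Accumulators plus the processing configuration set by external commands."""

    def __init__(self):
        self.velocity = [0.0, 0.0, 0.0]
        self.position = [0.0, 0.0, 0.0]
        self.ignored_axes = set()   # axis indices (0=X, 1=Y, 2=Z) to leave out
        self.hold = False           # True while a hold push-button is depressed

    def reset(self):
        """Clear the accumulators so later values are relative to a new origin."""
        self.velocity = [0.0, 0.0, 0.0]
        self.position = [0.0, 0.0, 0.0]

    def update(self, accel, dt):
        """Integrate one (ax, ay, az) sample under the current configuration."""
        for axis in range(3):
            if axis in self.ignored_axes:
                continue
            self.velocity[axis] += accel[axis] * dt
            if not self.hold:       # assumed: hold freezes position only
                self.position[axis] += self.velocity[axis] * dt

state = MotionState()
state.ignored_axes.add(2)             # command: ignore the Z axis
state.update((1.0, 0.0, 5.0), dt=0.1)
```

After this update the X position is about 0.01 while Z stays at zero, because the Z sample was ignored. Setting `state.hold = True` freezes the position on later updates, and `state.reset()` zeroes everything.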

Utilization of the dual-ported memory 38 permits the external computer to be provided position updates in near real-time, since the processing element 34 is provided concurrent access to the memory. In this manner, the processing element 34 may make the current position, attitude and motion values available to the external computer as soon as new computations are completed. Since current relative position, attitude and motion are continually computed, as opposed to being computed upon request, this approach ensures that the most recent


- Like Kind Card Game (US Patent 6193235)Document12 pagesLike Kind Card Game (US Patent 6193235)PriorSmartNo ratings yet

- Multicasting Method and Apparatus (US Patent 6434622)Document46 pagesMulticasting Method and Apparatus (US Patent 6434622)PriorSmartNo ratings yet

- Like Kind Money Board Table Game (US Patent 6186505)Document11 pagesLike Kind Money Board Table Game (US Patent 6186505)PriorSmartNo ratings yet

- Intelligent User Interface Including A Touch Sensor Device (US Patent 8288952)Document9 pagesIntelligent User Interface Including A Touch Sensor Device (US Patent 8288952)PriorSmartNo ratings yet

- Cell Regulatory Genes, Encoded Products, and Uses Related Thereto (US Patent 7030227)Document129 pagesCell Regulatory Genes, Encoded Products, and Uses Related Thereto (US Patent 7030227)PriorSmartNo ratings yet

- Method and Apparatus For Retrieving Data From A Network Using Linked Location Identifiers (US Patent 6226655)Document22 pagesMethod and Apparatus For Retrieving Data From A Network Using Linked Location Identifiers (US Patent 6226655)PriorSmartNo ratings yet

- User Interface With Proximity Sensing (US Patent 8035623)Document15 pagesUser Interface With Proximity Sensing (US Patent 8035623)PriorSmartNo ratings yet

- Wine Cellar Alarm System (US Patent 8710985)Document11 pagesWine Cellar Alarm System (US Patent 8710985)PriorSmartNo ratings yet

- Advance Products & Systems v. CCI Piping SystemsDocument5 pagesAdvance Products & Systems v. CCI Piping SystemsPriorSmartNo ratings yet

- Casing Spacer (US Patent 6736166)Document10 pagesCasing Spacer (US Patent 6736166)PriorSmartNo ratings yet

- High-Speed Serial Linking Device With De-Emphasis Function and The Method Thereof (US Patent 7313187)Document10 pagesHigh-Speed Serial Linking Device With De-Emphasis Function and The Method Thereof (US Patent 7313187)PriorSmartNo ratings yet

- Casino Bonus Game Using Player Strategy (US Patent 6645071)Document3 pagesCasino Bonus Game Using Player Strategy (US Patent 6645071)PriorSmartNo ratings yet

- TracBeam v. AppleDocument8 pagesTracBeam v. ApplePriorSmartNo ratings yet

- Modern Telecom Systems LLCDocument19 pagesModern Telecom Systems LLCPriorSmartNo ratings yet

- Eckart v. Silberline ManufacturingDocument5 pagesEckart v. Silberline ManufacturingPriorSmartNo ratings yet

- Senju Pharmaceutical Et. Al. v. Metrics Et. Al.Document12 pagesSenju Pharmaceutical Et. Al. v. Metrics Et. Al.PriorSmartNo ratings yet

- VIA Technologies Et. Al. v. ASUS Computer International Et. Al.Document18 pagesVIA Technologies Et. Al. v. ASUS Computer International Et. Al.PriorSmartNo ratings yet

- Richmond v. Creative IndustriesDocument17 pagesRichmond v. Creative IndustriesPriorSmartNo ratings yet

- Senju Pharmaceutical Et. Al. v. Metrics Et. Al.Document12 pagesSenju Pharmaceutical Et. Al. v. Metrics Et. Al.PriorSmartNo ratings yet

- Perrie v. PerrieDocument18 pagesPerrie v. PerriePriorSmartNo ratings yet

- ATEN International v. Uniclass Technology Et. Al.Document14 pagesATEN International v. Uniclass Technology Et. Al.PriorSmartNo ratings yet

- Sun Zapper v. Devroy Et. Al.Document13 pagesSun Zapper v. Devroy Et. Al.PriorSmartNo ratings yet

- Merck Sharp & Dohme v. Fresenius KabiDocument11 pagesMerck Sharp & Dohme v. Fresenius KabiPriorSmartNo ratings yet

- Mcs Industries v. Hds TradingDocument5 pagesMcs Industries v. Hds TradingPriorSmartNo ratings yet

- Merck Sharp & Dohme v. Fresenius KabiDocument10 pagesMerck Sharp & Dohme v. Fresenius KabiPriorSmartNo ratings yet

- TracBeam v. T-Mobile Et. Al.Document9 pagesTracBeam v. T-Mobile Et. Al.PriorSmartNo ratings yet

- GRQ Investment Management v. Financial Engines Et. Al.Document12 pagesGRQ Investment Management v. Financial Engines Et. Al.PriorSmartNo ratings yet

- Dok Solution v. FKA Distributung Et. Al.Document99 pagesDok Solution v. FKA Distributung Et. Al.PriorSmartNo ratings yet

- Multiplayer Network Innovations v. Konami Digital EntertainmentDocument6 pagesMultiplayer Network Innovations v. Konami Digital EntertainmentPriorSmartNo ratings yet

- Shenzhen Liown Electronics v. Luminara Worldwide Et. Al.Document10 pagesShenzhen Liown Electronics v. Luminara Worldwide Et. Al.PriorSmartNo ratings yet

- Determination of Hydroxymethylfurfural (HMF) in Honey Using The LAMBDA SpectrophotometerDocument3 pagesDetermination of Hydroxymethylfurfural (HMF) in Honey Using The LAMBDA SpectrophotometerVeronica DrgNo ratings yet

- Interceptor Specifications FinalDocument7 pagesInterceptor Specifications FinalAchint VermaNo ratings yet

- Smashing HTML5 (Smashing Magazine Book Series)Document371 pagesSmashing HTML5 (Smashing Magazine Book Series)tommannanchery211No ratings yet

- -4618918اسئلة مدني فحص التخطيط مع الأجوبة من د. طارق الشامي & م. أحمد هنداويDocument35 pages-4618918اسئلة مدني فحص التخطيط مع الأجوبة من د. طارق الشامي & م. أحمد هنداويAboalmaail Alamin100% (1)

- Fabric DefectsDocument30 pagesFabric Defectsaparna_ftNo ratings yet

- How Do I Predict Event Timing Saturn Nakshatra PDFDocument5 pagesHow Do I Predict Event Timing Saturn Nakshatra PDFpiyushNo ratings yet

- Module 17 Building and Enhancing New Literacies Across The Curriculum BADARANDocument10 pagesModule 17 Building and Enhancing New Literacies Across The Curriculum BADARANLance AustriaNo ratings yet

- Detailed Lesson Plan in Mathematics (Pythagorean Theorem)Document6 pagesDetailed Lesson Plan in Mathematics (Pythagorean Theorem)Carlo DascoNo ratings yet

- Promotion of Coconut in The Production of YoghurtDocument4 pagesPromotion of Coconut in The Production of YoghurtԱբրենիկա ՖերլինNo ratings yet

- Standalone Financial Results, Limited Review Report For December 31, 2016 (Result)Document4 pagesStandalone Financial Results, Limited Review Report For December 31, 2016 (Result)Shyam SunderNo ratings yet

- New KitDocument195 pagesNew KitRamu BhandariNo ratings yet

- 20150714rev1 ASPACC 2015Document22 pages20150714rev1 ASPACC 2015HERDI SUTANTONo ratings yet

- Award Presentation Speech PDFDocument3 pagesAward Presentation Speech PDFNehal RaiNo ratings yet

- List of Practicals Class Xii 2022 23Document1 pageList of Practicals Class Xii 2022 23Night FuryNo ratings yet

- A List of 142 Adjectives To Learn For Success in The TOEFLDocument4 pagesA List of 142 Adjectives To Learn For Success in The TOEFLchintyaNo ratings yet

- Service M5X0G SMDocument98 pagesService M5X0G SMbiancocfNo ratings yet

- Duties and Responsibilities - Filipino DepartmentDocument2 pagesDuties and Responsibilities - Filipino DepartmentEder Aguirre Capangpangan100% (2)

- STAB 2009 s03-p1Document16 pagesSTAB 2009 s03-p1Petre TofanNo ratings yet

- Chapter 8 - Current Electricity - Selina Solutions Concise Physics Class 10 ICSE - KnowledgeBoatDocument123 pagesChapter 8 - Current Electricity - Selina Solutions Concise Physics Class 10 ICSE - KnowledgeBoatskjNo ratings yet

- Electronic Waste Management in Sri Lanka Performance and Environmental Aiudit Report 1 EDocument41 pagesElectronic Waste Management in Sri Lanka Performance and Environmental Aiudit Report 1 ESupun KahawaththaNo ratings yet

- Using MonteCarlo Simulation To Mitigate The Risk of Project Cost OverrunsDocument8 pagesUsing MonteCarlo Simulation To Mitigate The Risk of Project Cost OverrunsJancarlo Mendoza MartínezNo ratings yet

- Sample Paper Book StandardDocument24 pagesSample Paper Book StandardArpana GuptaNo ratings yet

- Research in International Business and Finance: Huizheng Liu, Zhe Zong, Kate Hynes, Karolien de Bruyne TDocument13 pagesResearch in International Business and Finance: Huizheng Liu, Zhe Zong, Kate Hynes, Karolien de Bruyne TDessy ParamitaNo ratings yet

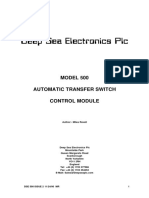

- Deep Sea 500 Ats ManDocument18 pagesDeep Sea 500 Ats ManLeo Burns50% (2)

- Python Programming Laboratory Manual & Record: Assistant Professor Maya Group of Colleges DehradunDocument32 pagesPython Programming Laboratory Manual & Record: Assistant Professor Maya Group of Colleges DehradunKingsterz gamingNo ratings yet

- TSR 9440 - Ruined KingdomsDocument128 pagesTSR 9440 - Ruined KingdomsJulien Noblet100% (15)

- Characteristics: Our in Vitro IdentityDocument4 pagesCharacteristics: Our in Vitro IdentityMohammed ArifNo ratings yet

- 2002 CT Saturation and Polarity TestDocument11 pages2002 CT Saturation and Polarity Testhashmishahbaz672100% (1)