411 Pessimism and The Maximin and Minimax Regret Rules

Uploaded by Kevin Kimani

Topic 4: Decision Analysis
Module 4.1: Traditional Decision Theory
Lecture 4.1.1: Pessimism and the maximin and minimax regret rules

INTRODUCTION

Decision theory pertains to human decision making in a world of incomplete information and incomplete human control over events. Decision theory posits two players: a cognitive human and a randomizing nature. The human, called the decision maker, performs analyses, makes calculations, and cognitively decides upon a course of action in an effort to optimize his or her own welfare. The metaphorical nature is non-cognitive, does not perform analyses or make calculations, and does not choose courses of action in any self-interested way. Rather, nature blithely selects courses of action in a purely probabilistic way.

Decision Problems

The two fundamental concepts of decision theory are states of nature and acts. States of nature are under the control of nature and beyond the control of the decision maker, and are probabilistically selected by nature. Acts are under the control of the decision maker, and any one of the available acts can be selected by the decision maker. Further, decision theory presumes that the problem is presented to the decision maker, i.e., that the problem itself, like the states of nature, is beyond the control of the decision maker.

A decision problem is represented as a pair <S,A> composed of a set S of states of nature and a set A of acts. So specified, <S,A> is a decision problem under uncertainty. If the decision maker has a probability system over the states, then <S,A> is a decision problem under risk.

Decision Makers

Decision theory posits that the human decision maker brings beliefs and preferences to the resolution of a decision problem. Specifically, the theory presumes that the decision maker possesses a probability system that captures his or her (partial) beliefs about nature's selection of states, a belief system about the outcomes accruing to the performance of the acts in the various states of nature, and a preference structure over the outcomes.
Thus, the decision maker is a triple <P,F,U> composed of a probability measure P, an outcome mapping F, and a utility function U. The probability measure P is defined over the set of states of nature, and captures the decision maker's view of the process whereby the states are selected by nature. The outcome mapping F is defined on the Cartesian product of the states of nature and the acts, and presents the outcome resulting from performing each act in each of the states of nature. Thus, the outcome mapping F generates a new set O of outcomes. The utility function U is defined over the set O of outcomes and represents the decision maker's preferences over the outcomes.

In what follows we will consider both decision making under uncertainty and decision making under risk. We will treat the former by simply ignoring the decision maker's probability system, and we will treat the latter by incorporating the decision maker's probability system. The focus of this course, however, is on decision making under risk.

Decision Making

Decision making is a two-step process. First, the acts in A are ordered; second, the best act is selected. Typically, the first step is completed by assigning a number to each act, and then using the complete ordering property of the real numbers to order the acts. If the outcomes are goods, like gains, income, etc., then the best act is the act with the highest number. Contrariwise, if the outcomes are bads, like losses, costs, etc., then the best act is the act with the smallest number.

Decision Rules

Decision rules are composed of two commands. The first command tells the user how to assign numbers to acts; the second command tells the user how to choose among the numbers assigned by the first command. Decision rules are designed to reflect some human attitude toward decision making. The rules considered here reflect two forms of pessimism and general rationality.

In what follows we use a running example. Consider a very simple decision problem where there are two states and two acts, and where the payoffs are given in the following table:

VALUE   s1    s2
a1      100   -50
a2      0     20
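The two-command structure of a decision rule can be sketched in Python. This is a minimal illustration rather than part of the lecture: the names `PAYOFFS`, `choose_act`, `score`, and `outcomes_are_goods` are hypothetical, and the dictionary simply encodes the running example with acts as rows and states as columns.

```python
# Running example: payoff of each act (row) in each state of nature (column).
# The dictionary layout and all names here are illustrative assumptions.
PAYOFFS = {
    "a1": {"s1": 100, "s2": -50},
    "a2": {"s1": 0, "s2": 20},
}


def choose_act(table, score, outcomes_are_goods=True):
    """Apply a two-command decision rule to a payoff table.

    Command 1: `score` assigns one number to each act from that act's row.
    Command 2: pick the highest number for goods, the smallest for bads.
    """
    assigned = {act: score(row.values()) for act, row in table.items()}
    pick = max if outcomes_are_goods else min
    best_act = pick(assigned, key=assigned.get)
    return best_act, assigned


if __name__ == "__main__":
    # One possible first command: score each act by its worst payoff.
    print(choose_act(PAYOFFS, min))
```

Passing the scoring command as an argument keeps the two commands separate, so different rules differ only in the function supplied and in whether the outcomes are treated as goods or bads.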

The Maximin Value Rule

One of the most popular rules for decision making under uncertainty is based on simple pessimism. The rule, called the maximin value rule, is a rational response in a world where the metaphorical nature is out to get the decision maker. Presuming the payoffs reflect a good, the maximin value rule requires the user to first assign to each act the minimum value that act can receive, and second, to choose the act with the maximum assigned value. The foregoing example yields the following:

First step: the numbers assigned to the acts are -50 and 0, respectively, since these are the worst payoffs for each act.
Second step: select act #2 because 0 is the larger of the two assigned numbers.

In tabular form, we have the following:

VALUE   s1    s2    Min Value   Max Min Value
a1      100   -50   -50
a2      0     20    0           0
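The two steps of the maximin value rule can be reproduced with a short Python sketch. The function name `maximin_value` and the list-per-act layout are assumptions made for illustration. The payoffs are treated as goods, as in the text.

```python
def maximin_value(payoffs):
    """Return (chosen act, minimum value of each act) under the maximin value rule."""
    # First step: assign to each act its minimum (worst) payoff.
    min_value = {act: min(row) for act, row in payoffs.items()}
    # Second step: choose the act whose assigned value is largest.
    chosen = max(min_value, key=min_value.get)
    return chosen, min_value


# Running example; each list holds the payoffs in states s1 and s2.
payoffs = {"a1": [100, -50], "a2": [0, 20]}
chosen, min_value = maximin_value(payoffs)
print(min_value)  # {'a1': -50, 'a2': 0}
print(chosen)     # a2
```

If two acts share the same minimum value, this sketch returns whichever appears first in the dictionary. The lecture does not specify a tie-breaking convention.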

The maximin value rule reflects a kind of pessimism wherein the decision maker views nature as being "out to get me," and thereby minimizes the worst that nature can inflict. Put differently, the maximin value rule reflects a kind of pessimism wherein the decision maker always minimizes the downside risk. This kind of pessimism is often observed among people who survived the Great Depression.

The Minimax Regret Rule

Another popular rule for decision making under uncertainty is also based on pessimism. Called the minimax regret rule, this rule attempts to model pessimism with more sophistication than the maximin value rule. The maximin value rule assigns a value to each act without considering the values accruing to the other acts. The minimax regret rule avoids this problem by replacing the payoffs with regret values. The latter, often called opportunity costs, represent regret in the sense of lost opportunities. Specifically, the regret value that replaces a payoff is the distance between that payoff and the best payoff possible in the relevant state of nature. The payoff table

VALUE   s1    s2
a1      100   -50
a2      0     20

yields the regret table

REGRET  s1    s2
a1      0     70
a2      100   0
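The conversion from payoffs to regrets can be sketched as follows. The function name `regret_table` and the data layout are illustrative assumptions, and the sketch presumes the payoffs are goods, so that the best payoff in a state is the largest one.

```python
def regret_table(payoffs):
    """Replace each payoff with its regret: best payoff in that state minus the payoff."""
    n_states = len(next(iter(payoffs.values())))
    # Best payoff attainable in each state of nature (column-wise maximum).
    best = [max(row[j] for row in payoffs.values()) for j in range(n_states)]
    return {
        act: [best[j] - row[j] for j in range(n_states)]
        for act, row in payoffs.items()
    }


# Running example; each list holds the payoffs in states s1 and s2.
payoffs = {"a1": [100, -50], "a2": [0, 20]}
print(regret_table(payoffs))  # {'a1': [0, 70], 'a2': [100, 0]}
```

Every column of a regret table built this way contains at least one zero, because the best act in each state has nothing to regret.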

The regret values for the first state of nature (s1 = your ticket wins) are calculated as follows:

0 = 100 - 100
100 = 100 - 0

The regret values for the second state of nature (s2 = your ticket does not win) are calculated as follows:

70 = 20 - (-50)
0 = 20 - 20

The minimax regret rule requires the user to first assign to each act the maximum regret that act can receive, and second, to choose the act with the minimum assigned regret value. The foregoing example yields the following:

First step: the numbers assigned to the acts are 70 and 100, respectively, since these are the maximum regret values for each act.
Second step: select act #1 because 70 is the smaller of the two assigned numbers.

In tabular form, we have the following:

REGRET  s1    s2    Max Regret   Min Max Regret
a1      0     70    70           70
a2      100   0     100
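Given a regret table, the two steps of the minimax regret rule can be sketched in Python. The function name `minimax_regret` is a hypothetical choice, and the regret values are entered directly from the table above.

```python
def minimax_regret(regrets):
    """Return (chosen act, maximum regret of each act) under the minimax regret rule."""
    # First step: assign to each act its maximum (worst) regret.
    max_regret = {act: max(row) for act, row in regrets.items()}
    # Second step: choose the act whose assigned regret is smallest.
    chosen = min(max_regret, key=max_regret.get)
    return chosen, max_regret


# Regret table of the running example; each list holds the regrets in s1 and s2.
regrets = {"a1": [0, 70], "a2": [100, 0]}
chosen, max_regret = minimax_regret(regrets)
print(max_regret)  # {'a1': 70, 'a2': 100}
print(chosen)      # a1
```

On the running example the two rules disagree: the maximin value rule selects act #2, while the minimax regret rule selects act #1.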

The minimax regret rule captures the behavior of individuals who spend their post-decision time regretting their choices. These are the individuals who spend their time saying "I should have done this" and "I should have done that."

PROBLEMS:

Find the maximin value and minimax regret acts for the following payoff table:

VALUE    state 1   state 2   state 3   state 4
act 1    -100      -50       50        100
act 2    -50       -90       0         50
act 3    10        50        -80       -50
act 4    200       100       -100      -200

Find the minimax cost and minimax regret acts for the following cost table:

COST      act 1   act 2   act 3
state 1   300     500     600
state 2   400     700     300
state 3   100     600     900

Text: Ch. 10, #16

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5814)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1092)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (845)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (897)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (540)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (348)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (822)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Maverick Men: The True Story Behind The Videos (FREE TEASER)Document25 pagesMaverick Men: The True Story Behind The Videos (FREE TEASER)Anthony DiFiore100% (1)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- TOEFL Diagnostic TestDocument47 pagesTOEFL Diagnostic TestLilian G. Romero L.No ratings yet

- University: Adama Science and TechnologyDocument23 pagesUniversity: Adama Science and TechnologyTesfuGebreslassieNo ratings yet

- MidtermDocument3 pagesMidtermapi-272864612No ratings yet

- Master Munaqasyah 15 KolomDocument17 pagesMaster Munaqasyah 15 KolomAch. Fikri FausiNo ratings yet

- Functionality Test CasesDocument20 pagesFunctionality Test CasesNitin PatelNo ratings yet

- ZXR10 M6000 (V1.00.30) Carrier-Class Router Configuration Guide (IPv4 Multicast)Document122 pagesZXR10 M6000 (V1.00.30) Carrier-Class Router Configuration Guide (IPv4 Multicast)Lucho BustamanteNo ratings yet

- Debian Keyring ProblemDocument2 pagesDebian Keyring Problemgabbel1986No ratings yet

- Sources of The British ConstitutionDocument4 pagesSources of The British ConstitutionFareed KhanNo ratings yet

- Official History of Improved Order of RedmenDocument672 pagesOfficial History of Improved Order of RedmenGiniti Harcum El BeyNo ratings yet

- Avoiding Shifts in Person, Number and TenseDocument3 pagesAvoiding Shifts in Person, Number and Tenseteachergrace1225No ratings yet

- Template For Request To Additional Shs Program OfferingDocument7 pagesTemplate For Request To Additional Shs Program OfferingJerome BelangoNo ratings yet

- Basic Suturing and Wound Management: Fakultas Kedokteran Universitas DiponegoroDocument77 pagesBasic Suturing and Wound Management: Fakultas Kedokteran Universitas Diponegoropranoto jaconNo ratings yet

- John McDowell, Wittgenstein On Following A Rule', Synthese 58, 325-63, 1984.Document40 pagesJohn McDowell, Wittgenstein On Following A Rule', Synthese 58, 325-63, 1984.happisseiNo ratings yet

- Delta Dentists Near Nyc ApartmentDocument50 pagesDelta Dentists Near Nyc ApartmentSteve YarnallNo ratings yet

- Macro Economics-1 PDFDocument81 pagesMacro Economics-1 PDFNischal Singh AttriNo ratings yet

- Credit Transactions: Group 1Document46 pagesCredit Transactions: Group 1Joovs Joovho100% (1)

- Unruh 2Document7 pagesUnruh 2amanNo ratings yet

- Heart Saver Cpr+Aed - HetaDocument2 pagesHeart Saver Cpr+Aed - HetaCharles JohnsonNo ratings yet

- The Role of Language in Childrens Cognitive Development Education EssayDocument9 pagesThe Role of Language in Childrens Cognitive Development Education EssayNa shNo ratings yet

- Membangun Teori Dan Konsep Asuhan Kebidanan Kehamilan, Persalinan, Nifas, BBL, KB Dan KesproDocument28 pagesMembangun Teori Dan Konsep Asuhan Kebidanan Kehamilan, Persalinan, Nifas, BBL, KB Dan KesproNikytaNo ratings yet

- Marketing Research Report:Netflix: Presented By: Rida Ahmad Ali Jawad Meeral ZiaDocument12 pagesMarketing Research Report:Netflix: Presented By: Rida Ahmad Ali Jawad Meeral ZiaAli JawadNo ratings yet

- Teaching NotesDocument13 pagesTeaching NotesmaxventoNo ratings yet

- Angleline and Angle RelationshipsDocument25 pagesAngleline and Angle Relationshipstrtl_1970No ratings yet

- Sentence Based WritingDocument3 pagesSentence Based WritingNari WulandariNo ratings yet

- MyTradeTV Glass and Glazing Digital Magazine July 2015Document148 pagesMyTradeTV Glass and Glazing Digital Magazine July 2015Lee ClarkeNo ratings yet

- Vu Re Lecture 16Document28 pagesVu Re Lecture 16ubaid ullahNo ratings yet

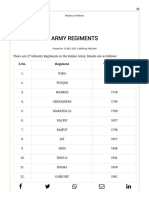

- Army Regiments: S.No. Regiment Year of RaisingDocument3 pagesArmy Regiments: S.No. Regiment Year of RaisingAsc47No ratings yet

- English in Use - Verbs - Wikibooks, Open Books For An Open World PDFDocument86 pagesEnglish in Use - Verbs - Wikibooks, Open Books For An Open World PDFRamedanNo ratings yet

- Jasleen-Resume Dec 62020Document3 pagesJasleen-Resume Dec 62020api-534110108No ratings yet