COMP2230 Introduction to

Algorithmics

Lecture 3

A/Prof Ljiljana Brankovic

Lecture Overview

Analysis of Algorithms - Text, Section 2.3

Analysis of Algorithms

Analysis of algorithms refers to estimating the time

and space required to execute the algorithm.

We shall be mostly concerned with time complexity.

Problems for which there exist polynomial time

algorithms are considered to have an efficient

solution.

Analysis of Algorithms

Problems can be:

Unsolvable

These problems are so hard that no algorithm can solve them. An example is the famous Halting problem.

Intractable

These problems can be solved, but for some instances

the time required is exponential and thus these

problems are not always solvable in practice, even

for small sizes of input.

Analysis of Algorithms

Problems can be (continued):

Problems of unknown complexity

These are, for example, NP-complete problems: no polynomial-time algorithm is known for them, nor have they been shown to be intractable.

Feasible (tractable) problems

For these problems there is a known polynomial time

algorithm.

Analysis of Algorithms

We shall not try to calculate the exact time needed to

execute an algorithm; this would be very difficult to do,

and it would depend on the implementation, platform, etc.

We shall only be estimating the time needed for algorithm

execution, as a function of the size of the input.

We shall estimate the running time by counting some

dominant instructions.

A barometer instruction is an instruction that is executed

at least as often as any other instruction in the

algorithm.

Analysis of Algorithms

Worst-case time is the maximum time needed to

execute the algorithm, taken over all inputs of size n.

Average-case time is the average time needed to

execute the algorithm, taken over all inputs of size n.

Best-case time is the minimum time needed to execute

the algorithm, taken over all inputs of size n.

Analysis of Algorithms

It is usually harder to analyse the average-case

behaviour of the algorithm than the best and the

worst case.

Which behaviour of the algorithm matters most depends on the application. For example, for an algorithm that controls a nuclear reactor, the worst-case analysis is clearly very important. On the other hand, for an algorithm that is used in a non-critical application and runs on a variety of different inputs, the average case may be more appropriate.

Example 2.3.1 Finding the Maximum

Value in an Array Using a While Loop

This algorithm finds the largest number in the array s[1], s[2], ... , s[n].

Input Parameter: s

Output Parameters: None

array_max_ver1(s)
{
    large = s[1]
    i = 2
    while (i ≤ s.last)
    {
        if (s[i] > large)
            large = s[i] // larger value found
        i = i + 1
    }
    return large
}

The input is an array of size n.

What can be used as a barometer ?

The number of iterations of while loop appears to be

a reasonable estimate of execution time - The loop is

always executed n-1 times.

We'll use the comparison i ≤ s.last as a barometer. It is evaluated n times: n-1 times with a true result, and once more when the loop exits.

The worst-case and the average-case (as well as the best-case!) times are the same and equal to n.
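The barometer count can be checked with a small sketch. This is an illustrative Python translation, not the textbook's code: it uses 0-based indexing, and the counter name `tests` is an assumption introduced here to count evaluations of the loop condition.

```python
def array_max_ver1(s):
    """Return (largest value, number of loop-condition tests) for a non-empty list."""
    large = s[0]
    i = 1
    tests = 0                      # hypothetical counter for the barometer
    while True:
        tests += 1                 # one evaluation of the loop condition
        if not (i < len(s)):       # 0-based form of "i <= s.last"
            break
        if s[i] > large:
            large = s[i]           # larger value found
        i += 1
    return large, tests


if __name__ == "__main__":
    print(array_max_ver1([3, 1, 4, 1, 5]))
```

For a list of length n the counter always ends at n, whatever the order of the values, which is why the best, average and worst cases coincide here.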

Suppose that the worst-case time of an algorithm is (for input of size n)

$$t(n) = 60n^2 + 5n + 1$$

For large n, t(n) grows as $60n^2$:

| n | $t(n) = 60n^2 + 5n + 1$ | $60n^2$ |
|---|---|---|
| 10 | 6,051 | 6,000 |
| 100 | 600,501 | 600,000 |
| 1000 | 60,005,001 | 60,000,000 |
| 10,000 | 6,000,050,001 | 6,000,000,000 |

The constant 60 is actually not important as it does not affect the growth of t(n) with n. We say that t(n) is of order $n^2$, and we write it as

$$t(n) = \Theta(n^2)$$

(t(n) is theta of $n^2$)

Let f and g be nonnegative functions on the positive integers.

Asymptotic upper bound: $f(n) = O(g(n))$ if there exist constants $C_1 > 0$ and $N_1$ such that $f(n) \le C_1 g(n)$ for all $n \ge N_1$.

We read $f(n) = O(g(n))$ as "f(n) is big oh of g(n)" or "f(n) is of order at most g(n)".

[Figure: f(n) and $C_1 g(n)$ plotted against n, with $N_1$ marked on the n axis.]

Asymptotic lower bound: $f(n) = \Omega(g(n))$ if there exist constants $C_2 > 0$ and $N_2$ such that $f(n) \ge C_2 g(n)$ for all $n \ge N_2$.

We read $f(n) = \Omega(g(n))$ as "f(n) is of order at least g(n)" or "f(n) is omega of g(n)".

[Figure: f(n) and $C_2 g(n)$ plotted against n, with $N_2$ marked on the n axis.]

Asymptotic tight bound: $f(n) = \Theta(g(n))$ if there exist constants $C_1, C_2 > 0$ and N such that $C_2 g(n) \le f(n) \le C_1 g(n)$ for all $n \ge N$.

Equivalently, $f(n) = \Theta(g(n))$ if $f(n) = O(g(n))$ and $f(n) = \Omega(g(n))$.

We read $f(n) = \Theta(g(n))$ as "f(n) is of order g(n)" or "f(n) is theta of g(n)".

Note that $n = O(2^n)$ but $n \ne \Theta(2^n)$.

[Figure: f(n), $C_1 g(n)$ and $C_2 g(n)$ plotted against n, with N marked on the n axis.]

Example

Let us now formally show that if $t(n) = 60n^2 + 5n + 1$ then $t(n) = \Theta(n^2)$.

Since $t(n) = 60n^2 + 5n + 1 \le 60n^2 + 5n^2 + n^2 = 66n^2$ for all $n \ge 1$, it follows that $t(n) = 60n^2 + 5n + 1 = O(n^2)$.

Since $t(n) = 60n^2 + 5n + 1 \ge 60n^2$ for all $n \ge 1$, it follows that $t(n) = 60n^2 + 5n + 1 = \Omega(n^2)$.

Since $t(n) = 60n^2 + 5n + 1 = O(n^2)$ and $t(n) = 60n^2 + 5n + 1 = \Omega(n^2)$, it follows that $t(n) = 60n^2 + 5n + 1 = \Theta(n^2)$.
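The two constants used in the argument above (60 for the lower bound, 66 for the upper bound) can be spot-checked numerically. The sketch below is only a sanity check over a finite range, not a proof; the helper name `bounds_hold` and its parameters are assumptions made for illustration.

```python
def t(n):
    """Worst-case time from the example: 60n^2 + 5n + 1."""
    return 60 * n**2 + 5 * n + 1


def bounds_hold(n_max, c_lower=60, c_upper=66):
    """Check c_lower*n^2 <= t(n) <= c_upper*n^2 for every n in 1..n_max."""
    return all(c_lower * n**2 <= t(n) <= c_upper * n**2
               for n in range(1, n_max + 1))


if __name__ == "__main__":
    print(t(10), bounds_hold(10_000))
```

Note that the upper bound is tight at n = 1, where both sides equal 66.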

Asymptotic Bounds for Some

Common Functions

Let $p(n) = a_k n^k + a_{k-1} n^{k-1} + \cdots + a_1 n + a_0$ be a nonnegative polynomial (that is, $p(n) \ge 0$ for all n) in n of degree k. Then $p(n) = \Theta(n^k)$.

$\log_b n = \Theta(\log_a n)$ (because $\log_b n = \log_a n / \log_a b$)

$\log n! = \Theta(n \log n)$ (because $\log n! = \log n + \log(n-1) + \cdots + \log 2 + \log 1$)

$\sum_{i=1}^{n} \frac{1}{i} = \Theta(\log n)$

Common Asymptotic Growth

Functions

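| Theta form | Name |
|---|---|
| $\Theta(1)$ | Constant |
| $\Theta(\log \log n)$ | Log log |
| $\Theta(\log n)$ | Log |
| $\Theta(n^c)$, $0 < c < 1$ | Sublinear |
| $\Theta(n)$ | Linear |
| $\Theta(n \log n)$ | n log n |
| $\Theta(n^2)$ | Quadratic |
| $\Theta(n^3)$ | Cubic |
| $\Theta(n^k)$, $k \ge 1$ | Polynomial |
| $\Theta(c^n)$, $c > 1$ | Exponential |
| $\Theta(n!)$ | Factorial |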

The running time of different algorithms on a processor performing one million high-level instructions per second (from Algorithm Design, by J. Kleinberg and E. Tardos).

| Input size n | $n$ | $n \log n$ | $n^2$ | $n^3$ | $1.5^n$ | $2^n$ | $n!$ |
|---|---|---|---|---|---|---|---|
| 10 | < 1 sec | < 1 sec | < 1 sec | < 1 sec | < 1 sec | < 1 sec | 4 sec |
| 30 | < 1 sec | < 1 sec | < 1 sec | < 1 sec | < 1 sec | 18 min | $10^{25}$ years |
| 50 | < 1 sec | < 1 sec | < 1 sec | < 1 sec | 11 min | 36 years | $>10^{25}$ years |
| 100 | < 1 sec | < 1 sec | < 1 sec | 1 sec | 12,892 years | $10^{17}$ years | $>10^{25}$ years |
| 1,000 | < 1 sec | < 1 sec | 1 sec | 18 min | $>10^{25}$ years | $>10^{25}$ years | $>10^{25}$ years |
| 10,000 | < 1 sec | < 1 sec | 2 min | 12 days | $>10^{25}$ years | $>10^{25}$ years | $>10^{25}$ years |
| 100,000 | < 1 sec | 2 sec | 3 hours | 32 years | $>10^{25}$ years | $>10^{25}$ years | $>10^{25}$ years |
| 1,000,000 | 1 sec | 20 sec | 12 days | 31,710 years | $>10^{25}$ years | $>10^{25}$ years | $>10^{25}$ years |

Properties of Big Oh

If $f(x) = O(g(x))$ and $h(x) = O(g'(x))$ (where $g'$ denotes a second function, not a derivative)

then $f(x) + h(x) = O(\max\{g(x), g'(x)\})$

and $f(x)\,h(x) = O(g(x)\,g'(x))$

If $f(x) = O(g(x))$ and $g(x) = O(h(x))$

then $f(x) = O(h(x))$


Examples

1. $5n^2 - 1000n + 8 = O(n^2)$? True

2. $5n^2 - 1000n + 8 = O(n^3)$? True

3. $5n^2 + 1000n + 8 = O(n)$? False: $n^2$ grows faster than n.

4. $n^n = O(2^n)$? False: $n^n$ grows faster than $2^n$.

5. If $f(n) = O(g(n))$, then $2^{f(n)} = O(2^{g(n)})$? False: here constants matter, as they become exponents! E.g., f(n) = 3n, g(n) = n, and $2^{3n} \ne O(2^n)$.

6. If $f(n) = O(g(n))$, then $g(n) = \Omega(f(n))$? True: if $f(n) \le C g(n)$ for $n \ge N$, then $g(n) \ge f(n)/C$ for $n \ge N$.


Examples

7. If $f(n) = \Theta(g(n))$ and $g(n) = \Theta(h(n))$, then $f(n) + g(n) = \Theta(h(n))$? True

8. If $f(n) = O(g(n))$, then $g(n) = O(f(n))$? False: e.g., f(n) = n, g(n) = $n^2$.

9. If $f(n) = \Theta(g(n))$, then $g(n) = \Theta(f(n))$? True

10. $f(n) + g(n) = \Theta(h(n))$ where $h(n) = \max\{f(n), g(n)\}$? True

11. $f(n) + g(n) = \Theta(h(n))$ where $h(n) = \min\{f(n), g(n)\}$? False: f(n) = n, g(n) = $n^2$, h(n) = n.


Examples: find theta notation for the following

12. $6n + 1 = \Theta(n)$

13. $2 \lg n + 4n + 3n \lg n = \Theta(n \lg n)$

14. $(n^2 + \lg n)(n + 1) / (n + n^2) = \Theta(n)$

15. for i=1 to 2n
        for j=1 to n
            x=x+1
    $= \Theta(n^2)$

16. i=n
    while (i>1) {
        x=x+1
        i=i/2
    }
    $= \Theta(\lg n)$

17. j=n
    while (j>1) {
        for i=1 to j
            x=x+1
        j=j/2
    }
    $= \Theta(n)$
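The three loop fragments (15 to 17) can be instrumented to count how many times x=x+1 runs. The following is a sketch under the assumption that i/2 and j/2 mean integer division; the function names `count_15`, `count_16` and `count_17` are made up for this illustration.

```python
def count_15(n):
    """Nested loops: outer runs 2n times, inner runs n times."""
    x = 0
    for i in range(1, 2 * n + 1):
        for j in range(1, n + 1):
            x += 1
    return x


def count_16(n):
    """Halving loop: i = n, n/2, n/4, ... while i > 1 (integer division assumed)."""
    x = 0
    i = n
    while i > 1:
        x += 1
        i //= 2
    return x


def count_17(n):
    """Inner loop of length j, with j halved each round (integer division assumed)."""
    x = 0
    j = n
    while j > 1:
        for i in range(1, j + 1):
            x += 1
        j //= 2
    return x


if __name__ == "__main__":
    for n in (16, 1024):
        print(n, count_15(n), count_16(n), count_17(n))
```

For n a power of two the counts are exactly $2n^2$, $\lg n$ and $2n - 2$, which agrees with the theta forms given above.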

Example: Towers of Hanoi

After creating the world, God set on Earth 3 diamond rods and 64 golden rings, all of different sizes. All the rings were initially on the first rod, in order of size, the smallest at the top. God also created a monastery nearby where the monks' task in life is to transfer all the rings onto the second rod; they are only allowed to move a single ring from one rod to another at a time, and a ring can never be placed on top of a smaller ring. According to the legend, when the monks have finished their task, the world will come to an end.

If the monks move one ring per second and never stop, it will take them more than 500,000 million years to finish the job (more than 25 times the estimated age of the universe!).

Example 2

When is $f(2n) = \Theta(f(n))$?

$f(n) = n^2$

Big O: $(2n)^2 \le 4n^2$, for all $n \ge 0$

Big Ω: $(2n)^2 \ge n^2$, for all $n \ge 0$

$f(n) = 2^n$

Big O: $2^{2n} \le C_1 2^n$, for all $n \ge n_0$ ???

$2^n \cdot 2^n \le C_1 2^n$ ???

NO!!! There is no constant $C_1$ such that $2^n \le C_1$ for all $n \ge n_0$.

Example: Towers of Hanoi

The following is a way to move 3 rings from rod 1 to rod

2.

Example: Towers of Hanoi

The following is an algorithm that moves m rings from

rod i to rod j.

Hanoi(m, i, j) {
    // Moves the m smallest rings from rod i to rod j
    if m > 0 then {
        Hanoi(m-1, i, 6-i-j)
        write i → j
        Hanoi(m-1, 6-i-j, j)
    }
}
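A runnable version of the procedure may help to see the move sequence for 3 rings. This is a sketch, assuming rods are numbered 1, 2, 3 (so that 6-i-j is the spare rod); collecting the moves in a list called `moves` instead of writing them out is a choice made here for illustration.

```python
def hanoi(m, i, j, moves=None):
    """Move the m smallest rings from rod i to rod j; return the list of (from, to) moves."""
    if moves is None:
        moves = []
    if m > 0:
        spare = 6 - i - j              # the rod that is neither i nor j
        hanoi(m - 1, i, spare, moves)  # clear the m-1 smaller rings out of the way
        moves.append((i, j))           # move ring m
        hanoi(m - 1, spare, j, moves)  # put the smaller rings back on top
    return moves


if __name__ == "__main__":
    for src, dst in hanoi(3, 1, 2):
        print(src, "->", dst)
```

Moving 3 rings from rod 1 to rod 2 takes 7 moves with this procedure.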

Example: Towers of Hanoi

Recurrence relation:

$$t(m) = \begin{cases} 0 & \text{if } m = 0 \\ 2t(m-1) + 1 & \text{otherwise} \end{cases}$$

Or, equivalently,

$$t_0 = 0, \qquad t_n = 2t_{n-1} + 1, \quad n > 0$$
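Iterating this recurrence gives the well-known closed form $t_n = 2^n - 1$, which is where the figure in the legend comes from. The sketch below evaluates the recurrence directly and converts $t_{64}$ seconds into years; the constant `SECONDS_PER_YEAR` (a 365.25-day year) is an assumption made here, not something the slides specify.

```python
def t(n):
    """Number of moves for n rings: t_0 = 0, t_n = 2*t_{n-1} + 1."""
    moves = 0
    for _ in range(n):
        moves = 2 * moves + 1
    return moves


SECONDS_PER_YEAR = 365.25 * 24 * 3600   # assumption: 365.25-day year


def years_at_one_move_per_second(n):
    """Time to finish n rings at one move per second, in years."""
    return t(n) / SECONDS_PER_YEAR


if __name__ == "__main__":
    print(t(3), t(64))
    print(f"{years_at_one_move_per_second(64):.3e} years")
```

For 64 rings this comes to roughly $5.8 \times 10^{11}$ years, consistent with the "more than 500,000 million years" quoted in the legend.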

Example 1

Show that for any real a and b, b > 0,

$$(n+a)^b = \Theta(n^b)$$

Big O: Show that there are constants $C_1$ and $n_0$ such that $(n+a)^b \le C_1 n^b$, for all $n \ge n_0$:

$$(n+a)^b \le (2n)^b = 2^b n^b \quad \text{for all } n \ge |a|$$

Big Ω: Show that there are constants $C_2$ and $n_0$ such that $(n+a)^b \ge C_2 n^b$, for all $n \ge n_0$:

$$(n+a)^b \ge (n/2)^b = (1/2)^b n^b \quad \text{for all } n \ge 2|a|$$

Solving Recurrence Relations

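Iteration (substitution): Example from the first week's lecture

$$C(n) = n + C(\lfloor n/2 \rfloor), \quad n > 1; \qquad C(1) = 0$$

Solution for $n = 2^k$, for some k:

$$\begin{aligned}
C(2^k) &= 2^k + C(2^{k-1}) \\
&= 2^k + 2^{k-1} + C(2^{k-2}) \\
&\;\;\vdots \\
&= 2^k + 2^{k-1} + \cdots + 2^1 + C(2^0) \\
&= 2^k + 2^{k-1} + \cdots + 2^1 + C(1) \\
&= 2^k + 2^{k-1} + \cdots + 2^1 + 0 \quad (\text{as } C(1) = 0) \\
&= 2^{k+1} - 2 = 2n - 2 = \Theta(n).
\end{aligned}$$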

Solving Recurrence Relations

Solving the same recurrence for any n:

$$C(n) = n + C(\lfloor n/2 \rfloor), \quad n > 1; \qquad C(1) = 0$$

Solution for $2^{k-1} \le n < 2^k$, for some k:

Since C(n) is an increasing function (prove it!):

$$C(2^{k-1}) \le C(n) < C(2^k)$$

$$C(2^k) = 2^{k+1} - 2, \qquad C(2^{k-1}) = 2^k - 2$$

$C(n) < C(2^k) = 2^{k+1} - 2 < 4n$, thus $C(n) = O(n)$.

$C(n) \ge C(2^{k-1}) = 2^k - 2 > n - 2 \ge n/2$ (the last step holds for $n \ge 4$), thus $C(n) = \Omega(n)$.

Thus $C(n) = \Theta(n)$.
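Both the exact value at powers of two and the two bounds for general n can be spot-checked by evaluating the recurrence. The sketch below assumes n/2 means integer (floor) division; it is a finite check over a range of n, not a substitute for the proof.

```python
def C(n):
    """C(1) = 0, C(n) = n + C(n // 2) for n > 1, evaluated iteratively."""
    total = 0
    while n > 1:
        total += n
        n //= 2          # floor division assumed for n/2
    return total


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5, 8, 1000):
        print(n, C(n))
```

For example C(8) = 8 + 4 + 2 = 14 = 2·8 - 2, and for every n tried the value stays strictly between n - 2 and 4n.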

Main Recurrence Theorem

(also known as Master Theorem)

Let a, b and k be integers satisfying $a \ge 1$, $b \ge 2$ and $k \ge 0$. In the following, n/b denotes either $\lfloor n/b \rfloor$ or $\lceil n/b \rceil$. In the case of the floor function the initial condition T(0) = u is given; and in the case of the ceiling function, the initial condition T(1) = u is given.

Recurrence Theorem

(also known as Master Theorem)

Upper Bound

If $T(n) \le aT(n/b) + f(n)$ and $f(n) = O(n^k)$ then

$$T(n) = \begin{cases} O(n^k) & \text{if } a < b^k \\ O(n^k \log n) & \text{if } a = b^k \\ O(n^{\log_b a}) & \text{if } a > b^k \end{cases}$$

The same theorem holds for Ω and Θ notations as well.

Recurrence Theorem

(also known as Master Theorem)

Lower Bound

If $T(n) \ge aT(n/b) + f(n)$ and $f(n) = \Omega(n^k)$ then

$$T(n) = \begin{cases} \Omega(n^k) & \text{if } a < b^k \\ \Omega(n^k \log n) & \text{if } a = b^k \\ \Omega(n^{\log_b a}) & \text{if } a > b^k \end{cases}$$

Recurrence Theorem

(also known as Master Theorem)

Tight Bound

If $T(n) = aT(n/b) + f(n)$ and $f(n) = \Theta(n^k)$ then

$$T(n) = \begin{cases} \Theta(n^k) & \text{if } a < b^k \\ \Theta(n^k \log n) & \text{if } a = b^k \\ \Theta(n^{\log_b a}) & \text{if } a > b^k \end{cases}$$

Example 1

Solve $c_n = n + c_{\lfloor n/2 \rfloor}$, $n > 1$; $c_1 = 0$

We have a = 1, b = 2, f(n) = n and k = 1.

Since $a < b^k$, $c_n = \Theta(n^k)$, and since k = 1, $c_n = \Theta(n)$.


Example 2

Solve $c_n = n + 2c_{\lceil n/2 \rceil}$, $n > 1$; $c_1 = 0$

We have a = 2, b = 2, f(n) = n and k = 1.

Since $a = b^k$, $c_n = \Theta(n^k \log n)$, and since k = 1, $c_n = \Theta(n \log n)$.


Example 3

Solve $c_n = n + 7c_{\lfloor n/4 \rfloor}$

We have a = 7, b = 4, f(n) = n and k = 1.

Since $a > b^k$, $c_n = \Theta(n^{\log_4 7})$.


Example 4

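Solve $c_n \le n^2 + c_{\lfloor n/2 \rfloor} + c_{\lceil n/2 \rceil}$

If $c_n$ is nondecreasing then $c_{\lfloor n/2 \rfloor} \le c_{\lceil n/2 \rceil}$ and we have $c_n \le n^2 + 2c_{\lceil n/2 \rceil}$.

We have a = 2, b = 2, $f(n) = n^2$ and k = 2.

Since $a < b^k$, $c_n = O(n^k)$, and since k = 2, $c_n = O(n^2)$.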
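The case analysis used in Examples 1 to 4 is mechanical enough to sketch as a small helper. The function name `master_case` and its return format (a label plus the exponent of n) are assumptions made for this illustration; the helper only selects the case and does not say whether the bound is O, Ω or Θ, which depends on the form of the recurrence.

```python
import math


def master_case(a, b, k):
    """Pick the case of the Main Recurrence Theorem for T(n) = a*T(n/b) + f(n), f(n) ~ n^k.

    Returns (label, exponent), where exponent is the power of n in the bound.
    """
    if a < 1 or b < 2 or k < 0:
        raise ValueError("the theorem assumes a >= 1, b >= 2 and k >= 0")
    if a < b ** k:
        return ("n^k", float(k))
    if a == b ** k:
        return ("n^k log n", float(k))
    return ("n^(log_b a)", math.log(a, b))


if __name__ == "__main__":
    # (a, b, k) taken from Examples 1-4 above
    for a, b, k in [(1, 2, 1), (2, 2, 1), (7, 4, 1), (2, 2, 2)]:
        print((a, b, k), master_case(a, b, k))
```

For Example 3 the exponent $\log_4 7$ is about 1.40, so the solution grows faster than n but slower than $n^2$.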


Examples

Solve the following recurrences:

1. $T(n) = T(n-1) + n^r$

2. $T(n) = 2T(n-1) + n^r$

(Note: The solution will depend on the value of the parameter r and the initial conditions, which both need to be specified.)

Example

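$$T(n) = \begin{cases} 0 & \text{if } n = 0 \\ 2 + 4T(\lfloor n/2 \rfloor) & \text{otherwise} \end{cases}$$

Restrict n to be a power of 2: $n = 2^k$

Look at the expansion

| n | T(n) |
|---|---|
| $2^0$ | $2$ |
| $2^1$ | $2 + 4 \cdot 2$ |
| $2^2$ | $2 + 4 \cdot 2 + 4^2 \cdot 2$ |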

Example

Pattern seems to suggest:

$$T(n) = T(2^k) = \sum_{i=0}^{k} 2 \cdot 4^i = \frac{2(4^{k+1} - 1)}{4 - 1} = \frac{8n^2 - 2}{3} = \Theta(n^2)$$

Proof by mathematical induction:

Base case: k = 0, $n = 2^0$: $T(1) = (8 - 2)/3 = 2$, so the basis holds.

Assumption: True for k.

Proof that it is true for k+1:

$$T(2^{k+1}) = 2 + 4T(2^k) = 2 + \frac{4(8 \cdot 2^{2k} - 2)}{3} = \frac{6 + 8 \cdot 2^{2k+2} - 8}{3} = \frac{8 \cdot 2^{2k+2} - 2}{3}$$
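The closed form can be compared against the recurrence directly. The sketch below assumes n/2 is floor division (needed for T(1) to reach T(0)); `closed_form` is a hypothetical helper name, and it is only meaningful when n is a power of 2.

```python
def T(n):
    """T(0) = 0, T(n) = 2 + 4*T(n // 2) otherwise (floor division assumed)."""
    if n == 0:
        return 0
    return 2 + 4 * T(n // 2)


def closed_form(n):
    """(8n^2 - 2)/3, the value derived above for n a power of 2."""
    return (8 * n * n - 2) // 3


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 8):
        print(n, T(n), closed_form(n))
```

At n = 3 the recurrence gives 10 while the formula gives 23, which illustrates why the result so far is only a bound conditional on n being a power of 2.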

We assumed n is a power of 2.

This gives a conditional bound: T(n) is in $\Theta(n^2 \mid n \text{ is a power of } 2)$.

Can we remove this condition?

We can use smoothness (FoA p.89): basically, show that well-behaved functions which have bounds at certain points have the same bounds at all other points.

Smoothness

A function f is smooth if:

f is non-decreasing over the range $[N, +\infty)$ (eventually non-decreasing)

For every integer $b \ge 2$, f(bn) is O(f(n))

Let f(n) be smooth.

Let t(n) be eventually non-decreasing.

Then t(n) is $\Theta(f(n))$ for n a power of b implies t(n) in $\Theta(f(n))$.

Smoothness

Formally, a function f defined on positive integers is smooth if for any positive integer $b \ge 2$, there are positive constants C and N, depending on b, such that

$$f(bn) \le C f(n)$$

and

$$f(n) \le f(n+1)$$

for all $n \ge N$.

Smoothness

Lemma 1:

If t is a nondecreasing function, f is a smooth function, and $t(n) = \Theta(f(n))$ for n a power of b, then $t(n) = \Theta(f(n))$.

Smoothness

Which functions are smooth?

log and polynomial functions are smooth, e.g. $\log(n)$, $\log^2(n)$, $n^3 - n$, $n \log(n)$

superpolynomial functions are not, e.g. $2^n$, $n!$

Using this, we can use guess-and-check a bit more loosely: prove for easier cases, and use smoothness for the rest.
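The condition that f(bn) is O(f(n)) can be made tangible by looking at the ratio f(bn)/f(n) for a few values of n. The sketch below is only a numerical illustration with b = 2 and a handful of sample points chosen here; the helper name `scaling_ratios` is an assumption, and bounded ratios on a sample do not prove smoothness.

```python
import math


def scaling_ratios(f, ns, b=2):
    """Return f(b*n) / f(n) for each n in ns."""
    return [f(b * n) / f(n) for n in ns]


ns = [10, 100, 1000]
poly = scaling_ratios(lambda n: n**3 - n, ns)          # stays close to 8
nlogn = scaling_ratios(lambda n: n * math.log(n), ns)  # stays below 3
expo = scaling_ratios(lambda n: 2.0**n, [10, 20, 40])  # equals 2^n, unbounded

if __name__ == "__main__":
    print(poly)
    print(nlogn)
    print(expo)
```

For $2^n$ the ratio f(2n)/f(n) is itself $2^n$, so no constant C can bound it, which matches the claim that superpolynomial functions are not smooth.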

Proof of the Main Recurrence

Theorem

Let a, b and k be integers satisfying $a \ge 1$, $b \ge 2$ and $k \ge 0$. In the following, n/b denotes either $\lfloor n/b \rfloor$ or $\lceil n/b \rceil$. In the case of the floor function the initial condition T(0) = u is given; and in the case of the ceiling function, the initial condition T(1) = u is given.

We first need to show that, for both floor and ceiling, T(n) is well defined for all n. We use mathematical induction to prove that (to be done in tutorials).

Proof of the Main Recurrence

Theorem

Next we need to show that

$$\sum_{i=0}^{m} x^{m-i} y^i = \frac{x^{m+1} - y^{m+1}}{x - y}, \qquad x \ne y$$

We use mathematical induction to show this.

Base Case: m = 0

$x^0 y^0 = (x^1 - y^1)/(x - y)$, that is, 1 = 1

Inductive Assumption: m = k

$$\sum_{i=0}^{k} x^{k-i} y^i = \frac{x^{k+1} - y^{k+1}}{x - y}$$

Proof of the Main Recurrence

Theorem

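Inductive Step: m = k+1. We need to show that

$$\sum_{i=0}^{k+1} x^{k+1-i} y^i = \frac{x^{k+2} - y^{k+2}}{x - y}$$

$$\begin{aligned}
\sum_{i=0}^{k+1} x^{k+1-i} y^i &= \sum_{i=0}^{k} x^{k+1-i} y^i + x^0 y^{k+1} \\
&= x \sum_{i=0}^{k} x^{k-i} y^i + y^{k+1} \\
&= \frac{x (x^{k+1} - y^{k+1})}{x - y} + y^{k+1} \\
&= \frac{x^{k+2} - x y^{k+1} + x y^{k+1} - y^{k+2}}{x - y} \\
&= \frac{x^{k+2} - y^{k+2}}{x - y}
\end{aligned}$$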

Proof of the Main Recurrence

Theorem

We are now ready to formulate a lemma that we shall later use to prove the Main Recurrence Theorem.

Lemma 2:

If n > 1 is a power of b and $a \ne b^k$, the solution of the recurrence relation $T(n) = aT(n/b) + cn^k$ is

$$T(n) = C_1 n^{\log_b a} + C_2 n^k$$

for some constants $C_1$ and $C_2$, where $C_2 > 0$ for $a < b^k$ and $C_2 < 0$ for $a > b^k$.

If n > 1 is a power of b and $a = b^k$, the solution of the recurrence relation $T(n) = aT(n/b) + cn^k$ is

$$T(n) = C_3 n^k + C_4 n^k \log_b n$$

for some constants $C_3$ and $C_4 > 0$.

Proof of the Main Recurrence

Theorem

Proof:

Suppose that $n = b^m$. Then $m = \log_b n$, $n^k = (b^m)^k = (b^k)^m$ and $a^m = (b^{\log_b a})^m = n^{\log_b a}$.

Then we have:

$$T(n) = aT(n/b) + cn^k$$

$$\begin{aligned}
T(n) = T(b^m) &= aT(b^{m-1}) + c(b^k)^m \\
&= a[aT(b^{m-2}) + c(b^k)^{m-1}] + c(b^k)^m \\
&= a^2 T(b^{m-2}) + c[a(b^k)^{m-1} + (b^k)^m] \\
&= a^2[aT(b^{m-3}) + c(b^k)^{m-2}] + c[a(b^k)^{m-1} + (b^k)^m] \\
&= a^3 T(b^{m-3}) + c[a^2(b^k)^{m-2} + a(b^k)^{m-1} + (b^k)^m] \\
&\;\;\vdots \\
&= a^m T(b^0) + c \sum_{i=1}^{m} a^{m-i} (b^k)^i
\end{aligned}$$

Proof of the Main Recurrence Theorem

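Proof (contd):

Thus

$$T(n) = a^m T(b^0) + c \sum_{i=1}^{m} a^{m-i} (b^k)^i$$

If $a \ne b^k$, using $\sum_{i=0}^{m} x^{m-i} y^i = \frac{x^{m+1} - y^{m+1}}{x - y}$, $x \ne y$, we get

$$\begin{aligned}
T(n) &= a^m T(1) + c\left[\frac{(b^k)^{m+1} - a^{m+1}}{b^k - a} - a^m\right] \\
&= a^m T(1) + \frac{c (b^k)^{m+1}}{b^k - a} + c\left[\frac{-a^{m+1}}{b^k - a} - a^m\right] \\
&= a^m T(1) + \frac{c (b^k)^{m+1}}{b^k - a} - a^m \frac{c b^k}{b^k - a} \\
&= \left[T(1) - \frac{c b^k}{b^k - a}\right] a^m + \left[\frac{c b^k}{b^k - a}\right] (b^k)^m \\
&= \left[T(1) - \frac{c b^k}{b^k - a}\right] n^{\log_b a} + \left[\frac{c b^k}{b^k - a}\right] (b^k)^m \\
&= C_1 n^{\log_b a} + C_2 (b^k)^m = C_1 n^{\log_b a} + C_2 n^k
\end{aligned}$$

where $C_1 = T(1) - \frac{c b^k}{b^k - a}$ and $C_2 = \frac{c b^k}{b^k - a}$.

Note that if $a < b^k$ then $C_2 > 0$ and if $a > b^k$ then $C_2 < 0$.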

Proof of the Main Recurrence Theorem

Proof (contd):

Recall

$$T(n) = a^m T(b^0) + c \sum_{i=1}^{m} a^{m-i} (b^k)^i$$

If $a = b^k$, then $a^{m-i} (b^k)^i = a^m$ and we get

$$T(n) = a^m T(1) + c \sum_{i=1}^{m} a^m = a^m T(1) + c\,m\,a^m = C_3 n^k + C_4 n^k \log_b n$$

where $C_3 = T(1)$ and $C_4 = c > 0$.

Proof of the Main Recurrence Theorem

Lemma 2 proves the Main Recurrence Theorem for the case where n is a power of b. We then apply Lemma 1 (the smoothness lemma) to generalize the proof to any n (see text pages 63 and 64 for details).
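The closed forms in Lemma 2 can be cross-checked against the recurrence for n a power of b using exact rational arithmetic. In the sketch below the function names `T_rec` and `T_closed`, and the sample values of c and T(1), are assumptions chosen for illustration; c and T(1) are taken to be integers so that Python's `fractions.Fraction` stays exact.

```python
from fractions import Fraction


def T_rec(m, a, b, k, c, T1):
    """T(b^m) computed from T(n) = a*T(n/b) + c*n^k with T(1) = T1."""
    t = Fraction(T1)
    for j in range(1, m + 1):
        t = a * t + c * (b ** j) ** k      # T(b^j) from T(b^(j-1))
    return t


def T_closed(m, a, b, k, c, T1):
    """Closed form from Lemma 2, written in terms of a^m and (b^k)^m."""
    bk = b ** k
    if a == bk:
        # C3 = T(1), C4 = c:  T = T(1)*a^m + c*m*a^m
        return (T1 + c * m) * Fraction(a) ** m
    C2 = Fraction(c * bk, bk - a)
    C1 = T1 - C2
    return C1 * Fraction(a) ** m + C2 * Fraction(bk) ** m


if __name__ == "__main__":
    for a, b, k in [(1, 2, 1), (2, 2, 1), (7, 4, 1)]:
        print((a, b, k), [int(T_rec(m, a, b, k, 3, 5)) for m in range(5)])
```

Here $a^m$ plays the role of $n^{\log_b a}$ and $(b^k)^m$ the role of $n^k$, as in the proof above.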

You might also like

- COMP2230 Introduction To Algorithmics: Lecture OverviewDocument19 pagesCOMP2230 Introduction To Algorithmics: Lecture OverviewMrZaggyNo ratings yet

- CS 702 Lec10Document9 pagesCS 702 Lec10Muhammad TausifNo ratings yet

- AAA-5Document35 pagesAAA-5Muhammad Khaleel AfzalNo ratings yet

- DAA ADEG Asymptotic Notation Minggu 4 11Document40 pagesDAA ADEG Asymptotic Notation Minggu 4 11Agra ArimbawaNo ratings yet

- DSA MK Lect3 PDFDocument75 pagesDSA MK Lect3 PDFAnkit PriyarupNo ratings yet

- Asymptotic NotationsDocument11 pagesAsymptotic NotationsKavitha Kottuppally RajappanNo ratings yet

- DAA ADEG Asymptotic Notation Minggu 4 - 11Document40 pagesDAA ADEG Asymptotic Notation Minggu 4 - 11Andika NugrahaNo ratings yet

- Lecture 2 Time ComplexityDocument17 pagesLecture 2 Time ComplexityKaheng ChongNo ratings yet

- AdaDocument62 pagesAdasagarkondamudiNo ratings yet

- Asymptotic Notations ExplainedDocument29 pagesAsymptotic Notations Explainedabood jalladNo ratings yet

- Algorithms and Data Structures Lecture Slides: Asymptotic Notations and Growth Rate of Functions, Brassard Chap. 3Document66 pagesAlgorithms and Data Structures Lecture Slides: Asymptotic Notations and Growth Rate of Functions, Brassard Chap. 3Đỗ TrịNo ratings yet

- Asymptotic NotationDocument66 pagesAsymptotic NotationFitriyah SulistiowatiNo ratings yet

- Algorithms and Data Structures Lecture Slides: Asymptotic Notations and Growth Rate of Functions, Brassard Chap. 3Document41 pagesAlgorithms and Data Structures Lecture Slides: Asymptotic Notations and Growth Rate of Functions, Brassard Chap. 3Phạm Gia DũngNo ratings yet

- Big O, Big Omega and Big Theta Notation: Prepared By: Engr. Wendell PerezDocument19 pagesBig O, Big Omega and Big Theta Notation: Prepared By: Engr. Wendell Perezkimchen edenelleNo ratings yet

- CS 203 Data Structure & Algorithms: Performance AnalysisDocument26 pagesCS 203 Data Structure & Algorithms: Performance AnalysisSreejaNo ratings yet

- FALLSEM2022-23 BCSE202L TH VL2022230103397 Reference Material I 26-07-2022 MODULE 1 - Asymptotic Notations and Orders of GrowthDocument19 pagesFALLSEM2022-23 BCSE202L TH VL2022230103397 Reference Material I 26-07-2022 MODULE 1 - Asymptotic Notations and Orders of GrowthUrjoshi AichNo ratings yet

- Asymtotic NotationDocument38 pagesAsymtotic NotationeddyNo ratings yet

- Session 3Document18 pagesSession 3Mahesh BallaNo ratings yet

- ALGORITHM COMPLEXITY ANALYSISDocument18 pagesALGORITHM COMPLEXITY ANALYSISMuhammad AbubakarNo ratings yet

- Tutorial1 SolutionsDocument4 pagesTutorial1 Solutionsjohn doeNo ratings yet

- Dwi Prima Handayani Putri - Ilkom VI ADocument12 pagesDwi Prima Handayani Putri - Ilkom VI ADwi Prima Handayani PutriNo ratings yet

- Asymptotic Notation: Steven SkienaDocument17 pagesAsymptotic Notation: Steven SkienasatyabashaNo ratings yet

- Basics of Algorithm Analysis: What Is The Running Time of An AlgorithmDocument21 pagesBasics of Algorithm Analysis: What Is The Running Time of An AlgorithmasdasdNo ratings yet

- CSCE 3110 Data Structures and Algorithms Assignment 2Document4 pagesCSCE 3110 Data Structures and Algorithms Assignment 2Ayesha EhsanNo ratings yet

- Algorithms 1Document10 pagesAlgorithms 1reema alafifiNo ratings yet

- Introduction to Asymptotic NotationDocument28 pagesIntroduction to Asymptotic NotationPrinceJohnNo ratings yet

- CS148 Algorithms Asymptotic AnalysisDocument41 pagesCS148 Algorithms Asymptotic AnalysisSharmaine MagcantaNo ratings yet

- Unit-1 DAA - NotesDocument25 pagesUnit-1 DAA - Notesshivam02774No ratings yet

- Basics of Algorithm Analysis: What Is The Running Time of An AlgorithmDocument6 pagesBasics of Algorithm Analysis: What Is The Running Time of An AlgorithmMark Glen GuidesNo ratings yet

- DAADocument15 pagesDAAmeranaamsarthakhaiNo ratings yet

- Algorithms AnalysisDocument21 pagesAlgorithms AnalysisYashika AggarwalNo ratings yet

- Growth of FunctionDocument8 pagesGrowth of FunctionjitensoftNo ratings yet

- Dynamic Programming SolutionsDocument14 pagesDynamic Programming Solutionskaustubh2008satputeNo ratings yet

- Analysis of Algorithms: Asymptotic NotationDocument25 pagesAnalysis of Algorithms: Asymptotic Notationhariom12367855No ratings yet

- Analysis of Algorithms: Asymptotic NotationDocument25 pagesAnalysis of Algorithms: Asymptotic Notationhariom12367855No ratings yet

- 数学分析习题课讲义(复旦大学 PDFDocument88 pages数学分析习题课讲义(复旦大学 PDFradioheadddddNo ratings yet

- Asymptotic Notation - Analysis of AlgorithmsDocument31 pagesAsymptotic Notation - Analysis of AlgorithmsManya KhaterNo ratings yet

- Algo AnalysisDocument24 pagesAlgo AnalysisAman QureshiNo ratings yet

- Analysis of Algorithms: Asymptotic Analysis and Big-O NotationDocument48 pagesAnalysis of Algorithms: Asymptotic Analysis and Big-O NotationAli Sher ShahidNo ratings yet

- MCA 202: Discrete Structures: Instructor Neelima Gupta Ngupta@cs - Du.ac - inDocument30 pagesMCA 202: Discrete Structures: Instructor Neelima Gupta Ngupta@cs - Du.ac - inEr Umesh ThoriyaNo ratings yet

- Assignment # 3Document4 pagesAssignment # 3Shahzael MughalNo ratings yet

- Week2 - Growth of FunctionsDocument64 pagesWeek2 - Growth of FunctionsFahama Bin EkramNo ratings yet

- Ada 1Document11 pagesAda 1kavithaangappanNo ratings yet

- Complexity 3Document14 pagesComplexity 3Tee flowNo ratings yet

- Asymptotic Analysis of Algorithms (Growth of Function)Document8 pagesAsymptotic Analysis of Algorithms (Growth of Function)Rosemelyne WartdeNo ratings yet

- Lec 2.2Document19 pagesLec 2.2nouraizNo ratings yet

- Analysis and Design of Algorithms: Session - 3Document18 pagesAnalysis and Design of Algorithms: Session - 3Bhargav Phaneendra manneNo ratings yet

- The Growth of Functions: Rosen 2.2Document36 pagesThe Growth of Functions: Rosen 2.2Luis LoredoNo ratings yet

- Lec - 2 - Analysis of Algorithms (Part 2)Document45 pagesLec - 2 - Analysis of Algorithms (Part 2)Ifat NixNo ratings yet

- What Is Asymptotic NotationDocument51 pagesWhat Is Asymptotic NotationHemalatha SivakumarNo ratings yet

- Data Structure: Chapter 1 - Basic ConceptsDocument32 pagesData Structure: Chapter 1 - Basic ConceptsrajfacebookNo ratings yet

- A1388404476 - 16870 - 20 - 2018 - Complexity AnalysisDocument42 pagesA1388404476 - 16870 - 20 - 2018 - Complexity AnalysisSri SriNo ratings yet

- Brief ComplexityDocument3 pagesBrief ComplexitySridhar ChandrasekarNo ratings yet

- Algo - Lecure3 - Asymptotic AnalysisDocument26 pagesAlgo - Lecure3 - Asymptotic AnalysisMd SaifullahNo ratings yet

- CS 253: Algorithms: Growth of FunctionsDocument22 pagesCS 253: Algorithms: Growth of FunctionsVishalkumar BhattNo ratings yet

- Asymptotic NotationsDocument8 pagesAsymptotic Notationshyrax123No ratings yet

- Complexity Analysis: Text Is Mainly From Chapter 2 by DrozdekDocument31 pagesComplexity Analysis: Text Is Mainly From Chapter 2 by DrozdekaneekaNo ratings yet

- Analyzing Algorithm Complexity with Big-Oh, Big Omega, and Big Theta NotationDocument21 pagesAnalyzing Algorithm Complexity with Big-Oh, Big Omega, and Big Theta Notationsanandan_1986No ratings yet

- Trigonometric Ratios to Transformations (Trigonometry) Mathematics E-Book For Public ExamsFrom EverandTrigonometric Ratios to Transformations (Trigonometry) Mathematics E-Book For Public ExamsRating: 5 out of 5 stars5/5 (1)

- LabSheet 1 PDFDocument2 pagesLabSheet 1 PDFMrZaggyNo ratings yet

- Scale Aircraft Modelling Dec 2014Document86 pagesScale Aircraft Modelling Dec 2014MrZaggy94% (17)

- How To Build An Electricity Producing Wind Turbine and Solar PanelsDocument49 pagesHow To Build An Electricity Producing Wind Turbine and Solar PanelsMrZaggy100% (1)

- Practical Projects For Self SufficiencyDocument360 pagesPractical Projects For Self SufficiencyMrZaggy100% (3)

- Power4Home Wind Power GuideDocument72 pagesPower4Home Wind Power GuideMrZaggyNo ratings yet

- Lecture 1 - 1pp PDFDocument37 pagesLecture 1 - 1pp PDFMrZaggyNo ratings yet

- Basic AerodynamicsDocument49 pagesBasic AerodynamicsJayDeep KhajureNo ratings yet

- Hand Loading For Long Range PDFDocument44 pagesHand Loading For Long Range PDFMrZaggyNo ratings yet

- Dodo Broadband ISP - ADSL - ADSL 2+ - Cheap ADSL PDFDocument3 pagesDodo Broadband ISP - ADSL - ADSL 2+ - Cheap ADSL PDFMrZaggyNo ratings yet

- UAV Circumnavigating An Unknown Target Under A GPS-denied Environment With Range-Only Measurements - 1404.3221v1 PDFDocument12 pagesUAV Circumnavigating An Unknown Target Under A GPS-denied Environment With Range-Only Measurements - 1404.3221v1 PDFMrZaggyNo ratings yet

- CSHonoursOverview v09 PDFDocument16 pagesCSHonoursOverview v09 PDFMrZaggyNo ratings yet

- Comp4251 Sem 2 14 PDFDocument5 pagesComp4251 Sem 2 14 PDFMrZaggyNo ratings yet

- Comp3330 w05 Ec PDFDocument64 pagesComp3330 w05 Ec PDFMrZaggyNo ratings yet

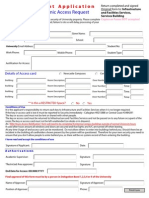

- Student Electronic Access Application PDFDocument1 pageStudent Electronic Access Application PDFMrZaggyNo ratings yet

- An Evolved Antenna For Deployment On NASA's Space Technology 5 Mission - Lohn-PaperDocument16 pagesAn Evolved Antenna For Deployment On NASA's Space Technology 5 Mission - Lohn-PaperMrZaggyNo ratings yet

- UAV GNC Chapter PDFDocument30 pagesUAV GNC Chapter PDFMrZaggyNo ratings yet

- Genetic Algorithms and Machine Learning (Holland and Goldberg) Art:10.1023/A:1022602019183Document5 pagesGenetic Algorithms and Machine Learning (Holland and Goldberg) Art:10.1023/A:1022602019183MrZaggyNo ratings yet

- Lecture11 - Proving NP-Completeness & NP-Complete ProblemsDocument39 pagesLecture11 - Proving NP-Completeness & NP-Complete ProblemsMrZaggyNo ratings yet

- Mathematical Modeling of UAVDocument8 pagesMathematical Modeling of UAVDanny FernandoNo ratings yet

- Path Planning For UAVs - Radar Sites Stealth Vs Path Length Tradeoff Via ODEsDocument5 pagesPath Planning For UAVs - Radar Sites Stealth Vs Path Length Tradeoff Via ODEsMrZaggyNo ratings yet

- An Evolution Based Path Planning Algorithm For Autonomous Motion of A UAV Through Uncertain Environments - Rathbun2002evolutionDocument12 pagesAn Evolution Based Path Planning Algorithm For Autonomous Motion of A UAV Through Uncertain Environments - Rathbun2002evolutionMrZaggyNo ratings yet

- Low Cost Navigation System For UAV's - 1-s2.0-S1270963805000829-MainDocument13 pagesLow Cost Navigation System For UAV's - 1-s2.0-S1270963805000829-MainMrZaggyNo ratings yet

- Comp3265 2014Document49 pagesComp3265 2014MrZaggyNo ratings yet

- 3-D Path Planning For The Navigation of Unmanned Aerial Vehicles by Using Evolutionary Algorithms - P1499-HasirciogluDocument8 pages3-D Path Planning For The Navigation of Unmanned Aerial Vehicles by Using Evolutionary Algorithms - P1499-HasirciogluMrZaggyNo ratings yet

- Comp3264 2014Document78 pagesComp3264 2014MrZaggyNo ratings yet

- Lecture10 - P and NPDocument55 pagesLecture10 - P and NPMrZaggyNo ratings yet

- Three-Dimensional Offline Path Planning For UAVs Using Multiobjective Evolutionary Algorithms - 72-ADocument31 pagesThree-Dimensional Offline Path Planning For UAVs Using Multiobjective Evolutionary Algorithms - 72-AMrZaggyNo ratings yet

- Lecture9 - Dynamic ProgrammingDocument41 pagesLecture9 - Dynamic ProgrammingMrZaggyNo ratings yet

- Lecture7 - Sorting AlgorithmsDocument22 pagesLecture7 - Sorting AlgorithmsMrZaggyNo ratings yet

- Lecture8 - Greedy AlgorithmsDocument61 pagesLecture8 - Greedy AlgorithmsMrZaggyNo ratings yet