Estimating Extract, Transform, and Load (ETL) Projects: PMI Virtual Library © 2010 Ben Harden

PMI Virtual Library

2010 Ben Harden

Estimating Extract, Transform, and Load (ETL) Projects

In the consulting world, project estimation is a critical part of delivering a successful project. If you estimate correctly, you will deliver the project on time and within budget; get it wrong and you could end up over budget, with an unhappy client and a burned-out team. Project estimation for business intelligence and data integration projects is especially difficult, given the number of stakeholders involved across the organization as well as the unknowns of data complexity and quality. Add to this mix a firm fixed-price RFP (request for proposal) response for a client your organization has not worked for before, and you have the perfect climate for a poor estimate. In this article, I share my thoughts about the best way to approach a project estimate for an extract, transform, and load (ETL) project.

For those of you not familiar with ETL, it is a common technique used in data warehousing to move data from one database (the source) to another (the target). To accomplish this data movement, the data first must be extracted out of the source system; this is the E. Once the extract is complete, the data may need to be transformed. For example, it may be necessary to convert a state name into a two-letter state code (Virginia to VA); this is the T. After the data have been extracted from the source and transformed to meet the target system requirements, they can be loaded into the target database; this is the L.
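The three steps can be seen end to end in a small sketch. It assumes two in-memory SQLite databases standing in for the source and target systems; the table names (`customer`, `dim_customer`), the sample rows, and the `STATE_CODES` lookup are all hypothetical and exist only for illustration.

```python
import sqlite3

# Hypothetical lookup used by the transform step (e.g., Virginia -> VA).
STATE_CODES = {"Virginia": "VA", "Maryland": "MD", "North Carolina": "NC"}


def extract(source: sqlite3.Connection) -> list[tuple[int, str, str]]:
    """E: read raw customer rows out of the source system."""
    return source.execute("SELECT id, name, state FROM customer ORDER BY id").fetchall()


def transform(rows: list[tuple[int, str, str]]) -> list[tuple[int, str, str]]:
    """T: replace each full state name with its two-letter code.
    An unmapped state raises KeyError, one way data-quality gaps surface."""
    return [(cid, name, STATE_CODES[state]) for cid, name, state in rows]


def load(target: sqlite3.Connection, rows: list[tuple[int, str, str]]) -> None:
    """L: write the transformed rows into the target table."""
    target.executemany("INSERT INTO dim_customer VALUES (?, ?, ?)", rows)
    target.commit()


# Two in-memory databases stand in for the source and target systems.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE customer (id INTEGER, name TEXT, state TEXT)")
source.executemany(
    "INSERT INTO customer VALUES (?, ?, ?)",
    [(1, "Acme Corp", "Virginia"), (2, "Globex", "Maryland")],
)

target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE dim_customer (id INTEGER, name TEXT, state_code TEXT)")

load(target, transform(extract(source)))
print(target.execute("SELECT * FROM dim_customer ORDER BY id").fetchall())
# [(1, 'Acme Corp', 'VA'), (2, 'Globex', 'MD')]
```

Real ETL tools add scheduling, error handling, and restartability, which is part of why the estimate has to cover far more than the transformation code itself.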

By Ben Harden, PMP

Before starting your ETL estimation, you need to understand what type of estimate you are trying to produce. How precise does the estimate need to be? Will you be estimating effort, schedule, or both? Will you build your estimate top down or bottom up? Will the result be included in an RFP response, or will it be used in an unofficial capacity? By answering these questions, you can assess risk and produce an estimate that best mitigates that risk.

In many cases, the information you have to base your estimate on is high level, with only a few key data points to go on, and you have neither the time nor the ability to ask for more details. In these situations, the response I hear most often is that an estimate cannot be produced. I disagree! As long as the customer understands the precision of the estimate, there is value in the estimate and it should be done. The alternative to a high-level estimate is none at all, and as someone who has to deliver on the estimate, I would rather have a bad estimate with clear assumptions than no baseline at all. The key is being clear about how the estimate should be used and what the limitations are. I have found that one of the best ways to frame the accuracy of the estimate with the customer and project team is through the use of assumptions. Every estimate is built with many assumptions in mind, and having them clearly laid out almost always generates good discussion and, eventually, a more refined and accurate estimate.


PMI Virtual Library | www.PMI.org | 2010 Ben Harden


A common question that comes up during the estimation process is effort versus schedule; in other words, how many hours the work will take versus how long it will take, in calendar time, to complete that effort. To simplify the estimating process, I start with a model that delivers the effort and completely ignores the schedule. Once the effort has been refined, it can be taken to the delivery team for a secondary discussion on spreading the estimated effort across time.

Once you know what type of estimate you are trying to deliver and who your audience is, you can begin the process of effectively estimating the work. All too often, this up-front thinking is ignored and the resulting estimate does not meet expectations.

I've reviewed a number of the different ETL estimating techniques available and have found some to be extremely complex and others more straightforward. Then there is the theory of estimating, along with the tried-and-true models of Wideband Delphi and COCOMO. All of these are interesting and have value, but they don't easily produce the data to answer the questions I am always asked in the consulting world: How much will this project cost? How many people will you need to deliver it? What does the delivery schedule look like? I have discovered that most models focus on one part of the effort (generally development) but neglect to include requirements, design, testing, data stewardship, production deployment, warranty support, and so forth. When estimating a project in the consulting world, we care about the total cost, not just how long it will take to develop the ETL code.

Estimating an ETL Project

In the ETL space I use two models (top down and bottom up) for my estimation, if I have been provided enough data to support both; this helps better ground the estimate and confirms that there are no major gaps in the model.

Estimating an ETL Project Using a Top Down Technique

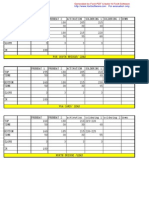

To start a top down estimate, I break down the project by phase and then add in key oversight roles that don't pertain specifically to any single phase (e.g., project manager, technical lead, subject matter expert, operations). Once I have the phases that relate to the project I am estimating, I estimate each phase vertically as a percentage of the development effort, as shown in the chart below. Everyone has a different idea about what percentages to use, and there is no one right answer. I start with the numbers below and tweak them based on the project environment and resource experience.

| Phase | Percentage of Development |
|---|---|
| Requirements | 50% of Development |
| Design | 25% of Development |
| Development | Baseline |
| System Test | 25% of Development |
| Integration Test | 25% of Development |
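The vertical step amounts to scaling one number. A minimal sketch, assuming a hypothetical 40-hour development estimate for a single source and the starting percentages from the table; the oversight roles are left out here because they are added on top of the phases.

```python
# Phase effort as a fraction of development effort (starting values from the
# table above; tune them for the project environment and team experience).
PHASE_FACTORS = {
    "Requirements": 0.50,
    "Design": 0.25,
    "Development": 1.00,  # the baseline every other phase is measured against
    "System Test": 0.25,
    "Integration Test": 0.25,
}


def phase_hours(development_hours: float,
                factors: dict[str, float] = PHASE_FACTORS) -> dict[str, float]:
    """Scale a development-hours estimate into hours for every phase."""
    return {phase: development_hours * factor for phase, factor in factors.items()}


hours = phase_hours(40.0)  # hypothetical development estimate for one source
print(hours["Requirements"])  # 20.0
print(sum(hours.values()))    # 90.0
```

Keeping the factors in one table makes the "tweak them accordingly" step a matter of editing a single value rather than reworking the estimate.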

Once I have my verticals established, I break my estimate horizontally into low, medium, and high, using the percentages below:

| Complexity | Percent of Medium |
|---|---|
| Low | 50% of Medium |
| Medium | N/A |
| High | 150% of Medium |
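The horizontal split derives the low and high columns from a single medium-complexity figure. A short sketch, assuming a hypothetical medium estimate of 20 hours:

```python
# Low and high complexity expressed as a fraction of the medium estimate.
COMPLEXITY_FACTORS = {"Low": 0.5, "Medium": 1.0, "High": 1.5}


def complexity_band(medium_hours: float) -> dict[str, float]:
    """Derive low/medium/high hours from one medium-complexity estimate."""
    return {level: medium_hours * f for level, f in COMPLEXITY_FACTORS.items()}


print(complexity_band(20.0))  # {'Low': 10.0, 'Medium': 20.0, 'High': 30.0}
```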

Generally, when doing a high-level ETL estimate, I know the number of sources I am dealing with and, if I'm lucky, I also have some broad-stroke complexity information. Once I have my model built out, as described above, I work with my development team to understand the effort involved for a single source. I then take the number of sources and plug them into my model, as shown in Figure 1 (the Number of Sources row). If I don't have complexity information, I simply record the same number of sources in the low, medium, and high columns to give me an estimate range of +/-50%.
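Plugging source counts into the model is a per-column multiplication. The sketch below assumes a hypothetical 80 medium hours per source and 5 sources with no complexity information, so all 5 go into every column; the low and high columns then land 50% below and above the medium figure.

```python
def sourcing_range(medium_hours_per_source: float,
                   sources: dict[str, int]) -> dict[str, float]:
    """Per-source hours for each complexity column, multiplied by the number
    of sources recorded in that column."""
    factors = {"Low": 0.5, "Medium": 1.0, "High": 1.5}  # percent of medium
    return {
        level: medium_hours_per_source * factors[level] * sources.get(level, 0)
        for level in factors
    }


# No complexity information: record the same 5 sources in every column.
print(sourcing_range(80.0, {"Low": 5, "Medium": 5, "High": 5}))
# {'Low': 200.0, 'Medium': 400.0, 'High': 600.0}
```

When complexity information is available, the counts simply differ by column (for example 2, 4, and 3, as in Figure 1).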

I now have a framework I can share with my team to shape my estimate. After my initial cut, I meet with key team members to review the estimate, and I inevitably end up with a revised estimate and, more importantly, a comprehensive set of assumptions. There is no substitute for socializing your estimate with your team or with a group of subject matter experts; they are closest to the work and have input and ideas that help refine the estimate into something that is accurate and defensible when cost or hours are challenged by the client.

| Sourcing Data: Task | Low (Hrs) | Medium (Hrs) | High (Hrs) |
|---|---:|---:|---:|
| Requirements and Data Mapping | 3.0 | 6.0 | 9.0 |
| High Level Design | 4.0 | 4.0 | 8.0 |
| Technical Design | 4.0 | 8.0 | 12.0 |
| Development & Unit Testing | 16.0 | 24.0 | 40.0 |
| System/QA Test | 8.0 | 12.0 | 20.0 |
| Integration Test and Production Rollout Support | 9.0 | 12.0 | 18.0 |
| Tech Lead Support | 4.4 | 6.6 | 10.7 |
| Project Management Support | 2.2 | 3.3 | 5.4 |
| Subject Matter Expert | 4.4 | 6.6 | 10.7 |
| Totals Per Source | | | |
| Total Hours | 55.0 | 82.5 | 133.8 |
| Total Days | 6.9 | 10.3 | 16.7 |
| Total Weeks | 1.4 | 2.1 | 3.3 |
| Sourcing Totals | | | |
| Number of Sources | 2.0 | 4.0 | 3.0 |
| Total Effort (Hours) | 110.0 | 330.0 | 401.3 |
| Total Days | 13.8 | 41.3 | 50.2 |
| Total Weeks | 2.8 | 8.3 | 10.0 |

Figure 1: Sample Top Down Estimate.
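The numbers in Figure 1 are consistent with a rule the article does not spell out: tech lead and subject matter expert support are each 10% of the task hours in the same column, project management is 5%, a day is 8 hours, and a week is 5 days. The sketch below rebuilds the figure under those inferred assumptions. It gives 55.0, 82.5, and 133.75 hours per source and, for 2, 4, and 3 sources, 110.0, 330.0, and 401.25 total hours (shown in the figure as 401.3).

```python
# Per-source task hours from Figure 1, as (low, medium, high).
TASK_HOURS = {
    "Requirements and Data Mapping": (3.0, 6.0, 9.0),
    "High Level Design": (4.0, 4.0, 8.0),
    "Technical Design": (4.0, 8.0, 12.0),
    "Development & Unit Testing": (16.0, 24.0, 40.0),
    "System/QA Test": (8.0, 12.0, 20.0),
    "Integration Test and Production Rollout Support": (9.0, 12.0, 18.0),
}
# Inferred from the figure, not stated in the article: each oversight role
# is a fixed share of the task hours in the same column.
OVERSIGHT_SHARE = {
    "Tech Lead Support": 0.10,
    "Project Management Support": 0.05,
    "Subject Matter Expert": 0.10,
}
HOURS_PER_DAY = 8  # inferred: Total Days = Total Hours / 8
DAYS_PER_WEEK = 5  # inferred: Total Weeks = Total Days / 5


def per_source_hours() -> list[float]:
    """Total hours for one source in the low, medium, and high columns."""
    totals = []
    for col in range(3):
        task_total = sum(hours[col] for hours in TASK_HOURS.values())
        oversight = sum(task_total * share for share in OVERSIGHT_SHARE.values())
        totals.append(task_total + oversight)
    return totals


def sourcing_totals(num_sources: list[int]) -> list[tuple[float, float, float]]:
    """(hours, days, weeks) per column, given the sources in that column."""
    rows = []
    for hours_per_source, count in zip(per_source_hours(), num_sources):
        hours = hours_per_source * count
        days = hours / HOURS_PER_DAY
        rows.append((hours, days, days / DAYS_PER_WEEK))
    return rows


for hours, days, weeks in sourcing_totals([2, 4, 3]):
    print(round(hours, 2), round(days, 2), round(weeks, 2))
```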

Estimating an ETL Project Using a Bottom Up Estimate

When enough data are available to construct a bottom up estimate, it can provide a powerful and highly defensible model. To start a bottom up ETL estimate, at least two key data elements are required: the number of data attributes required and the number of target structures that exist. Understanding the target data structure is a critical input to ETL estimation, because data modeling is a time-consuming and specialized skill that can have a significant impact on cost and schedule.

When starting a bottom up ETL estimate, it is important to break up the attributes into logical blocks of information. If a data warehouse is the target, subject areas work best as starting points for segmenting the estimation. A subject area is a logical grouping of data within the warehouse and is a great way to break down the project into smaller chunks that align with how you will deliver the work. Once you have a logical grouping of how the data will be stored, break down the number of attributes into the various groups, noting the percentage of attributes that do not have a target data structure.

| Target Model | Number of Data Attributes | Percentage of Unmodeled Attributes |
|---|---:|---:|
| Subject Area 1 | 200 | 100% |
| Subject Area 2 | 400 | 25% |
| Subject Area 3 | 150 | 100% |
| Subject Area 4 | 200 | 50% |
| Subject Area 5 | 50 | 10% |
| Subject Area 6 | 50 | 100% |
| Subject Area 7 | 20 | 100% |
| Total Number of Attributes | 1070 | |

Model Inputs: Number of Attributes by Subject Area

Once you have defined the target data subject areas, attributes, and percentages of data modeled, the time spent per task, per attribute can be estimated. It is important to define all tasks that will be completed during the life cycle of the project. Clearly defining the assumptions around each task is also critical, because consumers of the model will interpret the tasks differently.

In the example shown, there is a calculation that adjusts the modeling hours based on the percentage of attributes that are not modeled, giving more modeling time as the percentage increases. This technique can be used for any task that has a large variance in effort based on an external factor.
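The article does not give the exact adjustment formula. One simple possibility, shown here as a hypothetical sketch, is to charge the 1-hour-per-attribute Data Modeling rate only for the unmodeled share of each subject area, so modeling time grows linearly with that share. Applied to the model inputs above it gives 625 hours; it is not meant to reproduce the Data Modeler line of the Effort Summary.

```python
# Subject areas from the model-inputs table: (attributes, fraction unmodeled).
SUBJECT_AREAS = {
    "Subject Area 1": (200, 1.00),
    "Subject Area 2": (400, 0.25),
    "Subject Area 3": (150, 1.00),
    "Subject Area 4": (200, 0.50),
    "Subject Area 5": (50, 0.10),
    "Subject Area 6": (50, 1.00),
    "Subject Area 7": (20, 1.00),
}
MODELING_HOURS_PER_ATTRIBUTE = 1.0  # "Data Modeling" rate from the task table


def modeling_hours(areas=SUBJECT_AREAS, rate=MODELING_HOURS_PER_ATTRIBUTE):
    """Hypothetical adjustment: charge modeling time only for attributes that
    have no target structure yet, so hours rise with the unmodeled share."""
    return {name: count * unmodeled * rate
            for name, (count, unmodeled) in areas.items()}


hours = modeling_hours()
print(sum(count for count, _ in SUBJECT_AREAS.values()))  # 1070
print(sum(hours.values()))                                 # 625.0
```

The same pattern (a base rate times an external driver) works for any other task whose effort swings with a measurable factor.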

| Task | Hours Per Attribute |
|---|---:|
| Requirements & Mapping | 2 |
| High Level Design | 0.1 |
| Technical Design | 0.5 |
| Data Modeling | 1 |
| Development & Unit Testing | 1 |
| System Test | 0.5 |
| User Acceptance Testing | 0.25 |
| Production Support | 0.2 |
| Tech Lead Support | 0.5 |
| Project Management Support | 0.5 |
| Product Owner Support | 0.5 |
| Subject Matter Expert | 0.5 |
| Data Steward Support | 0.5 |

To complete the effort estimate, estimate the hours per task for a single attribute; multiplying those by the total number of attributes gives the effort by task. In addition, the tasks can be broken out across the expected project resource roles, providing a jump start on how the effort should be scheduled. As with any estimate, I always add a contingency factor at the bottom to account for unforeseen risk.
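A flat version of the multiplication, assuming every task rate applies to all 1,070 attributes (no modeling adjustment), looks like the sketch below. Requirements & Mapping comes out at 2,140 hours and each 0.5-hour task at 535 hours, matching the Business System Analyst, Tech Lead, Project Manager, and Subject Matter Expert lines of the Effort Summary; the other role lines combine tasks in ways the article does not spell out, so this sketch will not reproduce every row.

```python
# Hours per attribute for each task, from the table above.
HOURS_PER_ATTRIBUTE = {
    "Requirements & Mapping": 2.0,
    "High Level Design": 0.1,
    "Technical Design": 0.5,
    "Data Modeling": 1.0,
    "Development & Unit Testing": 1.0,
    "System Test": 0.5,
    "User Acceptance Testing": 0.25,
    "Production Support": 0.2,
    "Tech Lead Support": 0.5,
    "Project Management Support": 0.5,
    "Product Owner Support": 0.5,
    "Subject Matter Expert": 0.5,
    "Data Steward Support": 0.5,
}


def effort_by_task(total_attributes: int,
                   rates: dict[str, float] = HOURS_PER_ATTRIBUTE) -> dict[str, float]:
    """Multiply each task's hours-per-attribute rate by the attribute count."""
    return {task: rate * total_attributes for task, rate in rates.items()}


effort = effort_by_task(1070)
print(effort["Requirements & Mapping"])  # 2140.0
print(effort["Tech Lead Support"])       # 535.0
```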

| Role | Effort (Hours) | Effort (Days) |
|---|---:|---:|
| Business System Analyst | 2140.0 | 267.5 |
| Developer | 1886.0 | 235.8 |
| Tester | 475.0 | 59.4 |
| Tech Lead | 535.0 | 66.9 |
| Project Manager | 535.0 | 66.9 |
| Product Owner | 652.5 | 81.6 |
| Data Steward | 685.0 | 85.6 |
| Data Modeler | 610.0 | 76.3 |
| Subject Matter Expert | 535.0 | 66.9 |
| SubTotal | 8053.5 | 1006.7 |
| Contingency | 805.4 | 100.7 |
| Grand Total | 8858.9 | 1107.4 |

Effort Summary
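The roll-up at the bottom of the Effort Summary follows from two values that can be read off the table: days are hours divided by 8, and contingency is 10% of the subtotal. A sketch of that arithmetic, using the role hours as given:

```python
# Role hours from the Effort Summary table.
ROLE_HOURS = {
    "Business System Analyst": 2140.0,
    "Developer": 1886.0,
    "Tester": 475.0,
    "Tech Lead": 535.0,
    "Project Manager": 535.0,
    "Product Owner": 652.5,
    "Data Steward": 685.0,
    "Data Modeler": 610.0,
    "Subject Matter Expert": 535.0,
}
HOURS_PER_DAY = 8        # read off the table: Effort (Days) = Effort (Hours) / 8
CONTINGENCY_RATE = 0.10  # read off the table: Contingency = 10% of SubTotal


def summarize(role_hours: dict[str, float]) -> dict[str, tuple[float, float]]:
    """Return (hours, days) for the subtotal, contingency, and grand total."""
    subtotal = sum(role_hours.values())
    contingency = subtotal * CONTINGENCY_RATE
    rows = (("SubTotal", subtotal),
            ("Contingency", contingency),
            ("Grand Total", subtotal + contingency))
    return {name: (hours, hours / HOURS_PER_DAY) for name, hours in rows}


for name, (hours, days) in summarize(ROLE_HOURS).items():
    print(f"{name}: {hours:.2f} h, {days:.2f} d")
```

Keeping the contingency rate as a named constant makes it easy to raise for a riskier engagement without touching the role estimates.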

Comparing a top down estimate with a bottom up estimate provides two good data sets that can drive discussion about the quality of the estimate as well as uncover additional assumptions.

Scheduling the Work

Once the effort estimate is complete (regardless of the type), I can start thinking about how much time and how many resources are needed to complete the project. Generally, the requestor of the estimate has an expected delivery date in mind, and I know the earliest date we can start the work. With those two data points, I can calculate the number of business days I have to deliver the project and get a rough order of magnitude estimate of the resources required.
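Counting business days and turning effort into a rough headcount can be sketched with the standard library alone. This assumes an 8-hour day and a Monday-to-Friday calendar with no holidays; a real plan would subtract public holidays and planned leave. The 1,840-hour effort and January 2024 window are hypothetical.

```python
from datetime import date, timedelta


def business_days(start: date, end: date) -> int:
    """Count Monday-to-Friday dates from start through end, inclusive.

    Assumption: public holidays and planned leave are ignored.
    """
    count = 0
    current = start
    while current <= end:
        if current.weekday() < 5:  # Monday is 0, Friday is 4
            count += 1
        current += timedelta(days=1)
    return count


def rough_resources(effort_hours: float, start: date, end: date,
                    hours_per_day: float = 8.0) -> float:
    """Rough order of magnitude headcount: effort days / business days."""
    available = business_days(start, end)
    if available == 0:
        raise ValueError("no business days between start and end")
    return effort_hours / hours_per_day / available


print(business_days(date(2024, 1, 1), date(2024, 1, 31)))            # 23
print(rough_resources(1840.0, date(2024, 1, 1), date(2024, 1, 31)))  # 10.0
```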

The first thing I do is map the phases established in the effort estimate to the various project team roles (business system analyst (BSA), developer, tester, etc.). Once I break down the effort into roles, I can divide each role's effort (in days) by the number of days available in the project to get the expected number of resources required. In the example below (Figure 2), I shorten the time that the BSA, developer, and tester will work, taking into account that each life cycle phase does not run for the full duration of the project. At this stage, I also take into consideration the cost of each resource and add in a contingency factor. This method allows me to adjust the duration of the project without affecting the level of effort needed to complete the work.
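The per-role calculation can be sketched as a small record type, assuming an 8-hour day. The rows are taken from Figure 2; the `RoleEstimate` name is hypothetical. The BSA row works out to about 3.23 resources and $18,330.00 and the tech lead row to about 0.87 resources and $8,412.60, in line with the figure. The last lines show a "what if" question: giving the developers more days changes how many are needed but not the effort or cost.

```python
from dataclasses import dataclass, replace

HOURS_PER_DAY = 8  # assumption: same 8-hour day as the effort tables


@dataclass(frozen=True)
class RoleEstimate:
    role: str
    effort_hours: float
    target_days: int     # business days this role is on the project
    hourly_rate: float

    @property
    def resources(self) -> float:
        """People needed to fit the role's effort into its target days."""
        return self.effort_hours / HOURS_PER_DAY / self.target_days

    @property
    def cost(self) -> float:
        """Cost depends on effort and rate only, not on the schedule."""
        return self.effort_hours * self.hourly_rate


# Rows from Figure 2: the BSA, developer, and tester work a shorter window
# (71 days) than the oversight roles (86 days).
roles = [
    RoleEstimate("Business System Analyst", 1833.0, 71, 10.00),
    RoleEstimate("Developer", 2420.0, 71, 15.00),
    RoleEstimate("Tester", 1756.0, 71, 12.00),
    RoleEstimate("Tech Lead", 600.9, 86, 14.00),
]
for r in roles:
    print(f"{r.role}: {r.resources:.2f} resources, ${r.cost:,.2f}")

# What if the developers get 121 days instead of 71? Effort and cost stay the
# same; only the number of developers needed changes.
stretched = replace(roles[1], target_days=121)
print(stretched.resources, stretched.cost == roles[1].cost)  # 2.5 True
```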

Using the techniques described above gives you the flexibility to easily answer the "what if" questions that always come up when estimating work. By keeping the effort and the schedule separate, you have total control over the model.

Delivering on the Estimate

Once the effort and duration of the project are stabilized, a project planning tool (e.g., Microsoft Project) can be used to dive into the details of the work breakdown structure and further map out the details of the project.

It is important to continue to validate your estimate throughout the project. As you finish each project phase, revisiting the estimate to evaluate assumptions and estimating factors will help make future estimates better, which is especially important if you expect to do additional projects in the same department.

Conclusion

In my experience, bottom up estimates produce the most accurate results, but often the information required to produce such an estimate is not available. The bottom up technique allows the work to be broken down to a very detailed level. To effectively estimate bottom up ETL projects, the granularity needed is typically the number of reports, data elements, data sources, or metrics required for the project.

When a low level of detail is not available, a top down technique is the best option. Top down estimates are derived using a qualitative model and are more likely to be skewed by the experience of the person doing the estimate. I find that these estimates are also much more difficult to defend because of their qualitative nature. When doing a top down estimate for a proposal, I like to include additional money in the budget for contingency to cover the unknowns that certainly lie in the details.

There is an argument that a bottom up estimate is no more precise than a top down estimate. The thinking here is that with a lower level of detail, you make smaller estimating errors more often, netting the same result as the large errors made in a top down approach. Although this is a compelling argument (and why I do both estimates when I can), the more granular the estimate you have, the quicker you can identify flaws and make corrections. With a top down estimate, errors take longer to be revealed and are harder to correct.

An estimate is only as good as the data used to start the estimate and the assumptions captured. Providing clear and consistent estimates helps build credibility with business customers and clients, gives you a concrete, defensible position on how you plan to deliver against scope, and serves as a constant reminder of the impact of additional scope. No matter how easy or small a project appears to be, always start with an estimate and be prepared for that estimate to need fine-tuning as new information becomes available.

| Role | Effort (Hours) | Effort (Days) | Target Days | # Resources Needed | Rate | Cost |
|---|---:|---:|---:|---:|---:|---:|
| Business System Analyst | 1833.0 | 229.1 | 71 | 3.2 | $10.00 | $18,330.00 |
| Developer | 2420.0 | 302.5 | 71 | 4.2 | $15.00 | $36,300.00 |
| Tester | 1756.0 | 219.5 | 71 | 3.1 | $12.00 | $21,072.00 |
| Tech Lead | 600.9 | 75.1 | 86 | 0.9 | $14.00 | $8,412.60 |
| Project Manager | 300.5 | 37.6 | 86 | 0.4 | $18.00 | $5,408.10 |
| Subject Matter Expert | 600.9 | 75.1 | 86 | 0.9 | $20.00 | $12,018.00 |
| SubTotal | 7511.3 | 938.9 | | 12.7 | | $ - |
| Contingency | 751.1 | 93.9 | 86 | 1.1 | $14.83 | $11,141.69 |
| Grand Total | 8262.4 | 1032.8 | | 13.8 | | $11,141.69 |

Figure 2: Effort Summary.

Glossary of Terms

Business Intelligence: A technique used to analyze data to support better business decision making.

Data Integration: The process of combining data from multiple sources to provide end users with a unified view of the data.

Data Steward: The person responsible for maintaining the metadata repository that describes the data within the data warehouse.

Data Warehouse: A repository of data designed to facilitate reporting and business intelligence analysis.

ETL: Extract, Transform, and Load. A technique used to move data from one database (the source) to another database (the target).

RFP: A request for proposal (RFP) is an early stage in the procurement process, issuing an invitation for suppliers, often through a bidding process, to submit a proposal on a specific commodity or service.

Source: An ETL term used to describe the source system that provides data to the ETL process.

Subject Area: A term used in data warehousing that describes a set of data with a common theme or set of related measurements (e.g., customer, account, or claim).

Target: An ETL term used to describe the database that receives the transformed data.

About the Author

Ben Harden, PMP, is a manager in the Data Management and Business Intelligence practice of the Richmond, Virginia-based consulting firm CapTech. He specializes in the project management and delivery of data integration and business intelligence projects for Fortune 500 organizations. Mr. Harden has successfully managed data-related projects in the health care, financial services, telecommunications, and governmental sectors and can be reached via e-mail at bharden@captechconsulting.com.
