Neural networks: theory and practice

Todor Todorov

Hampson-Russell Software Services Ltd.

todor@hampson-russell.com

Introduction

In its most general form, an artificial neural network is a set of electronic components or a computer program designed to model the way in which the brain performs a task. The brain is a highly complex, nonlinear, and parallel information-processing system. Its structural constituents are nerve cells called neurons, which are linked by a large number of connections called synapses. This complex system has a remarkable ability to build up its own rules and store information through what we usually refer to as experience.

The neural network resembles the brain in two respects:

- knowledge is acquired by the network through a learning process;
- inter-neuron connection strengths, known as synaptic weights, are used to store the knowledge.

The procedure used to perform the learning process is called a learning algorithm. Its function is to modify the synaptic weights of the network in an orderly fashion to attain a desired design objective.

Although neural networks are relatively new to the petroleum industry, their origins can be traced back to the 1940s, when psychologists began developing models of human learning. With the advent of the computer, researchers began to program neural network models to simulate the complex behavior of the brain. However, in 1969, Marvin Minsky and Seymour Papert proved that one-layer perceptrons, a simple neural network being studied at that time, are incapable of solving many simple problems, such as the exclusive-OR (XOR) problem, and interest in the field declined. Optimism soared again in 1986, when Rumelhart and McClelland published the two-volume book Parallel Distributed Processing. The book presented the back-propagation algorithm, which has become one of the most popular learning algorithms for the multi-layer feedforward neural network. Since then, the effort to develop and implement different architectures and learning algorithms has been enormous. In 1990, Donald Specht published the idea of the probabilistic neural network, which has its roots in probability theory. The trend was picked up by research geophysicists, and a number of successful applications were reported in the geophysical literature (Huang et al., 1996; Todorov et al., 1998).

Two neural network architectures are described in this paper: the multi-layer feedforward neural network and the probabilistic neural network. The basic theory of each, and its implementation in EMERGE, are discussed.

Multi-layer feedforward neural network

Basic architecture

In this section we study one of the most widely used types of neural network, the multi-layer feedforward network, also known as the multi-layer perceptron. Figure 1 shows the basic architecture of the multi-layer feedforward neural network. It consists of a set of neurons, also called processing units, which are arranged into two or more layers. There is always an input layer and an output layer, each containing at least one neuron. Between them there are one or more hidden layers. The neurons are connected in the following fashion: inputs to neurons in each layer come from outputs of the previous layer, and outputs from these neurons are passed to neurons in the next layer. Each connection represents a weight. In the example shown in Figure 1, we have four inputs (for example, four seismic attributes: A1, A2, A3, A4), one hidden layer containing three neurons, and one output neuron (for example, measured porosity). The number of connections is 4 × 3 + 3 × 1 = 15, i.e. we have 15 weights.

Figure 1: Feedforward neural network architecture.

The neurons are information-processing units that are fundamental to the operation of a neural network. Figure 2 shows the model of a neuron. We may identify three basic processes of the neuron model:

- each of the input signals $x_j$ is multiplied by the corresponding synaptic weight $w_j$;
- the weighted input signals are summed;
- a nonlinear function, called the activation function, is applied to the sum.

Figure 2: A model of a neuron.

Mathematically, the process is written as:

$$\text{neuron's output} = f\left(\sum_{j=1}^{p} w_j x_j\right)$$

where:

- $w_j$: synaptic (connection) weights
- $x_j$: neuron inputs
- $p$: number of inputs to the neuron
- $f(\cdot)$: activation function

The activation function defines the output of a neuron in terms of the activity level at its input. The sigmoid function is by far the most common form of activation function used in the construction of artificial neural networks. It is defined as a strictly increasing function that exhibits smoothness and asymptotic properties. An example of the sigmoid function is the logistic function, defined by:

$$f(x) = \frac{1}{1 + e^{-x}}$$

The logistic function assumes a continuous range of values from 0 to 1. It is sometimes desirable to have the activation function range from -1 to +1, in which case the activation function assumes an antisymmetric form with respect to the origin. An example is the hyperbolic tangent function, defined by:

$$f(x) = \tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$
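The three neuron operations and the two activation functions can be sketched in a few lines of Python. This is a minimal illustration, not EMERGE code: the function names and the example weights are our own. The logistic function is written in a numerically stable form so that `math.exp` does not overflow for large negative arguments.

```python
import math

def logistic(x: float) -> float:
    """Logistic sigmoid f(x) = 1 / (1 + e^-x), with outputs in (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # equivalent form that avoids overflow for very negative x
    return z / (1.0 + z)

def tanh(x: float) -> float:
    """Hyperbolic tangent (e^x - e^-x) / (e^x + e^-x), outputs in (-1, 1)."""
    return math.tanh(x)

def neuron_output(weights, inputs, f=logistic):
    """Weight each input x_j by w_j, sum the products, apply f to the sum."""
    if len(weights) != len(inputs):
        raise ValueError("need exactly one weight per input")
    activity = sum(w * x for w, x in zip(weights, inputs))
    return f(activity)

# Hypothetical weights and inputs; the weighted sum here is 0.5.
w = [0.5, -1.0, 0.25]
x = [1.0, 0.5, 2.0]
print(neuron_output(w, x))        # logistic(0.5), about 0.6225
print(neuron_output(w, x, tanh))  # tanh(0.5), about 0.4621
```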

A neural net is completely defined by the number of layers, the number of neurons in each layer, and the connection weights. The process of estimating the weights is called training or learning.
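To make the architecture concrete, here is a sketch of the forward pass through the 4-3-1 network of Figure 1, together with the connection count. Assumptions made for the illustration (none taken from EMERGE): every neuron, including the output neuron, uses the logistic function; there are no bias terms, which matches the count of 15 weights; and the weights are small uniform random numbers.

```python
import math
import random

def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))

def count_weights(layer_sizes):
    """Fully connected layers without bias terms: sum of n_in * n_out."""
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

def init_weights(layer_sizes, scale=0.1, seed=0):
    """One weight matrix per pair of adjacent layers.
    W[l][k][j] is the weight from neuron j of layer l to neuron k of layer l+1."""
    rng = random.Random(seed)
    return [[[rng.uniform(-scale, scale) for _ in range(n_in)]
             for _ in range(n_out)]
            for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

def forward(weights, inputs, f=logistic):
    """The outputs of each layer become the inputs of the next layer."""
    signal = list(inputs)
    for W in weights:
        signal = [f(sum(w * s for w, s in zip(row, signal))) for row in W]
    return signal

sizes = [4, 3, 1]  # four attributes, three hidden neurons, one output
print(count_weights(sizes))  # 15
weights = init_weights(sizes)
print(forward(weights, [0.2, -0.1, 0.4, 0.3]))  # one value between 0 and 1
```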

The process of training

The major task of a neural network is to learn a model from the examples presented to it. Each example consists of an input-output pair: an input signal and the corresponding desired response of the neural network. Thus, the set of examples represents the knowledge. For each example, we compare the outputs obtained by the network with the outputs we would like to obtain. If $\mathbf{y} = [y_1, y_2, \ldots, y_p]$ is a vector containing the outputs ($p$ is the number of neurons in the output layer), and $\mathbf{d} = [d_1, d_2, \ldots, d_p]$ is a vector containing the desired response, we can compute the error for example $k$:

$$e_k = \frac{1}{p} \sum_{j=1}^{p} (y_j - d_j)^2$$

If we have $n$ examples, the total error is:

$$e = \frac{1}{n} \sum_{k=1}^{n} e_k$$
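The two error measures translate directly into code. A minimal sketch, with made-up outputs and desired responses for two examples and a single output neuron:

```python
def example_error(y, d):
    """e_k = (1/p) * sum_j (y_j - d_j)^2 for one example with p output neurons."""
    if len(y) != len(d):
        raise ValueError("outputs and desired response must have the same length")
    return sum((yj - dj) ** 2 for yj, dj in zip(y, d)) / len(y)

def total_error(outputs, desired):
    """e = (1/n) * sum_k e_k over the n examples."""
    return sum(example_error(y, d) for y, d in zip(outputs, desired)) / len(outputs)

# Two hypothetical examples, one output neuron each (p = 1, n = 2).
outputs = [[0.9], [0.2]]
desired = [[1.0], [0.0]]
print(total_error(outputs, desired))  # (0.01 + 0.04) / 2, about 0.025
```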

Our goal is to reduce this error, which we do by updating the weights. Thus, in its basic form, a neural network training algorithm is an optimization algorithm that minimizes the error with respect to the network weights.

In 1986, the back-propagation algorithm, the first practical algorithm for multi-layer perceptron training, was presented (Rumelhart and McClelland, 1986). The basic steps of the training are:

- initialize the network weights to small, uniformly distributed random numbers;
- present the examples to the network and compute the outputs;
- compute the error;
- update the weights backward, i.e., starting from the output layer and proceeding layer by layer to the input layer, using the delta rule (Figure 3):

$$\Delta w_{ji} = -\eta \frac{\partial e}{\partial w_{ji}}$$

where $\eta$ is a constant called the learning rate.

Figure 3: Back-propagation of the error.

In practice, we repeat these steps until we are satisfied with the performance of the neural network.
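The training steps can be sketched for a network with one hidden layer and one output neuron. This is an illustrative batch gradient-descent version written for this note, not the EMERGE implementation. It assumes logistic activations everywhere, no bias terms, the error $e$ defined above with $p = 1$, and hypothetical training data. The gradients are obtained by the chain rule, working from the output layer back toward the input layer, and the delta rule is then applied to every weight.

```python
import math
import random

def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(V, w, x):
    """Hidden outputs h_m = f(sum_j V[m][j] x_j); network output y = f(sum_m w[m] h_m)."""
    h = [logistic(sum(v * xj for v, xj in zip(row, x))) for row in V]
    y = logistic(sum(wm * hm for wm, hm in zip(w, h)))
    return h, y

def total_error(V, w, X, D):
    """e = (1/n) sum_k (y_k - d_k)^2 for a single output neuron (p = 1)."""
    return sum((forward(V, w, x)[1] - d) ** 2 for x, d in zip(X, D)) / len(X)

def gradients(V, w, X, D):
    """Back-propagate the error to get de/dV (hidden weights) and de/dw (output weights)."""
    n_hidden, n_in, n = len(V), len(V[0]), len(X)
    gV = [[0.0] * n_in for _ in range(n_hidden)]
    gw = [0.0] * n_hidden
    for x, d in zip(X, D):
        h, y = forward(V, w, x)
        # Output layer first: derivative of (y - d)^2 / n through the logistic.
        delta_out = 2.0 * (y - d) * y * (1.0 - y) / n
        for m in range(n_hidden):
            gw[m] += delta_out * h[m]
            # Then pass the error backward to hidden neuron m.
            delta_h = delta_out * w[m] * h[m] * (1.0 - h[m])
            for j in range(n_in):
                gV[m][j] += delta_h * x[j]
    return gV, gw

def train(X, D, n_hidden=3, eta=0.5, epochs=2000, seed=1):
    rng = random.Random(seed)
    n_in = len(X[0])
    # Step 1: small, uniformly distributed random weights.
    V = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    w = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    for _ in range(epochs):
        # Steps 2-4: present all examples, back-propagate the error,
        # and apply the delta rule: weight <- weight - eta * de/dweight.
        gV, gw = gradients(V, w, X, D)
        w = [wm - eta * g for wm, g in zip(w, gw)]
        V = [[v - eta * g for v, g in zip(row, grow)] for row, grow in zip(V, gV)]
    return V, w

X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
D = [0.1, 0.9, 0.9, 0.9]         # hypothetical targets
V0, w0 = train(X, D, epochs=0)   # the untrained starting weights
V, w = train(X, D)
print(total_error(V0, w0, X, D), total_error(V, w, X, D))  # the error drops
```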

Back-propagation is what numerical analysts call gradient descent, or the steepest descent algorithm. Although the method is capable of reaching a local minimum, it converges slowly. A better optimization method is the conjugate gradient algorithm (Press et al., 1988; Masters, 1993).
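The speed difference is easiest to see on a quadratic error surface, $e(\mathbf{w}) = \tfrac{1}{2}\mathbf{w}^T A \mathbf{w} - \mathbf{b}^T \mathbf{w}$, where the exact minimum along each search direction has a closed form. The sketch below illustrates the two methods; it is not the optimizer used in EMERGE. The matrix $A$, the vector $\mathbf{b}$ and the Fletcher-Reeves choice of $\beta$ are our assumptions, and on a real (non-quadratic) network error surface the conjugate gradient method needs a numerical line search instead (Press et al., 1988).

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(A, v):
    return [dot(row, v) for row in A]

def minimize(A, b, w0, method, tol=1e-10, max_iter=10000):
    """Minimize e(w) = 0.5 w^T A w - b^T w for symmetric positive definite A.
    method is "steepest" or "conjugate"; returns (w, iterations used)."""
    w = list(w0)
    r = [bi - ai for bi, ai in zip(b, matvec(A, w))]  # r = -gradient of e
    d = list(r)                                        # first search direction
    for it in range(max_iter):
        if dot(r, r) ** 0.5 < tol:
            return w, it
        Ad = matvec(A, d)
        alpha = dot(r, d) / dot(d, Ad)  # exact minimum of e along d
        w = [wi + alpha * di for wi, di in zip(w, d)]
        r_new = [ri - alpha * adi for ri, adi in zip(r, Ad)]
        if method == "steepest":
            d = r_new  # always head straight downhill
        else:
            beta = dot(r_new, r_new) / dot(r, r)  # Fletcher-Reeves
            d = [rn + beta * di for rn, di in zip(r_new, d)]
        r = r_new
    return w, max_iter

A = [[1.0, 0.0], [0.0, 25.0]]  # elongated valley (condition number 25)
b = [1.0, 1.0]                 # minimum at w = [1.0, 0.04]
w_sd, it_sd = minimize(A, b, [0.0, 0.0], "steepest")
w_cg, it_cg = minimize(A, b, [0.0, 0.0], "conjugate")
print(it_sd, it_cg)  # steepest descent zigzags for far more iterations
```

In two dimensions the conjugate gradient method reaches the minimum of a quadratic in two steps (up to rounding), whereas steepest descent keeps zigzagging across the narrow valley.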

Eluding local minima: simulated annealing

The conjugate gradient method is extremely efficient in locating the nearest local minimum. However, it cannot escape from the valley of a local minimum to find the global minimum. Figure 4 shows a plot of a simple error function. There are three minima, two of which are local. The starting point for the optimization is marked with the black square. The arrow shows the path of the conjugate gradient: it always goes down the valley, so it cannot jump over the hill and find the global minimum.

Figure 4: The conjugate gradient finds the local minimum.

Generally, the error function is quite complex and contains a number of local minima. So if we are looking for a good training algorithm, we have to find a way to escape from the local minima valleys and locate the global minimum. One possible solution is to use simulated annealing. The concept of simulated annealing is simple: we are sitting at some starting point and we want to move to a more optimal point. To do so, we randomly choose a number of points and calculate the error function value at each of them. The maximum search distance is defined by a parameter called temperature. The point with the minimum function value becomes the center, or starting point, for the next search. Figure 5 is an example of the process. The black arrows show three randomly chosen points. We can see that one of them is within the valley of the global minimum. We can then repeat the process and move down the valley. However, simulated annealing is slower than the conjugate gradient, so a smart approach is to combine both methods. First, we perform simulated annealing to find the valley of the global minimum, and then we use the conjugate gradient to move quickly to the bottom of the valley.

Figure 5: Simulated annealing.
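The search just described can be sketched as follows. It follows the simplified description in the text, where only improving points are accepted; the classical simulated annealing algorithm also accepts some uphill moves with a probability that decreases with temperature. The box-shaped search region (each coordinate within ± temperature of the center), the geometric cooling schedule and all parameter values are assumptions made for the illustration. The test function has a local minimum near w = 2 and the global minimum near w = -2.03, loosely mimicking Figure 4.

```python
import random

def simulated_annealing(f, start, temperature, n_points=20, cooling=0.95,
                        n_steps=200, seed=0):
    """Greedy random search in the spirit of the description above.
    f maps a list of coordinates to an error value."""
    rng = random.Random(seed)
    center, best = list(start), f(start)
    for _ in range(n_steps):
        # Choose points at random within +/- temperature of the center.
        trials = [[c + rng.uniform(-temperature, temperature) for c in center]
                  for _ in range(n_points)]
        candidate = min(trials, key=f)
        value = f(candidate)
        # The lowest point becomes the center of the next search.
        if value < best:
            center, best = candidate, value
        temperature *= cooling  # shrink the search distance (assumed schedule)
    return center, best

def error(w):
    """1-D test surface: local minimum near w = 2, global minimum near w = -2.03."""
    x = w[0]
    return (x * x - 4.0) ** 2 + x

# Start inside the valley of the local minimum.
w_best, e_best = simulated_annealing(error, [2.0], temperature=6.0)
print(w_best, e_best)  # ends in the valley of the global minimum
```

In the hybrid scheme, the point returned here would be handed to the conjugate gradient method for the final descent to the bottom of the valley.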

Overtraining and validation

Theoretically, given enough neurons and iterations, the error on the training set will approach zero. However, this is undesirable, since the neural net will be fitting noise and small details of the individual cases. That often leads to poor prediction on unseen data. This pitfall is called overfitting or overtraining. The problem of overfitting versus generalization is similar to that of fitting a function to known points and then using the function for prediction. If we use a polynomial of high enough order, we may fit the known points exactly (Figure 6). However, if we use a smoother function (the dashed line), the prediction of unknown points is better. The number of neurons in the neural network is analogous to the polynomial degree, i.e. a large number of neurons can lead to overfitting. So, how do we determine the number of neurons?

Figure 6: Overfitting versus generalization.

To solve the problem, we can divide our data into two sets: training and validation. The first is used to train the neural network. Once built, the neural net is applied to the validation data for evaluation.

A possible training scheme:

- divide the data into training and validation sets;
- start the training with a small number of neurons;
- apply the network to the validation data and compute the error;
- add new neurons until no improvement on the validation data is seen.
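The training scheme can be sketched as a simple loop. The callable `validation_error(n)` is a hypothetical stand-in for "train a network with n hidden neurons on the training set and return its error on the validation set"; any of the training procedures discussed earlier could fill that role. The random split and the 30% validation fraction are likewise assumptions, not EMERGE defaults.

```python
import random

def split_data(examples, validation_fraction=0.3, seed=0):
    """Randomly divide the examples into training and validation sets."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]

def choose_hidden_neurons(validation_error, start=1, max_neurons=50):
    """Add hidden neurons one at a time; stop when the validation error stops improving."""
    best_n, best_err = start, validation_error(start)
    for n in range(start + 1, max_neurons + 1):
        err = validation_error(n)
        if err >= best_err:
            break  # no improvement on the validation data
        best_n, best_err = n, err
    return best_n, best_err

# Hypothetical validation errors: they fall, then rise as overfitting sets in.
curve = {1: 0.40, 2: 0.25, 3: 0.18, 4: 0.21, 5: 0.30}
print(choose_hidden_neurons(lambda n: curve[n], max_neurons=5))  # (3, 0.18)
```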

Probabilistic neural network

The basic idea behind the general regression probabilistic neural network is to use a set of one or more measured values, called independent variables, to predict the value of a single dependent variable. Let us denote the independent variables by a vector $\mathbf{x} = [x_1, x_2, \ldots, x_p]$, where $p$ is the number of independent variables. Note that the dependent variable, denoted $y$, is a scalar. The inputs to the neural network are the independent variables, $x_1, x_2, \ldots, x_p$, and the output is the dependent variable, $y$. The goal is to estimate the unknown dependent variable, $y$, at a location where the independent variables are known. This estimation is based on the fundamental equation of the general regression probabilistic neural network:

$$y'(\mathbf{x}) = \frac{\sum_{i=1}^{n} y_i \exp\left(-D(\mathbf{x}, \mathbf{x}_i)\right)}{\sum_{i=1}^{n} \exp\left(-D(\mathbf{x}, \mathbf{x}_i)\right)}$$

where $n$ is the number of examples and $D(\mathbf{x}, \mathbf{x}_i)$ is defined by:

$$D(\mathbf{x}, \mathbf{x}_i) = \sum_{j=1}^{p} \left(\frac{x_j - x_{ij}}{\sigma_j}\right)^2$$

$D(\mathbf{x}, \mathbf{x}_i)$ is the scaled distance between the point we are trying to estimate, $\mathbf{x}$, and the training points, $\mathbf{x}_i$, so each training value $y_i$ is weighted by how close $\mathbf{x}_i$ is to $\mathbf{x}$. The distance is scaled by the quantity $\sigma_j$, called the smoothing parameter, which may be different for each independent variable.
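The prediction formula can be transcribed directly. In this sketch (our own, with made-up training data), the smallest scaled distance is subtracted before exponentiating. This multiplies the numerator and the denominator by the same factor, so the result is unchanged, but it keeps `math.exp` from underflowing to zero when the smoothing parameters are small.

```python
import math

def scaled_distance(x, xi, sigmas):
    """D(x, x_i) = sum_j ((x_j - x_ij) / sigma_j)^2."""
    return sum(((a - b) / s) ** 2 for a, b, s in zip(x, xi, sigmas))

def grnn_predict(x, X_train, y_train, sigmas):
    """y'(x) = sum_i y_i exp(-D_i) / sum_i exp(-D_i)."""
    D = [scaled_distance(x, xi, sigmas) for xi in X_train]
    d_min = min(D)
    weights = [math.exp(-(d - d_min)) for d in D]  # same ratios, no underflow
    return sum(w * y for w, y in zip(weights, y_train)) / sum(weights)

# One independent variable, three hypothetical training points.
X_train = [[0.0], [1.0], [2.0]]
y_train = [10.0, 20.0, 30.0]
print(grnn_predict([1.0], X_train, y_train, [1.0]))  # about 20, by symmetry
print(grnn_predict([0.4], X_train, y_train, [0.1]))  # close to 10: a small sigma favors the nearest point
```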

The actual training of the network consists of determining the optimal set of smoothing parameters, $\sigma_j$. The criterion for optimization is minimization of the validation error. We define the validation result for the $m$-th example as:

$$y'_m(\mathbf{x}_m) = \frac{\sum_{i \neq m}^{n} y_i \exp\left(-D(\mathbf{x}_m, \mathbf{x}_i)\right)}{\sum_{i \neq m}^{n} \exp\left(-D(\mathbf{x}_m, \mathbf{x}_i)\right)}$$

That is, the $m$-th example is left out of the sums, so it is predicted from all the other examples. The predicted value of the $m$-th sample is thus $y'_m$. Since we know the actual value, $y_m$, we can calculate the prediction error:

$$e_m = (y_m - y'_m)^2$$

The total error for the $n$ examples is:

$$e = \sum_{i=1}^{n} (y_i - y'_i)^2$$

The validation error is then minimized with respect to the smoothing parameters using the conjugate gradient algorithm.
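The leave-one-out validation error can be computed by reusing the prediction formula with the m-th example removed. The sketch below repeats a compact `grnn_predict` so that it runs on its own; the data are made up, and the conjugate gradient minimization over the smoothing parameters is not shown.

```python
import math

def grnn_predict(x, X_train, y_train, sigmas):
    """y'(x) from the general regression formula (shifted for numerical safety)."""
    D = [sum(((a - b) / s) ** 2 for a, b, s in zip(x, xi, sigmas)) for xi in X_train]
    d_min = min(D)
    weights = [math.exp(-(d - d_min)) for d in D]
    return sum(w * y for w, y in zip(weights, y_train)) / sum(weights)

def validation_error(X, y, sigmas):
    """e = sum_m (y_m - y'_m)^2, where y'_m uses every example except the m-th."""
    total = 0.0
    for m in range(len(X)):
        X_rest = X[:m] + X[m + 1:]
        y_rest = y[:m] + y[m + 1:]
        total += (y[m] - grnn_predict(X[m], X_rest, y_rest, sigmas)) ** 2
    return total

X = [[0.0], [1.0], [2.0]]
y = [10.0, 20.0, 30.0]
for sigma in (0.5, 1.0, 2.0):
    print(sigma, validation_error(X, y, [sigma]))
```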

Neural networks in EMERGE

Multi-layer feedforward neural network (MLFN)

Figure 7 is a flowchart of the multi-layer feedforward neural network (MLFN) implemented in EMERGE. It combines the global search strategy of simulated annealing (SA) with the powerful minimum-seeking conjugate gradient (CJ) algorithm. Simulated annealing is used in two separate, independent ways. First, it is used in a high-temperature initialization mode, centered at weights of zero, to find a good starting point for CJ minimization. When the CJ algorithm subsequently converges to a local minimum, SA is called into play again. This time, its goal is to escape from what may be a local minimum, and it is centered about the best weights found by the CJ algorithm. SA is called until it cannot find a better point than CJ; this is the so-called loop A. Each pass through loop A is called an iteration. When we exit from loop A, CJ is called again to refine the best weights found in loop A. If we have not reached the number of iterations specified in the MLFN menu, SA from zero is called again, i.e., loop B is executed. The flow stops when the loop A iterations plus the loop B iterations equal the specified number of iterations.

Figure 7: MLFN flowchart.

The number of neurons in the input layer is equal to the number of attributes multiplied by the length of the convolutional operator. There is one neuron in the output layer: the well log sample. The number of neurons in the hidden layer is controlled by the "nodes in hidden layer" parameter.
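As an illustration of why the input layer has (number of attributes) × (operator length) neurons, the sketch below builds one input vector by taking a window of samples from each attribute trace around the target sample. The centered, odd-length window and the function name `input_vector` are assumptions made for this example; the text does not specify how EMERGE positions the operator.

```python
def input_vector(attributes, t, operator_length):
    """Concatenate operator_length samples of every attribute trace, centered on sample t.
    (Centered, odd-length window: an assumption for this illustration.)"""
    if operator_length % 2 != 1:
        raise ValueError("this sketch assumes an odd operator length")
    half = operator_length // 2
    vector = []
    for trace in attributes:
        if t - half < 0 or t + half >= len(trace):
            raise IndexError("the operator window runs off the end of the trace")
        vector.extend(trace[t - half:t + half + 1])
    return vector

# Three hypothetical attribute traces of 10 samples each.
attributes = [[float(i) for i in range(10)],
              [float(i) for i in range(10, 20)],
              [float(i) for i in range(20, 30)]]
x = input_vector(attributes, t=4, operator_length=5)
print(len(x))  # 15 = 3 attributes x 5 samples, i.e. 15 input neurons
```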

MLFN can work in two modes: mapping or classification. In mapping mode, the actual value of the target log is predicted. In classification mode, the network predicts the interval (class) into which the value falls, and each class is represented by a single value. Let us assume a gamma-ray log with readings from 10 API to 130 API. We may divide the log into three classes:

- class 1: 30 API (interval from 10 to 50 API, i.e. sand);
- class 2: 70 API (interval from 50 to 90 API, i.e. sand and shale);
- class 3: 110 API (interval from 90 to 130 API, i.e. shale).
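In classification mode, the training targets must first be mapped to classes. A possible sketch for the gamma-ray example follows. The text does not say how readings that fall exactly on a class boundary are assigned, so sending them to the higher class (and keeping 130 API in class 3) is our assumption.

```python
# (lower bound, upper bound, representative value) in API units, from the example.
GR_CLASSES = [
    (10.0, 50.0, 30.0),    # class 1: sand
    (50.0, 90.0, 70.0),    # class 2: sand and shale
    (90.0, 130.0, 110.0),  # class 3: shale
]

def classify(gr_api):
    """Return (class number, representative API value) for a gamma-ray reading."""
    last = len(GR_CLASSES)
    for number, (low, high, value) in enumerate(GR_CLASSES, start=1):
        # Boundary readings go to the higher class; 130 API stays in class 3.
        if low <= gr_api < high or (number == last and gr_api == high):
            return number, value
    raise ValueError(f"{gr_api} API is outside the 10-130 API range")

print([classify(gr) for gr in (25.0, 50.0, 95.0)])  # [(1, 30.0), (2, 70.0), (3, 110.0)]
```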

Probabilistic neural network

The first step in probabilistic neural network training is to find a good single smoothing parameter (sigma) as a starting point for the conjugate gradient optimization. This is done by testing a number of smoothing parameters ("number of sigmas") within a specified interval ("sigma range"). The best sigma is called the global sigma, and it is used as the starting point for the CJ algorithm. The CJ algorithm then finds the optimum set of sigmas, which is used for prediction.
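The global sigma search can be sketched as a grid search over a single smoothing parameter shared by all independent variables, scored with the leave-one-out validation error defined earlier. The evenly spaced trial values, the helper names and the data are our assumptions; the subsequent conjugate gradient refinement of the individual $\sigma_j$ is not shown.

```python
import math

def grnn_predict(x, X_train, y_train, sigmas):
    D = [sum(((a - b) / s) ** 2 for a, b, s in zip(x, xi, sigmas)) for xi in X_train]
    d_min = min(D)
    weights = [math.exp(-(d - d_min)) for d in D]
    return sum(w * y for w, y in zip(weights, y_train)) / sum(weights)

def validation_error(X, y, sigmas):
    """Leave-one-out error: sum over m of (y_m - y'_m)^2."""
    return sum((y[m] - grnn_predict(X[m], X[:m] + X[m + 1:], y[:m] + y[m + 1:], sigmas)) ** 2
               for m in range(len(X)))

def sigma_grid(sigma_min, sigma_max, n_sigmas):
    """n_sigmas evenly spaced trial values covering the sigma range."""
    if n_sigmas == 1:
        return [sigma_min]
    step = (sigma_max - sigma_min) / (n_sigmas - 1)
    return [sigma_min + k * step for k in range(n_sigmas)]

def global_sigma(X, y, sigma_min, sigma_max, n_sigmas):
    """Return (sigma, error) for the trial sigma with the lowest validation error."""
    p = len(X[0])
    scored = [(s, validation_error(X, y, [s] * p))
              for s in sigma_grid(sigma_min, sigma_max, n_sigmas)]
    return min(scored, key=lambda pair: pair[1])

# Hypothetical data: two independent variables, one dependent variable.
X = [[0.0, 1.0], [1.0, 0.5], [2.0, 0.0], [3.0, 1.5], [4.0, 1.0]]
y = [1.0, 2.0, 3.5, 4.0, 6.0]
sigma, err = global_sigma(X, y, sigma_min=0.1, sigma_max=2.0, n_sigmas=20)
print(sigma, err)  # starting value for optimizing the individual sigmas
```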

References

Haykin, S., 1994, Neural Networks: A Comprehensive Foundation, Prentice Hall.

Huang, Z., Shimeld, J., Williamson, M., and Katsube, J., 1996, Permeability Prediction with Artificial Neural Network Modeling in the Venture Gas Field, Offshore Eastern Canada, Geophysics, vol. 61, p. 422.

Masters, T., 1993, Practical Neural Network Recipes in C++, Academic Press.

Masters, T., 1994, Signal and Image Processing with Neural Networks, John Wiley and Sons.

Masters, T., 1995, Advanced Algorithms for Neural Networks, John Wiley and Sons.

Press, W., Flannery, B., Teukolsky, S., and Vetterling, W., 1988, Numerical Recipes in C, Cambridge University Press.

Rumelhart, D., and McClelland, J., 1986, Parallel Distributed Processing, MIT Press.

Todorov, T., Stewart, R., Hampson, D., and Russell, B., 1998, Well Log Prediction Using Attributes from 3C-3D Seismic Data, Expanded Abstracts, 1998 SEG Annual Meeting.

You might also like

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (842)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5807)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (897)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1091)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Ignacio Bello, Fran Hopf - Intermediate Algebra - McGraw-Hill (2008)Document997 pagesIgnacio Bello, Fran Hopf - Intermediate Algebra - McGraw-Hill (2008)Aca Av100% (1)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- 2020 WMI Final G08 Paper BDocument4 pages2020 WMI Final G08 Paper Bchairunnisa nisa50% (2)

- Maths - MS-JMA01 - 01 - Rms - 20180822 PDFDocument11 pagesMaths - MS-JMA01 - 01 - Rms - 20180822 PDFAmali De Silva67% (3)

- Calculus 1 SyllabusDocument9 pagesCalculus 1 SyllabusEugene A. EstacioNo ratings yet

- IGCSE Math (Worked Answers)Document22 pagesIGCSE Math (Worked Answers)Amnah Riyaz100% (1)

- Power System ContingenciesDocument31 pagesPower System ContingenciesSal ExcelNo ratings yet

- Reactive Power and Voltage Stabilization: David F. TaggartDocument32 pagesReactive Power and Voltage Stabilization: David F. TaggartSal ExcelNo ratings yet

- Ee Gate'13Document16 pagesEe Gate'13menilanjan89nLNo ratings yet

- Application of Particle Swarm Optimization For Economic Load Dispatch and Loss ReductionDocument5 pagesApplication of Particle Swarm Optimization For Economic Load Dispatch and Loss ReductionSal ExcelNo ratings yet

- Linear Quadratic RegulatorDocument52 pagesLinear Quadratic RegulatorSal Excel0% (1)

- HVDC Padiyar Sample CpyDocument15 pagesHVDC Padiyar Sample CpySal Excel60% (5)

- High Voltage Engg r13 Mtech PDFDocument24 pagesHigh Voltage Engg r13 Mtech PDFSal ExcelNo ratings yet

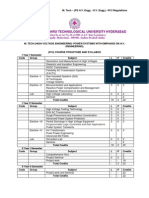

- Revised M Tech r13 RegulationsDocument9 pagesRevised M Tech r13 RegulationsSal ExcelNo ratings yet

- Control Systems r13 MtechDocument24 pagesControl Systems r13 MtechSal ExcelNo ratings yet

- Lesson PlanDocument8 pagesLesson PlanfieqaeqaNo ratings yet

- Factors: How Time and Interest Affect MoneyDocument42 pagesFactors: How Time and Interest Affect MoneyAndrew Tan LeeNo ratings yet

- Vector IntegrationDocument38 pagesVector IntegrationPreetham N KumarNo ratings yet

- Houghton Mifflin Math Expressions Grade 4 Homework and Remembering Volume 2Document5 pagesHoughton Mifflin Math Expressions Grade 4 Homework and Remembering Volume 2afmsjaono100% (1)

- Straight Lines: Various Forms of The Equation of A LineDocument7 pagesStraight Lines: Various Forms of The Equation of A LineSowjanya VemulapalliNo ratings yet

- For StudentDocument79 pagesFor StudentShekhar SharmaNo ratings yet

- AlgebraDocument97 pagesAlgebraHatim ShamsudinNo ratings yet

- Class 10 Mathematics Gist of The LessonDocument2 pagesClass 10 Mathematics Gist of The LessonBinode SarkarNo ratings yet

- 11 Boolean Algebra and Logic CircuitsDocument10 pages11 Boolean Algebra and Logic CircuitsIan BagunasNo ratings yet

- BinetDocument6 pagesBinetraskoj_1No ratings yet

- Solution Outlines For Chapter 7Document5 pagesSolution Outlines For Chapter 7juanNo ratings yet

- Homework 2Document14 pagesHomework 2Anette Wendy Quipo KanchaNo ratings yet

- 6.1 Permutations and Combinations + SolutionDocument36 pages6.1 Permutations and Combinations + SolutionJulianNo ratings yet

- What Is PFDavgDocument11 pagesWhat Is PFDavgKareem RasmyNo ratings yet

- Statics of Rigid Bodies:: CoupleDocument4 pagesStatics of Rigid Bodies:: CoupleLance CastilloNo ratings yet

- Syllabus - Guru Jambheshwar University Mca-5Document34 pagesSyllabus - Guru Jambheshwar University Mca-5binalamitNo ratings yet

- Quantitative Risk Assessment in Geotechnical Engineering: Presented byDocument20 pagesQuantitative Risk Assessment in Geotechnical Engineering: Presented byCarlos RiveraNo ratings yet

- Chapter 6 Homework AssignmentDocument4 pagesChapter 6 Homework AssignmentYesmint HusseinNo ratings yet

- LAS Q3 Weeks 3 To 4Document16 pagesLAS Q3 Weeks 3 To 4Miel GaboniNo ratings yet

- Numerical Analysis - Lecture 2: Mathematical Tripos Part IB: Lent 2010Document2 pagesNumerical Analysis - Lecture 2: Mathematical Tripos Part IB: Lent 2010Anonymous KIUgOYNo ratings yet

- Experiment - 1 AIM: To Determine The Reduced Level of An Object When Base Is Instruments Used: Theodolite, Tape, Leveling Staff, Ranging RodDocument12 pagesExperiment - 1 AIM: To Determine The Reduced Level of An Object When Base Is Instruments Used: Theodolite, Tape, Leveling Staff, Ranging RodSakthi Murugan100% (1)

- Kami Export - 6.10.2abDocument1 pageKami Export - 6.10.2abDoggyGirl5554]No ratings yet

- Qa Number Systems WB PDFDocument11 pagesQa Number Systems WB PDFparimalaseenivasanNo ratings yet

- Quadratic Equation PDF Set 2Document33 pagesQuadratic Equation PDF Set 2Niraj TiwariNo ratings yet

- LogDocument12 pagesLogJonalyn C.SallesNo ratings yet