
Bisseccao PDF

Uploaded by

RenatoMatias


Solving a Nonlinear Equation

Problem: Given f(x), find a root (solution) of f(x) = 0.

If f is a polynomial of degree 4 or less, formulas for the roots exist.

If f is a polynomial of degree larger than 4, no general finite formula in radicals exists (proved by Abel in 1824).

If f is a general nonlinear function, no formula exists in general.

So we must be satisfied with a method that only computes approximate roots.

Iterative Methods

Since no formula exists for roots of f(x) = 0, iterative methods will be used to compute approximate roots.

Iterative methods construct a sequence of numbers $x_1, x_2, \ldots, x_n, x_{n+1}, \ldots$ that converge to a root of f(x) = 0.

3 major issues with implementation of an iterative method:

Where to start the iteration?

Does the iteration converge, and how fast?

When to terminate the iteration?

Three iterative methods will be introduced in this course:

The bisection method

Newton's method

The secant method


Bisection Method: Idea

Fact:

If f(x) is continuous on [a, b] and f(a)f(b) < 0, then there exists r in (a, b) such that f(r) = 0.

Idea:

The fact can be used to produce a sequence of ever-smaller intervals, each of which brackets a root of f(x) = 0:

Let c = (a + b)/2 (midpoint of [a, b]). Compute f(c).

If f(c) = 0, c is a root.

If f(a)f(c) < 0, a root exists in [a, c].

If f(c)f(b) < 0, a root exists in [c, b].

In either case, the interval is half as long as the initial interval. The halving process can continue until the current interval is shorter than a given tolerance $\delta$.

Bisection Method: Algorithm

Algorithm. Given f, a, b and $\delta$, and suppose f(a)f(b) < 0:

    c ← (a + b)/2
    error bound ← |b − a|/2
    while error bound > δ
        if f(c) = 0, then c is a root, stop.
        else
            if f(a)f(c) < 0, then
                b ← c
            else
                a ← c
            end
        end
        c ← (a + b)/2
        error bound ← error bound/2
    end
    root ← c

When implementing this algorithm, avoid recomputing function values, and use sign[f(a)] sign[f(c)] < 0 instead of f(a)f(c) < 0 to avoid overflow and underflow.
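The advice about multiplying signs rather than function values can be illustrated with a minimal sketch. The magnitudes 1e-200 and 1e200 are assumptions chosen for illustration (they are not from the notes); any values whose product leaves the double-precision range would behave the same way.

```matlab
% Hypothetical function values of opposite sign but tiny magnitude.
fa = -1e-200;
fc =  1e-200;

% The raw product underflows to zero in double precision,
% so the test f(a)*f(c) < 0 wrongly reports "no sign change".
raw_test  = (fa*fc < 0);

% Multiplying the signs (-1, 0 or +1) can neither underflow nor overflow.
sign_test = (sign(fa)*sign(fc) < 0);

% Hypothetical huge values: the raw product overflows to -Inf.
ga = -1e200;
gc =  1e200;
raw_big  = ga*gc;              % -Inf
sign_big = sign(ga)*sign(gc);  % -1

fprintf('raw_test = %d, sign_test = %d\n', double(raw_test), double(sign_test));
fprintf('raw_big = %g, sign_big = %d\n', raw_big, sign_big);
```

In the underflow case the raw product silently hides the sign change, which is the failure the sign-based test is meant to avoid.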


Bisection Method: Matlab Code

function root = bisection(fname,a,b,delta,display)
% The bisection method.
%
%input:  fname is a string that names the function f(x)
%        a and b define an interval [a,b]
%        delta is the tolerance
%        display = 1 if step-by-step display is desired,
%                = 0 otherwise
%output: root is the computed root of f(x)=0
%
fa = feval(fname,a);
fb = feval(fname,b);
if sign(fa)*sign(fb) > 0
    disp('function has the same sign at a and b')
    root = [];   % no bracket: return empty instead of leaving root unassigned
    return
end
if fa == 0
    root = a;
    return
end
if fb == 0
    root = b;
    return
end
c = (a+b)/2;
fc = feval(fname,c);
e_bound = abs(b-a)/2;
if display
    disp(' ');
    disp('       a         b         c       f(c)   error_bound');
    disp(' ');
    disp([a b c fc e_bound])
end
while e_bound > delta
    if fc == 0
        root = c;
        return
    end
    if sign(fa)*sign(fc) < 0   % a root exists in [a,c].
        b = c;
        fb = fc;
    else                       % a root exists in [c,b].
        a = c;
        fa = fc;
    end
    c = (a+b)/2;
    fc = feval(fname,c);
    e_bound = e_bound/2;
    if display, disp([a b c fc e_bound]), end
end
root = c;

Convergence and Efficiency of the BM

Suppose the initial interval is $[a, b] \equiv [a_0, b_0]$ and r is a root. At the beginning (n = 0),

$$c_0 = \tfrac{1}{2}(a_0 + b_0), \qquad |r - c_0| \le \tfrac{1}{2}|b_0 - a_0|.$$

After n steps, we get the interval $[a_n, b_n]$ with $c_n = \tfrac{1}{2}(a_n + b_n)$ and

$$|b_n - a_n| = \tfrac{1}{2}|b_{n-1} - a_{n-1}|, \qquad |r - c_n| \le \tfrac{1}{2}|b_n - a_n|.$$

Therefore we have

$$|r - c_n| \le \frac{1}{2^2}|b_{n-1} - a_{n-1}| = \cdots = \frac{1}{2^{n+1}}|b - a|, \qquad \lim_{n\to\infty} c_n = r.$$

Q. How many steps are required to ensure $|r - c_n| \le \delta$ for a general continuous function?

To ensure $|r - c_n| \le \delta$, we require $\frac{1}{2^{n+1}}|b - a| \le \delta$. From this we obtain

$$n \ge \log_2\frac{|b - a|}{\delta} - 1.$$

So $\lceil \log_2(|b - a|/\delta) - 1 \rceil$ steps are needed.
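As a quick check of the step-count bound, the sketch below compares the predicted number of steps with the number of halvings a bisection loop actually performs. The test function x^2 - 2, the interval [1, 2] and the tolerance 1e-6 are assumed example values, and the variable names n_pred and n_done are introduced here for illustration only.

```matlab
% Assumed example values (not from the notes): f(x) = x^2 - 2 on [1, 2].
f     = @(x) x.^2 - 2;
a     = 1;
b     = 2;
delta = 1e-6;

% Smallest integer n with |b - a| / 2^(n+1) <= delta.
n_pred = max(0, ceil(log2(abs(b - a)/delta) - 1));

% Count the halvings the bisection loop actually performs.
fa = f(a);
c  = (a + b)/2;
fc = f(c);
e_bound = abs(b - a)/2;
n_done  = 0;
while e_bound > delta
    if fc == 0, break, end
    if sign(fa)*sign(fc) < 0
        b = c;              % a root exists in [a,c]
    else
        a = c;  fa = fc;    % a root exists in [c,b]
    end
    c  = (a + b)/2;
    fc = f(c);
    e_bound = e_bound/2;
    n_done  = n_done + 1;
end

fprintf('predicted steps = %d, performed steps = %d\n', n_pred, n_done);
fprintf('|sqrt(2) - c| = %.3e (tolerance %.1e)\n', abs(sqrt(2) - c), delta);
```

The count depends only on |b - a| and the tolerance, not on f, which is why the bound holds for any continuous function with a sign change on [a, b].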

Def. Linear convergence (LC):

A sequence $\{x_n\}$ is said to have LC to a limit x if

$$\lim_{n\to\infty} \frac{|x_{n+1} - x|}{|x_n - x|} = c, \qquad 0 < c < 1.$$

Obviously the right-hand side of $|r - c_n| \le \frac{1}{2^{n+1}}|b - a|$ has LC to zero (with c = 1/2) as $n \to \infty$. So we usually say the bisection method has (at least) LC.


Note: The bisection method uses sign information only. Given an interval in which

a root lies, it maintains a guaranteed interval, but is slow to converge. If we use

more information, such as values, or derivatives, we can get faster convergence.

Newton's Method

Idea:

Given a point $x_0$. Taylor series expansion about $x_0$:

$$f(x) = f(x_0) + f'(x_0)(x - x_0) + \frac{f''(z)}{2}(x - x_0)^2.$$

We can use $l(x) \equiv f(x_0) + f'(x_0)(x - x_0)$ as an approximation to f(x). In order to solve f(x) = 0, we solve l(x) = 0, which gives the solution

$$x_1 = x_0 - f(x_0)/f'(x_0).$$

So $x_1$ can be regarded as an approximate root of f(x) = 0. If this $x_1$ is not a good approximate root, we continue this iteration. In general we have the Newton iteration:

$$x_{n+1} = x_n - f(x_n)/f'(x_n), \qquad n = 0, 1, \ldots.$$

Here we assume f(x) is differentiable and $f'(x_n) \ne 0$.

Understand Newton's Method Geometrically

[Figure: the graph of f with the tangent line L at the point (x, f(x)); L crosses the x-axis at $x_{new}$.]

The line L is tangent to f at (x, f(x)), and so has slope $f'(x)$.

The slope of the line L is $f(x)/(x - x_{new})$, so:

$$f'(x) = \frac{f(x)}{x - x_{new}},$$

and consequently

$$x_{new} = x - \frac{f(x)}{f'(x)}.$$

This is just the Newton iteration.

Newton's Method: Algorithm

Stopping criteria

$|x_{n+1} - x_n| \le$ xtol, or

$|f(x_{n+1})| \le$ ftol, or

The maximum number of iterations is reached.

Algorithm. Given f, f', x (initial point), xtol, ftol, $n_{\max}$ (the maximum number of iterations):

    for n = 1 : n_max
        d ← f(x)/f'(x)
        x ← x − d
        if |d| ≤ xtol or |f(x)| ≤ ftol, then
            root ← x
            stop
        end
    end
    root ← x


Newton's Method: Matlab Code

function root=newton(fname,fdname,x,xtol,ftol,n_max,display)
% Newton's method.
%
%input:
%  fname    string that names the function f(x).
%  fdname   string that names the derivative f'(x).
%  x        the initial point
%  xtol and ftol   termination tolerances
%  n_max    the maximum number of iterations
%  display = 1 if step-by-step display is desired,
%          = 0 otherwise
%output: root is the computed root of f(x)=0
%
n = 0;
fx = feval(fname,x);
if display
    disp('   n             x                    f(x)')
    disp('------------------------------------------------')
    disp(sprintf('%4d %23.15e %23.15e', n, x, fx))
end
if abs(fx) <= ftol      % the residual is tested against ftol, not xtol
    root = x;
    return
end
for n = 1:n_max
    fdx = feval(fdname,x);
    d = fx/fdx;
    x = x - d;
    fx = feval(fname,x);
    if display
        disp(sprintf('%4d %23.15e %23.15e',n,x,fx))
    end
    if abs(d) <= xtol || abs(fx) <= ftol
        root = x;
        return
    end
end
root = x;   % n_max reached: return the last iterate, as in the algorithm

The function f(x) and its derivative f'(x) are implemented as Matlab M-files, for example

% M-file f.m
function y = f(x)
y = x^2 - 2;

% M-file fd.m
function y = fd(x)
y = 2*x;

Newton on $x^2 - 2$.

>> root=newton('f','fd',2,1.e-12,1.e-12,20,1)

n x f(x)

------------------------------------------------

0 2.000000000000000e+00 2.000000000000000e+00

1 1.500000000000000e+00 2.500000000000000e-01

2 1.416666666666667e+00 6.944444444444642e-03

3 1.414215686274510e+00 6.007304882871267e-06

4 1.414213562374690e+00 4.510614104447086e-12

5 1.414213562373095e+00 4.440892098500626e-16

root =

1.414213562373095e+00

Double precision accuracy in only 5 steps!

Steps 2, 3, 4: $|f(x)| \approx 10^{-3}$, $|f(x)| \approx 10^{-6}$, $|f(x)| \approx 10^{-12}$.

We say $f(x) \to 0$ with quadratic convergence:

once |f(x)| is small, it is roughly squared, and thus much smaller, at the next step.

In step 4, x is accurate to about 12 decimal digits. About 6 decimal digits at the

previous step, and about 3 decimal digits at the step before that.

The number of accurate digits of x is approximately doubled at each step. We say x

converges to the root with quadratic convergence.
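The doubling of accurate digits can be checked numerically with a minimal sketch: it repeats the first four Newton steps of the run on x^2 - 2 and prints the ratio of each error to the square of the previous one. The exact root sqrt(2) is used only to measure the error, and the names err and ratio are introduced here for illustration.

```matlab
% Newton on f(x) = x^2 - 2 from x0 = 2, using function handles
% in place of the M-files f.m and fd.m.
f  = @(x) x.^2 - 2;
fd = @(x) 2*x;
r  = sqrt(2);          % exact root, used only to measure the error

x = 2;
err = zeros(1, 5);
err(1) = abs(x - r);
for n = 1:4            % stop at step 4: later errors are at rounding level
    x = x - f(x)/fd(x);
    err(n+1) = abs(x - r);
end

% For quadratic convergence, err(n+1)/err(n)^2 approaches a constant.
ratio = err(2:end) ./ err(1:end-1).^2;
disp(err)
disp(ratio)
```

For this function the ratios settle near 1/(2*sqrt(2)), roughly 0.354, so each error is about 0.35 times the square of the previous one.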

Failure of Newton's Method

Newton's method does not always work well. It may not converge.

If $f'(x_n) = 0$ the method is not defined.

If $f'(x_n) \approx 0$ then there may be difficulties. The new estimate $x_{n+1}$ may be a much worse approximation to the solution than $x_n$ is.


Convergence Analysis of Newton's Method

Def. Quadratic convergence (QC).

A sequence $\{x_n\}$ is said to have QC to a limit x if

$$\lim_{n\to\infty} \frac{|x_{n+1} - x|}{|x_n - x|^2} = c,$$

where c is some finite non-zero constant.

Newton iteration:

$$x_{n+1} = x_n - f(x_n)/f'(x_n).$$

Suppose $x_n$ converges to a root r of f(x) = 0.

Taylor series expansion about $x_n$ is

$$f(r) = f(x_n) + (r - x_n) f'(x_n) + \frac{(r - x_n)^2}{2} f''(z_n),$$

where $z_n$ lies between $x_n$ and r.

But f(r) = 0, and dividing by $f'(x_n)$ gives

$$0 = \frac{f(x_n)}{f'(x_n)} + (r - x_n) + (r - x_n)^2 \frac{f''(z_n)}{2 f'(x_n)}.$$

The 1st term on the RHS is $x_n - x_{n+1}$, so

$$r - x_{n+1} = c_n (r - x_n)^2, \qquad c_n \equiv -\frac{f''(z_n)}{2 f'(x_n)}.$$

Writing the error $e_n = r - x_n$, we see

$$e_{n+1} = c_n (e_n)^2.$$

Since $x_n \to r$, and $z_n$ is between $x_n$ and r, we see $z_n \to r$ and so

$$\lim_{n\to\infty} \frac{|e_{n+1}|}{|e_n|^2} = \lim_{n\to\infty} |c_n| = \frac{|f''(r)|}{|2 f'(r)|} \equiv c,$$

assuming that $f'(r) \ne 0$.

So the convergence rate of Newton's method is usually quadratic. At the (n + 1)-th step, the new error is equal to $c_n$ times the square of the old error.

Also, one can show that $f(x_n)$ usually converges to 0 quadratically.
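A short sketch of why the residual behaves this way, assuming additionally that f'' is continuous near r and that $f'(x_n) \ne 0$ and $f(x_n) \ne 0$ along the iteration. The intermediate point $w_n$ is introduced here for illustration and does not appear in the notes. Expanding f about $x_n$ and evaluating at $x_{n+1}$:

```latex
\begin{aligned}
f(x_{n+1}) &= f(x_n) + f'(x_n)(x_{n+1} - x_n) + \tfrac{1}{2} f''(w_n)(x_{n+1} - x_n)^2,
  \quad w_n \text{ between } x_n \text{ and } x_{n+1}, \\
           &= \tfrac{1}{2} f''(w_n) \left( \frac{f(x_n)}{f'(x_n)} \right)^{2}
  \quad \text{since } x_{n+1} - x_n = -\frac{f(x_n)}{f'(x_n)}, \\
\lim_{n\to\infty} \frac{|f(x_{n+1})|}{|f(x_n)|^{2}} &= \frac{|f''(r)|}{2\,|f'(r)|^{2}}
  \quad \text{if } f'(r) \ne 0 .
\end{aligned}
```

For f(x) = x^2 - 2 this limit is 2/(2 · 8) = 0.125, which agrees with the run on x^2 - 2: 6.007e-06 / (6.944e-03)^2 is roughly 0.125.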

Q. Under which condition does $x_n \to r$?

It can be shown that if $f''(x)$ is continuous in a neighborhood of r, a root of f(x) = 0, $f'(r) \ne 0$, and if the initial point $x_0$ is close enough to r, then $x_n \to r$. (For a rigorous proof, see C&K, pp. 94-95.)


Remarks:

If $f'(r) \ne 0$ but $f''(r) = 0$, then

$$\lim_{n\to\infty} |e_{n+1}/e_n^2| = 0.$$

NM then converges faster than quadratically.

e.g., f(x) = sin(x), $r = \pi$.

Try newton('f','fd',4,1.e-12,1.e-12,20,1)

If $f'(r) = 0$, then we can show NM has a linear convergence rate.

e.g., $f(x) = (x - 1)^2$, r = 1.

Try newton('f','fd',1.5,1.e-12,1.e-12,20,1)

For this specific function, try to show NM has a linear convergence rate.

If the initial point is not close to the root r, NM may not converge.

e.g., f(x) = arctan(x), r = 0, $x_0 = 1.5$.

Try newton('f','fd',1.5,1.e-12,1.e-12,20,1)
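The three remarks can be tried side by side with a minimal sketch that runs five plain Newton steps on each example and records the errors. It uses function handles instead of the M-files f.m and fd.m, and the names fs, ds, errs and n_steps are introduced here for illustration only.

```matlab
% Three test problems from the remarks.
f1 = @(x) sin(x);       d1 = @(x) cos(x);          % simple root pi, f''(pi) = 0
f2 = @(x) (x - 1).^2;   d2 = @(x) 2*(x - 1);       % double root 1, f'(1) = 0
f3 = @(x) atan(x);      d3 = @(x) 1./(1 + x.^2);   % root 0, start too far away
fs = {f1, f2, f3};
ds = {d1, d2, d3};
x0 = [4, 1.5, 1.5];     % starting points used in the remarks
r  = [pi, 1, 0];        % known roots, used only to measure the error

n_steps = 5;
errs = zeros(3, n_steps + 1);
for k = 1:3
    f  = fs{k};
    fd = ds{k};
    x  = x0(k);
    errs(k, 1) = abs(x - r(k));
    for n = 1:n_steps
        x = x - f(x)/fd(x);              % one Newton step
        errs(k, n + 1) = abs(x - r(k));
    end
end

disp(errs(1, :))   % sin(x):   errors collapse faster than quadratically
disp(errs(2, :))   % (x-1)^2:  each error is half the previous one
disp(errs(3, :))   % atan(x):  errors grow, the iteration diverges
```

For (x - 1)^2 the Newton step simplifies to x_new = (x + 1)/2, so the error is exactly halved each time, which is linear convergence with c = 1/2.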

The Secant Method

Idea:

Newton iteration: $x_{n+1} = x_n - f(x_n)/f'(x_n)$.

Problem with Newton's method: it requires software for the derivative $f'(x)$, which is in many instances difficult or impossible to encode.

Q: How to get around the problem?

Using the divided difference $\frac{f(x_n) - f(x_{n-1})}{x_n - x_{n-1}}$ to replace $f'(x_n)$, we get the secant iteration:

$$x_{n+1} = x_n - \frac{x_n - x_{n-1}}{f(x_n) - f(x_{n-1})} f(x_n).$$

This formula can be understood geometrically:

Draw a secant line which connects the two points $(x_{n-1}, f(x_{n-1}))$ and $(x_n, f(x_n))$ on the graph of y = f(x). The point where the secant line crosses the x-axis is exactly the $x_{n+1}$ defined by the secant iteration formula.


Algorithm. Given f, $x_0$, $x_1$ (two initial points), xtol, ftol, $n_{\max}$:

    fx0 ← f(x0)
    fx1 ← f(x1)
    for n = 1 : n_max
        d ← (x1 − x0)/(fx1 − fx0) · fx1
        x0 ← x1
        fx0 ← fx1
        x1 ← x1 − d
        fx1 ← f(x1)
        if |d| ≤ xtol or |fx1| ≤ ftol, then
            root ← x1
            return
        end
    end
    root ← x1
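The notes give Matlab listings for bisection and Newton but not for the secant method, so here is a minimal sketch of the secant algorithm in the same style. It is written as a script with a function handle rather than as an M-file; the test function x^2 - 2, the starting points 1 and 2, the tolerances, the variable n_used and the guard against a horizontal secant line are all assumptions added for illustration.

```matlab
% Secant iteration for f(x) = x^2 - 2 with assumed starting points 1 and 2.
f     = @(x) x.^2 - 2;
x0    = 1;
x1    = 2;
xtol  = 1e-12;
ftol  = 1e-12;
n_max = 20;

fx0 = f(x0);
fx1 = f(x1);
n_used = n_max;
for n = 1:n_max
    if fx1 == fx0
        % The secant line is horizontal: the step is undefined.
        n_used = n - 1;
        break
    end
    d   = (x1 - x0)/(fx1 - fx0)*fx1;
    x0  = x1;
    fx0 = fx1;
    x1  = x1 - d;
    fx1 = f(x1);        % the only new function evaluation in this step
    fprintf('%4d %23.15e %23.15e\n', n, x1, fx1);
    if abs(d) <= xtol || abs(fx1) <= ftol
        n_used = n;
        break
    end
end
root = x1;
```

Each pass through the loop costs one new evaluation of f and no derivative, which is the trade-off against Newton's method.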


Convergence of the Secant Method

If $x_n$ converges to a root r of f(x) = 0, we can show (with some difficulty) that

$$\lim_{n\to\infty} \frac{|x_{n+1} - r|}{|x_n - r|^p} = c,$$

where $p = (1 + \sqrt{5})/2 \approx 1.618$. So the secant method converges super-linearly.

The secant method may not converge for much the same reason that Newton's method may not converge. For example, the method is not defined if $f(x_n) = f(x_{n-1})$.

Comparison of the Three Methods

The Bisection Method (BM):

Advantages: simple, robust (guaranteed to converge), applicable to nonsmooth functions.

Disadvantages: generally slower than NM and SM, with only linear convergence.

Newton's Method (NM):

Advantages: generally faster than BM and SM, with quadratic convergence.

Disadvantages: needs to compute f', may not converge.

The Secant Method (SM):

Advantages: generally faster than BM, with super-linear convergence; no need to compute f'.

Disadvantages: slower than NM, may not converge.

Two MATLAB Commands

There are two Matlab built-in functions:

roots for finding all roots of a polynomial.

fzero for finding a root of a general function.

Check Matlab to see how to use these two functions.
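As a starting point, here is a minimal usage sketch of both built-in functions on the running example x^2 - 2. The polynomial and the bracketing interval [1, 2] are assumed example inputs; the variable names are introduced for illustration.

```matlab
% roots takes the coefficient vector, highest power first: x^2 + 0*x - 2.
p = [1 0 -2];
all_roots = roots(p);

% fzero takes a function handle and either a starting point or an
% interval on which the function changes sign.
g = @(x) x.^2 - 2;
one_root = fzero(g, [1 2]);

disp(all_roots)
disp(one_root)
```

roots returns both square roots of 2 (positive and negative), while fzero returns only the root inside the supplied interval.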

12

You might also like

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Econometrics Chapter ThreeDocument70 pagesEconometrics Chapter ThreeDenaw AgimasNo ratings yet

- CNN CodeDocument6 pagesCNN CodeSudhanNo ratings yet

- Radial Basis Function Neural Network RBFNNDocument14 pagesRadial Basis Function Neural Network RBFNNClash With GROUDONNo ratings yet

- Moving Average FilterDocument5 pagesMoving Average FilterMhbackup 001No ratings yet

- Unit 2.lesson 4 Numeric and Alphanumeric CodesDocument61 pagesUnit 2.lesson 4 Numeric and Alphanumeric CodesAngelo JenelleNo ratings yet

- Solving ODE-BVP Using The Galerkin's MethodDocument14 pagesSolving ODE-BVP Using The Galerkin's MethodSuddhasheel Basabi GhoshNo ratings yet

- Department of Electronics and Communication Engineering: Subject: Vlsi Signal ProcessingDocument8 pagesDepartment of Electronics and Communication Engineering: Subject: Vlsi Signal ProcessingRaja PirianNo ratings yet

- Visual Categorization With Bags of KeypointsDocument17 pagesVisual Categorization With Bags of Keypoints6688558855No ratings yet

- Motion Control ReportDocument52 pagesMotion Control ReportJuan Camilo Pérez Muñoz100% (1)

- Quiz2 Sec C SolutionDocument2 pagesQuiz2 Sec C SolutionHuzaifa MuhammadNo ratings yet

- Data Structure Assignment - 4Document9 pagesData Structure Assignment - 4Ravi Raj100% (1)

- Spreadsheet Modeling and Decision Analysis A Practical Introduction To Business Analytics 8th Edition Ragsdale Solutions Manual DownloadDocument16 pagesSpreadsheet Modeling and Decision Analysis A Practical Introduction To Business Analytics 8th Edition Ragsdale Solutions Manual DownloadJulia Garner100% (21)

- New Fem Assign FinalDocument13 pagesNew Fem Assign FinalbasantvitsNo ratings yet

- Least-Norm Solutions of Undetermined Equations: EE263 Autumn 2007-08 Stephen BoydDocument15 pagesLeast-Norm Solutions of Undetermined Equations: EE263 Autumn 2007-08 Stephen BoydRedion XhepaNo ratings yet

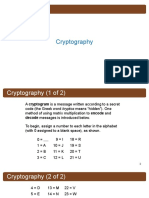

- CryptographyDocument10 pagesCryptographyKarylle AquinoNo ratings yet

- DSP Attainment 2020-2021.Xlsx Send - XLSX TestDocument41 pagesDSP Attainment 2020-2021.Xlsx Send - XLSX TestManjula BMNo ratings yet

- 50 Years of Integer Programming 1958-2008 PDFDocument811 pages50 Years of Integer Programming 1958-2008 PDFJeff Gurguri100% (3)

- Massachusetts Institute of Technology Department of Electrical Engineering and Computer Science 6.341 Discrete-Time Signal Processing Fall 2005Document24 pagesMassachusetts Institute of Technology Department of Electrical Engineering and Computer Science 6.341 Discrete-Time Signal Processing Fall 2005Nguyen Duc TaiNo ratings yet

- Deadlock PPT OsDocument11 pagesDeadlock PPT OsUrvashi BhardwajNo ratings yet

- Senaris2019 SitisundariDocument11 pagesSenaris2019 SitisundariRyan msNo ratings yet

- Linear Programming: Mcgraw-Hill/IrwinDocument20 pagesLinear Programming: Mcgraw-Hill/IrwinSagar MurtyNo ratings yet

- Slides Exercise Session 6Document23 pagesSlides Exercise Session 6khoiNo ratings yet

- Stack Applications in Data StructureDocument22 pagesStack Applications in Data StructureSaddam Hussain75% (4)

- Scan Converting CircleDocument36 pagesScan Converting CircleKashish KansalNo ratings yet

- Section 6 IntegrationDocument16 pagesSection 6 IntegrationpraveshNo ratings yet

- Cyclotomic Irreducibility Several ProofsDocument8 pagesCyclotomic Irreducibility Several ProofsjamintooNo ratings yet

- 1 Simplex MethodDocument17 pages1 Simplex MethodPATRICIA COLINANo ratings yet

- Module 5 Decision Tree Part2Document47 pagesModule 5 Decision Tree Part2Shrimohan TripathiNo ratings yet

- Chapter 24: Single-Source Shortest Paths: GivenDocument48 pagesChapter 24: Single-Source Shortest Paths: GivenNaveen KumarNo ratings yet

- Pdf&rendition 1Document4 pagesPdf&rendition 1nvbnvbnvNo ratings yet