A HYBRID CLUSTERING ALGORITHM FOR DATA MINING

Ravindra Jain

School of Computer Science & IT, Devi Ahilya Vishwavidyalaya, Indore, India

ravindra.jain57@gmail.com

ABSTRACT

Data clustering is the process of arranging similar data into groups. A clustering algorithm partitions a data set into several groups such that the similarity within a group is greater than the similarity among groups. This paper describes a hybrid clustering algorithm based on K-mean and K-harmonic mean (KHM). The research focuses on fast and accurate clustering. The proposed algorithm is tested on five different datasets, and its performance is compared with that of the traditional K-mean and KHM algorithms. The results obtained from the proposed hybrid algorithm are better than those of the traditional K-mean and KHM algorithms.

KEYWORDS

Clustering Algorithm; K-harmonic Mean; K-mean; Hybrid clustering;

1. INTRODUCTION

The field of data mining and knowledge discovery is emerging as a new, fundamental research area with important applications to science, engineering, medicine, business, and education. Data mining attempts to formulate, analyze and implement basic induction processes that facilitate the extraction of meaningful information and knowledge from unstructured data [1]. Databases in scientific and commercial applications are huge: the number of records in a dataset can vary from a few thousand to thousands of millions [2]. Clustering is a class of data mining task in which algorithms are applied to discover interesting data distributions in the underlying data space. The formation of clusters is based on the principle of maximizing the similarity between patterns belonging to the same cluster and minimizing the similarity between patterns belonging to distinct clusters. Similarity or proximity is usually defined as a distance function on pairs of patterns, based on the values of the features of these patterns [3][4]. A wide variety of clustering algorithms have emerged recently. Different starting points and criteria usually lead to different taxonomies of clustering algorithms [5].

At present, the commonly used partitional clustering methods are K-Means (KM) [6], K-Harmonic Means (KHM) [7], Fuzzy C-Means (FCM) [8][9], Spectral Clustering (SPC) [10][11] and several other methods that build on the above-mentioned methods [12]. The KM algorithm is a popular partitional algorithm. It is an iterative hill-climbing algorithm, and the solution obtained depends on the initial clustering. Although the K-means algorithm has been successfully applied to many practical clustering problems, it has been shown that the algorithm may fail to converge to a global minimum under certain conditions [13]. The main problem of the K-means algorithm is that it tends to perform worse when the data are organized in more complex and unknown shapes [14].
Similarly, KHM is a novel center-based clustering algorithm which uses the harmonic averages of the distances from each data point to the centers as components of its performance function [7]. It has been demonstrated that KHM is essentially insensitive to the initialization of the centers. In certain cases, KHM significantly improves the quality of clustering results, and it is also suitable for large-scale data sets [5]. However, existing clustering algorithms require multiple iterations (scans) over the dataset to achieve convergence [15], and most of them are sensitive to initial conditions, for example the K-Means and ISO-Data algorithms [16][17]. This paper proposes a new hybrid algorithm based on the K-Mean and K-Harmonic Mean approaches.

The remainder of this paper is organized as follows. Section 2 reviews the K-Means algorithm and the K-Harmonic Means algorithm. In Section 3, the proposed K-Means and K-Harmonic Means based hybrid clustering algorithm is presented. Section 4 provides the simulation results for illustration, followed by concluding remarks in Section 5.

David C. Wyld, et al. (Eds): CCSEA, SEA, CLOUD, DKMP, CS & IT 05, pp. 387-393, 2012. CS & IT-CSCP 2012 DOI : 10.5121/csit.2012.2239

2. ALGORITHM REVIEWS

This section gives a brief introduction to the K-Means and K-Harmonic Means clustering algorithms to pave the way for the proposed hybrid clustering algorithm.

2.1. K-Means Algorithm

The K-means algorithm is a distance-based clustering algorithm that partitions the data into a predetermined number of clusters. It works only with numerical attributes. Distance-based algorithms rely on a distance metric (function) to measure the similarity between data points. The distance metric is either Euclidean, Cosine, or Fast Cosine distance. Data points are assigned to the nearest cluster according to the distance metric used. Figure 1 gives the K-Mean algorithm.

1. begin
2. initialize N, K, C1, C2, . . . , CK;
   where N is the size of the data set, K is the number of clusters, and C1, C2, . . . , CK are the cluster centers.
3. do
      assign the N data points to the closest Ci;
      recompute C1, C2, . . . , CK using the Simple Mean function;
   until no change in C1, C2, . . . , CK;
4. return C1, C2, . . . , CK;
5. end

Figure 1. K-means Algorithm
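The loop in Figure 1 can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in the paper: it assumes one-dimensional numeric data, absolute difference as the distance, caller-supplied initial centers, and a hypothetical `max_iter` safety cap that does not appear in the figure.

```python
def k_means(points, centers, max_iter=100):
    """Cluster 1-D points around the given initial centers.

    Returns (centers, labels). max_iter is a safety cap added for
    this sketch; Figure 1 has no such limit.
    """
    centers = list(centers)
    labels = []
    for _ in range(max_iter):
        # Assignment step: each point goes to its closest center.
        labels = [min(range(len(centers)), key=lambda k: abs(x - centers[k]))
                  for x in points]
        # Update step: each center becomes the simple mean of its members.
        new_centers = []
        for k, old in enumerate(centers):
            members = [x for x, lab in zip(points, labels) if lab == k]
            # Assumption: an empty cluster keeps its previous center.
            new_centers.append(sum(members) / len(members) if members else old)
        if new_centers == centers:  # "until no change in C1, ..., CK"
            break
        centers = new_centers
    return centers, labels


centers, labels = k_means([1.0, 2.0, 3.0, 10.0, 11.0, 12.0], [1.0, 12.0])
print(centers, labels)
```

Because the result depends on the initial centers, running the sketch with different starting values can yield different partitions, which is the sensitivity discussed in the introduction.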

2.2. K-Harmonic Means Algorithm

The K-Harmonic Means algorithm is a center-based, iterative algorithm that refines the clusters defined by K centers [7]. KHM takes the harmonic averages of the squared distances from a data point to all centers as its performance function. The harmonic average of K numbers is defined as the reciprocal of the arithmetic average of the reciprocals of the numbers in the set.

$$
\mathrm{HA}(\{a_i \mid i = 1, \ldots, K\}) = \frac{K}{\sum_{i=1}^{K} \frac{1}{a_i}} \qquad (1)
$$
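As a small worked example of the definition above, the following sketch computes the harmonic average of a list of numbers. The function name and the check for strictly positive inputs are choices made for this illustration only.

```python
def harmonic_average(values):
    """Reciprocal of the arithmetic average of the reciprocals."""
    values = list(values)
    if not values or any(v <= 0 for v in values):
        # Assumption of this sketch: inputs are strictly positive.
        raise ValueError("harmonic average needs a non-empty list of positive values")
    return len(values) / sum(1.0 / v for v in values)


# HA(1, 2, 4) = 3 / (1 + 1/2 + 1/4) = 12/7
ha = harmonic_average([1.0, 2.0, 4.0])
arithmetic = (1.0 + 2.0 + 4.0) / 3
print(ha, arithmetic)
```

The harmonic average is dominated by the smallest values in the set, so for the same inputs it never exceeds the arithmetic average.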

The KHM algorithm starts with a set of initial positions of the centers. The distance is then calculated by the function $d_{i,l} = \|x_i - m_l\|$, where $x_i$ is a data point and $m_l$ is a center, and the new positions of the centers are calculated. This process continues until the performance value stabilizes. Many experimental results show that KHM is essentially insensitive to the initialization. Even when the initialization is poor, it still converges well in terms of both convergence speed and clustering results. It is also suitable for large-scale data sets [5]. Because of these advantages, KHM is used in the proposed algorithm.

1. begin
2. initialize N, K, C1, C2, . . . , CK;
   where N is the size of the data set, K is the number of clusters, and C1, C2, . . . , CK are the cluster centers.
3. do
      assign the N data points to the closest Ci;
      recompute C1, C2, . . . , CK using the distance function;
   until no change in C1, C2, . . . , CK;
4. return C1, C2, . . . , CK;
5. end

Figure 2. K-Harmonic Mean Algorithm
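Figure 2 does not spell out the center update, so the sketch below only evaluates the performance function described in the text: for each data point, the harmonic average of its squared distances to all centers, summed over the data set. It assumes one-dimensional data, and the `eps` floor that avoids division by zero when a point coincides with a center is a hypothetical guard added for this illustration.

```python
def khm_performance(points, centers, eps=1e-12):
    """Sum over points of the harmonic average of squared distances to all centers."""
    k = len(centers)
    total = 0.0
    for x in points:
        # eps (an assumption of this sketch) keeps 1/d^2 finite when x sits on a center.
        inverse_sum = sum(1.0 / max((x - m) ** 2, eps) for m in centers)
        total += k / inverse_sum
    return total


points = [0.0, 4.0]
# Each point has squared distances 1 and 9 to the centers: 2 / (1 + 1/9) = 1.8 per point.
loose = khm_performance(points, [1.0, 3.0])
# Centers placed exactly on the points give a value close to zero.
tight = khm_performance(points, [0.0, 4.0])
print(loose, tight)
```

A lower value indicates centers that sit closer to the data, which is why the text describes iterating until this value stabilizes.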

3. PROPOSED ALGORITHM

Clustering is based on similarity, so cluster analysis requires computing the similarity or distance between data points. When the data set is very large or the data are scattered, it is difficult to arrange them properly into groups. The main problem with mean-based algorithms is that the mean is highly affected by extreme values. To overcome this problem, a new algorithm is proposed which uses two methods to find the mean instead of one. The basic idea of the new hybrid clustering algorithm is to apply the two techniques for finding the mean alternately, one per pass, until the goal is reached. The accuracy of the result is greater than that of the K-Mean and KHM algorithms. The main steps of this algorithm are as follows. First, choose K elements from the dataset DN as single-element clusters. This step follows the same strategy that K-mean uses for choosing the K initial points, that is, choosing K random points. Figure 3 shows the algorithm. In Section 4, the experimental results illustrate that the new algorithm has its own advantages, especially in cluster formation. Since the proposed algorithm applies two different mechanisms to find the mean value of a cluster in a single dataset, its result benefits from the advantages of both techniques. It also addresses the problem of choosing initial points because, as mentioned earlier, the harmonic mean converges well in both result and speed even when the initialization is very poor. In this way the proposed algorithm overcomes the problems that occur in the K-Mean and K-Harmonic Mean algorithms.

1. begin
2. initialize Dataset DN, K, C1, C2, . . . , CK, CurrentPass=1;
   where D is the dataset, N is the size of the data set, K is the number of clusters to be formed, and C1, C2, . . . , CK are the cluster centers. CurrentPass is the total number of scans over the dataset.
3. do
      assign the N data points to the closest Ci;
      if CurrentPass%2==0
         recompute C1, C2, . . . , CK using the Harmonic Mean function;
      else
         recompute C1, C2, . . . , CK using the Arithmetic Mean function;
      increase CurrentPass by one.
   until no change in C1, C2, . . . , CK;
4. return C1, C2, . . . , CK;
5. end

Figure 3. Proposed Hybrid Algorithm
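The alternation in Figure 3 can be sketched as follows. Several details are assumptions of this sketch and are not stated in the paper: the data are one-dimensional and strictly positive, the "Harmonic Mean function" is read as the harmonic mean of a cluster's members, an empty cluster keeps its previous center, and the loop stops once the assignments have stayed the same across two consecutive passes (so that both mean types have been applied) or after a hypothetical `max_passes` cap.

```python
def arithmetic_mean(values):
    return sum(values) / len(values)


def harmonic_mean(values):
    # Assumes strictly positive values.
    return len(values) / sum(1.0 / v for v in values)


def hybrid_cluster(points, centers, max_passes=100):
    """Alternate arithmetic (odd pass) and harmonic (even pass) center updates."""
    centers = list(centers)
    labels = None
    stable = 0
    for current_pass in range(1, max_passes + 1):
        # Assign every point to its closest center.
        new_labels = [min(range(len(centers)), key=lambda k: abs(x - centers[k]))
                      for x in points]
        stable = stable + 1 if new_labels == labels else 0
        labels = new_labels
        if stable >= 2:  # stopping rule assumed for this sketch
            break
        mean_fn = harmonic_mean if current_pass % 2 == 0 else arithmetic_mean
        for k in range(len(centers)):
            members = [x for x, lab in zip(points, labels) if lab == k]
            if members:  # an empty cluster keeps its old center
                centers[k] = mean_fn(members)
    return centers, labels


centers, labels = hybrid_cluster([1.0, 2.0, 3.0, 10.0, 11.0, 12.0], [1.0, 12.0])
print(centers, labels)
```

The stopping rule is based on assignments rather than centers because the arithmetic and harmonic means of the same cluster generally differ, so the centers themselves keep moving between odd and even passes even when the partition is settled.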


4. EXPERIMENTATION AND ANALYSIS OF RESULTS

Extensive experiments were performed to test the proposed algorithm. They were run on a server with 2 GB RAM and a 2.8 GHz Core2Duo processor. The algorithms are written in Java, and the datasets are kept in memory instead of in a database. Each dataset contains 15000 records; because the available Java heap memory was limited, the experiments use only 10 percent of each original dataset, i.e. 1500 records. The new hybrid algorithm is compared with the traditional K-mean algorithm and the KHM algorithm. In each experiment 5 clusters are created, and the accuracy of the clusters is tested against the clusters formed using the traditional K-mean and KHM algorithms. Figures 4, 5, 6, 7 and 8 and Table 1 show that the new hybrid algorithm reduces the mean value of each cluster, which means that the elements of a cluster are more tightly bound to each other and the resultant clusters are denser. Because the mean is reduced and efficiently calculated, the algorithm has advantages in computation time and number of iterations, and its effectiveness is also improved. The experiments also show that the clustering results are better.

Experiment 1: In this experiment the maximum item value of the dataset is 997 and the minimum item value is 1.

Figure 4. Results obtained from applying the K-Mean and proposed algorithms to Dataset 1: (a) K-Mean Algorithm, (b) Proposed Hybrid Algorithm.

Experiment 2: In this experiment the maximum item value of the dataset is 9977 and the minimum item value is 6.

Figure 5. Results obtained from applying the K-Mean and proposed algorithms to Dataset 2: (a) K-Mean Algorithm, (b) Proposed Hybrid Algorithm.

Experiment 3: In this experiment the maximum item value of the dataset is 199 and the minimum item value is 2.

Figure 6. Results obtained from applying the K-Mean and proposed algorithms to Dataset 3: (a) K-Mean Algorithm, (b) Proposed Hybrid Algorithm.

Experiment 4: In this experiment the maximum item value of the dataset is 58785790 and the minimum item value is 58720260.

Figure 7. Results obtained from applying the K-Mean and proposed algorithms to Dataset 4: (a) K-Mean Algorithm, (b) Proposed Hybrid Algorithm.

Experiment 5: In this experiment the maximum item value of the dataset is 498 and the minimum item value is 1.

Figure 8. Results obtained from applying the K-Mean and proposed algorithms to Dataset 5: (a) K-Mean Algorithm, (b) Proposed Hybrid Algorithm.


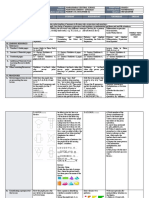

Table 1. Comparison between results obtained from applying KHM & proposed hybrid algorithm

| Exp. No. | Cluster No. | KHM: Total Elements in Cluster | KHM: Mean Value of Cluster | New Hybrid: Total Elements in Cluster | New Hybrid: Mean Value of Cluster |
|---|---|---|---|---|---|
| 1 | 1 | 263 | 233.08 | 263 | 233.06 |
| 1 | 2 | 37 | 44.17 | 37 | 44.16 |
| 1 | 3 | 365 | 632.90 | 365 | 632.90 |
| 1 | 4 | 303 | 411.49 | 302 | 411.44 |
| 1 | 5 | 352 | 875.60 | 352 | 875.59 |
| 2 | 1 | 265 | 2337.92 | 263 | 2337.83 |
| 2 | 2 | 220 | 806.15 | 219 | 806.11 |
| 2 | 3 | 363 | 6337.10 | 363 | 6337.07 |
| 2 | 4 | 304 | 4135.72 | 303 | 4135.68 |
| 2 | 5 | 352 | 8760.48 | 352 | 8760.36 |
| 3 | 1 | 289 | 44.36 | 288 | 44.38 |
| 3 | 2 | 0 | 0.00 | 0 | 0.00 |
| 3 | 3 | 367 | 126.03 | 367 | 126.00 |
| 3 | 4 | 301 | 81.20 | 301 | 81.20 |
| 3 | 5 | 354 | 174.46 | 354 | 174.45 |
| 4 | 1 | 336 | 58753679.45 | 335 | 58753679.42 |
| 4 | 2 | 275 | 58726052.77 | 275 | 58726052.69 |
| 4 | 3 | 309 | 58739334.72 | 307 | 58739334.69 |
| 4 | 4 | 283 | 58778339.25 | 284 | 58778339.27 |
| 4 | 5 | 300 | 58766278.95 | 299 | 58766278.94 |
| 5 | 1 | 269 | 115.45 | 268 | 115.41 |
| 5 | 2 | 2 | 1.00 | 2 | 1.00 |
| 5 | 3 | 368 | 316.25 | 365 | 316.19 |
| 5 | 4 | 301 | 206.12 | 301 | 206.08 |
| 5 | 5 | 353 | 437.58 | 352 | 437.54 |
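Table 1 reports two figures per cluster: the number of elements and the mean value. The paper does not show how these were computed, so the following is only a sketch of one plausible way to derive such a summary from a clustering result. It assumes one-dimensional data, that the reported mean is the arithmetic mean of the cluster members rounded to two decimals, and that an empty cluster is reported as 0 elements with mean 0.00 (as in Experiment 3, Cluster 2).

```python
def cluster_summary(points, labels, k):
    """Return one (element_count, mean_value) pair per cluster."""
    summary = []
    for c in range(k):
        members = [x for x, lab in zip(points, labels) if lab == c]
        # Assumption: an empty cluster is reported with mean 0.0.
        mean = sum(members) / len(members) if members else 0.0
        summary.append((len(members), round(mean, 2)))
    return summary


rows = cluster_summary([1.0, 2.0, 3.0, 10.0, 12.0], [0, 0, 0, 2, 2], k=3)
for cluster_no, (count, mean) in enumerate(rows, start=1):
    print(cluster_no, count, f"{mean:.2f}")
```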

5. CONCLUSIONS

This paper presents a new hybrid clustering algorithm based on the K-mean and KHM algorithms. The results show that the new algorithm is efficient. Experiments were performed using different datasets, and the performance of the new algorithm does not depend on the size, scale and values of the dataset. The new algorithm also shows advantages in error relative to the real results and in the selection of initial points in almost every case. Future enhancements will include the study of higher-dimensional datasets and very large datasets for clustering. It is also planned to use three mean techniques instead of two.


REFERENCES

[1] M H Dunham, Data Mining: Introductory and Advanced Topics, Prentice Hall, 2002.
[2] Birdsall, C.K., Langdon, A.B.: Plasma Physics via Computer Simulation, Adam Hilger, Bristol (1991).
[3] A.K. Jain, R.C. Dubes, Algorithms for Clustering Data, Prentice-Hall, Englewood Cliffs, NJ, 1988.
[4] J.T. Tou, R.C. Gonzalez, Pattern Recognition Principles, Addison-Wesley, Reading, 1974.
[5] Chonghui Guo, Li Peng, A Hybrid Clustering Algorithm Based on Dimensional Reduction and K-Harmonic Means, IEEE 2008.
[6] R C Dubes, A K Jain, Algorithms for Clustering Data, Prentice Hall, 1988.
[7] B Zhang, M C Hsu, Umeshwar Dayal, K-Harmonic Means - A Data Clustering Algorithm, HPL-1999-124, October 1999.
[8] X B Gao, Fuzzy Cluster Analysis and its Applications, Xidian University Press, 2004 (in Chinese).
[9] Wu K L, Yang M S, Alternative fuzzy c-means clustering algorithm, Pattern Recognition, 2002, 35(10):2267-2278.
[10] A Y Ng, M I Jordan, Y Weiss, On spectral clustering: Analysis and an algorithm, In: Dietterich T G, Becker S, Ghahramani Z, Advances in Neural Information Processing Systems 14, Cambridge: MIT Press, 2002, 849-856.
[11] D Higham, K.A Kibble, Unified view of spectral clustering, Technical Report 02, Department of Mathematics, University of Strathclyde, 2004.
[12] R Xu, D Wunsch, Survey of Clustering Algorithms, IEEE Transactions on Neural Networks, 2005, 16(3):645-678.
[13] Suresh Chandra Satapathy, JVR Murthy, Prasada Reddy P.V.G.D, An Efficient Hybrid Algorithm for Data Clustering Using Improved Genetic Algorithm and Nelder Mead Simplex Search, International Conference on Computational Intelligence and Multimedia Applications 2007.
[14] Liang Sun, Shinichi Yoshida, A Novel Support Vector and K-Means based Hybrid Clustering Algorithm, Proceedings of the 2010 IEEE International Conference on Information and Automation, June 20 - 23, Harbin, China.
[15] Hockney, R.W., Eastwood, J.W., Computer Simulation using Particles, Adam Hilger, Bristol (1988).
[16] Bradley, P.S., Fayyad, U., Reina, C.: Scaling clustering algorithms to large databases, In: Proceedings of the Fourth International Conference on Knowledge Discovery and Data Mining, KDD 1998, New York, August 1998, pp. 9-15. AAAI Press, Menlo Park (1998).
[17] Hartigan, J.A., Wong, M.A., A k-means clustering algorithm, Applied Statistics 28, 100-108 (1979).

Author

Mr. Ravindra Jain has been working as a Project Fellow under the UGC-SAP Scheme, DRS Phase I, at the School of Computer Science & IT, Devi Ahilya University, Indore, India, since December 2009. He received his B.Sc. (Computer Science) and MCA (Master of Computer Applications) from Devi Ahilya University, Indore, India, in 2005 and 2009 respectively. His research areas are Databases, Data Mining and Mobile Computing Technology. He has 2+ years of experience in software development and research.
