CMER Working Paper Series

Working Paper No. 97-16

The Mirage of Portfolio Performance Evaluation

Naim Sipra

Opposite Sector U, DHA, Lahore Cantt. 54792, Lahore, Pakistan
e-mail: Sipra@lums.edu.pk

November, 1997

CENTRE FOR MANAGEMENT AND ECONOMIC RESEARCH Lahore University of Management Sciences

Opp. Sector U, DHA, Lahore Cantt. 54792, Lahore, Pakistan Tel.: 92-42-5722670-79, x4222, 4201 Fax: 92-42-5722591 Website: www.lums.edu.pk/cmer

The Mirage of Portfolio Performance Evaluation

You can't tell which way the train went by looking at the tracks

ABSTRACT

Risk-adjusted performance measures are essential for evaluating portfolio performance. They are needed not only to reward or punish portfolio managers but also to conduct financial research in which strong and weak performance must be told apart. This paper investigates the practical limitations of finding appropriate performance measures by examining the inability of three popular measures (Sharpe, Jensen, and Treynor) to evaluate portfolio performance in a meaningful way. It argues that the problems are not restricted to these measures but are likely to affect any other performance measure that may be devised, since they stem from the random walk properties of stock prices.

INTRODUCTION

Portfolio performance evaluation is one of the most important areas in investment analysis. However, the available performance measures are probably the best example of "The Emperor has no clothes" that can be found in the finance literature. In this paper we will show that all the performance measures developed so far fail to evaluate a portfolio's performance in any meaningful way. Moreover, we believe that an effective performance measure is unlikely ever to be found.

The problem with portfolio performance evaluation is as follows. The observed return from a portfolio is a function of the risk free rate, risk premium, managerial skill, and chance. However, the risk premium compensates for ex-ante risk, a non-observable quantity on whose very definition there is no consensus and which, thus, cannot be estimated in an unambiguous way by observing the outcome. Given this difficulty with the definition and measurement of risk, it is impossible to separate managerial skill from chance.
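The identification problem can be written out in symbols. The sketch below assumes, purely for illustration, that the four components combine additively; the text only says that the return is "a function of" them, and the symbols $\pi_p$, $\alpha_p$, and $\varepsilon_p$ are our own notation rather than the paper's.

```latex
R_p = R_f + \pi_p + \alpha_p + \varepsilon_p, \qquad E[\varepsilon_p] = 0
\quad\Longrightarrow\quad
R_p - R_f = \pi_p + \alpha_p + \varepsilon_p
```

Here $R_p$ is the observed portfolio return, $R_f$ the risk free rate, $\pi_p$ the premium for ex-ante risk, $\alpha_p$ the contribution of managerial skill, and $\varepsilon_p$ the chance component. Only the left-hand side of the second equation is observed, so a single number has to be split among three unobserved terms, and any estimate of $\alpha_p$ is conditional on whatever value is assumed for $\pi_p$.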

The already near impossible problem of evaluating portfolio performance is compounded by our penchant for ranking. We like to know who is number one. So we devise performance measures that can provide us with this ranking, even though to do so we have to make heroic assumptions, such as unlimited short sales and unlimited borrowing at the risk free rate, without which it is not possible to find a "best" portfolio that is best for everyone. These assumptions may be all right for modeling, but certainly not for determining fiscal rewards and punishments.

Despite these theoretical difficulties, one may justify using a particular performance measure for evaluation if it can be shown that using the measure as a decision-making tool for future investments gives better results than any other investment strategy.1 Of course, what we mean by "better results" is problematic, as that is precisely what we are trying to define. Unfortunately, the hope of finding a measure that, by evaluating past performance, can guide us to superior performance in the future is doomed to failure, since it flies in the face of the weak form of market efficiency. Nonetheless, at a minimum, we could consider a performance measure useful if, when used as an investment selector, it gave superior performance according to its own criterion. For example, the Sharpe measure should perform well at least according to the Sharpe measure.

Since the problems with using returns alone for portfolio performance are well known, we will concentrate on risk-adjusted performance measures. As representatives of this class of measures we will look at the Sharpe (1966), Jensen (1968), and Treynor (1965) measures of portfolio performance.2 These are the classic risk-adjusted portfolio performance measures that every textbook on investments discusses in its performance evaluation chapter. However, we hope it will be readily obvious that our criticism is not restricted to these three measures but applies to the entire class of risk-adjusted performance measures.

TEST OF PERFORMANCE MEASURES AS PERFORMANCE MEASURES

To make the first point, that it is virtually impossible to separate superior performance from the other elements of a portfolio's return, consider the simulated returns earned by twenty portfolios over twenty periods given in Table 1. For portfolios 1 through 10, the return for each period was determined by generating a random number between zero and one and assigning a return of +20% if the number was larger than 0.5 and -20% if it was less than or equal to 0.5. For portfolios 11 through 15 the returns were +22% and -18%, so in either case they performed 2% better than the purely random portfolios, and for portfolios 16 through 20 the returns were +18% and -22%, that is, they performed 2% worse than the random portfolios. The average of these portfolios was taken as the market portfolio, and a risk free rate of 0.5 percent was used to calculate the Sharpe, Jensen, and Treynor indices. Column two of Table 1 records the average (geometric mean) return obtained over the twenty periods for each portfolio, whereas column three records the expected return. Columns four, five, and six record the rankings according to the Sharpe, Jensen, and Treynor measures, respectively. For an interpretation of these results, let us consider the rankings given by the Sharpe measure. The top ten funds ranked by the Sharpe measure, given in Table 2 below, include five funds (#1, 4, 5, 6, 10) that had an expected value of zero and two funds (#16, 18) that had an expected value of -2 percent. From amongst the superior performers, only funds #12, 13, and 14 show up in the top ten. Similarly, the rankings given by the other measures illustrate a complete lack of any differentiation between the superior, inferior, and random performers.3
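The simulation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the author's original program: the function names are hypothetical, arithmetic means are used inside the three measures (the paper does not say which mean was used), and the random draws will differ from those behind Table 1.

```python
import numpy as np

def simulate_returns(rng, n_periods=20):
    """Coin-flip returns for 20 portfolios, shape (20, n_periods)."""
    # Group edges as described in the text: portfolios 1-10 earn +20%/-20%,
    # portfolios 11-15 earn 2% more, portfolios 16-20 earn 2% less.
    shifts = np.array([0.0] * 10 + [0.02] * 5 + [-0.02] * 5)
    draws = rng.random((20, n_periods))         # uniform numbers in [0, 1)
    base = np.where(draws > 0.5, 0.20, -0.20)   # +20% if draw > 0.5, else -20%
    return base + shifts[:, None]

def performance_measures(returns, rf=0.005):
    """Sharpe, Jensen and Treynor measures for each row of `returns`."""
    market = returns.mean(axis=0)               # market = average of all portfolios
    mean_r = returns.mean(axis=1)               # assumption: arithmetic mean return
    sigma = returns.std(axis=1, ddof=1)
    centered_m = market - market.mean()
    # beta_i = cov(R_i, R_m) / var(R_m)
    beta = (returns - mean_r[:, None]) @ centered_m / (centered_m @ centered_m)
    sharpe = (mean_r - rf) / sigma
    jensen = mean_r - (rf + beta * (market.mean() - rf))
    treynor = (mean_r - rf) / beta
    return sharpe, jensen, treynor

def rank_descending(values):
    """Rank 1 goes to the largest value."""
    order = np.argsort(-values)
    ranks = np.empty(len(values), dtype=int)
    ranks[order] = np.arange(1, len(values) + 1)
    return ranks

rng = np.random.default_rng(0)                  # arbitrary seed
returns = simulate_returns(rng)
sharpe, jensen, treynor = performance_measures(returns)
sharpe_ranks = rank_descending(sharpe)
```

The arithmetic behind the poor separation is simple: each period's return has a standard deviation of 20 percentage points, so the standard error of a twenty-period mean is about 0.20/sqrt(20), or roughly 4.5 percentage points, more than twice the 2% edge that distinguishes the groups.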

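Table 1

Performance rankings for randomly generated portfolios

| Portfolio | Geometric Mean Return | Expected Return | Sharpe Ranking | Jensen Ranking | Treynor Ranking |
|---|---|---|---|---|---|
| 1 | 0.0412 | 0 | 10 | 10 | 16 |
| 2 | -0.0399 | 0 | 4 | 4 | 17 |
| 3 | -0.0202 | 0 | 18 | 18 | 10 |
| 4 | 0.0843 | 0 | 5 | 5 | 20 |
| 5 | 0.0626 | 0 | 1 | 6 | 4 |
| 6 | 0.0203 | 0 | 15 | 15 | 18 |
| 7 | -0.0591 | 0 | 6 | 1 | 5 |
| 8 | -0.0202 | 0 | 12 | 20 | 15 |
| 9 | -0.0202 | 0 | 13 | 12 | 1 |
| 10 | 0.0843 | 0 | 20 | 13 | 12 |
| 11 | -0.0388 | 0.02 | 14 | 9 | 13 |
| 12 | 0.0203 | 0.02 | 3 | 14 | 3 |
| 13 | 0.0203 | 0.02 | 8 | 8 | 8 |
| 14 | -0.0195 | 0.02 | 9 | 3 | 14 |
| 15 | 0.0407 | 0.02 | 11 | 2 | 19 |
| 16 | -0.0795 | -0.02 | 2 | 11 | 6 |
| 17 | -0.0984 | -0.02 | 19 | 7 | 11 |
| 18 | 0.0640 | -0.02 | 7 | 19 | 2 |
| 19 | -0.0406 | -0.02 | 16 | 16 | 9 |
| 20 | -0.0001 | -0.02 | 17 | 17 | 7 |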

Table 2

Performance of Performance Measures

| Ranking | Sharpe: Fund | Sharpe: E(return) % | Jensen: Fund | Jensen: E(return) % | Treynor: Fund | Treynor: E(return) % |
|---|---|---|---|---|---|---|
| 1 | 5 | 0 | 7 | 0 | 9 | 0 |
| 2 | 16 | -2 | 15 | 2 | 18 | -2 |
| 3 | 12 | 2 | 14 | 2 | 12 | 2 |
| 4 | 2 | 0 | 2 | 0 | 5 | 0 |
| 5 | 4 | 0 | 4 | 0 | 7 | 0 |
| 6 | 7 | 0 | 5 | 0 | 16 | -2 |
| 7 | 18 | -2 | 17 | -2 | 20 | -2 |
| 8 | 13 | 2 | 13 | 2 | 13 | 2 |
| 9 | 14 | 2 | 11 | 2 | 19 | -2 |
| 10 | 1 | 0 | 1 | 0 | 3 | 0 |

TEST OF PERFORMANCE MEASURES AS INVESTMENT SELECTORS

Data sets

To determine whether the Sharpe, Jensen, and Treynor measures make good investment selectors, we looked at three sets of data. The first data set consisted of monthly returns for 231 mutual funds, the S&P 500, and treasury bills for the period January 1972 to December 1989. One may argue that mutual funds change their holdings considerably over time, so that betas and standard deviations will not meet the stationarity criteria necessary for calculating the Sharpe, Jensen, and Treynor measures, and that, consequently, a finding that these measures do not make good investment selectors may be biased.4 To counter this possible criticism, we also looked at monthly returns for 69 securities, the S&P 500, and treasury bills for the period January 1967 to December 1992, as well as at 69 portfolios created from these securities by randomly selecting 10 securities and weighting them equally.

Analysis

For each data set, monthly returns over a five-year period were used to calculate the Sharpe, Jensen, and Treynor indices for each portfolio. Each portfolio was then ranked according to the three performance criteria. For each rank under each of the three performance measures, the holding period return for the next year was recorded. Next, the first twelve monthly values were dropped, and monthly returns for the next sixty months were used to determine each portfolio's rank according to the Sharpe, Jensen, and Treynor measures. The following year's holding period returns were then recorded as before. By repeating this process, a series of returns corresponding to each performance rank was obtained for each of the three performance measures.
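The rolling procedure just described can be sketched as follows for the Sharpe measure alone. This is a hedged illustration rather than the author's code: the function and parameter names (`holding_returns_by_rank`, `window`, `hold`) are hypothetical, the inputs are assumed to be NumPy arrays of monthly returns, and the one-year holding period return is assumed to be the compounded return over the next twelve months.

```python
import numpy as np

def sharpe(returns, rf):
    """Sharpe measure per asset; returns is (months, assets), rf is (months,)."""
    excess = returns - rf[:, None]
    return excess.mean(axis=0) / returns.std(axis=0, ddof=1)

def holding_returns_by_rank(returns, rf, window=60, hold=12):
    """Rows are successive holding years; column k holds the return earned
    by the asset that was ranked k+1 over the preceding `window` months."""
    rows = []
    start = 0
    while start + window + hold <= returns.shape[0]:
        est = slice(start, start + window)                    # five-year estimation window
        nxt = slice(start + window, start + window + hold)    # following year
        scores = sharpe(returns[est], rf[est])
        order = np.argsort(-scores)                           # asset indices, best first
        hpr = np.prod(1.0 + returns[nxt], axis=0) - 1.0       # compounded 12-month return
        rows.append(hpr[order])
        start += hold                                         # drop the first twelve months
    return np.array(rows)
```

With 26 years of monthly data (312 observations), as in the data set of 69 securities, this loop yields 21 holding periods, which matches the number of rows reported in Table 3.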

This is illustrated in Table 3 for just the top five funds ranked according to the Sharpe measure for the data set of 69 individual securities. Columns two through six represent holding period returns corresponding to investing in funds ranked #1 through #5 each year. The last row shows the rankings of these holding period returns according to the Sharpe measure. As can be seen, the Sharpe measure does not fare very well as a predictor of future performance even by its own criterion.

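Table 3

Holding Period Returns for Investing According to Top Five Sharpe Ranks

| Holding period | Original Sharpe Rank 1 | Original Sharpe Rank 2 | Original Sharpe Rank 3 | Original Sharpe Rank 4 | Original Sharpe Rank 5 |
|---|---|---|---|---|---|
| 1 | -.177 | -.097 | -.233 | -.1341 | -.311 |
| 2 | -.285 | .119 | -.134 | -.2196 | -.291 |
| 3 | .376 | .270 | .029 | .416 | .566 |
| 4 | .634 | .264 | .852 | .263 | .400 |
| 5 | .102 | -.069 | -.048 | .019 | -.163 |
| 6 | -.157 | .014 | .0667 | .169 | .099 |
| 7 | -.014 | .069 | .471 | .341 | .303 |
| 8 | .011 | .287 | .131 | .085 | -.033 |
| 9 | .231 | .217 | .299 | -.217 | .198 |
| 10 | .549 | .257 | .485 | .383 | .077 |
| 11 | .255 | .259 | .216 | .099 | .195 |
| 12 | .728 | .106 | -.060 | .278 | .300 |
| 13 | .236 | .534 | .660 | .440 | .383 |
| 14 | .366 | .367 | .235 | .268 | .146 |
| 15 | -.049 | .046 | -.014 | -.184 | .068 |
| 16 | .086 | .164 | .149 | .073 | -.010 |
| 17 | .431 | .244 | .208 | .228 | .639 |
| 18 | -.077 | -.028 | .071 | .089 | .043 |
| 19 | .577 | .759 | .149 | .328 | .210 |
| 20 | .210 | .235 | .091 | .317 | .060 |
| 21 | .045 | .198 | .817 | .048 | .041 |
| Sharpe rank for holding period returns | 2 | 29 | 55 | 7 | 60 |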


The last row for the entire 69 securities was regressed against the first row to determine whether there was any correlation between the original rankings and the holding period returns ranking. A positive beta from this regression would suggest that, if one invested each year in portfolios that had high Sharpe rankings, then the outcome of such an investment strategy would also have a high Sharpe ranking. That is, investing in past high performers, according to the Sharpe measure, leads to future high performance, at least, according to the Sharpe measure. This result would validate to some extent the use of Sharpe measure as a performance measure even if it failed to distinguish between chance and managerial performance on a yearly basis.
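The regression of holding period rankings on original rankings amounts to an ordinary least squares fit with an intercept. The sketch below computes the slope (the "beta" of the text) and its t-ratio; the function name is hypothetical and the routine is a generic textbook OLS, not the author's software.

```python
import numpy as np

def slope_and_t_ratio(x, y):
    """OLS slope of y on x (with intercept) and the slope's t-ratio."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    xc = x - x.mean()
    beta = xc @ (y - y.mean()) / (xc @ xc)
    alpha = y.mean() - beta * x.mean()
    resid = y - (alpha + beta * x)
    s2 = resid @ resid / (n - 2)        # residual variance
    se = np.sqrt(s2 / (xc @ xc))        # standard error of the slope
    return beta, beta / se

# Toy example: original ranks 1..4 against holding period ranks 2, 1, 4, 3.
beta, t_ratio = slope_and_t_ratio([1, 2, 3, 4], [2, 1, 4, 3])
```

When both series are permutations of the ranks 1 through n, the two have identical variances, so the slope equals the Spearman rank correlation between them. A slope near zero therefore says that past rank carries essentially no information about future rank.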

The results of regressing original rankings with holding period rankings for the three performance measures and for the three data sets are given in Table 4.


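Table 4

Results of Regressing Original Rankings with Rankings of Holding Period Portfolios

Regression coefficients for rankings based on:

| Data set | Statistic | Sharpe | Jensen | Treynor |
|---|---|---|---|---|
| Mutual Funds | Beta | .073 | .056 | .097 |
| Mutual Funds | t-ratio | .890 | .737 | .553 |
| Portfolios from 69 securities | Beta | .072 | .048 | .048 |
| Portfolios from 69 securities | t-ratio | .595 | .392 | .397 |
| 69 Individual Securities | Beta | .130 | -.023 | .110 |
| 69 Individual Securities | t-ratio | 1.074 | -.190 | .908 |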


In all cases -- mutual funds, individual securities, and the random portfolios-- the beta coefficient is not significantly different from zero. This suggests that none of the performance measures did well as investment selectors even according to their own criterion.

Next, we compared investing in the #1 fund, according to each criterion, with a buy and hold strategy. The results are given in Table 5. As can be seen, in virtually all cases there was either no significant difference between the buy and hold strategy and the #1 strategy, or the buy and hold strategy performed better according to the performance measure's own criterion.


Table 5

Comparison of Returns from a Buy and Hold Strategy with Returns from Portfolios Selected Based on # 1 Ranking

| Data set | Strategy | Sharpe | Jensen | Treynor |
|---|---|---|---|---|
| Mutual Funds | Buy & Hold | .915 | .166 | .219 |
| Mutual Funds | # 1 Strategy | .804 | .065 | .225 |
| Portfolios | Buy & Hold | .437 | .026 | .077 |
| Portfolios | # 1 Strategy | .417 | .006 | .079 |
| Individual Securities | Buy & Hold | .156 | .051 | .187 |
| Individual Securities | # 1 Strategy | .152 | .029 | .125 |


Lastly, the strategy of investing in the #1 ranked fund was compared with the strategy of randomly selecting a fund each year. One thousand trials were run for each data set. Table 6 shows the number of times the random selection strategy beat the #1 strategy according to the measure's own criterion. The results indicate the failure of these measures to outperform even completely random selection.
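The random selection experiment can be sketched as a small Monte Carlo routine. The paper does not spell out how a strategy is scored "according to its own criterion", so the sketch assumes, for the Sharpe case only, that the score is the Sharpe measure of the strategy's series of yearly holding period returns against a constant risk free rate. All names (`count_random_wins`, `hpr`, `top_series`) are hypothetical.

```python
import numpy as np

def sharpe_of_series(r, rf):
    """Sharpe measure of one series of yearly returns against a constant rf."""
    return (r.mean() - rf) / r.std(ddof=1)

def count_random_wins(hpr, top_series, rf, n_trials=1000, seed=0):
    """Count the trials in which picking one random fund per year scores
    higher than the #1 strategy.

    hpr        : (years, funds) array of yearly holding period returns
    top_series : (years,) returns earned by always holding the #1 ranked fund
    """
    rng = np.random.default_rng(seed)
    years, funds = hpr.shape
    benchmark = sharpe_of_series(top_series, rf)
    wins = 0
    for _ in range(n_trials):
        picks = rng.integers(0, funds, size=years)    # one random fund per year
        random_series = hpr[np.arange(years), picks]
        if sharpe_of_series(random_series, rf) > benchmark:
            wins += 1
    return wins
```

If the #1 ranking carried real information, the count returned for 1000 trials would be expected to fall well below 500; counts near or above 500 indicate that the ranking does no better than a random draw.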


Table 6

Comparison of Completely Random Selection with Selections Based on # 1 Ranking

| Data set | Sharpe | Jensen | Treynor |
|---|---|---|---|
| Mutual Funds | 419 | 502 | 482 |
| Portfolios | 497 | 577 | 614 |
| Individual Securities | 797* | 770 | 473 |

* Number of times, out of 1000 trials, random selection strategy beat out selection based on the #1 strategy according to its own criterion.


FUTURE OF PERFORMANCE MEASURES

While in this paper we have demonstrated the uselessness of only the Sharpe, Jensen, and Treynor indices as performance measures, it is our contention that the likelihood of finding any meaningful portfolio performance measure in the future is also not very good. The reason for this pessimism is that a performance measure that can be believed to separate managerial skill from mere chance has to be one that can also be relied upon to make future investment selections. And this ability for superior future selectivity has to rely exclusively on past data, a requirement that defies all the available evidence in favor of the weak form of market efficiency.

RELATED LITERATURE

There are two sets of papers in the finance literature that draw conclusions similar to the one made in this paper, but neither lays out the failure of all performance measures in terms as stark as ours. As a representative of the first set of papers one may start with Roll's famous critique of CAPM tests (1977), in which he showed the impossibility of testing the CAPM, and his later paper (1978), which discussed the ambiguity in performance evaluation when the CAPM is used as a benchmark. Our point, of course, is that this ambiguity will be there no matter what performance measure is used. The second set of papers are those that discuss the persistence of winners. See, for example, Goetzmann and Ibbotson (1994), and Kahn and Rudd (1995). The evidence presented in these papers suggests that winners do not repeat themselves. Our response to this research is that the question of whether winners repeat themselves cannot be answered until we have a meaningful definition of a winner. Given the arguments presented in this paper, the chances of finding such a definition appear to be remote. In spirit, the paper that comes closest to ours, though it follows different reasoning, is Robert Ferguson's (1986) "The Trouble with Performance Measurement: You cannot do it, you never will, and who would want to?"

CONCLUSION

How much a portfolio earns is, perhaps, intuitively the most appealing measure of performance. However, it has been demonstrated conclusively that simply looking at returns may be misleading because of the element of chance present in the outcome of any portfolio. Surprisingly, this common-sense argument has not been applied to risk-adjusted performance measures to show their inefficacy. If anything, the risk-adjusted performance measures, which are touted as improvements over naive returns, have even more problems, because risk cannot be defined in a universally acceptable way, not to mention the problem of needing ex-ante risk but observing only ex-post risk.

So where does all this lead us? Unfortunately, despite our fervent desire to be able to distinguish winners from losers, it leaves us where we always were: nowhere!5 The problem with the performance measures lies in the way returns are generated. We have known about the randomness of stock prices for more than a century, but perhaps the problem is even older and deeper, as the following verse from Ecclesiastes so eloquently states:

I returned, and saw under the sun, that the race is not to the swift, nor the battle to the strong, neither yet bread to the wise, nor yet riches to men of understanding, nor yet favor to men of skill; but time and chance happeneth to them all.


REFERENCES

Ferguson, Robert. (1986), "The Trouble with Performance Measurement," Journal of Portfolio Management, Spring 1986, pp. 4-9.

Goetzmann, William N., Ibbotson, Roger G. (1994), "Do Winners Repeat?," Journal of Portfolio Management, Winter 1994, pp. 9-18.

Jensen, Michael. (1968), "The Performance of Mutual Funds in the Period 1945-1964," Journal of Finance, May 1968, pp. 389-416.

Kahn, Ronald N., Rudd, Andrew. (1995), "Does Historical Performance Predict Future Performance?," Financial Analysts Journal, November-December 1995, pp. 43-52.

Roll, Richard. (1977), "A Critique of the Asset Pricing Theory's Tests; Part I: On Past and Potential Testability of the Theory," Journal of Financial Economics, 4, No. 2, March 1977, pp. 129-176.

Roll, Richard. (1978), "Ambiguity When Performance is Measured by the Securities Market Line," Journal of Finance, 33, September 1978, pp. 1051-69.

Sharpe, William F. (1966), "Mutual Fund Performance," Journal of Business, January 1966, pp. 119-138.

Treynor, Jack L. (1965), "How to Rate Management of Investment Funds," Harvard Business Review, 43, January-February 1965, pp. 63-75.

1 The notions of past performance and future performance are obviously linked. No one would want to reward a past performance if it was merely due to chance, that is, if one did not expect it to be a predictor of good future performance.

2 Sharpe measure: $(R_i - R_f)/\sigma_i$. Jensen measure: $R_i - [R_f + \beta_i (R_m - R_f)]$. Treynor measure: $(R_i - R_f)/\beta_i$. Here $R_i$ is the return on security $i$, $R_f$ is the risk free rate, $R_m$ is the return on the market portfolio, $\sigma_i$ is the standard deviation of security $i$'s returns, and $\beta_i$ is security $i$'s systematic risk.

3 Of course, a longer period of observation will allow us to separate the superior performers from the inferior performers. However, if it takes more than twenty periods to evaluate a portfolio's performance, even in this relatively simple case where the element of chance is equivalent to the tossing of a coin with the same fixed outcomes each year, then one can hardly view it as a practicable way of evaluating performance in more realistic situations where the risk free rate, risk premia, chance, and skill may all be varying in size over time.

4 Though this can hardly be considered a valid criticism, because it is exactly in these circumstances that these measures are actually used.

5 If we are willing to forgo our desire to rank, then we can at least divide the funds into groups that may be preferred by a certain class of investors on the basis of stochastic dominance rules.

21

You might also like

- Core and BssDocument1 pageCore and BssSalman ZiaNo ratings yet

- This Module Describes The Differences Between A Formula Item and A BOM Item, and The Prerequisite Setup at TheDocument1 pageThis Module Describes The Differences Between A Formula Item and A BOM Item, and The Prerequisite Setup at TheSalman ZiaNo ratings yet

- Re: Security & Maintenance of It AssetsDocument1 pageRe: Security & Maintenance of It AssetsSalman ZiaNo ratings yet

- Server PlatformDocument4 pagesServer PlatformSalman ZiaNo ratings yet

- Earnings PDFDocument42 pagesEarnings PDFSalman ZiaNo ratings yet

- Project Accounting II ManualDocument136 pagesProject Accounting II ManualSamina InkandellaNo ratings yet

- Impact of Global Financial Crisis On PakistanDocument16 pagesImpact of Global Financial Crisis On Pakistannaumanayubi93% (15)

- ARCH and GARCH Estimation: Dr. Chen, Jo-HuiDocument39 pagesARCH and GARCH Estimation: Dr. Chen, Jo-HuiSalman Zia100% (1)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5795)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Berjaya Land Berhad - Annual Report 2016Document50 pagesBerjaya Land Berhad - Annual Report 2016Yee Sook YingNo ratings yet

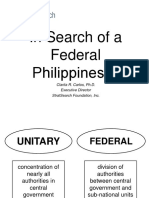

- In Search of A Federal Philippines - Dr. Clarita CarlosDocument61 pagesIn Search of A Federal Philippines - Dr. Clarita CarlosJennyfer Narciso MalobagoNo ratings yet

- The Effects of Workforce Diversity On Organizational Development and InnovationDocument6 pagesThe Effects of Workforce Diversity On Organizational Development and InnovationIqraShaheen100% (1)

- Airtel VRIO & SWOT AnalysisDocument11 pagesAirtel VRIO & SWOT AnalysisRakesh Skai50% (2)

- Gautam BhattacharyaDocument2 pagesGautam BhattacharyaMOORTHY.KENo ratings yet

- Property Surveyor CVDocument2 pagesProperty Surveyor CVMike KelleyNo ratings yet

- A Presentation On Dell Inventory ControlDocument10 pagesA Presentation On Dell Inventory Controlalamazfar100% (1)

- 2009 04 Searchcrm SaasDocument18 pages2009 04 Searchcrm SaasShruti JainNo ratings yet

- Chapter 18 Multinational Capital BudgetingDocument15 pagesChapter 18 Multinational Capital Budgetingyosua chrisma100% (1)

- Latest Form 6 Revised.1998Document6 pagesLatest Form 6 Revised.1998Chrizlennin MutucNo ratings yet

- CEO or Head of Sales & MarketingDocument3 pagesCEO or Head of Sales & Marketingapi-78902079No ratings yet

- Outlook Project1Document63 pagesOutlook Project1Harshvardhan Kakrania100% (2)

- China-Pakistan Economic Corridor Power ProjectsDocument9 pagesChina-Pakistan Economic Corridor Power ProjectsMuhammad Hasnain YousafNo ratings yet

- Comparative Vs Competitive AdvantageDocument19 pagesComparative Vs Competitive AdvantageSuntari CakSoenNo ratings yet

- Boynton SM CH 14Document52 pagesBoynton SM CH 14jeankopler50% (2)

- PDFDocument4 pagesPDFgroovercm15No ratings yet

- Singapore-Listed DMX Technologies Attracts S$183.1m Capital Investment by Tokyo-Listed KDDI CorporationDocument3 pagesSingapore-Listed DMX Technologies Attracts S$183.1m Capital Investment by Tokyo-Listed KDDI CorporationWeR1 Consultants Pte LtdNo ratings yet

- Ghaleb CV Rev1Document5 pagesGhaleb CV Rev1Touraj ANo ratings yet

- SWOT Analysis - StarbucksDocument9 pagesSWOT Analysis - StarbucksTan AngelaNo ratings yet

- Alternative Calculation For Budgeted Factory Overhead CostsDocument7 pagesAlternative Calculation For Budgeted Factory Overhead CostsChoi MinriNo ratings yet

- Angel Broking Project Report Copy AviDocument76 pagesAngel Broking Project Report Copy AviAvi Solanki100% (2)

- ACTSC 221 - Review For Final ExamDocument2 pagesACTSC 221 - Review For Final ExamDavidKnightNo ratings yet

- Parking Garage Case PaperDocument10 pagesParking Garage Case Papernisarg_No ratings yet

- Pipes and Tubes SectorDocument19 pagesPipes and Tubes Sectornidhim2010No ratings yet

- Tally ERP 9: Shortcut Key For TallyDocument21 pagesTally ERP 9: Shortcut Key For TallyDhurba Bahadur BkNo ratings yet

- Freshworks - Campus Placement - InformationDocument6 pagesFreshworks - Campus Placement - InformationRishabhNo ratings yet

- Marketing Cas StudyDocument4 pagesMarketing Cas StudyRejitha RamanNo ratings yet

- GST - Most Imp Topics - CA Amit MahajanDocument5 pagesGST - Most Imp Topics - CA Amit Mahajan42 Rahul RawatNo ratings yet

- Companies ActDocument21 pagesCompanies ActamitkrhpcicNo ratings yet

- This Study Resource Was: Painter CorporationDocument3 pagesThis Study Resource Was: Painter CorporationIllion IllionNo ratings yet