Cuckoo Cycle

a memory-hard proof-of-work system

John Tromp

January 26, 2014

1 Introduction

A proof of work (PoW) system allows a verifier to check, with negligible effort, that a prover has expended a large amount of computational effort. Originally introduced as a spam-fighting measure, where the effort is the price paid by an email sender for demanding the recipient's attention, such systems now form one of the cornerstones of crypto-currencies.

Bitcoin [3] uses hashcash [1] as proof of work for new blocks of transactions, which requires finding a nonce value such that twofold application of the cryptographic hash function SHA256 to this nonce (and the rest of the block header) results in a number with many leading 0s. The bitcoin protocol dynamically adjusts this difficulty number so as to maintain a 10-minute average block interval. Starting out at 32 leading zeroes in 2009, the number has steadily climbed and is currently at 63, representing an incredible $2^{63}/10$ double-hashes per minute. This exponential growth of hashing power is enabled by the highly parallelizable nature of the hashcash proof of work, which saw desktop CPUs out-performed by graphics cards (GPUs), these in turn by field-programmable gate arrays (FPGAs), and finally by custom designed chips (ASICs).
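The leading-zeros criterion described above can be sketched in a few lines of C. This is only an illustration under stated assumptions: the digest is taken to be computed elsewhere (for example by the double SHA256 mentioned above) and is read as a big-endian number, and the names leading_zero_bits and meets_difficulty are hypothetical rather than part of any bitcoin implementation.

```c
#include <assert.h>
#include <stddef.h>

/* Count the leading zero bits of a digest read as a big-endian number.
 * The digest itself is assumed to be computed elsewhere. */
int leading_zero_bits(const unsigned char *digest, size_t len) {
    int bits = 0;
    for (size_t i = 0; i < len; i++) {
        unsigned char b = digest[i];
        if (b == 0) {            /* a whole zero byte contributes 8 bits */
            bits += 8;
            continue;
        }
        while ((b & 0x80) == 0) { /* count zero bits before the first 1 */
            bits++;
            b <<= 1;
        }
        break;
    }
    return bits;
}

/* Hypothetical helper: does the digest have at least the required zeros? */
int meets_difficulty(const unsigned char *digest, size_t len, int required) {
    return leading_zero_bits(digest, len) >= required;
}
```

Each additional required zero bit halves the fraction of nonces that qualify, which is why the work grows exponentially with the difficulty number.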

Downsides of this development include high investment costs, rapid obsolescence, centralization of mining power, and large power consumption. This has led people to look for alternative proofs of work that lack parallelizability, aiming to keep commodity hardware competitive.

Litecoin replaces the SHA256 hash function in hashcash by a single round, 128KB version of the scrypt key derivation function. Technically, this is no longer a proof of work system, as verification takes a nontrivial amount of computation. Even so, GPUs are at least an order of magnitude faster than CPUs for Litecoin mining, and ASICs are coming on to the market in 2014.

Primecoin [2] is an interesting design based on finding long Cunningham chains of prime numbers, using a two-step process of filtering candidates by sieving, and applying pseudo-primality tests to remaining candidates. The most efficient implementations are still CPU based. A downside to primecoin is that its use of memory is not constrained much.

2 Memory latency; the great equalizer

While CPU speed and memory bandwidth are highly variable across time and architectures, main memory latencies have remained relatively stable. To level the mining playing field, a proof of work system should not merely be memory bound rather than CPU bound; it should have the following additional properties:

scalable: The amount of memory needed should be a parameter that can scale arbitrarily.

linear: The number of memory accesses should be linear in the amount of memory; on average, each memory cell is accessed a constant number of times.

tmto-hard: It should not allow any time-memory trade-off; using only half as much memory should incur several orders of magnitude slowdown.

no-locality: The pattern of memory accesses should be sufficiently random as to make caches useless.

sequential: Correctness should to a large extent depend on a proper ordering of memory accesses.

cpu-easy: The total running time should be dominated by waiting for memory accesses.

simple: The algorithm should be sufficiently simple that one can be convinced of its optimality.

Combined, these properties ensure that a proof of work system is almost entirely constrained by main memory latency and scales appropriately for any application.

We introduce the first proof of work system satisfying these properties. Amazingly, it amounts to little more than enumerating nonces and storing them in a hashtable. While all hashtables break down when trying to store more items than they were designed to handle, in one hashtable design in particular this breakdown is of a special nature that can be turned into a concise and easily verified proof. Enter the cuckoo hashtable.

3 Cuckoo hashing

Introduced by Rasmus Pagh and Flemming Friche Rodler in 2001 [4], a cuckoo hashtable consists of two same-sized tables, each with its own hash function mapping a key to a table location, providing two possible locations for each key. Upon insertion of a new key, if both locations are already occupied by keys, then one is kicked out and inserted in its alternate location, possibly displacing yet another key, repeating the process until either a vacant location is found, or some maximum number of iterations is reached. The latter can only happen once cycles have formed in the Cuckoo graph. This is a bipartite graph with a node for each location and an edge for every key, connecting the two locations it can reside at. This naturally suggests a proof of work problem, which we now formally define.
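The insertion procedure just described can be sketched as follows. This is a minimal illustration, not the construction of [4]: the table size SLOTS, the iteration bound MAXKICKS, the two multiplicative hash functions, and the convention that key 0 means "empty" are all assumptions made for the example.

```c
#include <assert.h>
#include <stdint.h>

#define SLOTS 16      /* slots per table (assumed, must match the shift below) */
#define MAXKICKS 32   /* assumed bound on displacement iterations */
#define EMPTY 0       /* key 0 is reserved to mean "vacant" */

typedef struct { uint32_t t[2][SLOTS]; } cuckoo_table;

/* Two stand-in hash functions: the top 4 bits of a 32-bit product. */
static uint32_t slot(int which, uint32_t key) {
    static const uint32_t mult[2] = {2654435761u, 2246822519u};
    return (key * mult[which]) >> 28;
}

int cuckoo_lookup(const cuckoo_table *c, uint32_t key) {
    return c->t[0][slot(0, key)] == key || c->t[1][slot(1, key)] == key;
}

/* Returns 1 on success, 0 when the iteration bound is reached
 * (in which case the key left in hand is dropped). */
int cuckoo_insert(cuckoo_table *c, uint32_t key) {
    if (key == EMPTY) return 0;
    if (cuckoo_lookup(c, key)) return 1;
    for (int w = 0; w < 2; w++) {           /* use a vacant location if any */
        uint32_t *s = &c->t[w][slot(w, key)];
        if (*s == EMPTY) { *s = key; return 1; }
    }
    int which = 0;
    for (int kicks = 0; kicks < MAXKICKS; kicks++) {
        uint32_t *s = &c->t[which][slot(which, key)];
        uint32_t evicted = *s;
        *s = key;                           /* kick out the occupant */
        if (evicted == EMPTY) return 1;
        key = evicted;                      /* re-insert it in its other table */
        which = !which;
    }
    return 0;
}
```

A lookup inspects at most two locations, which is the property that makes the displacement work on insertion worthwhile.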

4 The proof of work function

Fix three parameters $L \le E \le N$ in the range $\{4, \ldots, 2^{32}\}$, which denote the cycle length, number of edges (also easiness, opposite of difficulty), and the number of nodes, respectively. $L$ and $N$ must be even. Function cuckoo maps any binary string $h$ (the header) to a bipartite graph $G = (V_0 \cup V_1, E)$, where $V_0$ is the set of integers modulo $N_0 = N/2 + 1$, $V_1$ is the set of integers modulo $N_1 = N/2 - 1$, and $E$ has an edge between $\mathrm{SHA256}(h \| n) \bmod N_0$ in $V_0$ and $\mathrm{SHA256}(h \| n) \bmod N_1$ in $V_1$ for every nonce $0 \le n < E$. A proof for $G$ is a subset of $L$ nonces whose corresponding edges form an $L$-cycle in $G$.
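Checking a proof then comes down to testing whether the edges of the given nonces form a single cycle. The sketch below shows one way to do that, assuming the endpoints of each edge have already been computed from the nonces by the edge function of the definition above; the function name is_cycle and its array interface are hypothetical.

```c
#include <assert.h>

/* us[i] and vs[i] are the endpoints of edge i in the two partitions.
 * Returns 1 if the len edges form one simple cycle of length len. */
int is_cycle(const int *us, const int *vs, int len) {
    if (len < 4 || len % 2 != 0) return 0;
    int i = 0;            /* index of the edge we currently stand on */
    int remaining = len;  /* edges still to be walked */
    do {
        int j = -1;
        for (int k = 0; k < len; k++)      /* the other edge at us[i] */
            if (k != i && us[k] == us[i]) {
                if (j >= 0) return 0;      /* more than two edges meet here */
                j = k;
            }
        if (j < 0) return 0;               /* dead end */
        i = j;
        j = -1;
        for (int k = 0; k < len; k++)      /* the other edge at vs[i] */
            if (k != i && vs[k] == vs[i]) {
                if (j >= 0) return 0;
                j = k;
            }
        if (j < 0) return 0;
        i = j;
        remaining -= 2;
    } while (i != 0 && remaining > 0);
    /* we must be back at edge 0 exactly when all edges are used */
    return i == 0 && remaining == 0;
}
```

The walk alternates between the two partitions, so it uses two edges per round; returning to edge 0 early means the edges split into several shorter cycles, which is rejected.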

5 Solving a proof of work problem

We enumerate the $E$ nonces, but instead of storing the nonce itself as a key in the Cuckoo hashtable, we store the alternate key location at the key location, and forget about the nonce. We thus maintain the directed cuckoo graph, in which the edge for a key is directed from the location where it resides to its alternate location. The outdegree of every node in this graph is either 0 or 1. When there are no cycles yet, the graph is a forest, a disjoint union of trees. In each tree, all edges are directed, directly or indirectly, to its root, the only node in the tree with outdegree 0. Addition of a new key causes a cycle if and only if its two locations are in the same tree, which we can test by following the path from each location to its root. In case of different roots, we reverse all edges on the shorter of the two paths, and finally create the edge for the new key itself, thereby joining the two trees into one. In case of equal roots, we can compute the length of the resulting cycle as 1 plus the sum of the path-lengths to the node where the two paths first join. If the cycle length is $L$, then we solved the problem, and recover the proof by enumerating nonces once more and checking which ones formed the cycle. If not, then we keep the graph acyclic by ignoring the key. There is some probability of overlooking other $L$-cycles that use that key, but in the important low easiness case of having few cycles in the cuckoo graph to begin with, it does not significantly affect the rate of solution finding.
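As a small illustration of the path-following idea, the sketch below adds explicit edges to a directed forest and reports the length of the first cycle closed. It is a simplification under several assumptions: edges are given directly rather than derived from nonces, nodes are numbered from 1 with 0 meaning "no outgoing edge", the path from the first endpoint is always the one reversed (rather than the shorter one), and the names first_cycle_length, MAXNODES and MAXPATH are hypothetical.

```c
#include <assert.h>

#define MAXNODES 64   /* assumed bound on node numbers (1..MAXNODES-1) */
#define MAXPATH  64   /* assumed bound on path length */

/* Record the path from start to its root; returns the index of the root. */
static int path_to_root(const int *next, int start, int *path) {
    int n = 0;
    path[0] = start;
    while (next[path[n]] != 0 && n < MAXPATH - 1) {
        path[n + 1] = next[path[n]];
        n++;
    }
    return n;
}

/* Adds edges (eu[i], ev[i]) one at a time; returns the length of the
 * first cycle closed, or 0 if the edges form a forest. */
int first_cycle_length(const int *eu, const int *ev, int n_edges) {
    int next[MAXNODES] = {0};   /* next[x] = target of x's outgoing edge */
    int us[MAXPATH], vs[MAXPATH];
    for (int e = 0; e < n_edges; e++) {
        int u = eu[e], v = ev[e];
        assert(u > 0 && u < MAXNODES && v > 0 && v < MAXNODES);
        if (next[u] == v || next[v] == u) continue;  /* duplicate edge */
        int nu = path_to_root(next, u, us);
        int nv = path_to_root(next, v, vs);
        if (us[nu] == vs[nv]) {                      /* same tree: a cycle */
            int min = nu < nv ? nu : nv;
            /* align both paths at equal distance from the root,
             * then advance together until they meet */
            for (nu -= min, nv -= min; us[nu] != vs[nv]; nu++, nv++) ;
            return nu + nv + 1;
        }
        while (nu--)                /* reverse the path from u to its root */
            next[us[nu + 1]] = us[nu];
        next[u] = v;                /* u is now a root: attach it to v */
    }
    return 0;
}
```

Reversing the path turns one endpoint into the root of its tree, so the new edge can be added without giving any node an outdegree above 1.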

6 Implementation and performance

The C program listed in the Appendix is also available online at https://github.com/tromp/cuckoo together with a Makefile, proof verifier and this paper. Running make test tests everything. The main program uses 31 bits per node to represent the directed cuckoo graph, reserving the most significant bit for marking edges on a cycle, to simplify recovery of the proof nonces. On my 3.2GHz Intel Core i5, in case no solution is found, size $2^{20}$ takes 4MB and 0.25s, size $2^{25}$ takes 128MB and 10s, and size $2^{30}$ takes 4GB and 400s, a significant fraction of which is spent pointer chasing.
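The bit layout and the memory figures can be reproduced with a few helper functions. This is a sketch with hypothetical names (mark, is_marked, target, table_bytes), assuming one 32-bit word per node as in the description above; it ignores the single extra entry the listed program allocates.

```c
#include <assert.h>
#include <stdint.h>

#define CYCLE_BIT 0x80000000u   /* most significant bit of a 32-bit word */

/* Tag a stored node number as lying on the cycle. */
uint32_t mark(uint32_t node) { return node | CYCLE_BIT; }

/* Is the tag set on this table entry? */
int is_marked(uint32_t word) { return (word & CYCLE_BIT) != 0; }

/* The 31-bit node number stored in this table entry. */
uint32_t target(uint32_t word) { return word & ~CYCLE_BIT; }

/* Bytes needed for a table of 2^shift 32-bit entries. */
uint64_t table_bytes(unsigned shift) {
    return ((uint64_t)1 << shift) * sizeof(uint32_t);
}
```

With 4 bytes per node, sizes $2^{20}$, $2^{25}$ and $2^{30}$ come to 4MB, 128MB and 4GB, in line with the measurements quoted above.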

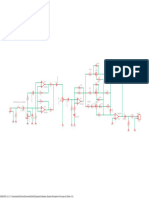

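[Figure: two plots. Left: solution probability (sol.prob., 0 to 1) against the edges/nodes percentage (40 to 70). Right: memory reads and writes per edge (0 to 3) against log size (10 to 30).]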

The left plot above shows the probability of finding a 42-cycle as a function of the percentage edges/nodes, as determined from 10000 runs at size $2^{20}$. The right plot shows the average number of memory reads and writes per edge as a function of log size, as determined from 1000 runs each. It is almost constant at 3.3 reads and 1.75 writes.

7 Memory-hardness

I conjecture that this problem doesn't allow for a time-memory trade-off. If one were to store only a fraction $p$ of $V_0$ and $V_1$, then one could keep only a fraction $p^2$ of generated edges (those with both endpoints stored) and would have to reject the rest, drastically reducing the odds of finding cycles for $p < 1/\sqrt{2}$ (the reduction being exponential in cycle length).

There is one obvious trade-off in the other direction. By doubling the memory used, nonces can be stored alongside the directed edges, which would save the effort of recovering them in the current slow manner. The speedup falls far short of a factor 2 though, so a better use of that memory would be to run another copy in parallel.
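The exponential dependence on cycle length can be made explicit with a rough calculation. It assumes, purely for illustration, that each node is stored independently with probability $p$; an edge is usable only if both endpoints are stored, and an $L$-cycle, which visits $L$ distinct nodes, only if all of them are.

```latex
\[
  \Pr[\text{edge usable}] = p^2,
  \qquad
  \Pr[\text{a given } L\text{-cycle usable}] = p^L .
\]
\[
  \text{For } p = 1/\sqrt{2} \text{ and } L = 42:\quad
  p^L = 2^{-21} \approx 4.8 \times 10^{-7}.
\]
```

Under this assumption, storing about 71% of the nodes already leaves fewer than one in a million of the 42-cycles detectable.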


8 Choice of cycle length

Extremely small cycle lengths risk the feasibility of alternative data structures that are more memory-efficient. For example, for $L = 2$ the problem reduces to finding a birthday collision, for which a Bloom filter would be very effective, as would Rainbow tables. It seems however that the Cuckoo representation might be optimal even for $L = 4$. Such small values still harm the TMTO resistance though, as mentioned in the previous section. In order to keep proof size manageable, the cycle length should not be too large either. We consider 24-64 to be a healthy range, and 42 a nice number close to the middle of that range. The plot below shows the distribution of cycle lengths found for sizes $2^{10}$, $2^{15}$, $2^{20}$, $2^{25}$, as determined from 100000, 100000, 10000, and 10000 runs respectively. The tails of the distributions beyond $L = 100$ are not shown. For reference, the longest cycle found was of length 1726.

[Plot: distribution of cycle lengths. Horizontal axis: cycle length, 0 to 100. Vertical axis: 0 to 0.25. One curve per log size 10, 15, 20, 25.]

9 Scaling memory beyond 16-32 GB

While the current algorithm can accommodate up to $N = 2^{33} - 2$ nodes by a simple change in implementation, a different idea is needed to scale beyond that. To that end, we propose to use $K$-partite graphs with edges only between partition $k$ and partition $(k + 1) \bmod K$, where $k$ is fed into the hash function along with the header and nonce. With each partition consisting of at most $2^{31} - 1$ nodes, the most significant bit is then available to distinguish edges to the two neighbouring partitions. The partition sizes should remain relatively prime, e.g. by picking the largest $K$ primes under $2^{31}$.
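Picking such partition sizes is straightforward, since distinct primes are automatically pairwise relatively prime. The following sketch finds them by trial division; the function names are hypothetical and the approach is only one simple way to do it.

```c
#include <assert.h>
#include <stdint.h>

/* Primality by trial division; adequate for values up to about 2^32. */
int is_prime(uint64_t n) {
    if (n < 2) return 0;
    if (n % 2 == 0) return n == 2;
    for (uint64_t d = 3; d * d <= n; d += 2)
        if (n % d == 0) return 0;
    return 1;
}

/* Writes up to k of the largest primes strictly below limit into out,
 * in descending order, and returns how many were found. */
int largest_primes_below(uint64_t limit, int k, uint64_t *out) {
    int found = 0;
    for (uint64_t n = limit; found < k && n-- > 2; )
        if (is_prime(n))
            out[found++] = n;
    return found;
}
```

Called with a limit of $2^{31}$, the first value returned is $2^{31} - 1$, which is itself prime.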

10 Conclusion

Cuckoo Cycle is an elegant memory-hard proof-of-work design featuring scalability, trivial verification, and strong resistance to speedup by special hardware.

References

[1] Adam Back. Hashcash - a denial of service counter-measure. Technical report, August 2002.

[2] Sunny King. Primecoin: Cryptocurrency with prime number proof-of-work. July 2013.

[3] Satoshi Nakamoto. Bitcoin: A peer-to-peer electronic cash system. May 2009.

[4] Rasmus Pagh and Flemming Friche Rodler. Cuckoo hashing, 2001.

11 Appendix A: cuckoo.c Source Code

// Cuckoo Cycle, a memory-hard proof-of-work
// Copyright (c) 2013-2014 John Tromp

#include "cuckoo.h"

// algorithm parameters
#define MAXPATHLEN 8192

// used to simplify nonce recovery
#define CYCLE 0x80000000

int cuckoo[1+SIZE]; // global; conveniently initialized to zero

int main(int argc, char **argv) {
  // 6 largest sizes 131 928 529 330 729 132 not implemented
  assert(SIZE < (unsigned)CYCLE);
  char *header = argc >= 2 ? argv[1] : "";
  printf("Looking for %d-cycle on cuckoo%d%d(\"%s\") with %d edges\n",
         PROOFSIZE, SIZEMULT, SIZESHIFT, header, EASYNESS);
  int us[MAXPATHLEN], nu, u, vs[MAXPATHLEN], nv, v;
  for (int nonce = 0; nonce < EASYNESS; nonce++) {
    sha256edge(header, nonce, us, vs);
    if ((u = cuckoo[*us]) == *vs || (v = cuckoo[*vs]) == *us)
      continue; // ignore duplicate edges
    // follow the directed edges from each endpoint to its root
    for (nu = 0; u; u = cuckoo[u]) {
      assert(nu < MAXPATHLEN-1);
      us[++nu] = u;
    }
    for (nv = 0; v; v = cuckoo[v]) {
      assert(nv < MAXPATHLEN-1);
      vs[++nv] = v;
    }
#ifdef SHOW
    for (int j=1; j<=SIZE; j++)
      if (!cuckoo[j]) printf("%2d: ",j);
      else printf("%2d:%02d ",j,cuckoo[j]);
    printf(" %x (%d,%d)\n", nonce,*us,*vs);
#endif
    if (us[nu] == vs[nv]) { // same root: the new edge closes a cycle
      int min = nu < nv ? nu : nv;
      // align both paths at equal distance from the root, then advance until they meet
      for (nu -= min, nv -= min; us[nu] != vs[nv]; nu++, nv++) ;
      int len = nu + nv + 1;
      printf("% 4d-cycle found at %d%%\n", len, (int)(nonce*100L/EASYNESS));
      if (len != PROOFSIZE)
        continue; // wrong length: ignore this edge to keep the graph acyclic
      // mark the edges on the cycle with the CYCLE bit
      while (nu--)
        cuckoo[us[nu]] = CYCLE | us[nu+1];
      while (nv--)
        cuckoo[vs[nv+1]] = CYCLE | vs[nv];
      // enumerate nonces once more, downward, to recover those on the cycle
      for (cuckoo[*vs] = CYCLE | *us; len ; nonce--) {
        sha256edge(header, nonce, &u, &v);
        int c;
        if (cuckoo[c=u] == (CYCLE|v) || cuckoo[c=v] == (CYCLE|u)) {
          printf("%2d %08x (%d,%d)\n", --len, nonce, u, v);
          cuckoo[c] &= ~CYCLE;
        }
      }
      break;
    }
    // different roots: reverse the path from *us to its root, then add the new edge
    while (nu--)
      cuckoo[us[nu+1]] = us[nu];
    cuckoo[*us] = *vs;
  }
  return 0;
}

12 Appendix B: cuckoo.h Header File

// Cuckoo Cycle, a memory-hard proof-of-work
// Copyright (c) 2013-2014 John Tromp

#include <stdio.h>
#include <stdint.h>
#include <string.h>
#include <assert.h>
#include <openssl/sha.h>

// proof-of-work parameters
#ifndef SIZEMULT
#define SIZEMULT 1
#endif
#ifndef SIZESHIFT
#define SIZESHIFT 20
#endif
#ifndef EASYNESS
#define EASYNESS (SIZE/2)
#endif
#ifndef PROOFSIZE
#define PROOFSIZE 42
#endif

#define SIZE (SIZEMULT*(1<<SIZESHIFT))
// relatively prime partition sizes
#define PARTU (SIZE/2+1)
#define PARTV (SIZE/2-1)

// generate edge in cuckoo graph from hash(header++nonce)
void sha256edge(char *header, int nonce, int *pu, int *pv) {
  uint32_t hash[8];
  SHA256_CTX sha256;
  SHA256_Init(&sha256);
  SHA256_Update(&sha256, header, strlen(header));
  SHA256_Update(&sha256, &nonce, sizeof(nonce));
  SHA256_Final((unsigned char *)hash, &sha256);
  // reduce the 256-bit hash, one 32-bit word at a time, modulo each partition size
  uint64_t u64 = 0, v64 = 0;
  for (int i = 8; i--; ) {
    u64 = ((u64<<32) + hash[i]) % PARTU;
    v64 = ((v64<<32) + hash[i]) % PARTV;
  }
  // nodes are numbered from 1, so that 0 can mean "no outgoing edge"
  *pu = 1 + (int)u64;
  *pv = 1 + PARTU + (int)v64;
}
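The loop in sha256edge reduces a multi-word number modulo a partition size by Horner's rule. The sketch below isolates that step so it can be tried without OpenSSL; the name mod_words and its interface are hypothetical, and the modulus is assumed to be at most $2^{32}$ so that the intermediate value fits in 64 bits.

```c
#include <assert.h>
#include <stdint.h>

/* Reduce the number whose 32-bit words are words[0..n-1]
 * (words[n-1] most significant) modulo modulus, 1 <= modulus <= 2^32. */
uint32_t mod_words(const uint32_t *words, int n, uint64_t modulus) {
    uint64_t acc = 0;
    for (int i = n; i--; )
        /* acc < modulus <= 2^32, so (acc << 32) + word cannot overflow */
        acc = ((acc << 32) + words[i]) % modulus;
    return (uint32_t)acc;
}
```

For example, the two-word number $2^{32}$ reduces to 6 modulo 10, since $2^{32} = 4294967296$.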
