IEEE 754floatingpt

Uploaded by

Brijesh B Naik

IEEE 754-1985

From Wikipedia, the free encyclopedia

The first IEEE Standard for Binary Floating-Point Arithmetic (IEEE 754-1985) set the standard for floating-point computation for 23 years. It became the most widely used standard for floating-point computation, and is followed by many CPU and FPU implementations. Its binary floating-point formats and arithmetic are preserved in the new IEEE 754-2008 standard which replaced it.

The 754-1985 standard defines formats for representing floating-point numbers (including negative zero and denormal numbers) and special values (infinities and NaNs) together with a set of floating-point operations that operate on these values. It also specifies four rounding modes and five exceptions (including when the exceptions occur, and what happens when they do occur).

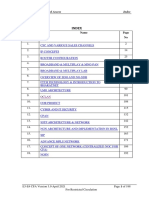

Contents

1 Summary
2 Structure of a floating-point number
    2.1 Bit conventions used in this article
    2.2 General layout
        2.2.1 Exponent biasing
        2.2.2 Cases
    2.3 Single-precision 32-bit
        2.3.1 Notes
        2.3.2 Range and Precision Table
    2.4 A more complex example
    2.5 Double-precision 64 bit
3 Comparing floating-point numbers
4 Rounding floating-point numbers
5 Extending the real numbers
6 Functions and predicates
    6.1 Standard operations
    6.2 Recommended functions and predicates
7 History
8 See also
9 References
10 Further reading
11 External links

Summary

IEEE 754-1985 specifies four formats for representing floating-point values: single-precision (32-bit), double-precision (64-bit), single-extended precision (≥ 43-bit, not commonly used) and double-extended precision (≥ 79-bit, usually implemented with 80 bits). Only 32-bit values are required by the standard; the others are optional. Many languages specify that IEEE formats and arithmetic be implemented, although sometimes it is optional. For example, the C programming language, which pre-dated IEEE 754, now allows but does not require IEEE arithmetic (the C float type is typically IEEE single-precision and double is typically IEEE double-precision).

The full title of the standard is IEEE Standard for Binary Floating-Point Arithmetic (ANSI/IEEE Std 754-1985), and it is also known as IEC 60559:1989, Binary floating-point arithmetic for microprocessor systems (originally the reference number was IEC 559:1989).[1] Later there was an IEEE 854-1987 standard for "radix independent floating point", provided the radix is 2 or 10. In June 2008, a major revision to IEEE 754 and IEEE 854 was approved by the IEEE. See IEEE 754r.

Structure of a floating-point number

The following is a description of the standard's format for floating-point numbers.

Bit conventions used in this article

Bits within a word of width W are indexed by integers in the range 0 to W − 1 inclusive. The bit with index 0 is drawn on the right. Unless otherwise stated, the lowest indexed bit is the LSB (Least Significant Bit, the one that if changed would cause the smallest variation of the represented value).

General layout

Figure: The three fields in an IEEE 754 float

Binary floating-point numbers are stored in a sign-magnitude form where the most significant bit is the sign bit, "exponent" is the biased exponent, and "fraction" is the significand without the most significant bit.

Exponent biasing

The exponent is biased by $2^{e-1} - 1$, where $e$ is the number of bits used for the exponent field (e.g. if $e = 8$, then $2^{8-1} - 1 = 128 - 1 = 127$). See also Excess-N. Biasing is done because exponents have to be signed values in order to be able to represent both tiny and huge values, but two's complement, the usual representation for signed values, would make comparison harder. To solve this, the exponent is biased before being stored, by adjusting its value to put it within an unsigned range suitable for comparison.

For example, to represent a number which has an exponent of 17 in an exponent field 8 bits wide:

$\text{exponent field} = 17 + (2^{8-1} - 1) = 17 + 128 - 1 = 144$
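The bias formula can be checked with a short Python sketch. The function names `exponent_bias` and `biased_exponent` are hypothetical helpers introduced only for this illustration; they are not part of the standard.

```python
def exponent_bias(exponent_bits: int) -> int:
    """Return the bias 2**(e-1) - 1 for an exponent field that is e bits wide."""
    return (1 << (exponent_bits - 1)) - 1


def biased_exponent(exponent: int, exponent_bits: int) -> int:
    """Return the value stored in the exponent field for a true exponent."""
    return exponent + exponent_bias(exponent_bits)


print(exponent_bias(8))         # 127 (8-bit exponent field)
print(exponent_bias(11))        # 1023 (11-bit exponent field)
print(biased_exponent(17, 8))   # 144, the worked example above
```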

Cases

The most significant bit of the significand (not stored) is determined by the value of the biased exponent. If $0 < \text{exponent} < 2^{e} - 1$, the most significant bit of the significand is 1, and the number is said to be normalized. If exponent is 0 and fraction is not 0, the most significant bit of the significand is 0 and the number is said to be de-normalized. Three other special cases arise:

1. if exponent is 0 and fraction is 0, the number is ±0 (depending on the sign bit),
2. if exponent = $2^{e} - 1$ and fraction is 0, the number is ±infinity (again depending on the sign bit), and
3. if exponent = $2^{e} - 1$ and fraction is not 0, the number being represented is not a number (NaN).

This can be summarized as:

| Type | Exponent | Fraction |
|---|---|---|
| Zeroes | 0 | 0 |
| Denormalized numbers | 0 | non zero |
| Normalized numbers | 1 to $2^{e} - 2$ | any |
| Infinities | $2^{e} - 1$ | 0 |
| NaNs | $2^{e} - 1$ | non zero |
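The summary table translates directly into a small classifier. This is a minimal sketch; the function name `classify` and its string labels are assumptions made for illustration, and the inputs are the raw (biased) exponent field and the raw fraction field.

```python
def classify(exponent_field: int, fraction_field: int, exponent_bits: int = 8) -> str:
    """Classify a value from its raw exponent and fraction fields."""
    all_ones = (1 << exponent_bits) - 1  # 2**e - 1, the largest exponent field value
    if exponent_field == 0:
        return "zero" if fraction_field == 0 else "denormalized"
    if exponent_field == all_ones:
        return "infinity" if fraction_field == 0 else "NaN"
    return "normalized"  # exponent field in the range 1 to 2**e - 2


print(classify(0, 0))        # zero
print(classify(0, 1))        # denormalized
print(classify(124, 0x200000))  # normalized
print(classify(255, 0))      # infinity
print(classify(255, 1))      # NaN
```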

Single-precision 32-bit

A single-precision binary floating-point number is stored in 32 bits.

Figure: Bit values for the IEEE 754 32-bit float 0.15625

The exponent is biased by $2^{8-1} - 1 = 127$ in this case (exponents in the range −126 to +127 are representable; see the explanation above for why biasing is done). An exponent of −127 would be biased to the value 0, but this is reserved to encode that the value is a denormalized number or zero. An exponent of 128 would be biased to the value 255, but this is reserved to encode an infinity or not a number (NaN). See the chart above.

For normalized numbers, which are the most common, the exponent is the biased exponent and fraction is the significand without the most significant bit.

The number has value v:

$v = s \times 2^{e} \times m$

where

s = +1 (positive numbers and +0) when the sign bit is 0
s = −1 (negative numbers and −0) when the sign bit is 1
e = exponent − 127 (in other words the exponent is stored with 127 added to it, also called "biased with 127")
m = 1.fraction in binary (that is, the significand is the binary number 1 followed by the radix point followed by the binary bits of the fraction). Therefore, $1 \le m < 2$.

In the example shown above, the sign is zero so s is +1, the exponent is 124 so e is −3, and the significand m is 1.01 (in binary, which is 1.25 in decimal). The represented number is therefore $+1.25 \times 2^{-3}$, which is +0.15625.
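The decoding rule for normalized numbers can be sketched in Python using the standard `struct` module to obtain the 32-bit pattern. The helper name `decode_float32` is hypothetical, and the sketch deliberately handles only normalized values (exponent field 1 to 254), as in the formula above.

```python
import struct


def decode_float32(bits: int):
    """Split a normalized single-precision bit pattern into s, e, m and its value."""
    sign_bit = bits >> 31
    exponent_field = (bits >> 23) & 0xFF   # 8-bit biased exponent
    fraction = bits & 0x7FFFFF             # 23-bit fraction
    s = -1 if sign_bit else 1
    e = exponent_field - 127               # remove the bias
    m = 1 + fraction / 2**23               # implicit leading 1, then the fraction
    return s, e, m, s * m * 2.0**e


# Obtain the bit pattern of 0.15625 as a big-endian 32-bit float.
bits = struct.unpack(">I", struct.pack(">f", 0.15625))[0]
print(hex(bits))             # 0x3e200000
print(decode_float32(bits))  # (1, -3, 1.25, 0.15625)
```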

Notes

1. Denormalized numbers are the same except that e = −126 and m is 0.fraction. (e is not −127: the fraction has to be shifted to the right by one more bit in order to include the leading bit, which is not always 1 in this case. This is balanced by incrementing the exponent to −126 for the calculation.)
2. −126 is the smallest exponent for a normalized number.
3. There are two zeroes, +0 (sign bit is 0) and −0 (sign bit is 1).
4. There are two infinities, +∞ (sign bit is 0) and −∞ (sign bit is 1).
5. NaNs may have a sign and a fraction, but these have no meaning other than for diagnostics; the first bit of the fraction is often used to distinguish signaling NaNs from quiet NaNs.
6. NaNs and infinities have all 1s in the Exp field.
7. The positive and negative numbers closest to zero (represented by the denormalized value with all 0s in the Exp field and the binary value 1 in the Fraction field) are $\pm 2^{-149} \approx \pm 1.4012985 \times 10^{-45}$.
8. The positive and negative normalized numbers closest to zero (represented with the binary value 1 in the Exp field and 0 in the fraction field) are $\pm 2^{-126} \approx \pm 1.175494351 \times 10^{-38}$.
9. The finite positive and finite negative numbers furthest from zero (represented by the value with 254 in the Exp field and all 1s in the fraction field) are $\pm (1 - 2^{-24}) \times 2^{128}$ [2] $\approx \pm 3.4028235 \times 10^{38}$.
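The three limits in notes 7 to 9 can be reproduced from their bit patterns. The following is a sketch that assumes Python's `struct` module with the `">f"` (big-endian IEEE single) format; `float32_from_bits` is a hypothetical helper name.

```python
import struct


def float32_from_bits(bits: int) -> float:
    """Interpret a 32-bit integer as an IEEE 754 single-precision value."""
    return struct.unpack(">f", struct.pack(">I", bits))[0]


smallest_denormal = float32_from_bits(0x00000001)  # Exp = 0, Fraction = 1
smallest_normal = float32_from_bits(0x00800000)    # Exp = 1, Fraction = 0
largest_finite = float32_from_bits(0x7F7FFFFF)     # Exp = 254, Fraction all 1s

print(smallest_denormal == 2.0**-149)                    # True
print(smallest_normal == 2.0**-126)                      # True
print(largest_finite == (1 - 2.0**-24) * 2.0**128)       # True
```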

Here is the summary table from the previous section with some 32-bit single-precision examples:

| Type | Sign | Exp | Exp+Bias | Exponent | Significand (Mantissa) | Value |
|---|---|---|---|---|---|---|
| Zero | 0 | −127 | 0 | 0000 0000 | 000 0000 0000 0000 0000 0000 | 0.0 |
| Negative zero | 1 | −127 | 0 | 0000 0000 | 000 0000 0000 0000 0000 0000 | −0.0 |
| One | 0 | 0 | 127 | 0111 1111 | 000 0000 0000 0000 0000 0000 | 1.0 |
| Minus One | 1 | 0 | 127 | 0111 1111 | 000 0000 0000 0000 0000 0000 | −1.0 |
| Smallest denormalized number | * | −127 | 0 | 0000 0000 | 000 0000 0000 0000 0000 0001 | $\pm 2^{-23} \times 2^{-126} = \pm 2^{-149} \approx \pm 1.4 \times 10^{-45}$ |
| "Middle" denormalized number | * | −127 | 0 | 0000 0000 | 100 0000 0000 0000 0000 0000 | $\pm 2^{-1} \times 2^{-126} = \pm 2^{-127} \approx \pm 5.88 \times 10^{-39}$ |
| Largest denormalized number | * | −127 | 0 | 0000 0000 | 111 1111 1111 1111 1111 1111 | $\pm (1 - 2^{-23}) \times 2^{-126} \approx \pm 1.18 \times 10^{-38}$ |
| Smallest normalized number | * | −126 | 1 | 0000 0001 | 000 0000 0000 0000 0000 0000 | $\pm 2^{-126} \approx \pm 1.18 \times 10^{-38}$ |
| Largest normalized number | * | 127 | 254 | 1111 1110 | 111 1111 1111 1111 1111 1111 | $\pm (2 - 2^{-23}) \times 2^{127} \approx \pm 3.4 \times 10^{38}$ |
| Positive infinity | 0 | 128 | 255 | 1111 1111 | 000 0000 0000 0000 0000 0000 | +∞ |
| Negative infinity | 1 | 128 | 255 | 1111 1111 | 000 0000 0000 0000 0000 0000 | −∞ |
| Not a number | * | 128 | 255 | 1111 1111 | non zero | NaN |

* Sign bit can be either 0 or 1.

Range and Precision Table

Some example range and precision values for given exponents:

| Exponent | Minimum | Maximum | Precision |
|---|---|---|---|
| 0 | 1 | 1.999999880791 | 1.19209289551e-7 |
| 1 | 2 | 3.99999976158 | 2.38418579102e-7 |
| 2 | 4 | 7.99999952316 | 4.76837158203e-7 |
| 10 | 1024 | 2047.99987793 | 1.220703125e-4 |
| 11 | 2048 | 4095.99975586 | 2.44140625e-4 |
| 23 | 8388608 | 16777215 | 1 |
| 24 | 16777216 | 33554430 | 2 |
| 127 | 1.7014e38 | 3.4028e38 | 2.02824096037e31 |

As an example, 16,777,217 cannot be encoded as a 32-bit float, as it will be rounded to 16,777,216. This shows why floating point arithmetic is unsuitable for accounting software. However, all integers within the representable range that are a power of 2 can be stored in a 32-bit float without rounding.
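The rounding of 16,777,217 can be observed by round-tripping a value through a 32-bit float. This sketch assumes Python's `struct` module and the platform's default round-to-nearest mode; `to_float32` is a hypothetical helper name.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (a double) to the nearest single-precision value."""
    return struct.unpack(">f", struct.pack(">f", x))[0]


print(to_float32(16777216.0))              # 16777216.0, exactly representable
print(to_float32(16777217.0))              # 16777216.0, rounded to the even neighbour
print(to_float32(16777218.0))              # 16777218.0, precision is 2 at exponent 24
print(to_float32(2.0**100) == 2.0**100)    # True, powers of 2 in range are exact
```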

A more complex example

Figure: Bit values for the IEEE 754 32-bit float −118.625

The decimal number −118.625 is encoded using the IEEE 754 system as follows:

1. The sign, the exponent, and the fraction are extracted from the original number. Because the number is negative, the sign bit is "1".
2. Next, the number (without the sign; i.e., unsigned, no two's complement) is converted to binary notation, giving 1110110.101. The 101 after the binary point has the value 0.625 because it is the sum of:
    1. $2^{-1} \times 1$, from the first digit after the binary point
    2. $2^{-2} \times 0$, from the second digit
    3. $2^{-3} \times 1$, from the third digit.
3. That binary number is then normalized; that is, the binary point is moved left, leaving only a 1 to its left. The number of places it is moved gives the (power of two) exponent: 1110110.101 becomes $1.110110101 \times 2^{6}$. After this process, the first binary digit is always a 1, so it need not be included in the encoding. The rest is the part to the right of the binary point, which is then padded with zeros on the right to make 23 bits in all, which becomes the significand bits in the encoding: that is, 11011010100000000000000.
4. The exponent is 6. This is encoded by converting it to binary and biasing it (so the most negative encodable exponent is 0, and all exponents are non-negative binary numbers). For the 32-bit IEEE 754 format, the bias is +127 and so 6 + 127 = 133. In binary, this is encoded as 10000101.
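The result of these steps can be cross-checked by letting Python produce the encoding and splitting it into the three fields. This is only a verification sketch using the standard `struct` module; the variable names are illustrative.

```python
import struct

# Encode -118.625 as a big-endian IEEE 754 single and read it back as an integer.
bits = struct.unpack(">I", struct.pack(">f", -118.625))[0]
pattern = f"{bits:032b}"

sign = pattern[0]        # 1 bit
exponent = pattern[1:9]  # 8 bits, biased
fraction = pattern[9:]   # 23 bits

print(sign, exponent, fraction)  # 1 10000101 11011010100000000000000
print(int(exponent, 2) - 127)    # 6, the unbiased exponent
```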

Double-precision 64 bit

Figure: The three fields in a 64-bit IEEE 754 float

Double precision is essentially the same except that the fields are wider. The fraction part is much larger, while the exponent is only slightly larger. NaNs and infinities are represented with Exp being all 1s (2047). If the fraction part is all zero then it is Infinity, else it is NaN.

For normalized numbers the exponent bias is +1023 (so e is exponent − 1023). For denormalized numbers the exponent is −1022 (the minimum exponent for a normalized number; it is not −1023 because normalized numbers have a leading 1 digit before the binary point and denormalized numbers do not). As before, both infinity and zero are signed.

Notes:

1. The positive and negative numbers closest to zero (represented by the denormalized value with all 0s in the Exp field and the binary value 1 in the Fraction field) are $\pm 2^{-1074} \approx \pm 5 \times 10^{-324}$.
2. The positive and negative normalized numbers closest to zero (represented with the binary value 1 in the Exp field and 0 in the fraction field) are $\pm 2^{-1022} \approx \pm 2.2250738585072014 \times 10^{-308}$.
3. The finite positive and finite negative numbers furthest from zero (represented by the value with 2046 in the Exp field and all 1s in the fraction field) are $\pm (1 - (1/2)^{53}) \times 2^{1024}$ [2] $\approx \pm 1.7976931348623157 \times 10^{308}$.
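The double-precision limits can be checked the same way as the single-precision ones. This sketch assumes that Python's `float` is an IEEE 754 double, which holds on common platforms but is not guaranteed by the language; `float64_from_bits` is a hypothetical helper name.

```python
import math
import struct
import sys


def float64_from_bits(bits: int) -> float:
    """Interpret a 64-bit integer as an IEEE 754 double-precision value."""
    return struct.unpack(">d", struct.pack(">Q", bits))[0]


smallest_denormal = float64_from_bits(0x0000000000000001)  # Exp = 0, Fraction = 1
smallest_normal = float64_from_bits(0x0010000000000000)    # Exp = 1, Fraction = 0
largest_finite = float64_from_bits(0x7FEFFFFFFFFFFFFF)     # Exp = 2046, Fraction all 1s

print(smallest_denormal == math.ldexp(1.0, -1074))  # True
print(smallest_normal == sys.float_info.min)        # True
print(largest_finite == sys.float_info.max)         # True
```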

Comparing floating-point numbers

Every possible bit combination is either a NaN or a number with a unique value in the affinely extended real number system with its associated order, except for the two bit combinations negative zero and positive zero, which sometimes require special attention (see below). The binary representation has the special property that, excluding NaNs, any two numbers can be compared like sign-and-magnitude integers (although with modern computer processors this is no longer directly applicable): if the sign bits differ, the negative number precedes the positive number (except that negative zero and positive zero should be considered equal); otherwise, relative order is the same as lexicographical order, but inverted for two negative numbers. Endianness issues apply.
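The sign-and-magnitude ordering property can be demonstrated by sorting values with an integer key derived from their bit patterns. This is a sketch under stated assumptions: `float32_bits` and `ordering_key` are hypothetical helpers, NaNs are excluded, and both zeroes map to the same key so that they compare equal.

```python
import struct


def float32_bits(x: float) -> int:
    """Return the 32-bit pattern of x rounded to single precision."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


def ordering_key(x: float) -> int:
    """Map a non-NaN value to an integer that sorts in the same order."""
    bits = float32_bits(x)
    magnitude = bits & 0x7FFFFFFF          # exponent and fraction fields
    # Negative numbers sort in reverse order of their magnitude bits.
    return -magnitude if bits >> 31 else magnitude


values = [3.5, -0.0, 1e-40, -2.0, 0.0, float("inf"), -1e30, float("-inf"), 0.15625]
sorted_by_bits = sorted(values, key=ordering_key)
sorted_by_value = sorted(values)

print(sorted_by_bits == sorted_by_value)  # True
```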

Floating-point arithmetic is subject to rounding that may affect the outcome of

comparisons on the results of the computations.

Although negative zero and positive zero are generally considered equal for comparison purposes, some programming language relational operators and similar constructs might or do treat them as distinct. According to the Java Language Specification,[3] comparison and equality operators treat them as equal, but Math.min() and Math.max() distinguish them (officially starting with Java version 1.1 but actually with 1.1.1), as do the comparison methods equals(), compareTo() and even compare() of classes Float and Double. For C++, the standard does not have anything to say on the subject, so it is important to verify this (one environment tested treated them as equal when using a floating-point variable and treated them as distinct and with negative zero preceding positive zero when comparing floating-point literals).

Rounding floating-point numbers

The IEEE standard has four different rounding modes; the first is the default; the others are called directed roundings.

- Round to Nearest: rounds to the nearest value; if the number falls midway it is rounded to the nearest value with an even (zero) least significant bit, which occurs 50% of the time (in IEEE 754r this mode is called roundTiesToEven to distinguish it from another round-to-nearest mode)
- Round toward 0: directed rounding towards zero
- Round toward +∞: directed rounding towards positive infinity
- Round toward −∞: directed rounding towards negative infinity.
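The difference between the four modes is easiest to see on a coarse grid. The sketch below rounds exact rationals to integers rather than to a binary significand, so it only illustrates the rules and does not change any hardware rounding mode; the function name `round_to_integer` and the mode strings are hypothetical.

```python
import math
from fractions import Fraction


def round_to_integer(x: Fraction, mode: str) -> int:
    """Round an exact rational to an integer under one of the four modes."""
    lower = math.floor(x)   # nearest integer at or below x
    upper = math.ceil(x)    # nearest integer at or above x
    if mode == "toward_positive":
        return upper
    if mode == "toward_negative":
        return lower
    if mode == "toward_zero":
        return lower if x >= 0 else upper
    if mode == "nearest_even":
        if x - lower < upper - x:
            return lower
        if x - lower > upper - x:
            return upper
        # Exactly midway: pick the neighbour whose last digit is even.
        return lower if lower % 2 == 0 else upper
    raise ValueError(f"unknown rounding mode: {mode}")


for mode in ("nearest_even", "toward_zero", "toward_positive", "toward_negative"):
    print(mode, round_to_integer(Fraction(5, 2), mode), round_to_integer(Fraction(-5, 2), mode))
```

For 5/2 the modes give 2, 2, 3 and 2 respectively; for −5/2 they give −2, −2, −2 and −3.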

Extending the real numbers

The IEEE standard employs (and extends) the affinely extended real number system, with separate positive and negative infinities. During drafting, there was a proposal for the standard to incorporate the projectively extended real number system, with a single unsigned infinity, by providing programmers with a mode selection option. In the interest of reducing the complexity of the final standard, however, the projective mode was dropped. The Intel 8087 and Intel 80287 floating point co-processors both support this projective mode.[4][5][6]

Functions and predicates

Standard operations

The following functions must be provided:

- Add, subtract, multiply, divide
- Square root
- Floating point remainder. This is not like a normal modulo operation; it can be negative for two positive numbers. It returns the exact value of x − (round(x/y) × y).
- Round to nearest integer. For undirected rounding when halfway between two integers the even integer is chosen.
- Comparison operations. NaN is treated specially in that NaN = NaN always returns false.
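Two of these operations behave in ways that often surprise newcomers, and Python's standard library exposes both. The sketch below uses `math.remainder` (available since Python 3.7), which implements the IEEE 754-style remainder, and contrasts it with `math.fmod`.

```python
import math

# IEEE-style remainder: x - round(x / y) * y, which can be negative
# even when both operands are positive.
print(math.remainder(5.0, 3.0))   # -1.0, because 5/3 rounds to 2
print(math.fmod(5.0, 3.0))        # 2.0, the truncating remainder

# Halfway cases round the quotient to the even integer.
print(math.remainder(3.0, 2.0))   # -1.0, because 1.5 rounds to 2

# NaN never compares equal to anything, including itself.
nan = float("nan")
print(nan == nan)                 # False
```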

Recommended functions and predicates

- Under some C compilers, copysign(x,y) returns x with the sign of y, so abs(x) equals copysign(x,1.0). This is one of the few operations which operates on a NaN in a way resembling arithmetic. The function copysign is new in the C99 standard.
- −x returns x with the sign reversed. This is different from 0 − x in some cases, notably when x is 0. So −(0) is −0, but the sign of 0 − 0 depends on the rounding mode.
- scalb (y, N)
- logb (x)
- finite (x): a predicate for "x is a finite value", equivalent to −Inf < x < Inf
- isnan (x): a predicate for "x is a NaN", equivalent to "x ≠ x"
- x <> y, which turns out to have different exception behavior than NOT(x = y).
- unordered (x, y) is true when "x is unordered with y", i.e., either x or y is a NaN.
- class (x)
- nextafter(x,y) returns the next representable value from x in the direction towards y
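Several of these recommended functions have close counterparts in Python's `math` module, which makes the behaviours above easy to try. This is an illustrative sketch, not a mapping defined by the standard; the `unordered` helper is hypothetical, and the sign of `0.0 - 0.0` shown here assumes the default round-to-nearest mode.

```python
import math


def unordered(x: float, y: float) -> bool:
    """True when either operand is a NaN (hypothetical helper)."""
    return math.isnan(x) or math.isnan(y)


nan = float("nan")
inf = float("inf")

print(math.copysign(3.0, -0.0))         # -3.0, the sign is taken from negative zero
print(math.copysign(1.0, -(0.0)))       # -1.0, negating zero gives negative zero
print(math.copysign(1.0, 0.0 - 0.0))    # 1.0 under round-to-nearest
print(math.isfinite(inf), -inf < 1.0 < inf)  # False True
print(math.isnan(nan), nan != nan)      # True True
print(unordered(1.0, nan))              # True
```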

History

In 1976 Intel began planning to produce a floating point coprocessor. Dr John Palmer, the manager of the effort, persuaded them that they should try to develop a standard for all their floating point operations. William Kahan was hired as a consultant; he had helped improve the accuracy of Hewlett Packard's calculators. Kahan initially recommended that the floating point base be decimal,[7] but the hardware design of the coprocessor was too far advanced to make that change.

The work within Intel worried other vendors, who set up a standardization effort to ensure a 'level playing field'. Kahan attended the second IEEE 754 standards working group meeting, held in November 1977. Here, he received permission from Intel to put forward a draft proposal based on the standard arithmetic part of their design for a coprocessor. The formats were similar to but slightly different from existing VAX and CDC formats and introduced gradual underflow. The arguments over gradual underflow lasted until 1981, when an expert commissioned by DEC to assess it sided against the dissenters.

Even before it was approved, the draft standard had been implemented by a number of manufacturers.[8][9]

The Zuse Z3, the world's first working computer, implemented floating point with infinities and undefined values which were passed through operations. These were not implemented properly in hardware in any other machine until the Intel 8087, which was announced in 1980. This was the first chip to implement the draft standard.

See also

- IEEE 754-2008
- −0 (negative zero)
- Intel 8087
- minifloat, for simple examples of properties of IEEE 754 floating point numbers
- Q (number format), for constant resolution

References

1. ^ "Referenced documents". "IEC 60559:1989, Binary Floating-Point Arithmetic for Microprocessor Systems (previously designated IEC 559:1989)".
2. ^ a b Prof. W. Kahan. Lecture Notes on the Status of IEEE 754 (PDF). October 1, 1997 3:36 am. Elect. Eng. & Computer Science, University of California. Retrieved 2007-04-12.
3. ^ The Java Language Specification.
4. ^ John R. Hauser (March 1996). "Handling Floating-Point Exceptions in Numeric Programs" (PDF). ACM Transactions on Programming Languages and Systems 18 (2).
5. ^ David Stevenson (March 1981). "IEEE Task P754: A proposed standard for binary floating-point arithmetic". IEEE Computer 14 (3): 51-62.
6. ^ William Kahan and John Palmer (1979). "On a proposed floating-point standard". SIGNUM Newsletter 14 (Special): 13-21. doi:10.1145/1057520.1057522.
7. ^ W. Kahan 2003, pers. comm. to Mike Cowlishaw and others after an IEEE 754 meeting.
8. ^ Charles Severance (20 February 1998). "An Interview with the Old Man of Floating-Point".
9. ^ Charles Severance. "History of IEEE Floating-Point Format". Connexions.

Further reading

- Charles Severance (March 1998). "IEEE 754: An Interview with William Kahan" (PDF). IEEE Computer 31 (3): 114-115. doi:10.1109/MC.1998.660194. Retrieved 2008-04-28.
- David Goldberg (March 1991). "What Every Computer Scientist Should Know About Floating-Point Arithmetic" (PDF). ACM Computing Surveys 23 (1): 5-48. doi:10.1145/103162.103163. Retrieved 2008-04-28.
- Chris Hecker (February 1996). "Let's Get To The (Floating) Point" (PDF). Game Developer Magazine: 19-24. ISSN 1073-922X.
- David Monniaux (May 2008). "The pitfalls of verifying floating-point computations". ACM Transactions on Programming Languages and Systems 30 (3): article #12. doi:10.1145/1353445.1353446. ISSN 0164-0925. A compendium of non-intuitive behaviours of floating-point on popular architectures, with implications for program verification and testing.

External links

Comparing floats

Coprocessor.info: x87 FPU pictures, development and manufacturer

information

IEEE 754 references

IEEE 854-1987 History and minutes

Online IEEE 754 Calculators

IEEE754 (Single and Double precision) Online Converter


This page was last modified on 26 February 2010 at 20:14.

Text is available under the Creative Commons Attribution-ShareAlike License;

additional terms may apply. See Terms of Use for details.
