Automatica, Vol. 6, pp. 271-287. Pergamon Press, 1970. Printed in Great Britain.

An Instrumental Variable Method for Real-time Identification of a Noisy Process*

Une méthode de la variable opératoire pour l'identification en temps réel d'un procédé contenant du bruit

Eine Hilfsvariablenmethode zur Kennwertermittlung an einem stochastisch gestörten Prozeß im Echtzeitbetrieb

Метод оперативной переменной для опознавания в действительном времени объекта с шумом

P. C. YOUNG†

A practical real-time process identification scheme must be relatively simple yet have a

reasonable basis in statistical estimation theory. The Instrumental Variable Equation-Error Method represents one attempt to satisfy these requirements.

Summary: The problem of identifying a dynamic process

from its normal operating data has received considerable

attention in recent years. The various techniques developed

range from largely deterministic procedures to sophisticated

statistical methods based on the results of optimal estimation

theory. The instrumental variable (IV) technique outlined

in this paper is intended as a compromise between these two

extremes; it has a basis in classical statistical estimation

theory, but does not require a priori information on the

signal and noise statistics.

The paper describes an IV approach to the problem of

identifying a linear process described by a differential

equation model and outlines the development of a simple

digital recursive estimation algorithm. It also discusses

briefly how the choice of input signal and the form of the

mathematical model can affect the identifiability of a process.

Finally, a number of representative experimental results are

included both to demonstrate the practical feasibility of this

particular approach to process identification, and to show

that it can be used to estimate either time invariant or

slowly variable process parameters.

* Received 17 March 1969; revised 14 July 1969. The original version of this paper was presented at the 4th IFAC Congress, which was held in Warsaw, Poland during June 1969. It was recommended for publication in revised form by associate editor A. Sage.

† Control Group, Department of Engineering, University of Cambridge, England.

INTRODUCTION

THE PROBLEM of identifying a dynamic process from its normal operating data has received considerable attention in recent years [1]. The various techniques developed range from largely deterministic procedures [2] to elegant statistical methods based on the results of optimal estimation theory [3]. The instrumental variable (IV) technique outlined in this paper is intended as a compromise between these two extremes; it has a basis in classical statistical estimation theory, but does not require a priori information on the signal and noise statistics.

The IV approach to least squares parameter estimation has its foundations in classical statistical estimation theory, where it represents one approach to the problem of estimating structural model parameters [4, 5]. Probably the first application of the IV method in the field of process identification was by P. JOSEPH, J. LEWIS and J. TOU [6]. Although they did not refer to it by name, Joseph et al. suggested an IV procedure for identifying the parameters of a process described by a difference equation model. Subsequently, R. E. ANDEEN and P. P. SHIPLEY [7] proposed an improved version of the Joseph et al. method and applied it to the theoretical design of a digital adaptive flight control system for an aero-space vehicle. Shortly afterwards V. S. LEVADI [8] described the mechanisation of a purely analog IV method for identifying a linear dynamic process described by a differential equation model.

The identification scheme described in the present paper is mechanised in a hybrid (analog-digital) form. Here, the measured signals obtained from an unknown single-input, single-output process are passed through analog "state variable filters"‡ and then sampled to provide the input data to a simple digital estimation algorithm. This special recursive least squares algorithm was first suggested in an unpublished research note [10]. The overall estimation procedure can be considered as a hybrid development of Levadi's purely analog approach and it also represents a logical extension of earlier differential equation-error estimation schemes described by the author [11-13].

‡ A term first used in Ref. [9].

The IV identification procedure is intended

primarily as a general method for identifying the

time-invariant or slowly variable parameters of

a continuous single-input, single-output dynamic

process. However, it can be extended directly for

use with both difference equation models and multi-input, single-output processes. In addition certain

multivariable problems can be handled in a similar

manner provided they possess a suitable structural

form.

THE GENERAL PROBLEM

Consider a single-input dynamic process that can

be described by a linear differential equation model

u

d"x

~t

dmu

n~=oan-~:U"~m~=lbm'~

(1)

where u=u(t) is the input command signal and

x = x ( t ) is the process output response to u(t). The

parameters a, and bm are a set of M + N + 1 unknown coefficients which may have time variations

that are slow in comparison with the response time

of the process. If x,, n = O ~ N and u,,, m=O--*M

are used to denote d"x]dt" and dmu/dt m, respectively, then Eq. (1) can be written

M

anXn=UO-k Z bmum

n=O

m=l

(2)

which can be converted into the alternative vector

equation

(3)

where

x =Exoxl..,

uM]

aT=[aoal ... aN-bl-b2

. . . --bM].

GENERALIZED EQUATION ERROR METHOD

Equations (3), (5), and (6) represent a fairly

general model for a single-input linear dynamic

process with unmeasurable input disturbances and

measurement noise. The nature of the observation

matrix H, and therefore the observed vector y is

dependent upon the particular process under

investigation. In this paper only the classical singleinput, single-output process is considered in detail,

and so it is assumed that H is of the special form,

H=[100...0]

x~a=uo

vector, x a. The symbol ~ is a j random vectol

denoting the noise present on the observations.

By the principle of superposition for linear systems,

it can be assumed that ~ represents the combined

effects of all unmeasurable disturbances and

measurement noise affecting the process.

For

generality, the observed input signal, v, is contaminated by measurement noise, n, which does

not enter the process.

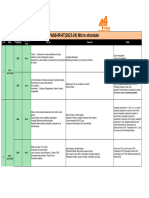

The overall signal topology of the system as described by Eqs. (3), (5) and (6) is shown in Fig. l(a).

Identifying this process during normal operation

is a problem of statistical parameter estimation.

To solve such a problem, it is necessary to choose

a method for utilizing the observed data, y and v,

to derive an estimate ~ of a, whereby the resulting

estimated model will adequately describe the

dynamic characteristics of the process.

(4)

Equation (3) does not supply details of any

unmeasurable disturbances or measurement noise

that may affect the process, nor does it provide a

picture of the process as seen by an external observer. It will be assumed here that these details can

be included by adjoining the observation equations.

y = Hx A+ ~

(5)

v = Uo+ 11.

(6)

Here, H is a j x ( M + N + I )

observation matrix

relating the observed ./ vector,

Y=[YoYJ-..35"-1] T, with the augmented state

and y is reduced to a scalar observation Yo as

defined by,

Yo = Xo + ~o.

(7)

Although this model is a special case, it is of

particular interest since many practical single-input

processes are of this type. The identification procedure can be extended to handle other forms of

mathematical model, however, and this is discussed briefly in a subsequent section.

Previous publications by the author describe a

fairly restricted approach to the problem of identifying a process described by Eqs. (3), (6) and (7) which

can be used with either continuous [l l, 12] or

discrete [11, 13] data. This approach is based on

the definition of an "estimation model" that obeys

a similar differential equation law to that of the

basic process, but which does not include pure

time derivatives of the process input and output

signals. This estimation model is obtained by

operating on each of the terms appearing in the

differential Eq. (1) with a linear time invariant filter,

Di, where in general

(j<M+N+I)

Di(s) = P~(s)/Qi(s)

(8)

An instrumental variable method for real-time identification of a noisy process

- I

273

PROCESS

-I

M+N+

= Uo

V_. = H x A +

I

i

V = Uo+

(a)

I

U0

_]

STATE

VARIABLE

FILTERS

PROCESS

I

=

VARIABLE

FILTERS

z = XAD + ~D

Ill

uo

--

+ no

D i Is)

I

(b)

FIG. 1. Signal topology of (a) a general single input process; (b) single-input, single-output process with

state variable filters.

and $P_i(s)$, $Q_i(s)$ are constant coefficient polynomials in the Laplace operator, $s$, with orders $I$ and $J$ respectively. The subscript $i$ in (8) merely emphasises the fact that the filter is not unique, but is a single member of a whole class of filters. If $a_n$ and $b_m$ are assumed constant, then it is possible to write the following identities:

$$D_i\{a_n x_n(t)\} = a_n D_i\{x_n(t)\} = a_n[(x_n)]_{D_i}, \qquad D_i\{b_m u_m(t)\} = b_m D_i\{u_m(t)\} = b_m[(u_m)]_{D_i}, \tag{9}$$

where the terms $[(x_n)]_{D_i}$ and $[(u_m)]_{D_i}$ represent the response of the filter to inputs $x_n$ and $u_m$, respectively.

Equation (9) states that because the process is linear and the parameters $a_n$ and $b_m$ are time invariant, the operator $D_i$ commutes with the functions $a_n x_n$ and $b_m u_m$. If this is the case, then it has been shown [11, 13] that the parameters $a_n$ and $b_m$ can be related by the estimation model

$$\sum_{n=0}^{N} a_n[(x_n)]_{D_i} = [(u_0)]_{D_i} + \sum_{m=1}^{M} b_m[(u_m)]_{D_i}. \tag{10}$$

This relationship is valid subsequent to an initial small interval of time, $\varepsilon$, following the initiation of the filtration at $t = t_0$ provided that: (1) the form of $D_i(s)$ is such that any initial conditions on the process variables at $t = t_0$ have insignificant effect on the filter outputs $[(x_n)]_{D_i}$ and $[(u_m)]_{D_i}$ for all time, $t > t_0 + \varepsilon$; (2) the frequency bandwidth of the filter $D_i(s)$ approximately encompasses the frequency band covered by the differential equation model of the process; in other words, the frequency band of interest in the identification. Condition 1 is required so that the deleterious effects of any initial condition on the measured state are removed as soon as possible. It simply implies that the filter should have well damped transient response characteristics and a frequency bandwidth which is as high as possible consistent with condition 2.

The significance of Eq. (10) is that it provides a relationship between the unknown parameters $a_n$ and $b_m$ that is capable of replacing the original differential Eq. (2) for identification purposes. The practical utility of the estimation model becomes

clearer if the commutation carried out in Eq. (9) is taken one step further, i.e.

$$[(x_n)]_{D_i} = [(x_0)]_{D_{in}} \qquad \text{and} \qquad [(u_m)]_{D_i} = [(u_0)]_{D_{im}},$$

where $D_{in}(s) = s^n D_i(s)$; $D_{im}(s) = s^m D_i(s)$. It is now clear that the estimation model can be written in the alternative form

$$\sum_{n=0}^{N} a_n[(x_0)]_{D_{in}} = [(u_0)]_{D_{i0}} + \sum_{m=1}^{M} b_m[(u_0)]_{D_{im}}, \tag{11}$$

in which the variables $[(x_0)]_{D_{in}}$ and $[(u_0)]_{D_{im}}$ represent the response of the state variable filters $D_{in}$ and $D_{im}$ to the process output, $x_0$, and input, $u_0$, respectively. The filters can be made physically realisable provided the order $J$ of $Q_i(s)$ is selected so that pure differentiation, with all its attendant practical limitations, is not specified. This requires that $J$ should be made greater than or equal to the total sum of the order $I$ of $P_i(s)$ and the order of the maximum differential coefficient appearing in the model of the process. Since for most physical processes $N \ge M$, this condition is usually of the form $J \ge I + N$.

The high-pass or D.C. blocking filter is the simplest example of a physically realisable single dimensional state variable filter [12]. Other related but more subtle multi-dimensional state variable filter mechanisations are the method of KOHR [14] and the method of multiple filters [11, 13]. A more detailed discussion regarding the choice of particular state variable filter configurations and the general philosophy of the state variable filter approach is given in Ref. [15].

The reader may detect a superficial similarity between the function of the state variable filter and that of the state observer suggested by D. G. LUENBERGER [16, 17]. However, the state variable filter described here is a much cruder device than the observer; its purpose is merely to avoid direct differentiation of the measured signals and not to reproduce the unmeasurable state variables of the process. As a result, state variable filter synthesis uses only a minimum of a priori information, namely the approximate process bandwidth and the order of the differential equation model; it does not require detailed knowledge of the process dynamics as in the case of the observer.

In any practical situation, the process output, $x_0$, and input, $u_0$, are not directly measurable and must be replaced by their observed values, $y_0$ and $v$, respectively. The signal topology of the system obtained in this manner is shown in Fig. 1(b). The estimation model Eq. (11) must now be modified as shown in Eq. (12).

$$\sum_{n=0}^{N} a_n[(y_0)]_{D_{in}} = [(v)]_{D_{i0}} + \sum_{m=1}^{M} b_m[(v)]_{D_{im}}. \tag{12}$$

This can be written in the alternative vector form

$$\mathbf{z}^T\mathbf{a} = w \tag{13}$$

where

$$\mathbf{z}^T = \big[[(y_0)]_{D_{i0}} \; [(y_0)]_{D_{i1}} \, \dots \, [(y_0)]_{D_{iN}} \; [(v)]_{D_{i1}} \, \dots \, [(v)]_{D_{iM}}\big]$$

and

$$w = [(v)]_{D_{i0}}.$$

Note that by the principle of superposition, the following relationships are also true [see Fig. 1(b)]:

$$\mathbf{z}^T = \mathbf{x}_{AD}^T + \boldsymbol{\xi}_D^T = \big[[(x_0)]_{D_{i0}} \, \dots \, [(u_0)]_{D_{iM}}\big] + \big[[(\xi_0)]_{D_{i0}} \, \dots \, [(\eta)]_{D_{iM}}\big]$$

$$w = u_D + \eta_D = [(u_0)]_{D_{i0}} + [(\eta)]_{D_{i0}} \tag{14}$$
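The state variable filter idea can be illustrated with a minimal noise-free sketch. Everything specific here is an assumption made for illustration and is not taken from the paper: a first-order process $a_1\,dx/dt + a_0 x = u$, the filter $D_i(s) = \lambda/(s+\lambda)$, forward-Euler integration standing in for the analog hardware, and the function name `simulate_and_filter`. Because $sD_i(s) = \lambda(1 - D_i(s))$, the filtered derivative is formed without differentiating any signal.

```python
import numpy as np

def simulate_and_filter(a0=2.0, a1=0.5, lam=10.0, dt=1e-3, steps=5000, seed=0):
    """Euler-simulate a1*dx/dt + a0*x = u and pass x and u through D(s) = lam/(s + lam)."""
    rng = np.random.default_rng(seed)
    # Piecewise-constant random input (hypothetical excitation signal).
    u = np.repeat(rng.standard_normal(steps // 50), 50)
    x = np.zeros(steps)    # process output x0
    fx = np.zeros(steps)   # x0 filtered by D(s)
    fu = np.zeros(steps)   # u0 filtered by D(s)
    for k in range(steps - 1):
        x[k + 1] = x[k] + dt * (u[k] - a0 * x[k]) / a1
        fx[k + 1] = fx[k] + dt * lam * (x[k] - fx[k])
        fu[k + 1] = fu[k] + dt * lam * (u[k] - fu[k])
    # s*D(s) = lam*(1 - D(s)): the "filtered derivative" is lam*(x - fx).
    z = np.column_stack([fx, lam * (x - fx)])  # [(x0)]_{D_i0}, [(x0)]_{D_i1}
    w = fu                                     # [(u0)]_{D_i0}
    return z, w

z, w = simulate_and_filter()
# Fit z^T a = w in the least squares sense; the result approximates [a0, a1].
a_hat, *_ = np.linalg.lstsq(z, w, rcond=None)
print(a_hat)
```

With zero initial conditions and no noise, the filtered signals satisfy the estimation model at every sample, so the fit recovers the two coefficients; with noisy measurements the same construction yields the vector $\mathbf{z}$ and scalar $w$ of Eq. (13).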

Referring to Eq. (13), it is now possible to define a generalised equation error function, $e_i$, at an arbitrary $i$th instant of time by the relationship

$$e_i = \mathbf{z}_i^T\hat{\mathbf{a}} - w_i. \tag{15}$$

Here, $\hat{\mathbf{a}}$ represents an estimate of the parameter vector, $\mathbf{a}$, and the suffix, $i$, denotes values applying at the $i$th instant. The estimate, $\hat{\mathbf{a}}$, can be obtained by minimising some positive-definite criterion function in the generalised equation error.

If the parameters are assumed time invariant, a useful criterion function is the sum of the squares over the observation interval, $J_2$. For a set of $k$ observations, $J_2$ takes the form

$$J_2 = \sum_{i=1}^{k} [\mathbf{z}_i^T\hat{\mathbf{a}} - w_i]^2. \tag{16}$$

The estimate, $\hat{\mathbf{a}}_k$, that minimises this function can be obtained by differentiating with respect to $\hat{\mathbf{a}}$ and equating to zero; i.e.

$$\nabla_{\hat{\mathbf{a}}} J_2 = 2\Big[\sum_{i=1}^{k} \mathbf{z}_i\mathbf{z}_i^T\Big]\hat{\mathbf{a}}_k - 2\sum_{i=1}^{k} \mathbf{z}_i w_i = 0.$$

The solution of these $M+N+1$ linear simultaneous algebraic equations is given by

$$\hat{\mathbf{a}}_k = C_k^{-1}B_k = P_k B_k$$

where

$$P_k^{-1} = C_k = \sum_{i=1}^{k} \mathbf{z}_i\mathbf{z}_i^T$$

and

$$B_k = \sum_{i=1}^{k} \mathbf{z}_i w_i. \tag{17}$$
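As a small illustration of Eq. (17), the sketch below forms $C_k$ and $B_k$ from a stack of sample vectors and solves the resulting linear equations. The data are synthetic and noise-free, and the function name `batch_equation_error` and the parameter values are hypothetical choices made for this example only.

```python
import numpy as np

def batch_equation_error(Z, w):
    """Solve (sum z_i z_i^T) a = sum z_i w_i; each row of Z is one z_i^T."""
    C = Z.T @ Z   # C_k = sum of z_i z_i^T
    B = Z.T @ w   # B_k = sum of z_i w_i
    return np.linalg.solve(C, B)

rng = np.random.default_rng(1)
a_true = np.array([2.0, 0.5, -0.3])   # hypothetical parameter vector
Z = rng.standard_normal((200, 3))     # synthetic noise-free z_i
w = Z @ a_true                        # w_i = z_i^T a, as in Eq. (13)
a_hat = batch_equation_error(Z, w)
print(a_hat)
```

Solving the linear system directly, rather than forming $C_k^{-1}$ explicitly, is a numerical convenience of this sketch and not something the paper prescribes.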

The similarity in form between these equations and the 'normal equations' of multivariable linear regression analysis [4] is important and should be noted.

In Eq. (17), $\hat{\mathbf{a}}$ can be obtained by direct matrix inversion, or by using a gradient technique, such as the method of conjugate gradients [15, 18]. However, an alternative approach is available, which also avoids matrix inversion and is more suitable for real-time applications. This technique uses a particular recursive solution of linear least squares problems of this type, where the parameter estimate is updated as new information is received. The derivation of this algorithm is straightforward; it is merely a stepwise solution of the fixed sample length problem [15, 19, 20]. In the recursive algorithm, Eq. (17) is replaced by

$$\hat{\mathbf{a}}_k = \hat{\mathbf{a}}_{k-1} - P_{k-1}\mathbf{z}_k[\mathbf{z}_k^T P_{k-1}\mathbf{z}_k + 1]^{-1}\{\mathbf{z}_k^T\hat{\mathbf{a}}_{k-1} - w_k\} \tag{Ia}$$

or

$$\hat{\mathbf{a}}_k = \hat{\mathbf{a}}_{k-1} - P_k\{\mathbf{z}_k\mathbf{z}_k^T\hat{\mathbf{a}}_{k-1} - \mathbf{z}_k w_k\}.$$

Here, the matrix $P_k$ is given by a second recursive relationship,

$$P_k^{-1} = P_{k-1}^{-1} + \mathbf{z}_k\mathbf{z}_k^T$$

which can be put in the following more useful form by application of a well known matrix inversion lemma [21],

$$P_k = P_{k-1} - P_{k-1}\mathbf{z}_k[\mathbf{z}_k^T P_{k-1}\mathbf{z}_k + 1]^{-1}\mathbf{z}_k^T P_{k-1}. \tag{Ib}$$

Using this approach, the estimate at the $k$th instant is obtained by the repeated application of (I) from assumed initial conditions $\hat{\mathbf{a}}_0$ and $P_0$.

Fortunately, the choice of $\hat{\mathbf{a}}_0$ and $P_0$ does not present any real problem; the unimodal nature of the criterion function-parameter hypersurface means that an arbitrary finite $\hat{\mathbf{a}}_0$ coupled with a diagonal $P_0$ having large positive elements will yield performance commensurate with the stagewise solution of the same problem. If a priori information on $\mathbf{a}$ is available then it can be used and should be coupled with an associated reduction of the $P_0$ elements. In this connection, it should be noted that algorithm (I) only performs a least squares fit to the measured data. Consequently, the $P$ matrix is simply a time variable weighting matrix without statistical significance; it cannot be interpreted as an error covariance matrix as in the analogous but limited regression approach to parameter estimation [20].

NOISE CONSIDERATIONS: THE INSTRUMENTAL VARIABLE ALGORITHM

If there is noise present on the observed signals, the generalized equation error estimate discussed in the previous section is asymptotically biased to a degree which is dependent upon the noise/signal ratio. This unfortunate characteristic arises because the vector, $\mathbf{z}$, is composed of elements subjected to noise contamination. Therefore, Eq. (13) is an example of a "structural" rather than a "regression" model [4].

The reason for the estimation bias becomes apparent if stationary statistical properties are assumed and the expected value of the matrix $\mathbf{z}\mathbf{z}^T$ is examined. The following is obtained from Eq. (14):

$$E[\mathbf{z}\mathbf{z}^T] = E[(\mathbf{x}_{AD} + \boldsymbol{\xi}_D)(\mathbf{x}_{AD} + \boldsymbol{\xi}_D)^T].$$

Suppose that any D.C. bias levels are removed from the signals by the inclusion of suitable D.C. blocking elements in each of the state variable filter channels as described subsequently under Experimental Results. Then, since $\mathbf{x}_{AD}$ and $\boldsymbol{\xi}_D$ are uncorrelated,

$$E[\mathbf{z}\mathbf{z}^T] = E[\mathbf{x}_{AD}\mathbf{x}_{AD}^T] + E[\boldsymbol{\xi}_D\boldsymbol{\xi}_D^T] \tag{18}$$

where the noise-induced term $E[\boldsymbol{\xi}_D\boldsymbol{\xi}_D^T]$ is identically zero only when there is no noise on the observed data. It can be shown [13, 15] that the presence of this noise-induced term introduces the asymptotic bias on the parameter estimates.
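The recursive least squares algorithm (I) and the noise-induced bias can both be seen in a short synthetic experiment. This is only a sketch under stated assumptions: the data are independent unit-variance random vectors rather than filtered process signals, the noise level of 0.5 and the name `recursive_ls` are hypothetical, and $P_0$ is taken as a large multiple of the identity as the text suggests.

```python
import numpy as np

def recursive_ls(Z, w, p0=1e6):
    """Algorithm (I): update a_k and P_k one sample at a time."""
    n = Z.shape[1]
    a = np.zeros(n)        # arbitrary finite a_0
    P = p0 * np.eye(n)     # diagonal P_0 with large positive elements
    for z, wk in zip(Z, w):
        Pz = P @ z
        gain = Pz / (z @ Pz + 1.0)    # P_{k-1} z_k [z_k^T P_{k-1} z_k + 1]^{-1}
        a = a - gain * (z @ a - wk)   # Eq. (Ia)
        P = P - np.outer(gain, Pz)    # Eq. (Ib); uses the symmetry of P
    return a

rng = np.random.default_rng(2)
a_true = np.array([1.0, -0.5])               # hypothetical parameters
X = rng.standard_normal((5000, 2))           # noise-free variables
w = X @ a_true
Z = X + 0.5 * rng.standard_normal(X.shape)   # the same variables observed in noise
a_clean = recursive_ls(X, w)   # close to a_true
a_noisy = recursive_ls(Z, w)   # shrunk towards zero by the noise term in Eq. (18)
print(a_clean, a_noisy)
```

In this particular setup the noise adds 0.25 to each diagonal element of $E[\mathbf{z}\mathbf{z}^T]$, so the noisy estimate settles near $a/1.25$ however many samples are processed, which is the asymptotic bias described above.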

One approach to the above problem that does

not require a priori knowledge of the noise statistics

has its foundation in statistical estimation theory

where it has been termed the "instrumental variable

method" [5]. The asymptotic bias is removed by

modifying the solution given by Eq. (17) in the

following manner

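$$\hat{\mathbf{a}}_k = P_k B_k \tag{19}$$

where now

$$P_k^{-1} = C_k = \sum_{i=1}^{k} \hat{\mathbf{x}}_i\mathbf{z}_i^T$$

and

$$B_k = \sum_{i=1}^{k} \hat{\mathbf{x}}_i w_i. \tag{20}$$

Here, $\hat{\mathbf{x}}$ is an instrumental variable (IV) vector composed of elements chosen to be highly correlated with the unobservable noise-free process variables, $\mathbf{x}_{AD}$, but totally uncorrelated with the various additive noise components that corrupt these signals. As a result, the matrix $[\hat{\mathbf{x}}\mathbf{z}^T]$, which now replaces the matrix $[\mathbf{z}\mathbf{z}^T]$ of the uncompensated algorithm, has the expected value

$$E[\hat{\mathbf{x}}\mathbf{z}^T] = E[\hat{\mathbf{x}}\mathbf{x}_{AD}^T] \neq 0.$$

Furthermore, it is clear that

$$E[\hat{\mathbf{x}}\mathbf{x}_{AD}^T] \to E[\mathbf{x}_{AD}\mathbf{x}_{AD}^T] \quad \text{as} \quad \hat{\mathbf{x}} \to \mathbf{x}_{AD}. \tag{21}$$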

In the long term, therefore, the inclusion of the IV vector, $\hat{\mathbf{x}}$, eliminates the troublesome noise term, $E[\boldsymbol{\xi}_D\boldsymbol{\xi}_D^T]$, while preserving the basic structure and existence of the solution. In this way, the asymptotic bias is removed from the estimates, ensuring only small bias for finite sample lengths.

Unfortunately, the elimination of bias in the above manner is usually accompanied by a certain loss of efficiency in the statistical sense [5]. However, as might be expected from Eq. (21), the greater the correlation between $\hat{\mathbf{x}}$ and the noise-free signals, the smaller the estimation variance. In fact, a simple theoretical analysis shows that the asymptotic variance should approach zero if $\hat{\mathbf{x}}$ can be made equal to $\mathbf{x}_{AD}$ [15].
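The effect of the instrumental variables in Eqs. (19) and (20) can be checked on synthetic data. The sketch below is an illustration under assumptions that are not in the paper: the instruments are generated as the noise-free variables plus an independent disturbance (a stand-in for an imperfect auxiliary model), and the names `iv_estimate`, `Xhat`, and all numerical values are hypothetical.

```python
import numpy as np

def iv_estimate(Xhat, Z, w):
    """Eqs. (19)-(20): a = (sum xhat_i z_i^T)^{-1} sum xhat_i w_i."""
    return np.linalg.solve(Xhat.T @ Z, Xhat.T @ w)

rng = np.random.default_rng(3)
a_true = np.array([1.0, -0.5])                  # hypothetical parameters
X = rng.standard_normal((20000, 2))             # noise-free variables
Z = X + 0.5 * rng.standard_normal(X.shape)      # noisy observations z
w = X @ a_true
# Instruments: correlated with X, independent of the noise on Z.
Xhat = X + 0.3 * rng.standard_normal(X.shape)

a_ls = np.linalg.solve(Z.T @ Z, Z.T @ w)        # uncompensated estimate, Eq. (17)
a_iv = iv_estimate(Xhat, Z, w)                  # IV estimate
print(a_ls, a_iv)
```

The uncompensated estimate stays biased while the IV estimate lies close to the true values, at the cost of a somewhat larger scatter than a noise-free least squares fit would give.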

The major problem with the IV approach is the generation of the instrumental variables themselves. The method suggested in Ref. [15] is a development of the analog technique used by V. S. LEVADI [8]. The procedure for the more general case, where the process is contained within a noisy feedback loop, is shown in Fig. 2. The basic philosophy of this approach is that by prefiltering the deterministic input command, $u^*$, by an "auxiliary model" of the process, it is possible to generate an IV vector, $\hat{\mathbf{x}}$, which is highly correlated with the noise-free process vector, $\mathbf{x}_{AD}$. In addition, the elements of $\hat{\mathbf{x}}$ will be uncorrelated with any other noise in the system provided the input command is noise-free. In practice, low levels (< 5%) of noise on $u^*$ can be tolerated without introducing noticeable bias.

FIG. 2. Instrumental variable method for identifying a process within a closed loop: the auxiliary model approach, where F and K are known control system elements.

Two approaches to the problem of selecting the auxiliary model parameters are suggested in Ref. [15]. The first approach is an off-line iterative routine. The model parameters are initially selected on the basis of either a priori information, or previous uncompensated (i.e. biased) estimates of the process parameters which are then updated by a series of IV runs. The second approach, initially outlined by the author in Ref. [10], is based on a recursive solution to the problem and can be used in real-time. The method of deriving this recursive algorithm is similar to that used in the uncompensated case. The constituent equations are as follows

$$\hat{\mathbf{a}}_k = \hat{\mathbf{a}}_{k-1} - P_{k-1}\hat{\mathbf{x}}_k[\mathbf{z}_k^T P_{k-1}\hat{\mathbf{x}}_k + 1]^{-1}\{\mathbf{z}_k^T\hat{\mathbf{a}}_{k-1} - w_k\} \tag{IIa}$$

$$P_k = P_{k-1} - P_{k-1}\hat{\mathbf{x}}_k[\mathbf{z}_k^T P_{k-1}\hat{\mathbf{x}}_k + 1]^{-1}\mathbf{z}_k^T P_{k-1}. \tag{IIb}$$

With the help of this algorithm, it is possible to develop a fully adaptive approach: the estimates are first low-pass filtered to avoid significant correlation between $\hat{\mathbf{x}}$ and $\boldsymbol{\xi}_D$; then they are used to update the auxiliary model on a continuous basis. In both of the above cases, convergence can be argued [15]. However, because of equipment problems, practical verification has been possible only for the iterative method.
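A recursive IV update of the kind used in algorithm II can be sketched as follows. The update is written as the rank-one (Sherman-Morrison) form of $P_k^{-1} = P_{k-1}^{-1} + \hat{\mathbf{x}}_k\mathbf{z}_k^T$, which is an assumption consistent with Eqs. (19) and (20) rather than a transcription of the paper. The instruments are fixed synthetic signals, not the output of an adaptive auxiliary model, and `recursive_iv` and all numerical values are hypothetical.

```python
import numpy as np

def recursive_iv(Xhat, Z, w, p0=100.0):
    """Recursive IV sketch: rank-one update with instrument xhat_k and observation z_k."""
    n = Z.shape[1]
    a = np.zeros(n)
    P = p0 * np.eye(n)
    for xh, z, wk in zip(Xhat, Z, w):
        Px = P @ xh                 # P_{k-1} xhat_k
        zP = z @ P                  # z_k^T P_{k-1}
        denom = z @ Px + 1.0        # z_k^T P_{k-1} xhat_k + 1
        a = a - Px * (z @ a - wk) / denom   # parameter update
        P = P - np.outer(Px, zP) / denom    # P update (P is not symmetric in general)
    return a

rng = np.random.default_rng(4)
a_true = np.array([1.0, -0.5])                  # hypothetical parameters
X = rng.standard_normal((20000, 2))             # noise-free variables
Z = X + 0.5 * rng.standard_normal(X.shape)      # noisy observations
w = X @ a_true
Xhat = X + 0.3 * rng.standard_normal(X.shape)   # synthetic instruments
a_riv = recursive_iv(Xhat, Z, w)
print(a_riv)
```

Unlike the least squares case, the scalar denominator is not guaranteed to be positive, so a practical implementation would presumably guard against values near zero; this sketch omits such safeguards.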

IDENTIFIABILITY

It has been noted that the equation error approach is closely related to the procedures of linear

regression analysis. One important and often overlooked feature of regression analysis is that the

regressors should be linearly independent if accurate

low-variance estimation is to be possible [4]. In the

equation error procedures described here, the place

of the regressors is taken by the elements of the

vector, z. Since these elements are dependent upon

both the process input signals and the nature of

the assumed model, it is clear that both factors

have an important bearing on the identifiability

of the process. Here a process is said to be identifiable from the observed data if the estimates obtained from algorithm II are statistically consistent; i.e. if they

are asymptotically unbiased and possess a variance

which decreases with increasing observation interval.

The choice of input signals

There are certain cases in which the process is not identifiable because the input signal does not activate the process sufficiently to allow for complete identification. Since this subject has been discussed by several authors [8, 14], it will not be considered in detail here. However, it will be noted that if the process is controllable* [22], it should also be identifiable if the input is sufficiently exciting to allow sensible parameter estimation. This requires:

(a) the input signal to be persistently exciting in the sense that it continues to activate the process throughout the observation interval. If this is not the case and the process becomes inactive except for noise effects, then the estimates will cease to be consistent.

(b) the number of distinct frequency components present in any purely periodic input signal to be equal to or exceed $d$ where

$$d = \begin{cases} (M+N+1)/2, & M+N+1 \text{ even} \\ (M+N+2)/2, & M+N+1 \text{ odd.} \end{cases}$$

The first of the above conditions is obviously necessary; any method of statistical inference can produce consistent estimates only if sufficient data is available. The second condition is necessary to ensure that the elements of $\mathbf{x}_A$ are not completely linearly dependent.

Conditions (a) and (b) are only a guide to selecting input signals and should be considered merely as minimum requirements in this respect. Practical experiments suggest that a random-noise-type signal usually gives excellent results if input excitation can be specified [9, 14, 15].

* Experimental results [23] confirm that if the process is not controllable because of exact pole-zero cancellation then identification is particularly poor. As such a situation rarely occurs in the real world, it should not normally cause problems.

Nature of the mathematical model

Unfortunately, the choice of a sufficiently exciting input signal does not guarantee low-variance estimation. There usually will be some measure of partial linear dependence [15, 23] between the elements of the augmented state vector arising because of the model structure. In the present differential equation case, problems of this sort arise when the assumed model has input derivative terms. A simple but interesting example demonstrating the kind of results obtained in such situations is discussed later.

One method of checking the results of a pure regression experiment to test for linear dependence is "multiple correlation analysis" [24]. The same approach can be used to good effect in the low-noise, uncompensated equation error case; however, it must be used with caution since it is well known that correlation coefficients can be biased by errors in observations [24]. The special properties of the adaptive IV method are rather useful in this respect since they enable the development of a pseudo multiple correlation analysis [15, 23] that appears to give good qualitative results and may have quantitative significance.

Input noise effects

As pointed out earlier, a statistical analysis of the IV estimation scheme shows that the estimate will be asymptotically biased by input signal measurement noise. In most servomechanisms this will not cause too much difficulty since there will often be a deterministic input command signal, $u^*$, available somewhere in the system as indicated in Fig. 2. If this is not the case, then other methods of removing the estimation bias must be found. When the statistics of the noise and signals are known, for instance, it is possible to use an approach similar to that of M. J. LEVIN [25]. However, such a technique is highly susceptible to incorrect assumptions about the noise and signal statistics, and must be used with care.

DETECTING PARAMETER VARIATION

Since the accumulated square-type criterion function weights all data equally over the observation interval, it contains an implicit assumption that the parameters are constant over this period.

If slow parameter variation is possible, precautions

must be taken to ensure that outdated estimates

are not obtained. A particularly simple approach

to this problem is obtained by weighting the data

exponentially into the past to gradually remove

information as it becomes obsolete. An analog

method of exponential weighting by low-pass

averaging filters is described in Ref. [12]. A discrete

data equivalent of this procedure can be developed

quite easily [15], and has been used to detect the

nonstationary characteristics of both simulated

[15] and practical [26] processes.

A more attractive general approach to the problem of parameter variation can be developed by

referring to the equivalent pure regression situation

[15, 19, 20]. Here, a nonstationary version of the

recursive least-squares equations is obtained by

considering a stochastic interpretation of the

problem, then introducing the following statistical

model of the parameter variations.

$$\mathbf{a}_k = \Phi(k, k-1)\mathbf{a}_{k-1} + \Gamma(k, k-1)\mathbf{q}_{k-1}. \tag{22}$$

Here, $\Phi(k, k-1)$ is an assumed known $(M+N+1) \times (M+N+1)$ transition matrix, $\Gamma(k, k-1)$ is an assumed known $(M+N+1) \times (M+N+1)$ input matrix included for generality, and $\mathbf{q}_{k-1}$ is an $(M+N+1)$ random disturbance vector with zero mean value and covariance matrix $E[\mathbf{q}_r\mathbf{q}_s^T] = Q\delta_{rs}$, where $\delta$ is the Kronecker delta function. In Eq. (22), the transition matrix $\Phi$ allows for any deterministic variations in the parameters while the vector $\mathbf{q}$ provides the statistical degree of freedom required to model any random parameter variations between samples. It is interesting to note that the prediction-correction algorithm obtained in this manner is a particular example of the optimal discrete filter-estimation equations suggested by R. E. KALMAN [27].

By using a purely heuristic argument based on

the similarity between the equation error method

and linear regression analysis, it is possible to

construct a dynamic equation error algorithm.

The algorithm is of very limited practical utility as it stands because it requires knowledge of the matrices $\Phi$ and $\Gamma$. Fortunately, it is a straightforward matter to simplify the algorithm by letting each of these matrices equal the identity matrix, $I$, for all $k$; implying that any parameter variation is due to small random perturbations between samples (a random walk process). In this case, the IV algorithm can be written

$$\hat{\mathbf{a}}_k = \hat{\mathbf{a}}_{k-1} - P_{k/k-1}\hat{\mathbf{x}}_k[\mathbf{z}_k^T P_{k/k-1}\hat{\mathbf{x}}_k + 1]^{-1}\{\mathbf{z}_k^T\hat{\mathbf{a}}_{k-1} - w_k\} \tag{IIIa}$$

$$P_{k/k-1} = P_{k-1} + D \tag{IIIb}$$

$$P_k = P_{k/k-1} - P_{k/k-1}\hat{\mathbf{x}}_k[\mathbf{z}_k^T P_{k/k-1}\hat{\mathbf{x}}_k + 1]^{-1}\mathbf{z}_k^T P_{k/k-1}. \tag{IIIc}$$

The only difference between this procedure and the stationary parameter procedure given by algorithm II is the inclusion of Eq. (IIIb). According to the heuristic argument, $D$ in this equation is analogous to the covariance matrix of the parameter variations, $Q$, in the pure regression case.

As a result, the choice of D proves to be fairly

straightforward, since it can be selected initially

by reference to the expected relative rates of

parameter variation between samples, and then

adjusted by experimentation.

The physical effect of introducing the D matrix

is to limit the lower bound on the P matrix elements,

preventing the elements from becoming too small

and allowing for continuous correction of the

parameter estimates as time progresses. Since D is a

matrix, it is possible to limit individual elements to

different degrees. In this way, different expected

rates of parameter variation can be specified on the

elements of a as described later under Experimental Results.

The major disadvantage of algorithm III is its

inability to differentiate between actual parameter

variations and indicated parameter variations,

caused by noise on the data. As a result, the

approach only proves satisfactory for the estimation of parameter variations larger than the

residual estimation variance due to noise.
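The role of the $D$ matrix in algorithm III can be illustrated with a small noise-free tracking experiment. This is a sketch under assumptions made only for the example: the instruments are set equal to the observed vectors (so the recursion reduces to the uncompensated case), one parameter ramps linearly while the other is constant, and the name `dynamic_recursive_iv` and the values in `D` are hypothetical.

```python
import numpy as np

def dynamic_recursive_iv(Xhat, Z, w, D, p0=100.0):
    """Algorithm (III) with a random-walk parameter model; returns all estimates."""
    n = Z.shape[1]
    a = np.zeros(n)
    P = p0 * np.eye(n)
    history = np.empty((len(w), n))
    for k, (xh, z, wk) in enumerate(zip(Xhat, Z, w)):
        Pp = P + D                                  # Eq. (IIIb)
        Px = Pp @ xh
        denom = z @ Px + 1.0
        a = a - Px * (z @ a - wk) / denom           # Eq. (IIIa)
        P = Pp - np.outer(Px, z @ Pp) / denom       # Eq. (IIIc)
        history[k] = a
    return history

rng = np.random.default_rng(5)
steps = 2000
X = rng.standard_normal((steps, 2))
# First parameter ramps from 1 to 2; second stays at -0.5 (hypothetical values).
a_path = np.column_stack([np.linspace(1.0, 2.0, steps), np.full(steps, -0.5)])
w = np.sum(X * a_path, axis=1)

D = np.diag([1e-3, 0.0])   # allow variation only in the first parameter
tracked = dynamic_recursive_iv(X, X, w, D)
static = dynamic_recursive_iv(X, X, w, np.zeros((2, 2)))
print(tracked[-1], static[-1])
```

With $D = 0$ the estimate of the ramping parameter settles near its average over the record, whereas a non-zero first diagonal element of $D$ keeps the corresponding element of $P$ from collapsing and lets the estimate follow the ramp. Setting the second diagonal element to zero reflects the point that different rates of variation can be specified for individual parameters.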

In general, the dynamic equation error algorithm,

although not optimal in any sense, is to be

preferred to the alternative data weighting approach. The inclusion of the parameter variation

model means that the algorithm has much greater

inherent flexibility. For instance, the choice of a

random walk model for the parameter variations is

arbitrary; in certain situations it may be more

realistic to specify other statistical models, and this

is mentioned in ref. [21], p. 320, et seq. The virtue

of the model approach is that it allows this type of

a priori information to be used directly in the

estimation algorithm if it is available.

By including a model of the parameter variations,

it is possible to conceive of more sophisticated

procedures in which the parameter variation model

is updated by either a secondary "learning" scheme,

or by additional measured data obtained from the

process. As a practical example of the latter

approach, consider the problem of identifying the

longitudinal dynamic characteristics of an aircraft

or missile [28]. Here the variations in the unknown

parameters result from in-flight changes in environmental factors such as the dynamic pressure, $q_0$,

and mass, m. Bearing this in mind, it is reasonable

to assume [29] that the time variable parameter

vector $a_k$ can be decomposed into the product of a

known, non-singular, time variable transformation

(or modulation) matrix $T_k$ and an unknown but

fixed or only slowly variable basis parameter

vector $a^*$. Thus,

$$a_k = T_k a^* \qquad (23)$$

Here $T_k$ will be a function of those known or

measurable variables, such as $q_0$ or $m$, that are

associated with the changing environment.

$a^*$ in Eq. (23) is constrained to be only slowly

variable. Consequently, it should be possible to

model its behaviour fairly adequately by a random

walk model, i.e.

$$a_k^* = a_{k-1}^* + q_{k-1} \qquad (24)$$

Substituting (24) in (23) and noting that $a_{k-1}^* = T_{k-1}^{-1} a_{k-1}$ then yields the following vector-matrix equation for the variations in $a_k$:

$$a_k = [T_k T_{k-1}^{-1}]\,a_{k-1} + T_k q_{k-1} \qquad (25)$$

Since this equation is of the same form as (22), it

can be used to develop a special dynamic equation-error algorithm [28]. If $T_k$ provides a suitable decomposition, then much of the rapid variation in

$a_k$ will be accounted for deterministically by the

transition matrix, $\Phi = T_k T_{k-1}^{-1}$; in effect the

algorithm has only to estimate and track the

unknown but slow residual variations of the basis

vector $a^*$.
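The role of the transition matrix can be checked numerically. The sketch below assumes, purely for simplicity, that the modulation matrix is diagonal (each parameter is scaled by its own known factor), so that the product of the current matrix with the inverse of the previous one is diagonal as well. The function names and all numbers are hypothetical.

```python
def transition_diag(t_k, t_prev):
    """Diagonal of T_k T_{k-1}^{-1} for diagonal modulation matrices.

    t_k, t_prev: lists of the (non-zero) diagonal entries of T_k and T_{k-1}.
    """
    return [tk / tp for tk, tp in zip(t_k, t_prev)]


def predict(a_prev, t_k, t_prev):
    """Deterministic part of Eq. (25): a_k = [T_k T_{k-1}^{-1}] a_{k-1}."""
    phi = transition_diag(t_k, t_prev)
    return [p * a for p, a in zip(phi, a_prev)]


# Hypothetical numbers: a fixed basis vector and two successive T matrices.
a_star = [2.0, 0.5]
t_prev = [1.0, 4.0]
t_k = [1.5, 2.0]

a_prev = [t * s for t, s in zip(t_prev, a_star)]   # a_{k-1} = T_{k-1} a*
a_pred = predict(a_prev, t_k, t_prev)              # propagated through (25)
a_direct = [t * s for t, s in zip(t_k, a_star)]    # a_k = T_k a*, Eq. (23)
```

With the random term set to zero, `a_pred` and `a_direct` coincide. This is the sense in which the known part of the variation is handled deterministically, leaving only the slow drift of the basis vector to be estimated.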

EXTENSIONS

For simplicity, the discussion up to this point

has been restricted to continuous single-input,

single-output processes. However, the estimation

procedure described here can be extended to handle

other, less specific, identification problems. For

instance, it can be generalised immediately for use

with difference equation models by replacing the

state variable filters by appropriate delay lines and

then redefining $z$, $\hat{x}$ and $w$ accordingly. Two similar

recursive schemes of this type have been described

recently by D. Q. MAYNE [30] and K. Y. WONG and

E. POLAK [31]. Both papers discuss only time invariant parameter estimation (see algorithm II).
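A minimal sketch of the difference equation case is given below. It assumes a first-order model y[k] = a*y[k-1] + b*u[k-1], with the measured vector built from delayed measured signals, the instrumental vector built from the delayed output of an auxiliary model, and w taken as the current measured output. These definitions, the function names and the default initial matrix are illustrative assumptions, not the formulation of refs. [30] or [31].

```python
def identify_first_order(u, y_meas, a_aux, b_aux, p0=1e6):
    """Recursive IV estimate of (a, b) in y[k] = a*y[k-1] + b*u[k-1].

    u, y_meas    : equal-length input and measured-output sequences
    a_aux, b_aux : parameters of the auxiliary model driving the instruments
    """
    est = [0.0, 0.0]
    P = [[p0, 0.0], [0.0, p0]]
    y_aux = 0.0                                   # auxiliary model output
    for k in range(1, len(u)):
        z = [y_meas[k - 1], u[k - 1]]             # delayed measured signals
        x_hat = [y_aux, u[k - 1]]                 # delayed auxiliary signals
        w = y_meas[k]
        Px = [P[i][0] * x_hat[0] + P[i][1] * x_hat[1] for i in range(2)]
        zP = [z[0] * P[0][j] + z[1] * P[1][j] for j in range(2)]
        denom = z[0] * Px[0] + z[1] * Px[1] + 1.0
        err = z[0] * est[0] + z[1] * est[1] - w
        est = [est[i] - Px[i] * err / denom for i in range(2)]
        P = [[P[i][j] - Px[i] * zP[j] / denom for j in range(2)]
             for i in range(2)]
        y_aux = a_aux * y_aux + b_aux * u[k - 1]  # advance auxiliary model
    return est


def simulate(a, b, u):
    """Noise-free response of the first-order model, starting from rest."""
    y = [0.0]
    for k in range(1, len(u)):
        y.append(a * y[-1] + b * u[k - 1])
    return y
```

For example, `identify_first_order(u, simulate(0.8, 0.5, u), 0.8, 0.5)` with a sufficiently varied input sequence `u` returns estimates near 0.8 and 0.5. In this noise-free case with a matched auxiliary model the instruments equal the measured signals; with noisy measurements the two vectors differ, and that difference is what the instrumental variable approach relies on.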

The extension to multiple command inputs is

trivial; the algorithm can be generalised in this way

simply by enlarging the augmented state vector

to include the additional input terms. The extension

to general multivariable problems is less straightforward. However, provided all of the principal

inputs and outputs can be observed directly, it is

quite often possible to find a model structure which

allows the overall identification problem to be

decomposed into a number of smaller separate

estimation problems. Examples of this kind of

decomposition are given in refs. [15, 32, 33] for

continuous systems and in [30] for discrete systems.

Finally, it should be noted that the general

nature of the state variable filters allows them to

handle processes where only higher order derivatives

of the basic signal can be observed. In a similar

way, if higher order derivatives are available in

addition to the basic output, they can be used to

reduce the mechanisational requirements of the

state variable filters.

EXPERIMENTAL RESULTS

A number of experiments designed to test the

practical utility of the techniques discussed in this

paper were made with the experimental equipment

shown in Fig. 3. The process and the auxiliary

model were simulated on an analog computer,

which also was used to synthesise the track-store

devices and state variable filters required by the

method of multiple filters. It will be noted from

Fig. 3, that all filtering channels contain identical

high-pass or D.C. blocking filters, $s/(1+s)$, which

effectively remove any D.C. bias levels from the

process signals. Such a procedure is quite in order

because the high-pass elements merely modify

the overall transfer characteristics of the state

variable filter set. This ability to remove bias levels

in such a simple manner is an added advantage of

the state variable filter concept.
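The bias-removing action of the blocking filter s/(1+s) can be checked with a crude digital simulation. The sketch below uses a forward-Euler approximation with a step size chosen arbitrarily here; the experiments described in this paper used analog filters, so this is only an illustration, and the function name is hypothetical.

```python
def high_pass(u, dt=0.01):
    """Forward-Euler simulation of s/(1+s) applied to the samples in u.

    Uses s/(1+s) = 1 - 1/(1+s): the state x of a unit-time-constant
    low-pass filter is subtracted from the input.
    """
    x = 0.0                  # low-pass state, starting at rest
    y = []
    for uk in u:
        y.append(uk - x)     # high-pass output
        x += dt * (uk - x)   # dx/dt = -x + u
    return y


# A constant bias of 5.0 held for 10 s (1000 steps of 0.01 s).
out = high_pass([5.0] * 1000)
```

The first output sample equals the full bias level, and by the end of the record the output has decayed to a very small value, while rapidly varying signal components would pass largely unchanged.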

When the parameter, a3, of the second-order

process shown in Fig. 3 is known, the process is

clearly identifiable. In this situation the estimation

results obtained with the above equipment were

excellent [34]. However, when all four parameters

were assumed unknown, the results were not so

satisfactory--with particularly high variance on the

estimates $\hat{a}_1$ and $\hat{a}_3$. The following table illustrates

this phenomenon for various additive noise levels.

TABLE 1

Estimated parameter values

Noise/signal ratio, $\delta$    $\hat{a}_0$    $\hat{a}_1$    $\hat{a}_2$    $\hat{a}_3$
0.269                      0.955    1.056    0.677    1.069
0.359                      1.055    2.038    1.600    2.233
0.538                      0.964    1.091    0.748    1.099
0.897                      1.104    1.800    1.212    1.515

Actual parameter values    1.000    1.400    1.000    1.500

These results were obtained after 170 samples using

algorithm II. The process was activated by a

pseudo-random step input and the measurement

noise was generated by low-pass filtering a white

noise disturbance shown in Fig. 4. Although they

look rather poor at first sight, these estimates tend

FIG. 3. Equipment used in the experimental investigations (block diagram: input signal generator, model, state variable filters, digital voltmeter, encoder, paper tape punch and off-line digital computer). The method of multiple filters provides the following type of relationship:

j!(n--j+l)[(yo)]L~-i...I"

[(Yo)]o,.=(-1)" [(Y0)]L --n[(yo)]ce- 1 + ...+(--1) ~n(n-1)''"

FIG. 4. Typical signals used in the estimation experiments. Process output for: (a) pseudo-random input and random noise disturbance ($\delta = 0.897$); (b) without noise disturbance. Horizontal axes: time, sec.

to be misleading since the step, initial condition,

and frequency responses for the estimated model,

as given in Figs. 5 and 6, show good agreement with

the actual process responses. Thus, if the object

of the identification experiment is merely to predict

process output response to input activation, the

results may be considered satisfactory.

The poor parameter identification shown in Table

FIG. 5. Comparison of actual and estimated time responses (initial condition response and step response; horizontal axes: time, sec) obtained when there is partial linear dependence between the elements composing the augmented state vector.

1 can be explained by the high degree of partial

linear dependence existing between the elements of

the vector xAo due to the presence of the input

derivative term. This fact becomes apparent if a

multiple correlation analysis, which was discussed

in the section on Identifiability, is performed on the

measurement data: with $a_3$ assumed known, the

multiple correlation coefficients are small compared

with the total correlation coefficient; with $a_3$

unknown, they assume much higher values, indicating strong linear relationships between certain

of the elements of Xan [23]. A physical explanation

of this peculiar phenomenon is that the sensitivity

of the process response to changes in certain parameters is small. Consequently, the criterion function does not possess a clearly defined minimum

and the estimates tend to "drift", thus producing

high variance.
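The kind of check described here can be sketched as follows. The code computes a squared multiple correlation coefficient for one signal regressed on two others, using one common textbook definition (explained variation divided by total variation); it is not necessarily the exact analysis of ref. [23], and the function name and data are hypothetical.

```python
def multiple_r2(t, p, q):
    """Squared multiple correlation of t on the two predictors p and q."""
    n = len(t)
    mt, mp, mq = sum(t) / n, sum(p) / n, sum(q) / n
    tc = [v - mt for v in t]             # centred signals
    pc = [v - mp for v in p]
    qc = [v - mq for v in q]
    spp = sum(v * v for v in pc)
    sqq = sum(v * v for v in qc)
    spq = sum(a * b for a, b in zip(pc, qc))
    spt = sum(a * b for a, b in zip(pc, tc))
    sqt = sum(a * b for a, b in zip(qc, tc))
    stt = sum(v * v for v in tc)
    det = spp * sqq - spq * spq          # zero if p and q are collinear
    b1 = (sqq * spt - spq * sqt) / det   # least-squares coefficients
    b2 = (spp * sqt - spq * spt) / det
    return (b1 * spt + b2 * sqt) / stt   # explained / total variation


p = [1.0, 2.0, 3.0, 4.0]
q = [1.0, -1.0, -1.0, 1.0]
dependent = [2.0 * a + 3.0 * b + 1.0 for a, b in zip(p, q)]  # exact combination
weak = [1.0, -1.0, 1.0, -1.0]                                # largely unrelated
```

A value near one, as obtained for `dependent`, signals that the element is almost a linear combination of the others, which is the situation in which the estimates tend to drift. A small value, as for `weak`, indicates little linear dependence.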

Figure 7 shows the results obtained when algorithm III was used to identify a process in which the

parameters, $a_1$, $a_2$, and $a_3$ were time-invariant, while

$a_0$ was varied sinusoidally. It should be stressed

FIG. 6. Comparison of actual and estimated frequency response obtained when there is partial linear dependence between the elements composing the augmented state vector. Panels (a) and (b); horizontal axes: frequency, rad/sec; estimated curves for $\delta$ = 0.269, 0.359, 0.538 and 0.897.

that Fig. 7 illustrates optimum performance in

the sense that the auxiliary model parameter was

varied in accordance with the actual process parameter variation. This approach proved necessary

because equipment delays prevented full hybrid

operation. Figure 8 is a similar example in which

both $a_0$ and $a_1$ were varied simultaneously. The

results shown in Figs. 7 and 8 were obtained with

$a_3$ assumed known; in other words, with the process

clearly identifiable.

Fortunately, the instrumental variable estimation

algorithm does not appear to be particularly

sensitive to the choice of the auxiliary model parameters, even in the time variable parameter case.

FIG. 7. Estimation results for a single variable parameter, $a_0$ (actual and estimated variation plotted against samples, 0 to 200): $a^T = [a_0\ 1.4\ 1.0\ -1.5]$; $\hat{a}_{200}^T = [\hat{a}_0\ 1.37\ 0.93\ -1.5]$; $\delta = 0.359$; $\hat{a}_0^T = [0\ 0\ 0\ -1.5]$. The entries of $P_0$ and $D$ are not legible in this copy.

An illustration of this is shown in Fig. 9. In this

experiment, reasonable parameter tracking performance was achieved despite the fact that the

auxiliary model had a fixed parameter vector

$a^T = [1.0, 0.42, 0.11, 0]$, while the process had a

parameter vector $a^T = [a_0, a_1, 0.11, -0.2]$, in which

$a_0$ and $a_1$ were varied as shown.

CONCLUSIONS

The paper describes a relatively simple yet widely

applicable method for identifying a noisy process

described by a linear differential equation model.

This generalised equation-error method is mechanised in a hybrid (analog-digital) form in which the

signals obtained from the process during its normal

operation are passed through special analog state

variable filters and then sampled for use in a digital

recursive estimation algorithm. The state variable

filters not only overcome the time derivative

measurement problem associated with the basic

equation-error method but also remove D.C. bias

levels or high frequency noise outside the process

bandpass.

The most attractive feature of the recursive

least squares algorithm is its use of an instrumental

variable procedure which enables it to obtain

asymptotically unbiased estimates of the process

parameters even when the measured data from the

output of the process are contaminated with high

levels of noise with unknown statistical properties.

Although theoretically limited to the estimation

of time-invariant process parameters, the basic

estimation algorithm can be modified in a heuristic

manner to detect parameter variation. As a result

it is in an ideal form for certain on-line applications

such as systems performance assessment and self-adaptive process control. In such applications, its

rugged nature and ability to function satisfactorily

with a minimum of a priori statistical information

are added advantages.

FIG. 8. Estimation results for two variable parameters, (a) $a_0$, and (b) $a_1$ (actual and estimated variation plotted against samples): $a^T = [a_0\ a_1\ 1.0\ -1.5]$; $\hat{a}_{190}^T = [\hat{a}_0\ \hat{a}_1\ 1.05\ -1.5]$; $\delta = 0.359$; $\hat{a}_0^T = [0\ 0\ 0\ -1.5]$. The entries of $P_0$ and $D$ are not legible in this copy.

FIG. 9. Estimation results for two variable parameters, $a_0$ and $a_1$; time invariant, approximate auxiliary model (actual and estimated variation plotted against samples): $\hat{a}_0^T = [0\ 0\ 0\ -0.2]$. The entries of $P_0$ and $D$ are not legible in this copy.

Acknowledgements--This paper provides a brief résumé of

research carried out between 1965 and 1967 in the Department of Engineering, University of Cambridge, England.

The research was supported by the Whitworth Foundation.

The author is indebted to Professor J. F. Coales, Head of

the Control Group at Cambridge and to his supervisor at

Cambridge, Dr. J. D. Roberts for their valuable advice and

guidance throughout the undertaking of this research.

REFERENCES

[1] P. C. YOUNG: Process parameter estimation. Control and Automation Progress 12, 931-937 (1968).

[2] P. M. LION: Rapid identification of linear and nonlinear systems. Proc. JACC 605-615 (1966).

[3] R. E. KOPP and R. J. ORFORD: Linear regression applied to system identification for adaptive control systems. AIAA Journal 1, 2300-2306 (1963).

[4] M. G. KENDALL and A. STUART: The Advanced Theory of Statistics, Vol. 2. Griffin, London (1961).

[5] J. DURBIN: Errors in variables. Rev. Int. Statist. Inst. 22, 23-32 (1954).

[6] P. JOSEPH, J. LEWIS and J. TOU: Plant identification in the presence of disturbances and application to digital adaptive systems. AIEE Trans. March (1961).

[7] R. E. ANDEEN and P. P. SHIPLEY: Digital adaptive flight control system for aerospace vehicles. AIAA Journal 1, 1105-1110 (1963).

[8] V. S. LEVADI: Parameter Estimation of Linear Systems in the Presence of Noise. Presented at the 1964 International Conference on Microwaves, Circuit Theory, and Information Theory, September 7-11, Tokyo, Japan.

[9] L. G. HOFMANN, P. M. LION and J. J. BEST: Theoretical and Experimental Research on Parameter Tracking Systems. NASA Contractor Report No. CR-452, April (1966).

[10] P. C. YOUNG: On a Weighted Steepest Descent Method of Process Parameter Estimation. Engineering Laboratory, Cambridge University, England, Internal Report, December 1965 (available from author).

[11] P. C. YOUNG: In flight dynamic checkout. IEEE Trans. Aerospace AS-2, 1106-1111 (1964).

[12] The determination of the parameters of a dynamic process. Radio Electron. Engnr J. IERE 29, 345-362 (1965).

[13] Process parameter estimation and self adaptive control. Proc. 1965 IFAC (Teddington) Conf. In Theory of Self Adaptive Control System (Ed. P. H. HAMMOND), pp. 118-139. Plenum Press, New York (1966).

[14] L. L. HOBEROCK and R. H. KOHR: An experimental determination of differential equations to describe simple non-linear systems. Proc. JACC 616-623 (1966).

[15] P. C. YOUNG: Doctoral Thesis to be submitted to Department of Engineering, University of Cambridge, England, 1969 (unpublished).

[16] D. G. LUENBERGER: Observing the state of a linear system. IEEE Trans. Mil. Elect. MIL-8, 74-80 (1964).

[17] P. C. YOUNG: Adaptive Pitch Autostabilisation of an Air-Surface Missile. Presented at Southwestern IEEE Conf., San Antonio, Texas, April (1969), (available from author).

[18] P. C. YOUNG: Parameter estimation and the method of conjugate gradients. Proc. IEEE 54, 1965 (1966).

[19] R. C. K. LEE: Optimal Estimation Identification and Control. MIT Press, Research Monograph 28 (1964).

[20] P. C. YOUNG: Recursive methods of parameter estimation and their application to process identification. Parts I & II. Control Engineering 16, Nos. 10 & 11 (1969).

[21] A. E. BRYSON and Y. C. HO: Optimisation Estimation and Control, p. 320 et seq. Blaisdell Publishing Co. (1969).

[22] P. M. DERUSSO, R. J. ROY and C. M. CLOSE: State Variables for Engineers. John Wiley, New York (1965).

[23] P. C. YOUNG: Identification Problems Associated with the Equation Error Approach to Process Parameter Estimation. Proceedings of the Second Asilomar Conference on Circuits and Systems, pp. 416-422, October (1968).

[24] K. A. BROWNLEE: Statistical Theory and Methodology in Science and Engineering. John Wiley, New York (1965).

[25] M. J. LEVIN: Estimation of system pulse transfer function in the presence of noise. Proc. JACC, 452-458 (1963).

[26] J. M. BRAY et al.: On line model making for a chemical plant. Trans. Soc. Instrum. Technol. 17, No. 8 (1965).

[27] R. E. KALMAN: A new approach to linear filtering and prediction theory. ASME Trans. J. Basic Engng 82-D, 35-45 (1960).

[28] P. C. YOUNG: On The Use of a priori Parameter Variation Information to Enhance the Performance of a Recursive Least Squares Estimator. Tech. Note 404-90, Code 4041, Naval Weapons Center, China Lake, California, May (1969).

[29] J. M. MENDEL: A priori and a posteriori Identification of Time Varying Parameters. Presented at Second Hawaii Int. Conf. of System Sciences, January (1969).

[30] D. Q. MAYNE: A Method for Estimating Discrete Time Transfer Functions. Proceedings of Second UKAC Control Convention, Advances in Computer Control, University of Bristol, England, pp. C2.1-C2.9, April (1967).

[31] K. Y. WONG and E. POLAK: Identification of linear discrete time systems using an instrumental variable method. IEEE Trans. Aut. Control AC-12, 707-719 (1967).

[32] A. I. RUBIN, S. DRIBAN and W. W. MEISSNER: Regression analysis and parameter identification. Simulation 9, 39-47 (1967).

[33] J. HOWARD: The determination of lateral stability and control derivatives from flight data. Can. Aeronaut. Space J. 13, 127-135 (1967).

[34] P. C. YOUNG: Regression analysis and process parameter estimation--a cautionary message. Simulation 10, 125-128 (1968).

Résumé--The problem of identifying a dynamic process from its normal operating data has received much attention in recent years. The various techniques developed range from largely deterministic methods to complex statistical methods based on the results of optimal estimation theory. The instrumental variable (IV) technique described in this article aims at a compromise between these two extremes; it is based on classical statistical estimation theory but does not require a priori information on the signal and noise statistics.

The article describes an IV approach to the problem of identifying a linear process described by a differential equation model and outlines the development of a simple digital recursive estimation algorithm. It also discusses briefly the influence of the choice of input signal and of the form of the mathematical model on the identifiability of a process. Finally, it includes a number of representative experimental results, both to demonstrate the practical feasibility of this approach to process identification and to show that it can be used to estimate process parameters that are either time-invariant or slowly varying.

Zusammenfassung--The problem of real-time identification by evaluating operating data has received considerable attention in recent years. The various techniques developed range from deterministic procedures to statistical methods based on the results of optimal estimation theory.

The use of instrumental variables is intended as a compromise between these two extremes. It is founded on classical statistical estimation theory, without requiring a priori information on the signal and the noise.

The paper describes the development of a simple digital recursive estimation algorithm, based on a differential equation model, for solving the problem. It discusses how the choice of input signal and the form of the mathematical model can affect the identifiability of a process. Finally, representative experimental results are presented to illustrate the practical feasibility of this particular identification method and to show its application to the estimation of time-invariant or slowly varying process parameters.
