Professional Documents

Culture Documents

Determining The Oral Construct of The Test of English Communication Skills

Uploaded by

Dao Mai PhuongOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Determining The Oral Construct of The Test of English Communication Skills

Uploaded by

Dao Mai PhuongCopyright:

Available Formats

International Journal of Economics and Financial

Issues

ISSN: 2146-4138

available at http: www.econjournals.com

International Journal of Economics and Financial Issues, 2015, 5(Special Issue) 139-143.

2nd AFAP INTERNATIONAL CONFERENCE ON ENTREPRENEURSHIP AND BUSINESS MANAGEMENT (AICEBM 2015),

10-11 January 2015, Universiti Teknologi Malaysia, Kuala Lumpur, Malaysia.

Determining the Oral Construct of the Test of English

Communication Skills

Anie Attan1*, Abdul Halim Abdul Raof2, Masdinah Alauyah Mohammed Yusof3,

Noor Abidah Mohammed Omar4, Masputeriah Hamzah5

Language Academy, Universiti Teknologi Malaysia, 81310 UTM Johor Bahru, Johor, Malaysia, 2Language Academy, Universiti

Teknologi Malaysia, 81310 UTM Johor Bahru, Johor, Malaysia, 3Language Academy, Universiti Teknologi Malaysia, 81310 UTM

Johor Bahru, Johor, Malaysia, 4Language Academy, Universiti Teknologi Malaysia, 81310 UTM Johor Bahru, Johor, Malaysia,

5

Language Academy, Universiti Teknologi Malaysia, 81310 UTM Johor Bahru, Johor, Malaysia. *Email: anie.kl@utm.my

1

ABSTRACT

Exit tests to measure and inform new graduates of their oral and written communicative abilities are non-existent. This paper is our attempt to determine

the oral construct of the exit test through a study involving graduating students and workplace professionals. Information regarding oral tasks, minimum

standards and quality expected from new graduate employees were gathered through interviews and discussions with the human resource personnel

from various industries. Based on the information gathered, a pilot test of group oral interaction was designed and administered to a group of four

graduating students. The interactions of the group were video-recorded and were then assessed by professionals from various specialisations based on

their respective criteria of assessment. The professionals responses, comments and suggestions to interview questions posed were noted. The results

were analysed to establish the different categories of criteria being applied by the professionals in their assessment of the new graduates performances.

Findings show that thinking ability, interactive ability and professional image, which go beyond language skills, were other equally important criteria

of assessment, besides language accuracy. From the analysis, the construct of oral communication ability for the exit test was determined, followed

by the development of a six-band oral rating scale.

Keywords: Oral Contruct, Exit Test, Minimum Standard Expected, Workplace Assessment Criteria, Oral Communication Ability

JEL Classifications: M000

1. INTRODUCTION

Language test scores have been in use as tools to implement

stipulated educational, employment and political policies of

nations. Currently, a minimum expected score on the International

English Language Test System (IELTS) is being imposed on

foreign students seeking admission into certain courses and

institutions in countries where English is the medium of instruction

as evidence of their ability to cope with academic study and to

perform future tasks in non-test contexts. Similarly, evidence

of professionalism and language proficiency is also expected of

non-native English speaking professionals seeking employment in

English speaking countries through the imposition of a minimum

expected level on the IELTS as well as on required occupational

English tests by the host countries. In other instances, similar

certification of a satisfactory score on the IELTS has also to be met

by foreigners applying for permanent residence in some Englishspeaking countries. In the local context, applicants aspiring to

pursue tertiary education must show certification of a functional

command of the English language, in this instance, a required

level on the Malaysian University English Test (MUET) expected

by the receiving institutions, as proof of their capacity to cope

with future academic study and tasks. The above emphases on

language test scores imply that there exist a theoretical construct

International Journal of Economics and Financial Issues | Vol 5 Special Issue 2015

139

Attan, et al.: Determining the Oral Construct of the Test of English Communication Skills

and availability of relevant and adequate tests for describing

language test performance for each purpose, to correspond to the

language abilities needed for expression of intentions in non-test

contexts of language use (Bachman, 1991; Qureshi et al., 2014).

In setting up the criteria for describing language test performance

and validating the theoretical construct, opinions vary on whose

standards should be applied. In the case of an exit test for graduating

students in which there is none available, presently, it is our strong

conviction that the gap in exit language and communication

certification of these students is addressed and they being informed

that a measure of their workplace readiness communicative ability

is being developed. In light of this a number of issues need to

be addressed. How do we, academics, determine that construct

of workplace language and communication ability? How do we

measure it? From whose perspective should these concerns be

addressed? Should it be from the perspective of the academics

or the employers? In our earlier paper (Attan et al., 2012) we

have identified difficulties with determining the test of english

communication skills (TECS) written construct from both the

real life approach and components ability approach perspectives.

From the real life approach perspective, specifically seen from

the point of view of domain experts, because the students have

yet to join the job market and thus lacking the experience and

abilities needed for effective performance in the workplace, any

measurement of expected abilities may be deemed inaccurate.

From the components skills approach perspective, since the

expectations are viewed from the academics point of view, they

may be unrealistic and its adoption may not meet actual skills

needed for performance of tasks in the workplace, and this again

may result in inaccuracy of measurement (Naz et al., 2014). On a

similar note, we believe the same argument applies for determining

the TECS oral construct.

In determining the criteria for the development of the university

entrance test construct, the opinion of the academics is sought

as these academics have been immersed in the teaching-learning

process for a long time and have had extensive knowledge and

experience of the levels and abilities displayed on various tasks.

Thus they would be in a strong position to determine the levels and

the abilities that fit the specifications at each level. Validation of the

construct, in turn, should involve the opinion and performance of

1st-year students as these students have had substantial experience

of the knowledge and skills needed for satisfactory pursuit of

tertiary education.

2. LITERATURE REVIEW

Despite its increasing prominence, a literature search on validation

of the MUET from undergraduate test takers opinion revealed only

one. Rethinasamy and Chuahs (2011) study comparing 1st-year

students performance on the MUET and the university preparatory

English 1 (obligatory for students achieving Bands 1, 2 and 3 on

the MUET) revealed findings of a significant positive relationship

between MUET band and preparatory English 1 grades, in which

students achieving a higher MUET band were indeed performing

well in the university English language proficiency course.

Similarly, students achieving lower MUET bands tend to obtain

140

average and weak pass in the preparatory English 1 course. It was

further highlighted that since the benchmark study reported by the

Malaysian Examination Council (2005) showed a good correlation

between IELTS and MUET bands (r = 0.662), it is also indicative

that MUET is a reliable measure of students English language

ability. In like manner, they asserted that it can also be inferred

that the English preparatory 1 test results which correlate strongly

with MUET band is an indication of its validity (p. 243).

Validation of workplace readiness communicative ability

construct from domain experts point of view has shown a mixed

response. Using domain experts as test-takers in different work

contexts, the results obtained were both positive and negative. In

Browns (1993) study of test-takers response on a tape-mediated

oral proficiency test of Japanese for the tourism and hospitality

industry, findings confirmed that the test tasks elicited the type

of language use required in the industry. Thus the results were

treated as evidence that the test was measuring relevant language

skills. In a separate study, Elder (2007) compares the adequacy

and relevance of the International English Language Testing

System (IELTS) as a screening device, which was originally

designed to measure proficiency for academic purposes with

the occupational english test (OET) which was designed to

measure the communicative competence of health professionals

for registration. In the study involving 53 health professionals

on two tests, it was found that while IELTS could be considered

a valid measure of general English proficiency, the OET was,

over and above, a better measure of the health professionals

communicative competence. From the above two studies, it can

be shown that feedback from domain experts can be considered

by responsible bodies in determining the relevance of the

test construct and workplace communication readiness of the

participants to the target language use domain.

In other contexts, test validity is a challenge when adverse

feedbacks were obtained. In Bessettes (2005) study of a

proficiency testing policy implemented aimed at ensuring

adequacy of civil servants provision of service in both French

and English in bilingual regions in Canada, findings showed

negative feedback received from the civil servants on many

aspects ranging from the training programme to the test itself,

which they considered irrelevant to their jobs. On a similar note,

Murray et al., (2012) study in New South Wales, Australia, on the

relevance of the Professional English Assessment for Teachers, a

professional screening test, found negative attitudes shown by 105

qualified overseas-trained teacher test takers towards the test as

they felt that the test was irrelevant to their needs. Further negative

feedbacks were found in Kim and Elders (2014) exploration of

pilots and air traffic controllers perceptions of tests administered

in Korea. A large majority of the participants responded that

the test did not reflect their communicative competence in

radiotelephony communication in that the test content was

inappropriate and irrelevant to the demands of the job and that

test development procedures were unclear. Likewise, Knochs

(2014) study of criteria used by native English speaking pilots

to evaluate speech samples from a number of different aviation

English tests, found a far wider range of criteria being applied

including non-linguistic factors such as technical knowledge,

International Journal of Economics and Financial Issues | Vol 5 Special Issue 2015

Attan, et al.: Determining the Oral Construct of the Test of English Communication Skills

and availability of relevant and adequate tests for describing

language test performance for each purpose, to correspond to the

language abilities needed for expression of intentions in non-test

contexts of language use (Bachman, 1991; Qureshi et al., 2014).

In setting up the criteria for describing language test performance

and validating the theoretical construct, opinions vary on whose

standards should be applied. In the case of an exit test for graduating

students in which there is none available, presently, it is our strong

conviction that the gap in exit language and communication

certification of these students is addressed and they being informed

that a measure of their workplace readiness communicative ability

is being developed. In light of this a number of issues need to

be addressed. How do we, academics, determine that construct

of workplace language and communication ability? How do we

measure it? From whose perspective should these concerns be

addressed? Should it be from the perspective of the academics

or the employers? In our earlier paper (Attan et al., 2012) we

have identified difficulties with determining the test of english

communication skills (TECS) written construct from both the

real life approach and components ability approach perspectives.

From the real life approach perspective, specifically seen from

the point of view of domain experts, because the students have

yet to join the job market and thus lacking the experience and

abilities needed for effective performance in the workplace, any

measurement of expected abilities may be deemed inaccurate.

From the components skills approach perspective, since the

expectations are viewed from the academics point of view, they

may be unrealistic and its adoption may not meet actual skills

needed for performance of tasks in the workplace, and this again

may result in inaccuracy of measurement (Naz et al., 2014). On a

similar note, we believe the same argument applies for determining

the TECS oral construct.

In determining the criteria for the development of the university

entrance test construct, the opinion of the academics is sought

as these academics have been immersed in the teaching-learning

process for a long time and have had extensive knowledge and

experience of the levels and abilities displayed on various tasks.

Thus they would be in a strong position to determine the levels and

the abilities that fit the specifications at each level. Validation of the

construct, in turn, should involve the opinion and performance of

1st-year students as these students have had substantial experience

of the knowledge and skills needed for satisfactory pursuit of

tertiary education.

2. LITERATURE REVIEW

Despite its increasing prominence, a literature search on validation

of the MUET from undergraduate test takers opinion revealed only

one. Rethinasamy and Chuahs (2011) study comparing 1st-year

students performance on the MUET and the university preparatory

English 1 (obligatory for students achieving Bands 1, 2 and 3 on

the MUET) revealed findings of a significant positive relationship

between MUET band and preparatory English 1 grades, in which

students achieving a higher MUET band were indeed performing

well in the university English language proficiency course.

Similarly, students achieving lower MUET bands tend to obtain

140

average and weak pass in the preparatory English 1 course. It was

further highlighted that since the benchmark study reported by the

Malaysian Examination Council (2005) showed a good correlation

between IELTS and MUET bands (r = 0.662), it is also indicative

that MUET is a reliable measure of students English language

ability. In like manner, they asserted that it can also be inferred

that the English preparatory 1 test results which correlate strongly

with MUET band is an indication of its validity (p. 243).

Validation of workplace readiness communicative ability

construct from domain experts point of view has shown a mixed

response. Using domain experts as test-takers in different work

contexts, the results obtained were both positive and negative. In

Browns (1993) study of test-takers response on a tape-mediated

oral proficiency test of Japanese for the tourism and hospitality

industry, findings confirmed that the test tasks elicited the type

of language use required in the industry. Thus the results were

treated as evidence that the test was measuring relevant language

skills. In a separate study, Elder (2007) compares the adequacy

and relevance of the International English Language Testing

System (IELTS) as a screening device, which was originally

designed to measure proficiency for academic purposes with

the occupational english test (OET) which was designed to

measure the communicative competence of health professionals

for registration. In the study involving 53 health professionals

on two tests, it was found that while IELTS could be considered

a valid measure of general English proficiency, the OET was,

over and above, a better measure of the health professionals

communicative competence. From the above two studies, it can

be shown that feedback from domain experts can be considered

by responsible bodies in determining the relevance of the

test construct and workplace communication readiness of the

participants to the target language use domain.

In other contexts, test validity is a challenge when adverse

feedbacks were obtained. In Bessettes (2005) study of a

proficiency testing policy implemented aimed at ensuring

adequacy of civil servants provision of service in both French

and English in bilingual regions in Canada, findings showed

negative feedback received from the civil servants on many

aspects ranging from the training programme to the test itself,

which they considered irrelevant to their jobs. On a similar note,

Murray et al., (2012) study in New South Wales, Australia, on the

relevance of the Professional English Assessment for Teachers, a

professional screening test, found negative attitudes shown by 105

qualified overseas-trained teacher test takers towards the test as

they felt that the test was irrelevant to their needs. Further negative

feedbacks were found in Kim and Elders (2014) exploration of

pilots and air traffic controllers perceptions of tests administered

in Korea. A large majority of the participants responded that

the test did not reflect their communicative competence in

radiotelephony communication in that the test content was

inappropriate and irrelevant to the demands of the job and that

test development procedures were unclear. Likewise, Knochs

(2014) study of criteria used by native English speaking pilots

to evaluate speech samples from a number of different aviation

English tests, found a far wider range of criteria being applied

including non-linguistic factors such as technical knowledge,

International Journal of Economics and Financial Issues | Vol 5 Special Issue 2015

Attan, et al.: Determining the Oral Construct of the Test of English Communication Skills

experience and level of training, besides those prescribed by

the International Civil Aviation Organisation guidelines. With a

mixed response obtained on the above validation studies from

the views of domain experts, more research needed to be done

to obtain more conclusive results.

3. THE STUDY

For our purpose to determine the workplace communicative ability

construct, specifically the oral construct, since our graduating

students have yet to obtain work experience and to be immersed

in actual work context, we believe the appropriate persons for us

to obtain relevant information would be the human resource (HR)

personnel from technical and technology-related industries. Since

these personnel will be responsible for recruitment of deserving

applicants, they would be in a better position to assess the quality

of potential recruits for their organisation. Based on the above

scenario of a gap in the exit oral communicative test construct

development, two important considerations have prompted us to

conduct the study. First, the critical need to know the employers

expectations of graduating students oral communicative ability

in light of the graduating students effective participation in the

workplace. Second, the urgent need to develop valid instruments

that can accurately measure the true oral communicative ability

of the graduates when they enter the job market.

In this paper, we attempt to identify and describe the workplace

oral language and communication construct. First, we discuss

the process and procedures that we have adopted to determine

that oral construct; and next, we present our proposed construct

of workplace oral language and communication for feedback

and suggestions. Two research questions have been formulated

in our attempt to establish the workplace oral language and

communication construct. They are:

Research Question 1

Research Question 2

What criteria do employers adopt when

evaluating the quality of oral output?

What is the minimum level expected

for acceptance of completed oral tasks

in the workplace?

3.1. Participants of the Study

In our attempt to find answers to the research questions three

groups of participants were identified. One was a group of five

language practitioners from Universiti Teknologi Malaysia (UTM)

with teaching experience ranging between 18 and 25 years.

The second group comprised of nine workplace professionals

from various technical and technology-related industries. These

professionals were either HR Managers or personnel responsible

for recruitment in their respective organisations in Malaysia. These

two groups served as informants or assessors for this study.

The third group of participants was a group of four final year

undergraduates of UTM; three male students and one female

student (Table 1). All, with the exception of two male students,

had different English language proficiency levels based on their

MUET band scores.

3.2. Procedure of the Study

The study involved two phases, adapting the procedures used

by Abdul Raof (2002). Prior to the video recording of the group

of students (Table 1) preliminary input were obtained through

informal discussions with a few HR personnel to guide the

researchers in designing the oral communicative task. Based on

the information gained, a speaking task was designed based on

the assumption that new graduate employees would be involved

in a discussion with fellow employees. For the pilot study the

students were asked to deliberate, give their views, interact with

each other and eventually come up with a collective decision on

what they think is the most important quality in a worker. The

task lasted for about 20 min and was moderated by an English

language lecturer. The session was video-recorded and used as

speech sample in this study.

In the first phase the video-recording was then shown to all five

language practitioners. Each was asked to view the recording,

rank and assess the oral interaction of the four students without

referring to any rating scale. After the assessment was done a

group interview cum discussion was conducted with the language

practitioners to compare rankings and to know how these were

determined.

This procedure was repeated in the second phase, this time with

the workplace professional group. Each personnel was approached

individually and was asked to view the video-recording, rank and

assess the students. As with the language practitioners, it was done

without the aid of any rating scale. At the end of the assessment

each of them participated in a semi-structured interview revolving

around the criteria used to rank and assess a student, the reasons

why a student was ranked higher than another, and which of the

student(s) would most likely be recruited and the reasons behind

such a decision.

Data from both groups were analysed to look for similarities and

differences in the rankings made. In addition, data were further

studied to elicit the criteria used by the two groups of assessors.

4. RESULTS AND DISCUSSION

The analysis revealed that there is a disparity in terms of the

ranking of the performances made by the language practitioners

and workplace professionals. The language practitioners group had

no dispute over who the best speaker was i.e., Student D. However,

no workplace professionals ranked this student as the best. Instead,

majority of the workplace professionals chose Student C as the

one who had performed the best in the task given.

From the analysis of the interview responses it was evident that the

language practitioner group based their assessment of the students

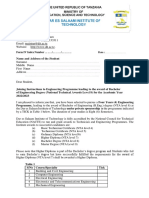

Table 1: Background of student participants

Student

A

B

C

D

Gender

Male

Male

Female

Male

International Journal of Economics and Financial Issues | Vol 5 Special Issue 2015

MUET band

Band 2

Band 2

Band 4

Band 5

Faculty

Engineering

Engineering

Management

Education

141

Attan, et al.: Determining the Oral Construct of the Test of English Communication Skills

mainly on the criterion of language proficiency (Khan et al., 2012).

Thus, Student D who demonstrated good control of the English

language was their choice. On the other hand, the workplace

professionals were not impressed by the proficiency of Student D

as to them he did not contribute much to the task assigned. He

was merely managing the discussion; initiating the discussion,

asking opinion from the others, making sure the task gets done and

concluding the discussion. Basically, his voice was not heard

and hence, contribution to the task was considered minimal.

In contrast, Student C was ranked highest by majority of the

workplace professionals on the basis of her maturity in the

arguments put forth and her interactive ability. She was able to

make significant contribution to the discussion and elaborated

her viewpoints. She also listened to what others had to say and

considered their alternative opinion but at the same time defended

her arguments well. Though her language proficiency was good,

this was not the main reason she was chosen as the best by many.

The language practitioner group ranked Student C as second

behind Student D. The reason being she was not as fluent and

accurate as Student D in her language proficiency.

Nevertheless, it was pointed out by the professional group that

Student C could improve on the image she portrayed or as some of

the professionals termed it as the package. From the interview

responses this criterion could be described as how one carries

or projects oneself in communicating with others, and ones

confidence when voicing ones views, and composure which

make up the overall image of a good speaker. It was noted also

that this aspect of the students performance was not given much

prominence or importance in the ranking criteria of the language

pratitioners.

With regards to Students A and B, the language practitioners

ranked both as equal i.e., the lowest due to their lack of proficiency.

Despite this, the professionals, however, were willing to recruit

Student B as he was seen to have some potential based on the

contributions made in carrying out the task. He also demonstrated

the ability to defend his arguments, though to a lesser extent,

as compared to Student C. One HR manager even thought that

Student B was better than the others apparently due to the potential

that he has as observed by the HR personnel (Khan et al., 2014).

From here, it is clear that language proficiency was not used as

the main criterion in determining the choice of candidate to be

recruited.

In evaluating the quality of oral interaction (refer to Research

Question 1) it seems that while the language practitioners were

paying more attention to language skills (as expected), the

workplace professionals were focusing on some other criteria

beyond language ability. These were found to be related to thinking

ability, interactive ability, and professional image. Based on these

criteria, rankings given by both groups were therefore different.

The discussion with both groups of assessors was also aimed

at determining the minimal level of language ability expected

by employers for acceptance of potential employees into the

workplace (Research Question 2). It was established that the

142

Table 2: Proposed construct of workplace oral language

and communication competence

Criteria

Contribution to task

Interactive ability

Language

Personality

Description

Able to analyse

Shows creativity of ideas

Shows maturity of ideas

Able to express opinion

Able to convince

Accuracy

Fluency

Vocabulary

Sentence variety

Professional image

Confidence

Enthusiasm

ability to express opinion, to respond to viewpoints, to participate

in discussion, to be able to analyse problems, to keep the

communication going with a fair command of the language but

the lack of confidence and sophistication of language and adequate

amount of content and level of maturity to dwell further on the

topic or discussion merit as acceptable level of communicative

ability for the workplace. These abilities were deemed as functional

that is, equivalent to Level 3 on a 6-Level scale. A graduate at this

level would have many errors in his language and his vocabulary

lacks variety. Nevertheless, this is regarded as the minimum

acceptable level that can sustain the potential employee in the

workplace. With exposure and further on-the-job training, the new

employee is expected to be able to gain better control of and use

the language more correctly and appropriately.

Our proposed workplace oral language and communication

construct, conceived from the collaboration between the academics

and workplace professionals, is captured in the Table 2.

5. CONCLUSION AND SUGGESTIONS

The approach advocated by this study was empirically-based and

utilized insights of workplace specialists. Similar to the study by

Abdul Raof (2002), this study proves that engaging workplace

specialists in developing a rating scale is possible, in fact highly

recommended. From the analysis of the data gathered, a rating

scale of oral communication ability for the oral exit test was

devised. It comprised the criteria of assessment which are thinking

ability, interactive ability, professional image, and language ability.

Continuous collaboration with workplace specialists seems to

be the way forward to further validate the draft scale before it

can be widely used. More importantly, the study highlights the

importance of engaging the workplace professionals in the design

of the exit test, most critically in determining their assessment of

new graduates performance, which this study reveals, go beyond

language skills.

REFERENCES

Abdul Raof, A.H. (2002), The production of a performance rating

scale: An alternative methodology. Unpublished Ph.D Thesis. The

University of Reading, UK.

International Journal of Economics and Financial Issues | Vol 5 Special Issue 2015

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Boost Your Vocabulary For IELTS Cam 12Document32 pagesBoost Your Vocabulary For IELTS Cam 12Dao Mai PhuongNo ratings yet

- 1 Cam 9Document49 pages1 Cam 9Dao Mai PhuongNo ratings yet

- Ôn Tập Ngữ Pháp Lớp KETDocument1 pageÔn Tập Ngữ Pháp Lớp KETDao Mai PhuongNo ratings yet

- Entrance TestDocument20 pagesEntrance TestDao Mai PhuongNo ratings yet

- De Kiem Tra Tieng Anh 7 Ton Nu Cam TuDocument125 pagesDe Kiem Tra Tieng Anh 7 Ton Nu Cam TuDao Mai PhuongNo ratings yet

- Atg Worksheet PreptimeDocument2 pagesAtg Worksheet PreptimeGabriela Ionela0% (1)

- Toeic Speaking - DucDocument1 pageToeic Speaking - DucDao Mai PhuongNo ratings yet

- (Thaytro - Net) Family and Friends Grade 5 Special Edition Workbook PDFDocument98 pages(Thaytro - Net) Family and Friends Grade 5 Special Edition Workbook PDFDao Mai Phuong100% (1)

- (Thaytro - Net) Family and Friends Grade 3 Special Edition Workbook PDFDocument95 pages(Thaytro - Net) Family and Friends Grade 3 Special Edition Workbook PDFDao Mai PhuongNo ratings yet

- CÁC LINK SPEAKING PART 1 HayDocument4 pagesCÁC LINK SPEAKING PART 1 HayDao Mai PhuongNo ratings yet

- Review Unit 6 Lesson 1: I He She We You They ItDocument5 pagesReview Unit 6 Lesson 1: I He She We You They ItDao Mai PhuongNo ratings yet

- Cakes and desserts: sweet treats for your moodDocument1 pageCakes and desserts: sweet treats for your moodDao Mai PhuongNo ratings yet

- How Celebrities Influence Fans in VietnamDocument2 pagesHow Celebrities Influence Fans in VietnamDao Mai PhuongNo ratings yet

- Islcollective Worksheets Elementary A1 PR Around The World Countries and Nationalities Crossword 205094e71cc06b79cb3 32852418Document3 pagesIslcollective Worksheets Elementary A1 PR Around The World Countries and Nationalities Crossword 205094e71cc06b79cb3 32852418Danilo A. Pereira43% (7)

- General Reading 2Document3 pagesGeneral Reading 2Dao Mai PhuongNo ratings yet

- General Reading 3Document3 pagesGeneral Reading 3Dao Mai PhuongNo ratings yet

- WT2 - Describe SomethingDocument1 pageWT2 - Describe SomethingDao Mai PhuongNo ratings yet

- Tet HolidayDocument17 pagesTet HolidayDao Mai PhuongNo ratings yet

- WT2 - Describe SomethingDocument1 pageWT2 - Describe SomethingDao Mai PhuongNo ratings yet

- All of Me John Legend Present Simple and PresentDocument1 pageAll of Me John Legend Present Simple and PresentDiana GonzalezNo ratings yet

- Sec C - Cost Behavior and Cost ObjectsDocument11 pagesSec C - Cost Behavior and Cost ObjectsDao Mai PhuongNo ratings yet

- Sam Smith Im Not The Only OneDocument1 pageSam Smith Im Not The Only OneDao Mai PhuongNo ratings yet

- Task 4 Mats Dev 2016Document3 pagesTask 4 Mats Dev 2016Dao Mai PhuongNo ratings yet

- Jobs, English learning, weekends, routines, family, hobbies, sports, TVDocument3 pagesJobs, English learning, weekends, routines, family, hobbies, sports, TVDao Mai PhuongNo ratings yet

- TheoristsDocument3 pagesTheoristsDao Mai PhuongNo ratings yet

- Likes Dislikes Lesson Plan PDFDocument7 pagesLikes Dislikes Lesson Plan PDFDao Mai PhuongNo ratings yet

- Sec C - Topic 4 IntroductionDocument8 pagesSec C - Topic 4 IntroductionDao Mai PhuongNo ratings yet

- Esl Teachers GuideDocument195 pagesEsl Teachers Guidecidaufmg100% (3)

- Reading 2016Document32 pagesReading 2016Dao Mai PhuongNo ratings yet

- The Application of Communicative Language TeachingDocument8 pagesThe Application of Communicative Language TeachingDao Mai PhuongNo ratings yet

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (894)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Tutorial 1 Discussion Document - Batch 03Document4 pagesTutorial 1 Discussion Document - Batch 03Anindya CostaNo ratings yet

- CS709 HandoutsDocument117 pagesCS709 HandoutsalexNo ratings yet

- Wheeled Loader L953F Specifications and DimensionsDocument1 pageWheeled Loader L953F Specifications and Dimensionssds khanhNo ratings yet

- Kathy Davis - Dancing Tango - Passionate Encounters in A Globalizing World-New York University Press (2015)Document236 pagesKathy Davis - Dancing Tango - Passionate Encounters in A Globalizing World-New York University Press (2015)Csongor KicsiNo ratings yet

- ITP Exam SuggetionDocument252 pagesITP Exam SuggetionNurul AminNo ratings yet

- Strategies For StartupDocument16 pagesStrategies For StartupRoshankumar BalasubramanianNo ratings yet

- A Database of Chromatographic Properties and Mass Spectra of Fatty Acid Methyl Esters From Omega-3 ProductsDocument9 pagesA Database of Chromatographic Properties and Mass Spectra of Fatty Acid Methyl Esters From Omega-3 ProductsmisaelNo ratings yet

- Training Customer CareDocument6 pagesTraining Customer Careyahya sabilNo ratings yet

- Prasads Pine Perks - Gift CardsDocument10 pagesPrasads Pine Perks - Gift CardsSusanth Kumar100% (1)

- BIT 4107 Mobile Application DevelopmentDocument136 pagesBIT 4107 Mobile Application DevelopmentVictor NyanumbaNo ratings yet

- Statistical Decision AnalysisDocument3 pagesStatistical Decision AnalysisTewfic SeidNo ratings yet

- Navistar O & M ManualDocument56 pagesNavistar O & M ManualMushtaq Hasan95% (20)

- Reading Comprehension Exercise, May 3rdDocument3 pagesReading Comprehension Exercise, May 3rdPalupi Salwa BerliantiNo ratings yet

- Trillium Seismometer: User GuideDocument34 pagesTrillium Seismometer: User GuideDjibril Idé AlphaNo ratings yet

- Link Ratio MethodDocument18 pagesLink Ratio MethodLuis ChioNo ratings yet

- Joining Instruction 4 Years 22 23Document11 pagesJoining Instruction 4 Years 22 23Salmini ShamteNo ratings yet

- 2021 Impact of Change Forecast Highlights: COVID-19 Recovery and Impact On Future UtilizationDocument17 pages2021 Impact of Change Forecast Highlights: COVID-19 Recovery and Impact On Future UtilizationwahidNo ratings yet

- An Introduction To Ecology and The BiosphereDocument54 pagesAn Introduction To Ecology and The BiosphereAndrei VerdeanuNo ratings yet

- Draft SemestralWorK Aircraft2Document7 pagesDraft SemestralWorK Aircraft2Filip SkultetyNo ratings yet

- SDS OU1060 IPeptideDocument6 pagesSDS OU1060 IPeptideSaowalak PhonseeNo ratings yet

- Real Estate Broker ReviewerREBLEXDocument124 pagesReal Estate Broker ReviewerREBLEXMar100% (4)

- Ball Valves Pentair Valves and ControlsDocument16 pagesBall Valves Pentair Valves and ControlsABDUL KADHARNo ratings yet

- Excess AirDocument10 pagesExcess AirjkaunoNo ratings yet

- !!!Логос - конференц10.12.21 копіяDocument141 pages!!!Логос - конференц10.12.21 копіяНаталія БондарNo ratings yet

- October 2009 Centeral Aucland, Royal Forest and Bird Protecton Society NewsletterDocument8 pagesOctober 2009 Centeral Aucland, Royal Forest and Bird Protecton Society NewsletterRoyal Forest and Bird Protecton SocietyNo ratings yet

- Composite Structures: A. Grimaldi, A. Sollo, M. Guida, F. MaruloDocument15 pagesComposite Structures: A. Grimaldi, A. Sollo, M. Guida, F. MaruloSharan KharthikNo ratings yet

- Techniques in Selecting and Organizing InformationDocument3 pagesTechniques in Selecting and Organizing InformationMylen Noel Elgincolin ManlapazNo ratings yet

- Induction ClassesDocument20 pagesInduction ClassesMichelle MarconiNo ratings yet

- Critical Methodology Analysis: 360' Degree Feedback: Its Role in Employee DevelopmentDocument3 pagesCritical Methodology Analysis: 360' Degree Feedback: Its Role in Employee DevelopmentJatin KaushikNo ratings yet

- Bio310 Summary 1-5Document22 pagesBio310 Summary 1-5Syafiqah ArdillaNo ratings yet