Unsupervised Learning

Clustering Algorithms

Outline

Part 1: Basic Concepts of Data Clustering

- Non-Supervised Learning and Clustering
  - Problem formulation: cluster analysis
  - Taxonomies of Clustering Techniques
  - Data types and Proximity Measures
  - Difficulties and open problems

Part 2: Clustering Algorithms

- Hierarchical methods
  - Single-link
  - Complete-link
  - Clustering Based on Dissimilarity Increments Criteria

Unsupervised Learning -- Ana Fred

1 From Single Clustering to Ensemble Methods - April 2009


Hierarchical Clustering

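Use proximity matrix: n x n

D(i,j): proximity (similarity or distance) between patterns i and j

[Figure: agglomerative clustering (Steps 0 to 4, left to right) merges objects a, b, c, d, e into ab, de, cde and finally abcde; divisive clustering (Steps 0 to 4, right to left) splits abcde in the reverse order.]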


Hierarchical Clustering: Agglomerative Methods

1. Start with n clusters, each containing one object

2. Find the most similar pair of clusters Ci and Cj from the proximity matrix and merge them into a single cluster

3. Update the proximity matrix (reduce its order by one, by replacing the individual clusters with the merged cluster)

4. Repeat steps (2) and (3) until a single cluster is obtained (i.e. n-1 times)
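The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's implementation: the function name `agglomerate`, the toy distance matrix and the choice of passing `min` or `max` as the linkage rule are all assumptions made for the example.

```python
def agglomerate(D, labels, linkage=min):
    """Naive agglomerative clustering on a symmetric distance matrix.

    D is a list of lists, labels names the n objects, and linkage combines
    d(i, k) and d(j, k) into d(i+j, k): min is single-link, max is complete-link.
    Returns the merges as (cluster_i, cluster_j, distance) tuples.
    """
    D = [list(row) for row in D]                 # work on a copy
    clusters = [(label,) for label in labels]    # step 1: n singleton clusters
    merges = []
    while len(clusters) > 1:                     # step 4: n-1 merges in total
        # Step 2: most similar (closest) pair of clusters, with i < j.
        pairs = [(a, b) for a in range(len(clusters))
                 for b in range(a + 1, len(clusters))]
        i, j = min(pairs, key=lambda p: D[p[0]][p[1]])
        merges.append((clusters[i], clusters[j], D[i][j]))
        # Step 3: row/column i now describes the merged cluster ...
        for k in range(len(clusters)):
            if k != i and k != j:
                D[i][k] = D[k][i] = linkage(D[i][k], D[j][k])
        clusters[i] = clusters[i] + clusters[j]
        # ... and row/column j is dropped, reducing the order by one.
        del clusters[j]
        del D[j]
        for row in D:
            del row[j]
    return merges


# Four objects on a line at positions 0, 1, 3 and 7 (hypothetical toy data).
D = [[0, 1, 3, 7],
     [1, 0, 2, 6],
     [3, 2, 0, 4],
     [7, 6, 4, 0]]
for ci, cj, dist in agglomerate(D, "abcd"):
    print(ci, cj, dist)
```

The search for the closest pair is repeated from scratch at every step, which keeps the sketch short but makes it cubic in n; practical implementations cache nearest neighbours.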


Hierarchical Clustering: Agglomerative Methods

Similarity measures between clusters:

Well known similarity measures can be written using the Lance-Williams formula, expressing the distance between cluster k and cluster i+j, obtained by merging clusters i and j:

$$d(i+j, k) = a_i\, d(i,k) + a_j\, d(j,k) + b\, d(i,j) + c\, |d(i,k) - d(j,k)|$$

- Single-link: $a_i = a_j = 0.5$; $b = 0$; $c = -0.5$, so that $d(i+j,k) = \min\{d(i,k), d(j,k)\}$
- Complete-link: $a_i = a_j = 0.5$; $b = 0$; $c = 0.5$, so that $d(i+j,k) = \max\{d(i,k), d(j,k)\}$
- Centroid: $a_i = \frac{n_i}{n_i+n_j}$; $a_j = \frac{n_j}{n_i+n_j}$; $b = -\frac{n_i n_j}{(n_i+n_j)^2}$; $c = 0$, with $d(i+j,k) = d(\mu_{i+j}, \mu_k)$
- Median: $a_i = a_j = 0.5$; $b = -0.25$; $c = 0$
- Average link: $a_i = \frac{n_i}{n_i+n_j}$; $a_j = \frac{n_j}{n_i+n_j}$; $b = c = 0$, with $d(C_i, C_j) = \frac{1}{n_i n_j} \sum_{a \in C_i,\, b \in C_j} d(a,b)$
- Ward's method (minimum variance): $a_i = \frac{n_k+n_i}{n_k+n_i+n_j}$; $a_j = \frac{n_k+n_j}{n_k+n_i+n_j}$; $b = -\frac{n_k}{n_k+n_i+n_j}$; $c = 0$
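As a quick check of the Lance-Williams coefficients, the update can be coded directly. The sketch below is illustrative only; the function names `coefficients` and `lance_williams` are assumptions, and the centroid check assumes squared Euclidean distances, which is the setting in which the centroid, median and Ward updates are normally applied.

```python
def coefficients(method, n_i, n_j, n_k):
    """Return (a_i, a_j, b, c) for the named linkage method."""
    n = n_i + n_j
    if method == "single":
        return 0.5, 0.5, 0.0, -0.5
    if method == "complete":
        return 0.5, 0.5, 0.0, 0.5
    if method == "centroid":
        return n_i / n, n_j / n, -n_i * n_j / n ** 2, 0.0
    if method == "median":
        return 0.5, 0.5, -0.25, 0.0
    if method == "average":
        return n_i / n, n_j / n, 0.0, 0.0
    if method == "ward":
        total = n + n_k
        return (n_k + n_i) / total, (n_k + n_j) / total, -n_k / total, 0.0
    raise ValueError(f"unknown method: {method}")


def lance_williams(method, d_ik, d_jk, d_ij, n_i=1, n_j=1, n_k=1):
    """Distance between cluster k and the cluster obtained by merging i and j."""
    a_i, a_j, b, c = coefficients(method, n_i, n_j, n_k)
    return a_i * d_ik + a_j * d_jk + b * d_ij + c * abs(d_ik - d_jk)


# Single-link and complete-link reduce to min and max of the two distances.
print(lance_williams("single", 16.0, 17.9, 8.0))
print(lance_williams("complete", 16.0, 17.9, 8.0))

# Centroid update with squared distances: singletons at 0 and 2 merge into a
# cluster with centroid 1, whose squared distance to a singleton at 5 is 16.
print(lance_williams("centroid", 25.0, 9.0, 4.0))
```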


Hierarchical Clustering: Agglomerative Methods

Single Link: Distance between two clusters is the distance between their closest points. Also called the nearest-neighbor method.


Hierarchical Clustering: Agglomerative Methods

Complete Link: Distance between two clusters is the distance between their farthest pair of points.


Hierarchical Clustering: Agglomerative Methods

Centroid: Distance between two clusters is the distance between their centroids.


Hierarchical Clustering: Agglomerative Methods

Average Link: Distance between two clusters is the average distance between pairs of points, one taken from each cluster.


Hierarchical Clustering: Agglomerative Methods

Ward's Link: Minimizes the sum-of-squares criterion (a measure of heterogeneity).


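Single Linkage: $d(C_i, C_j) = \min_{a \in C_i,\, b \in C_j} d(a, b)$

Example with five points. Pairwise distances: d(1,3) = 11.7, d(1,4) = 20, d(1,5) = 21.5, d(2,3) = 8.1, d(2,4) = 16, d(2,5) = 17.9, d(3,4) = 9.8, d(3,5) = 9.8, d(4,5) = 8. Points 1 and 2 are the closest pair and are merged first.

Proximity matrix after merging points 1 and 2:

        {1,2}   3       4       5
{1,2}   -       8.1     16      17.9
3       8.1     -       9.8     9.8
4       16      9.8     -       8
5       17.9    9.8     8       -

[Figure: the five points in the plane.]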


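Single Linkage: $d(C_i, C_j) = \min_{a \in C_i,\, b \in C_j} d(a, b)$

Proximity matrix after merging points 4 and 5 (distance 8):

        {1,2}   3       {4,5}
{1,2}   -       8.1     16
3       8.1     -       9.8
{4,5}   16      9.8     -

Proximity matrix after merging {1,2} and 3 (distance 8.1):

          {1,2,3}   {4,5}
{1,2,3}   -         9.8
{4,5}     9.8       -

[Figure: dendrogram.]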


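Complete-Link: $d(C_i, C_j) = \max_{a \in C_i,\, b \in C_j} d(a, b)$

Proximity matrix after merging points 1 and 2:

        {1,2}   3       4       5
{1,2}   -       11.7    20      21.5
3       11.7    -       9.8     9.8
4       20      9.8     -       8
5       21.5    9.8     8       -

Proximity matrix after merging points 4 and 5 (distance 8):

        {1,2}   3       {4,5}
{1,2}   -       11.7    21.5
3       11.7    -       9.8
{4,5}   21.5    9.8     -

[Figure: the five points in the plane.]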


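Complete-Link: $d(C_i, C_j) = \max_{a \in C_i,\, b \in C_j} d(a, b)$

The smallest remaining entry is d(3, {4,5}) = 9.8, so point 3 joins {4,5}. Final proximity matrix:

          {1,2}   {3,4,5}
{1,2}     -       21.5
{3,4,5}   21.5    -

[Figure: dendrogram.]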
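The single-link and complete-link examples can be re-run with a short script that applies the min and max definitions directly to the point-to-point distances. This is a sketch under stated assumptions: the function names are hypothetical, and the run starts from the state in which points 1 and 2 are already merged, so the distance between them is never needed.

```python
from itertools import product

# Pairwise distances between the five example points (d(1,2) is not needed).
dist = {(1, 3): 11.7, (1, 4): 20.0, (1, 5): 21.5,
        (2, 3): 8.1, (2, 4): 16.0, (2, 5): 17.9,
        (3, 4): 9.8, (3, 5): 9.8, (4, 5): 8.0}


def d(a, b):
    return dist[(min(a, b), max(a, b))]


def cluster_distance(ci, cj, agg):
    """agg=min gives single-link, agg=max gives complete-link."""
    return agg(d(a, b) for a, b in product(ci, cj))


def merge_sequence(clusters, agg):
    """Repeatedly merge the closest pair; return (merged cluster, height)."""
    clusters = [frozenset(c) for c in clusters]
    history = []
    while len(clusters) > 1:
        pairs = [(cluster_distance(ci, cj, agg), i, j)
                 for i, ci in enumerate(clusters)
                 for j, cj in enumerate(clusters) if i < j]
        height, i, j = min(pairs)
        merged = clusters[i] | clusters[j]
        history.append((sorted(merged), height))
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)]
        clusters.append(merged)
    return history


start = [{1, 2}, {3}, {4}, {5}]
print("single-link:  ", merge_sequence(start, min))
print("complete-link:", merge_sequence(start, max))
```

Both linkages merge 4 and 5 first; they differ in the second merge, where single-link attaches point 3 to {1,2} and complete-link attaches it to {4,5}.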


Single-link and Complete-Link

A clustering of the data objects is obtained by cutting the dendrogram at the desired level; each connected component then forms a cluster.

- Single-link: favours connectedness
- Complete-link: favours compactness

[Figure: single-link and complete-link dendrograms.]


Single-link and Complete-Link

SL algorithm:

- Favors connectedness
- Equivalent to building a minimum spanning tree (MST) and cutting it at weak links

[Figure: 2-D example.]
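The MST view of single-link can be sketched as follows: build the minimum spanning tree with Kruskal's algorithm, then drop every tree edge longer than a threshold and read off the connected components. The helper names, the toy points and the threshold value are assumptions for illustration; "weak link" is interpreted here as an MST edge whose length exceeds the threshold.

```python
import math


def find(parent, x):
    """Union-find root lookup with path halving."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x


def minimum_spanning_tree(points):
    """Kruskal's algorithm on the complete Euclidean graph."""
    n = len(points)
    edges = sorted((math.dist(points[a], points[b]), a, b)
                   for a in range(n) for b in range(a + 1, n))
    parent = list(range(n))
    tree = []
    for w, a, b in edges:
        ra, rb = find(parent, a), find(parent, b)
        if ra != rb:              # edge joins two components: keep it
            parent[ra] = rb
            tree.append((w, a, b))
    return tree


def cut_weak_links(n, tree, threshold):
    """Remove MST edges longer than threshold; return the components."""
    parent = list(range(n))
    for w, a, b in tree:
        if w <= threshold:
            parent[find(parent, a)] = find(parent, b)
    groups = {}
    for x in range(n):
        groups.setdefault(find(parent, x), []).append(x)
    return sorted(groups.values())


points = [(0, 0), (1, 0), (2, 0), (6, 0), (7, 0)]   # hypothetical toy data
tree = minimum_spanning_tree(points)
print(cut_weak_links(len(points), tree, threshold=2.0))
```

Cutting the MST at a threshold gives the same partition as cutting the single-link dendrogram at that height.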


Single-link and Complete-Link

SL algorithm:

- Favors connectedness
- Detects arbitrary-shaped clusters with even densities
- Cannot handle distinct density clusters

[Figure: single-link method, th=0.49.]


Single-link and Complete-Link

SL algorithm:

- Favors connectedness
- Detects arbitrary-shaped clusters with even densities
- Cannot handle distinct density clusters
- Is sensitive to in-between patterns
- Needs criteria to set the final number of clusters

CL algorithm:

- Favors compactness
- Imposes spherical-shaped clusters on data
- Needs criteria to set the final number of clusters


Hierarchical Clustering

Weakness

- Do not scale well: time complexity of at least O(n^2), where n is the total number of objects
- Can never undo what was done previously

Integration of hierarchical with distance-based clustering

- BIRCH (Zhang, Ramakrishnan, Livny, 1996): uses a Clustering Feature tree (CF-tree) and incrementally adjusts the quality of sub-clusters
- CURE (Guha, Rastogi & Shim, 1998): selects well-scattered points from the cluster and then shrinks them towards the center of the cluster by a specified fraction
- CHAMELEON (G. Karypis, E.H. Han and V. Kumar, 1999): hierarchical clustering using dynamic modeling
  1. Use a graph partitioning algorithm: cluster objects into a large number of relatively small sub-clusters
  2. Use an agglomerative hierarchical clustering algorithm: find the genuine clusters by repeatedly combining these sub-clusters


Clustering Based on Dissimilarity Increments Criteria

Smoothness Hypothesis:

- A cluster is a set of patterns sharing important characteristics in a given context
- A dissimilarity measure encapsulates the notion of pattern resemblance
- Higher resemblance patterns are more likely to belong to the same cluster and should be associated first
- Dissimilarity between neighboring patterns within a cluster should not change abruptly
- The merging of well separated clusters results in abrupt changes in dissimilarity values

A. Fred, J. Leitão, "A New Cluster Isolation Criterion Based on Dissimilarity Increments", IEEE PAMI, 2003.


Clustering Based on Dissimilarity Increments Criteria

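Dissimilarity Increments:

Given $x_i$, the triplet $(x_i, x_j, x_k)$ of nearest neighbors is defined by

$$x_j : j = \arg\min_l \{ d(x_l, x_i),\ l \neq i \}$$

$$x_k : k = \arg\min_l \{ d(x_l, x_j),\ l \neq i,\ l \neq j \}$$

Dissimilarity increment:

$$d_{inc}(x_i, x_j, x_k) = | d(x_i, x_j) - d(x_j, x_k) |$$

[Figure: points $x_i$, $x_j$, $x_k$ with the distances $d(x_i, x_j)$ and $d(x_j, x_k)$ and the increment $d_{inc}$.]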
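A minimal sketch of the dissimilarity increment for one pattern, assuming Euclidean distance and at least three points; the function name and the toy data are hypothetical.

```python
import math


def dissimilarity_increment(points, i):
    """Return (j, k, d_inc) for pattern i, following the definitions above."""
    others = [l for l in range(len(points)) if l != i]
    # x_j: nearest neighbor of x_i.
    j = min(others, key=lambda l: math.dist(points[l], points[i]))
    # x_k: nearest neighbor of x_j, excluding x_i and x_j themselves.
    rest = [l for l in others if l != j]
    k = min(rest, key=lambda l: math.dist(points[l], points[j]))
    d_ij = math.dist(points[i], points[j])
    d_jk = math.dist(points[j], points[k])
    return j, k, abs(d_ij - d_jk)


points = [(0.0, 0.0), (1.0, 0.0), (3.0, 0.0), (10.0, 0.0)]
print(dissimilarity_increment(points, 0))
```

Evenly spaced patterns give increments close to zero, while a jump across a gap between clusters gives a large increment, which is the behaviour the smoothness hypothesis relies on.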


Clustering Based on Dissimilarity Increments Criteria

Distribution of Dissimilarity Increments:

[Figures: distribution of dissimilarity increments for uniformly distributed data, 2D Gaussian data, ring-shaped data, and directional expanding data.]

Exponential distribution: $p(x) = \lambda \exp(-\lambda x)$


Clustering Based on Dissimilarity Increments Criteria

Exponential distribution:

- Higher density patterns -> higher $\lambda$
- Well separated clusters -> $d_{inc}$ on the tail of p(x)


Clustering Based on Dissimilarity Increments Criteria

Gap between clusters: $gap_i = d(C_i, C_j) - d_t(C_i)$

[Figure: clusters $C_i$ and $C_j$, with $d_t(C_i)$, $d_t(C_j)$, $d(C_i, C_j)$, $gap_i$ and $gap_j$ marked.]


Clustering Based on Dissimilarity Increments Criteria

Cluster Isolation criterion:

Let $C_i$, $C_k$ be two clusters which are candidates for merging, and let $\mu_i$, $\mu_k$ be the respective mean values of the dissimilarity increments in each cluster. Compute the increments for each cluster, $gap_i$ and $gap_k$. If $gap_i \geq \alpha \mu_i$ ($gap_k \geq \alpha \mu_k$), isolate cluster $C_i$ ($C_k$) and proceed with the clustering strategy on the remaining patterns. If neither cluster exceeds the gap limit, merge them.
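The decision rule can be written as a small function. This is a sketch under assumptions: $d_t(C)$ is taken to be the dissimilarity at which cluster C was last formed, the function and argument names are hypothetical, and the default value of `alpha` is only a placeholder.

```python
def isolation_decision(d_ik, dt_i, dt_k, mu_i, mu_k, alpha=3.0):
    """Apply the cluster isolation criterion to candidate clusters C_i, C_k.

    d_ik       : dissimilarity d(C_i, C_k) between the two candidates
    dt_i, dt_k : d_t(C_i), d_t(C_k) (assumed: height of each cluster's last merge)
    mu_i, mu_k : mean dissimilarity increment inside each cluster
    Returns (isolate_i, isolate_k); merge only when both are False.
    """
    gap_i = d_ik - dt_i
    gap_k = d_ik - dt_k
    return gap_i >= alpha * mu_i, gap_k >= alpha * mu_k


# A tight cluster C_i facing a distant, looser cluster C_k.
print(isolation_decision(d_ik=10.0, dt_i=2.0, dt_k=9.0, mu_i=1.0, mu_k=1.0))
# Two clusters whose gaps stay below the limit: they would be merged.
print(isolation_decision(d_ik=3.0, dt_i=2.0, dt_k=2.0, mu_i=1.0, mu_k=1.0))
```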


Clustering Based on Dissimilarity Increments Criteria

Setting the Isolation Criterion Parameter $\alpha$:

Result: the tangent line to the exponential density at a point that is a multiple of the distribution mean value, $\alpha/\lambda$, crosses the x axis at $(\alpha+1)/\lambda$.

[Figure: exponential distribution, $\lambda = 0.05$, with tangent lines at points that are multiples of $1/\lambda$.]

Values of $\alpha$ between 3 and 5 cover the significant part of the distribution.
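The crossing point follows from a short calculation, worked here for the exponential density $p(x) = \lambda e^{-\lambda x}$ (mean $1/\lambda$); the symbol $x_0$ for the tangency point is introduced only for this derivation.

```latex
\begin{align*}
x_0 &= \alpha/\lambda, \qquad p(x_0) = \lambda e^{-\alpha}, \qquad p'(x_0) = -\lambda^2 e^{-\alpha} \\
\text{tangent:}\quad y(x) &= \lambda e^{-\alpha} - \lambda^2 e^{-\alpha}\,(x - \alpha/\lambda) \\
y(x) = 0 \;&\Longrightarrow\; x - \alpha/\lambda = 1/\lambda \;\Longrightarrow\; x = (\alpha + 1)/\lambda
\end{align*}
```

For example, with $\lambda = 0.05$ and $\alpha = 3$ the tangent touches the curve at $x = 60$ and reaches the axis at $x = 80$.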


Clustering Based on Dissimilarity Increments Criteria

Hierarchical Clustering Algorithm:

- A statistic of the dissimilarity increments within a cluster is maintained and updated during cluster merging
- Clusters are obtained by comparing dissimilarity increments with a dynamic threshold, $\alpha \mu_i$, based on cluster statistics


Clustering Based on Dissimilarity Increments Criteria

Results: Ring-Shaped Clusters

[Figure: single-link method, th=0.49.]

[Figure: dissimilarity increments-based method.]


Clustering Based on Dissimilarity Increments Criteria

Results: 2-D Patterns with Complex Structure

[Figure: dissimilarity increments-based method, $2 < \alpha < 9$.]

[Figure: single-link method, th=1.1.]


Clustering of Contour Images

- The data set is composed of 634 contour images of 15 types of hardware tools: t1 to t15.
- When counting each pose as a distinct sub-class in the object type, we obtain a total of 24 classes.


Clustering of Contour Images

Contour extraction

Processing steps: Contour Extraction -> String Contour Description

- Contour Extraction: based on a thresholding method
- String Contour Description: 8-directional differential chain code
  - the object boundary is sampled at 50 equally spaced points
  - the angle between consecutive segments is quantized in 8 levels


Clustering of Contour Images

[Figure: clusters of tool contours t1 to t5 obtained with the single-link method and with the dissimilarity increments-based method (string-edit distance).]


Clustering Based on Dissimilarity Increments Criteria

Description:

Hierarchical agglomerative algorithm adopting a cluster isolation criterion based on dissimilarity increments

Strength

- Method is not conditioned by a particular dissimilarity measure (examples used Euclidean and string-edit distances)
- Ability to identify arbitrary shaped and sized clusters
- The number of clusters is intrinsically found

Weakness

- Sensitive to in-between points connecting touching clusters


Outline

Partitional Methods

- K-Means
- Spectral Clustering
- EM-based Gaussian Mixture Decomposition

Part 3: Validation of clustering solutions

- Cluster Validity Measures

Part 4: Ensemble Methods

- Evidence Accumulation Clustering


Partitional Methods

K-Means

Minimizes the cost function (sum of squared distances of the objects to the centroid of their cluster):

$$J = \sum_{i=1}^{k} \sum_{x \in C_i} \| x - m_i \|^2$$

where $m_i$ is the centroid of cluster $C_i$.

Algorithm:

Input: k, the number of clusters; data set

1. Randomly select k seed points from the data set, and take them as initial centroids

2. Partition the data into k clusters by assigning each object to the cluster with the nearest centroid

3. Compute the centroids of the clusters of the current partition. The centroid is the center (mean point) of the cluster

4. Go back to step 2; stop when no assignment changes
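The four steps map directly onto a short NumPy routine. This is a minimal sketch, not a production implementation: the function name, the `seed` and `max_iter` parameters, the handling of empty clusters (their centroid is simply left unchanged) and the toy data are all assumptions.

```python
import numpy as np


def kmeans(X, k, seed=0, max_iter=100):
    """Plain K-means; returns (labels, centroids, cost)."""
    rng = np.random.default_rng(seed)
    # Step 1: k distinct data points as initial centroids (fancy indexing copies).
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    labels = None
    for _ in range(max_iter):
        # Step 2: assign each object to the nearest centroid.
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        new_labels = d2.argmin(axis=1)
        # Step 4: stop when no assignment changes.
        if labels is not None and np.array_equal(new_labels, labels):
            break
        labels = new_labels
        # Step 3: recompute each centroid as the mean of its cluster.
        for c in range(k):
            if np.any(labels == c):
                centroids[c] = X[labels == c].mean(axis=0)
    cost = ((X - centroids[labels]) ** 2).sum()   # sum of squared distances
    return labels, centroids, cost


X = np.array([[0, 0], [0, 1], [1, 0],
              [10, 10], [10, 11], [11, 10]], dtype=float)
labels, centroids, cost = kmeans(X, k=2)
print(labels, cost)
```

Because the result depends on the random seed points, it is common to run the routine from several seeds and keep the partition with the lowest cost.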


Partitional Methods: K-Means

Favors compactness

Strength

- Fast algorithm: O(tkn), where t is the number of iterations; normally k, t << n
- Scalability
- Robust to noise

Comment: often terminates at a local optimum.

Weakness

- Imposes spherical-shaped clusters
- Is sensitive to the number of objects in clusters
- Dependence on initialization
- Needs criteria to set the final number of clusters
- Applicable only when the mean is defined (what about categorical data?)

[Figure: K-means clustering of uniform data (k=4).]


Variations of K-Means Method

K-Means (MacQueen, 1967): each cluster is represented by the center of the cluster

A few variants of k-means, which differ in:

- Selection of the initial k means
- Dissimilarity calculations (Mahalanobis distance -> elliptic clusters)
- Strategies to calculate cluster means
- Medoid: each cluster is represented by one of the objects in the cluster

Fuzzy version: Fuzzy K-Means

Handling categorical data: k-modes (Huang, 1998)

- Replacing means of clusters with modes
- Using new dissimilarity measures to deal with categorical objects
- Using a frequency-based method to update modes of clusters


Spectral Clustering

For a given data set, X, Spectral Clustering finds a set of data clusters on the basis of spectral analysis of a similarity graph.

The clustering problem is defined in terms of a complete graph, G, with vertices V = {1, ..., N}, corresponding to the data points in the data set; each edge between two vertices is weighted by the similarity between them.

The weight matrix is also called the affinity matrix or the similarity matrix.

Gaussian kernel:

$$A_{ij} = \exp\left( -\frac{\| x_i - x_j \|^2}{2\sigma^2} \right)$$


Spectral Clustering

By cutting edges of G, we obtain disjoint subgraphs of G as the clusters of X.

The goal of clustering is to organize the data set into disjoint subsets with high intra-cluster similarity and low inter-cluster similarity.

The resulting clusters should be as compact and isolated as possible.


Spectral Clustering

The graph partitioning for data clustering can be interpreted as a minimization problem of an objective function, in which compactness and isolation are quantified by the subset sums of edge weights.

Common objective functions

Ratio cut (Rcut):

$$\mathrm{Rcut}(C_1, \ldots, C_k) := \sum_{l=1}^{k} \frac{\mathrm{cut}(C_l, X \setminus C_l)}{\mathrm{card}\, C_l}$$

Normalised cut (Ncut):

$$\mathrm{Ncut}(C_1, \ldots, C_k) := \sum_{l=1}^{k} \frac{\mathrm{cut}(C_l, X \setminus C_l)}{\mathrm{cut}(C_l, X)}$$

Min-max cut (Mcut):

$$\mathrm{Mcut}(C_1, \ldots, C_k) := \sum_{l=1}^{k} \frac{\mathrm{cut}(C_l, X \setminus C_l)}{\mathrm{cut}(C_l, C_l)}$$

- cut(A, B) is the sum of the edge weights between points $p \in A$ and $q \in B$
- $X \setminus C_l$ is the complement of $C_l \subset X$
- card $C_l$ denotes the number of points in $C_l$
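The three objectives can be evaluated for any candidate partition once an affinity matrix is available. The sketch below assumes a Gaussian-kernel affinity with zero diagonal; the function names, the value of `sigma` and the toy points are hypothetical. Note that Mcut is undefined for a cluster whose internal affinity sum is zero, for example a singleton under this zero-diagonal convention.

```python
import math


def affinity(points, sigma):
    """Gaussian-kernel affinity matrix with A_ii = 0 (an assumed convention)."""
    n = len(points)
    return [[0.0 if i == j else
             math.exp(-math.dist(points[i], points[j]) ** 2 / (2 * sigma ** 2))
             for j in range(n)] for i in range(n)]


def cut(A, S, T):
    """Sum of the edge weights between points p in S and q in T."""
    return sum(A[p][q] for p in S for q in T)


def objectives(A, clusters):
    """Return (Rcut, Ncut, Mcut) for a partition given as lists of indices."""
    everything = [p for c in clusters for p in c]
    rcut = ncut = mcut = 0.0
    for c in clusters:
        rest = [p for p in everything if p not in c]
        between = cut(A, c, rest)
        rcut += between / len(c)
        ncut += between / cut(A, c, everything)
        mcut += between / cut(A, c, c)
    return rcut, ncut, mcut


points = [(0.0, 0.0), (0.1, 0.0), (5.0, 0.0), (5.1, 0.0)]
A = affinity(points, sigma=1.0)
good = objectives(A, [[0, 1], [2, 3]])   # respects the two tight groups
bad = objectives(A, [[0, 2], [1, 3]])    # splits both groups
print(good)
print(bad)
```

All three values are much smaller for the partition that keeps nearby points together, which is what minimizing these objectives is meant to reward.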


Spectral Clustering

The solution of the minimization problem of any of the previous objective functions is obtained from the matrix of the first k eigenvectors of a matrix derived from the affinity matrix (Laplacian matrix).

The eigenvectors for Ncut and Mcut are identical, and are obtained from the symmetrical Laplacian

$$L = L_{sym} := D^{-1/2} A D^{-1/2}$$

where D is a diagonal matrix whose i-th diagonal entry is the sum of the i-th row of A.

Another common choice is $L = L_{rw} := D^{-1} (D - A)$.

Distinct algorithms differ in the way they produce and use the eigenvectors, and in how they derive clusters from them:

- Some use each eigenvector one at a time
- Others use the top k eigenvectors simultaneously

Closely related to spectral graph partitioning, in which the second eigenvector of a graph's Laplacian is used to define a semi-optimal cut; the second eigenvector solves a relaxation of an NP-hard discrete graph partitioning problem, giving an approximation to the optimal cut.


Spectral Clustering (Ng et al., 2001)

Maps the feature space into a new space, Y, based on the eigenvectors of a matrix derived from an affinity matrix associated with the data set.

The data partition is obtained by applying the K-means algorithm on the new space.

$$A_{ij} = \exp\left( -\frac{\| x_i - x_j \|^2}{2\sigma^2} \right)$$

[Figure: original feature space and eigenvector feature space.]

A. Y. Ng, M. I. Jordan and Y. Weiss, "On Spectral Clustering: Analysis and an Algorithm", NIPS 2001.


Algorithm:
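A compact sketch of the procedure, following the description on the preceding slide (affinity matrix, normalized matrix $D^{-1/2} A D^{-1/2}$, k leading eigenvectors, row normalization, K-means in the new space). Several details are assumptions made for this illustration: the zero diagonal of A, the deterministic farthest-point initialization of K-means, the fixed number of K-means iterations, and all function and parameter names.

```python
import numpy as np


def spectral_clustering(X, k, sigma, n_iter=50):
    """Cluster the rows of X into k groups; returns an array of labels."""
    # Gaussian-kernel affinity matrix with zero diagonal.
    sq = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    A = np.exp(-sq / (2.0 * sigma ** 2))
    np.fill_diagonal(A, 0.0)
    # L = D^{-1/2} A D^{-1/2}, with D the diagonal matrix of row sums of A.
    d = A.sum(axis=1)
    L = A / np.sqrt(np.outer(d, d))
    # eigh returns eigenvalues in ascending order: keep the k largest.
    _, eigvecs = np.linalg.eigh(L)
    V = eigvecs[:, -k:]
    # New space Y: rows of V normalized to unit length.
    Y = V / np.linalg.norm(V, axis=1, keepdims=True)
    # K-means on the rows of Y, started from mutually distant rows.
    centers = [Y[0]]
    for _ in range(1, k):
        nearest = np.min([((Y - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(Y[nearest.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        d2 = ((Y[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for c in range(k):
            if np.any(labels == c):
                centers[c] = Y[labels == c].mean(axis=0)
    return labels


X = np.array([[0, 0], [0, 1], [1, 0],
              [10, 10], [10, 11], [11, 10]], dtype=float)
labels = spectral_clustering(X, k=2, sigma=1.0)
print(labels)
```

The sketch assumes every point has a non-negligible affinity to at least one other point; with a very small `sigma` the row sums can underflow to zero and the normalization breaks down.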


Results strongly depend on parameter values: k and $\sigma$

[Figures: spectral clustering results for K=2 with $\sigma$=0.1 and $\sigma$=0.4, and for K=3 with $\sigma$=0.1 and $\sigma$=0.45.]


Spectral Clustering

Selection of parameter values:

- MSE ($MSE_Y$)
- Eigengap
- Rcut

[Equations: definitions of the MSE, eigengap and Rcut criteria.]


Selection of Parameters: Global Results on selecting $\sigma$ and K

- None of the studied methods is suitable for the automatic selection of the spectral clustering parameters
- A majority voting decision did not significantly improve the results

[Figure: percentage of correct classification.]


Spectral Clustering

Strength

- Detects arbitrary-shaped clusters
- By using an adequate similarity measure between patterns, can be applied to all types of data

Weakness

- Computationally heavy
- Needs criteria to set the final number of clusters and the scaling factor $\sigma$


Model-Based Clustering: Finite Mixtures

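k random sources, with probability density functions $f_i(x)$, $i = 1, \dots, k$

Choose a source at random (source $i$ with probability $\alpha_i$); the random variable $x$ is then drawn from $f_i(x)$

Conditional: $f(x \mid \text{source } i) = f_i(x)$

Joint: $f(x \text{ and source } i) = \alpha_i f_i(x)$

Unconditional: $f(x) = \sum_{\text{all sources}} f(x \text{ and source } i) = \sum_{i=1}^{k} \alpha_i f_i(x)$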
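The two-stage generative process above (choose a source at random, then draw from its density) can be sketched as follows. The helper name `sample_mixture`, the two univariate Gaussian sources and the mixing probabilities 0.3 and 0.7 are hypothetical values chosen only for illustration.

```python
import numpy as np

def sample_mixture(alphas, samplers, n, rng):
    """Draw n samples: pick source i with probability alphas[i], then draw from it."""
    alphas = np.asarray(alphas, dtype=float)
    sources = rng.choice(len(alphas), size=n, p=alphas)
    x = np.array([samplers[i](rng) for i in sources])
    return x, sources

rng = np.random.default_rng(0)
alphas = [0.3, 0.7]                      # hypothetical mixing probabilities
samplers = [
    lambda r: r.normal(-2.0, 0.5),       # hypothetical source 1
    lambda r: r.normal(3.0, 1.0),        # hypothetical source 2
]
x, sources = sample_mixture(alphas, samplers, 1000, rng)
```

In clustering, only `x` is observed; the array `sources` plays the role of the unknown cluster labels.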


Model-Based Clustering: Finite Mixtures

each component models one cluster

clustering = mixture fitting

$$f(x \mid \Theta) = \sum_{i=1}^{k} \alpha_i f(x \mid \theta_i)$$

[Figure: three component densities $f_1(x)$, $f_2(x)$, $f_3(x)$]


Gaussian Mixture Decomposition

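Mixture model: $f(x \mid \Theta) = \sum_{i=1}^{k} \alpha_i f(x \mid \theta_i)$

Mixing probabilities: $\alpha_i \ge 0$ and $\sum_{i=1}^{k} \alpha_i = 1$

Component densities: $f(x \mid \theta_i)$, Gaussian

Arbitrary covariances: $f(x \mid \theta_i) = N(x \mid \mu_i, C_i)$, with $\Theta = \{\mu_1, \mu_2, \dots, \mu_k, C_1, C_2, \dots, C_k, \alpha_1, \alpha_2, \dots, \alpha_{k-1}\}$

Common covariance: $f(x \mid \theta_i) = N(x \mid \mu_i, C)$, with $\Theta = \{\mu_1, \mu_2, \dots, \mu_k, C, \alpha_1, \alpha_2, \dots, \alpha_{k-1}\}$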
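As a small arithmetic check on the two parameter sets, the sketch below counts the free parameters for d-dimensional data. It assumes each mean contributes d values, each symmetric covariance matrix d(d+1)/2 values, and the mixing probabilities k-1 values (the last one is fixed by the sum-to-one constraint). The function name is hypothetical.

```python
def n_free_parameters(k, d, common_covariance=False):
    """Number of free parameters of a k-component Gaussian mixture in d dimensions."""
    n_means = k * d
    n_cov_one = d * (d + 1) // 2                      # one symmetric d x d matrix
    n_cov = n_cov_one if common_covariance else k * n_cov_one
    n_mixing = k - 1                                  # mixing probabilities sum to 1
    return n_means + n_cov + n_mixing

# Example: k = 3 components in d = 2 dimensions.
print(n_free_parameters(3, 2))                          # arbitrary covariances
print(n_free_parameters(3, 2, common_covariance=True))  # common covariance
```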


Mixture Model Fitting

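n independent observations $x = \{x^{(1)}, x^{(2)}, \dots, x^{(n)}\}$

Mixture density model: $f(x \mid \Theta) = \sum_{i=1}^{k} \alpha_i f(x \mid \theta_i)$

Estimate $\Theta$ that maximizes the (log-)likelihood (ML estimate of $\Theta$):

$$\hat{\Theta} = \arg\max_{\Theta} L(x, \Theta)$$

$$L(x, \Theta) = \log \prod_{j=1}^{n} f(x^{(j)} \mid \Theta) = \sum_{j=1}^{n} \log \underbrace{\sum_{i=1}^{k} \alpha_i f(x^{(j)} \mid \theta_i)}_{\text{mixture}}$$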
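For Gaussian components, the log-likelihood above can be evaluated as in the following sketch. The function names are hypothetical, and the log-sum-exp step is an added numerical safeguard rather than something stated on the slide.

```python
import numpy as np

def log_gaussian(X, mu, C):
    """log N(x | mu, C) for each row of X, where X has shape (n, d)."""
    d = X.shape[1]
    diff = X - mu
    _, logdet = np.linalg.slogdet(C)
    maha = np.sum(diff @ np.linalg.inv(C) * diff, axis=1)
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + maha)

def mixture_log_likelihood(X, alphas, mus, Cs):
    """L(x, Theta) = sum_j log sum_i alpha_i N(x_j | mu_i, C_i)."""
    log_terms = np.stack(
        [np.log(a) + log_gaussian(X, m, C) for a, m, C in zip(alphas, mus, Cs)],
        axis=1,
    )                                               # shape (n, k)
    top = log_terms.max(axis=1, keepdims=True)      # log-sum-exp for stability
    per_point = top[:, 0] + np.log(np.exp(log_terms - top).sum(axis=1))
    return float(per_point.sum())
```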


Gaussian Mixture Model Fitting

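Problem: the likelihood function is unbounded as $\det(C_i) \to 0$

- There is no global maximum
- Unusual goal: a good local maximum

Example: a 2-component Gaussian mixture

$$f(x \mid \mu_1, \mu_2, \sigma^2, \alpha) = \frac{\alpha}{\sqrt{2\pi\sigma^2}}\, e^{-\frac{(x-\mu_1)^2}{2\sigma^2}} + \frac{1-\alpha}{\sqrt{2\pi}}\, e^{-\frac{(x-\mu_2)^2}{2}}$$

Take some data points $\{x_1, x_2, \dots, x_n\}$ and set $\mu_1 = x_1$:

$$L(x, \Theta) = \log\left(\frac{\alpha}{\sqrt{2\pi\sigma^2}} + \frac{1-\alpha}{\sqrt{2\pi}}\, e^{-\frac{(x_1-\mu_2)^2}{2}}\right) + \sum_{j=2}^{n} \log(\dots) \to \infty \quad \text{as } \sigma^2 \to 0$$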
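The divergence in this example can be observed numerically. The sketch below evaluates the log-likelihood of the 2-component mixture with the first mean pinned to the first data point while the variance of that component shrinks. The data values, the choices mu2 = 1.0 and alpha = 0.5, and the function name are hypothetical.

```python
import numpy as np

def two_component_loglik(x, mu1, mu2, var, alpha):
    """Log-likelihood of alpha*N(mu1, var) + (1 - alpha)*N(mu2, 1) on 1-D data x."""
    c1 = alpha / np.sqrt(2.0 * np.pi * var) * np.exp(-(x - mu1) ** 2 / (2.0 * var))
    c2 = (1.0 - alpha) / np.sqrt(2.0 * np.pi) * np.exp(-(x - mu2) ** 2 / 2.0)
    return float(np.sum(np.log(c1 + c2)))

x = np.array([0.3, -1.2, 0.8, 2.1, 1.5])   # hypothetical data points
for var in [1e-2, 1e-4, 1e-6, 1e-8]:
    # mu1 = x[0]: the first component collapses onto a single data point.
    print(var, two_component_loglik(x, mu1=x[0], mu2=1.0, var=var, alpha=0.5))
```

The term for the first point grows like -0.5 log(var), while the remaining terms stay bounded because the second component still covers those points.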



Mixture Model Fitting

ML estimate has no closed-form solution

Standard alternative: expectation-maximization (EM) algorithm

Missing data problem:

- Observed data: $x = \{x^{(1)}, x^{(2)}, \dots, x^{(n)}\}$
- Missing data: $z = \{z^{(1)}, z^{(2)}, \dots, z^{(n)}\}$, the missing labels ("colors")

$$z^{(j)} = [z_1^{(j)}, z_2^{(j)}, \dots, z_k^{(j)}]^T = [0 \dots 0\ 1\ 0 \dots 0]^T$$

with the 1 at position $i$ when $x^{(j)}$ was generated by component $i$

Complete log-likelihood function:

$$L_c(x, z, \Theta) = \sum_{j=1}^{n} \sum_{i=1}^{k} z_i^{(j)} \log\left[\alpha_i f(x^{(j)} \mid \theta_i)\right] = \sum_{j=1}^{n} \log f(x^{(j)}, z^{(j)} \mid \Theta)$$


The EM Algorithm

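The E-step: compute the expected value of $L_c(x, z, \Theta)$:

$$E[L_c(x, z, \Theta) \mid x, \hat{\Theta}^{(t)}] \equiv Q(\Theta, \hat{\Theta}^{(t)})$$

The M-step: update parameter estimates:

$$\hat{\Theta}^{(t+1)} = \arg\max_{\Theta} Q(\Theta, \hat{\Theta}^{(t)})$$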


EM-Algorithm for Mixture of Gaussian

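Iterative procedure: $\hat{\Theta}^{(0)}, \hat{\Theta}^{(1)}, \dots, \hat{\Theta}^{(t)}, \hat{\Theta}^{(t+1)}, \dots$

The E-step:

$$w_i^{(j,t)} = \frac{\hat{\alpha}_i^{(t)} f(x^{(j)} \mid \hat{\theta}_i^{(t)})}{\sum_{m=1}^{k} \hat{\alpha}_m^{(t)} f(x^{(j)} \mid \hat{\theta}_m^{(t)})}$$

$w_i^{(j,t)}$: estimate, at iteration t, of the probability that $x^{(j)}$ was produced by component i

The M-step:

$$\hat{\alpha}_i^{(t+1)} = \frac{1}{n} \sum_{j=1}^{n} w_i^{(j,t)}$$

$$\hat{\mu}_i^{(t+1)} = \frac{\sum_{j=1}^{n} w_i^{(j,t)} x^{(j)}}{\sum_{j=1}^{n} w_i^{(j,t)}}$$

$$\hat{C}_i^{(t+1)} = \frac{\sum_{j=1}^{n} w_i^{(j,t)} (x^{(j)} - \hat{\mu}_i^{(t+1)})(x^{(j)} - \hat{\mu}_i^{(t+1)})^T}{\sum_{j=1}^{n} w_i^{(j,t)}}$$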
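The E-step and M-step updates above translate almost line by line into NumPy. This is a minimal sketch for the arbitrary-covariance case: the function names, the fixed number of iterations, and the small `reg` term added to each covariance (a guard against det(C_i) approaching 0) are assumptions, not part of the slides.

```python
import numpy as np

def gaussian_pdf(X, mu, C):
    """N(x | mu, C) evaluated at each row of X (shape (n, d))."""
    d = X.shape[1]
    diff = X - mu
    maha = np.sum(diff @ np.linalg.inv(C) * diff, axis=1)
    norm = np.sqrt((2.0 * np.pi) ** d * np.linalg.det(C))
    return np.exp(-0.5 * maha) / norm

def em_gmm(X, mus, Cs, alphas, n_iter=100, reg=1e-6):
    """EM for a Gaussian mixture with arbitrary covariances (illustrative sketch)."""
    n, d = X.shape
    mus = np.array(mus, dtype=float)
    Cs = np.array(Cs, dtype=float)
    alphas = np.array(alphas, dtype=float)
    k = len(alphas)
    for _ in range(n_iter):
        # E-step: w[j, i] = alpha_i f(x_j | theta_i) / sum_m alpha_m f(x_j | theta_m)
        w = np.stack(
            [alphas[i] * gaussian_pdf(X, mus[i], Cs[i]) for i in range(k)], axis=1
        )
        w /= w.sum(axis=1, keepdims=True)
        # M-step: closed-form updates of alpha_i, mu_i and C_i
        Nk = w.sum(axis=0)
        alphas = Nk / n
        mus = (w.T @ X) / Nk[:, None]
        for i in range(k):
            diff = X - mus[i]
            # reg * I is an added safeguard (assumption), not in the update rule
            Cs[i] = (w[:, i, None] * diff).T @ diff / Nk[i] + reg * np.eye(d)
    return alphas, mus, Cs, w
```

The returned `w` holds the responsibilities from the last E-step; taking `w.argmax(axis=1)` gives a hard cluster assignment for each pattern.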


Mixture Gaussian Decomposition: Model Selection

How many components?

- The maximized likelihood never decreases when k increases
- Usually: $\hat{k} = \arg\min_k \{ C(\hat{\Theta}^{(k)}),\ k = k_{\min}, k_{\min}+1, \dots, k_{\max} \}$, with $C(\hat{\Theta}^{(k)}) = -L(x, \hat{\Theta}^{(k)}) + P(\hat{\Theta}^{(k)})$

Criteria in this category:

- Minimum description length (MDL), Rissanen and Ristad, 1992
- Akaike's information criterion (AIC), Windham and Cutler, 1992
- Schwarz's Bayesian inference criterion (BIC), Fraley and Raftery, 1998

Resampling-based techniques:

- Bootstrap for clustering, Jain and Moreau, 1987
- Bootstrap for Gaussian mixtures, McLachlan, 1987
- Cross validation, Smyth, 1998


Mixture Gaussian Decomposition: Model Selection

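Given $\Theta^{(k)}$, shortest code-length for x (Shannon's):

$$L(x \mid \Theta^{(k)}) = -\log f(x \mid \Theta^{(k)})$$

Total code-length (two-part code), where $L(\Theta^{(k)})$ is the parameter code-length:

$$L(x, \Theta^{(k)}) = -\log f(x \mid \Theta^{(k)}) + L(\Theta^{(k)})$$

MDL criterion:

$$\hat{\Theta}^{(k)} = \arg\min_{\Theta^{(k)}} \left\{ -\log f(x \mid \Theta^{(k)}) + L(\Theta^{(k)}) \right\}$$

Parameter code length: $L(\text{each component of } \Theta^{(k)}) = \frac{1}{2}\log(n')$, where $n'$ is the amount of data from which the parameter is estimated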
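A two-part code length of this kind is simple to evaluate once each candidate model has been fitted. The sketch below assumes every parameter is charged (1/2) log(n') with the same n', uses natural logarithms, and takes the maximized log-likelihoods as given; the function names and the numbers in the example are made up purely to show the mechanics.

```python
import math

def two_part_code_length(log_lik, n_params, n_prime):
    """-log f(x | Theta) plus (1/2) log(n') for each of the n_params parameters."""
    return -log_lik + 0.5 * n_params * math.log(n_prime)

def select_k(log_liks, n_params_per_k, n_prime):
    """Return the k with the smallest code length, plus all code lengths."""
    costs = {
        k: two_part_code_length(ll, n_params_per_k[k], n_prime)
        for k, ll in log_liks.items()
    }
    return min(costs, key=costs.get), costs

# Hypothetical maximized log-likelihoods for k = 1, 2, 3 on n = 200 points.
log_liks = {1: -500.0, 2: -420.0, 3: -418.0}
n_params = {1: 2, 2: 5, 3: 8}
best_k, costs = select_k(log_liks, n_params, n_prime=200)
```

The likelihood alone would favour k = 3 here; the parameter code length is what stops the count from growing for a negligible gain in fit.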


Mixture Gaussian Decomposition: Model Selection

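Classical MDL: $n' = n$

$$\hat{\Theta}^{(k)} = \arg\min_{\Theta^{(k)}} \left\{ -\log f(x \mid \Theta^{(k)}) + \frac{k(N_p+1)}{2}\log(n) \right\}$$

Mixtures MDL (MMDL) (Figueiredo, 2002):

$$\hat{\Theta}^{(k)} = \arg\min_{\Theta^{(k)}} \left\{ -\log f(x \mid \Theta^{(k)}) + \frac{k(N_p+1)}{2}\log(n) + \frac{N_p}{2}\sum_{m=1}^{k}\log(\alpha_m) \right\}$$

$N_p$ is the number of parameters of each component:

- Gaussian, arbitrary covariances: $N_p = d + d(d+1)/2$
- Gaussian, common covariance: $N_p = d$

Using EM and redefining the M-step:

$$\hat{\alpha}_i^{(t+1)} = \frac{\left(\sum_{j=1}^{n} w_i^{(j,t)} - \frac{N_p}{2}\right)_+}{\sum_{m=1}^{k}\left(\sum_{j=1}^{n} w_m^{(j,t)} - \frac{N_p}{2}\right)_+}, \qquad (y)_+ = \max\{0, y\}$$

This M-step may annihilate components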

M. Figueiredo and A. K. Jain, Unsupervised Learning of Finite Mixture Models, IEEE TPAMI, 2002
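A sketch of how a redefined M-step can annihilate components, assuming the mixing-probability update takes the form alpha_i proportional to max(0, sum_j w_i^(j,t) - N_p/2), renormalized over the components. That form, the function name and the toy responsibilities below are assumptions for illustration and should be checked against the cited paper.

```python
import numpy as np

def annihilating_alpha_update(w, n_p):
    """alpha_i = max(0, sum_j w[j, i] - n_p / 2), renormalized to sum to 1.

    w has shape (n, k): responsibilities from the E-step.
    n_p is the number of parameters of each component.
    """
    raw = np.maximum(0.0, w.sum(axis=0) - n_p / 2.0)
    return raw / raw.sum()

# Toy responsibilities: 10 points, 3 components, the third weakly supported.
w = np.tile([0.6, 0.35, 0.05], (10, 1))
new_alphas = annihilating_alpha_update(w, n_p=2)
```

A component whose total responsibility falls below N_p/2 receives a mixing probability of exactly zero and can be dropped from the model, which is how the number of components shrinks during fitting.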


Gaussian Mixture Decomposition EM MMDL

Examples


Gaussian Mixture Decomposition

Strength

- Model-based approach
- Good for Gaussian data
- Handles touching clusters

Weakness

- Unable to detect arbitrary-shaped clusters
- Dependence on initialization


Gaussian Mixture Decomposition

EM is a local (greedy) algorithm: the likelihood never decreases from one iteration to the next, so the result depends on the initialization.

[Figure: example runs, 74 iterations and 270 iterations]


References

A. K. Jain and R. C. Dubes, Algorithms for Clustering Data, Prentice Hall, 1988.

A. K. Jain, M. N. Murty and P. J. Flynn, Data Clustering: A Review, ACM Computing Surveys, vol. 31, no. 3, pp. 264-323, 1999.

J. Han and M. Kamber, Data Mining: Concepts and Techniques, Morgan Kaufmann Publishers, 2001.

A. Y. Ng, M. I. Jordan and Y. Weiss, On Spectral Clustering: Analysis and an Algorithm, in Advances in Neural Information Processing Systems 14, T. G. Dietterich, S. Becker and Z. Ghahramani (eds.), MIT Press, 2002.

M. Figueiredo and A. K. Jain, Unsupervised Learning of Finite Mixture Models, IEEE TPAMI, 2002.

A. L. N. Fred and J. M. N. Leitão, A New Cluster Isolation Criterion Based on Dissimilarity Increments, IEEE Trans. on Pattern Analysis and Machine Intelligence, vol. 25, no. 8, 2003.

Unsupervised Learning -- Ana Fred

84 From Single Clustering to Ensemble Methods - April 2009

42

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5814)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1092)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (844)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (897)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (540)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (348)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (822)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Expansions Booklet AIESEC USDocument17 pagesExpansions Booklet AIESEC USNasr CheaibNo ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- ProTec Catalog 4.2019Document4 pagesProTec Catalog 4.2019Nasr CheaibNo ratings yet

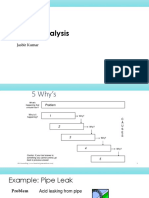

- 5 Why Analysis: Jasbir KumarDocument8 pages5 Why Analysis: Jasbir KumarNasr CheaibNo ratings yet

- HW 8 Fall 2014Document1 pageHW 8 Fall 2014Nasr CheaibNo ratings yet

- IE 478 Homewwork 2Document2 pagesIE 478 Homewwork 2Nasr CheaibNo ratings yet

- HW 8 FALL 2014 SolutionsDocument3 pagesHW 8 FALL 2014 SolutionsNasr CheaibNo ratings yet

- Home Work Set 3: EOY (T) Description Amount ($)Document3 pagesHome Work Set 3: EOY (T) Description Amount ($)Nasr CheaibNo ratings yet

- Selection of Material Shape and Manufacturing Process For A Connecting RodDocument12 pagesSelection of Material Shape and Manufacturing Process For A Connecting RodNasr Cheaib0% (2)

- Solution To Problems From HW Set # 6Document4 pagesSolution To Problems From HW Set # 6Nasr CheaibNo ratings yet

- HW 1 Fall 2014Document1 pageHW 1 Fall 2014Nasr CheaibNo ratings yet

- Homework Set 2BDocument1 pageHomework Set 2BNasr CheaibNo ratings yet

- HW 1 Solution Fall 2014Document3 pagesHW 1 Solution Fall 2014Nasr CheaibNo ratings yet

- Respiratory System: - Chapter 23Document25 pagesRespiratory System: - Chapter 23Nasr CheaibNo ratings yet

- IE516 10/12 Markov Chain: Thursday, October 12, 2017 9:06 AMDocument6 pagesIE516 10/12 Markov Chain: Thursday, October 12, 2017 9:06 AMNasr CheaibNo ratings yet

- 2005, MATH3801 Assignment 4 With SolutionsDocument3 pages2005, MATH3801 Assignment 4 With SolutionsNasr CheaibNo ratings yet

- IE516 10/17 DTMC Notes: Tuesday, October 17, 2017 9:05 AMDocument8 pagesIE516 10/17 DTMC Notes: Tuesday, October 17, 2017 9:05 AMNasr CheaibNo ratings yet

- IE 519 Dynamic ProgrammingDocument25 pagesIE 519 Dynamic ProgrammingNasr CheaibNo ratings yet

- Module 3 Lesson 2 Alloy Forging Ranges Typical Composition, % Temperature, °FDocument2 pagesModule 3 Lesson 2 Alloy Forging Ranges Typical Composition, % Temperature, °FNasr CheaibNo ratings yet

- Ie 330 HW 4Document2 pagesIe 330 HW 4Nasr CheaibNo ratings yet

- Renal PhysiologyDocument19 pagesRenal PhysiologyNasr Cheaib100% (2)

- Song ListDocument22 pagesSong ListNasr CheaibNo ratings yet

- Basic Lab Equipment Handling of Chemicals and Waste: Chem 211 Tools - 1Document7 pagesBasic Lab Equipment Handling of Chemicals and Waste: Chem 211 Tools - 1Nasr CheaibNo ratings yet

- Mathematics 1 QB - PART - ADocument6 pagesMathematics 1 QB - PART - ADivya SoundarajanNo ratings yet

- AI Unit-5 NotesDocument25 pagesAI Unit-5 NotesRajeev SahaniNo ratings yet

- 01Document12 pages01Junaid Ahmad100% (1)

- CT5123 LectureNotesThe Finite Element Method An Introduction PDFDocument125 pagesCT5123 LectureNotesThe Finite Element Method An Introduction PDFarjunNo ratings yet

- Petroleum EnggDocument102 pagesPetroleum EnggHameed Bin AhmadNo ratings yet

- Chapter 11 DataReductionDocument33 pagesChapter 11 DataReductionYuhong WangNo ratings yet

- Sdre LQR AreDocument40 pagesSdre LQR AreChao-yi HsiehNo ratings yet

- ANSYS Model of A Cylindrical Fused Silica Fibre-01Document15 pagesANSYS Model of A Cylindrical Fused Silica Fibre-01lamia97No ratings yet

- Linear Regression Analysis HUDM 5122: Introduction To R Johnny WangDocument17 pagesLinear Regression Analysis HUDM 5122: Introduction To R Johnny WangKatherine GreenbergNo ratings yet

- Frost Multidimensional Perfectionism Scale RevisitedDocument28 pagesFrost Multidimensional Perfectionism Scale RevisitedDebora LingaNo ratings yet

- EE704 Control Systems 2 (2006 Scheme)Document56 pagesEE704 Control Systems 2 (2006 Scheme)Anith KrishnanNo ratings yet

- Wiggers S.L., Pedersen P. Structural Stability and Vibration. An Integrated Introduction by Analytical and Numerical Methods.2018. 160pDocument169 pagesWiggers S.L., Pedersen P. Structural Stability and Vibration. An Integrated Introduction by Analytical and Numerical Methods.2018. 160ptrueverifieduser100% (2)

- Stability Analysis For A Consensus Control With Communication Time delays-KNFN-ver6Document6 pagesStability Analysis For A Consensus Control With Communication Time delays-KNFN-ver6Raheel AnjumNo ratings yet

- Mathematics-II MATH F112 Dr. Balram Dubey: BITS PilaniDocument27 pagesMathematics-II MATH F112 Dr. Balram Dubey: BITS PilaniRahul SaxenaNo ratings yet

- Sturm-Liouville Boundary Value ProblemsDocument7 pagesSturm-Liouville Boundary Value ProblemsmahmuthuysuzNo ratings yet

- Howto Octave C++Document14 pagesHowto Octave C++Simu66No ratings yet

- Syllabus (Linear System Theory) 2019 SPRINGDocument3 pagesSyllabus (Linear System Theory) 2019 SPRINGKavi Ya100% (1)

- European Journal of Operational Research Paper FormatDocument5 pagesEuropean Journal of Operational Research Paper Formategxnjw0kNo ratings yet

- Levida Besir, Aldea Anamaria (T)Document18 pagesLevida Besir, Aldea Anamaria (T)Mihai AlbuNo ratings yet

- B.SC Computer SCDocument62 pagesB.SC Computer SCAnshu KumarNo ratings yet

- Chapter 08 - Linear TransformationsDocument35 pagesChapter 08 - Linear TransformationsTsiry TsilavoNo ratings yet

- Optimal Subspaces With PCADocument11 pagesOptimal Subspaces With PCAAnonymous ioD6RbNo ratings yet

- Assessing The Digital Transformation Maturity of Motherboard Industry: A Fuzzy AHP ApproachDocument15 pagesAssessing The Digital Transformation Maturity of Motherboard Industry: A Fuzzy AHP ApproachBOHR International Journal of Finance and Market Research (BIJFMR)No ratings yet

- Paper: Iit-Jam 2010Document6 pagesPaper: Iit-Jam 2010Mr MNo ratings yet

- Syllabus of Mathematical Economics 1Document12 pagesSyllabus of Mathematical Economics 1Thư NguyễnNo ratings yet

- Small Signal Analysis Tool: ToolsDocument4 pagesSmall Signal Analysis Tool: ToolsSudhir RavipudiNo ratings yet

- Handout Numerical AnalysisDocument3 pagesHandout Numerical AnalysisBhavesh ChauhanNo ratings yet

- (Ebook - PDF - Mathematics) - Abstract AlgebraDocument113 pages(Ebook - PDF - Mathematics) - Abstract Algebrambardak100% (2)

- CS Curriculam With SyllabusDocument46 pagesCS Curriculam With Syllabusprabu061No ratings yet

- Linear Algebra and Multivariable CalculusDocument3 pagesLinear Algebra and Multivariable Calculusj73774352No ratings yet