Professional Documents

Culture Documents

Swam Report PDF

Swam Report PDF

Uploaded by

Sravan KumarOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Swam Report PDF

Swam Report PDF

Uploaded by

Sravan KumarCopyright:

Available Formats

SWARM INTELLIGENCE

1.2 BIOLOGICAL BASIS AND ARTIFICIAL LIFE

Researchers try to examine how collections of animals, such as flocks, herds

and schools, move in a way that appears to be orchestrated. A flock of birds moves

like a well choreographed dance troupe. They veer to the left in unison, and then

suddenly they may all dart to the right and swoop down toward the ground. How can

they coordinate their actions so well? In 1987, Reynolds created a boid model, which

is a distributed behavioral model, to simulate on a computer the motion of a flock of

birds. Each boid is implemented as an independent actor that navigates according to

its own perception of the dynamic environment. A boid must observe the following

rules. First, the avoidance rule" says that a boid must move away from boids that are

too close, so as to reduce the chance of in-air collisions. Second, the copy rule" says

a boid must go in the general direction that the flock is moving by averaging the other

boids' velocities and directions. Third, the center rule" says that a boid should

minimize exposure to the flock's exterior by moving toward the perceived center of

the flock. Flake added a fourth rule, view," that indicates that a boid should move

laterally away from any boid the blocks its view. This boid model seems reasonable if

we consider it from another point of view, that of it acting according to attraction and

repulsion between neighbours in a flock. The repulsion relationship results in the

avoidance of collisions and attraction makes the flock keep shape, i.e., copying

movements of neighbours can be seen as a kind of attraction. The center rule plays a

role in both attraction and repulsion. The swarm behaviour of the simulated flock is

the result of the dense interaction of the relatively simple behaviours of the individual

boids. To summarize, the flock is more than a set of birds; the sum of the actions

results in coherent behaviour. One of the swarm-based robotic implementations of

cooperative transport is inspired by cooperative prey retrieval in social insects. A

single ant finds a prey item which it cannot move alone. The ant tells this to its nest

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

SWARM INTELLIGENCE

mate by direct contact or trail-laying. Then a group of ants collectively carries the

large prey back. Although this scenario seems to be well understood in biology, the

mechanisms underlying cooperative transport remain unclear. Roboticists have

attempted to model this cooperative transport. For instance, Kube and Zhang

introduce a simulation model including stagnation recovery with the method of task

modeling. The collective behavior of their system appears to be very similar to that of

real ants.

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

SWARM INTELLIGENCE

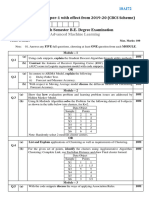

Table 2.1: Advantages of Swarm Systems

There are certain disadvantages:

A. Collective behavior cannot be inferred from individual agent behavior. This

implies that observing single agents will not necessarily allow swarm-defeating

behavior to be chosen. (This can be viewed as an advantage too from an aggressive

point of view).

B. Individual behavior looks like noise as action choice is stochastic.

C. Designing swarm-based systems is hard. There are almost no analytical

mechanisms for design.

D. Parameters can have a dramatic effect on the emergence (or not) of collective

behavior.

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

SWARM INTELLIGENCE

Table 2.2: Disadvantages of Swarm systems

2.2.4 CREATING SWARMING SYSTEMS

A swarm-based system can be generated using the following principles:

1. Agents are independent, they are autonomous. They are not simply functions as in

the case of a conventional object oriented system.

2. Agents should be small, with simple behaviors. They should be situated and

capable of dealing with noise. In fact, noise is a desirable characteristic.

3. Decentralized do not rely on global information. This makes things a lot more

reliable.

4. Agents should be behaviorally diverse typically stochastic.

5. Allow information to leak out of the system; i.e. introduce disorder at some rate.

6. Agents must share information locally is preferable.

7. Planning and execution occur concurrently the system is reactive.

The principles outlined above come from Parunak . More recently, the

importance of gradient creation and maintenance has been stressed and that digital

pheromones can be made to react in the environment, thereby creating new signals of

use to other swarm agents.

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

SWARM INTELLIGENCE

Figure 2.1: Agent Environment Interaction

The above figure summarizes the interactions between agent and environment.

Agent state along with environment state drives agent dynamics; i.e. agent action

selection. Agent action selection changes environment state through the creation or

modification of signals. Environment state is used as input to environment dynamics.

The dynamics of the environment causes changes to occur in environment state. What

is important in the above figure is that agent state is hidden only the agent has

access to it. Environment state is visible to the agent but has to be stored by the agent

if it is to be reused at some later point in time when the agent has (presumably)

moved to a different location.

2.2.5 TOOLS FOR INVESTIGATING SWARM SYSTEMS

As mentioned in a previous section, predicting the emergent behavior of

swarm systems based upon the behavior of individual agents is generally not

analytically tractable. Consequently, agent-based simulation is used to investigate the

properties of these systems. There are two tools useful for such investigations.

2.2.5.1 NETLOGO

NetLogo is a simple agent simulation environment based upon StarLogo, an

environment by Resnick and described in his book entitled, Turtles, Termites and

Traffic Jams. Users program using agents and patches (the environment). In

NetLogo, the environment has active properties and is ideal in its support of

stigmergy as agents can easily modify or sense information of the local patch or

patches within some neighborhood. Unlike conventional programming languages, the

programmer does not have control over agent execution and cannot assume

uninterrupted execution of agent behavior. A fairly sophisticated user interface is

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

SWARM INTELLIGENCE

provided and new interface components can be introduced using a drag-and-drop

mechanism. Interaction with model variables is easily achieved through form-based

interfaces. The user codes in NetLogos own language, which is simple and type-free

(i.e. dynamically bound).

2.2.5.2 REPAST

Repast is a more sophisticated Java-based simulation environment that forces

the developer to provide Java classes in order to create an application. The Recursive

Porous Agent Simulation Toolkit (Repast) is one of several agent modeling toolkits

that are available. Repast is differentiated from Swarm since Repast has multiple pure

implementations in several languages and built-in adaptive features such as genetic

algorithms and regression. Repast is at the moment the most suitable simulation

framework for the applied modeling of social interventions. Of particular interest is

the built-in support for genetic algorithms (which can be used to evolve controllers

for robot swarms, for example) and sophisticated modeling neighbourhoods. Repast is

widely used for social simulation and models in crowd dynamics, economics and

policy making among others have been constructed.

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

SWARM INTELLIGENCE

3. APPLICATION OF SWARM INTELLIGENCE

3.1 ANT COLONY OPTIMIZATION

In nature, ants usually wander randomly, and upon finding food return to their

nest while laying down pheromone trails. If other ants find such a path (pheromone

trail), they are likely not to keep travelling at random, but to instead follow the trail,

returning and reinforcing it if they eventually find food. However, as time passes, the

pheromone starts to evaporate. The more time it takes for an ant to travel down the

path and back again, the more time the pheromone has to evaporate (and the path to

become less prominent). A shorter path, in comparison will be visited by more ants

(can be described as a loop of positive feedback) and thus the pheromone density

remains high for a longer time.

ACO is implemented as a team of intelligent agents which simulate the ants

behavior, walking around the graph representing the problem to solve using

mechanisms of cooperation and adaptation. ACO algorithm requires to define the

following:

The problem needs to be represented appropriately, which would allow the ants to

crementally update the solutions through the use of a probabilistic transition rules,

based on the amount of pheromone in the trail and other problem specific knowledge.

It is also important to enforce a strategy to construct only valid solutions

corresponding to the problem definition.

A problem-dependent heuristic function that measures the quality of components

that can be added to the current partial solution.

A rule set for pheromone updating, which specifies how to modify the pheromone

value .

A probabilistic transition rule based on the value of the heuristic function and the

pheromone value that is used to iteratively construct a solution.

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

10

SWARM INTELLIGENCE

ACO was first introduced using the Travelling Salesman Problem (TSP).

Starting from its start node, an ant iteratively moves from one node to another. When

being at a node, an ant chooses to go to a unvisited node at time t with a probability

given by

Pki,j(t)= [i,j(t)][i,j(t)]

lnta[i,j(t)][i,j(t)]

where Nik is the feasible neighborhood of the antk, that is, the set of cities which

antkhas not yet visited; i,j(t) is the pheromone value on the edge (i, j) at the time t,

is the weight of pheromone; i,j(t) is a priori available heuristic information on the

edge (i, j) at the time t, is the weight of heuristic information. Two parameters and

determine the relative influence of pheromone trail and heuristic information. A

generalized version of the pseudo-code for the ACO algorithm with reference to the

TSP is illustrated in Algorithm.

3.1.1 ANT COLONY OPTIMIZATION ALGORITHM

01. Initialize the number of antsn, and other parameters.

02. While (the end criterion is not met) do

03. t= t + 1;

04. For k= 1 to n

05. antkis positioned on a starting node;

06. For m= 2 to problem size

07. Choose the state to move into

08. according to the probabilistic transition rules;

09. Append the chosen move into tabuk(t) for the antk;

10. Next m

11. Compute the length Lk(t) of the tour Tk(t) chosen by the antk;

12. Compute i,j(t) for every edge (i, j) in Tk(t) according to Eq.(1.18);

13. Next k

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

11

SWARM INTELLIGENCE

14. Update the trail pheromone intensity for every edge (i, j).

15. Compare and update the best solution;

16. End While.

3.1.2 ANT COLONY ALGORITHMS FOR OPTIMIZATION PROBLEMS

3.1.2.1 TRAVELLING SALESMAN PROBLEM (TSP)

Given a collection of cities and the cost of travel between each pair of them,

the travelling salesman problem is to find the cheapest way of visiting all of the cities

and returning to the starting point. It is assumed that the travel costs are symmetric in

the sense that travelling from city X to city Y costs just as much as travelling from Y

to X. The parameter settings used for ACO algorithm are as follows:

Number of ants = 5

Maximum number of iterations = 1000

=2

=2

= 0.9

Q = 10

A TSP with 20 cities is used to illustrate the ACO algorithm.

The best route obtained is depicted as 1 14 11 4 8 10 15 19 7

18 16 5 13 20 6 17 9 2 12 3 1, and is illustrated

in Fig with a cost of 24.5222. The search result for a TSPfor 198 cities is illustrated in

Figure 1.7 with a total cost of 19961.3045.

Figure 3.1 An ACO solution for the TSP (20 cities)

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

12

SWARM INTELLIGENCE

3.1.2.2 ANT COLONY ALGORITHMS FOR DATA MINING

The study of ant colonies behavior and their self-organizing capabilities is of

interest to knowledge retrieval/management and decision support systems sciences,

because it provides models of distributed adaptive organization, which are useful to

solve difficult classification, clustering and distributed control problems. Ant colony

based clustering algorithms have been first introduced by Deneubourg et al. by

mimicking different types of naturally-occurring emergent phenomena. Ants gather

items to form heaps (clustering of dead corpses or cemeteries) observed in the

species of Pheidole Pallidulaan D Lasius, Niger. If sufficiently large parts of corpses

are randomly distributed in space, the workers form cemetery clusters within a few

hours, following a behavior similar to segregation. If the experimental arena is not

sufficiently large, or if it contains spatial heterogeneities, the clusters will be formed

along the edges of the arena or, more generally, following the heterogeneities. The

basic mechanism underlying this type of aggregation phenomenon is an attraction

between dead items mediated by the ant workers: small clusters of items grow by

attracting workers to deposit more items. It is this positive and auto-catalytic

feedback that leads to the formation of larger and larger clusters. A sorting approach

could be also formulated by mimicking ants that discriminate between different kinds

of items and spatially arrange them according to their properties. This is observed in

the Leptothoraxunifasciatus species where larvae are arranged according to their size.

The general idea for data clustering is that isolated items should be picked up and

dropped at some other location where more items of that type are present. Ramos et

al. proposed ACLUSTER algorithm to follow real antlike behaviors as much as

possible. In that sense, bio-inspired spatial transition probabilities are incorporated

into the system, avoiding randomly moving agents, which encourage the distributed

algorithm to explore regions manifestly without interest. The strategy allows guiding

ants to find clusters of objects in an adaptive way. In order to model the behavior of

ants associated with different tasks (dropping and picking up objects), the use of

combinations of different response thresholds was proposed. There are two major

factors that should influence any local action taken by the ant-like agent: the number

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

13

SWARM INTELLIGENCE

of objects in its neighborhood, and their similarity. Lumer and Faieta used an average

similarity, mixing distances between objects with their number, incorporating it

simultaneously into a response threshold function like the algorithm proposed by

Deneubourg et al. ACLUSTER uses combinations of two independent response

threshold functions, each associated with a different environmental factor depending

on the number of objects in the area, and their similarity.

3.2 PARTICLE SWARM OPTIMIZATION

The canonical PSO model consists of a swarm of particles, which are

initialized with a population of random candidate solutions. They move iteratively

through the d-dimension problem space to search the new solutions, where the fitness,

f, can be calculated as the certain qualities measure. Each particle has a position

represented by a position-vector xi (i is the index of the particle), and a velocity

represented by a velocity-vector vi. Each particle remembers its own best position so

far in a vector xi , and its j-th dimensional value is x ij. The best position-vector

among the swarm so far is then stored in a vector x, and its j-th dimensional value is x

j . During the iteration time t, the update of the velocity from the previous velocity to

the new velocity is determined by eq. The new position is then determined by the sum

of the previous position and the new velocity by

vij(t + 1) = wvij(t) + c1r1(xij(t) xij(t)) + c2r2(xj(t) xij(t)).

xij(t + 1) = xij(t) + vij(t + 1).

where w is called as the inertia factor, r1 and r2 are the random numbers, which are

used to maintain the diversity of the population, and are uniformly distributed in the

interval [0,1] for the j-th dimension of the i-th particle. c1 is a positive constant,

called as coefficient of the self-recognition component, c2is a positive constant,

called as coefficient of the social component. From Eq a particle decides where to

move next, considering its own experience, which is the memory of its best past

position, and the experience of its most successful particle in the swarm. In the

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

14

SWARM INTELLIGENCE

particle swarm model, the particle searches the solutions in the problem space with a

range [s, s] (If the range is not symmetrical, it can be translated to the corresponding

symmetrical range.) In order to guide the particles effectively in the search space, the

maximum moving distance during one iteration must be clamped in between the

maximum velocity [vmax, vmax] given in Eq:

vij= sign(vij)min(|vij| , vmax).

The value of vmax is p s, with 0.1 p 1.0 and is usually chosen to be s, i.e. p = 1.

The pseudo-code for particle swarm optimization algorithm is illustrated in Algorithm

3.2.1 PARTICLE SWARM OPTIMIZATION ALGORITHM

01. Initialize the size of the particle swarm n, and other parameters.

02. Initialize the positions and the velocities for all the particles randomly.

03. While (the end criterion is not met) do

04. t= t + 1;

05. Calculate the fitness value of each particle;

06. x= argminn

i=1(f(x(t 1)), f(x1(t)), f(x2(t)), , f(xi(t)), , f(xn(t)));

07. For i= 1 to n

08. x#i(t) = argminn

i=1(f(x#i(t 1)), f(xi(t));

09. For j = 1 to Dimension

10. Update the j-th dimension value of xi and vi

10. according to Eqs.

12. Next j

13. Next i

14. End While.

The end criteria are usually one of the following:

Maximum number of iterations: the optimization process is terminated after a fixed

number of iterations, for example, 1000 iterations.

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

15

SWARM INTELLIGENCE

Number of iterations without improvement: the optimization process is terminated

after some fixed number of iterations without any improvement.

Minimum objective function error: the error between the obtained objective function

value and the best fitness value is less than a pre-fixed anticipated threshold.

3.2.2 THE PARAMETERS OF PSO

The role of inertia weight w, in Eq, is considered critical for the convergence

behavior of PSO. The inertia weight is employed to control the impact of the previous

history of velocities on the current one. Accordingly, the parameter w regulates the

trade-off between the global (wide-ranging) and local (nearby) exploration abilities of

the swarm. A large inertia weight facilitates global exploration (searching new areas),

while a small one tends to facilitate local exploration, i.e. fine-tuning the current

search area. A suitable value for the inertia weight w usually provides balance

between global and local exploration abilities and consequently results in a reduction

of the number of iterations required to locate the optimum solution. Initially, the

inertia weight is set as a constant. However, some experiment results indicates that

it is better to initially set the inertia to a large value, in order to promote global

exploration of the search space, and gradually decrease it to get more refined

solutions. Thus, an initial value around 1.2 and gradually reducing towards 0 can be

considered as a good choice for w. A better method is to use some adaptive

approaches (example: fuzzy controller), in which the parameters can be adaptively

fine tuned according to the problems under consideration. The parameters c1 and c2,

in Eq.(1.1), are not critical for the convergence of PSO. However, proper fine-tuning

may result in faster convergence and alleviation of local minima. As default values,

usually, c1 = c2 = 2 are used, but some experiment results indicate that c1 = c2 = 1.49

might provide even better results. Recent work reports that it might be even better to

choose a larger cognitive parameter, c1, than a social parameter, c2, but with c1+c2

4. The particle swarm algorithm can be described generally as a population of vectors

whose trajectories oscillate around a region which is defined by each individuals

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

16

SWARM INTELLIGENCE

previous best success and the success of some other particle. Various methods have

been used to identify some other particle to influence the individual. Eberhart and

Kennedy called the two basic methods as gbest model and lbest model. In the

lbest model, particles have information only of their own and their nearest array

neighbors best (lbest), rather than that of the entire group. Namely, in Eq., gbest is

replaced by lbest in the model. So a new neighborhood relation is defined for the

swarm:

vid(t+1) = w vid(t)+c1 r1 (pid(t)xid(t))+c2 r2 (pld(t)xid(t)).

xid(t + 1) = xid(t) + vid(t + 1).

In the gbest model, the trajectory for each particles search is influenced by the best

point found by any member of the entire population. The best particle acts as an

attractor, pulling all the particles towards it. Eventually all particles will converge to

this position. The lbest model allows each individual to be influenced by some

smaller number of adjacent members of the population array. The particles selected to

be in one subset of the swarm have no direct relationship to the other particles in the

other neighborhood. Typically lbest neighborhoods comprise exactly two neighbors.

When the number of neighbors increases to all but itself in the lbest model, the case is

equivalent to the gbest model. Some experiment results testified that gbest model

onverges quickly on problem solutions but has a weakness for becoming trapped in

local optima, while lbest model converges slowly on problem solutions but is able to

flow around local optima, as the individuals explore different regions. The gbest

model is recommended strongly for unimodal objective functions, while a variable

neighborhood model is recommended for multimodal objective functions. Kennedy

and Mendes studied the various population topologies on the PSO performance.

Different concepts for neighborhoods could be envisaged. It can be observed as a

spatial neighborhood when it is determined by the Euclidean distance between the

positions of two particles, or as a sociometric neighborhood (e.g. the index position in

the storing array). The different concepts for neighborhood leads to different

neighborhood topologies. Different neighborhood topologies primarily affect the

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

17

SWARM INTELLIGENCE

communication abilities and thus the groups performance. Different topologies are

illustrated in Fig. In the case of a global neighborhood, the structure is a fully

connected network where every particle has access to the others best position. But in

local neighborhoods there are more possible variants. In the von Neumann topology,

neighbors above, below, and each side on a two dimensional lattice are connected.

Fig. illustrates the von Neumann topology with one section flattened out. In a

pyramid topology, three dimensional wire frame triangles are formulated as

illustrated in Fig. As shown in Fig, one common structure for a local neighborhood

is the circle topology where individuals are far away from others (in terms of graph

structure, not necessarily distance) and are independent of each other but neighbors

are closely connected. Another structure is called wheel (star) topology and has a

more hierarchical structure, because all members of the neighborhood are connected

to a leader individual as shown in Fig. So all information has to be communicated

though this leader, which then compares the performances of all others.

Figure 3.2 Some neighborhood topologies

3.2.3 APPLICATIONS OF PARTICLE SWARM OPTIMIZATION

3.2.3.1 JOB SCHEDULING ON COMPUTATIONAL GRIDS

Grid computing is a computing framework to meet the growing computational

demands. Essential grid services contain more intelligent functions for resource

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

18

SWARM INTELLIGENCE

management, security, grid service marketing, collaboration and so on. The load

sharing of computational jobs is the major task of computational grids. To formulate

our objective, define Ci,j(i {1, 2, ,m}, j {1, 2, , n}) as the completion time

that the grid node Gi finishes the job Jj, _Ci represents the time that the grid node Gi

finishes all the scheduled jobs. Define Cmax= max{_Ci} as the make span, and _m

i=1(_Ci) as the flow time. An optimal schedule will be the one that optimizes the

flow time and make span. The conceptually obvious rule to minimize _m i=1(_Ci) is

to schedule Shortest Job on the Fastest Node (SJFN). The simplest rule to minimize

Cmax is to schedule the Longest Job on the Fastest Node (LJFN). Minimizing _m

i=1(_Ci) asks the average job finishes quickly, at the expense of the largest job taking

a long time, whereas minimizing Cmax, asks that no job takes too long, at the

expense of most jobs taking a long time. Minimization of Cmax will result in the

maximization of _mi=1(_Ci).

Figure 3.3 Performance for job scheduling

3.2.3.2 PSO FOR DATA MINING

Data mining and particle swarm optimization may seem that they do not have

many properties in common. However, they can be used together to form a method

which often leads to the result, even when other methods would be too expensive or

difficult to implement. Ujjinn and Bentley provided internet-based recommender

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

19

SWARM INTELLIGENCE

system, which employs a particle swarm optimization algorithm to learn personal

preferences of users.

When compared with K-means, Fuzzy C-means, K-Harmonic means and

genetic algorithm approaches, in general, the PSO algorithm produced better results

with reference to inter- and intra-cluster distances, while having quantization errors

comparable to the other algorithms. Sousa et al. proposed the use of the particle

swarm optimizer for data mining. Tested against genetic algorithm and Tree

Induction Algorithm (J48), the obtained results indicates that particle swarm

optimizer is a suitable and competitive candidate for classification tasks and can be

successfully applied to more demanding problem domains. The basic idea of

combining particle swarm optimization with data mining is quite simple. To extract

this knowledge, a database may be considered as a large search space, and a mining

algorithm as a search strategy. PSO makes use of particles moving in an ndimensional space to search for solutions for an n-variable function (that is fitness

function) optimization problem. The datasets are the sample space to search and each

attribute is a dimension for the PSO-miner. During the search procedure, each particle

is evaluated using the fitness function which is a central instrument in the algorithm.

Their values decide the swarms performance. The fitness function measures the

predictive accuracy of the rule for data mining, and it is given by Eq.

predictive accuracy =|A C| 1/2|A|

where |A C| is the number of examples that satisfy both the rule antecedent and the

consequent, and |A| is the number of cases that satisfy only the rule antecedent. The

term 1/2 is subtracted in the numerator of Eq.to penalize rules covering few training

examples. So we use predictive accuracy to the power minus one as fitness function

in PSO-miner.

Rule pruning is a common technique in data mining. The main goal of rule

pruning is to remove irrelevant terms that might have been unduly included in the

rules. Rule pruning potentially increases the predictive power of the rule, helping to

avoid its over-fitting to the training data. Another motivation for rule pruning is that it

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

20

SWARM INTELLIGENCE

improves the simplicity of the rule, since a shorter rule is usually easier to be

understood by the user than a longer one. As soon as the current particle completes

the construction of its rule, the rule pruning procedure is called. The quality of a rule,

denoted by Q, is computed by the formula: Q = sensitivity specificity. Just after the

covering algorithm returns a rule set, another post-processing routine is used: rule set

cleaning, where rules that will never be applied are removed from the rule set. The

purpose of the validation algorithm is to statistically evaluate the accuracy of the rule

set obtained by the covering algorithm. This is done using a method known as tenfold

cross validation [29]. Rule set accuracy is evaluated and presented as the percentage

of instances in the test set correctly classified. In order to classify a new test case,

unseen during training, the discovered rules are applied in the order they were

discovered.

The performance of PSO-miner was evaluated using four public-domain data

sets from the UCI repository [4]. The used parameters settings are as following:

swarm size=30; c1 = c2 = 2; maximum position=5; maximum velocity=0.10.5;

maximum uncovered cases = 10 and maximum number of iterations=4000. The

results are reported in Table 1.3. The algorithm is not only simple than many other

methods, but also is a good alternative method for data mining.

3.3 RIVER FORMATION DYNAMICS

Instead of associating pheromone values to edges, we associate altitude values

to nodes. Drops erode the ground (they reduce the altitude of nodes) or deposit the

sediment (increase it) as they move. The probability of the drop to take a given edge

instead of others is proportional to the gradient of the down slope in the edge, which

in turn depends on the difference of altitudes between both nodes and the distance

(i.e. the cost of the edge). At the beginning, a flat environment is provided, that is, all

nodes have the same altitude. The exception is the destination node, which is a hole.

Drops are unleashed at the origin node, which spread around the flat environment

until some of them fall in the destination node. This erodes adjacent nodes, which

creates new down slopes, and in this way the erosion process is propagated. New

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

21

SWARM INTELLIGENCE

drops are inserted in the origin node to transform paths and reinforce the erosion of

promising paths. After some steps, good paths from the origin to the destination are

found. These paths are given in the form of sequences of decreasing edges from the

origin to the destination.

3.3.1 ALGORITHM OF RIVER FORMATION DYNAMICS

The basic scheme of the RFD algorithm follows:

Initialize Drops()

Initialize Nodes()

while (not all Drops Follow The Same Path())

and (not other Ending Condition())

move Drops()

erode Paths()

deposit Sediments()

analyze Paths()

end while

The scheme shows the main ideas of the proposed algorithm .We comment on the

behavior of each step. First, drops are initialized (initialize Drops()), i.e., all drops are

put in the initial node. Next, all nodes of the graph are initialized (initialize Nodes()).

This consists of two operations. On the one hand, the altitude of the destination node

is fixed to 0. In terms of the river formation dynamics analogy, this node represents

the sea, that is, the final goal of all drops. On the other hand, the altitude of the

remaining nodes is set to some equal value. The while loop of the algorithm is

executed until either

all drops find the same solution (allDropsFollow

TheSamePath()), that is, all drops traverse the same sequence of nodes, or another

alternative finishing condition is satisfied (otherEndingCondition()). This condition

may be used, for example, for limiting the number of iterations or the execution time.

Another choice is to finish the loop if the best solution found so far is not surpassed

during the lastn iterations. The first step of the loop body consists in moving the drops

across the nodes of the graph (moveDrops()) in a partially random way. The

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

22

SWARM INTELLIGENCE

following transition rule defines the probability that a drop k at a node i chooses the

node j to move next:

Pk(i, j) = decreasingGrad(i,j) l Vk(i)decreasingGrad(i,l)ifj Vk(i)

0 if j _ Vk(i)

whereVk(i) is the set of nodes that are neighbors of node I that can be visited by the

drop k and decreasingGrad(i,j) represents the negative gradient between nodes i and j,

which is defined as follows:

decreasingGrad(i, j) = altitude(j) altitude(i) distance(i, j)

wherealtitude(x) is the altitude of the node x and distance(i,j) is the length of the edge

connecting node iand node j. Let us note that, at the beginning oft_he algorithm, the

altitude of all nodes is the same, so l Vk(i) decreasingGradient(i, l) is 0. In order to

give a special treatment to flat gradients, we modify this scheme as follows: We

consider that the probability that a drop moves through an edge with 0 gradient is set

to some (non null) value. This enables drops to spread around a flat environment,

which is mandatory, in particular, at the beginning of the algorithm. In fact, going one

step further, we also introduce this improvement:

We let drops climb increasing slopes with a low probability. This probability will be

inverse proportional to the increasing gradient, and it will be reduced during the

execution of the algorithm by using a similar method to the one used in Simulated

Annealing. This new feature improves the search of good paths. In the next phase

(erodePaths()) paths are eroded according to the movements of drops in the previous

phase. In particular, if a drop moves from node A to node B then we erode A. That is,

the altitude of this node is reduced depending on the current gradient between A and

B. The erosion is higher if the down slope between A and B is high. If the edge is flat

or increasing then a small erosion is performed.

The altitude of the final node (i.e., the sea) is never modified and it remains equal to 0

during all the execution. Once the erosion process finishes, the altitude of all

nodes is slightly increased (depositSediments()). The objective is to avoid that, after

some iterations, the erosion process leads to a situation where all altitudes are close

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

23

SWARM INTELLIGENCE

to 0, which would make gradients negligible and would ruin all formed paths. In fact,

we also enable drops to deposit sediment in nodes. This happens when all movements

available for a drop imply to climb an increasing slope and the drop fails to climb any

edge (according to the probability assigned to it). In this case, the drop is blocked and

it deposits the sediments it transports. This increases the altitude of the current node.

The increment is proportional to the amount of cumulated sediment. Finally, the last

step (analyzePaths()) studies all solutions found by drops and stores the best one.

3.3.2 ADAPTING THE METHOD TO TSP

In this section we show how the previous general scheme is particularized to the TSP

problem .The adaptation of our method to TSP has several similarities with the way

ACO is applied to this problem. Like ants, each drop must remember the nodes

traversed so far in previous movements. In particular, this is necessary to avoid

repeating nodes. In spite of the fact that the use of gradients strongly minimizes these

situations in RFD, sequences of (improbable) climbing movements can still lead a

drop to a local cycle. Besides, altitudes are not eroded after each individual drop

movement. On the contrary, the altitudes of traversed nodes are eroded all together

when the drop actually completes a round-trip (as ants do when they increase

pheromone trails). This is another reason to keep track of the sequence of traversed

nodes. As in ACO, when a drop finds that all adjacent nodes have already been

visited, the drop disappears (again, this is much less probable in RFD than in ACO).

There is an additional reason in RFD why a drop may disappear: When all adjacent

nodes are higher than the actual node (i.e., the node is valley) and the drop fails to

climb any up gradient, the drop is removed and it deposits the sediment it carries at

the current node. Note that, in this case, the computations performed to move the drop

should not be considered as lost despite of the fact that it did not found a solution.

This is because the cumulation of sediments increases the node altitude, and thus

gradients coming from adjacent nodes are flatted. This eventually avoids that other

drops fall in the same blind alley. The adaptation of RFD to TSP has other

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

24

SWARM INTELLIGENCE

peculiarities that do not appear in ACO. Our algorithm provides an intrinsic method

to avoid that drops traverse cycles, but the goal of TSP consists in finding a cycle

indeed. In order to allow drops to follow a cycle involving all nodes, the origin node

(which, as we said before, can be any fix node) is cloned as well as all edges

involving it. The original node play the role of origin node and the cloned node plays

the role of destination node. In this way, drops can form decreasing gradients from

the origin node to the destination node (for us, from a node to itself). Finally, the

adaptation of RFD to TSP requires to introduce other additional nodes for different

technical reasons. The number of these additional nodes, called barrier nodes, belongs

to O(n2) where n is the number of nodes of the original graph. In the next section,

ACO and RFD will be compared in terms of graphs with the same number of

standard nodes. That is, if an experiment deals with a 30 nodes graph, then the quality

of solutions and the consumed time are computed and compared in the case where

ACO deals with the original 30-nodes graph and RFD deals with the corresponding

adapted bigger graph.

3.3.3 APPLYING RFD TO DYNAMIC TSP

In this section we describe the application of our approach to solve dynamic

TSP and we report some experimental results. We compare the results found by using

ACO methods and the solutions found by using our method. All the experiments were

performed in an Intel T2400 processor with 1.83 GHz. In these experiments we

follow the next procedure. First, we calculate the solution of an instance of the

problem by using both algorithms. Next, we introduce one of the following changes

in the graph: (a) We delete an edge that is common to the solutions found by both

methods; (b) We delete a node of the graph; (c) We add a new node. Once a change is

introduced, we calculate a solution for this instance of the problem. Let us remark that

we do not restart the algorithms, but we keep the state of the methods (that is, the

amount of pheromone at each edge and the altitudes of the nodes, respectively) just

before the change.The figures show the best solution found by each method for each

execution time before andafter the changes are introduced. These graphs have been

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

25

SWARM INTELLIGENCE

randomly generated assuming that each node is connected with only a few other

nodes (between 10 and 20 connections per host) as it is the most common case in

complex networks. The basic shape shown in the figures is also obtained with other

benchmark examples. Next we comment the experimental results.

3.3.3.1 DELETING EDGES

In this experiment we can see how RFD and ACO recalculate the solution

after deleting an edge of the graph (i.e. after removing a connection of the

network).This specially remarkable that the difference between the best solutions

obtained by RFD and ACO is increased after the change is introduced. That is, RFD

has more capacity to reconstruct good solutions after a change happens.

3.3.3.2 DELETING NODE

In this experiment we show how both methods recalculate the solution after

deleting a node of the graph( i.e. after a host of the network breaks down). Note that

the results are similar to those obtained when removing one edge.

3.3.3.3 ADDING NODES

Finally, we show how both methods recalculate their solutions after adding a

new host in the network. When we are using our algorithm and we add a new node,

we have to set its altitude to some value. This initial altitude should ease as much as

possible the task of finding new solutions. In particular, the altitude of the new node

is set to the average of its neighbors. We use this altitude because it is the value that is

less aggressive with its environment, and in fact we observed that it allows to find

good solutions. On the other hand, there are a lot of different strategies to treat

dynamic graphs in ACO. When a change is introduced in the graph, it is necessary to

reset part of the pheromone information. Let us remark that, in this case, ACO did not

find any solution to the problem after the node is added. However, RFD obtains a

solution. Hence, in this case the advantage of RFD over ACO is quite relevant.

Obviously,we could completely restart the computation of ACO from the beginning.

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

26

SWARM INTELLIGENCE

3.3.3.4 INTRODUCING SEVERAL CHANGES

Now, instead of introducing only one change in the graph, we use a more

dramatic approach: We introduce several modifications at the same time. The changes

we have introduced in these experiments consist in simultaneously making these

modifications: Deleting two edges that are common to the solutions found by both

methods; Adding two new edges; Adding two new nodes (with its corresponding

edges to other nodes); and deleting two old nodes. We consider an experiment where

a randomly generated graph of 150 nodes is used. The average degree of nodes is 15.

As shown in Figure , the basic shape of the results is basically the same as in the

previous examples. that is, ants are better in the short term but drops obtain better

results in the medium term. Thus, the basic results are the same even if several

modifications are performed at the same time.

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

27

SWARM INTELLIGENCE

4. CONCLUSION

In computational science as well, swarms provide a very useful and

insightful metaphor for the imprecise and robust field of soft computing.

The idea of multiple potential solutions, even computer programs,

interacting throughout a computer run is a relatively revolutionary and

important one. Also new is the idea that potential problem solutions

(system designs, etc.) can irregularly oscillate (with stochasticity, no less)

in the system parameter hyperspace on their way to a near-optimal

configuration. It is almost unbelievable, even to us, that the computer

program that started out as a social-psychology simulation is now used to

optimize power grids in Asia; develop high-tech products in the United

States; and to solve high-dimensional, nonlinear, noisy mathematical

problems. But it seems that the paradigm is in its youth. Perhaps the most

powerful insight from swarm intelligence is that complex collective

behavior can emerge from individuals following simple rules. Possible

applications of swarm intelligence may be limited only by the imagination.

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

28

SWARM INTELLIGENCE

DEPARTMENT OF ELECTRONICS AND COMMUNICATION

COLLEGE OF ENGINEERING, ADOOR

29

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5814)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1092)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (845)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (897)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (540)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (348)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (822)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Lisa Blackman-Immaterial Bodies - Affect, Embodiment, Mediation (2012) PDFDocument241 pagesLisa Blackman-Immaterial Bodies - Affect, Embodiment, Mediation (2012) PDFCristina PeñamarínNo ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Forms of CommunicationDocument12 pagesForms of Communicationdominic laroza50% (2)

- Module 3.2 Time Series Forecasting LSTM ModelDocument23 pagesModule 3.2 Time Series Forecasting LSTM ModelDuane Eugenio AniNo ratings yet

- 18AI72Document3 pages18AI72Vaishnavi patilNo ratings yet

- Nature of CommunicationDocument2 pagesNature of CommunicationExcel Joy MarticioNo ratings yet

- Micromachines 13 00947Document12 pagesMicromachines 13 00947Đỗ QuảngNo ratings yet

- Assignment IT (402) PRACTICALDocument31 pagesAssignment IT (402) PRACTICALLt Dr Rajendra RajputNo ratings yet

- Chapter 06 - Sharda - 11e - Full - Accessible - PPT - 06Document39 pagesChapter 06 - Sharda - 11e - Full - Accessible - PPT - 06rorog345gNo ratings yet

- Pragmatic EquivalenceDocument15 pagesPragmatic EquivalenceAnisa KurniawatiNo ratings yet

- OneFormer: One Transformer To Rule Universal Image SegmentationDocument18 pagesOneFormer: One Transformer To Rule Universal Image SegmentationYann SkiderNo ratings yet

- Natural Language DatabaseDocument68 pagesNatural Language DatabaseRoshan TirkeyNo ratings yet

- Marie Thompson - Feminised Noise and The Dotted Line' of Sonic ExperimentalismDocument18 pagesMarie Thompson - Feminised Noise and The Dotted Line' of Sonic ExperimentalismLucas SantosNo ratings yet

- Cyclical Learning Rate For Training Neural Networks (Leslie Smith)Document10 pagesCyclical Learning Rate For Training Neural Networks (Leslie Smith)NothenNo ratings yet

- Core Libraries For Machine LearningDocument5 pagesCore Libraries For Machine LearningNandkumar KhachaneNo ratings yet

- FeedbackDocument39 pagesFeedbackhazem ab2009No ratings yet

- Understanding Self-Supervised Features For Learning Unsupervised Instance SegmentationDocument12 pagesUnderstanding Self-Supervised Features For Learning Unsupervised Instance SegmentationdrewqNo ratings yet

- Advanced Lectures On Machine Learning ML Summer SCDocument249 pagesAdvanced Lectures On Machine Learning ML Summer SCKhaled LahianiNo ratings yet

- Deep Learning Lecture 0 Introduction Alexander TkachenkoDocument31 pagesDeep Learning Lecture 0 Introduction Alexander TkachenkoMahmood KohansalNo ratings yet

- m3 Lec1 3Document7 pagesm3 Lec1 3ud54No ratings yet

- Question Paper Code:: Reg. No.Document3 pagesQuestion Paper Code:: Reg. No.HOD ECE KNCETNo ratings yet

- Synopsis Minor Project-2Document5 pagesSynopsis Minor Project-2Satyajeet RoutNo ratings yet

- Chapter1 (Classification)Document16 pagesChapter1 (Classification)110me0313No ratings yet

- Implementing A PID Controller Using A PICDocument15 pagesImplementing A PID Controller Using A PICJavier CharrezNo ratings yet

- One Page Resume TemplateDocument1 pageOne Page Resume TemplateEmma HaileyNo ratings yet

- Deep ResNetDocument9 pagesDeep ResNetAvanish SinghNo ratings yet

- 1.3.3.3 Hands-On Lab Explore Your Dataset Using SQL Queries - MDDocument3 pages1.3.3.3 Hands-On Lab Explore Your Dataset Using SQL Queries - MDAlaa BaraziNo ratings yet

- ALVINNDocument45 pagesALVINNjosephkumar58No ratings yet

- Bda Unit II Lecture1Document10 pagesBda Unit II Lecture1Anju2No ratings yet

- Automation 2 Topic 1: Design A Simple Process Control SystemDocument12 pagesAutomation 2 Topic 1: Design A Simple Process Control SystemChriscarl De LimaNo ratings yet

- Artificial Intelligence in HR PracticesDocument24 pagesArtificial Intelligence in HR PracticesSHIREEN IFTKHAR AHMED-DMNo ratings yet