WCECS2015 pp814-818 2

Proceedings of the World Congress on Engineering and Computer Science 2015 Vol II

WCECS 2015, October 21-23, 2015, San Francisco, USA

A K-Means Algorithm Application on Big Data

Beste Eren, Ezgi Çılga Karabulut, S. Emre Alptekin, Gülfem Işıklar Alptekin

learning, artificial intelligence, pattern recognition, and data

Abstract As more and more data is becoming available due management [3]. The idea is to build computer programs

to advances in information and communication technologies, that examine the databases automatically, search for rules or

gaining knowledge and insights from this data is replacing patterns in order to make accurate predictions about future

experience and intuition based decision making in data. Since actual data are usually imperfect, it is expected

organizations. Big data mining can be defined as the capability that there will be problems, exceptions to each rule.

of extracting useful information from massive and complex

datasets or data streams. In this paper, one of the commonly

Therefore, the algorithms must be robust enough to adapt to

used data mining algorithm, K-means, is used to extract the imperfect data and extract regularities that are

information from a big dataset. Doing so, MapReduce inaccurate, but useful [2]. One of the most important

framework with Hadoop is used. As the dataset, the results of problem sources in Big Data and data mining is still the

the social evolution experiment of MIT Human Dynamics Lab heterogeneity of data structure and data resources when

are used. The aim is to derive meaningful relationships gathering data [4]. This is why understanding and

between students eating habits and the tendency of getting examination of these structural features of Big Data have

cold. impact on the effectiveness and efficiency of data mining.

In this paper, the aim is to analyze and get meaningful

Index Terms Big data, data mining, clustering, K-means

algorithm.

results from the Big Data by using one of the most known

data mining algorithms: k-Means. As data, we have utilized

I. INTRODUCTION datasets called "Reality Commons". They are the mobile

The advancement of technology, the further datasets containing the dynamics of several communities of

development of Internet and recent social media revolution about 100 people each. They are collected with tools

has accelerated the speed, and the volume of the produced developed in the MIT Human Dynamics Lab, which are

data. Companies and businesses have started to collect more available as open source. They invite researchers to propose

data than they know what to do with. Hence, Big Data is and submit their own applications of the data to demonstrate

still in the process of rapidly expanding in different the scientific and business values of these datasets, suggest

application areas and it began to dominate future how to meaningfully extend these experiments to larger

technologies. Big data can be defined as high-volume, high- populations, and develop the math that fits agent-based

velocity and high-variety information assets that demand models or systems dynamics models to larger populations.

cost-effective, innovative forms of information processing The paper is structured as follows: Section 2 describes

for enhanced insight and decision making. This type of huge the K-means algorithm that is used to explore data. The

volume of data of course brings several challenges. Several utilized software technologies are presented in Section 3,

challenges include analysis, retrieval, search, storage, while Section 4 presents the dataset used in the application

sharing, visualization, transfer and security of data. Each along with its preparation. Section 5 reveals the results and

one of these challenges constitutes a wide research area. the concluding remarks of the application. Future works and

Data generation capacity has never been so big: Every the conclusion are given in Section 6.

day 2.5 trillion bytes of data are generated and 90% of II. K-MEANS ALGORITHM

produced data in the world so far were produced in the last

two years [1]. However, the data are not in a meaningful K-means is one of the unsupervised learning and

form; they are in the raw form. It is necessary to process clustering algorithms [5]. This algorithm classifies a given

them to explore patterns, hence transforming them to dataset by finding a certain number of clusters (K). The

meaningful information [2]. If they are used in an accurate clusters are differentiated by their centers. The best choice is

manner, Big Data have the ability to give results on these to place them as much as possible far away from each other.

massive data piles, which shed light to future technologies. The algorithm is highly sensitive to initial placement of the

Doing so, data mining methodologies play an important role cluster centers. A disadvantage of K-means algorithm is that

to explore data. it can only detect compact, hyperspherical clusters that are

Data mining is defined as the science of extracting useful well separated [6]. Another disadvantage is that due to its

information from large datasets or databases. This area gradient descent nature, it often converges to a local

combines several disciplines such as statistics, machine minimum of the criterion function [7]. The algorithm is

composed of the following steps:

i. Initial value of centroids: Deciding K points randomly

Beste Eren, Galatasaray University, Industrial Engineering

beste.eren.91@gmail.com into the space which represent the clustered objects. These K

Ezgi lga Karabulut, Galatasaray University, Computer Engineering, points constitute the group of initial centroids.

cilgakarabulut@gmail.com ii. Objects-centroid distance: Calculating the distance of

S. Emre Alptekin, Galatasaray University, Industrial Engineering,

ealptekin@gsu.edu.tr each object to each centroid, and assigning them to the

Glfem Isiklar Alptekin (corresponding author), Galatasaray University, closest cluster that is determined by a minimum distance

Computer Engineering, gisiklar@gsu.edu.tr measure. (A simple distance measure that is commonly used

ISBN: 978-988-14047-2-5 WCECS 2015

ISSN: 2078-0958 (Print); ISSN: 2078-0966 (Online)

Proceedings of the World Congress on Engineering and Computer Science 2015 Vol II

WCECS 2015, October 21-23, 2015, San Francisco, USA

is Euclidean distance.) files by clients. In addition, there are a number of

iii Determine centroids: After all objects are assigned to DataNodes, usually with a cluster node, which manages

a cluster, recalculating the positions of the K centroids. storage of nodes. HDFS exposes a file system namespace

vi. Object-centroid distance: Calculating the distances of and allows user data to be stored in files. A file is divided

each object to the new centroids and generating a distance into one or more blocks and these blocks are stored in a set

matrix. of DataNode. NameNode determines the mapping of blocks

The whole process is carried out iteratively until the to DataNodes [12].

centroid values become constant. C. MapReduce

III. THE STRUCTURE OF BIG DATA AND UTILIZED SOFTWARE MapReduce is a parallel computing model for batch

processing and for generating large datasets [4]. Users

In order to address the problem of partitioning (a.k.a.

specify computers in terms of a map and a reduce function.

clustering), several researchers propose parallel partitioning

The run-time system automatically parallelizes underlying

algorithms and/or parallel programming [8]. Currently, Big

computers, manages failures of inter-computer

Data exploration are generated mainly using parallel

communications equipment and programs to make efficient

programming models such as MapReduce, providing with a

use of the network and discs [8].

cloud computing platform. MapReduce has been

First, a master node performs a Map function. The entered

popularized by Google, but today the MapReduce paradigm

data are partitioned into smaller sub-problems and they are

is been implemented in many open source projects, the

distributed to workers nodes [12]. The output of map

largest being the Apache Hadoop [9].

function is a set of records in the form of key-value pairs.

A. Hadoop Environment and Hadoop Architecture The records for any given key are collected at the running

Apache Hadoop is an open source software framework Reduce node for that key. After that all data from the Map

that supports the massive data storage and its processing. function were transferred to the right machine, the second

Instead of relying on expensive proprietary hardware to stage begins the Reduce progressing and produces another

store and process data, Hadoop enables distributed set of key-value pairs, collects the answers to all the sub-

processing of large amounts of data across large server problems and combines them as the output [12]. Although

partitions. Hadoop possess various advantages. First, there is this simple model is limited to the use of key-value pairs,

no need to change the data formats as the system many tasks and algorithms may be integrated in this

automatically redistributes Hadoop data. It brings massively software structure [11].

parallel computing servers, which makes the massively D. Apache Mahout

parallel computing affordable for the ever increasing volume

Mahout is an open-source software framework for

of Big Data. In addition, Hadoop is free and it can absorb

scalable machine learning and data mining based mainly on

any type of data from any number of sources. Hadoop can

Hadoop. It is implemented on a wide variety of machine

also recover data failures and the computer that causes the

learning and data mining algorithms such as clustering,

rupture of the node or network congestion [10].

classification, collaborative filtering and extracting frequent

Hadoop provides with the Hadoop Distributed File

patterns [13].

System (HDFS) which is robust and tolerant of mistakes,

inspired by the file system from Google, as well as a Java E. Orange

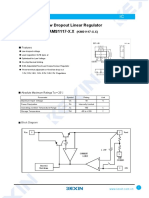

API that allows parallel processing on the partition of nodes Orange is an open-source data mining and analytics

using the MapReduce. Using the code written in other program that offers opportunities such as data preparation,

languages, such as Python and C, it is possible with Hadoop exploratory data analysis and data modeling. With Orange,

Streaming, to create and run the executable works with the data exploration can be achieved by visual programming or

mapper and/ or the gearbox. In addition, Hadoop has a task by Python scripts. It has components for machine learning

tracker that keeps track of the execution of programs across and plug-ins for bioinformatics and text mining. Orange

the cluster nodes [11]. brings data analysis functions in it. It contains modules for

B. Hadoop Distributed File System (HDFS) visually rich and flexible programming, analysis and user-

friendly data visualization. Orange has Python libraries for

Hadoop Distributed File System (HDFS) is a distributed

connecting and coding. It provides complete set of

file system that provides with fault tolerance and designed to

components such as data preprocessing, rating and filtering

be run on commodity hardware. HDFS provides high

functionality, modeling, evaluation of models and

throughput access to application data and is suitable for

exploration techniques. The detailed elements and how they

applications that have large datasets.

interacts with each other are given in Fig. 1

HDFS can store data on thousands of servers, and it can

perform the given work (Map/Reduce jobs) through these

machines. HDFS has master/slave architecture. Large data

are automatically divided into pieces that are managed by

different nodes of the Hadoop cluster [12]. An HDFS cluster

consists of a single NameNode, a master server that

manages the file system namespace and regulates access to

ISBN: 978-988-14047-2-5 WCECS 2015

ISSN: 2078-0958 (Print); ISSN: 2078-0966 (Online)

Proceedings of the World Congress on Engineering and Computer Science 2015 Vol II

WCECS 2015, October 21-23, 2015, San Francisco, USA

Fig. 1. Elements used in Orange software
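The steps of Section II map naturally onto the map and reduce stages described in Section III.C. The following is a minimal sketch in plain Python, not the Hadoop, Mahout or Orange implementation used in this paper. The function names (`map_assign`, `reduce_mean`, `kmeans`), the option to pass explicit initial centroids, and the rule that an empty cluster keeps its previous centroid are assumptions made for illustration.

```python
import math
import random
from collections import defaultdict


def map_assign(point, centroids):
    """Map step: emit (index of the nearest centroid, point)."""
    distances = [math.dist(point, c) for c in centroids]
    return distances.index(min(distances)), point


def reduce_mean(points):
    """Reduce step: average all points that share one key."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def kmeans(points, k, initial=None, max_iter=100, seed=0):
    # Step i: choose K initial centroids (randomly unless supplied).
    if initial:
        centroids = list(initial)
    else:
        centroids = random.Random(seed).sample(points, k)
    for _ in range(max_iter):
        # Step ii: group every point under its nearest centroid.
        # A MapReduce framework would do this grouping in its shuffle phase.
        groups = defaultdict(list)
        for p in points:
            key, value = map_assign(p, centroids)
            groups[key].append(value)
        # Step iii: recompute centroids; an empty cluster keeps its old one.
        updated = [reduce_mean(groups[i]) if groups[i] else centroids[i]
                   for i in range(k)]
        # Stop once the centroid values become constant.
        if updated == centroids:
            break
        centroids = updated
    return centroids
```

For example, `kmeans([(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)], 2, initial=[(0, 0), (10, 10)])` separates the two groups of three points and returns one centroid near each group. Because the result depends on the initial centroids, running the sketch with several seeds is a simple way to observe the sensitivity to initialization noted in Section II.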

IV. DATA PREPARATION

The dataset created by the MIT Human Dynamics Lab is utilized [14]. The objective of gathering such a dataset was to explore the capabilities of smart phones for investigating human interactions. The subjects were 75 students in the MIT Media Laboratory, and 25 incoming students at the MIT Sloan business school. The dataset is one of the pioneering sets studying community dynamics by tracking a sufficient number of people with their personal mobile phones.

In this paper, the Social Evolution dataset is chosen, which contains data that follow the life of a student in the dormitory [14]. The data include proximity, location, and call log, collected through a cell-phone application that scans nearby Wi-Fi access points and Bluetooth devices every six minutes. Survey data include a sociometric survey for relationships (choose from friend, acquaintance, or do not know), political opinions (democratic vs. republican), recent smoking behavior, attitudes towards exercise and fitness, attitudes towards diet, attitudes towards academic performance, current confidence and anxiety level, and music sharing from 1500 independent music tracks from a wide assortment of genres. Two tables are selected and their data are combined using the primary key: user id. The Health table contains user id, current weight, current height, survey month, number of salads eaten per week, number of fruit and vegetables eaten per day, healthy diet, and aerobics per week. The other table, Disease Symptoms, includes user id, cough and sore throat, discharge, nasal congestion and sneezing, fever, depression, and open stressed. Most of these attributes are binary.

V. RESULTS

The k-means function of Orange is utilized and the data are clustered. The clusters established with the Orange software are depicted in Fig. 2. Two attributes are selected for analyzing the relationship between them: healthy diet, and cough and sore throat. Several of the results obtained are as follows. The persons who do not have any cough and sore throat (the value of cough and sore throat = 0) are usually in good health. According to the point clouds graph (Fig. 3), the total population of the groups with good health and very good health and without cough and sore throat is 23. Furthermore, the total population of the groups with good health and very good health and with a little bit of cough and sore throat is 15. Hence, we can conclude that persons with a healthy diet or a very healthy diet tend to have less cough and sore throat.

The sieve diagram (Fig. 4) is a graphical method for visualizing the frequencies in a two-way contingency table and comparing them to the expected frequencies under the assumption of independence. In Fig. 4, the correlation between healthy diet and cough and sore throat is shown. The observed frequency in each cell is represented by the number of squares drawn in the related rectangle. The difference between the observed frequency and the expected frequency is shown by the density of shading. The color blue indicates that the deviation from independence is positive, and the color red indicates that it is negative. The frequency of the group with average diet and with cough and sore throat less than or equal to 0 is higher than the frequency of the group with below average diet. This means that the persons with average diet have the tendency to not have any cough and sore throat. In Table I, the observed value of the number of examples (probability) is 33.22% for average diet and cough and sore throat ≤ 0, but the observed probability is 19.40% for below average diet and cough and sore throat ≤ 0.

Fig. 2. The clusters shown in Orange software


Fig. 3. Point clouds representation of relationships

Fig. 4. Sieve diagram showing the relationship between healthy diet and cough and sore throat


Table I. Comparison of the frequencies of below average diet and average diet when cough and sore throat ≤ 0

| | Average diet | Below average diet |
|---|---|---|
| X attribute | healthy diet | healthy diet |
| X value | Average | Below average |
| Number of examples (p(x)) | 7975 (38.66%) | 4804 (23.29%) |
| Y attribute | sore.throat.cough | sore.throat.cough |
| Y value | 0.00 | 0.00 |
| Number of examples (p(y)) | 17868 (86.62%) | 17868 (86.62%) |
| Expected (p(x)p(y)) | 6908.3 (33.49%) | 4161.4 (20.17%) |
| Actual (p(x)p(y)) | 6853 (33.22%) | 4001 (19.40%) |
| Chi-square | 109.33 | 109.33 |
| Standardized Pearson residual | -0.67 | -2.49 |
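The expected shares and the residuals in Table I can be checked by hand. The sketch below, in Python, assumes that the residual is computed as (actual - expected) / sqrt(expected); this formula reproduces the values reported in the table, but it is an inference from the numbers, not a statement of how Orange computes them. The function names are hypothetical and are not part of Orange.

```python
import math


def expected_share(p_x, p_y):
    """Expected joint share of a cell if the two attributes are independent."""
    return p_x * p_y


def pearson_residual(actual, expected):
    """(actual - expected) / sqrt(expected), computed from cell counts."""
    return (actual - expected) / math.sqrt(expected)


# Counts and shares copied from Table I.
average = pearson_residual(6853, 6908.3)
below_average = pearson_residual(4001, 4161.4)
print(round(average, 2), round(below_average, 2))          # -0.67 -2.49

# Expected shares under independence, as percentages.
print(round(100 * expected_share(0.3866, 0.8662), 2))      # 33.49
print(round(100 * expected_share(0.2329, 0.8662), 2))      # 20.17
```

Both residuals are negative, so in both cells the observed count lies below the count expected under independence, and the gap is larger for the below average diet group.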

VI. CONCLUSION

In this study, one of the clustering algorithms (K-means) is applied to extract meaningful rules from Big Data. As data, the mobile datasets containing the dynamics of several communities collected by the MIT Human Dynamics Lab are used. The aim of our work is to demonstrate how Hadoop and MapReduce can be used to get insights from data that are not readily available. We have selected the Hadoop platform as it is an open-source project written in Java for distributed storage and distributed processing of very large data sets on computer clusters. It is highly scalable and flexible in terms of storage and accessing new data sources, more cost effective than a traditional database management system, fast, and more fault tolerant in the event of failure. Hadoop's fault-tolerant structure also constitutes one of its weakest points. It ensures its efficiency by data locality, hence it necessitates several copies of data. Its limited SQL support, lack of a query optimizer, and inconsistently implemented data mining libraries can be counted among the weaknesses of Hadoop.

On the other hand, the Orange platform, which has a Python scripting interface, is an open-source general-purpose data visualization and analysis software. Orange is intended both for experienced users and programmers and for students of data mining. As the dataset in question was clean, we have been able to work fully in Orange, with its pre-defined classes and functions.

Further research may include writing our own data mining algorithm code, comparing the results of different algorithms, selecting the most accurate one for a given dataset, and using different distance measurement techniques for clustering the data.

ACKNOWLEDGMENT

This research has been financially supported by Galatasaray University Research Fund, with the project number 15.402.005.

REFERENCES

[1] IBM, 2012, What Is Big Data: Bring Big Data to the Enterprise. http://www-01.ibm.com/software/data/bigdata/what-is-big-data.html.

[2] Witten, I.H., Frank, E., 2011, Data Mining: Practical Machine Learning Tools and Techniques, Third Edition. San Francisco: Elsevier Inc.

[3] Hand, D., Mannila, H., Smyth, P., 2001, Principles of Data Mining. Cambridge: The MIT Press.

[4] Wu, X., Zhu, X., Wu, G., Ding, W., 2014, Data Mining with Big Data, IEEE Transactions on Knowledge and Data Engineering, 26(1), 97-107.

[5] Jain, A.K., Murty, M.N., Flynn, P.J., 1999, Data Clustering: A Review, ACM Computing Surveys, 31(3), 264-323.

[6] Çelebi, M.E., Kingravi, H.A., Vela, P.A., 2013, A comparative study of efficient initialization methods for the k-means clustering algorithm, Expert Systems with Applications, 40, 200-210.

[7] Selim, S.Z., Ismail, M.A., 1984, K-means-type algorithms: A generalized convergence theorem and characterization of local optimality, IEEE Transactions on Pattern Analysis and Machine Intelligence, 6(1), 81-87.

[8] Zhao, W., Ma, H., He, Q., 2009, Parallel K-Means Clustering Based on MapReduce, First International Conference, CloudCom, Beijing, 674-679.

[9] Grolinger, K., Hayes, M., Higashino, W.A., L'Heureux, A., Allison, D.S., Capretz, M.A.M., 2014, Challenges for MapReduce in Big Data, IEEE 10th World Congress on Services, Alaska, IEEE.

[10] Hu, H., Wen, Y., Chua, T., Li, X., 2014, Toward Scalable Systems for Big Data Analytics: A Technology Tutorial, IEEE Access, 2, 652-687.

[11] Taylor, R.C., 2010, An Overview of the Hadoop/MapReduce/HBase Framework and Its Current Applications in Bioinformatics, Washington: BioMed Central Ltd.

[12] Patel, A.B., Birla, M., Nair, U., 2012, Addressing Big Data Problem Using Hadoop and Map Reduce, 2012 Nirma University International Conference on Engineering, Ahmedabad.

[13] Fan, W., Bifet, A., 2012, Mining Big Data: Current Status, and Forecast to the Future, SIGKDD Explorations, 14(2), 1-5.

[14] Madan, A., Cebrian, M., Moturu, S., Farrahi, K., Pentland, A., 2012, Sensing the 'Health State' of a Community, Pervasive Computing, 11(4), 36-45.
