TELECOMMUNICATION SYSTEMS AND TECHNOLOGIES – Vol. I - Fundamentals of Communication Systems - Przemysław Dymarski and Sławomir Kula

FUNDAMENTALS OF COMMUNICATION SYSTEMS

Przemysław Dymarski and Sławomir Kula

Warsaw University of Technology, Poland

Keywords: Analog and digital transmission systems, modulation, communication channel, source coding, channel coding, channel capacity, multiplexing, synchronization, carrier synchronization, symbol synchronization, clock synchronization, frame synchronization, packet synchronization, bit synchronization, multimedia synchronization, network synchronization

Contents

1. Introduction
2. Sources of information and source coding
3. Communication channels, modulation, channel coding and synchronization
3.1. Channels and Introduced Distortions
3.2. Analog and Digital Modulations
3.3. Reception and channel coding
3.4. Synchronization
4. Performance evaluation
4.1. Criteria for Comparing Telecommunication Systems
4.2. Channel Capacity and its Impact on System Performance
5. Concluding remarks
Glossary
Bibliography
Biographical Sketches

Summary

A general presentation of communication systems is given, including their classification, basic functions, performance measures and limits. Generic schemes of the analog and digital systems are described and the advantages of digital transmission are discussed. Problems of analog-to-digital conversion, lossless and lossy compression and source coding are briefly outlined. Channel codes and modulations are described and performance measures of the transmission system are presented. The impact of limited channel capacity on system performance is discussed. Special attention is paid to the synchronization problems of signals and networks.

1. Introduction

Communication consists in transferring information in various forms (text, audio, video) from one place to another. This process needs at least three elements: an information source, a medium carrying the information to a remote point, and a sink collecting the transmitted information. We usually imagine that people are directly involved, at least as sources and addressees of the information, but nowadays this is not always the case: the equipment itself exchanges information, e.g. remote control data. In the simplest case a messenger may transmit the information, but usually some technical means are used for it. Therefore, a communication system may be defined as a set of facilities making communication possible by means of signals. The last qualification is necessary in order to exclude the delivery of material objects, e.g. letters. Post offices form a special kind of system, but a discussion of their activities is outside the scope of this text.

©Encyclopedia of Life Support Systems (EOLSS)

Signals representing the information may be of analog or digital form. Speech (represented by varying acoustic pressure or a continuously varying voltage) is an example of an analog signal; text (e.g. represented in ASCII) is a digital signal. Historically, the transmission of digital signals preceded analog transmission: Samuel Morse invented his telegraph in 1837, whereas Graham Bell proposed his telephone in 1875. In the same way, digital wireless transmission preceded the analog one: Guglielmo Marconi initiated wireless communication over the English Channel in 1899 using electromagnetic waves caused by sparks jumping between two conductors, whereas wireless transmission of analog signals appeared later, with the invention of the triode. Analog telephony and broadcasting then developed rapidly, but in the 1950s a comeback of digital transmission was noted. This process is now at its final stage: telephony is almost entirely digital, and audio and TV broadcasting are gradually evolving towards digital transmission. If the original signals appear in analog form, analog-to-digital (A/D) conversion and source coding are necessary, transforming these signals into a bit stream, i.e. a series of logical values, zeros and ones. Further comments on these techniques are given in Section 2.

Why is the transmission of digital signals preferred to the transmission of analog signals? First of all, because of its immunity to noise. The transmission medium (e.g. a cable) attenuates the signal and, in the case of analog transmission, regularly spaced amplifiers must be applied in order to keep the signal power above the noise level. In this way noise (e.g. thermal noise) introduced at the early stages is amplified together with the signal and accumulates. This accumulation makes long-distance analog transmission infeasible. In the case of digital transmission the repertoire of signals being transmitted is finite (in the simplest case there are only two elementary signals, representing the logical zero and one). Therefore, instead of amplifiers, regenerative repeaters are used, which do not simply amplify the signal (and noise), but rather recognize which signal is being transmitted. If this recognition is correct (i.e. errorless), the signal is regenerated in its original form, without any noise. This description is simplified: in digital transmission there are also factors causing problems over long distances, e.g. the difficulty of locating the beginning and the end of each elementary signal in the presence of noise (i.e. the problem of symbol synchronization).
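
The contrast between amplifiers and regenerative repeaters can be illustrated with a small simulation. This is only a sketch under stated assumptions that are not taken from the text: every section has unit net gain (the amplifier exactly compensates the attenuation), each section adds independent Gaussian noise, and the two elementary signals are the levels +1 and -1. The function names and parameter values are hypothetical.

```python
import random


def analog_chain(level, hops, noise_std, rng):
    """Amplifier chain: every section adds noise that is never removed."""
    for _ in range(hops):
        level += rng.gauss(0.0, noise_std)
    return level


def digital_chain(bit, hops, noise_std, rng):
    """Regenerative repeaters: each one decides which of the two elementary
    signals (+1 for logical one, -1 for logical zero) was sent and re-emits
    a clean copy of it."""
    level = 1.0 if bit else -1.0
    for _ in range(hops):
        received = level + rng.gauss(0.0, noise_std)
        level = 1.0 if received > 0.0 else -1.0
    return 1 if level > 0.0 else 0


def compare(num_bits=1000, hops=50, noise_std=0.15, seed=1):
    """Return (mean squared error of the analog chain, bit errors of the digital chain)."""
    rng = random.Random(seed)
    bits = [rng.randint(0, 1) for _ in range(num_bits)]
    analog_mse = 0.0
    digital_errors = 0
    for bit in bits:
        sent = 1.0 if bit else -1.0
        analog_mse += (analog_chain(sent, hops, noise_std, rng) - sent) ** 2
        digital_errors += digital_chain(bit, hops, noise_std, rng) != bit
    return analog_mse / num_bits, digital_errors
```

In this model the noise power at the end of the analog chain grows in proportion to the number of sections, while the digital chain stays error-free as long as the noise in any single section is too small to cause a wrong decision.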

There are other advantages of digital transmission. Fiber optics, yielding low-cost and high-capacity communication links, is better suited to digital than to analog transmission (e.g. it is easier to detect the presence of light than to measure its intensity properly). Digital data assure a high degree of security thanks to cryptographic techniques, whereas analog scrambling techniques (e.g. applied to the speech signal) are quite easy to break.

Communication systems use many transmission media, but each medium is usually shared by many signals carrying different information at the same time. This is possible thanks to multiplexing techniques. They were introduced in 1874 by Emile Baudot, who installed a rotary switch, synchronized by an electrical signal, that made it possible to connect 6 telegraph operators to the same line. The transmission line could carry up to 90 bits per second in his system. Today this approach is called Time Division Multiplexing (TDM), because each user obtains distinct time slots in which to transmit information, and may use the same band (frequency range) as the other users. Though the concept of TDM dates from the 19th century, its worldwide career started much later. In the early 1960s in the USA, the TDM technique was applied in the 24-channel T1 carrier system. A few years later this technique was employed in the European PCM-32 transmission system. Presently this technique is widely used in SDH transmission systems, mobile communication systems and many others.
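
The time-slot idea can be sketched in a few lines. The example below is a simplified illustration, assuming one bit per user per frame and streams of equal length; it omits the framing and synchronization bits that real TDM systems add, and the function names are hypothetical.

```python
def tdm_multiplex(streams):
    """Interleave equal-length bit streams: each frame carries one bit per user."""
    length = len(streams[0])
    if any(len(s) != length for s in streams):
        raise ValueError("all streams must have the same length")
    return [bit for frame in zip(*streams) for bit in frame]


def tdm_demultiplex(composite, num_users):
    """Give every user the bits found in its own time slot of each frame."""
    return [composite[slot::num_users] for slot in range(num_users)]
```

With three users sending `[1, 1]`, `[0, 0]` and `[1, 0]`, the composite stream consists of two frames of three slots each, and slicing the composite stream with a stride equal to the number of users recovers each original stream.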

On the other hand, Frequency Division Multiplexing (FDM) consists in assigning different frequency bands to signals transmitted at the same time. An example is commercial radio, where listeners must tune in to select the proper band. In the mid-1920s, the FDM technique found a practical application in a transmission system operated on a twisted-pair line; afterwards it was implemented in wireless transmission, but commercial success came with the use of FDM to carry multiple signals over coaxial cable. Presently, the FDM technique is used, for example, in mobile telephone systems, in subscriber line systems, and in cable TV.

In WDM (Wavelength Division Multiplexing), the FDM concept is applied to transmission over optical fibers. Each WDM optical channel operates at a different optical wavelength $\lambda_i$. Because the wavelength $\lambda_i$ and the frequency $f_i$ of any wave are related by the formula

$$\lambda_i = \frac{\nu}{f_i}$$

where $\nu$ is the propagation speed of the wave, the WDM technique could formally be considered as FDM, but WDM is applied in conjunction with fiber transmission, while FDM found its application either in wireless transmission systems or in metallic cables. Fiber optic transmission makes use of three so-called optical windows: the first around 850 nm, the second around 1300 nm and the third around 1550 nm. Today the third window is commonly used in long-distance transmission. Light emitted by a diode or laser is a narrowband signal. The width of the light signal spectrum depends on the emitter and varies from << 1 nm for an SLM laser, through 3 nm for an MLM laser, to > 100 nm for an LED. For this reason, if we want to transmit more than one light wave over the same fiber, neighboring wavelengths have to be separated by an unused band to prevent interference. The most common spacing is about 100 GHz. In the case of so-called DWDM (Dense Wavelength Division Multiplexing), smaller spacings of 50 GHz, 25 GHz, and even 12.5 GHz are used. Presently several hundred wavelengths can be transmitted simultaneously over one fiber, so the maximum total bit rate of a DWDM transmission system reaches several Tbps.
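
The relation between wavelength and frequency also shows what a channel spacing quoted in GHz means in nanometres. The sketch below assumes that the quoted wavelengths are vacuum wavelengths, so that the propagation speed equals the speed of light in vacuum, and uses the first-order approximation obtained by differentiating the formula above (spacing in wavelength ≈ λ² · spacing in frequency / ν). The function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, assumed propagation speed (vacuum)


def wavelength_to_frequency(wavelength_m, speed=SPEED_OF_LIGHT):
    """f = v / lambda, the relation between wavelength and frequency."""
    return speed / wavelength_m


def spacing_hz_to_nm(center_wavelength_nm, spacing_hz, speed=SPEED_OF_LIGHT):
    """First-order conversion of a frequency spacing to a wavelength spacing:
    delta_lambda ~ lambda**2 * delta_f / v (valid when delta_f << f)."""
    wavelength_m = center_wavelength_nm * 1e-9
    return wavelength_m ** 2 * spacing_hz / speed * 1e9
```

Under these assumptions a 1550 nm carrier corresponds to roughly 193.4 THz, and a 100 GHz spacing in the third window corresponds to about 0.8 nm, which is why only narrow-spectrum lasers are suitable as DWDM emitters.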

In Code Division Multiplexing (CDM), signals appear at the same time and use the same frequency band. Nevertheless it is possible to separate them, because each signal is orthogonal to the others: a projection of the received sum of signals onto the proper direction in signal space yields the desired information and sets the unwanted information to zero. The CDM technique is used as an access technology, namely CDMA (Code Division Multiple Access), in the UMTS (Universal Mobile Telecommunications System) standard for third-generation mobile communication. Another important application of CDMA is GPS (Global Positioning System).
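
The projection argument can be made concrete with a toy example. The sketch below uses length-4 Walsh codes as the orthogonal signals; this choice, the noiseless and perfectly synchronized channel, and all names are assumptions made for illustration and do not describe the codes actually used in UMTS or GPS.

```python
# Four mutually orthogonal chip sequences (rows of a 4x4 Hadamard matrix).
WALSH_CODES = [
    [1, 1, 1, 1],
    [1, -1, 1, -1],
    [1, 1, -1, -1],
    [1, -1, -1, 1],
]


def spread(bits, code):
    """Map each bit to +1/-1 and multiply it by the user's chip sequence."""
    chips = []
    for bit in bits:
        symbol = 1 if bit else -1
        chips.extend(symbol * chip for chip in code)
    return chips


def combine(signals):
    """The channel adds the signals of all users, chip by chip."""
    return [sum(chips) for chips in zip(*signals)]


def project(received, code):
    """Project each block of the received sum onto one code (normalized correlation)."""
    n = len(code)
    return [
        sum(r * c for r, c in zip(received[i:i + n], code)) / n
        for i in range(0, len(received), n)
    ]


def despread(received, code):
    """Recover one user's bits from the sign of the projection."""
    return [1 if value > 0 else 0 for value in project(received, code)]
```

Because the codes are orthogonal, projecting the sum onto one user's code returns exactly +1 or -1 for that user's symbols and cancels the contributions of the others; projecting onto a code that no user transmits with returns zero.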

The classification of communication systems depends on the criteria used. For transmission of analog signals without A/D conversion, analog communication systems are applied. For transmission of a bit stream (which may represent original digital information, e.g. an ASCII string, or an analog signal after its conversion to digital form), digital communication systems are used. The basic blocks of an analog communication system are given in Figure 1.

Figure 1: The analog communication system

The basic function of this system consists in adapting the properties of the analog signal carrying information (called the modulating signal) to the properties of the transmission channel (medium). The modulating signals (speech, audio, TV) have a baseband character: they occupy the frequency band from (almost) 0 Hz to the maximum frequency (i.e. bandwidth) $f_M$. On the other hand, the transmission channel has a passband character; for example, commercial radio stations use a band of bandwidth $B$, centered at the carrier frequency $f_0$. The analog modulator, using one of the known modulation techniques (AM, amplitude modulation; FM, frequency modulation; PM, phase modulation), shifts the spectrum of the modulating signal towards high frequencies, thus making it possible to pass the modulated signal through the channel. Generally the bandwidth is increased in this process (i.e. $B > f_M$), but this has an advantage: the immunity to channel noise also increases (this paradox is discussed in Section 4).
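
As a worked illustration of the spectrum shift, consider the simplest case, which is an assumption made here and not an example from the text: double-sideband amplitude modulation of a single tone at the maximum frequency $f_M$ by a carrier at $f_0$, both with unit amplitude. The product-to-sum identity gives:

```latex
\cos(2\pi f_M t)\,\cos(2\pi f_0 t)
  = \tfrac{1}{2}\cos\bigl(2\pi (f_0 - f_M)\,t\bigr)
  + \tfrac{1}{2}\cos\bigl(2\pi (f_0 + f_M)\,t\bigr)
```

The tone reappears as two components at $f_0 - f_M$ and $f_0 + f_M$, so in this case the modulated signal occupies a band of width $B = 2 f_M$ centered at $f_0$, consistent with $B > f_M$.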

Several signals may be modulated using different channel subbands, thus making use of

the FDM technique. At the receiver’s side, demultiplexing is performed, using bandpass

filters. Then a demodulator, performing an inverse operation with respect to a

modulator, yields a demodulated signal. Due to distortions introduced in the channel

(e.g. an additive noise) this signal is not an exact copy of the modulating signal.

In Figure 1 the network aspect of analog communication is omitted. The role of the network consists mainly in setting up a direct link between a source and a sink of the signal. In the case of analog telephony, in a telephone exchange (central office) cables are connected by means of switches and subbands are assigned in a frequency division multiplexer, thus yielding a circuit that remains unchanged during a call. This switching technique is called circuit switching. The switching techniques are described in Analog and Digital Switching and the problems concerning networks are discussed in Fundamentals of Telecommunication Networks.

The digital communication system is presented in Figure 2.

Figure 2: The digital communication system

If the input information appears in the form of an analog signal, it must be converted to a bit stream using a proper A/D converter. In this process some part of the information is lost: there exists an infinite number of analog signals of finite duration, but only a finite number of bit streams of finite length. The A/D conversion is sometimes performed at the moment of acquisition of the analog information, e.g. in a digital video camera. Producers of digital audio/video equipment try to make this information loss perceptually insignificant, so they deliver a bit stream of a high rate (expressed in bits/s).

The bit rate may be decreased using compression algorithms based on source coding theory. Lossless compression yields a bit stream of reduced bit rate which contains the same information as the initial bit stream. This is possible because of the redundancy of the information source. Samuel Morse exploited it in his alphabet, proposed in the 1830s, coding frequently appearing letters in short code words (e.g. A = dot and dash, E = dot) and rarely appearing letters in long code words (e.g. Q = dash, dash, dot and dash). Further reduction of the bit rate may be accomplished using lossy compression. In this process some information is lost and the original bit stream cannot be reproduced without errors. This is not acceptable if the original bit stream represents e.g. text data, but in the case of speech or a video sequence some difference between the input and the output signal (i.e. the quantization error) may be accepted. This is discussed in Section 2. At the source coding stage an encryption process may be applied, yielding a secure bit stream, which may be decoded using a secret key at the decryption stage.
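
The principle of giving short code words to frequent symbols can be tried out with Huffman's algorithm. Note that this is a swapped-in technique chosen for illustration: the text mentions only the Morse alphabet, not Huffman coding, and the function names below are hypothetical.

```python
import heapq
from collections import Counter


def huffman_code(text):
    """Build a prefix-free code in which frequent symbols get short code words."""
    freq = Counter(text)
    if len(freq) == 1:  # degenerate source with a single symbol
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, unique tie-breaker, {symbol: partial code word}).
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(sorted(freq.items()))]
    heapq.heapify(heap)
    tie_breaker = len(heap)
    while len(heap) > 1:
        w0, _, low = heapq.heappop(heap)   # two least probable groups
        w1, _, high = heapq.heappop(heap)
        merged = {sym: "0" + code for sym, code in low.items()}
        merged.update({sym: "1" + code for sym, code in high.items()})
        heapq.heappush(heap, (w0 + w1, tie_breaker, merged))
        tie_breaker += 1
    return heap[0][2]


def encode(text, code):
    """Concatenate the code words of all symbols."""
    return "".join(code[symbol] for symbol in text)


def decode(bits, code):
    """Read bits until a complete code word is recognized (the code is prefix-free)."""
    inverse = {word: symbol for symbol, word in code.items()}
    decoded, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:
            decoded.append(inverse[current])
            current = ""
    return "".join(decoded)
```

For a message in which E is much more frequent than Q, the resulting code word for E is shorter than the one for Q, the encoded stream is shorter than a fixed-length encoding, and decoding restores the message exactly, which is what makes the compression lossless.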

At the channel coding stage extra bits are appended to the bit stream in order to detect and correct transmission errors. In this way redundancy is increased, but the bit error rate (BER) is decreased (or, at least, the system is informed about the error and may react appropriately, e.g. it may demand a repetition of an erroneous codeword). The most elementary channel coder adds a parity control bit to each codeword, thus making it possible to detect single errors within the codeword.
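
A minimal sketch of the parity control bit follows, assuming even parity (the text does not say whether even or odd parity is meant) and hypothetical function names.

```python
def add_parity(bits):
    """Append one bit so that the codeword contains an even number of ones."""
    return bits + [sum(bits) % 2]


def check_parity(codeword):
    """Return True if the codeword still has an even number of ones."""
    return sum(codeword) % 2 == 0
```

A single flipped bit makes the number of ones odd and is detected. Two flipped bits restore even parity and pass unnoticed, which is why this coder detects single errors only and cannot correct any.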

At this stage it is possible to mix bit streams coming from different sources using the TDM technique, thus forming a composite bit stream. Until now a set of logical values (zero and one) has been processed, but the channel transmits waveforms, which must be adapted to the properties of the transmission medium. For example, an optical fiber accepts light pulses, while radio transmission uses signals based on a sinusoidal carrier. Thus a process of modulation is necessary, assigning elementary signals to the logical values or to strings of them. The simplest binary modulation assigns a signal $s_0(t)$ to the logical zero and $s_1(t)$ to the logical one. The waveforms $s_0(t)$, $s_1(t)$ must be adapted to the channel: their spectra must fit the transfer function of the channel. In the case of baseband transmission the spectra of the transmitted signals occupy the low-frequency band and the corresponding modulation is called baseband modulation, line coding or transmission coding. In the case of M-ary modulation there are $M$ different signals (called symbols), each one carrying $\log_2 M$ binary logical values. Modulation and channel coding may be linked together, yielding a very efficient way of transmission: trellis coded modulation (TCM).
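
The grouping of bits into M-ary symbols can be sketched as follows. The example only maps groups of bits to symbol indices and back; it assumes M is a power of two and a natural binary mapping, and it does not generate the waveforms themselves. All names are hypothetical.

```python
def bits_per_symbol(M):
    """Return log2(M) for an alphabet of M symbols (M must be a power of two)."""
    if M < 2 or M & (M - 1):
        raise ValueError("M must be a power of two, at least 2")
    return M.bit_length() - 1


def bits_to_symbols(bits, M):
    """Group the bit stream into blocks of log2(M) bits; each block selects one symbol."""
    k = bits_per_symbol(M)
    if len(bits) % k:
        raise ValueError("bit stream length must be a multiple of log2(M)")
    return [
        int("".join(str(bit) for bit in bits[i:i + k]), 2)
        for i in range(0, len(bits), k)
    ]


def symbols_to_bits(symbols, M):
    """Inverse mapping performed at the receiver after symbol recognition."""
    k = bits_per_symbol(M)
    return [int(digit) for symbol in symbols for digit in format(symbol, f"0{k}b")]
```

With M = 4, eight bits are carried by four symbols, so the symbol rate is half the bit rate; with M = 16 each symbol would carry four bits.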

The modulator may mix many bit streams using the FDM or CDM techniques. CDM requires orthogonalization of the signals coming from one source with respect to the signals coming from the other sources. This process increases the bandwidth, so it is called a spread spectrum technique. It is advantageous from the point of view of immunity to distortions and interference introduced in the channel.

Digital networks have long used the circuit switching technique, but another technique has since appeared: packet switching. The bit stream is cut into packets, usually of the same length, and an address is attached to each packet. The network directs packets to the destination point using the available means. No physical link is created between the sender and the addressee; packets of the same bit stream may take different routes to the sink.
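
A toy version of this process is sketched below. The sequence number stored in each packet is an assumption added here so that the receiver can restore the order of packets that arrived over different routes; the text mentions only the address. The dictionary layout and function names are hypothetical.

```python
def packetize(bits, payload_len, address):
    """Cut the bit stream into packets of (at most) payload_len bits each."""
    return [
        {"address": address, "seq": seq, "payload": bits[start:start + payload_len]}
        for seq, start in enumerate(range(0, len(bits), payload_len))
    ]


def reassemble(packets):
    """Restore the bit stream at the sink, whatever order the packets arrived in."""
    ordered = sorted(packets, key=lambda packet: packet["seq"])
    return [bit for packet in ordered for bit in packet["payload"]]
```

Reversing or shuffling the list of packets before calling `reassemble` models packets taking different routes; the original bit stream is still recovered as long as no packet is lost.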

At the receiver side, the transmitted elementary signals (symbols) must be recognized with a minimum number of errors. This is not possible without synchronization at the symbol level: the decision instants must be aligned with the time slots assigned to the elementary signals (Section 3.4). Proper recognition is difficult in the presence of channel noise and other distortions (fading, interference, etc.). Some packets may be lost or delayed in the network, so they cannot be used in the reconstruction of the original signal.

The received symbols are then transformed to a bit stream, the error detection and

correction mechanism is applied, then, after decryption (if necessary) and decoding of

the source code the output bit stream is directed to a digital sink. It may be used to

reproduce (with finite precision) the analog signal carrying the information being

transmitted.

A more detailed description and comparison of the analog and digital transmission systems may be found in Analog and Digital Transmission.

Different criteria may be used for classification of communication systems. There are

systems enabling duplex communication (in two directions simultaneously, e.g.

telephony), half-duplex (signals flow in one direction at a time) and simplex (signals

flow in one direction, e.g. TV, commercial radio broadcast).

If the transmission medium is used as the criterion, there are systems using guided transmission (e.g. open cable, twisted pair, coaxial cable, optical fiber) and systems using wireless (terrestrial or satellite) transmission. Modern systems use several transmission media at a time, e.g. cellular systems use optical fibers and wireless transmission. Systems may also be classified according to the frequency range used for transmission: baseband, radio frequency, microwave, infrared and optical systems. According to the bandwidth there are narrowband and wideband transmission systems. Bandwidth depends on the transmission medium: open cables offer much smaller bandwidth than e.g. coaxial cables.

Taking into consideration synchronization aspects, we have asynchronous systems, plesiochronous systems and synchronous systems (see Section 3.4).

Cellular communication systems are classified according to their technical parameters and the technology applied. Thus there were the first generation (analog) systems; the second generation (2G) systems like GSM, offering basically digital voice communication links; the third generation (3G) systems like UMTS, also handling interactive multimedia such as teleconferencing and Internet access at rates up to 2 Mb/s; and the future 4G systems, yielding high data rates up to 20 Mb/s. See Wireless Terrestrial Communications: Cellular Telephony for more details.

2. Sources of Information and Source Coding

Modern digital communication systems process many kinds of signals carrying information. Some of them appear in analog form and must be converted to a bit stream. In the case of wideband systems using optical fibers the resulting bit rate (number of bits per second) is not very critical. In the case of wireless transmission, e.g. cellular telephony, the bandwidth is limited and the bit rate must be reduced as much as possible. Table 1 gives some examples of input signals, with the available bit rates of the compressed bit stream. Note the wide range of bit rates, indicating that there is always a tradeoff between signal quality and bit rate.

Signal carrying information          Bit rate (after compression)
-----------------------------------  ----------------------------
telephonic speech (300-3400 Hz)      0.8 – 64 kb/s
wideband speech (< 7 kHz)            16 – 64 kb/s
wideband audio (< 22 kHz)            32 – 400 kb/s (mono)
videophone                           16 – 64 kb/s
videostreaming (e.g. for UMTS)       50 – 150 kb/s
TV                                   1.5 – 15 Mb/s

Table 1: Examples of the input signals and their bit rates

There are two processes involved in A/D conversion: discretization in the time domain (sampling) and in the amplitude domain (quantization). The sampling process may be, under certain conditions, fully reversible, i.e. no information is lost. According to the widely known Shannon sampling theorem, any signal $x(t)$ of bandwidth $f_M$ may be sampled at a sampling frequency greater than $2 f_M$ without any loss of information. This theorem stems from the Whittaker-Shannon interpolation formula, expanding the band-limited baseband signal in the orthogonal basis of sinc functions:

$$x(t) = \sum_n x_n \, \frac{\sin\bigl(\pi (t - nT)/T\bigr)}{\pi (t - nT)/T}$$

where $x_n = x(nT)$ and $T$ is the sampling interval, the inverse of the sampling frequency.

R

AP L

The above formula states that the continuous analog signal x(t ) may be reconstructed,

with an infinite precision, from its samples. The mechanism of this reconstruction is

H O

presented in Figure 3.

C E

E O–

PL C

M ES

SA N

U

Figure 3: Reconstruction of the baseband analog signal from its samples
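
The interpolation formula can be checked numerically. The sketch below evaluates a truncated version of the sum, using a finite record of samples instead of the infinite series, so the reconstruction between sampling instants is only approximate. The test signal (a 1 Hz tone sampled at 8 Hz) and all names are assumptions made for illustration.

```python
import math


def sinc(u):
    """sin(pi*u) / (pi*u), with the limit value 1 at u = 0."""
    return 1.0 if u == 0.0 else math.sin(math.pi * u) / (math.pi * u)


def reconstruct(samples, T, t):
    """Truncated interpolation sum: x(t) ~ sum over n of x_n * sinc((t - n*T) / T),
    where samples[n] = x(n*T) and T is the sampling interval."""
    return sum(x_n * sinc((t - n * T) / T) for n, x_n in enumerate(samples))


# Example: a 1 Hz tone sampled at 8 Hz, well above twice its bandwidth.
T = 0.125
tone_samples = [math.sin(2.0 * math.pi * n * T) for n in range(400)]
```

At a sampling instant every sinc term except one vanishes, so the sample itself is returned. Halfway between two samples in the middle of the record, the truncated sum approaches the true value of the tone, with a small residual error caused by the missing terms outside the record.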

The quantization process is generally not reversible. The difference between the input signal samples ($x_n$) and the quantized samples ($x_n^*$) is called the quantization error ($e_n = x_n - x_n^*$). The quantizer is designed to minimize the quantization error power ($\sigma_e^2 = E(e_n^2)$, where $E$ denotes the expected value) at the demanded resolution of $b$ bits per sample. The minimum of the error power yields the maximum of the signal-to-noise ratio $\mathrm{SNR} = E(x_n^2) / E(e_n^2)$.
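
These definitions can be tried out on a simple quantizer. The sketch below assumes a uniform (equally spaced) quantizer with $2^b$ levels on the range from -1 to 1 and estimates the SNR from a set of samples; this particular quantizer and the function names are illustrative choices, not the method of the text.

```python
import math
import random


def uniform_quantize(x, b, x_max=1.0):
    """Replace x by the nearest of 2**b equally spaced levels in [-x_max, x_max]."""
    levels = 2 ** b
    step = 2.0 * x_max / levels
    index = int(math.floor((x + x_max) / step))
    index = min(levels - 1, max(0, index))  # clip samples at or beyond the range
    return -x_max + (index + 0.5) * step


def snr_db(samples, b):
    """Estimate SNR = E(x^2) / E(e^2) in decibels, with e = x - quantized x."""
    signal_power = sum(x * x for x in samples) / len(samples)
    error_power = sum((x - uniform_quantize(x, b)) ** 2 for x in samples) / len(samples)
    return 10.0 * math.log10(signal_power / error_power)
```

For samples uniformly distributed over the quantizer range, the error power is the step squared divided by 12, so the SNR comes out close to 6 dB per bit of resolution: about 48 dB at b = 8, and about 6 dB more for every additional bit.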


In the simplest case, the scalar quantizer, every signal sample is represented by one of $L$ quantization levels (the nearest one, in order to reduce the quantization error), and the coded number (index) of the chosen level is transmitted to the receiver. Different techniques are used to increase the SNR:

• Quantization levels are nonuniformly spaced in order to reduce the quantization error power for signals of small amplitude (the so-called logarithmic quantizer used in the most popular pulse code modulation technique applied in digital telephony).
• Quantization levels are expanded or “shrunk” in order to adapt the quantizer to the varying amplitude of the signal being quantized (the adaptive quantizer).
• Each sample is predicted using the previous samples; the quantizer processes only the unpredictable part of the sample, and the predictable part is reconstructed at the receiver side using the same predictor as at the transmitter side (this technique is called differential coding or adaptive differential pulse code modulation).
• Adjacent samples of an audio signal or pixels of a picture are grouped into N-dimensional vectors; each vector is then represented by one of the L codebook vectors. This technique is called vector quantization (Figure 4).
• The codebook of the vector quantizer is filtered using an adaptive predictive filter in order to adapt the codebook waveforms to the varying properties of the speech signal (this is called Code Excited Linear Prediction and is widely used in cellular telephony).
• Blocks of audio signal samples or adjacent pixels of a picture are transformed to the frequency domain and then quantized. This is called transform coding and makes it possible to exploit human perception in order to mask the quantization error.

Figure 4: Vector quantization in N=2-dimensional space using a codebook of L=12 vectors
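
Of the techniques listed above, vector quantization is the easiest to sketch in code. The example below assumes a fixed, hand-picked codebook of L = 4 vectors in N = 2 dimensions and the squared Euclidean distance as the error measure; how a good codebook is designed is not covered, and all names are hypothetical.

```python
def nearest_codevector(vector, codebook):
    """Return the index of the codebook vector closest to the input vector."""
    def squared_distance(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    return min(range(len(codebook)), key=lambda i: squared_distance(vector, codebook[i]))


def vq_encode(samples, codebook):
    """Group adjacent samples into N-dimensional vectors and send one index per vector."""
    n = len(codebook[0])
    return [
        nearest_codevector(samples[i:i + n], codebook)
        for i in range(0, len(samples) - n + 1, n)
    ]


def vq_decode(indices, codebook):
    """The receiver looks the vectors up in the same codebook."""
    return [value for index in indices for value in codebook[index]]
```

With the codebook `[(-1, -1), (-1, 1), (1, -1), (1, 1)]`, the samples `[0.9, 1.2, -0.8, 0.7]` are sent as the two indices 3 and 1, i.e. 2 bits per vector or 1 bit per sample, and decoded as `[1, 1, -1, 1]`.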

The success of the above-mentioned techniques depends not only on the quantizer but also on the signal itself. According to Shannon's source coding theory, for each signal and resolution $b$ there exists a lower bound on the coding distortion (e.g. on the quantization error power). It is given by the Rate Distortion Function (RDF). For an uncorrelated Gaussian signal the RDF is given by the formula:

$$\sigma_{e,\min}^2 = \sigma_x^2 \, 2^{-2b}$$
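
As a short worked step, the bound for the uncorrelated Gaussian signal can be rewritten as a maximum signal-to-noise ratio, assuming a zero-mean signal so that $E(x_n^2) = \sigma_x^2$:

```latex
\mathrm{SNR}_{\max} = \frac{\sigma_x^2}{\sigma_{e,\min}^2} = 2^{2b},
\qquad
10\log_{10}\mathrm{SNR}_{\max} = 20\,b\,\log_{10} 2 \approx 6.02\,b \ \text{dB}
```

For example, at $b = 1$ bit per sample the error power cannot fall below $\sigma_x^2 / 4$ (an SNR of about 6 dB), and each additional bit of resolution raises the attainable SNR by about 6 dB.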

For a correlated signal lower distortions may be obtained (Figure 5), because the coding algorithm may use the correlations, e.g. for prediction of consecutive samples. The vector quantizer also uses correlations and other relations between the $N$ samples forming a vector. At a given resolution, e.g. $b = 1$ bit per sample, a scalar quantizer having $L = 2^b$ quantization levels may be used, yielding poor performance (the point $N = 1$ in Figure 5). However, at the same resolution an $N$-dimensional vector quantizer may be used, having $L = 2^{bN}$ codebook vectors. By exploiting signal correlations and the other properties of the $N$-dimensional probability density function of the signal samples, distortion is reduced and gradually approaches (as $N$ tends to infinity) the RDF limit. Thus the vector quantizer is an asymptotically optimal source coder. Processing of high-dimensional vectors causes a complexity problem: the number of codebook vectors increases rapidly. Therefore other techniques are used together with vector quantization (e.g. prediction and vector quantization form the basis of code excited linear predictive speech coders).

Figure 5: The RDF of an uncorrelated and a correlated Gaussian signal, and the distortion obtained with scalar and N-dimensional vector quantization of this correlated signal at 1 bit per sample

TO ACCESS ALL THE 31 PAGES OF THIS CHAPTER,

Visit: http://www.eolss.net/Eolss-sampleAllChapter.aspx

Bibliography

Bregni S. (2002). Synchronization of Digital Telecommunications Networks. Wiley. [Overview of synchronization of networks and systems]

Haykin S. (2001). Communication Systems. Wiley. [A coherent system approach to the analysis of telecommunications]

Muller N.J. (2002). Desktop Encyclopedia of Telecommunications. McGraw-Hill. [Explanation of basic terms, addressed to the nontechnical professionals]

Smith R.D. (2003). Digital Transmission Systems. Kluwer. [Presentation of modern transmission systems]

Wozencraft J.M., Jacobs I.M. (1965, 1990). Principles of Communication Engineering. Wiley. [Theoretical background to transmission, coding, channel capacity etc.]

Ziemer R.E., Peterson R.L. (2000). Introduction to Digital Communication. Prentice-Hall. [Concepts of digital modulation, coding, spread spectrum etc.]

Biographical Sketches

Przemysław Dymarski received the M.Sc and Ph.D degrees in electrical engineering from the Wrocław University of Technology, Wrocław, Poland, in 1974 and 1983, respectively, and the D.Sc degree in telecommunications from the Faculty of Electronics and Information Technology of the Warsaw University of Technology, Warsaw, Poland, in 2004. Now he is with the Institute of Telecommunications, Warsaw University of Technology. His research includes various aspects of digital signal processing, particularly speech and audio compression for telecommunications and multimedia, text-to-speech synthesis and audio watermarking. Results of his research have been published in the IEEE Trans. on Speech and Audio Processing, IEEE Trans. on Audio, Speech and Language Processing, Annales de Télécommunications, and in the proceedings of international conferences like ICASSP, EUROSPEECH, EUSIPCO, etc.

Sławomir Kula received the M.Sc degree in electronic engineering from the Warsaw University of Technology, Poland, in 1977 and the Ph.D. degree in telecommunications from the Faculty of Electronics and Information Technology of the Warsaw University of Technology in 1982. Now he is a Vice Dean of that Faculty. His research includes all aspects of transmission systems and core, metropolitan and access networks, and signal (speech and video) processing. He gave lectures in the following countries: Algeria, Great Britain, France, Korea, Mexico and Poland. He was a senior expert of ITU-T, and he is an expert of PCA. He is the author of the book: Transmission Systems, edited by WKŁ (Warsaw, 2004) and coeditor and coauthor of the book: Synchronous Transmission Systems and Networks, also edited by WKŁ (Warsaw, 1996). Dr Kula is a member of IEEE ComSoc, a member of Communication Engineering Society (SIT) and the President of the SIT at Warsaw University of Technology.


- Data Communication CompiledDocument83 pagesData Communication CompiledlalithaNo ratings yet

- A Review of DWDMDocument14 pagesA Review of DWDMapi-3833108No ratings yet

- Data Communication and NetworkingDocument15 pagesData Communication and NetworkingDonaBarcibalNo ratings yet

- Sec. 3.2Document29 pagesSec. 3.2Saber HussainiNo ratings yet

- Data Communications - A.K MhazoDocument158 pagesData Communications - A.K MhazoGloria Matope100% (1)

- Name: Syeda Kashaf Zehra BB-28285 Data Computer Communications and Networking Cs371 Section A "Paper A"Document7 pagesName: Syeda Kashaf Zehra BB-28285 Data Computer Communications and Networking Cs371 Section A "Paper A"Techrotics TechnologiesNo ratings yet

- Chapter One: Data Communication BasicsDocument46 pagesChapter One: Data Communication BasicsWiki EthiopiaNo ratings yet

- Chapter 1: IntroductionDocument42 pagesChapter 1: IntroductionmanushaNo ratings yet

- How Fiber Optics WorksDocument4 pagesHow Fiber Optics Worksxyz156No ratings yet

- Qustion 2Document11 pagesQustion 2Yohannis DanielNo ratings yet

- Switching and Intelligent Networks Course Handout Chapter1Document6 pagesSwitching and Intelligent Networks Course Handout Chapter1Kondapalli Lathief100% (1)

- Basic PDHDocument14 pagesBasic PDHFauzan Ashraf MushaimiNo ratings yet

- Introduction to Digital Communication SystemsDocument10 pagesIntroduction to Digital Communication SystemsJanine NaluzNo ratings yet

- 1-2nd Year Electrical Comm.2023Document24 pages1-2nd Year Electrical Comm.2023MOSTAFA TEAIMANo ratings yet

- DCN Chapter 1 - Part 1Document45 pagesDCN Chapter 1 - Part 1Esta AmeNo ratings yet

- A Project Report On "Pilot Based OFDM Channel Estimation"Document57 pagesA Project Report On "Pilot Based OFDM Channel Estimation"Omkar ChogaleNo ratings yet

- Rahu FinalDocument14 pagesRahu FinalkirankumarikanchanNo ratings yet

- Introduction To Communication SystemDocument15 pagesIntroduction To Communication SystemLara Jane ReyesNo ratings yet

- Cse Notes PDFDocument77 pagesCse Notes PDFAshutosh AcharyaNo ratings yet

- Unit 1: Introduction To Communication Systems Historical Notes-Communication SystemsDocument55 pagesUnit 1: Introduction To Communication Systems Historical Notes-Communication Systemsbad is good tatyaNo ratings yet

- A Seminar On: Wireless & GSM ConceptsDocument34 pagesA Seminar On: Wireless & GSM Conceptsrahularora218No ratings yet

- Unit 3 - Data Transmission and Networking MediaDocument58 pagesUnit 3 - Data Transmission and Networking MediaAzhaniNo ratings yet

- DC 1Document13 pagesDC 1kaligNo ratings yet

- Propagacion en Comunicaciones MovilesDocument24 pagesPropagacion en Comunicaciones MovilesJuan Gerardo HernandezNo ratings yet

- Data Comm and Comp Networking Lecture NoteDocument35 pagesData Comm and Comp Networking Lecture NoteMikiyas GetasewNo ratings yet

- Analysis of Chromatic Scattering in 2 8 Channel at 4 10 Gbps at Various DistancesDocument5 pagesAnalysis of Chromatic Scattering in 2 8 Channel at 4 10 Gbps at Various DistanceswahbaabassNo ratings yet

- Computer Network (503) - Unit IIDocument29 pagesComputer Network (503) - Unit IIKashishNo ratings yet

- CH 1 Optical Fiber Introduction - 2Document18 pagesCH 1 Optical Fiber Introduction - 2Krishna Prasad PheluNo ratings yet

- Five components of data communication systemDocument4 pagesFive components of data communication systemTanishaSharmaNo ratings yet

- Data Transmission 52353Document5 pagesData Transmission 52353Siva GuruNo ratings yet

- Digital Communication Report on Wireless CommunicationDocument22 pagesDigital Communication Report on Wireless CommunicationShrishti HoreNo ratings yet

- What Does Communication (Or Telecommunication) Mean?Document5 pagesWhat Does Communication (Or Telecommunication) Mean?tasniNo ratings yet

- Lecture 1 MDocument24 pagesLecture 1 MIrfan FikriNo ratings yet

- Digital Transmission TechniquesDocument85 pagesDigital Transmission TechniquesTafadzwa MurwiraNo ratings yet

- Distinction Between Related SubjectsDocument5 pagesDistinction Between Related SubjectsSiva GuruNo ratings yet

- Mobile Computing Unit 1Document7 pagesMobile Computing Unit 1smart IndiaNo ratings yet

- ACS - Lab ManualDocument15 pagesACS - Lab ManualGautam SonkerNo ratings yet

- PropertyDocument3 pagesPropertyWadhah IsmaeelNo ratings yet

- RF Signals and Systems 1. Introduction. Communication SystemsDocument13 pagesRF Signals and Systems 1. Introduction. Communication SystemsWadhah IsmaeelNo ratings yet

- Introduction to Key Communication ModelsDocument18 pagesIntroduction to Key Communication ModelsRamaduguKiranbabu100% (1)

- Lecture 1 2Document56 pagesLecture 1 2rakhiNo ratings yet

- Wadhah CV PDFDocument5 pagesWadhah CV PDFWadhah IsmaeelNo ratings yet

- EENG 457 Experiment 4Document3 pagesEENG 457 Experiment 4Wadhah IsmaeelNo ratings yet

- Ctia Test Plan For Mobile Station Over The Air Performance Revision 3 1 PDFDocument410 pagesCtia Test Plan For Mobile Station Over The Air Performance Revision 3 1 PDFAnte VučkoNo ratings yet

- 2011 NDR Bomb Jammer Presentation-1Document36 pages2011 NDR Bomb Jammer Presentation-1hardedcNo ratings yet

- MRD 3187b Manual 7632dDocument231 pagesMRD 3187b Manual 7632dalbertoJunior2011No ratings yet

- Ground To Air Catalogue 2017Document88 pagesGround To Air Catalogue 2017Constructel GmbHNo ratings yet

- 2G And 3G KPI OptimizationDocument7 pages2G And 3G KPI OptimizationLily PuttNo ratings yet

- DWR-116 Modem Card Supporting List - 20161208 (0124120557)Document12 pagesDWR-116 Modem Card Supporting List - 20161208 (0124120557)blbutikadmin 7No ratings yet

- AnglemodulationDocument49 pagesAnglemodulationSatyendra VishwakarmaNo ratings yet

- Atheros AR8327AR8327N Seven-Port Gigabit Ethernet Switch PDFDocument394 pagesAtheros AR8327AR8327N Seven-Port Gigabit Ethernet Switch PDFJuan Carlos SrafanNo ratings yet

- Ed1 - 1830 - PSS - CDC - F - and - C - F - Building - Blocks - Part 3Document32 pagesEd1 - 1830 - PSS - CDC - F - and - C - F - Building - Blocks - Part 3Anil Gupta100% (1)

- ZTE RRU Case CadingDocument5 pagesZTE RRU Case Cadingtonygrt12100% (1)

- 3transmission ImpairmentsDocument26 pages3transmission Impairmentsgokul adethyaNo ratings yet

- Datasheet PDFDocument26 pagesDatasheet PDFSamy GuitarsNo ratings yet

- Wireless Communication TechnologyDocument31 pagesWireless Communication TechnologyMaria Cristina LlegadoNo ratings yet

- Multiplexing and Multiple Access Techniques Multiplexing and Multiple Access TechniquesDocument89 pagesMultiplexing and Multiple Access Techniques Multiplexing and Multiple Access TechniquesSantosh Thapa0% (1)

- APT-AWG-REP-15Rev.4 APT Report On Mobile Band UsageDocument30 pagesAPT-AWG-REP-15Rev.4 APT Report On Mobile Band Usagemohd arsyeedNo ratings yet

- PREDICTIVE DECONVOLUTION FOR MULTIPLE REMOVALDocument19 pagesPREDICTIVE DECONVOLUTION FOR MULTIPLE REMOVALSumanyu DixitNo ratings yet

- Chapter 7: Networking Concepts: IT Essentials v6.0Document7 pagesChapter 7: Networking Concepts: IT Essentials v6.0Chan ChanNo ratings yet

- 50 Ohm Wideband Detectors PDFDocument15 pages50 Ohm Wideband Detectors PDFΑΝΔΡΕΑΣ ΤΣΑΓΚΟΣNo ratings yet

- RF Electronic Circuits For The Hobbyist - CompleteDocument8 pagesRF Electronic Circuits For The Hobbyist - CompleteQuoc Khanh PhamNo ratings yet

- TX-SAW / 433 S-Z Transmitter DatasheetDocument5 pagesTX-SAW / 433 S-Z Transmitter DatasheetAbbass88No ratings yet

- Technical Standard and Specifications: Broadcast & OperationsDocument21 pagesTechnical Standard and Specifications: Broadcast & OperationsAbhishek S AatreyaNo ratings yet

- Instruction Manual JHS770S PDFDocument158 pagesInstruction Manual JHS770S PDFvu minh tienNo ratings yet

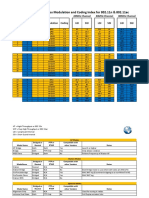

- Mikrotik Wireless Modulation and Coding Index For 802.11N & 802.11acDocument2 pagesMikrotik Wireless Modulation and Coding Index For 802.11N & 802.11acCesar Del Castillo100% (1)

- Massive MIMO (FDD) (eRAN15.1 - 04)Document104 pagesMassive MIMO (FDD) (eRAN15.1 - 04)Umar MiskiNo ratings yet

- Syllabus Content:: 1.2 Images, Sound and CompressionDocument17 pagesSyllabus Content:: 1.2 Images, Sound and Compressionjoseph mawutsaNo ratings yet

- Balanced Rectifier Using Antiparallel-Diode Configuration: Muh-Dey Wei and Renato NegraDocument4 pagesBalanced Rectifier Using Antiparallel-Diode Configuration: Muh-Dey Wei and Renato NegraAziztaPutriNo ratings yet

- Numerical QuestionDocument17 pagesNumerical QuestionJasman SinghNo ratings yet

- MTK User Manual A4 V1.0Document27 pagesMTK User Manual A4 V1.0Valter FogaçaNo ratings yet

- MCQ ElectronDocument10 pagesMCQ ElectronDeepak SharmaNo ratings yet

- AWS Certified Cloud Practitioner Study Guide: CLF-C01 ExamFrom EverandAWS Certified Cloud Practitioner Study Guide: CLF-C01 ExamRating: 5 out of 5 stars5/5 (1)

- ITIL 4: Digital and IT strategy: Reference and study guideFrom EverandITIL 4: Digital and IT strategy: Reference and study guideRating: 5 out of 5 stars5/5 (1)

- Microsoft Azure Infrastructure Services for Architects: Designing Cloud SolutionsFrom EverandMicrosoft Azure Infrastructure Services for Architects: Designing Cloud SolutionsNo ratings yet

- Computer Systems and Networking Guide: A Complete Guide to the Basic Concepts in Computer Systems, Networking, IP Subnetting and Network SecurityFrom EverandComputer Systems and Networking Guide: A Complete Guide to the Basic Concepts in Computer Systems, Networking, IP Subnetting and Network SecurityRating: 4.5 out of 5 stars4.5/5 (13)

- Evaluation of Some Websites that Offer Virtual Phone Numbers for SMS Reception and Websites to Obtain Virtual Debit/Credit Cards for Online Accounts VerificationsFrom EverandEvaluation of Some Websites that Offer Virtual Phone Numbers for SMS Reception and Websites to Obtain Virtual Debit/Credit Cards for Online Accounts VerificationsRating: 5 out of 5 stars5/5 (1)

- Computer Networking: The Complete Beginner's Guide to Learning the Basics of Network Security, Computer Architecture, Wireless Technology and Communications Systems (Including Cisco, CCENT, and CCNA)From EverandComputer Networking: The Complete Beginner's Guide to Learning the Basics of Network Security, Computer Architecture, Wireless Technology and Communications Systems (Including Cisco, CCENT, and CCNA)Rating: 4 out of 5 stars4/5 (4)

- Set Up Your Own IPsec VPN, OpenVPN and WireGuard Server: Build Your Own VPNFrom EverandSet Up Your Own IPsec VPN, OpenVPN and WireGuard Server: Build Your Own VPNRating: 5 out of 5 stars5/5 (1)

- The CompTIA Network+ Computing Technology Industry Association Certification N10-008 Study Guide: Hi-Tech Edition: Proven Methods to Pass the Exam with Confidence - Practice Test with AnswersFrom EverandThe CompTIA Network+ Computing Technology Industry Association Certification N10-008 Study Guide: Hi-Tech Edition: Proven Methods to Pass the Exam with Confidence - Practice Test with AnswersNo ratings yet

- AWS Certified Solutions Architect Study Guide: Associate SAA-C02 ExamFrom EverandAWS Certified Solutions Architect Study Guide: Associate SAA-C02 ExamNo ratings yet

- Software-Defined Networks: A Systems ApproachFrom EverandSoftware-Defined Networks: A Systems ApproachRating: 5 out of 5 stars5/5 (1)

- CCNA: 3 in 1- Beginner's Guide+ Tips on Taking the Exam+ Simple and Effective Strategies to Learn About CCNA (Cisco Certified Network Associate) Routing And Switching CertificationFrom EverandCCNA: 3 in 1- Beginner's Guide+ Tips on Taking the Exam+ Simple and Effective Strategies to Learn About CCNA (Cisco Certified Network Associate) Routing And Switching CertificationNo ratings yet

- FTTx Networks: Technology Implementation and OperationFrom EverandFTTx Networks: Technology Implementation and OperationRating: 5 out of 5 stars5/5 (1)

- The Compete Ccna 200-301 Study Guide: Network Engineering EditionFrom EverandThe Compete Ccna 200-301 Study Guide: Network Engineering EditionRating: 5 out of 5 stars5/5 (4)

- Concise Guide to OTN optical transport networksFrom EverandConcise Guide to OTN optical transport networksRating: 4 out of 5 stars4/5 (2)

- The Ultimate Kali Linux Book - Second Edition: Perform advanced penetration testing using Nmap, Metasploit, Aircrack-ng, and EmpireFrom EverandThe Ultimate Kali Linux Book - Second Edition: Perform advanced penetration testing using Nmap, Metasploit, Aircrack-ng, and EmpireNo ratings yet

- Advanced Antenna Systems for 5G Network Deployments: Bridging the Gap Between Theory and PracticeFrom EverandAdvanced Antenna Systems for 5G Network Deployments: Bridging the Gap Between Theory and PracticeRating: 5 out of 5 stars5/5 (1)

- Hacking Network Protocols: Complete Guide about Hacking, Scripting and Security of Computer Systems and Networks.From EverandHacking Network Protocols: Complete Guide about Hacking, Scripting and Security of Computer Systems and Networks.Rating: 5 out of 5 stars5/5 (2)

- ITIL® 4 Create, Deliver and Support (CDS): Your companion to the ITIL 4 Managing Professional CDS certificationFrom EverandITIL® 4 Create, Deliver and Support (CDS): Your companion to the ITIL 4 Managing Professional CDS certificationRating: 5 out of 5 stars5/5 (2)