18.085 :: Linear algebra cheat sheet :: Spring 2014

1. Rectangular m-by-n matrices

• Rule for transposes:

$(AB)^T = B^T A^T$

• The matrix $A^T A$ is always square, symmetric, and positive semi-definite. If in addition A has linearly independent columns, then $A^T A$ is positive definite.

• Let C be a diagonal matrix with positive elements. Then $A^T C A$ is always square, symmetric, and positive semi-definite. If in addition A has linearly independent columns, then $A^T C A$ is positive definite.
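The facts in this section can be checked on a small example. The sketch below is only illustrative: the matrices A and B and the helper names transpose, matmul, and quad_form are assumptions made for the example. It uses plain Python lists and exact integer arithmetic, and relies on the identity $x^T (A^T A) x = \|Ax\|^2$, which is why $A^T A$ can never have a negative quadratic form.

```python
def transpose(M):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)]
            for row in X]

def quad_form(M, x):
    """Return the scalar x^T M x."""
    Mx = [sum(m * xi for m, xi in zip(row, x)) for row in M]
    return sum(xi * yi for xi, yi in zip(x, Mx))

# Hypothetical 3-by-2 matrix with linearly independent columns.
A = [[1, 2],
     [0, 1],
     [1, 0]]
# Hypothetical 2-by-3 matrix, so that the product AB is defined.
B = [[1, 0, 2],
     [3, 1, 0]]

# Rule for transposes: (AB)^T = B^T A^T.
assert transpose(matmul(A, B)) == matmul(transpose(B), transpose(A))

# G = A^T A is square (2-by-2 here) and symmetric.
G = matmul(transpose(A), A)
assert G == transpose(G)

# x^T G x equals |Ax|^2, and is strictly positive for these nonzero x
# because the columns of A are linearly independent.
for x in ([1, 0], [0, 1], [1, -1], [-2, 3]):
    Ax = [sum(a * xi for a, xi in zip(row, x)) for row in A]
    assert quad_form(G, x) == sum(v * v for v in Ax)
    assert quad_form(G, x) > 0
```

Testing a handful of vectors does not prove positive definiteness; it only illustrates the identity behind the claim.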

2. Square n-by-n matrices

• Eigenvalues and eigenvectors are defined only for square matrices:

Av = λv

The eigenvalues may be complex. There may be fewer than n linearly independent eigenvectors (in that case we say the matrix is defective).

• In matrix form, we can always write

AS = SΛ,

where S has the eigenvectors in the columns (S could be a rectangular matrix), and Λ is diagonal

with the eigenvalues on the diagonal.

• If A has n eigenvectors (a full set), then S is square and invertible, and

$A = S \Lambda S^{-1}$.

• If eigenvalues are counted with their multiplicity, then

$\operatorname{tr}(A) = A_{11} + \dots + A_{nn} = \lambda_1 + \dots + \lambda_n$.

• If eigenvalues are counted with their multiplicity, then

$\det(A) = \lambda_1 \times \dots \times \lambda_n$.

• Addition of a multiple of the identity shifts the eigenvalues:

$\lambda_j(A + cI) = \lambda_j(A) + c$.

No such rule exists in general for the eigenvalues of a sum A + B.

• Gaussian elimination (when no row exchanges are needed) gives

A = LU.

L is lower triangular with ones on the diagonal, and with the elimination multipliers below the

diagonal. U is upper triangular with the pivots on the diagonal.

• A is invertible if any one of the following equivalent criteria is satisfied: there are n nonzero pivots; the determinant is not zero; the eigenvalues are all nonzero; the columns are linearly independent; the only way to have Au = 0 is if u = 0.

• Conversely, if any one of these criteria is violated, then the matrix is singular (not invertible).
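Several of the rules in this section can be checked together on one small matrix. The following is a minimal sketch under stated assumptions: the 2-by-2 matrix A, its eigenpairs, the shift c = 3, and the helper names matvec, matmul, and lu are all chosen for illustration, and the elimination routine performs no row exchanges. Exact fractions are used so that the comparisons are not affected by rounding.

```python
from fractions import Fraction

def matvec(M, v):
    """Return the matrix-vector product M v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)]
            for row in X]

def lu(A):
    """Factor A = L U by Gaussian elimination without row exchanges.

    Raises ValueError on a zero pivot (this sketch does not pivot).
    """
    n = len(A)
    U = [[Fraction(x) for x in row] for row in A]
    L = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    for k in range(n):
        if U[k][k] == 0:
            raise ValueError("zero pivot: a row exchange would be needed")
        for i in range(k + 1, n):
            m = U[i][k] / U[k][k]          # elimination multiplier
            L[i][k] = m                    # stored below the diagonal of L
            for j in range(k, n):
                U[i][j] -= m * U[k][j]
    return L, U

# Hypothetical example matrix and its eigenpairs (lambda, v).
A = [[4, 1],
     [2, 3]]
eigenpairs = [(5, [1, 1]), (2, [1, -2])]

# Each pair satisfies A v = lambda v.
for lam, v in eigenpairs:
    assert matvec(A, v) == [lam * x for x in v]

# Trace is the sum of the eigenvalues, determinant is their product.
assert A[0][0] + A[1][1] == 5 + 2
assert A[0][0] * A[1][1] - A[0][1] * A[1][0] == 5 * 2

# Adding c I shifts every eigenvalue by c and keeps the eigenvectors.
c = 3
A_shift = [[A[i][j] + (c if i == j else 0) for j in range(2)] for i in range(2)]
for lam, v in eigenpairs:
    assert matvec(A_shift, v) == [(lam + c) * x for x in v]

# Elimination: A = L U, with the pivots on the diagonal of U.
L, U = lu(A)
assert matmul(L, U) == A
pivots = [U[k][k] for k in range(2)]
assert all(p != 0 for p in pivots)   # n nonzero pivots, so A is invertible
```

Here the pivots are 4 and 5/2; both are nonzero, which agrees with the nonzero determinant and the nonzero eigenvalues.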

3. Invertible matrices

• The system Au = f has a unique solution $u = A^{-1} f$.

• $(AB)^{-1} = B^{-1} A^{-1}$.

• $(A^T)^{-1} = (A^{-1})^T$.

• If $A = S \Lambda S^{-1}$, then $A^{-1} = S \Lambda^{-1} S^{-1}$ (same eigenvectors, inverse eigenvalues).
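The four rules above can be verified exactly on 2-by-2 matrices. This is a sketch only: the matrices A and B, the right-hand side f, and the helper names inv2, matmul, matvec, and transpose are assumptions for the example, and inv2 uses the 2-by-2 adjugate formula rather than elimination.

```python
from fractions import Fraction

def inv2(M):
    """Inverse of a 2-by-2 matrix via the adjugate formula, in exact arithmetic."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det],
            [-c / det, a / det]]

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)]
            for row in X]

def matvec(M, v):
    """Return the matrix-vector product M v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def transpose(M):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]

# Hypothetical invertible matrices.
A = [[2, 1],
     [1, 2]]
B = [[1, 2],
     [0, 1]]

# Unique solution of A u = f.
f = [3, 3]
u = matvec(inv2(A), f)
assert matvec(A, u) == f

# (AB)^{-1} = B^{-1} A^{-1}: note the reversed order.
assert inv2(matmul(A, B)) == matmul(inv2(B), inv2(A))

# (B^T)^{-1} = (B^{-1})^T.
assert inv2(transpose(B)) == transpose(inv2(B))

# A has eigenpairs (3, [1, 1]) and (1, [1, -1]);
# A^{-1} keeps the eigenvectors and inverts the eigenvalues.
for lam, v in [(3, [1, 1]), (1, [1, -1])]:
    assert matvec(A, v) == [lam * x for x in v]
    assert matvec(inv2(A), v) == [Fraction(1, lam) * x for x in v]
```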

4. Symmetric matrices ($A^T = A$)

• Eigenvalues are real, eigenvectors are orthogonal, and there are always n eigenvectors (full set). (A caveat concerning eigenvectors: as computed, they may happen not to be orthogonal. This can only occur for a repeated eigenvalue, and in that case there exists another choice of eigenvectors that are orthogonal.)

• Can write

$A = Q \Lambda Q^T$,

where $Q^{-1} = Q^T$ (orthogonal matrix, with orthonormal columns).

• Can modify A = LU into $A = LDL^T$ with diagonal D, and the pivots on the diagonal of D.

• Can define positive definite matrices only in the symmetric case. A symmetric matrix is positive definite if any one of the following equivalent criteria holds: all the pivots are positive; all the eigenvalues are positive; all the upper-left determinants are positive; or $x^T A x > 0$ unless x = 0.

• Similar definition for positive semi-definite. The criteria are: all the pivots are nonnegative; all the eigenvalues are nonnegative; all the principal minors are nonnegative (checking only the upper-left determinants is not enough in the semi-definite case); or $x^T A x \ge 0$ for all x.

• If A is positive definite, we have the Cholesky decomposition

$A = R^T R$,

which corresponds to the choice $R = \sqrt{D}\, L^T$.
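The chain from elimination to $LDL^T$ to Cholesky can be sketched as follows. The example matrix K (a second-difference matrix) and the helper name ldlt are assumptions chosen for illustration; the routine does no row exchanges, which is safe for a positive definite matrix because all its pivots are positive. The pivots are computed exactly, and only the square root step uses floating point.

```python
import math
from fractions import Fraction

def ldlt(A):
    """Return (L, d) with A = L diag(d) L^T for a symmetric matrix A.

    Uses Gaussian elimination without row exchanges; d holds the pivots.
    Raises ValueError on a zero pivot.
    """
    n = len(A)
    U = [[Fraction(x) for x in row] for row in A]
    L = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    for k in range(n):
        if U[k][k] == 0:
            raise ValueError("zero pivot: a row exchange would be needed")
        for i in range(k + 1, n):
            m = U[i][k] / U[k][k]          # elimination multiplier
            L[i][k] = m
            for j in range(k, n):
                U[i][j] -= m * U[k][j]
    d = [U[k][k] for k in range(n)]        # pivots, the diagonal of D
    return L, d

# Hypothetical symmetric example matrix.
K = [[2, -1, 0],
     [-1, 2, -1],
     [0, -1, 2]]
n = len(K)

L, d = ldlt(K)

# All pivots positive, so K is positive definite.
assert all(p > 0 for p in d)

# K = L D L^T, checked exactly.
LDLt = [[sum(L[i][k] * d[k] * L[j][k] for k in range(n)) for j in range(n)]
        for i in range(n)]
assert LDLt == K

# Cholesky factor R = sqrt(D) L^T, upper triangular, in floating point.
R = [[math.sqrt(float(d[i])) * float(L[j][i]) for j in range(n)]
     for i in range(n)]

# K = R^T R up to rounding error.
RtR = [[sum(R[k][i] * R[k][j] for k in range(n)) for j in range(n)]
       for i in range(n)]
assert all(math.isclose(RtR[i][j], K[i][j], abs_tol=1e-12)
           for i in range(n) for j in range(n))
```

Note that some numerical libraries return the lower triangular factor $R^T$ instead of R; the two conventions describe the same factorization.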

5. Skew-symmetric matrices ($A^T = -A$)

• Eigenvalues are purely imaginary (or zero), eigenvectors are orthogonal, and there are always n eigenvectors (full set).
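The smallest nontrivial case illustrates the statement. In the sketch below, the 2-by-2 matrix A and its eigenpairs are assumptions chosen for the example. Because the eigenvectors are complex, orthogonality is taken with respect to the complex inner product, which conjugates the first vector.

```python
# Hypothetical skew-symmetric example: A^T = -A.
A = [[0, 1],
     [-1, 0]]
assert [list(col) for col in zip(*A)] == [[-a for a in row] for row in A]

# Candidate eigenpairs (lambda, v), with purely imaginary eigenvalues +i and -i.
pairs = [(1j, [1, 1j]), (-1j, [1, -1j])]

# Each pair satisfies A v = lambda v.
for lam, v in pairs:
    Av = [sum(a * x for a, x in zip(row, v)) for row in A]
    assert Av == [lam * x for x in v]
    assert lam.real == 0               # purely imaginary

# The two eigenvectors are orthogonal in the complex inner product.
v1, v2 = pairs[0][1], pairs[1][1]
inner = sum(x.conjugate() * y for x, y in zip(v1, v2))
assert inner == 0
```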

You might also like

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (589)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (842)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (897)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5806)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1091)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Bench WorkDocument103 pagesBench Workgetu abrahaNo ratings yet

- A. A. Allen The Price of Gods Miracle Working PowerDocument52 pagesA. A. Allen The Price of Gods Miracle Working Powerprijiv86% (7)

- Ancient Indian History - Notes PDFDocument38 pagesAncient Indian History - Notes PDFKaranbir Randhawa86% (22)

- The Better Momentum IndicatorDocument9 pagesThe Better Momentum IndicatorcoachbiznesuNo ratings yet

- PAMDocument14 pagesPAMRashed IslamNo ratings yet

- Iec 60812-2006Document11 pagesIec 60812-2006Refibrian Dwiki100% (1)

- Social StratificationDocument246 pagesSocial StratificationKaranbir RandhawaNo ratings yet

- Religion Unit M2: SecularisationDocument27 pagesReligion Unit M2: SecularisationKaranbir RandhawaNo ratings yet

- Dept:Of Co-Operative Management and Rural Studies, Faculty of CommerceDocument40 pagesDept:Of Co-Operative Management and Rural Studies, Faculty of CommerceKaranbir RandhawaNo ratings yet

- Geomeetry PDFDocument136 pagesGeomeetry PDFKaranbir RandhawaNo ratings yet

- Central Conicoids PDFDocument27 pagesCentral Conicoids PDFKaranbir RandhawaNo ratings yet

- ME101 Lecture02 KDDocument28 pagesME101 Lecture02 KDKaranbir RandhawaNo ratings yet

- Rectilinear Motion NotesDocument3 pagesRectilinear Motion NotesKaranbir Randhawa100% (1)

- Ch05 Rectilinear Motion PDFDocument24 pagesCh05 Rectilinear Motion PDFKaranbir RandhawaNo ratings yet

- DSPDocument2 pagesDSPKaranbir RandhawaNo ratings yet

- SEC - Connection Guidelines - v3 - CleanDocument54 pagesSEC - Connection Guidelines - v3 - CleanFurqan HamidNo ratings yet

- Temba KauDocument50 pagesTemba KaufauzanNo ratings yet

- ACP Supplement For SVR AUG 2017Document104 pagesACP Supplement For SVR AUG 2017Kasun WijerathnaNo ratings yet

- Meat PieDocument13 pagesMeat Piecrispitty100% (1)

- Case Report - Anemia Ec Cervical Cancer Stages 2bDocument71 pagesCase Report - Anemia Ec Cervical Cancer Stages 2bAnna ListianaNo ratings yet

- PT Activity 3.4.2: Troubleshooting A VLAN Implementation: Topology DiagramDocument3 pagesPT Activity 3.4.2: Troubleshooting A VLAN Implementation: Topology DiagramMatthew N Michelle BondocNo ratings yet

- Country Item Name Litre Quart Pint Nip 700 ML IndiaDocument17 pagesCountry Item Name Litre Quart Pint Nip 700 ML Indiajhol421No ratings yet

- S800 SCL SR - 2CCC413009B0201 PDFDocument16 pagesS800 SCL SR - 2CCC413009B0201 PDFBalan PalaniappanNo ratings yet

- Georgia Habitats Lesson PlansDocument5 pagesGeorgia Habitats Lesson PlansBecky BrownNo ratings yet

- Valores de Laboratorio Harriet LaneDocument14 pagesValores de Laboratorio Harriet LaneRonald MoralesNo ratings yet

- Dr. M. Syed Jamil Asghar: Paper Published: 80Document6 pagesDr. M. Syed Jamil Asghar: Paper Published: 80Awaiz NoorNo ratings yet

- Queueing TheoryDocument6 pagesQueueing TheoryElmer BabaloNo ratings yet

- 2023.01.25 Plan Pecatu Villa - FinishDocument3 pages2023.01.25 Plan Pecatu Villa - FinishTika AgungNo ratings yet

- Example Lab ReportDocument12 pagesExample Lab ReportHung Dang QuangNo ratings yet

- Hemiplegia Case 18.12.21Document5 pagesHemiplegia Case 18.12.21Beedhan KandelNo ratings yet

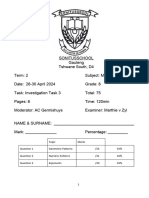

- Investigation Gr. 8Document6 pagesInvestigation Gr. 8Marthie van zylNo ratings yet

- Piping - Fitings HandbookDocument240 pagesPiping - Fitings HandbookzohirNo ratings yet

- Island Super Kinis Skim Coat White (RP)Document4 pagesIsland Super Kinis Skim Coat White (RP)coldfeetNo ratings yet

- Unit P1, P1.1: The Transfer of Energy by Heating ProcessesDocument9 pagesUnit P1, P1.1: The Transfer of Energy by Heating ProcessesTemilola OwolabiNo ratings yet

- A 388 - A 388M - 03 - Qtm4oc9bmzg4tqDocument8 pagesA 388 - A 388M - 03 - Qtm4oc9bmzg4tqRod RoperNo ratings yet

- Theoretical Foundations of Nursing - Review MaterialDocument10 pagesTheoretical Foundations of Nursing - Review MaterialKennethNo ratings yet

- Compliant Offshore StructureDocument50 pagesCompliant Offshore Structureapi-27176519100% (4)

- KIN LONG Shower Screen CatalogueDocument58 pagesKIN LONG Shower Screen CatalogueFaiz ZentNo ratings yet

- Cramers Rule 3 by 3 Notes PDFDocument4 pagesCramers Rule 3 by 3 Notes PDFIsabella LagboNo ratings yet

- International StandardDocument8 pagesInternational Standardnazrul islamNo ratings yet