TSNet: Deep Network for Human Action Recognition in Hazy Videos

Sachin Chaudhary and Subrahmanyam Murala

Computer Vision and Pattern Recognition Lab,

Indian Institute of Technology Ropar, INDIA

CNN

C

CNN

O

C2MSNet Rank Pooling N

Fully Connected Layer

CNN C

A

Dynamic Depth Image T

Softmax

CNN feature extraction E

Transmission Map

Hazy Input

N

Video A

CNN

T

I

CNN O

N

De-Hazing Rank Pooling CNN

Dynamic Appearance CNN feature extraction

Image

Hazy Free Video

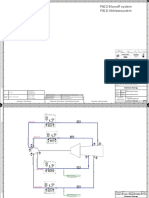

Fig. 1: The process of proposed approach for HAR in hazy videos.

Abstract:

Because of their poor visual quality, haze-degraded videos are difficult to analyze for human activities with existing state-of-the-art methods.

A new two-level saliency-based end-to-end network (TSNet) is proposed for human action recognition (HAR) in hazy videos.

The rank pooling concept of [2] is further used to efficiently represent the temporal saliency of the video.

The transmission map is used to determine the spatial saliency in each frame.

New hazy video datasets are generated from two benchmark datasets, HMDB51 and UCF101, by adding synthetic haze.
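The poster says only that the haze is synthetic and added with an optical model. As a hedged illustration, the sketch below assumes the widely used atmospheric scattering model, I(x) = J(x) t(x) + A (1 - t(x)) with t(x) = exp(-beta d(x)), where J is the clear frame, d a per-pixel depth map, beta a scattering coefficient and A the global atmospheric light. The function name and the default values of beta and A are hypothetical; they are not the settings used to build Hazy-HMDB51 or Hazy-UCF101.

```python
import numpy as np


def add_synthetic_haze(clear, depth, beta=1.0, airlight=0.8):
    """Hypothetical sketch: haze one frame with the atmospheric scattering model.

    clear:    H x W x 3 float array in [0, 1] (the haze-free frame J)
    depth:    H x W float array of non-negative scene depth d
    beta:     scattering coefficient (assumed value)
    airlight: global atmospheric light A (assumed value)
    Returns the hazy frame I and the transmission map t.
    """
    t = np.exp(-beta * depth)                    # t(x) = exp(-beta * d(x))
    t3 = t[..., np.newaxis]                      # broadcast t over colour channels
    hazy = clear * t3 + airlight * (1.0 - t3)    # I = J t + A (1 - t)
    return np.clip(hazy, 0.0, 1.0), t


# Usage: distant pixels (large depth) are pulled towards the airlight value.
rng = np.random.default_rng(0)
frame = rng.random((4, 4, 3))
depth = np.linspace(0.0, 3.0, 16).reshape(4, 4)
hazy, t = add_synthetic_haze(frame, depth, beta=1.0, airlight=0.8)
```

Applying the same depth map and parameters to every frame of a clip would keep the haze temporally consistent; how the poster's authors obtained depth for each video is not stated.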

Outline of Proposed TSNet:

Spatial Saliency Detection:

Generally, the actor in a video is in the foreground and hence has a different depth from the background objects.

Using the transmission map, the actor can be segregated from the background.

Haze is a clue for estimating the scene transmission map.

C2MSNet [1] is used to estimate the scene transmission map.

The transmission map is used to define the spatial saliency.

Fig 1. Overview of the problem of HAR in hazy videos. (A) Sample optical flow (OF), dynamic image (DI) and dynamic optical flow (DOF) estimation from a haze-free video: (A)(a) DI of the normal RGB frame; (A)(b) DOF of the normal RGB frame. (B) Sample OF, DI and DOF estimation from a hazy video: (B)(a) DI of the hazy frame; (B)(b) DOF of the hazy frame. (C) The proposed method of estimating spatial and temporal saliency in hazy video: (C)(a) DI of the de-hazed frame; (C)(b) DI of the TrMap. The figure shows a clearly visible difference in the OF, DI and DOF of the normal, hazy and de-hazed frames.
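The link between transmission and depth used in the spatial saliency step can be made concrete. Assuming the atmospheric scattering model, t = exp(-beta d), so a relative depth map is d = -ln(t) / beta, and near (foreground) pixels have high transmission. The poster does not say how the saliency is derived from the transmission map, so the sketch below is a hypothetical illustration: it thresholds a transmission map, such as the one C2MSNet [1] estimates, at a chosen quantile. The function names, beta and the quantile are assumptions.

```python
import numpy as np


def depth_from_transmission(t, beta=1.0, eps=1e-6):
    """Invert t = exp(-beta * d) to a relative depth map (beta is assumed)."""
    return -np.log(np.clip(t, eps, 1.0)) / beta   # clip avoids log(0)


def spatial_saliency_mask(t, quantile=0.7):
    """Hypothetical rule: mark the nearest pixels (highest transmission) as foreground.

    `quantile` is an illustrative parameter, not a value taken from the poster.
    """
    threshold = np.quantile(t, quantile)
    return t >= threshold


# Usage on a toy 2 x 2 transmission map: the two high-transmission pixels are kept.
trmap = np.array([[0.9, 0.2], [0.3, 0.95]])
mask = spatial_saliency_mask(trmap, quantile=0.5)
```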

Temporal Saliency Detection:

The dynamic image concept of [2] is used to estimate the temporal saliency.

A dynamic image summarizes the appearance and dynamics of the whole video in a single image.

Because haze degrades the dynamic image (see Fig. 1), the appearance dynamic image is computed from the haze-free (de-hazed) video rather than from the hazy video.

The concept of a depth dynamic image is also proposed.

The transmission map obtained during image de-hazing is used to compute an effective depth dynamic image.
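Dynamic images in [2] can be computed with approximate rank pooling, which collapses a clip of T frames into a single image as a weighted sum with weights alpha_t = 2(T - t + 1) - (T + 1)(H_T - H_{t-1}), where H_t is the harmonic number 1 + 1/2 + ... + 1/t and H_0 = 0. The NumPy sketch below applies these weights to a frame stack; the same function could be run on de-hazed RGB frames for the appearance dynamic image or on per-frame transmission maps for the depth dynamic image. It is a minimal illustration with hypothetical function names, not the authors' implementation.

```python
import numpy as np


def approx_rank_pooling_coeffs(T):
    """Approximate rank pooling weights alpha_1..alpha_T.

    alpha_t = 2(T - t + 1) - (T + 1)(H_T - H_{t-1}), H_t = sum_{i<=t} 1/i.
    """
    # harmonic[k] holds H_k for k = 0..T (H_0 = 0)
    harmonic = np.concatenate(([0.0], np.cumsum(1.0 / np.arange(1, T + 1))))
    t = np.arange(1, T + 1)
    return 2.0 * (T - t + 1) - (T + 1) * (harmonic[T] - harmonic[t - 1])


def dynamic_image(frames):
    """Collapse a (T, H, W) or (T, H, W, C) stack into one dynamic image."""
    frames = np.asarray(frames, dtype=np.float64)
    alpha = approx_rank_pooling_coeffs(frames.shape[0])
    # Weighted sum over the time axis: sum_t alpha_t * frames[t]
    return np.tensordot(alpha, frames, axes=1)


# Usage: appearance DI from de-hazed RGB frames, depth DI from transmission maps.
rng = np.random.default_rng(0)
dehazed_clip = rng.random((16, 8, 8, 3))   # stand-in for 16 de-hazed frames
trmap_clip = rng.random((16, 8, 8))        # stand-in for 16 transmission maps
appearance_di = dynamic_image(dehazed_clip)
depth_di = dynamic_image(trmap_clip)
```

The weights sum to zero, so a static clip yields an all-zero dynamic image and only temporal change survives, which is why the result acts as a temporal saliency summary.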

Learning Human Actions:

Fig. 1 depicts the proposed human action recognition procedure.

Appearance-based features:

• For appearance-based features, the de-hazed video frames are used.

• VGG-19 is used to extract appearance-based features.

Depth-based features:

• For depth-based features, a network similar to the one used for appearance-based features is employed; its input is the depth dynamic image.
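The pipeline figure shows the appearance and depth CNN features being concatenated and passed through a fully connected layer and a softmax. The sketch below illustrates only that fusion head in NumPy, assuming the feature vectors have already been extracted (for example 4096-dimensional vectors, the size of VGG-19's fully connected layers). The function names, feature sizes and random weights are hypothetical, and training is not shown.

```python
import numpy as np


def softmax(z):
    z = z - z.max()              # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


def classify(appearance_feat, depth_feat, weights, bias):
    """Hypothetical fusion head: concatenate both feature vectors,
    apply one fully connected layer, then a softmax over action classes."""
    fused = np.concatenate([appearance_feat, depth_feat])   # shape (Da + Dd,)
    logits = weights @ fused + bias                          # shape (num_classes,)
    return softmax(logits)


# Usage with random stand-ins for the two CNN feature vectors.
rng = np.random.default_rng(0)
appearance = rng.standard_normal(4096)       # features of the appearance DI
depth = rng.standard_normal(4096)            # features of the depth DI
W = 0.01 * rng.standard_normal((51, 8192))   # 51 classes, e.g. HMDB51
probs = classify(appearance, depth, W, np.zeros(51))
predicted_class = int(np.argmax(probs))
```

Concatenating before the fully connected layer lets that layer weigh appearance and depth cues jointly, rather than averaging two separate class scores.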

Fig 1. Qualitative analysis of hazy vs. haze-free video frames. The OF is calculated between the frame shown here and the previous frame of that video.

Experimental Results and Analysis:

Datasets:

UCF101: 101 action classes, approximately 13,000 videos.

HMDB51: 51 action classes, 6,766 videos.

Synthetic haze is added using an optical haze model.

Table I: Decrement in the average recognition rate (ARR, %) of existing methods when applied to the hazy datasets. DE: decrement, HF: haze-free.

| Methods | HMDB51 HF | HMDB51 Hazy | HMDB51 DE | UCF101 HF | UCF101 Hazy | UCF101 DE |
|---|---|---|---|---|---|---|
| DI [2] | 57.3 | 50.2 | 7.1 | 86.6 | 82.1 | 4.5 |
| SI + DI + OF + DOF [2] | 71.5 | 65.2 | 6.3 | 95.0 | 88.7 | 6.3 |
| TS [4] | 59.4 | 55.1 | 4.3 | 88.0 | 84.6 | 3.4 |
| TSF [3] | 65.4 | 60.2 | 5.2 | 92.5 | 86.3 | 6.2 |
| STResNet + IDT [5] | 70.3 | 66.2 | 4.1 | 94.6 | 89.3 | 5.3 |

Table II: Comparison of ARR (%) of the proposed method with some of the existing methods.

| Methods | Hazy-HMDB51 | Hazy-UCF101 |
|---|---|---|
| Hazy frame | 50.3 | 82.1 |
| Hazy frame + OF | 61.2 | 86.4 |
| Hazy frame + TrMap | 68.7 | 96.1 |

Table III: Comparison of the average recognition rate (%) of the proposed method with some of the existing methods.

| Methods | Hazy-HMDB51 | Hazy-UCF101 |
|---|---|---|
| DI [2] | 50.2 | 82.1 |
| SI + DI + OF + DOF [2] | 65.2 | 88.7 |
| TS [4] | 55.1 | 84.6 |
| TSF [3] | 60.2 | 86.3 |
| STResNet + IDT [5] | 66.2 | 89.3 |
| Proposed Method | 68.7 | 96.1 |

References:

[1] A. Dudhane and S. Murala, "C2MSNet: A Novel Approach for Single Image Haze Removal," in IEEE Winter Conference on Applications of Computer Vision (WACV), 2018, pp. 1397–1404.

[2] H. Bilen, B. Fernando, E. Gavves, and A. Vedaldi, "Action Recognition with Dynamic Image Networks," IEEE Trans. Pattern Anal. Mach. Intell., vol. PP, no. 99, pp. 1–1, 2017.

[3] C. Feichtenhofer, A. Pinz, and A. Zisserman, "Convolutional Two-Stream Network Fusion for Video Action Recognition," in IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

[4] K. Simonyan and A. Zisserman, "Two-Stream Convolutional Networks for Action Recognition in Videos," in Advances in Neural Information Processing Systems, 2014, pp. 568–576.

[5] C. Feichtenhofer, A. Pinz, and R. P. Wildes, "Spatiotemporal Residual Networks for Video Action Recognition," in Advances in Neural Information Processing Systems, 2016, pp. 3468–3476.
