6 - Evaluation and User Experience

Advanced Human Computer Interaction 12/1/2019

Advanced Human Computer Interaction

Riphah Institute for Computing and Applied Sciences

Dr. Ayesha Kashif

Evaluation and User Experience

Dr. Ayesha Kashif – Riphah Int Univ 1


Why Evaluation

▪ The design process supports the design of usable interactive systems

▪ We still need to assess our designs and test our systems to

ensure that they actually behave as we expect and meet user

requirements

▪ Ideally, evaluation should occur throughout the design life

cycle, with the results of the evaluation feeding back into

modifications to the design

Evaluation Techniques

▪ Evaluation

▪ tests usability and functionality of system

▪ evaluates both design and implementation

▪ should be considered at all stages in the design life cycle

▪ Expert analysis: evaluation by the designer or a usability expert, without direct involvement by users; useful for assessing early designs and prototypes

▪ User participation: occurs in laboratory, field and/or in collaboration with users; requires a working prototype or implementation


▪ Expert Reviews and Heuristics

▪ Usability Testing and Laboratories

▪ Survey Instruments

▪ Acceptance Tests

▪ Evaluation during Active Use and Beyond

▪ Controlled Psychologically Oriented Experiments

▪ Expert Reviews and Heuristics

▪ Heuristic evaluation

▪ Guidelines review

▪ Consistency inspection

▪ Cognitive walkthrough

▪ Formal usability inspection


Expert Reviews and Heuristics

▪ Heuristic evaluation

▪ Today, many different types of devices may be subject to a heuristic evaluation, so it is important that the heuristics match the application.

▪ The expert reviewers critique an interface to determine conformance with a short list of design heuristics, such as the Eight Golden Rules.

▪ Specialized heuristics exist, such as for mobile app design (Joyce et al., 2014) and interactive systems (Masip et al., 2011), as well as heuristics developed specifically for video games.

Expert Reviews and Heuristics

▪ Guidelines review

▪ The interface is checked for conformance with the

organizational or other guidelines document

▪ Because guidelines documents may contain a thousand

items or more, it may take the expert reviewers some time

to absorb them and days or weeks to review a large

interface.


Expert Reviews and Heuristics

▪ Guidelines review

▪ Interface Navigation

▪ Getting the user's attention


Expert Reviews and Heuristics

▪ Consistency inspection

▪ The experts verify consistency across a family of interfaces,

checking the terminology, fonts, color schemes, layout, input

and output formats, and so on, within the interfaces as well

as any supporting materials.

▪ Software tools can help automate the process as well as

produce concordances of words and abbreviations.

▪ Large-scale interfaces are often developed by several groups of designers; a consistency inspection can help smooth over the interface and provide a common and consistent look and feel.
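
The concordance idea mentioned above can be sketched in a few lines. This is only an illustration, not a description of any particular inspection tool: the screen names and interface strings are invented, and flagging every capitalization difference is a deliberately crude check that a reviewer would still need to triage.

```python
import re
from collections import defaultdict


def build_concordance(screens):
    """Map each lower-cased word to the spellings used and the screens using them."""
    concordance = defaultdict(lambda: defaultdict(set))
    for screen_name, text in screens.items():
        for word in re.findall(r"[A-Za-z][A-Za-z\-]*", text):
            concordance[word.lower()][word].add(screen_name)
    return concordance


def inconsistent_terms(concordance):
    """Return words that appear with more than one spelling or capitalization."""
    return {
        key: sorted(variants)
        for key, variants in concordance.items()
        if len(variants) > 1
    }


# Hypothetical interface strings, invented for illustration.
screens = {
    "login": "Sign in to continue. Forgot Password?",
    "settings": "Change password or sign out.",
    "checkout": "Sign In to complete your order.",
}

concordance = build_concordance(screens)
for term, variants in inconsistent_terms(concordance).items():
    print(term, variants)
```

Each flagged term lists the variants found, so an inspector can decide whether the difference is a real inconsistency or just sentence-initial capitalization.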

Expert Reviews and Heuristics

▪ Cognitive walkthrough

▪ The experts simulate users walking through the interface to

carry out typical tasks.

▪ High-frequency tasks are a starting point, but rare critical

tasks, such as error recovery, also should be walked

through.

▪ An expert might try the walkthrough privately and explore

the system, but there also should be a group meeting with

designers, users, or managers to conduct a walkthrough and

provoke discussion (Babaian et al., 2012).


Expert Reviews and Heuristics

▪ Cognitive walkthrough

▪ Identify the action sequence for this task. We specify this in

terms of the user’s action (UA) and the system’s display or

response (SD).

Expert Reviews and Heuristics

▪ Cognitive walkthrough

▪ Having determined our action list we are in a position to proceed with

the walkthrough. For each action (1–10) we must answer the four

questions and tell a story about the usability of the system. Beginning

with UA 1:


Expert Reviews and Heuristics

▪ Formal usability inspection

▪ The experts hold a courtroom-style meeting, with a

moderator or judge, to present the interface and to discuss

its merits and weaknesses.

▪ Design-team members may rebut the evidence about

problems in an adversarial format.

▪ Formal usability inspections can be educational experiences

for novice designers and managers, but they may take

longer to prepare and need more personnel to carry out

than do other types of review.


▪ Usability Testing and Laboratories

▪ Usability labs

▪ Ethics in research practices with human participants

▪ Think-aloud and related techniques

▪ The spectrum of usability testing

▪ Paper mockups and prototyping

▪ Discount usability testing

▪ Competitive usability testing

▪ A/B testing

▪ Universal usability testing

▪ Field tests and portable labs

▪ Remote usability testing

▪ Can-you-break-this tests

▪ Usability test reports

Usability Testing and Laboratories

▪ Usability labs

▪ A typical modest usability laboratory would have two areas, divided by a half-silvered mirror: one for the participants to do their work and the other for the testers and observers (designers, managers, and customers).

▪ The laboratory staff meet with the user experience architect or

manager at the start of the project to make a test plan with

scheduled dates and budget allocations.

▪ Usability laboratory staff members participate in early task analysis or design reviews, provide information on software tools or literature references, and help to develop the set of tasks for the usability test.


Usability Testing and Laboratories

▪ Two to six weeks before the usability test, the detailed test plan is

developed; it contains

▪ the list of tasks plus

▪ subjective satisfaction and

▪ debriefing questions (interview the person about an experience)

▪ The number, types, and sources of participants are also

identified –

▪ sources might be customer sites, temporary personnel agencies,

or advertisements placed in newspapers.

▪ A pilot test of the procedures, tasks, and questionnaires with one

to three participants is conducted approximately one week before

the test, while there is still time for changes.

▪ This typical preparation process can be modified in many ways to

suit each project's unique needs

Usability Testing and Laboratories

▪ Ethics in research practices with human

participants

▪ Participants should always be treated with

respect and should be informed that it is not

they who are being tested; rather, it is the

software and user interface that are under

study.

▪ They should be told about what they will be doing,

▪ for example, finding products on a website,

▪ creating a diagram using a mouse, or

▪ studying a restaurant guide on a touchscreen and

▪ how long they will be expected to stay.


Usability Testing and Laboratories

▪ Think-aloud and related techniques

▪ Think-aloud protocols yield interesting clues for observant

usability testers; for example, they may hear comments such as

"This webpage text is too small ... so I'm looking for something on

the menus to make the text bigger ... maybe it's on the top in the

icons ... I can't find it ... so I'll just carry on."

▪ Another related technique is called retrospective think-aloud.

With this technique, after completing a task, users are asked

what they were thinking as they performed the task.

▪ The drawback is that the users may not be able to wholly and

accurately recall their thoughts after completing the task;

▪ however, this approach allows users to focus all their attention on

the tasks they are performing and generates more accurate

timings.

Usability Testing and Laboratories

▪ The spectrum of usability testing

▪ Paper mockups and prototyping

▪ Early usability studies can be conducted using paper

mockups of pages to assess user reactions to wording,

layout, and sequencing.

▪ A test administrator plays the role of the computer by

flipping the pages while asking a participant user to carry

out typical tasks. This informal testing is inexpensive, rapid,

and usually productive.

▪ Typically designers create low-fidelity paper prototypes of the

design,

▪ but today there are computer programs (e.g., Microsoft Visio,

SmartDraw, Gliffy, Balsamiq, MockingBird) that can allow

designers to create more detailed high-fidelity prototypes with

minimal effort.


Usability Testing and Laboratories

▪ The spectrum of usability testing

▪ Discount usability testing

▪ Discount usability testing can serve as a formative evaluation (while designs are changing substantially), with more extensive usability testing as a summative evaluation (near the end of the design process).

▪ The formative evaluation identifies problems that guide redesign,

▪ while the summative evaluation provides evidence for product

announcements ("94% of our 120 testers completed their

shopping tasks without assistance")

▪ and clarifies training needs ("with four minutes of instruction,

every participant successfully programmed the device").

▪ Small numbers may be valid for some projects, but when dealing

with web companies with a large public web-facing presence,

experiments may need to be run at large scale with thousands of

users

Usability Testing and Laboratories

▪ The spectrum of usability testing

▪ A/B testing

▪ This method tests different designs of an interface.

▪ Typically, it is done with just two groups of users to observe and

record differences between the designs. Sometimes referred to as

bucket testing, it is similar to a between subjects design

▪ This method of testing is often used with large-scale online

controlled experiments (Kohavi and Longbotham, 2015).

▪ A/B testing involves randomly assigning two groups of users to

either the control group (no change) or the treatment group

(with the change) and then having some dependent measure that

can be tested to see if there is a difference between the groups

▪ This testing method has been used at Bing, where more than 200

experiments are run concurrently with 100 million customers

spanning billions of changes.

▪ Some of the items tested may be new ideas and others are

modifications of existing items (Kohavi et al., 2013).
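
The control/treatment comparison described above can be sketched as follows. Several choices here are assumptions rather than anything the slides prescribe: users are bucketed by hashing a hypothetical user ID (which behaves like a random split but keeps a user in the same group on every visit), the dependent measure is a completion rate, and the groups are compared with a two-proportion z-test. The counts are invented.

```python
import hashlib
import math
from statistics import NormalDist


def assign_group(user_id: str, experiment: str = "font-size-test") -> str:
    """Bucket a user into control or treatment from a hash of their ID.

    Hash-based bucketing is an assumption of this sketch, standing in for
    random assignment; the experiment name is hypothetical.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "treatment" if int(digest, 16) % 2 else "control"


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two completion rates."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: completed tasks out of users exposed to each design.
z, p_value = two_proportion_z_test(200, 1000, 250, 1000)
print(f"group for user-42: {assign_group('user-42')}")
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference between the groups is unlikely under the assumption of no real effect; whether the difference matters in practice is a separate design judgment.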


Usability Testing and Laboratories

▪ The spectrum of usability testing

▪ Universal usability testing

▪ This approach tests interfaces with highly diverse users,

hardware, software platforms, and networks.

▪ When a wide range of international users is anticipated, such as

for

▪ consumer electronics products,

▪ web-based information services, or

▪ e-government services,

▪ ambitious testing is necessary to clean up problems and thereby help

ensure success.

▪ Being aware of any perceptual or physical limitations of the

users (e.g., vision impairments, hearing difficulties, motor or

mobility impairments)

▪ and modifying the testing to accommodate these limitations will

result in the creation of products that can be used by a wider

variety of users

Usability Testing and Laboratories

▪ The spectrum of usability testing

▪ Field tests and portable labs

▪ This testing method puts new interfaces to work in realistic

environments or in a more naturalistic environment in the

field for a fixed trial period.

▪ These same tests can be repeated over longer time periods to

do longitudinal testing.

▪ Portable usability laboratories with recording and logging

facilities have been developed to support more thorough

field testing.

▪ Sometimes the interface requires a true immersion into the

environment and an "in-the-wild" testing procedure is

required (Rogers et al., 2013).


Usability Testing and Laboratories

▪ The spectrum of usability testing

▪ Remote usability testing

▪ Since web-based applications are available across the world, it is

tempting to conduct usability tests online, avoiding the complexity and

cost of bringing participants to a lab.

▪ This makes it possible to have larger numbers of participants with more

diverse backgrounds, and it may add to the realism, since participants

do their tests in their own environments and use their own equipment.

▪ Participants can be recruited by e-mail from customer lists or through

online communities including Amazon Mechanical Turk.

▪ These tests can be performed both synchronously (users do tasks at the

same time while the evaluator observes) and asynchronously (users

perform tasks independently and the evaluator looks at the results

later).

▪ There are many platforms that support this type of testing. They include

Citrix GoToMeeting, Cisco WebEx, IBM Sametime, join.me, and Google

Hangouts.

Usability Testing and Laboratories

▪ The spectrum of usability testing

▪ Can-you-break-this tests

▪ Game designers pioneered the can-you-break-this

approach to usability testing by providing energetic

teenagers with the challenge of trying to beat new games.

▪ This destructive testing approach, in which the users try to find

fatal flaws in the system or otherwise destroy it, has been

used in other types of projects as well and should be

considered seriously.


Survey Instruments

▪ User surveys (written or online)

▪ are familiar, inexpensive, and generally acceptable companions

for usability tests and expert reviews.

▪ Managers and users can easily grasp the notion of surveys, and

the typically large numbers of respondents (hundreds to

thousands of users) confer a sense of authority compared to the

potentially biased and highly variable results from small numbers

of usability-test participants or expert reviewers.

▪ The keys to successful surveys are clear goals in advance and

development of focused items that help to attain those goals.

▪ Two critical aspects of survey design are validity and reliability.

Experienced surveyors know that care is needed during design,

administration, and data analysis (Lazar et al., 2009; Cairns and

Cox, 2011; Kohavi et al., 2013; Tullis and Albert, 2013).


Survey Instruments

▪ Preparing and designing survey questions

▪ Users can be asked for their subjective impressions about

specific aspects of the interface, such as the representation

of:

▪ Task domain objects and actions

▪ Interface domain metaphors and action handles

▪ Syntax of inputs and design of screen displays


Survey Instruments

▪ Sample questionnaires

▪ Questionnaire for User Interaction Satisfaction (QUIS).

▪ The QUIS has been applied in many projects with

thousands of users, and new versions have been created

that include items relating to website design.

Survey Instruments

▪ Sample questionnaires

▪ System Usability Scale (SUS)

▪ Developed by John Brooke, it is sometimes referred to as the "quick and dirty" scale.

▪ The SUS consists of 10 statements with which users rate their agreement (on a 5-point scale).

▪ Half of the statements are positively worded, and the other half are negatively worded. A score from 0 to 100 is computed that can be viewed as a percentage.
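
The SUS score computation can be sketched as below. The scoring rule is the standard published one rather than something stated on the slide: odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5. The function name and the sample responses are illustrative.

```python
def sus_score(responses):
    """Compute a System Usability Scale score (0-100) from ten 1-5 ratings.

    Items are in questionnaire order: odd-numbered items are positively
    worded and even-numbered items are negatively worded.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1      # positive item: higher agreement is better
        else:
            total += 5 - rating      # negative item: lower agreement is better
    return total * 2.5


# Hypothetical participant who answers 4 on positive items and 2 on negative ones.
print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))
```

Although the result falls between 0 and 100, it is a scale score and not a literal percentage of tasks or users, so it is usually compared against other SUS scores.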


Acceptance Tests

▪ Measurable criteria for the user interface can be established for the following:

▪ Time for users to learn specific functions

▪ Speed of task performance

▪ Rate of errors by users

▪ User retention of commands over time

▪ Subjective user satisfaction
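
One way to make such criteria checkable is to pair each measurement with an agreed threshold. The sketch below assumes a simple pass/fail rule per criterion; every threshold and measured value is hypothetical and would normally come from the requirements document and the test results.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    measured: float
    threshold: float
    higher_is_better: bool = False

    def passed(self) -> bool:
        """A criterion passes when the measurement is on the right side of its threshold."""
        if self.higher_is_better:
            return self.measured >= self.threshold
        return self.measured <= self.threshold


# Hypothetical acceptance criteria and measurements, one per category above.
criteria = [
    Criterion("minutes to learn core functions", measured=25, threshold=30),
    Criterion("seconds per benchmark task", measured=48, threshold=45),
    Criterion("errors per 100 tasks", measured=3, threshold=5),
    Criterion("commands retained after one week (%)", 82, 75, higher_is_better=True),
    Criterion("mean satisfaction (1-5 scale)", 4.1, 4.0, higher_is_better=True),
]

failures = [c.name for c in criteria if not c.passed()]
print("accepted" if not failures else f"rejected: {failures}")
```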


Evaluation during Active Use and Beyond

▪ Interviews and focus-group discussions

▪ direct contact with users often leads to specific, constructive

suggestions.

▪ Professionally led focus groups can elicit surprising patterns

of usage or hidden problems, which can be quickly

explored and confirmed by participants.

▪ Interviews and focus groups can be arranged to target specific sets of users, such as experienced or long-term users of a product, generating different sets of issues than would be raised with novice users.

Evaluation during Active Use and Beyond

▪ Continuous user-performance data logging

▪ The software architecture should make it easy for system

managers to collect data about the

▪ patterns of interface usage,

▪ speed of user performance, rate of errors, and/or

▪ frequency of requests for online assistance.

▪ Logging data provide guidance in the

▪ acquisition of new hardware,

▪ changes in operating procedures,

▪ improvements to training,

▪ plans for system expansion, and so on.
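
The kind of summary such logging supports might look like the sketch below. The record layout (user, task, seconds, errors, help requests) and the values are assumptions made for illustration; a real system would log whatever its architecture exposes.

```python
from statistics import mean

# Hypothetical log records: (user, task, seconds, errors, help_requests).
log = [
    ("u1", "search", 12.0, 0, 0),
    ("u1", "checkout", 40.0, 2, 1),
    ("u2", "search", 18.0, 1, 0),
    ("u2", "checkout", 50.0, 0, 0),
]


def summarize(records):
    """Aggregate speed, error rate, and help-request frequency per task attempt."""
    n = len(records)
    return {
        "mean_seconds_per_task": mean(r[2] for r in records),
        "errors_per_task": sum(r[3] for r in records) / n,
        "help_requests_per_task": sum(r[4] for r in records) / n,
    }


summary = summarize(log)
print(summary)
```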


Evaluation during Active Use and Beyond

▪ Online or chat consultants, e-mail, and online

suggestion boxes

▪ Online or chat consultants can provide extremely effective

and personal assistance to users who are experiencing

difficulties.

▪ Many users feel reassured if they know that there is a

human being to whom they can turn when problems arise.

▪ These consultants are an excellent source of information

about problems users are having and can suggest

improvements and potential extensions.

Evaluation during Active Use and Beyond

▪ Discussion groups, wikis, newsgroups, and search

▪ Many interaction designers and website managers offer

users discussion groups, newsgroups, or wikis to permit

posting of open messages and questions.

▪ Discussion groups usually offer lists of item headlines,

allowing users to scan for relevant topics. User-generated

content fuels these discussion groups.

▪ Almost anyone can add new items, but usually someone moderates the discussion to ensure that offensive, useless, outdated, or repetitious items are removed.


Evaluation during Active Use and Beyond

▪ Tools for automated evaluation

▪ Software tools can be effective in evaluating user interfaces

for applications, websites, and mobile devices.

▪ Even straightforward tools to check spelling or concordance

of terms benefit interaction designers.

▪ Simple metrics that report numbers of pages, widgets, or

links between pages capture the size of a user interface

project, but the

▪ inclusion of more sophisticated evaluation procedures can

allow interaction designers to assess whether a menu tree is

too deep or contains redundancies,

▪ whether labels have been used consistently,

▪ whether all buttons have proper transitions associated with

them, and so on.
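
A minimal sketch of two of these checks, menu depth and redundant labels, is shown below. The nested-dictionary representation of a menu tree and the sample menu are assumptions for illustration; real tools would read the structure from the interface definition.

```python
from collections import defaultdict

# Hypothetical menu tree: each key is a label, each value is its submenu.
menu = {
    "File": {
        "Open": {},
        "Save": {},
        "Export": {"PDF": {}, "Image": {"PNG": {}, "JPEG": {}}},
    },
    "Edit": {"Copy": {}, "Paste": {}},
    "Tools": {"Export": {}},
}


def depth(tree):
    """Number of menu levels a user must descend to reach the deepest item."""
    if not tree:
        return 0
    return 1 + max(depth(child) for child in tree.values())


def label_paths(tree, prefix=()):
    """Yield (label, path) for every item in the tree."""
    for label, child in tree.items():
        path = prefix + (label,)
        yield label, path
        yield from label_paths(child, path)


def redundant_labels(tree):
    """Return labels that occur at more than one place in the tree."""
    paths = defaultdict(list)
    for label, path in label_paths(tree):
        paths[label].append(" > ".join(path))
    return {label: found for label, found in paths.items() if len(found) > 1}


print("depth:", depth(menu))
print("redundant:", redundant_labels(menu))
```

What counts as "too deep" is a design decision; the check only reports the number so that it can be compared against whatever limit the team has agreed on.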

Evaluation during Active Use and Beyond

▪ Tools for automated evaluation

▪ Research recommendations

▪ Keep average link text to two to three words, use sans serif fonts, and apply colors to highlight headings.

▪ In e-commerce, mobile, entertainment, and gaming applications, attractiveness may be more important than rapid task execution.

▪ Users may prefer designs that are comprehensible, predictable, and visually appealing and that incorporate relevant content.


Evaluation during Active Use and Beyond

▪ Tools for automated evaluation

▪ run-time logging software

▪ captures the users’ patterns of activity

▪ Simple reports, such as the frequency of each error message, menu-item selection, dialog-box appearance, help invocation, form-field usage, or webpage access, are of great benefit to maintenance personnel and to revisers of the initial design.
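
A frequency report of this kind can be sketched with a counter over logged events. The event kinds and details below are hypothetical stand-ins for whatever the run-time logger actually records.

```python
from collections import Counter

# Hypothetical run-time log: (event kind, detail).
events = [
    ("error", "File not found"),
    ("menu", "File > Save"),
    ("error", "File not found"),
    ("help", "printing"),
    ("error", "Disk full"),
    ("menu", "File > Save"),
]


def frequency_report(events, kind):
    """Count how often each detail occurs for one kind of event, most frequent first."""
    return Counter(
        detail for event_kind, detail in events if event_kind == kind
    ).most_common()


for message, count in frequency_report(events, "error"):
    print(f"{count:3d}  {message}")
```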

Evaluation during Active Use and Beyond

▪ Tools for automated evaluation

▪ evaluating mobile devices in the field

▪ A log-file-recording tool that captures

▪ clicks with associated timestamps and positions on the display,

▪ keeping track of items selected and display changes,

▪ capturing the page shots, and

▪ recording when a user is finished, can provide valuable

information for analysis.


Controlled Psychologically Oriented Experiments

▪ Experimental approaches

▪ The classic scientific method for interface research, which is based on controlled experimentation, has this basic outline:

▪ Understanding of a practical problem and related theory

▪ Lucid statement of a testable hypothesis

▪ Manipulation of a small number of independent variables

▪ Measurement of specific dependent variables

▪ Careful selection and assignment of subjects

▪ Control for bias in subjects, procedures, and materials

▪ Application of statistical tests

▪ Interpretation of results, refinement of theory, and guidance for

experimenters


Controlled Psychologically Oriented Experiments

▪ Experimental design

▪ it is important that the sample is representative of the target

users for the interface

▪ Users are frequently grouped or categorized by some sort of

demographics, such as age, gender, computer experience, or

other attribute.

▪ When selecting participants from a population to create the

sample, the sampling technique needs to be considered.

▪ Are people selected randomly?

▪ Is there a stratified subsample that should be used?

▪ The sample size is another consideration.

▪ It is important to define a confidence level that needs to be

met for the study
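
To show how sample size and confidence level interact, here is a rough sketch using the common formula for estimating a proportion, n = z² p (1 - p) / e². The formula choice, the 5% margin of error, and the worst-case p = 0.5 are assumptions for illustration; comparative experiments usually call for a power analysis instead.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(confidence=0.95, margin=0.05, p=0.5):
    """Participants needed to estimate a proportion within +/- margin.

    Uses n = z^2 * p * (1 - p) / margin^2 with the two-sided z value
    for the requested confidence level.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)


for level in (0.90, 0.95, 0.99):
    print(level, sample_size_for_proportion(confidence=level))
```

Raising the confidence level or tightening the margin increases the required sample, which is why the level should be fixed before recruiting participants.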

Controlled Psychologically Oriented Experiments

▪ Experimental design

▪ within subject design

▪ each subject performs experiment under each condition.

▪ transfer of learning possible

▪ less costly and less likely to suffer from user variation.

▪ between subject design

▪ each subject performs under only one condition

▪ no transfer of learning

▪ more users required

▪ variation can bias results
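
The two designs lead to different participant plans, sketched below. Rotating the order of conditions in the within-subjects plan is one common way to limit transfer of learning, and the seeded shuffle in the between-subjects plan is only there to make the example repeatable; both are assumptions of this sketch, as are the participant and condition names.

```python
import random
from itertools import cycle, permutations


def within_subjects_plan(participants, conditions):
    """Every participant does all conditions; the order rotates across participants."""
    orders = cycle(permutations(conditions))
    return {p: list(next(orders)) for p in participants}


def between_subjects_plan(participants, conditions, seed=0):
    """Every participant does one condition; groups are balanced after a shuffle."""
    shuffled = list(participants)
    random.Random(seed).shuffle(shuffled)
    return {p: conditions[i % len(conditions)] for i, p in enumerate(shuffled)}


participants = ["p1", "p2", "p3", "p4"]
conditions = ["natural", "abstract"]

within = within_subjects_plan(participants, conditions)
between = between_subjects_plan(participants, conditions)
print(within)
print(between)
```

With four participants, the within-subjects plan yields four measurements per condition, while the between-subjects plan yields only two, which illustrates why the latter needs more users.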


Controlled Psychologically Oriented Experiments

▪ Experimental factors

▪ Subjects

▪ who – representative, sufficient sample

▪ chosen to match the expected user population as closely as possible

▪ Variables

▪ things to modify and measure

▪ Hypothesis

▪ what you’d like to show

▪ Experimental design

▪ how you are going to do it

▪ independent variable (IV)

characteristic changed to produce different conditions

e.g. interface style, number of menu items

▪ dependent variable (DV)

characteristics measured in the experiment

e.g. time taken, number of errors.

Controlled Psychologically Oriented Experiments

▪ Hypothesis

▪ prediction of outcome

▪ A hypothesis is a prediction of the outcome of an experiment.

▪ framed in terms of IV (independent variable) and DV

(dependent variable)

e.g. “error rate will increase as font size decreases”

▪ null hypothesis:

▪ states no difference between conditions

▪ aim is to disprove this

e.g. null hyp. = “no change with font size”


Controlled Psychologically Oriented Experiments

▪ An example: evaluating icon designs

▪ Imagine you are designing a new interface to a document-

processing package, which is to use icons for presentation.

▪ You are considering two styles of icon design and you wish to

know which design will be easier for users to remember.

▪ One set of icons uses naturalistic images (based on a paper

document metaphor),

▪ the other uses abstract images.

▪ How might you design an experiment to help you decide

which style to use?

Controlled Psychologically Oriented Experiments

▪ An example: evaluating icon designs

▪ devise two interfaces composed of blocks of icons, one for

each condition.

▪ The user is presented with a task (say ‘delete a document’) and is required to select the appropriate icon.


Controlled Psychologically Oriented Experiments

▪ An example: evaluating icon designs

▪ Form a Hypothesis

▪ what do you consider to be the likely outcome?

▪ In this case, you might expect the natural icons to be easier to recall

since they are more familiar to users.

▪ The null hypothesis in this case is that there will be no difference

between recall of the icon types.

▪ Independent Variable

▪ style of icon

▪ Dependent Variable

▪ the number of mistakes in selection

▪ the time taken to select an icon

▪ Experimental Method

▪ within-subjects
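
For a within-subjects design like this one, each participant yields a pair of error counts, one per icon style. The sketch below tests the null hypothesis with an exact sign-flip permutation test on the paired differences. The slides do not name a test, so this choice is an assumption (a paired t-test would be a parametric alternative), and the error counts are invented.

```python
from itertools import product


def paired_permutation_test(a, b):
    """Exact two-sided sign-flip permutation test on paired differences.

    Under the null hypothesis, each participant's difference is equally
    likely to have either sign, so every sign pattern is enumerated.
    """
    diffs = [x - y for x, y in zip(a, b)]
    observed = abs(sum(diffs))
    extreme = 0
    total = 0
    for signs in product((1, -1), repeat=len(diffs)):
        total += 1
        if abs(sum(s * d for s, d in zip(signs, diffs))) >= observed:
            extreme += 1
    return extreme / total


# Hypothetical selection errors per participant under each icon style.
natural_errors = [2, 1, 3, 0, 2, 1, 2, 1]
abstract_errors = [4, 3, 3, 2, 5, 2, 4, 3]

p_value = paired_permutation_test(natural_errors, abstract_errors)
print(f"p = {p_value:.4f}")
```

Enumerating all sign patterns is only practical for small samples (2^n patterns for n participants); larger studies would sample patterns at random or use a standard statistics package.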

Controlled Psychologically Oriented Experiments

▪ Analysis of data

▪ Before you start to do any statistics:

▪ look at data

▪ save original data

▪ Choice of statistical technique depends on

▪ type of data

▪ information required

▪ Type of data

▪ discrete - finite number of values

▪ for example, a screen color that can be red, green or blue

▪ continuous - any value

▪ for example a person’s height or the time taken to complete a task

▪ continuous to discrete

▪ A continuous variable can be rendered discrete by clumping it into

classes, for example we could divide heights into short (<5 ft (1.5

m)), medium (5–6 ft (1.5–1.8 m)) and tall (>6 ft (1.8 m)).
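
The height example can be written as a small classification function. The boundaries follow the classes given above; treating exactly 5 ft and exactly 6 ft as "medium" is an assumption, since the text does not say which class the boundary values belong to.

```python
def height_class(height_ft):
    """Turn a continuous height in feet into one of three discrete classes."""
    if height_ft < 5:
        return "short"
    if height_ft <= 6:
        return "medium"
    return "tall"


# Hypothetical measurements in feet.
heights = [4.8, 5.4, 5.9, 6.3]
print([height_class(h) for h in heights])
```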


Controlled Psychologically Oriented Experiments

▪ Analysis - types of test

▪ If the form of the data follows a known distribution then

special and more powerful statistical tests can be used.

▪ parametric

▪ assume normal distribution

▪ This means that if we plot a histogram of the random errors (a histogram groups numbers into ranges), they will form the well-known bell-shaped graph.

▪ Robust

▪ they give reasonable results even when the data are not precisely

normal

▪ powerful

Controlled Psychologically Oriented Experiments

▪ Analysis - types of test

▪ Checking whether data are really normal

▪ As a general rule, if data can be seen as the sum or average of

many small independent effects they are likely to be normal.

▪ For example, the time taken to complete a complex task is the

sum of the times of all the minor tasks of which it is

composed.

▪ On the other hand, a subjective rating of the usability of an

interface will not be normal.


Analysis - types of test

▪ Analysis - types of test

▪ non-parametric

▪ do not assume normal distribution

▪ These are statistical tests that make no assumptions about the

particular distribution and are usually based purely on the ranking

of the data.

▪ That is, each item of a data set (for example, 57, 32, 61, 49) is

reduced to its rank (3, 1, 4, 2), before analysis begins.

▪ less powerful

▪ given the same set of data, a parametric test might detect a difference

that the non-parametric test would miss

▪ more reliable

▪ more resistant to outliers

▪ contingency table

▪ classify data by discrete attributes

▪ count number of data items in each group
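
The rank reduction and the contingency table can both be sketched briefly. The rank function below reproduces the example data set from the text and assumes there are no tied values (ties would need averaged ranks); the contingency-table observations are invented.

```python
from collections import Counter


def ranks(values):
    """Replace each value by its rank, with 1 for the smallest (no ties assumed)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


print(ranks([57, 32, 61, 49]))

# Contingency table: classify observations by two discrete attributes and count.
# Hypothetical (experience, preferred style) observations.
observations = [
    ("novice", "icons"),
    ("novice", "menus"),
    ("expert", "icons"),
    ("novice", "icons"),
    ("expert", "menus"),
    ("expert", "menus"),
]
table = Counter(observations)
for (experience, style), count in sorted(table.items()):
    print(experience, style, count)
```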


References

▪ QUIS (http://lap.umd.edu/quis/)

▪ http://www.usability.gov

▪ http://www.allaboutux.org/all-methods

▪ http://www.usabilityfirst.com/

▪ https://www.nngroup.com/articles/ten-usability-heuristics/

▪ https://blinkux.com/

▪ Masip, L., Granollers, T., and Oliva, M., A heuristic evaluation experiment to validate the new set of usability heuristics, 2011 Eighth International Conference on Information Technology: New Generations (2011).

▪ Joyce, G., Lilley, M., Barker, T., and Jefferies, A., Adapting heuristics for

the mobile panorama, Interaction '14 (2014).

Dr. Ayesha Kashif – Riphah Int Univ 32

You might also like

- Requirements Management Plan A Complete Guide - 2019 EditionFrom EverandRequirements Management Plan A Complete Guide - 2019 EditionNo ratings yet

- Operational Readiness Review A Complete Guide - 2020 EditionFrom EverandOperational Readiness Review A Complete Guide - 2020 EditionNo ratings yet

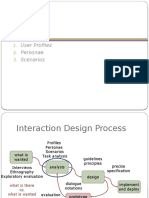

- User profiles, personae and scenarios analysisDocument32 pagesUser profiles, personae and scenarios analysisSalmanNo ratings yet

- User Experience DesignDocument6 pagesUser Experience DesignMarkus SantosoNo ratings yet

- User Story PDFDocument21 pagesUser Story PDFreachluckyyNo ratings yet

- Project CharterDocument2 pagesProject CharterkaranNo ratings yet

- MCE ERPIP Functional RequirementsDocument62 pagesMCE ERPIP Functional Requirementshddon100% (1)

- Business vs. IT: Solving The Communication GapDocument12 pagesBusiness vs. IT: Solving The Communication GapiarnabNo ratings yet

- User Interface Evaluation Methods For Internet Banking Web Sites: A Review, Evaluation and Case StudyDocument6 pagesUser Interface Evaluation Methods For Internet Banking Web Sites: A Review, Evaluation and Case StudyPanayiotis ZaphirisNo ratings yet

- Bahria University HCI Assignment on Usability and User Experience GoalsDocument3 pagesBahria University HCI Assignment on Usability and User Experience GoalsZubair TalibNo ratings yet

- 5 - Designing For Mobile and Other DevicesDocument25 pages5 - Designing For Mobile and Other DevicesHasnain AhmadNo ratings yet

- Create Functional Design for Proposed Nonprofit WebsiteDocument4 pagesCreate Functional Design for Proposed Nonprofit WebsiteRedwan Nehmud RazyNo ratings yet

- Discipline of HCIDocument39 pagesDiscipline of HCIAnum Jutt0% (1)

- SRS for Hamro Ramro TV SoftwareDocument11 pagesSRS for Hamro Ramro TV SoftwareNisha BaruwalNo ratings yet

- SRS On Online Billing and Stocking ManagementDocument16 pagesSRS On Online Billing and Stocking Managementrashivadekar_nitish100% (1)

- Wku TRANSPORTATION MANAGEMENT SYSTEM SadDocument11 pagesWku TRANSPORTATION MANAGEMENT SYSTEM SadnebyuNo ratings yet

- Emergency Services Directory Software ArchitectureDocument9 pagesEmergency Services Directory Software Architectureram0976928No ratings yet

- Software Requirements TraceabilityDocument20 pagesSoftware Requirements TraceabilityKishore ChittellaNo ratings yet

- Measuring Customer SatisfactionDocument11 pagesMeasuring Customer Satisfactionbogs_sm4544No ratings yet

- User Experience and Interface DesignDocument8 pagesUser Experience and Interface DesignjosephsubinNo ratings yet

- User Story Artifacts Dor DodDocument10 pagesUser Story Artifacts Dor DodJason NdekelengweNo ratings yet

- Requirements Traceability Matrix Sample - Airline BookingDocument5 pagesRequirements Traceability Matrix Sample - Airline Bookingsheilaabad8766No ratings yet

- Louw, Door Janne, (2006, May 10,2006) - Description With UML Hotel Reservation System. Developed A Hotel Management System That Can Be Used OnlineDocument12 pagesLouw, Door Janne, (2006, May 10,2006) - Description With UML Hotel Reservation System. Developed A Hotel Management System That Can Be Used OnlineSherry IgnacioNo ratings yet

- Agile Vs Waterfall DebateDocument5 pagesAgile Vs Waterfall DebatemarvinmayorgaNo ratings yet

- Heuristic Evaluation of Informatica Axon by 4 UX Experts PDFDocument43 pagesHeuristic Evaluation of Informatica Axon by 4 UX Experts PDFpixeljoyNo ratings yet

- Project Scope Management: Collecting Requirements, Defining Scope, Creating WBSDocument24 pagesProject Scope Management: Collecting Requirements, Defining Scope, Creating WBSAbdallah BehriNo ratings yet

- Requirements GatheringDocument13 pagesRequirements GatheringNidaliciousNo ratings yet

- Business Requirement Document (BRD) - Real-Time Chat ApplicationDocument4 pagesBusiness Requirement Document (BRD) - Real-Time Chat Applicationaadityaagarwal33No ratings yet

- User Documentation PlanDocument8 pagesUser Documentation PlanImran ShahidNo ratings yet

- SRS - Software Requirement SpecificationDocument5 pagesSRS - Software Requirement SpecificationMeet VaghasiyaNo ratings yet

- Cost EstimationDocument77 pagesCost EstimationFahmi100% (1)

- Mobile Bus Ticketing System DevelopmentDocument8 pagesMobile Bus Ticketing System Developmentralf casagdaNo ratings yet

- Benchmark - Mobile App Planning and Design MTDocument14 pagesBenchmark - Mobile App Planning and Design MTMarisa TolbertNo ratings yet

- Final Web Experience Statement of WorkDocument13 pagesFinal Web Experience Statement of Workapi-106423440No ratings yet

- Explain User Story MappingDocument3 pagesExplain User Story MappingMadhavi LataNo ratings yet

- Project ProposalDocument6 pagesProject ProposalKuro NekoNo ratings yet

- Communications For Development and International Organisations in PalestineDocument128 pagesCommunications For Development and International Organisations in PalestineShehab ZahdaNo ratings yet

- SRS TemplateDocument29 pagesSRS TemplateZain Ul IslamNo ratings yet

- Typical Elements in A Project CharterDocument28 pagesTypical Elements in A Project CharterKGrace Besa-angNo ratings yet

- 10 Reqs GatheringDocument21 pages10 Reqs GatheringRhea Soriano CalvoNo ratings yet

- RPA Projects SynopsisDocument4 pagesRPA Projects Synopsisshruthi surendranNo ratings yet

- Requirements Elicitation OverviewDocument45 pagesRequirements Elicitation OverviewSaleem IqbalNo ratings yet

- Srs 140914025914 Phpapp02Document53 pagesSrs 140914025914 Phpapp02hardik4mailNo ratings yet

- Intranet Best Practices GuideDocument5 pagesIntranet Best Practices GuidemaheshdbaNo ratings yet

- Internet Cafe Management - Plain SRSDocument13 pagesInternet Cafe Management - Plain SRSRaulkrishn50% (2)

- Writing Software Requirements SpecificationsDocument16 pagesWriting Software Requirements Specificationskicha0230No ratings yet

- Valentinos Computerised System: MSC in Software Engineering With Management StudiesDocument19 pagesValentinos Computerised System: MSC in Software Engineering With Management StudiesGaurang PatelNo ratings yet

- Formal Usability ProposalDocument9 pagesFormal Usability ProposalCamilo ManjarresNo ratings yet

- Analysis: User Profiles Personae ScenariosDocument32 pagesAnalysis: User Profiles Personae ScenariosSalmanNo ratings yet

- Requirements Engineer JobDocument3 pagesRequirements Engineer JobFaiz InfyNo ratings yet

- Free Custom Software Comparison ReportDocument7 pagesFree Custom Software Comparison ReportMiguel Angel AlonsoNo ratings yet

- Uml Deployment DiagramDocument3 pagesUml Deployment DiagramMaryus SteauaNo ratings yet

- Intro To HCI and UsabilityDocument25 pagesIntro To HCI and UsabilityHasnain AhmadNo ratings yet

- 5 - Designing For Mobile and Other DevicesDocument25 pages5 - Designing For Mobile and Other DevicesHasnain AhmadNo ratings yet

- 4 - Designing For WebDocument25 pages4 - Designing For WebHasnain AhmadNo ratings yet

- Quiz4 AHCI Case StudiesDocument1 pageQuiz4 AHCI Case StudiesHasnain AhmadNo ratings yet

- Quiz3 AHCIDocument1 pageQuiz3 AHCIHasnain AhmadNo ratings yet

- Interactive DesignDocument37 pagesInteractive DesignHasnain AhmadNo ratings yet

- AHCI OutlineDocument1 pageAHCI OutlineHasnain AhmadNo ratings yet

- 9 - Design Case StudiesDocument22 pages9 - Design Case StudiesHasnain Ahmad100% (1)

- Designing and Evaluating Usable Technology in Industrial ResearchDocument118 pagesDesigning and Evaluating Usable Technology in Industrial ResearchHasnain AhmadNo ratings yet

- 7 - Ideation and PrototypingDocument44 pages7 - Ideation and PrototypingHasnain AhmadNo ratings yet

- Nutrition PAKDocument2 pagesNutrition PAKHasnain AhmadNo ratings yet

- MgstreamDocument2 pagesMgstreamSaiful ManalaoNo ratings yet

- Target products to meet 20% demandDocument12 pagesTarget products to meet 20% demandAlma Dela PeñaNo ratings yet

- Fabric Trademark and Brand Name IndexDocument15 pagesFabric Trademark and Brand Name Indexsukrat20No ratings yet

- Landman Training ManualDocument34 pagesLandman Training Manualflashanon100% (2)

- DepEd Memorandum on SHS Curriculum MappingDocument8 pagesDepEd Memorandum on SHS Curriculum MappingMichevelli RiveraNo ratings yet

- Engineers Guide To Microchip 2018Document36 pagesEngineers Guide To Microchip 2018mulleraf100% (1)

- TNPSC Vas: NEW SyllabusDocument12 pagesTNPSC Vas: NEW Syllabuskarthivisu2009No ratings yet

- Density and Buoyancy Practice Test AnswersDocument9 pagesDensity and Buoyancy Practice Test AnswersYesha ShahNo ratings yet

- Report On Foundations For Dynamic Equipment: Reported by ACI Committee 351Document14 pagesReport On Foundations For Dynamic Equipment: Reported by ACI Committee 351Erick Quan LunaNo ratings yet

- Lead and Manage Team Effectiveness for BSBWOR502Document38 pagesLead and Manage Team Effectiveness for BSBWOR502roopaNo ratings yet

- Major Discoveries in Science HistoryDocument7 pagesMajor Discoveries in Science HistoryRoland AbelaNo ratings yet

- Geraldez Vs Ca 230 Scra 329Document12 pagesGeraldez Vs Ca 230 Scra 329Cyrus Pural EboñaNo ratings yet

- SD NEGERI PASURUHAN PEMERINTAH KABUPATEN TEMANGGUNGDocument5 pagesSD NEGERI PASURUHAN PEMERINTAH KABUPATEN TEMANGGUNGSatria Ieea Henggar VergonantoNo ratings yet

- PCC 3300 PDFDocument6 pagesPCC 3300 PDFdelangenico4No ratings yet

- Surveying 2 Practical 3Document15 pagesSurveying 2 Practical 3Huzefa AliNo ratings yet

- Anne Frank Halloween Costume 2Document5 pagesAnne Frank Halloween Costume 2ive14_No ratings yet

- GES1003 AY1819 CLS Tutorial 1Document4 pagesGES1003 AY1819 CLS Tutorial 1AshwinNo ratings yet

- OsteoporosisDocument57 pagesOsteoporosisViviViviNo ratings yet

- Unit 2 - LISDocument24 pagesUnit 2 - LISThục Anh NguyễnNo ratings yet

- Merger Case AnalysisDocument71 pagesMerger Case Analysissrizvi2000No ratings yet

- Researching Indian culture and spirituality at Auroville's Centre for Research in Indian CultureDocument1 pageResearching Indian culture and spirituality at Auroville's Centre for Research in Indian CultureJithin gtNo ratings yet

- BIODATADocument1 pageBIODATAzspi rotc unitNo ratings yet

- Evosys Fixed Scope Offering For Oracle Fusion Procurement Cloud ServiceDocument12 pagesEvosys Fixed Scope Offering For Oracle Fusion Procurement Cloud ServiceMunir AhmedNo ratings yet

- Ume Mri GuideDocument1 pageUme Mri GuideHiba AhmedNo ratings yet

- Use VCDS with PC lacking InternetDocument1 pageUse VCDS with PC lacking Internetvali_nedeleaNo ratings yet

- Unit 1 Advanced WordDocument115 pagesUnit 1 Advanced WordJorenn_AyersNo ratings yet

- Ky203817 PSRPT 2022-05-17 14.39.33Document8 pagesKy203817 PSRPT 2022-05-17 14.39.33Thuy AnhNo ratings yet

- Research Proposal HaDocument3 pagesResearch Proposal Haapi-446904695No ratings yet

- BATCH Bat Matrix OriginalDocument5 pagesBATCH Bat Matrix OriginalBarangay NandacanNo ratings yet

- 1967 Painting Israeli VallejoDocument1 page1967 Painting Israeli VallejoMiloš CiniburkNo ratings yet