IJRIM Volume 1, Issue 4(August, 2011) (ISSN 2231-4334)

A GENETIC ALGORITHM APPROACH FOR IMPROVING THE AVERAGE RELEVANCY OF RETRIEVED DOCUMENTS USING JACCARD SIMILARITY COEFFICIENT

Mr. Vikas Thada*

Mr. Sandeep Joshi**

ABSTRACT

The rapid growth of the World-Wide Web poses unprecedented scaling challenges for general-purpose crawlers and search engines. A focused crawler aims to selectively seek out pages that are relevant to a pre-defined set of topics. Besides specifying topics by keywords, it is customary to use exemplary documents to compute the similarity of a given web document to the topic. In this paper we present a method for finding the most relevant documents for a given set of keywords using the Jaccard similarity coefficient. We use a genetic algorithm to show that the average similarity of documents to the query increases when the initial set of keywords is expanded with some new keywords. The similarity coefficient is computed for a set of documents retrieved from Google for a given query, and the average relevancy is then calculated. The query is then expanded with new keywords, and we show that the average relevancy of the new set of documents increases.

Keywords: algorithm, average, coefficient, genetic, Jaccard, relevancy.

* M.Tech (CS) IV Sem, Department of Computer Engineering, Sobhasaria Engineering College, Sikar (Rajasthan).

** HOD (CS & IT), Department of Computer Engineering, Sobhasaria Engineering College, Sikar (Rajasthan).

International Journal of Research in IT & Management

http://www.mairec org 50


1. INTRODUCTION

The rapid growth of the World-Wide Web poses unprecedented scaling challenges for general-purpose crawlers and search engines. The first generation of crawlers, on which most web search engines are based, relies heavily on traditional graph algorithms, such as breadth-first or depth-first traversal, to index the web. A core set of URLs is used as a seed set, and the algorithm recursively follows hyperlinks down to other documents. Document content is paid little heed, since the ultimate goal of the crawl is to cover the whole Web [1]. The motivation for focused crawlers comes from the poor performance of general-purpose search engines, which depend on the results of generic Web crawlers. Focused crawlers therefore aim to search and retrieve only the subset of the World-Wide Web that pertains to a specific topic of relevance. The focused-crawling search algorithm is a key component of a focused crawler and directly affects search quality.

The ideal focused crawler retrieves the maximal set of relevant pages while simultaneously traversing the minimal number of irrelevant documents on the web. Compared to standard web search engines, focused crawlers yield good recall as well as good precision by restricting themselves to a limited domain [2].

Focused crawlers look for a subject, usually a set of keywords dictated by the search engine, as they traverse web pages. Instead of extracting many documents from the web without any priority, a focused crawler follows the most appropriate links, leading to the retrieval of more relevant pages and greater savings in resources. Focused crawlers usually use a best-first search method, called the crawling strategy, to determine which hyperlink to follow next. Better crawling strategies result in higher precision of retrieval.
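The best-first idea can be sketched on a toy link graph. This is only an illustration under stated assumptions: the in-memory `toy_graph`, the `toy_scores` relevance estimates, and the function name `best_first_crawl` are hypothetical and do not come from the paper, which does not fetch pages this way.

```python
import heapq

def best_first_crawl(graph, scores, seeds, max_pages):
    """Visit pages in order of decreasing estimated relevance.

    graph  : dict mapping a URL to the URLs it links to (toy stand-in for the web)
    scores : dict mapping a URL to a relevance estimate (hypothetical values)
    """
    # heapq is a min-heap, so scores are negated to pop the best link first.
    frontier = [(-scores.get(url, 0.0), url) for url in seeds]
    heapq.heapify(frontier)
    seen = set(seeds)
    visited = []
    while frontier and len(visited) < max_pages:
        _, url = heapq.heappop(frontier)
        visited.append(url)
        for link in graph.get(url, []):
            if link not in seen:
                seen.add(link)
                heapq.heappush(frontier, (-scores.get(link, 0.0), link))
    return visited

toy_graph = {"seed": ["a", "b"], "a": ["c"], "b": ["d"], "c": [], "d": []}
toy_scores = {"seed": 1.0, "a": 0.2, "b": 0.9, "c": 0.1, "d": 0.8}
order = best_first_crawl(toy_graph, toy_scores, ["seed"], max_pages=4)
print(order)  # ['seed', 'b', 'd', 'a']
```

The promising branch through `b` and `d` is exhausted before the weaker link `a` is followed, which is the behaviour a breadth-first crawl would not give.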

2. GENETIC ALGORITHM

Genetic Algorithms [6] are based on the principle of heredity and evolution, which claims that "in each generation the stronger individual survives and the weaker dies". Therefore, each new generation would contain stronger (fitter) individuals than its ancestors. In a typical Genetic Algorithm problem, the search space is usually represented as a number of individuals called chromosomes, which make up the initial population, and the goal is to obtain a set of qualified chromosomes after some generations. The quality of a chromosome is measured by a fitness function (Jaccard in our experiment). Each generation produces new children by applying the genetic crossover and mutation operators. Usually, the process ends when two consecutive generations do not produce a significant fitness improvement, or it terminates after producing a certain number of new generations.
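The generational loop and its two stopping rules can be sketched as follows. This is a minimal sketch, not the authors' implementation: the function `run_ga`, the `patience` parameter (a generalisation of the "no significant improvement" rule), single-point crossover, bit-flip mutation, and the toy fitness `ones_ratio` are all assumptions made for illustration. The fitness is assumed to be non-negative.

```python
import random

def run_ga(fitness, length, pop_size=20, pc=0.8, pm=0.01,
           max_generations=100, patience=10, seed=0):
    """Evolve binary chromosomes; return (best chromosome, generations run)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    best = max(population, key=fitness)
    stale = 0        # generations since the best fitness last improved
    generations = 0
    while generations < max_generations and stale < patience:
        # Fitness-proportionate choice of parents; the small constant keeps
        # the weights valid when every fitness is zero.
        weights = [fitness(c) + 1e-9 for c in population]
        children = []
        while len(children) < pop_size:
            p1, p2 = rng.choices(population, weights=weights, k=2)
            c1, c2 = p1[:], p2[:]
            if rng.random() < pc:              # single-point crossover
                cut = rng.randint(1, length - 1)
                c1, c2 = p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]
            for child in (c1, c2):
                for i in range(length):
                    if rng.random() < pm:      # bit-flip mutation
                        child[i] = 1 - child[i]
                children.append(child)
        population = children[:pop_size]
        generations += 1
        champion = max(population, key=fitness)
        if fitness(champion) > fitness(best):
            best, stale = champion, 0
        else:
            stale += 1
    return best, generations

def ones_ratio(chromosome):
    """Toy fitness (hypothetical): fraction of genes set to 1."""
    return sum(chromosome) / len(chromosome)

best, generations = run_ga(ones_ratio, length=21)
print(ones_ratio(best), generations)
```

The loop stops either when `patience` consecutive generations bring no improvement or when `max_generations` is reached, mirroring the two termination conditions described above.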

3. THE VECTOR SPACE DOCUMENT MODEL[7]

In the vector space model, we are given a collection of documents and a query. The goal of

the query is to retrieve relevant documents out of the collection. In this model, both the

documents and the query are represented as vectors.

Once we have the document and query vectors, retrieving relevant documents amounts to comparing the two vectors. Given the following terminology, vectors can be compared in several different ways:

Given vectors:

X = (x1, x2, …, xt) and

Y = (y1, y2, …, yt)

where:

xi is the weight of term i in the document and

yi is the weight of term i in the query.

Additionally, for binary weights, let:

|X| = number of 1s in the document and

|Y| = number of 1s in the query.

The following are some methods for comparing vectors:

Figure 1: various similarity coefficients
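For reference, the commonly cited textbook forms of these coefficients are written out below in the notation defined above. They are standard definitions and may differ in presentation from the figure; the symbol |X ∩ Y| (the number of positions where both vectors hold a 1) is introduced here and is not part of the terminology above.

```latex
\begin{align*}
\text{Inner product:}\quad
  & \operatorname{sim}(X,Y) = \sum_{i=1}^{t} x_i y_i
  && \text{binary: } |X \cap Y| \\[4pt]
\text{Dice:}\quad
  & \operatorname{sim}(X,Y) = \frac{2\sum_{i=1}^{t} x_i y_i}
       {\sum_{i=1}^{t} x_i^2 + \sum_{i=1}^{t} y_i^2}
  && \text{binary: } \frac{2\,|X \cap Y|}{|X| + |Y|} \\[4pt]
\text{Cosine:}\quad
  & \operatorname{sim}(X,Y) = \frac{\sum_{i=1}^{t} x_i y_i}
       {\sqrt{\sum_{i=1}^{t} x_i^2 \,\sum_{i=1}^{t} y_i^2}}
  && \text{binary: } \frac{|X \cap Y|}{\sqrt{|X|\,|Y|}} \\[4pt]
\text{Jaccard:}\quad
  & \operatorname{sim}(X,Y) = \frac{\sum_{i=1}^{t} x_i y_i}
       {\sum_{i=1}^{t} x_i^2 + \sum_{i=1}^{t} y_i^2 - \sum_{i=1}^{t} x_i y_i}
  && \text{binary: } \frac{|X \cap Y|}{|X| + |Y| - |X \cap Y|}
\end{align*}
```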


Of the coefficients above, we use the Jaccard similarity coefficient to compare the document and query vectors.
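As a small worked example, the Jaccard coefficient of two weight vectors can be computed as below. The function name `jaccard` and the sample vectors are illustrative assumptions; the formula is the standard vector form of the coefficient.

```python
def jaccard(x, y):
    """Jaccard coefficient of two equal-length weight vectors."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(x, y))
    denom = sum(a * a for a in x) + sum(b * b for b in y) - dot
    # Two all-zero vectors share nothing, so define their similarity as 0.
    return dot / denom if denom else 0.0

doc = [1, 1, 0, 1, 0]    # hypothetical binary document vector
query = [1, 0, 0, 1, 1]  # hypothetical binary query vector
print(jaccard(doc, query))  # 2 shared terms / 4 distinct terms = 0.5
```

For binary weights the numerator counts the terms present in both vectors and the denominator counts the terms present in at least one of them.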

4. EXPERIMENT WORK AND EMPIRICAL RESULTS

In our experiment we initially selected a few queries and, for each, retrieved the first 10 documents from the Google search engine. To generate chromosomes, we extracted the keywords with the highest frequency from each of these pages. These keywords are arranged, in the same order in which their associated documents were downloaded, in an array of n elements, where n is the chromosome length. The chromosome length is a matter of choice and depends on the number of keywords collected from the 10 documents. We chose a chromosome length of 21.
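One way to read the chromosome construction is sketched below. Everything beyond the description above is an assumption: the tokenisation, the tiny `STOPWORDS` set, the `per_page` limit, the skipping of duplicate keywords, and the helper names `top_keywords` and `build_chromosome` are hypothetical.

```python
import re
from collections import Counter

STOPWORDS = {"the", "a", "of", "and", "to", "in", "is", "for"}  # hypothetical

def top_keywords(text, k):
    """Return the k most frequent non-stopword tokens of a page."""
    words = [w for w in re.findall(r"[a-z]+", text.lower())
             if w not in STOPWORDS]
    return [w for w, _ in Counter(words).most_common(k)]

def build_chromosome(pages, length, per_page=3):
    """Collect keywords page by page, in download order, up to `length` genes."""
    chromosome = []
    for text in pages:
        for word in top_keywords(text, per_page):
            if word not in chromosome and len(chromosome) < length:
                chromosome.append(word)
    return chromosome

pages = [
    "genetic algorithm genetic search algorithm genetic",
    "crawler web crawler genetic crawler web",
]
print(build_chromosome(pages, length=3, per_page=2))
# ['genetic', 'algorithm', 'crawler']
```

With 10 downloaded pages and a chromosome length of 21, `per_page` would need to be at least 3 for the array to be filled.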

The average relevancy of each set of documents for a single query was calculated using the Jaccard coefficient as the fitness function and applying the selection, crossover, and mutation operations. We used roulette-wheel selection to choose the fittest chromosomes after each generation.
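Roulette-wheel selection picks each chromosome with probability proportional to its fitness. The sketch below shows the mechanics with a cumulative sum; the function name `roulette_select`, the uniform fallback for an all-zero population, and the sample data are assumptions for illustration.

```python
import random
from itertools import accumulate

def roulette_select(population, fitnesses, rng):
    """Pick one individual with probability proportional to its fitness."""
    total = sum(fitnesses)
    if total <= 0:
        # No individual has positive fitness: fall back to a uniform pick.
        return rng.choice(population)
    spin = rng.random() * total
    for individual, cumulative in zip(population, accumulate(fitnesses)):
        if spin < cumulative:
            return individual
    return population[-1]  # guards against floating-point round-off

rng = random.Random(1)
counts = {"a": 0, "b": 0}
for _ in range(1000):
    counts[roulette_select(["a", "b"], [1.0, 3.0], rng)] += 1
print(counts)  # "b" is expected roughly three times as often as "a"
```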

In the Jaccard fitness function, dj is any document in the set and dq is the query document. Both are represented as vectors of n terms. For each term appearing in the query, a 1 was placed at the corresponding position if the term appears in any of the 10 documents in the set; otherwise a 0 was placed.
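The encoding and the averaging step can be sketched as follows. This is one possible reading of the description, with each document and the query encoded over the same list of n terms; the helper names and the sample data are hypothetical.

```python
def to_binary_vector(terms, words):
    """1 at each position whose term occurs in `words`, else 0."""
    present = set(words)
    return [1 if t in present else 0 for t in terms]

def jaccard_binary(x, y):
    """Jaccard coefficient for binary vectors: shared 1s over total distinct 1s."""
    common = sum(a & b for a, b in zip(x, y))
    union = sum(x) + sum(y) - common
    return common / union if union else 0.0

def average_relevancy(terms, documents, query):
    """Mean Jaccard similarity between the query and every document."""
    dq = to_binary_vector(terms, query)
    scores = [jaccard_binary(to_binary_vector(terms, d), dq) for d in documents]
    return sum(scores) / len(scores)

terms = ["genetic", "algorithm", "crawler", "web"]
documents = [
    ["genetic", "algorithm", "search"],  # vector [1, 1, 0, 0], similarity 1.0
    ["crawler", "web", "genetic"],       # vector [1, 0, 1, 1], similarity 0.25
]
query = ["genetic", "algorithm"]
print(average_relevancy(terms, documents, query))  # 0.625
```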


After finding the average relevancy of a set of documents for a single query, the same query was extended with one or two new keywords that were found in almost all of the downloaded pages. The results were quite promising and are presented below:

1. Probability of crossover Pc=0.8

2. Probability of mutation Pm=0.01

Table 1: Average relevancy using the Jaccard coefficient
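The query-expansion step described above, choosing new keywords that occur in almost all downloaded pages, could be approximated by ranking candidate terms by document frequency. The sketch below is an assumption about how such keywords might be picked; the function name `expansion_candidates`, the alphabetical tie-break, and the sample pages are hypothetical.

```python
from collections import Counter

def expansion_candidates(documents, query, how_many=2):
    """Rank non-query terms by the number of documents that contain them."""
    doc_freq = Counter()
    for words in documents:
        doc_freq.update(set(words))  # count each term once per document
    query_terms = set(query)
    ranked = sorted(
        (t for t in doc_freq if t not in query_terms),
        key=lambda t: (-doc_freq[t], t),  # most frequent first, then alphabetical
    )
    return ranked[:how_many]

documents = [
    ["genetic", "algorithm", "crawler"],
    ["genetic", "crawler", "web"],
    ["crawler", "search", "genetic"],
]
print(expansion_candidates(documents, ["genetic"]))  # ['crawler', 'algorithm']
```

Here `crawler` appears in every page and is the natural expansion term; `algorithm` is returned only because of the alphabetical tie-break among terms seen once.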

5. CONCLUSION & FUTURE WORK

We conducted several experiments using a number of initial keywords, as shown in the table above. However, we selected only the first 10 pages of the Google search results; this can be extended to 30-50 pages for a more precise calculation of efficiency. Further, we have shown results only for Pc=0.8 and Pm=0.01; results can also be obtained for other values of Pc and Pm. In conclusion, although the initial results are encouraging, there is still a long way to go to achieve the greatest possible crawling efficiency.

The work can be extended by using other similarity coefficients, such as Dice and cosine, and comparing the results.
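A comparison of this kind could start from a sketch like the one below, which evaluates the three coefficients on the same pair of binary vectors. The function names and the sample vectors are illustrative assumptions; the formulas are the standard binary forms.

```python
import math

def overlap(x, y):
    """Number of positions where both binary vectors hold a 1."""
    return sum(a & b for a, b in zip(x, y))

def jaccard(x, y):
    union = sum(x) + sum(y) - overlap(x, y)
    return overlap(x, y) / union if union else 0.0

def dice(x, y):
    total = sum(x) + sum(y)
    return 2 * overlap(x, y) / total if total else 0.0

def cosine(x, y):
    norm = math.sqrt(sum(x) * sum(y))
    return overlap(x, y) / norm if norm else 0.0

doc = [1, 1, 0, 1, 0]    # hypothetical document vector
query = [1, 0, 0, 1, 1]  # hypothetical query vector
for name, coefficient in (("jaccard", jaccard), ("dice", dice), ("cosine", cosine)):
    print(name, round(coefficient(doc, query), 3))
```

On any pair of binary vectors the Jaccard value never exceeds the Dice value, so the two rank documents identically and differ only in scale.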

6. REFERENCES

[1] M. Diligenti, F. Coetzee, S. Lawrence, C. L. Giles, M. Gori (2000), Focused Crawling using Context Graphs, Proceedings of the 26th International Conference on Very Large Databases, pp. 527-534.

[2] M. Ester, M. Gross, H.-P. Kriegel (2001), Focused Web Crawling: A Generic Framework for Specifying the User Interest and for Adaptive Crawling Strategies, Proceedings of the 27th International Conference on Very Large Databases, pp. 633-637.

[3] F. Menczer, G. Pant, P. Srinivasan, M. Ruiz (2001), Evaluating Topic-Driven Web Crawlers, Proceedings of the 24th Annual International ACM SIGIR Conference, pp. 531-535.

[4] J. Holland (1975), Adaptation in Natural and Artificial Systems, University of Michigan Press.

[5] D. E. Goldberg (1989), Genetic Algorithms in Search, Optimization, and Machine Learning, Addison-Wesley.

[6] M. Shokouhi, P. Chubak, Z. Raeesy (2005), Enhancing Focused Crawling with Genetic Algorithms, Vol. 4-6, pp. 503-508.

[7] Information retrieval.pdf

International Journal of Research in IT & Management

http://www.mairec org 55

You might also like

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (843)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5810)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (540)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (822)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1092)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (346)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (897)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- ABC's of AutolispDocument263 pagesABC's of Autolisptyke75% (8)

- Fire Pump Test Form: (Sign Here)Document2 pagesFire Pump Test Form: (Sign Here)Sofiq100% (1)

- Sap PP SopDocument35 pagesSap PP SopMarbs GraciasNo ratings yet

- Astm D2444Document8 pagesAstm D2444Hernando Andrés Ramírez GilNo ratings yet

- Ethics 2Document6 pagesEthics 2Heba BerroNo ratings yet

- Solving Generalized Assignment Problem With Genetic Algorithm and Lower Bound TheoryDocument5 pagesSolving Generalized Assignment Problem With Genetic Algorithm and Lower Bound TheoryVikasThadaNo ratings yet

- An Alternate Approach For Question Answering System in Bengali Language Using Classification TechniquesDocument11 pagesAn Alternate Approach For Question Answering System in Bengali Language Using Classification TechniquesVikasThadaNo ratings yet

- Efficient and Scalable Iot Service Delivery On CloudDocument8 pagesEfficient and Scalable Iot Service Delivery On CloudVikasThadaNo ratings yet

- Web Information RetrievalDocument4 pagesWeb Information RetrievalVikasThadaNo ratings yet

- Mohanty IEEE-CEM 2017-Oct CurationDocument8 pagesMohanty IEEE-CEM 2017-Oct CurationVikasThadaNo ratings yet

- Ieee Soli Ji 07367408Document6 pagesIeee Soli Ji 07367408VikasThadaNo ratings yet

- Computer Networks: M. Shamim Hossain, Ghulam MuhammadDocument11 pagesComputer Networks: M. Shamim Hossain, Ghulam MuhammadVikasThadaNo ratings yet

- Iot and Cloud Computing in Automation of Assembly Modeling SystemsDocument10 pagesIot and Cloud Computing in Automation of Assembly Modeling SystemsVikasThadaNo ratings yet

- Secure Data Analytics For Cloud-Integrated Internet of Things ApplicationsDocument12 pagesSecure Data Analytics For Cloud-Integrated Internet of Things ApplicationsVikasThadaNo ratings yet

- Advanced Encryption StandardDocument43 pagesAdvanced Encryption StandardVikasThadaNo ratings yet

- Towards Automated Iot Application Deployment by A Cloud-Based ApproachDocument8 pagesTowards Automated Iot Application Deployment by A Cloud-Based ApproachVikasThadaNo ratings yet

- Dr. Vikas Thada - Google Scholar Citations PDFDocument2 pagesDr. Vikas Thada - Google Scholar Citations PDFVikasThadaNo ratings yet

- Dr. Vikas Thada - Google Scholar Citations PDFDocument2 pagesDr. Vikas Thada - Google Scholar Citations PDFVikasThadaNo ratings yet

- Anmol 2 PDFDocument6 pagesAnmol 2 PDFVikasThadaNo ratings yet

- Documentation (VB Project)Document39 pagesDocumentation (VB Project)ankush_drudedude996264% (14)

- Education Short Answer Questions 2020Document22 pagesEducation Short Answer Questions 2020Mac DoeNo ratings yet

- ScoresheetDocument6 pagesScoresheetdohnny donNo ratings yet

- Grup 2Document6 pagesGrup 2FatihNo ratings yet

- 5C - Awalia Dewi - Selection and GradingDocument10 pages5C - Awalia Dewi - Selection and GradingAwalia DewiNo ratings yet

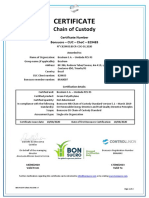

- BSCR-CERT - ChoC.F02 ENG v7 - BraskemPE5 - 2020 - AssinadoDocument2 pagesBSCR-CERT - ChoC.F02 ENG v7 - BraskemPE5 - 2020 - AssinadoAdrian BotanaNo ratings yet

- Summary Report On The Development of The SERT I SpacecraftDocument309 pagesSummary Report On The Development of The SERT I SpacecraftBob AndrepontNo ratings yet

- EventsmedialibraryresourcesesES 2023 Final Program PDFDocument52 pagesEventsmedialibraryresourcesesES 2023 Final Program PDFRobert Michael CorpusNo ratings yet

- A Critical Genre Based Approach To Teach Academic PDFDocument618 pagesA Critical Genre Based Approach To Teach Academic PDFOktari HendayantiNo ratings yet

- Bio Course Outline First YearDocument10 pagesBio Course Outline First YearFULYA YALDIZNo ratings yet

- African CTDDocument109 pagesAfrican CTDGuruprerna sehgalNo ratings yet

- 5th Investigations Yeast Lesson PlanDocument3 pages5th Investigations Yeast Lesson Planapi-547797701No ratings yet

- Quezon CityDocument13 pagesQuezon CityJojit PascualNo ratings yet

- Service Blueprint FamsDocument1 pageService Blueprint FamsAnwar Maulana PutraNo ratings yet

- Cambridge IGCSE™ (9-1) : Biology 0970/32 October/November 2020Document12 pagesCambridge IGCSE™ (9-1) : Biology 0970/32 October/November 2020Reem AshrafNo ratings yet

- Venugopal P: ObjectiveDocument3 pagesVenugopal P: ObjectiveVenu GopalNo ratings yet

- EM 3 Test FinalDocument6 pagesEM 3 Test FinalLinguata SchoolsNo ratings yet

- Quarter 2 Week 7: A. Panuto: Mag Bigay NG 5 Halimbawa Na Nag Papakita NG Pakikilahok Sa Mga Programa o ProyektoDocument5 pagesQuarter 2 Week 7: A. Panuto: Mag Bigay NG 5 Halimbawa Na Nag Papakita NG Pakikilahok Sa Mga Programa o ProyektoShawn AldrinNo ratings yet

- Hiab Xs 177KDocument12 pagesHiab Xs 177KJoaquín TodhinNo ratings yet

- 10.1038@s41570 019 0080 8Document17 pages10.1038@s41570 019 0080 8Hassan BahalooNo ratings yet

- Selling The Premium in Freemium: Xian Gu, P.K. Kannan, and Liye MaDocument18 pagesSelling The Premium in Freemium: Xian Gu, P.K. Kannan, and Liye MaPsubbu RajNo ratings yet

- The Saaga of NalapatDocument18 pagesThe Saaga of NalapatDr Suvarna Nalapat100% (1)

- 11x17 Fluke 80 Series V DMMs Quick Reference GuideDocument2 pages11x17 Fluke 80 Series V DMMs Quick Reference Guidefu yuNo ratings yet

- 30 Monitor de Signos Vitales Mindray PM 8000Document200 pages30 Monitor de Signos Vitales Mindray PM 8000Juan Sebastian Murcia TorrejanoNo ratings yet