Algorithm-L3-Time Complexity

Uploaded by

علي عبد الحمزهOriginal Description:

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Algorithm-L3-Time Complexity

Uploaded by

علي عبد الحمزهCopyright:

Available Formats

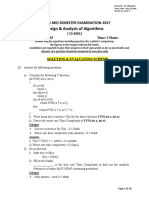

Algorithm Analysis and Design, L. Mais Saad Safoq, 2020, Lec3

Time Complexity

The time complexity of an algorithm is the amount of computer time the algorithm requires to run to completion.

In a multiuser system, execution time depends on many factors, such as:

System load

Number of other programs running

Instruction set used

Speed of the underlying hardware

Because these factors vary from run to run, time complexity is measured instead as the number of operations an algorithm performs to complete its task (assuming that each operation takes the same amount of time). The algorithm that performs the task in the smallest number of operations is considered the most efficient in terms of time complexity. Let us consider the following examples.

Pseudocode for sum of two numbers

int Sum(int a, int b) {
    // cost = 2 units of time (constant): one for the arithmetic operation
    // and one for the return; no. of times = 1
    return a + b;
}

Pseudocode: Sum of all elements of a list

int Sum(int A[], int n) {       // A: array, n: number of elements in the array
    int sum = 0, i;             // cost = 1, no. of times = 1 [required 1]
    // i = 0 runs once, i < n is tested n+1 times (+1 for the final false
    // condition), and i++ runs n times [required 1 + (n+1) + n = 2(n+1)]
    for (i = 0; i < n; i++)
        sum = sum + A[i];       // cost = 2, no. of times = n [required 2n]
    return sum;                 // cost = 1, no. of times = 1 [required 1]
}

Tsum = 1 + 2(n+1) + 2n + 1 = 4n + 4
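The count 4n + 4 can be checked mechanically. The following C sketch is not part of the lecture: the function name sum_with_count and the local counter ops are introduced here for illustration, and the loop is written with an explicit test so that every loop test, including the final false one, is charged one unit.

```c
#include <assert.h>
#include <stddef.h>

/* Sums A[0..n-1] and returns the number of unit-cost operations charged
   under the lecture's cost model. The sum itself is written to *out.
   (sum_with_count and ops are illustrative names, not from the lecture.) */
long sum_with_count(const int A[], int n, int *out) {
    long ops = 0;
    int sum = 0;
    int i = 0;

    assert(n >= 0);
    ops += 1;                      /* initialisation of sum              */
    ops += 1;                      /* loop initialisation i = 0          */
    for (;;) {
        ops += 1;                  /* loop test i < n, charged n+1 times */
        if (!(i < n))
            break;
        sum = sum + A[i];
        ops += 2;                  /* addition and assignment            */
        i++;
        ops += 1;                  /* increment                          */
    }
    ops += 1;                      /* return                             */
    if (out != NULL)
        *out = sum;
    return ops;
}
```

For an array of 5 elements the function reports 24 operations, and for an empty array it reports 4, matching 4n + 4 in both cases.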


Time Complexity Analysis of Recursive functions

The time complexity of an algorithm gives an idea of the number of operations needed to solve a problem. In this section we work out the number of operations for some recursive functions. To do this we make use of recurrence relations. A recurrence relation, also known as a difference equation, is an equation that defines a sequence recursively: each term of the sequence is defined as a function of the preceding terms.

Let us look at the powerA function (exponentiation).

int powerA(int x, int n)
{
    if (n == 0)
        return 1;
    if (n == 1)
        return x;
    else
        return x * powerA(x, n - 1);
}

The problem size reduces from n to n - 1 at every stage, and every stage involves two arithmetic operations (one multiplication and one subtraction). Thus the total number of operations needed to execute the function for any given n can be expressed as the sum of 2 operations and the total number of operations needed to execute the function for n - 1. Also, when n = 1, the function needs just one operation.

In other words, T(n) can be expressed as the sum of T(n - 1) and two operations, using the following recurrence relation:

T(n) = T(n - 1) + 2

T(1) = 1
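One way to see that this recurrence models the code is to attach a counter to powerA. The C sketch below is an illustration only: the names powerA_counted, count_powerA_ops and powerA_ops are hypothetical, and the charging scheme (1 operation for the base case n = 1, 2 operations for every other call) is simply the lecture's cost model written out.

```c
#include <assert.h>

static long powerA_ops;   /* operation counter, added for illustration */

/* powerA from the text with the lecture's cost model attached:
   the base case n == 1 is charged 1 operation, every other call 2. */
long powerA_counted(long x, int n) {
    assert(n >= 1);               /* T(n) is only defined for n >= 1 here */
    if (n == 1) {
        powerA_ops += 1;
        return x;
    }
    powerA_ops += 2;              /* one subtraction, one multiplication  */
    return x * powerA_counted(x, n - 1);
}

/* Resets the counter, runs powerA, and returns the operations charged. */
long count_powerA_ops(long x, int n) {
    powerA_ops = 0;
    (void)powerA_counted(x, n);
    return powerA_ops;
}
```

Calling count_powerA_ops(2, 1) gives 1, and each increase of n by one adds exactly 2 to the count, which is what T(n) = T(n - 1) + 2 with T(1) = 1 states.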


We need to solve this to express T(n) in terms of n. The solution to the recurrence relation proceeds as follows. Given the relation

T(n) = T(n - 1) + 2 ...(1)

we try to reduce the right-hand side until we get to T(1), whose solution is known to us. We do this in steps. First of all, we note that (1) holds for any value of n. Let us rewrite (1) by replacing n by n - 1 on both sides to yield

T(n - 1) = T(n - 2) + 2 ...(2)

Substituting for T(n - 1) from relation (2) in relation (1) yields

T(n) = T(n - 2) + 2(2) ...(3)

Also we note that

T(n - 2) = T(n - 3) + 2 ...(4)

Substituting for T(n - 2) from relation (4) in relation (3) yields

T(n) = T(n - 3) + 2(3) ...(5)

Following the pattern in relations (1), (3) and (5), we can write a generalized relation

T(n) = T(n - k) + 2(k) ...(6)

To solve the generalized relation (6), we have to find the value of k. We note that T(1), the number of operations needed to raise a number x to the power 1, is just one operation. In other words

T(1) = 1 ...(7)

We can reduce T(n - k) to T(1) by setting

n - k = 1

and solving for k to yield

k = n - 1

Substituting this value of k in relation (6), we get

T(n) = T(1) + 2(n - 1)

Now substitute the value of T(1) from relation (7) to yield the solution

T(n) = 1 + 2(n - 1) = 2n - 1 ...(8)


When the right-hand side of the relation does not have any terms involving T(..), we say that the recurrence relation has been solved. We see that the algorithm is linear in n.
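For a concrete instance of the substitution method, the recurrence T(n) = T(n - 1) + 2 with T(1) = 1 can be unrolled by hand. The value n = 5 is chosen here only for illustration.

```latex
\begin{align*}
T(5) &= T(4) + 2 \\
     &= T(3) + 2(2) \\
     &= T(2) + 2(3) \\
     &= T(1) + 2(4) \\
     &= 1 + 8 = 9
\end{align*}
```

This agrees with T(1) + 2(n - 1) evaluated at n = 5, and each further unit of n adds two more operations.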

- Complexity of the efficient recursive algorithm for exponentiation:

We now consider the powerB function.

int powerB(int x, int n)
{
    if (n == 0)
        return 1;
    if (n == 1)
        return x;
    if (n % 2 == 0)                      // n is even
        return powerB(x * x, n / 2);
    else                                 // n is odd
        return powerB(x, n - 1) * x;
}
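To get a feel for how much work powerB saves, the multiplications it performs can be counted. The C sketch below is an illustration rather than part of the lecture: the names powerB_counted, count_powerB_mults and powerB_mults are hypothetical, and only multiplications are counted (not the subtraction or the division).

```c
#include <assert.h>

static long powerB_mults;   /* multiplication counter, added for illustration */

/* powerB from the text, counting one multiplication per recursive step. */
long powerB_counted(long x, int n) {
    assert(n >= 0);
    if (n == 0)
        return 1;
    if (n == 1)
        return x;
    if (n % 2 == 0) {
        powerB_mults++;                       /* the product x * x     */
        return powerB_counted(x * x, n / 2);
    }
    powerB_mults++;                           /* the trailing ... * x  */
    return powerB_counted(x, n - 1) * x;
}

/* Resets the counter, runs powerB, and returns the multiplications used. */
long count_powerB_mults(long x, int n) {
    powerB_mults = 0;
    (void)powerB_counted(x, n);
    return powerB_mults;
}
```

For n = 16 this reports 4 multiplications and for n = 15 it reports 6, whereas powerA performs n - 1 multiplications (15 and 14 respectively).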

At every step the problem size reduces to half its size. When the power is an odd number, an additional multiplication is involved. To work out the time complexity, let us consider the worst case, that is, we assume that at every step an additional multiplication is needed. Thus the total number of operations T(n) reduces to the number of operations for n/2, that is T(n/2), plus three additional arithmetic operations (the odd power case). We are now in a position to write the recurrence relation for this algorithm as

T(n) = T(n/2) + 3 ...(1)

T(1) = 1

To solve this recurrence relation, we note that T(n/2) can be expressed as

T(n/2) = T(n/4) + 3 ...(2)


Substituting for T(n/2) from (2) in relation (1), we get

T(n) = T(n/4) + 3(2)

By the repeated substitution process explained above, we can solve for T(n) as follows

T(n) = T(n/2) + 3

= [T(n/4) + 3] + 3

= T(n/4) + 2(3)

= [T(n/8) + 3] + 2(3)

= T(n/8) + 3(3)

...

= T(n/2^k) + k(3)

Since 3 is a constant c, T(n) = T(n/2^k) + kc.

Since T(1) = 1, we set n/2^k = 1, so that 2^k = n and

k = log_2 n

Substituting this value of k gives T(n) = T(1) + 3 log_2 n = 1 + 3 log_2 n, so the algorithm is logarithmic in n.
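Putting k = log_2 n and T(1) = 1 into T(n) = T(n/2^k) + 3k gives the value 1 + 3 log_2 n. The C sketch below evaluates the worst-case recurrence directly and compares it with that value. The function names T_powerB, floor_log2 and closed_form_powerB are introduced here for illustration, and integer division is assumed for n/2, so for values of n that are not powers of two the logarithm is rounded down.

```c
#include <assert.h>

/* Worst-case recurrence from the text: T(n) = T(n/2) + 3, T(1) = 1. */
long T_powerB(long n) {
    assert(n >= 1);
    if (n == 1)
        return 1;
    return T_powerB(n / 2) + 3;
}

/* floor(log2(n)) for n >= 1, computed by repeated halving. */
int floor_log2(long n) {
    int k = 0;
    assert(n >= 1);
    while (n > 1) {
        n /= 2;
        k++;
    }
    return k;
}

/* Value obtained with k = log2 n: T(n) = 1 + 3 * log2(n). */
long closed_form_powerB(long n) {
    return 1 + 3L * floor_log2(n);
}
```

For n = 1024 both functions return 31, compared with 2047 operations for the linear powerA analysis at the same n.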


Thus Big-O notation always gives an upper bound on the execution time.


3- Θ Notation

F(n) = Θ(g(n)) if there exist positive constants c1, c2 and n0 such that

c1 g(n) ≤ F(n) ≤ c2 g(n) for all n ≥ n0
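As a small worked instance, take the operation count F(n) = 4n + 4 obtained earlier for the list-summing function, with g(n) = n. The constants c1 = 4 and c2 = 8 and the threshold n = 1 are choices made here for illustration; other choices work as well.

```latex
\[
4n \;\le\; 4n + 4 \;\le\; 8n \quad \mbox{for all } n \ge 1
\quad\Longrightarrow\quad 4n + 4 = \Theta(n)
\]
```

The right-hand inequality holds because 4n + 4 ≤ 8n is equivalent to 4 ≤ 4n, which is true whenever n ≥ 1.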
