Mimiw Assignment

Uploaded by

Tyria Banks

Lopez, Trisha Larraine P.

March 5, 2020

BSA 1-23 Mathematics in the Modern World

PARAMETRIC AND NON-PARAMETRIC TESTS

PARAMETRIC TESTS

Parametric statistics are based on the parameters of the normal curve. Because of this, the data must meet certain assumptions before a parametric test can be validly applied. When those assumptions hold, parametric tests generally have greater statistical power than their non-parametric counterparts, which means they are more likely to detect an effect that truly exists.

PARAMETRIC TESTS ARE USED WHEN:

1. The data are quantitative.
2. The variables are continuous.
3. The data are measured on an approximately interval or ratio scale.
4. The data follow an approximately normal distribution.

TYPES OF PARAMETRIC STATISTICS

a) Z-test - A z-score is calculated with population parameters such as the “population mean” and “population standard deviation” and is used to test the hypothesis that a sample was drawn from a given population. Null: the sample mean is the same as the population mean. Alternative: the sample mean is not the same as the population mean. The statistic used for this hypothesis test is called the z-statistic, calculated as $z = (\bar{x} - \mu) / (\sigma / \sqrt{n})$, where $\bar{x}$ = sample mean; $\mu$ = population mean; $\sigma$ = population standard deviation; $n$ = sample size; and $\sigma / \sqrt{n}$ = standard error of the mean. If the absolute value of the test statistic is lower than the critical value, do not reject the null hypothesis; otherwise, reject it.

b) T-test - A t-test is used to compare means, most commonly the means of two samples. Like a z-test, a t-test assumes that the data are normally distributed. A t-test is used when the population standard deviation is not known and must be estimated from the sample. There are three versions of the t-test: (1) Independent samples t-test, which compares the means of two groups. (2) Paired sample t-test, which compares means from the same group at different times. (3) One sample t-test, which tests the mean of a single group against a known mean. The statistic for this hypothesis test is called the t-statistic. For two independent samples with equal variances it is calculated as $t = (\bar{x}_1 - \bar{x}_2) / (s_p \sqrt{1/n_1 + 1/n_2})$, where $\bar{x}_1$ = mean of sample 1; $\bar{x}_2$ = mean of sample 2; $n_1$ = size of sample 1; $n_2$ = size of sample 2; and $s_p$ = pooled sample standard deviation.

c) ANOVA - Analysis of variance (ANOVA) is used to compare multiple (three or more) samples with a single test. There are two major flavors of ANOVA: (1) One-way ANOVA, which is used to compare three or more samples/groups defined by a single independent variable. (2) MANOVA, which allows us to test the effect of one or more independent variables on two or more dependent variables. In addition, MANOVA can detect differences in the correlation between dependent variables across the groups of the independent variables. The hypothesis being tested in ANOVA is: Null: all group means are equal; and Alternative: at least one group mean differs from the others. The statistic used to measure significance in this case is called the F-statistic. The F value is calculated using the formula $F = ((SSE_1 - SSE_2) / m) / (SSE_2 / (n - k))$, where $SSE_1$ and $SSE_2$ = residual sums of squares of the restricted and unrestricted models; $m$ = number of restrictions; $n$ = number of observations; and $k$ = number of parameters in the unrestricted model (for one-way ANOVA, the number of groups). Multiple tools, such as SPSS, R packages, and Excel, are available to carry out ANOVA on a given sample.

d) Chi-Square Test - A chi-square test is used to compare categorical variables. There are two types of chi-square test: (1) The goodness of fit test, which determines whether a sample matches a population. (2) The chi-square test of independence, which compares two variables in a contingency table to check whether they are related. (A small chi-square value means that the observed data fit the expected counts well, and a large chi-square value means that they do not.) The hypothesis being tested in the chi-square test of independence is: Null: Variable A and Variable B are independent; and Alternative: Variable A and Variable B are not independent. The statistic used to measure significance in this case is called the chi-square statistic. The formula used for calculating the statistic is $\chi^2 = \sum [ (O_{r,c} - E_{r,c})^2 / E_{r,c} ]$, where $O_{r,c}$ = observed frequency count at level r of Variable A and level c of Variable B; and $E_{r,c}$ = expected frequency count at level r of Variable A and level c of Variable B.

Examples
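As a minimal sketch of the four parametric statistics described above (z, t, F, and chi-square), the following Python code computes each test statistic using only the standard library. The function names and sample numbers are hypothetical and chosen purely for illustration. The t-statistic here assumes two independent samples with equal variances (pooled variance), and the F-statistic is written in its one-way ANOVA form, F = (SSB / (k - 1)) / (SSW / (n - k)), where SSB and SSW are the between-group and within-group sums of squares.

```python
import math
from statistics import NormalDist, mean, variance


def z_statistic(sample, mu, sigma):
    """One-sample z: (sample mean - mu) / (sigma / sqrt(n))."""
    n = len(sample)
    return (mean(sample) - mu) / (sigma / math.sqrt(n))


def two_sided_p_from_z(z):
    """Two-sided p-value taken from the standard normal distribution."""
    return 2 * (1 - NormalDist().cdf(abs(z)))


def pooled_t_statistic(a, b):
    """Independent-samples t, assuming equal variances (pooled variance)."""
    n1, n2 = len(a), len(b)
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    return (mean(a) - mean(b)) / math.sqrt(sp2 * (1 / n1 + 1 / n2))


def one_way_f_statistic(*groups):
    """One-way ANOVA F for k groups and n observations in total."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    ssb = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ssw = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ssb / (k - 1)) / (ssw / (n - k))


def chi_square_statistic(table):
    """Sum of (O - E)^2 / E over every cell of an r x c contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for r, row in enumerate(table):
        for c, observed in enumerate(row):
            # Expected count under independence of the two variables.
            expected = row_totals[r] * col_totals[c] / total
            chi2 += (observed - expected) ** 2 / expected
    return chi2


# Hypothetical data, for illustration only.
z = z_statistic([52, 48, 55, 51, 49, 53, 50, 54], mu=50, sigma=4)
print("z =", round(z, 3), "p =", round(two_sided_p_from_z(z), 3))
print("t =", pooled_t_statistic([1, 2, 3, 4, 5], [2, 3, 4, 5, 6]))
print("F =", round(one_way_f_statistic([1, 2, 3], [2, 3, 4], [5, 6, 7]), 3))
print("chi2 =", round(chi_square_statistic([[10, 20], [20, 10]]), 3))
```

Each statistic is then compared with a critical value from the matching distribution (normal, t, F, or chi-square); the statistical tools mentioned above typically report that comparison as a p-value.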

NON-PARAMETRIC TESTS

Non-parametric tests are methods of statistical analysis that do not require the data to follow a particular distribution (in particular, they can be used when the data are not normally distributed). For this reason, they are sometimes referred to as distribution-free tests.

NON-PARAMETRIC TESTS ARE USED WHEN:

1. The data uses nominal scales or ordinal scales.

2. There is a skewed distribution.

3. One or more assumptions of a parametric test have been violated.

4. The sample size is too small to run a parametric test.

5. The data has outliers that cannot be removed.

6. You want to test for the median rather than the mean (you might want to do this if you have a very skewed distribution).
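To illustrate why the median can be preferable to the mean for skewed data or data with outliers (points 2, 5, and 6 in the list above), here is a small sketch. The values and the `summarize` helper are hypothetical and not taken from the assignment.

```python
from statistics import mean, median


def summarize(data):
    """Return (mean, median) so the two measures of center can be compared."""
    return mean(data), median(data)


# Hypothetical right-skewed sample: seven similar values and one extreme outlier.
values = [28, 30, 31, 33, 35, 36, 38, 400]

sample_mean, sample_median = summarize(values)
print("mean   =", sample_mean)    # 78.875, pulled upward by the outlier
print("median =", sample_median)  # 34.0, close to the typical value
```

A single extreme value moves the mean far away from the bulk of the data, while the median barely changes, which is one reason median-based (non-parametric) tests are suggested in such cases.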

TYPES OF NON-PARAMETRIC STATISTICS

1. 1-sample sign test. Use this test to estimate the median of a population and compare it to a reference value or target value.
2. 1-sample Wilcoxon signed rank test. With this test, you also estimate the population median and compare it to a reference/target value. However, the test assumes your data come from a symmetric distribution (like the Cauchy distribution or uniform distribution).
3. Friedman test. This test is used to test for differences between groups with ordinal dependent variables. It can also be used for continuous data if the one-way ANOVA with repeated measures is inappropriate (i.e. some assumption has been violated).
4. Goodman and Kruskal’s gamma. A test of association for ranked variables.
5. Kruskal-Wallis test. Use this test instead of a one-way ANOVA to find out if two or more medians are different. Ranks of the data points are used for the calculations, rather than the data points themselves.
6. Mann-Kendall trend test. This test looks for trends in time-series data.
7. Mann-Whitney test. Use this test to compare differences between two independent groups when the dependent variable is either ordinal or continuous.
8. Mood’s median test. Use this test instead of the sign test when you have two independent samples.
9. Spearman rank correlation. Use this when you want to measure the strength of a monotonic relationship between two sets of data, based on their ranks.
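Two of the tests listed above are simple enough to sketch with the Python standard library: the 1-sample sign test (item 1) and the Mann-Whitney statistic (item 7). This is a minimal illustration under stated assumptions, not a full implementation: the function names and data are hypothetical, the sign test uses an exact two-sided binomial p-value and drops observations equal to the hypothesized median, and only the U statistic (not its p-value) is computed for Mann-Whitney.

```python
from math import comb


def sign_test_p_value(sample, hypothesized_median):
    """Exact two-sided sign test against a hypothesized median."""
    above = sum(1 for x in sample if x > hypothesized_median)
    below = sum(1 for x in sample if x < hypothesized_median)
    n = above + below          # ties with the hypothesized median are dropped
    k = min(above, below)
    # P(X <= k) for X ~ Binomial(n, 0.5), doubled for a two-sided test.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def mann_whitney_u(a, b):
    """U for sample a: count of pairs (x, y) with x > y; ties count as 0.5."""
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)


# Hypothetical data, for illustration only.
scores = [12, 15, 11, 18, 20, 17, 16, 19, 14, 21]
print("sign test p =", sign_test_p_value(scores, 12.5))  # 0.109375
print("U =", mann_whitney_u([3, 5], [1, 4]))             # 3.0
```

Both functions use only the signs or the ordering of the observations, never their actual magnitudes, which is what makes these tests distribution-free.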

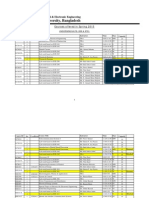

| NON-PARAMETRIC TEST | PARAMETRIC TEST |
| --- | --- |
| 1-sample sign test | One-sample Z-test, One-sample t-test |
| 1-sample Wilcoxon signed rank test | One-sample Z-test, One-sample t-test |
| Friedman test | Two-way ANOVA |
| Kruskal-Wallis test | One-way ANOVA |
| Mann-Whitney test | Independent samples t-test |
| Mood’s median test | One-way ANOVA |
| Spearman rank correlation | Pearson correlation coefficient |
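The last pairing in the table can be made concrete: the Spearman rank correlation is the ordinary (Pearson) correlation coefficient computed on the ranks of the data rather than on the raw values. The sketch below assumes hypothetical helper names (`average_ranks`, `pearson`, `spearman`) and made-up data; tied values receive the average of their ranks.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = (sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)) ** 0.5
    return num / den


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: y always increases with x, but not linearly.
x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 100]
print("Pearson  =", round(pearson(x, y), 3))
print("Spearman =", round(spearman(x, y), 3))
```

Because y rises whenever x rises, the ranks agree perfectly and the Spearman coefficient is 1, while the Pearson coefficient is lower because the relationship is not a straight line.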
