ISSN (Online) : 2319 - 8753

ISSN (Print) : 2347 - 6710

International Journal of Innovative Research in Science, Engineering and Technology

An ISO 3297: 2007 Certified Organization Volume 6, Special Issue 5, March 2017

National Conference on Advanced Computing, Communication and Electrical Systems - (NCACCES'17)

24th - 25th March 2017

Organized by

C. H. Mohammed Koya

KMEA Engineering College, Kerala- 683561, India

Brain Tumor Detection and Classification in MRI Images

Athency Antony 1, Ancy Brigit M.A 2, Fathima K.A. 3, Dilin Raju 4, Binish M.C. 5

UG Student, Department of ECE, KMEA Engineering College, Edathala, Ernakulam, India1

UG Student, Department of ECE, KMEA Engineering College, Edathala, Ernakulam, India2

UG Student, Department of ECE, KMEA Engineering College, Edathala, Ernakulam, India3

UG Student, Department of ECE, KMEA Engineering College, Edathala, Ernakulam, India4

Assistant Professor, Department of ECE, KMEA Engineering College, Edathala, Ernakulam, India5

ABSTRACT: Early and precise detection of tumors improves the quality of life of oncological patients. With this in mind, we propose an effective method for brain tumor detection and classification. A brain tumor is an abnormal growth caused by cells reproducing in an uncontrolled manner. Brain tumors show large variability in their characteristics compared with other cancerous tissue, so the deep learning technique of convolutional neural networks (CNNs) is used for effective classification. The method pre-processes the MRI images, classifies the tissue, and extracts the tumors using MATLAB.

KEYWORDS: Brain Tumor, MRI images, Deep Learning, Convolutional Neural Network, MATLAB

I. INTRODUCTION

A tumor can be a deadly disease. According to surveys, an estimated 14.1 million cancer cases were reported worldwide in 2012; of these, 7.4 million were in men and 6.7 million in women. This number is expected to increase to 24 million by 2035. Among the different cancers, the brain tumor is the most aggressive. The average survival rate for brain tumors is only 34.4%. The prognosis of a brain tumor depends on early detection and treatment, which in turn require a precise and reliable detection method. In this paper we therefore use an automatic segmentation method in which a convolutional neural network classifies the tumor.

Tumors can be benign or malignant, can occur in different parts of the brain, and may be primary or secondary. A primary tumor starts in the brain, whereas a metastatic (secondary) tumor has spread to the brain from another part of the body. Metastatic tumors are more common than primary tumors by a ratio of 4:1. Tumors may or may not be symptomatic: some are discovered because the patient has symptoms, while others show up incidentally on an imaging scan or at autopsy. A glioma is a type of tumor that starts in the brain or spine and is so called because it arises from glial cells. The most common site of gliomas is the brain. Gliomas [1] make up about 30% of all brain and central nervous system tumors and 80% of all malignant brain tumors.

Magnetic Resonance Imaging (MRI) is a widely used imaging technique for assessing these tumors. MRI is preferred because it offers better soft-tissue contrast than CT and because the strong magnetic field it uses has no known harmful effects. However, the large amount of data produced by MRI prevents manual segmentation in a reasonable time, which limits the use of precise quantitative measurements in clinical practice. MRI images [1] may also present problems such as intensity inhomogeneity or different intensity ranges among the same sequences and acquisition scanners. To reduce these effects, we apply pre-processing techniques such as bias field correction and intensity normalization.

Copyright to IJIRSET www.ijirset.com 84

IJIRSET - NCACCES'17 Volume 6, Special Issue 5, March 2017

MRI is one of the most powerful visualization techniques [1] and is widely used in the diagnosis and treatment planning of cancer. With MRI, the internal structure of the body can be imaged in a safe and non-invasive way. MRI does not use ionizing radiation: it represents the internal structure of the body in terms of the intensity variation of the radio-frequency signal emitted by tissue when it is exposed to radio-frequency pulses in a strong magnetic field. It is a very useful modality for detecting brain abnormalities and tumors, because it does not damage healthy tissue and provides detailed tissue information. Brain imaging allows a look into the brain and provides a detailed map of brain connectivity.

II. LITERATURE SURVEY

Brain tumor segmentation methods can be classified by the degree of human interaction they require: manual, semi-automatic, and fully automatic.

In manual segmentation, a human expert delineates the tumor boundaries by hand and labels the regions of the anatomical structures. It requires software tools that ease the drawing of regions of interest (ROI), and it is a tedious and exhausting task. MRI scanners produce multiple 2-D slices, and the expert has to mark the tumor regions carefully on each of them; otherwise the result is jagged contours that lead to poor segmentation.

In semi-automatic brain tumor segmentation, human interaction is kept to a minimum. According to Olabarriaga, a semi-automatic or interactive segmentation method consists of a computational part, an interactive part, and the user interface. Since it draws on both computer and human expertise, the result depends on the combination of the two. Efficient segmentation of brain tumors is possible with this strategy, but it is also subject to variation between expert users and within the same user.

In fully automatic segmentation there is no human intervention, and the tumor is segmented entirely by computer. Such methods encode human intelligence and prior knowledge in algorithms developed with soft computing techniques, which is a difficult task. Brain tumor segmentation has several properties that reduce the advantage of humans over machines. These methods are likely to be used for large batches of images in research environments. However, they have not gained popularity in clinical practice because of their lack of transparency and interpretability.

In brain tumor segmentation, several methods explicitly build a parametric or non-parametric probabilistic model of the underlying data. These models usually include a likelihood function corresponding to the observations and a prior model. Being abnormalities, tumors can be segmented as outliers of normal tissue, subject to shape and connectivity constraints. Other approaches rely on probabilistic atlases. In the case of brain tumors, the atlas must be estimated at segmentation time because of the variable shape and location of the neoplasms. Tumor growth models can be used to estimate the mass effect, which helps to improve the atlases. The neighbourhood of the voxels provides useful information for achieving smoother segmentations through Markov Random Fields (MRF). Zhao [5] also used an MRF to segment brain tumors after a first over-segmentation of the image into supervoxels, with a histogram-based estimation of the likelihood function. As observed by Menze et al., generative models generalize well to unseen data, but it may be difficult to explicitly translate prior knowledge into an appropriate probabilistic model.

Another class of methods learns a distribution directly from the data. Although the training stage can be a disadvantage, these methods can learn brain tumor patterns that do not follow a specific model. Approaches of this kind commonly treat voxels as independent and identically distributed, although context information may be introduced through the features. Because of this, some isolated voxels or small clusters may be assigned to the wrong class, sometimes in physiologically and anatomically unlikely locations. To overcome this problem, some authors include neighbourhood information by embedding the probabilistic predictions of the classifier into a Conditional Random Field [10]. Classifiers such as Support Vector Machines and, more recently, Random Forests (RF) have been applied successfully to brain tumor segmentation. RFs became widely used because of their natural ability to handle multi-class problems and large feature vectors. A variety of features have been proposed in the literature: encoded context, first-order and fractal-based texture, gradients, brain symmetry, and physical properties. Some authors have also developed other ways of applying supervised classifiers.

Tustison developed a two-stage segmentation framework based on RFs, using the output of the first classifier

to improve a second stage of segmentation. Geremia proposed a Spatially Adaptive RF for hierarchical segmentation,

going from coarser to finer scales. Meier used a semi-supervised RF to train a subject-specific classifier for post-

operative brain tumor segmentation.

Other methods, known as Deep Learning, deal with representation learning by automatically learning a hierarchy of increasingly complex features directly from the data. The focus is therefore on designing architectures rather than on developing handcrafted features, which may require specialized knowledge. CNNs have been used to win several object recognition and biological image segmentation challenges. Since a CNN operates over patches using kernels, it has the advantages of taking context into account and of being usable with raw data. In the field of brain tumor segmentation, recent proposals also investigate the use of CNNs. Zikic [9] used a shallow CNN with two convolutional layers separated by max-pooling with stride 3, followed by one fully-connected (FC) layer and a softmax layer. Urban et al. evaluated the use of 3D filters, although the majority of authors opted for 2D filters [11]. 3D filters can take advantage of the 3D nature of the images, but they increase the computational load. Some proposals evaluated two-pathway networks in which one branch receives bigger patches than the other and thus has a larger context view of the image. In addition to their two-pathway network, Havaei built a cascade of two networks and performed two-stage training, first training with balanced classes and then refining with class proportions near the originals. Lyksborg et al. use a binary CNN to identify the complete tumor; a cellular automaton then smooths the segmentation before a multi-class CNN discriminates the sub-regions of the tumor. Rao extracted patches in each plane around each voxel and trained a CNN on each MRI sequence; the outputs of the last FC layer with softmax of each CNN are concatenated and used to train an RF classifier. Dvořák and Menze divided the brain tumor region segmentation task into binary sub-tasks and proposed structured predictions using a CNN as the learning method. Patches of labels are clustered into a dictionary of label patches, and the CNN must predict the membership of the input to each of the clusters.

In this project, inspired by the ground-breaking work of Simonyan and Zisserman [12] on deep CNNs, we investigate the potential of deep architectures with small convolutional kernels for the segmentation of gliomas in MRI images. Simonyan and Zisserman proposed the use of small 3x3 kernels to obtain deeper CNNs. With smaller kernels we can stack more convolutional layers while keeping the same receptive field as bigger kernels. For instance, two cascaded 3x3 convolutional layers have the same effective receptive field as one 5x5 layer, but fewer weights. At the same time, this design applies more non-linearities and is less prone to overfitting, because small kernels have fewer weights than bigger kernels. We also investigate the intensity normalization method proposed by Nyúl as a pre-processing step that aims to address the data heterogeneity caused by multi-site, multi-scanner acquisition of MRI images. The large spatial and structural variability of brain tumors is also an important concern, which we study using two kinds of data augmentation.
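The receptive-field and weight-count argument above can be checked with a few lines of arithmetic. The sketch below is written in Python rather than the paper's MATLAB and assumes stride-1 convolutions with the same number of input and output channels in every layer; the channel count of 64 and both function names are hypothetical and chosen only for illustration.

```python
def stacked_receptive_field(kernel_sizes):
    """Side length (in pixels) of the receptive field of stacked stride-1 convolutions."""
    field = 1
    for k in kernel_sizes:
        field += k - 1  # each stride-1 layer widens the field by k - 1 pixels
    return field


def conv_weights(kernel_sizes, channels):
    """Weight count, biases ignored, when every layer maps `channels` maps to `channels` maps."""
    return sum(k * k * channels * channels for k in kernel_sizes)


two_small = [3, 3]  # two cascaded 3x3 layers
one_big = [5]       # a single 5x5 layer

print(stacked_receptive_field(two_small), stacked_receptive_field(one_big))  # 5 5
print(conv_weights(two_small, 64), conv_weights(one_big, 64))                # 73728 102400
```

Under these assumptions the two 3x3 layers cover the same 5x5 neighbourhood with 18 weights per channel pair instead of 25.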

In this project we use the method proposed by Sérgio Pereira, Adriano Pinto, Victor Alves and Carlos A. Silva, in which brain tumor segmentation is performed with convolutional neural networks.

III. PROPOSED METHOD

This method has three main stages: pre-processing, classification of tumor and post-processing

TRAINING PHASE: MRI image database -> pre-processing -> bias field correction by N4ITK -> intensity normalisation -> feature extraction -> convolutional neural network

Passed from the training phase to the testing phase: percentiles and landmarks; mean and variance; weights

TESTING PHASE: test image -> pre-processing -> bias field correction by N4ITK -> intensity normalisation -> feature extraction -> convolutional neural network -> image enhancement

Fig 1. Block diagram of brain tumor detection and classification system

A. PREPROCESSING

MRI images suffer from bias field distortion and intensity inhomogeneity. Several algorithms already exist for correcting the non-uniform intensity in magnetic resonance images caused by field inhomogeneities. To correct the bias field distortion, the N4ITK method [2] is adopted. This alone is not enough to make the intensity distribution of a tissue type comparable across images, because the intensity varies even when the MRI of the same patient is acquired on the same scanner at different times. We therefore also apply an intensity normalisation method, which makes the contrast and intensity ranges more similar across patients and acquisitions.

N4ITK [2] is an improved variant of N3 (nonparametric non-uniform intensity normalisation), a popular intensity inhomogeneity correction method. It is iterative and seeks the smooth multiplicative field that maximizes the high-frequency content of the tissue intensity distribution. The method is fully automatic and has the advantage that it does not require a prior tissue model, so it can be applied to almost any MRI image.
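For orientation, the "smooth multiplicative field" mentioned above is usually expressed with the image formation model below. The symbols are introduced here for illustration and do not appear in this paper: $v$ is the acquired image, $u$ the uncorrupted image, $f$ the bias field and $n$ the noise.

```latex
% Image formation model commonly assumed by N3-type bias correction
v(x) = u(x)\, f(x) + n(x)

% Ignoring noise and taking logarithms turns the multiplicative field into an additive one
\log v(x) = \log u(x) + \log f(x)
```

Once an estimate of the smooth field $f$ is available, the corrected image is obtained by dividing the acquired image by it.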


Fig 2. Original image

Fig 3. Bias corrected image

Fig 4. Histogram of original image

Fig 5. Histogram of bias corrected image

Intensity normalization standardizes the intensity scales in MRI. Without a standardized intensity scale, it is difficult to generalize the relative behaviour of the various tissue types across different volumes in the presence of a tumor. The two-stage intensity normalization method proposed by Nyúl et al. [4] is used here. The two stages are a training stage and a transformation stage. The purpose of the training stage is to find the parameters of the standard scale; in the transformation stage, the histogram of a candidate volume is mapped onto this standard scale (intensity of interest, IOI). In this way a more similar histogram is obtained for each sequence. In addition, the mean intensity value and the standard deviation are computed during the training stage, and these values are used to normalize the testing patches.
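The last step, normalizing test patches with statistics gathered during training, can be sketched as follows. This is a minimal Python illustration, not the authors' MATLAB code; it assumes that the statistics are computed over all training patches of one MRI sequence and that each patch is a flat list of intensities. The function names are hypothetical.

```python
from statistics import fmean, pstdev


def fit_patch_stats(training_patches):
    """Mean and standard deviation over every intensity in the training patches."""
    values = [v for patch in training_patches for v in patch]
    return fmean(values), pstdev(values)


def normalize_patch(patch, mean, std):
    """Shift and scale a patch with the training statistics (zero mean, unit variance)."""
    if std == 0:
        raise ValueError("training intensities are constant; cannot scale")
    return [(v - mean) / std for v in patch]


train = [[1.0, 2.0], [3.0, 4.0]]
mean, std = fit_patch_stats(train)
test_patch = normalize_patch([2.5, 5.0], mean, std)  # reuses the training statistics
```

Reusing the training statistics at test time keeps both phases on the same intensity scale, which matches the "mean and variance" link between the two phases in Fig 1.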

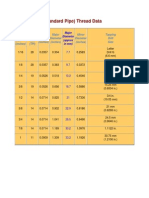

The two-stage intensity normalisation algorithm [4] can be summarised as follows. First, determine the histogram of each image in the training set. From this histogram, determine the range of the intensity of interest (IOI). $pc_1$ and $pc_2$ denote the minimum and maximum percentile values of the overall intensity range that define the boundaries of this IOI. Values outside this range are discarded as outliers. The minimum and maximum intensity values of the image within the IOI, $p_1$ and $p_2$, are mapped to the corresponding minimum and maximum intensity values $s_1$ and $s_2$ of the standard intensity scale. The user can choose the values of $s_1$ and $s_2$ according to the requirements of the learning algorithm. After mapping, the new landmarks are calculated as the rounded mean of each landmark over the mapped images of the training set.

At the end of the training phase, the standard scale landmarks are obtained. They are used to transform a test image. To transform an image, compute its histogram and find the minimum and maximum intensity values $p_1$ and $p_2$ corresponding to $pc_1$ and $pc_2$. From these, determine the intensity values corresponding to each decile of the histogram between $p_1$ and $p_2$. These decile values are the landmarks of the new image and divide its intensity range into ten segments. Each segment is then linearly mapped to the corresponding segment of the standard intensity scale.
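The two stages described above can be sketched in plain Python. This is an illustrative reading of the procedure, not the authors' implementation. Images are treated as flat lists of intensities, the percentiles `pc1=1` and `pc2=99` and the scale `s1=0`, `s2=100` are assumed defaults, and outliers are clipped to the IOI rather than dropped. All function names are hypothetical.

```python
def percentile(sorted_vals, q):
    """Linearly interpolated percentile q (0 to 100) of an already sorted list."""
    pos = (len(sorted_vals) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (pos - lo)


def landmarks(image, pc1=1, pc2=99):
    """Intensities at pc1, at the deciles 10..90, and at pc2 (11 landmarks)."""
    vals = sorted(image)
    return [percentile(vals, q) for q in [pc1] + list(range(10, 100, 10)) + [pc2]]


def linear_map(x, a, b, c, d):
    """Map x linearly from the interval [a, b] to the interval [c, d]."""
    return c if b == a else c + (x - a) * (d - c) / (b - a)


def train_standard_scale(images, s1=0.0, s2=100.0, pc1=1, pc2=99):
    """Training stage: map [p1, p2] of each image to [s1, s2], then average the landmarks."""
    mapped = []
    for img in images:
        lm = landmarks(img, pc1, pc2)
        mapped.append([linear_map(v, lm[0], lm[-1], s1, s2) for v in lm])
    return [round(sum(col) / len(col)) for col in zip(*mapped)]


def transform(image, standard, pc1=1, pc2=99):
    """Transformation stage: map each of the ten segments onto the standard scale."""
    lm = landmarks(image, pc1, pc2)
    out = []
    for x in image:
        x = min(max(x, lm[0]), lm[-1])  # assumption: clip outliers to the IOI
        i = 0
        while i < len(lm) - 2 and x > lm[i + 1]:
            i += 1  # find the segment [lm[i], lm[i + 1]] that contains x
        out.append(linear_map(x, lm[i], lm[i + 1], standard[i], standard[i + 1]))
    return out


scan_a = list(range(101))              # toy intensities 0..100
scan_b = [2 * v + 50 for v in scan_a]  # same content on a different intensity scale
standard = train_standard_scale([scan_a, scan_b])
normalized_a = transform(scan_a, standard)
normalized_b = transform(scan_b, standard)
```

In this toy case the two scans differ only by a linear change of intensity scale, so after the transformation they coincide on the standard scale.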


Fig 6. Input image and intensity normalized image

B. CLASSIFICATION

In this approach, classification is performed with a convolutional neural network (CNN) [7]. CNNs are deep learning models used mainly for image recognition. The segmentation explores small 3x3 kernels. Feature maps are obtained by convolving an image with these small kernels. Each unit in a feature map is connected to the previous layer through the weights of the kernel. These weights are learned by back-propagation during the training phase. Since the kernels are shared among all units of a feature map, the network has fewer weights to train, which makes training easier and reduces overfitting. Because the same kernel is convolved over the entire image, the same feature is detected independently of its location. A non-linear activation function is applied to the output of each neural unit.
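How one shared 3x3 kernel produces a feature map can be illustrated with a small pure-Python sketch. It is not the paper's MATLAB code: the image, the hand-picked edge kernel and the function name are hypothetical, and the operation is the unflipped sliding product (cross-correlation) that CNN libraries commonly call convolution.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (both lists of rows) without padding.

    The same kernel weights are reused at every position, which is the
    weight sharing described in the text.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy 4x5 image with a vertical edge between the second and third column.
image = [[0, 0, 1, 1, 1] for _ in range(4)]
# Hand-picked 3x3 kernel that responds to dark-to-bright vertical edges.
edge_kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

print(conv2d_valid(image, edge_kernel))  # [[3, 3, 0], [3, 3, 0]]
```

Both rows of the feature map show the same response, because the edge is detected by the same nine weights wherever it occurs. In a trained network these weights are learned by back-propagation rather than chosen by hand.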

The convolutional layers are stacked to increase the depth, by which the extracted features become more

abstract.

The important concepts are:

i Initialization

Proper initialization of the weights is important for achieving convergence. It keeps the back-propagated gradients at controlled levels.

ii Activation function

The activation function performs a non-linear transformation of the data. The rectifier linear unit (ReLU) is defined as

$$f(x) = \max(0, x)$$

ReLU helps the network learn quickly and speeds up training. The limitations caused by imposing a constant 0 for negative inputs are eliminated by using a variant called the leaky rectifier linear unit (LReLU). This function is defined as

$$f(x) = \max(0, x) + \alpha \min(0, x)$$

where α is the leakiness parameter. In the last FC layer, softmax is used.

iii Pooling

Pooling combines spatially nearby features in the feature maps. This makes the representation more compact, reduces the computational load, and makes it invariant to small changes in the image. Max-pooling is used to combine the features.

iv Regularization

Regularization reduces overfitting. Dropout is used in each fully connected layer: during training, it removes nodes from the network with probability p, which prevents the nodes from co-adapting to each other. During the testing phase, all nodes are used.

v Data Augmentation

Data augmentation increases the size of the training set and reduces overfitting. The data set is enlarged by generating new patches through rotation of the original patch. In this approach, rotation angles that are multiples of 90° are evaluated.

vi Loss Function

This function should be minimized during training.
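The building blocks listed above can be sketched in a few lines of plain Python. These are illustrative stand-ins rather than the authors' MATLAB code, and several details are assumptions the text does not specify: the default leakiness `alpha=0.01`, non-overlapping 2x2 pooling windows, "inverted" dropout that rescales the surviving nodes, and categorical cross-entropy as the loss function.

```python
import math
import random


def leaky_relu(x, alpha=0.01):
    """LReLU: identity for positive inputs, slope alpha for negative ones."""
    return max(0.0, x) + alpha * min(0.0, x)


def softmax(scores):
    """Turn the scores of the last FC layer into class probabilities."""
    peak = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def max_pool(feature_map, size=2):
    """Non-overlapping size x size max-pooling; incomplete border windows are dropped."""
    rows, cols = len(feature_map) // size, len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j] for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def dropout(values, p, training, rng=random):
    """Zero each node with probability p while training; keep every node at test time."""
    if not training:
        return list(values)
    # Assumption: survivors are rescaled by 1 / (1 - p) ("inverted" dropout).
    return [0.0 if rng.random() < p else v / (1.0 - p) for v in values]


def rotate90(patch):
    """Rotate a 2D patch by 90 degrees clockwise; apply repeatedly for 180 and 270."""
    return [list(row) for row in zip(*patch[::-1])]


def cross_entropy(probabilities, true_class):
    """Assumed loss: negative log-probability assigned to the correct class."""
    return -math.log(probabilities[true_class])


patch = [[1, 2], [3, 4]]
augmented = [patch, rotate90(patch), rotate90(rotate90(patch))]
loss = cross_entropy(softmax([2.0, 0.5, 0.1, -1.0]), true_class=0)
```

Rotating a patch by multiples of 90° only rearranges its pixels, so no interpolation is needed when generating the extra training patches.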

C. POST-PROCESSING

The CNN may erroneously classify small clusters as tumor. Post-processing is performed to avoid this kind of error: clusters in the segmentation obtained by the CNN that are smaller than a predefined threshold τ are removed.
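A minimal sketch of this clean-up step is given below. It is written in Python for a 2D binary mask with 4-connectivity, both of which are simplifying assumptions: the paper does not state the connectivity, and real MRI segmentations are 3D volumes. The function name and the parameter `tau` (for τ) are hypothetical.

```python
from collections import deque


def remove_small_clusters(mask, tau):
    """Return a copy of a 2D binary mask without 4-connected clusters smaller than tau pixels."""
    rows, cols = len(mask), len(mask[0])
    cleaned = [list(row) for row in mask]
    seen = [[False] * cols for _ in range(rows)]
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # A breadth-first search collects one connected cluster.
            cluster, queue = [], deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                cluster.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(cluster) < tau:
                for r, c in cluster:
                    cleaned[r][c] = 0  # erase clusters below the threshold
    return cleaned


segmentation = [
    [1, 1, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
print(remove_small_clusters(segmentation, tau=2))
# [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0]]
```

The isolated pixel is removed while the four-pixel cluster is kept. For a multi-class segmentation the same step could be applied to the mask of all tumor labels.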

IV. RESULTS AND DISCUSSION

The pre-processing methods made the segmentation more effective and accurate. The N4ITK algorithm is used to correct the bias field distortion in the MRI images. In intensity normalization, the image intensities are transformed to the range [0, 1]. We then normalized each sequence to have zero mean and unit variance. CNN-based classification also improves after intensity normalization. Because a convolutional neural network is used for classification, the variability of brain tumors is segmented more accurately. The CNN distinguishes four main tumor classes: edema, necrosis, non-enhancing tumor and enhancing tumor.

(a) (b) (c)

Fig 7. (a) Input MRI, (b) output segmented image of the proposed method, (c) manually segmented image. Each colour represents a tumor class: green - edema, blue - necrosis, yellow - non-enhancing tumor, red - enhancing tumor.

V. CONCLUSION

We presented a method for the segmentation of brain tumors in MRI images based on convolutional neural networks. The method consists of three main stages: pre-processing, classification and post-processing. Bias field correction, patch normalization and intensity normalization are performed in the pre-processing stage. The distortion caused by multi-site, multi-scanner acquisition of MRI images is addressed by the intensity normalisation method proposed by Nyúl et al., which helps to improve the effectiveness of the classification. For classification, the CNN was found to be effective because it can deal with the variability in brain tumors. Compared with conventional methods, this method requires less computational time. It therefore has high potential for tumor detection and classification.

REFERENCES

[1] S. Bauer et al., “A survey of MRI-based medical image analysis for brain tumor studies,” Physics in Medicine and Biology, vol. 58, no. 13, pp. R97–R129, 2013.

[2] N. J. Tustison et al., “N4ITK: improved N3 bias correction,” IEEE Transactions on Medical Imaging, vol. 29, no. 6, pp. 1310–1320, 2010.

[3] O. Salvado, C. Hillenbrand, S. Zhang, and D. L. Wilson, “Method to correct intensity inhomogeneity in MR images for atherosclerosis characterization,” IEEE Transactions on Medical Imaging, vol. 25, no. 5, May 2006.

[4] L. G. Nyúl, J. K. Udupa, and X. Zhang, “New variants of a method of MRI scale standardization,” IEEE Transactions on Medical Imaging, vol. 19, no. 2, pp. 143–150, 2000.

[5] B. Menze et al., “The multimodal brain tumor image segmentation benchmark (BRATS),” IEEE Transactions on Medical Imaging, vol. 34, no. 10, pp. 1993–2024, 2015.

[6] M. Shah et al., “Evaluating intensity normalization on MRIs of human brain with multiple sclerosis,” Medical Image Analysis, vol. 15, no. 2, pp. 267–282, 2011.

[7] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “ImageNet classification with deep convolutional neural networks,” in Advances in Neural Information Processing Systems, 2012, pp. 1097–1105.

[8] Y. LeCun et al., “Backpropagation applied to handwritten zip code recognition,” Neural Computation, vol. 1, no. 4, pp. 541–551, 1989.

[9] D. Zikic et al., “Segmentation of brain tumor tissues with convolutional neural networks,” MICCAI Multimodal Brain Tumor Segmentation Challenge (BraTS), pp. 36–39, 2014.

[10] B. H. Menze et al., “A generative model for brain tumor segmentation in multi-modal images,” in Medical Image Computing and Computer-Assisted Intervention–MICCAI 2010. Springer, 2010, pp. 151–159.

[11] A. Davy et al., “Brain tumor segmentation with deep neural networks,” MICCAI Multimodal Brain Tumor Segmentation Challenge (BraTS), pp. 31–35, 2014.

[12] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556v6, 2014. [Online]. Available: http://arxiv.org/abs/1409.1556
