
ADVANCED NUMERICAL ANALYSIS

BY

EDWIN CHUKWUDI OBINABO

B.Sc. (Portsmouth), M.Eng. (Sheffield), Ph.D. (Nigeria), AMIMechE.

Department of Mechanical Engineering,

AMBROSE ALLI University, P.M.B. 14, EKPOMA,

Edo State, Nigeria.

Time is too slow for those who wait,

Too swift for those who fear,

Too long for those who grieve, and

Too short for those who rejoice.

But for those who love, time is Eternity.

Numerical Methods

The aim of numerical methods is to provide an account of numerical analysis and computation which serves the needs of undergraduate physicists, chemists, engineers and economists. The first need is for a description of the various entities, their nature and manipulation, and a derivation of the mathematical properties most frequently needed in applications. The second need is for a short selection of efficient methods of solving linear equations and eigenvalue problems, adequate for practical numerical application and described in sufficient detail to be used confidently. These methods should be suitable for hand calculation and include the information needed to use them on an electronic computer. With this in mind, this book starts, instead, from the other side, putting into the hands of the users of mathematics an array of powerful tools, of whose existence they may be unaware, with precise directions for their use. To achieve this in a reasonable compass something had to be sacrificed, and the author took the bold step of omitting virtually all proofs, an unorthodox but highly sensible procedure, since otherwise the book might have been more than ten times its present size. The primary difficulty encountered with numerical methods is in applying well-defined mathematical theories to day-to-day industrial processes, and in translating ideal models to the frequently far-from-ideal real-world scenario. In both content and presentation this book is intended to bridge the gap between books and courses designed to introduce the subject to science undergraduates and treatises written for mathematicians. This gap is felt most keenly by those beginning research and facing urgent numerical problems. It is hoped that this account, if not itself sufficient to solve a problem, may give enough background to enable specialized textbooks and journals to be consulted fruitfully. The examples after each chapter have the same purpose. Some provide direct illustrations of the ideas and procedures in the text, but others are introductions to more advanced topics or more specialized applications.

Numerical methods have a number of significant advantages. As always, the primary factor in any operation is cost. Numerical methods enable criteria for the maximum profitability of a plant to be derived, and they allow operators to approach more closely the optimum operation of the process. The more extensively numerical methods are applied, the more significant these advantages become. Further improvements in the computation are attained by model-based optimization. Almost every problem in the design, operation and analysis of manufacturing plants and industrial processes, and associated problems such as production scheduling, can be reduced in the final analysis to the problem of determining the largest or smallest value of a function of several variables. In an industrial process, for example, the criterion for optimum operation is often minimum cost, where the product cost can depend on a large number of interrelated controlled parameters in the manufacturing process. In mathematics, the performance criterion could be to minimize the integral of the squared difference between a specified function and an approximation to it generated as a function of the controlled parameters. Both of these examples have in common the requirement that a single quantity is to be minimized by variation of a number of controlled parameters. In addition, there may also be parameters which are not controlled but which can be measured, and possibly some which cannot even be measured.
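The second performance criterion above can be made concrete with a small example, which is an illustrative assumption rather than part of the original text: choose the two controlled parameters a and b of the approximation a + bx to e^x on [0, 1] so as to minimize the integral of (e^x − a − bx)². Setting the partial derivatives with respect to a and b to zero gives two linear normal equations; the sketch below evaluates their integrals with a composite Simpson rule. The helper names `simpson` and `best_line` are hypothetical.

```python
import math

def simpson(g, lo, hi, n=200):
    """Composite Simpson's rule for the integral of g over [lo, hi]; n must be even."""
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(lo + k * h)
    return total * h / 3

def best_line(f, lo=0.0, hi=1.0):
    """Controlled parameters (a, b) minimizing the integral of (f(x) - a - b*x)^2.

    Setting the partial derivatives with respect to a and b to zero gives the
    normal equations  m00*a + m01*b = r0  and  m01*a + m11*b = r1.
    """
    m00 = simpson(lambda x: 1.0, lo, hi)
    m01 = simpson(lambda x: x, lo, hi)
    m11 = simpson(lambda x: x * x, lo, hi)
    r0 = simpson(f, lo, hi)
    r1 = simpson(lambda x: x * f(x), lo, hi)
    det = m00 * m11 - m01 * m01
    a = (r0 * m11 - m01 * r1) / det     # Cramer's rule for the 2 x 2 system
    b = (m00 * r1 - m01 * r0) / det
    return a, b

a, b = best_line(math.exp)
print(f"a = {a:.4f}, b = {b:.4f}")      # a = 0.8731, b = 1.6903
```

For this case the exact minimizer is a = 4e − 10 and b = 18 − 6e, which the sketch reproduces to about eight decimal places.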

Free Response and the Eigenvalue Problem

EIGENVALUE PROBLEMS are associated with determining those values of a scalar parameter for which there exist nontrivial solutions to a set of homogeneous equations; such a problem is known as an eigenvalue problem. Consider the state-variable description of the passive electrical RLC oscillator shown in the figure below.

[Figure: passive RLC network with resistor, inductor and capacitor; output voltage V_output]

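$$V_i - V_o = Ri + L\frac{di}{dt} \quad (1)$$

$$i = C\frac{dV_o}{dt} \quad (2)$$

Substituting (2) into (1) for i, we obtain

$$V_i - V_o = RC\frac{dV_o}{dt} + LC\frac{d^2V_o}{dt^2} \quad (3)$$

giving

$$V_i = \left(1 + RC\frac{d}{dt} + LC\frac{d^2}{dt^2}\right)V_o \quad (4)$$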

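From (4) the transfer operator becomes

$$\frac{V_o}{V_i} = \frac{1/(LC)}{\dfrac{d^2}{dt^2} + \dfrac{R}{L}\dfrac{d}{dt} + \dfrac{1}{LC}} \quad (5)$$

From (5), let the state variable be defined as x(t) and represented in (6) as follows:

$$x(t) = \frac{V_i(t)}{\dfrac{d^2}{dt^2} + \dfrac{R}{L}\dfrac{d}{dt} + \dfrac{1}{LC}} \quad (6)$$

so that

$$V_o(t) = \frac{1}{LC}\,x(t) \quad (7)$$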

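Equation (6) is the state equation, which on cross-multiplication gives

$$V_i(t) = \frac{d^2x}{dt^2} + \frac{R}{L}\frac{dx}{dt} + \frac{1}{LC}x(t) \quad (8)$$

If we let x(t) = x_1, then

$$\dot{x}_1 = x_2 \quad (9)$$

so that

$$\dot{x}_2 = -\frac{1}{LC}x_1 - \frac{R}{L}x_2 + V_i \quad (10)$$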

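Equations (9) and (10) are a set of two first-order differential equations that describe the problem of (1) and (2) in state space. Using matrix notation for (9) and (10), we obtain the matrix equation

$$\begin{bmatrix}\dot{x}_1\\ \dot{x}_2\end{bmatrix} = \begin{bmatrix}0 & 1\\ -\dfrac{1}{LC} & -\dfrac{R}{L}\end{bmatrix}\begin{bmatrix}x_1\\ x_2\end{bmatrix} + \begin{bmatrix}0\\ 1\end{bmatrix}V_i \quad (11)$$

Equation (7), which is the output equation, becomes

$$V_o(t) = \begin{bmatrix}\dfrac{1}{LC} & 0\end{bmatrix}\begin{bmatrix}x_1\\ x_2\end{bmatrix} \quad (12)$$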

These results may be expressed generally as follows:

$$\dot{x}(t) = Ax(t) + Bu(t) \quad (13)$$

and

$$y(t) = Cx(t) \quad (14)$$

where u(t) is the input vector and y(t) the output vector. A is the coefficient matrix, B is the driving matrix and C is the output matrix.
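When the network is simulated on a computer, the matrices of (11) and (12) are simply assembled from the component values. A minimal sketch, assuming matrices stored as lists of rows; the function names and the component values R = 2 ohm, L = 1 H, C = 0.5 F are hypothetical:

```python
def rlc_state_space(R, L, C):
    """Coefficient, driving and output matrices of (11) and (12) for the RLC network."""
    A = [[0.0, 1.0],
         [-1.0 / (L * C), -R / L]]
    B = [[0.0],
         [1.0]]
    Cout = [[1.0 / (L * C), 0.0]]   # named Cout to avoid clashing with the capacitance C
    return A, B, Cout

def state_derivative(A, B, x, u):
    """Right-hand side of (13): x_dot = A x + B u for a scalar input u."""
    return [sum(A[i][j] * x[j] for j in range(len(x))) + B[i][0] * u
            for i in range(len(x))]

def output(Cout, x):
    """Equation (14): y = C x."""
    return [sum(row[j] * x[j] for j in range(len(x))) for row in Cout]

A, B, Cout = rlc_state_space(2.0, 1.0, 0.5)   # hypothetical component values
print(A, state_derivative(A, B, [1.0, 0.0], 1.0), output(Cout, [1.0, 3.0]))
```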

Taking the Laplace transform of (13), we obtain

$$sX(s) - x(0) = AX(s) + BU(s) \quad (15)$$

or

$$(s - A)X(s) = x(0) + BU(s) \quad (16)$$

where x(0) is the vector of initial states of x(t). The term s − A in equation (16) is not defined: s is a scalar quantity, not a matrix, while A is a matrix. We therefore introduce the unit matrix I into s − A, so that the equation becomes

$$(sI - A)X(s) = x(0) + BU(s) \quad (17)$$

from which

$$X(s) = [sI - A]^{-1}x(0) + [sI - A]^{-1}BU(s) \quad (18)$$

The first term on the right of (18) is the zero-input response, i.e., the response when the input is zero; the second is the zero-state response, i.e., the response when the initial state of the system is zero.

The free response of the system (that is, the response when U(s) = 0) can be determined from knowledge of the eigenvalues and eigenvectors of the coefficient matrix A. When U(s) = 0, equation (18) becomes

$$X(s) = [sI - A]^{-1}x(0) \quad (19)$$

To obtain the time response of the state vector x(t), t ≥ 0, we take the inverse Laplace transform of (19), noting that x(0) is a constant vector:

$$x(t) = \mathcal{L}^{-1}\{X(s)\} = \mathcal{L}^{-1}\{[sI - A]^{-1}\}\,x(0) \quad (20)$$

The right-hand side of (20) may be expanded into an infinite series using the binomial theorem. First, we re-write the matrix inverse as follows:

$$[sI - A]^{-1} = \frac{1}{s}\left[I - \frac{A}{s}\right]^{-1} \quad (21)$$

By expanding the right-hand side of (21) into an infinite series, it may be shown from the series expansion 1/(1 − x) = 1 + x + x² + ... (for |x| < 1) that, for |s| large enough,

$$[sI - A]^{-1} = \frac{I}{s} + \frac{A}{s^2} + \frac{A^2}{s^3} + \cdots \quad (22)$$

Next, we find the inverse Laplace transform of this expansion term by term, using

$$\mathcal{L}^{-1}\left\{\frac{1}{s^{n+1}}\right\} = \frac{t^n}{n!}, \quad t \ge 0 \quad (23)$$

giving

$$\mathcal{L}^{-1}\left\{\frac{A^n}{s^{n+1}}\right\} = \frac{A^n t^n}{n!}$$

We may therefore invert (22) to obtain

$$\mathcal{L}^{-1}\{[sI - A]^{-1}\} = I + At + \frac{A^2t^2}{2!} + \cdots = \exp(At) \quad (24)$$

where we define

$$\exp(At) = \sum_{n=0}^{\infty}\frac{A^n t^n}{n!} \quad (25)$$

Hence the solution of \dot{x}(t) = Ax(t) is found by using (24) in (20) to obtain

$$x(t) = \exp(At)\,x(0), \quad t \ge 0 \quad (26)$$

This result may be checked by using (25) for the matrix exp(At) and substituting it into \dot{x}(t) = Ax(t). The matrix exp(At) is called the state transition matrix. The reason for this name is easily seen from (26): multiplying the initial state x(0) by exp(At) carries the unforced system over to its state at time t, x(t).
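Definition (25) can be evaluated directly by summing the series until the terms are negligible. The pure-Python sketch below assumes a small matrix and a moderate value of t; for large ||At|| the truncated series loses accuracy through cancellation, and library routines use scaling and squaring instead. The names `mat_mul`, `expm_series` and `free_response` are hypothetical, and the check uses A = [[0, 1], [−2, −3]], whose state transition matrix is known in closed form.

```python
import math

def mat_mul(P, Q):
    """Product of two matrices stored as lists of rows."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q)))
             for j in range(len(Q[0]))] for i in range(len(P))]

def expm_series(A, t, terms=40):
    """Truncated series (25): exp(At) = sum over n of (At)^n / n!."""
    n = len(A)
    term = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # n = 0 term: I
    total = [row[:] for row in term]
    At = [[a * t for a in row] for row in A]
    for k in range(1, terms):
        # (At)^k / k! = [(At)^(k-1) / (k-1)!] (At) / k
        term = [[v / k for v in row] for row in mat_mul(term, At)]
        total = [[total[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return total

def free_response(A, x0, t):
    """Equation (26): x(t) = exp(At) x(0) for the unforced system."""
    phi = expm_series(A, t)
    return [sum(phi[i][j] * x0[j] for j in range(len(x0))) for i in range(len(x0))]

A = [[0.0, 1.0], [-2.0, -3.0]]              # eigenvalues -1 and -2
phi = expm_series(A, 1.0)
e1, e2 = math.exp(-1.0), math.exp(-2.0)
exact = [[2 * e1 - e2, e1 - e2], [-2 * e1 + 2 * e2, -e1 + 2 * e2]]
print(phi)
print(exact)
```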

1. Why are eigenvalues so called?

SOLUTION:

The problem of solving sets of linear differential equations reduces to an algebraic eigenvalue problem. The characteristic equation of the differential equations is the same as the characteristic equation of the matrix A, and the characteristic roots are the eigenvalues of A, the coefficient matrix. The eigenvalue analysis associated with a square matrix A introduces certain characteristic properties, which are of particular significance in the study of dynamical systems. The eigenvalue analysis is associated with the transformation of a vector x to a new vector y by the square matrix A, represented by

$$Ax = y \quad (1)$$

where y is required to have the same direction as, or be proportional to, x. Thus

$$Ax = \lambda x \quad (2)$$

where λ is a scalar and y_i = λx_i. This represents a set of homogeneous linear equations in n unknowns x_i, thus

$$(\lambda I - A)x = 0 \quad (3)$$

For solutions x_i ≠ 0, the characteristic matrix (λI − A) must be singular, with

$$|\lambda I - A| = 0 \quad (4)$$

The determinant or characteristic function is then an nth-degree polynomial in λ, thus

$$f(\lambda) = |\lambda I - A| = \lambda^n + a_1\lambda^{n-1} + \cdots + a_{n-1}\lambda + a_n \quad (5)$$

The equation f(λ) = 0 is the characteristic equation of the matrix A, and the solution of this equation gives the characteristic roots, otherwise known as latent roots or eigenvalues of the matrix A, which, in general, will be complex. Also, for every λ_i, a solution of the homogeneous equations will produce a set of n-vectors x (or u) called the characteristic solutions, latent vectors or eigenvectors of the matrix A.

$$Au_i = \lambda_i u_i \quad \text{or} \quad (\lambda_i I - A)u_i = 0$$

Since the eigenvectors are solutions of a homogeneous equation, they are of arbitrary length (or absolute value) and are determined only to within a constant of proportionality; thus, they may be multiplied by an arbitrary factor. With distinct roots, the matrix (λ_i I − A) has rank n − 1, the eigenvectors are linearly independent, and the matrix adj(λ_i I − A) has unit rank. With an r-fold root, the matrix (λ_i I − A) has rank of at least n − r (exactly n − r when the root has r linearly independent eigenvectors). The algebraic eigenvalue problem arises in the study of sets of linear differential equations. Thus, for the set of simultaneous differential equations ...

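2. The shaft power delivered to each propeller of a twin-screw ship is described by a second-order lag with inertia J, viscous damping B and torque per unit error K, and has θ_o = 1 and dθ_o/dt = −1 when t = 0. Find the eigenvalues of the system when θ_i is abruptly changed to 2 at t = 0.

SOLUTION:

The system equation is easily obtained as follows:

$$J\frac{d^2\theta_o}{dt^2} + B\frac{d\theta_o}{dt} = K(\theta_i - \theta_o) \quad (1)$$

The state variables are:

$$\theta_o = x_1 \quad (2)$$

$$\frac{d\theta_o}{dt} = x_2 \;(= \dot{x}_1) \quad (3)$$

From (2) and (3), (1) becomes:

$$J\frac{dx_2}{dt} + Bx_2 = K(\theta_i - x_1) \quad (4)$$

$$\frac{dx_2}{dt} = -\frac{K}{J}x_1 - \frac{B}{J}x_2 + \frac{K}{J}\theta_i \quad (5)$$

Since (5), the resulting equation, involves x_1 and x_2, and since we also know that dx_1/dt = x_2, we can then have the following equations:

$$\frac{dx_1}{dt} = x_2 \quad (6)$$

$$\frac{dx_2}{dt} = -\frac{K}{J}x_1 - \frac{B}{J}x_2 + \frac{K}{J}\theta_i \quad (7)$$

Expressing (6) and (7) in matrix form, we obtain:

$$\begin{bmatrix}\dot{x}_1\\ \dot{x}_2\end{bmatrix} = \begin{bmatrix}0 & 1\\ -K/J & -B/J\end{bmatrix}\begin{bmatrix}x_1\\ x_2\end{bmatrix} + \begin{bmatrix}0\\ K/J\end{bmatrix}\theta_i$$

From the general form:

$$\dot{x}(t) = Ax(t) + Bu(t), \qquad y(t) = Cx(t) + Du(t)$$

we obtain the following, with K/J = 6 and B/J = 5:

$$A = \begin{bmatrix}0 & 1\\ -6 & -5\end{bmatrix}, \quad B = \begin{bmatrix}0\\ 6\end{bmatrix}, \quad C = \begin{bmatrix}1 & 0\end{bmatrix}, \quad D = 0$$

The characteristic equation is |sI − A| = 0, that is,

$$\begin{vmatrix} s & -1\\ 6 & s+5\end{vmatrix} = 0$$

$$s(s+5) - (-1)(6) = s^2 + 5s + 6 = (s+2)(s+3) = 0$$

from which the eigenvalues are obtained as λ_1 = −2 and λ_2 = −3.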
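For a 2 × 2 coefficient matrix the characteristic equation is a quadratic, so the eigenvalues, and an eigenvector from (λI − A)u = 0, can be computed in a few lines. A minimal sketch (the function name `eig2` is hypothetical); complex arithmetic is used because the roots are complex in general:

```python
import cmath

def eig2(A):
    """Eigenvalues and eigenvectors of a 2 x 2 matrix from its characteristic equation.

    |sI - A| = s^2 - (a11 + a22) s + (a11 a22 - a12 a21) = 0
    """
    (a11, a12), (a21, a22) = A
    tr = a11 + a22
    det = a11 * a22 - a12 * a21
    root = cmath.sqrt(tr * tr - 4 * det)
    pairs = []
    for lam in ((tr + root) / 2, (tr - root) / 2):
        # A non-zero solution u of (lam I - A) u = 0; any multiple is equally valid.
        if a12 != 0:
            u = (a12, lam - a11)
        elif a21 != 0:
            u = (lam - a22, a21)
        else:                                   # A is diagonal
            u = (1, 0) if abs(lam - a11) < 1e-12 else (0, 1)
        pairs.append((lam, u))
    return pairs

for lam, u in eig2([[0.0, 1.0], [-6.0, -5.0]]):
    print(lam.real, u)
```

For the matrix above it returns λ = −2 with u proportional to (1, −2) and λ = −3 with u proportional to (1, −3); as noted earlier, the eigenvectors are fixed only up to a constant factor.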

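3. Obtain the coefficient matrix for computer simulation, and hence the eigenvalues, of the passive electrical network shown below.

[Figure: passive network with resistor, inductor and capacitor; input V_input, output V_output taken across the capacitor]

SOLUTION:

The capacitor current i = C dV_o/dt also flows through the resistor and the inductor, so the system equation is

$$LC\frac{d^2V_o}{dt^2} + RC\frac{dV_o}{dt} + V_o = V_i \quad (1)$$

The state variables are:

$$V_o = x_1 \quad (2)$$

$$\frac{dV_o}{dt} = x_2 \;(= \dot{x}_1) \quad (3)$$

From (2) and (3), (1) becomes:

$$LC\frac{dx_2}{dt} + RCx_2 + x_1 = V_i \quad (4)$$

that is,

$$\frac{dx_2}{dt} = -\frac{1}{LC}x_1 - \frac{R}{L}x_2 + \frac{1}{LC}V_i \quad (5)$$

Since (5), the resulting equation, involves x_1 and x_2, and since we also know that dx_1/dt = x_2, the two equations in matrix form give

$$\begin{bmatrix}\dot{x}_1\\ \dot{x}_2\end{bmatrix} = \begin{bmatrix}0 & 1\\ -\dfrac{1}{LC} & -\dfrac{R}{L}\end{bmatrix}\begin{bmatrix}x_1\\ x_2\end{bmatrix} + \begin{bmatrix}0\\ \dfrac{1}{LC}\end{bmatrix}V_i$$

From the general form:

$$\dot{x}(t) = Ax(t) + Bu(t), \qquad y(t) = Cx(t) + Du(t)$$

we obtain the following, with R/L = 5 and 1/(LC) = 6:

$$A = \begin{bmatrix}0 & 1\\ -6 & -5\end{bmatrix}, \quad B = \begin{bmatrix}0\\ 6\end{bmatrix}, \quad C = \begin{bmatrix}1 & 0\end{bmatrix}, \quad D = 0$$

The characteristic equation is |sI − A| = 0, that is,

$$\begin{vmatrix} s & -1\\ 6 & s+5\end{vmatrix} = s(s+5) - (-1)(6) = (s+2)(s+3) = 0$$

from which the eigenvalues are obtained as λ_1 = −2 and λ_2 = −3.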
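Computer simulation of such a model means integrating ẋ = Ax + Bu step by step. As a sketch only (the fixed step h = 0.01, the unit-step input and the names `state_rhs` and `simulate` are assumptions), the classical fourth-order Runge-Kutta method is applied below to A = [[0, 1], [−6, −5]] and B = [0, 6]^T. From rest, the exact unit-step response is x_1(t) = 1 − 3e^(−2t) + 2e^(−3t), whose exponents are the two eigenvalues, so the result can be checked.

```python
import math

def state_rhs(A, B, x, u):
    """x_dot = A x + B u for a scalar input u (B stored as a flat list)."""
    return [sum(A[i][j] * x[j] for j in range(len(x))) + B[i] * u
            for i in range(len(x))]

def simulate(A, B, x0, u, t_end, h=0.01):
    """Classical fourth-order Runge-Kutta integration with a constant input u."""
    x = list(x0)
    for _ in range(round(t_end / h)):
        k1 = state_rhs(A, B, x, u)
        k2 = state_rhs(A, B, [xi + 0.5 * h * ki for xi, ki in zip(x, k1)], u)
        k3 = state_rhs(A, B, [xi + 0.5 * h * ki for xi, ki in zip(x, k2)], u)
        k4 = state_rhs(A, B, [xi + h * ki for xi, ki in zip(x, k3)], u)
        x = [xi + h / 6.0 * (p + 2.0 * q + 2.0 * r + s)
             for xi, p, q, r, s in zip(x, k1, k2, k3, k4)]
    return x

A = [[0.0, 1.0], [-6.0, -5.0]]
B = [0.0, 6.0]
x_end = simulate(A, B, [0.0, 0.0], 1.0, 1.0)          # unit step, from rest, to t = 1
exact = 1.0 - 3.0 * math.exp(-2.0) + 2.0 * math.exp(-3.0)
print(x_end[0], exact)
```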

4. (a) Explain what is meant by the term: “Inexact numbers”.

(b) Outline types of errors and their sources in numerical analysis of

rational numbers.

(c) Explain the term ERROR BOUNDS and show how they may be applied to inexact numbers.

SOLUTION:

(a) INEXACT NUMBERS are those numbers that are associated with errors, that is, with uncertainty or inaccuracy. We are concerned here not with blunders but with unavoidable inexactness. Blunders can be eliminated through careful checking, but errors are inevitable and, as far as possible, must be kept within bounds. We use capital letters for exact numbers (that is, numbers without errors) and small letters for inexact numbers. By this convention, x is an approximation to X. The error in x is denoted by δx, so that the exact number may be expressed as

$$X = x + \delta x$$

where δx may be positive or negative. The absolute error in the inexact number x is defined as |δx|.

Types of Errors in Numbers

Since irrational and most rational numbers are non-terminating decimals, it is usually necessary to round them. There are two well-known rounding techniques, namely:

1. Decimal places (abbreviated as D).

2. Significant figures (abbreviated as S).

Several different ways have been adopted to deal with the case in which

the final digit is a 5. We shall always round such numbers upwards.
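Both rounding rules, with a final 5 always rounded upwards, can be reproduced with Python's `decimal` module. This is a minimal sketch: the helper names are hypothetical, values are passed as strings so that binary floating point cannot disturb a final 5, and for negative numbers `ROUND_HALF_UP` rounds away from zero.

```python
from decimal import Decimal, ROUND_HALF_UP

def round_dp(value, places):
    """Round to a number of decimal places (D), rounding a final 5 upwards."""
    return Decimal(value).quantize(Decimal(1).scaleb(-places), rounding=ROUND_HALF_UP)

def round_sf(value, figures):
    """Round to a number of significant figures (S), rounding a final 5 upwards."""
    d = Decimal(value)
    if d == 0:
        return d
    exponent = d.adjusted() - figures + 1    # power of ten of the last digit kept
    return d.quantize(Decimal(1).scaleb(exponent), rounding=ROUND_HALF_UP)

print(round_dp("2.345", 2), round_sf("0.0012355", 3), round_sf("183.45", 3))  # 2.35 0.00124 183
```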

Sources of Errors

As a result of rounding or chopping, there is a round-off error in most of the numbers we use.

1. One of the sources of errors in our data or numbers is through

rounding or chopping. The error thus generated is called ROUND-

OFF ERROR.

2. Another source of errors in the data we are working with,

especially if these data are generated through measurements, is

INHERENT ERROR. The data generated through these

measurements can never be exact, but will depend on the

precision of the measuring instruments.

3. There is a third type of error known as TRUNCATION ERROR

which occurs when we use only the first few terms of an infinite

series in the evaluation of a function.

(c) Error bounds are the limits between which the error lies. In numerical analysis, errors are unavoidable. It is therefore essential to investigate the errors resulting from calculations involving inexact numbers and to specify the ERROR BOUNDS for the number we have calculated. For example, if we know that the number 2.51 has been rounded, we know automatically that the exact value lies between 2.505 and 2.515. This is usually expressed as 2.51 ± 0.005, and the ERROR BOUNDS in this number are ±0.005. Again, suppose a man's height is measured as 183 cm to the nearest centimetre. It therefore lies between 182.5 and 183.5 cm, and may be expressed as 183 ± 0.5 cm, with error bounds of ±0.5 cm. If a physical quantity such as the density of a substance is determined by experiment, its value may be expressed as 4.32 ± 0.13 g cm⁻³, where the calculated error bounds are ±0.13 g cm⁻³ and the true value lies between 4.19 and 4.45 g cm⁻³.

5. Evaluate the numerical expression

$$\frac{17.24}{0.52} - \frac{11.69}{3.18}$$

where all the numbers are rounded.

SOLUTION:

Working value = 17.24/0.52 − 11.69/3.18 = 29.477746

Maximum value = 17.245 (maximum)/0.515 (minimum) − 11.685 (minimum)/3.185 (maximum) = 29.816677

Minimum value = 17.235 (minimum)/0.525 (maximum) − 11.695 (maximum)/3.175 (minimum) = 29.145107

Mid-value = ½(29.816677 + 29.145107) = 29.480892

Maximum absolute error = ½(29.816677 − 29.145107) = 0.335785

Rounding the error to 2S and either the working value or the mid-value to 2D gives: 29.48 ± 0.34
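The maximum/minimum procedure of Questions 5 and 6 can be automated by evaluating the expression at every combination of the extreme values of its rounded inputs. This sketch assumes, as holds for the expressions here, that the expression is monotonic in each input over the error box, so that its extremes occur at the corners; `error_bounds` is a hypothetical name.

```python
from itertools import product

def error_bounds(expr, values, half_widths):
    """Working value, extremes, mid-value and maximum absolute error of expr.

    Each input lies in values[i] +/- half_widths[i]; the extremes are taken over
    the corners of that box, which is valid when expr is monotonic in each input.
    """
    corners = product(*[(v - h, v + h) for v, h in zip(values, half_widths)])
    results = [expr(*corner) for corner in corners]
    high, low = max(results), min(results)
    return {"working": expr(*values), "max": high, "min": low,
            "mid": (high + low) / 2, "error": (high - low) / 2}

# Question 5: every number rounded to 2D, so each half-width is 0.005.
q5 = error_bounds(lambda a, b, c, d: a / b - c / d, [17.24, 0.52, 11.69, 3.18], [0.005] * 4)
print({k: round(v, 6) for k, v in q5.items()})
```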

6. (a) If a = 2.41 and b = 3.37, and both numbers are rounded, find A + B, stating the error bounds of the answer.

SOLUTION:

The working value of a + b = 2.41 + 3.37 = 5.78

The maximum value of a + b = 2.415 + 3.375 = 5.79

The minimum value of a + b = 2.405 + 3.365 = 5.77

Hence,

the mid-value = ½(5.79 + 5.77) = 5.78

and the error bound = ½(5.79 − 5.77) = 0.01

It should be observed in this example that the working value and the mid-value are the same, and

A + B = 5.78 ± 0.01

(b) If a = 4.63 and b = 7.35, both rounded to 2D, find AB and obtain the error bounds.

SOLUTION:

The working value of ab = 4.63 × 7.35 = 34.0305

The maximum value of ab = 4.635 × 7.355 = 34.090425

The minimum value of ab = 4.625 × 7.345 = 33.970625

Hence,

the mid-value = ½(34.090425 + 33.970625) = 34.030525

and the error bound = ½(34.090425 − 33.970625) = 0.0599

In this problem, the working value and the mid-value differ slightly. However, it is usual to round the absolute error to 2S, and then to round the answer to the same number of decimal places as the rounded error. Here, the error bounds are ±0.060 (3D), and to 3D both the working value and the mid-value are equal to 34.031.

Hence, AB = 34.031 ± 0.060, which means that the true value of AB lies between 34.031 − 0.060 and 34.031 + 0.060, or 33.971 < AB < 34.091.

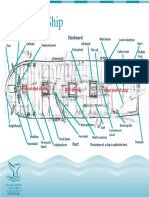

7. (a) Define the following terms: length between perpendiculars, moulded depth, extreme draught, midship section coefficient, block coefficient, longitudinal prismatic coefficient, sheer, length of run.

(b) A ship has the following particulars:

L_BP (L) = 400 ft

C_P entrance (C_PE) = 0.63

C_P run (C_PR) = 0.75

C_M = 0.95

B = 56 ft

T = 24.5 ft

Δ = 11200 ton

Specific volume of sea water = 35 ft³/ton

L_E = 0.25 L_R

Find L_E, L_P and L_R.

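SOLUTION:

(a)

[Figure: ship profile showing L_BP measured along the load waterline between the aft and forward perpendiculars, and the bow profile]

Length between perpendiculars, L_BP, is the distance between the aft and forward perpendiculars, measured along the load waterline.

Block coefficient, C_B = ∇/(L × B × T) = C_M × C_P

Midship section coefficient, C_M = A_M/(B × T)

Longitudinal prismatic coefficient, C_P = ∇/(A_M × L)

where ∇ is the volume of displacement and A_M the immersed area of the midship section.

Sheer = height of the deck at side above the deck at side amidships.

[Figure: division of the length into entrance, parallel middle body and run]

Length of run is the distance of the after end of the parallel middle body from the aft perpendicular.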

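(b) Equating volumes:

Volume of ship = volume of entrance + volume of parallel middle body + volume of run

$$\nabla = (0.63 \times L_E \times A_M) + (L_P \times A_M) + (0.75 \times L_R \times A_M)$$

where ∇ = 11200 × 35 = 392000 ft³ and A_M = 0.95 × 56 × 24.5 = 1303.4 ft², so that

$$0.63L_E + L_P + 0.75L_R = \frac{392000}{1303.4} = 300.75 \quad (1)$$

$$L_E + L_P + L_R = L = 400 \quad (2)$$

$$L_E = 0.25L_R \quad (3)$$

Eliminate L_E from (1) and (2) using (3), then solve the resulting pair for L_P and L_R. Subtracting (1) from (2) gives 0.37L_E + 0.25L_R = 99.25, that is, 0.3425L_R = 99.25. Substitute back to find L_E and L_P:

L_R ≈ 289.8 ft

L_E ≈ 72.4 ft

L_P ≈ 37.8 ft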
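Equations (1) to (3) form a 3 × 3 linear system, which can be solved by Gaussian elimination with partial pivoting. A minimal sketch, solving the system exactly as stated (including the relation L_E = 0.25 L_R); `gauss_solve` is a hypothetical helper:

```python
def gauss_solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]      # augmented matrix
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(m[i][k]))   # pivot row
        m[k], m[p] = m[p], m[k]
        for i in range(k + 1, n):
            factor = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= factor * m[k][j]
    x = [0.0] * n
    for i in reversed(range(n)):                            # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

volume = 11200 * 35            # displacement volume, ft^3
area_m = 0.95 * 56 * 24.5      # midship section area, ft^2
# Unknowns ordered (L_E, L_P, L_R); rows are equations (1), (2) and (3).
coeffs = [[0.63, 1.0, 0.75],
          [1.0, 1.0, 1.0],
          [1.0, 0.0, -0.25]]
rhs = [volume / area_m, 400.0, 0.0]
L_E, L_P, L_R = gauss_solve(coeffs, rhs)
print(f"L_E = {L_E:.1f} ft, L_P = {L_P:.1f} ft, L_R = {L_R:.1f} ft")
```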

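8. A ship has the following particulars:

Length of parallel middle body, L_P = 30 m

Prismatic coefficient of the forebody, C_PF = 0.75

Length of entrance, L_E = 45 m

Prismatic coefficient of the entrance, C_PE = 0.69

Calculate L_BP and the distance of the centre of the parallel middle body from amidships.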

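SOLUTION:

[Figure: forebody showing the entrance and the part of the parallel middle body forward of amidships]

Given C_PE = 0.69 and C_PF = 0.75.

Required to find:

(a) L_BP

(b) the distance of the centre of the parallel middle body from amidships.

(a) Let x be the length of parallel middle body forward of amidships. Equating the volume of the forebody to the sum of the volumes of its parallel part and of the entrance (the midship area A_M cancels):

$$C_{PF}\times\frac{L}{2} = x + L_E \times C_{PE}$$

that is,

$$0.75\,\frac{L}{2} = x + (45 \times 0.69) \quad (10)$$

with

$$\frac{L}{2} = x + L_E = x + 45 \quad (11)$$

Now substituting (11) for L/2 in (10), we obtain

0.75(x + 45) = x + (45 × 0.69)

0.75x + 33.75 = x + 31.05

x(1 − 0.75) = 33.75 − 31.05

x = 2.7/0.25 = 10.8 m

Hence

L/2 = 45 + 10.8 = 55.8 m

giving L = (2 × 55.8) m = 111.6 m

(b) Let the distance of the centre of the parallel middle body from amidships be y. Since the parallel middle body is 30 m long and extends x = 10.8 m forward of amidships, its centre lies aft of amidships at

y = 30 − (15 + x)

= 30 − (15 + 10.8)

= 4.2 m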
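Eliminating L/2 between (10) and (11) gives, in general, x = L_E(C_PF − C_PE)/(1 − C_PF). The short sketch below (function name hypothetical) applies it to the data of this question:

```python
def forebody_parallel_length(l_e, cp_e, cp_f):
    """Length x of parallel middle body forward of amidships, from (10) and (11):
    cp_f (x + l_e) = x + cp_e l_e   =>   x = l_e (cp_f - cp_e) / (1 - cp_f)."""
    return l_e * (cp_f - cp_e) / (1.0 - cp_f)

x = forebody_parallel_length(45.0, 0.69, 0.75)
L = 2.0 * (x + 45.0)          # equation (11): L/2 = x + L_E
y = 30.0 / 2.0 - x            # centre of the 30 m parallel body, aft of amidships
print(f"x = {x:.1f} m, L = {L:.1f} m, y = {y:.1f} m")   # x = 10.8 m, L = 111.6 m, y = 4.2 m
```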

Numerical Solution of Linear Systems

Almost every problem in the design, operation and analysis of manufacturing plants and industrial processes, and associated problems such as production scheduling, can be reduced in the final analysis to the problem of determining the largest or smallest value of a function of several variables. In an industrial process, for example, the criterion for optimum operation is often minimum cost, where the product cost can depend on a large number of interrelated controlled parameters in the manufacturing process. In mathematics, the performance criterion could be to minimize the integral of the squared difference between a specified function and an approximation to it generated as a function of the controlled parameters. Both of these examples have in common the requirement that a single quantity is to be minimized by variation of a number of controlled parameters. In addition, there may also be parameters which are not controlled but which can be measured, and possibly some which cannot even be measured. The basic mathematical optimization problem is to minimize a scalar quantity F which is the value of a function of n system parameters x_1, x_2, x_3, ..., x_n. These variables must be adjusted to obtain the minimum required; thus we formulate the problem as follows:

$$\text{minimize } F = f(x_1, x_2, \ldots, x_n) = f(\mathbf{x})$$

The optimization may, however, be a maximization of F with respect to x, but sufficient generality can be obtained by considering only minimization, since

$$\max\{f(\mathbf{x})\} = -\min\{-f(\mathbf{x})\}$$

The value F embodies the design criteria of the system in a single number, obtained by evaluating the function f(x), or objective function, for a given x. If the function f(x) can be expressed analytically, then it may be possible to apply differential calculus in order to determine the minimum.

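Thus, for example, the stationary points of the function

$$f(x) = x^3 + 2x^2 + x + 3$$

can be found by taking the derivative of f(x) with respect to x and equating it to zero, thus:

$$\frac{df(x)}{dx} = 3x^2 + 4x + 1 = (3x + 1)(x + 1) = 0$$

Hence there are two stationary points, x = −1 and x = −1/3, and the second derivative is required to determine the nature of each point: a maximum, a minimum, or a point of inflection. Here d²f/dx² = 6x + 4 is negative at x = −1 (a maximum) and positive at x = −1/3 (a minimum). The method may easily be extended to problems with several variables by using partial derivatives, provided that all the first partial derivatives ∂f(x)/∂x_i (i = 1, 2, ..., n) exist at all points. A necessary condition for a minimum of f(x) is then

$$\frac{\partial f(\mathbf{x})}{\partial x_1} = \frac{\partial f(\mathbf{x})}{\partial x_2} = \cdots = \frac{\partial f(\mathbf{x})}{\partial x_n} = 0$$

A sufficient condition for a point satisfying these equations to be a minimum is that all the second partial derivatives ∂²f(x)/∂x_j∂x_k (j, k = 1, 2, ..., n) exist at this point and that D_i > 0 for i = 1, 2, ..., n, where D_i is the determinant of the i × i leading principal submatrix of the matrix of second partial derivatives:

$$D_i = \begin{vmatrix} \dfrac{\partial^2 f}{\partial x_1^2} & \cdots & \dfrac{\partial^2 f}{\partial x_1\partial x_i}\\ \vdots & \ddots & \vdots\\ \dfrac{\partial^2 f}{\partial x_i\partial x_1} & \cdots & \dfrac{\partial^2 f}{\partial x_i^2}\end{vmatrix}$$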
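Both tests can be checked numerically. The sketch below, with hypothetical function names, finds and classifies the stationary points of the cubic above and evaluates the leading principal minors D_i of a matrix of second derivatives (the requirement D_i > 0 for all i is Sylvester's criterion for positive definiteness).

```python
import math

def stationary_points_cubic(a, b):
    """Stationary points of f(x) = x^3 + a x^2 + b x + c (the constant c does not matter).

    Solves f'(x) = 3x^2 + 2a x + b = 0 and classifies each root by f''(x) = 6x + 2a.
    """
    disc = 4.0 * a * a - 12.0 * b
    if disc < 0:
        return []
    r = math.sqrt(disc)
    points = []
    for x in sorted({(-2.0 * a - r) / 6.0, (-2.0 * a + r) / 6.0}):
        second = 6.0 * x + 2.0 * a
        if second > 0:
            kind = "minimum"
        elif second < 0:
            kind = "maximum"
        else:
            kind = "undecided"          # higher derivatives would be needed
        points.append((x, kind))
    return points

def det(M):
    """Determinant by Gaussian elimination with partial pivoting."""
    M = [list(row) for row in M]
    n, d = len(M), 1.0
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(M[i][k]))
        if M[p][k] == 0.0:
            return 0.0
        if p != k:
            M[k], M[p] = M[p], M[k]
            d = -d                      # a row swap changes the sign
        d *= M[k][k]
        for i in range(k + 1, n):
            factor = M[i][k] / M[k][k]
            for j in range(k, n):
                M[i][j] -= factor * M[k][j]
    return d

def leading_minors(H):
    """D_1, ..., D_n: determinants of the leading principal submatrices of H."""
    return [det([row[:i] for row in H[:i]]) for i in range(1, len(H) + 1)]

def satisfies_sufficient_condition(H):
    """True when every D_i > 0, i.e. H is positive definite."""
    return all(D > 0 for D in leading_minors(H))

print(stationary_points_cubic(2.0, 1.0))                     # f(x) = x^3 + 2x^2 + x + 3
print(leading_minors([[2.0, 1.0], [1.0, 4.0]]))
```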

In problems of several variables it is easily possible to have several minima, maxima or saddle points. However, it is most unlikely that every minimum will have the same value, and consequently the minimum of all minima is known as the global minimum, while the remainder are referred to as local minima. In general, it is the global minimum which is required. Unfortunately, the majority of optimization problems involve many variables in a complex nonlinear function f(x) for which an analytic solution cannot be obtained. Solutions can, however, often be obtained by numerical techniques, and indeed the availability of high-speed digital computers has led to a proliferation of such methods. In numerical methods, initial values are assigned to each of the elements of x and subsequently adjusted by the particular 'algorithm', each set of alterations to x being referred to as an 'iteration'. Some optimization methods require gradient information about the objective function; for the first derivatives this is referred to as the Jacobian gradient vector and is defined by

$$\nabla f = \left(\frac{\partial f}{\partial x_1}, \frac{\partial f}{\partial x_2}, \ldots, \frac{\partial f}{\partial x_n}\right)^T$$

[Figure 1: search interval from x_1 to x_2]

Two further points x_3 and x_4 are chosen such that x_1 < x_3 < x_4 < x_2. Then if f(x_3) ≥ f(x_4), the minimum lies in the interval x_3 → x_2, whilst if f(x_3) < f(x_4), the minimum lies in the interval x_1 → x_4. Having determined in which interval the minimum now lies, this interval is again subdivided, and so on. The method of subdivision is the important feature of these methods, since many function evaluations would be wasted if, for instance, trisection of the length x_1 x_2 were used, as in Figure 2.

[Figure 2: trisection of the interval x_1 to x_2]

Fibonacci Search

This method uses a sequence of positive integers F_0, F_1, F_2, ... defined by the relations

$$F_0 = F_1 = 1, \qquad F_i = F_{i-1} + F_{i-2}, \quad i \ge 2$$

and the sequence begins 1, 1, 2, 3, 5, 8, 13, 21, 34, .... The test points for the ith iteration are determined by

$$x_3^{(i)} = \frac{F_{N-i-1}}{F_{N-i+1}}\left(x_2^{(i)} - x_1^{(i)}\right) + x_1^{(i)}$$

and

$$x_4^{(i)} = \frac{F_{N-i}}{F_{N-i+1}}\left(x_2^{(i)} - x_1^{(i)}\right) + x_1^{(i)}$$

for i = 1, 2, ..., N − 2, where (x_1^{(1)}, x_2^{(1)}) is the initial interval and N the number of test points required. Now it can be shown that the final interval within which the minimum lies has a width 2ε given by

$$\varepsilon = \frac{x_2^{(1)} - x_1^{(1)}}{F_N}$$

Hence the implementation of a Fibonacci search is quite simple. Two initial function evaluations are performed at x_1 and x_2, followed by determination of the points x_3^{(1)} and x_4^{(1)}. The function values at x_3^{(1)} and x_4^{(1)} are then computed and all four function values compared. If f(x_3^{(1)}) > f(x_4^{(1)}), then the interval x_3^{(1)} → x_2^{(1)} is selected. The result is the most efficient univariate search procedure, since no other method can guarantee an interval reduction factor as large as F_N in N function evaluations. However, a suitable value of N must be known in advance, which often presents problems.

Example:

Determine to within ±0.05 the value of x on the unit interval which maximizes the function x(1.5 − x).

Now for ε = 0.05, 1/F_N ≤ 0.05, so that F_N ≥ 20.

Since F_7 = 21, only 6 evaluations will be required.

For the first iteration, F_5/F_7 = 8/21 and F_6/F_7 = 13/21, hence

x_3^{(1)} = (8/21)(1 − 0) + 0 = 0.38, f(x_3^{(1)}) = 0.43

x_4^{(1)} = (13/21)(1 − 0) + 0 = 0.62, f(x_4^{(1)}) = 0.55

Since f(x_4^{(1)}) > f(x_3^{(1)}), the interval 0 → x_3^{(1)} is eliminated and the new interval becomes x_1^{(2)} = 0.38 and x_2^{(2)} = 1.0. Thus

x_3^{(2)} = (5/13)(1.0 − 0.38) + 0.38 = 0.62, f(x_3^{(2)}) = 0.55

x_4^{(2)} = (8/13)(1.0 − 0.38) + 0.38 = 0.76, f(x_4^{(2)}) = 0.56

Now f(x_4^{(2)}) > f(x_3^{(2)}), hence the new interval is x_1^{(3)} = 0.62 to x_2^{(3)} = 1.0. This procedure is repeated for the remainder of the Fibonacci sequence. The current solution also looks sensible, since the maximum is located at x = 0.75 with a function value of 0.5625.
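The procedure can be sketched as follows, for minimization (a maximum is found by minimizing −f). This is an illustrative implementation of the test-point formulas above, assuming a unimodal function; it uses i = 1, ..., N − 2, performs N − 1 evaluations, and returns a final interval of width 2(x_2 − x_1)/F_N, matching the six evaluations of the example. The name `fibonacci_search` is hypothetical.

```python
def fibonacci_search(g, a, b, n):
    """Minimize a unimodal g on [a, b] by Fibonacci search (n >= 3).

    Uses F_0 = F_1 = 1 and makes n - 1 function evaluations; the returned
    interval [a, b] has width 2 (b - a) / F_n.
    """
    fib = [1, 1]
    while len(fib) <= n:
        fib.append(fib[-1] + fib[-2])
    x3 = a + fib[n - 2] / fib[n] * (b - a)      # test points for i = 1
    x4 = a + fib[n - 1] / fib[n] * (b - a)
    g3, g4 = g(x3), g(x4)
    evaluations = 2
    for i in range(2, n - 1):                   # i = 2, ..., n - 2
        if g3 > g4:                             # minimum lies in x3 -> b
            a = x3
            x3, g3 = x4, g4                     # old x4 becomes the new x3
            x4 = a + fib[n - i] / fib[n - i + 1] * (b - a)
            g4 = g(x4)
        else:                                   # minimum lies in a -> x4
            b = x4
            x4, g4 = x3, g3                     # old x3 becomes the new x4
            x3 = a + fib[n - i - 1] / fib[n - i + 1] * (b - a)
            g3 = g(x3)
        evaluations += 1
    if g3 > g4:                                 # final elimination, no new point
        a = x3
    else:
        b = x4
    return a, b, evaluations

# The worked example: maximize x(1.5 - x) on [0, 1] by minimizing its negative, N = 7.
lo, hi, count = fibonacci_search(lambda x: -x * (1.5 - x), 0.0, 1.0, 7)
print(f"{count} evaluations, maximum in [{lo:.3f}, {hi:.3f}]")   # 6 evaluations, maximum in [0.714, 0.810]
```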

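Golden Section Search

The number of function evaluations required cannot always be determined in practical problems, which makes the Fibonacci search difficult to apply. However, an approximation to the sequence for large N can be made such that

$$\frac{F_{N-1}}{F_N} \to \frac{1}{2}\left(\sqrt{5} - 1\right) = \tau \approx 0.618$$

the reduction factor. Hence

$$x_3^{(i)} = (1 - \tau)\left(x_2^{(i)} - x_1^{(i)}\right) + x_1^{(i)}, \qquad x_4^{(i)} = \tau\left(x_2^{(i)} - x_1^{(i)}\right) + x_1^{(i)} \quad (10)$$

It can also be shown that the Fibonacci search asymptotically achieves an interval reduction about 17 percent greater than search by the golden section method.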
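A golden section search needs no advance choice of N: it iterates until the interval is small enough, reusing one interior point per iteration because 1 − τ = τ². A minimal sketch, applied to the same example; `golden_section_search` and the default tolerance are assumptions:

```python
import math

TAU = (math.sqrt(5.0) - 1.0) / 2.0      # reduction factor, about 0.618

def golden_section_search(g, a, b, tol=1e-6):
    """Minimize a unimodal g on [a, b]; each iteration keeps a fraction TAU of the interval."""
    x3 = a + (1.0 - TAU) * (b - a)
    x4 = a + TAU * (b - a)
    g3, g4 = g(x3), g(x4)
    while b - a > tol:
        if g3 > g4:                      # minimum lies in x3 -> b
            a = x3
            x3, g3 = x4, g4              # reusable because 1 - TAU = TAU**2
            x4 = a + TAU * (b - a)
            g4 = g(x4)
        else:                            # minimum lies in a -> x4
            b = x4
            x4, g4 = x3, g3
            x3 = a + (1.0 - TAU) * (b - a)
            g3 = g(x3)
    return 0.5 * (a + b)

x_best = golden_section_search(lambda x: -x * (1.5 - x), 0.0, 1.0)
print(round(x_best, 4))                  # 0.75
```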

Point Methods

These methods have been shown to require in general fewer function evaluations than interval methods and are therefore usually faster. The methods require that an initial approximation to the minimum is known, such that the algorithm can then evaluate a function value nearby and determine a step length.

The Algorithm of Davies, Swann and Campey

The first function evaluation made by the algorithm is at point 2 in Fig. 3, the initial step length being determined by the user. If the function value is less than or equal to that at the initial approximation, then the step length is doubled, a further step is taken in the same direction, and so on. If, however, at some stage the function value increases, then the step length is halved, starting again from the last successful point. There are then four equally spaced function evaluations, of which the one furthest from the smallest function value is neglected and the three remaining values are used for quadratic interpolation.

[Figure 3]

The initial approximation may be located on the right of the minimum, in which case the first step will fail. In this case the sign of the step is reversed and the above search procedure repeated. If the first step in the reversed direction also fails, then the minimum has been boxed in and the interpolation may be performed directly. The three points chosen for interpolation may be written as

$$x_1 = x_2 - S, \quad x_2, \quad x_3 = x_2 + S \quad (11)$$

with corresponding function values f_1, f_2 and f_3. Then it can be shown that the minimum of the fitted quadratic lies at

$$x_{\min} = x_2 + \frac{S(f_1 - f_3)}{2(f_1 - 2f_2 + f_3)} \quad (12)$$

However, the true minimum may not yet have been reached, and so a new stage with reduced step length is begun using x_min or x_2, whichever gives the smaller function value, as the initial point.
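One stage of the procedure, step doubling followed by a single halving and the quadratic interpolation (12), can be sketched as below. This is an illustrative reading of the description, assuming a unimodal function along the line (so that the function eventually increases); the function names are hypothetical. For an exact quadratic the interpolated point is the true minimum, so the checks use f(x) = (x − 3)² + 1.

```python
def quadratic_minimum(x2, s, f1, f2, f3):
    """Equation (12): minimum of the parabola through (x2 - s, f1), (x2, f2), (x2 + s, f3)."""
    return x2 + s * (f1 - f3) / (2.0 * (f1 - 2.0 * f2 + f3))

def dsc_stage(f, x0, s):
    """One stage of the Davies-Swann-Campey line search from x0 with initial step s."""
    f0 = f(x0)
    f_fwd = f(x0 + s)
    if f_fwd > f0:                          # first step failed: reverse its sign
        f_back = f(x0 - s)
        if f_back > f0:                     # both directions fail: minimum boxed in
            return quadratic_minimum(x0, s, f_back, f0, f_fwd)
        s = -s
        pts = [(x0, f0), (x0 + s, f_back)]
    else:
        pts = [(x0, f0), (x0 + s, f_fwd)]
    step = 2.0 * s
    while True:                             # double the step until the function increases
        x_new = pts[-1][0] + step
        pts.append((x_new, f(x_new)))
        if pts[-1][1] > pts[-2][1]:
            break
        step *= 2.0
    x_half = pts[-2][0] + step / 2.0        # halve the last step from the last success
    four = [pts[-3], pts[-2], (x_half, f(x_half)), pts[-1]]   # equally spaced points
    best = min(range(4), key=lambda k: four[k][1])
    three = four[:3] if best <= 1 else four[1:]   # drop the point furthest from the best
    (xa, fa), (xb, fb), (_, fc) = three
    return quadratic_minimum(xb, xb - xa, fa, fb, fc)

f = lambda x: (x - 3.0) ** 2 + 1.0
print(dsc_stage(f, 0.0, 0.5), dsc_stage(f, 5.0, 0.5), dsc_stage(f, 3.0, 1.0))
```

For a non-quadratic function the returned point is only an estimate, and, as the text describes, a further stage would start from it (or from x_2) with a reduced step.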

Powell's Algorithm

To use this algorithm the user must specify an initial point x_1 and a step length S. The function values at x_1 and x_2 = x_1 + S are used to select a third point x_3 such that

x_3 = x_1 + 2S if f_1 ≥ f_2

x_3 = x_1 − S if f_1 < f_2

... the pattern move plus ... the whole procedure is repeated from the new basic point B_2. If, however, ... the direction B_1 to B_2 and new exploratory moves ... If all exploratory moves fail, the step length is reduced and the procedure continued. Convergence is assumed when the step length has been reduced by pre-assigned factors.

[Fig. 8: The Method of Hooke and Jeeves]
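The exploratory and pattern moves of the method of Hooke and Jeeves can be sketched as follows. This is a minimal illustration of the standard scheme, not the author's code; the step-halving factor, the tolerance and the function names are assumptions.

```python
def explore(f, x, fx, step):
    """Exploratory moves: try +step, then -step, along each coordinate in turn."""
    x = list(x)
    for i in range(len(x)):
        for delta in (step, -step):
            trial = list(x)
            trial[i] += delta
            f_trial = f(trial)
            if f_trial < fx:
                x, fx = trial, f_trial
                break
    return x, fx

def hooke_jeeves(f, x0, step=0.5, shrink=0.5, tol=1e-6):
    """Pattern search: exploratory moves, pattern moves, and step reduction on failure."""
    base = list(x0)
    f_base = f(base)
    while step > tol:
        x_new, f_new = explore(f, base, f_base, step)
        if f_new < f_base:
            while True:
                # Pattern move B_(k+1) + (B_(k+1) - B_k), then explore about it.
                pattern = [2.0 * p - q for p, q in zip(x_new, base)]
                base, f_base = x_new, f_new
                x_new, f_new = explore(f, pattern, f(pattern), step)
                if f_new >= f_base:       # pattern move failed: return to the base point
                    break
        else:
            step *= shrink                # all exploratory moves failed
    return base, f_base

x_min, f_min = hooke_jeeves(lambda v: (v[0] - 1.0) ** 2 + 2.0 * (v[1] + 2.0) ** 2, [0.0, 0.0])
print(x_min, f_min)
```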

Rosenbrock's Method

At the initial trial point, n mutually orthogonal vectors are set up with n associated step lengths. Perturbations along each search direction in turn are made until a 'failure' is encountered. This occurs if the new trial point function value is larger than the current value, in which case the step length is multiplied by β (≈ −0.5). If the function value is reduced, then the step is considered a 'success' and the step length is multiplied by α (≈ 3.0). Ultimately a success followed by a failure will occur in every direction. The mutually orthogonal axes are then moved to the new trial point derived from all the successes in each direction. The first vector is aligned in the direction of the total progress made during the last stage, thus defining a new set of search vectors. No definite convergence criterion exists, but sometimes the process is made to terminate after a specified number of iterations. If significant ridges or valleys exist in the objective function, then the first vector will tend to align itself along a ridge. Fast progress will then be made in this direction, with relatively small progress in the other mutually orthogonal directions.

The Method of Davies, Swann and Campey

The method is similar to that of Rosenbrock, except that one univariate search is made in each of the orthogonal directions. The result is a faster convergence rate.

Conjugate Directions and Quadratic Convergence

By approximating the objective function with a quadratic function, the convergence of the search in the vicinity of the minimum may be considerably accelerated. If the function were truly quadratic in n

variables then the minimum could be found in !n(2-1) linear

minimizations. The more nonlinear the objective function, the longer this method will take; however, very few problems are sufficiently nonlinear to defeat the algorithm.

Powell’s Method

The method due to Powell (1964) is based on the above technique;

however, certain disadvantages concerned with linearly dependent

direction vectors of the conjugate direction method are removed.

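Gradient Methods

For these methods the first derivative of the function with respect to each variable is required, and is normally estimated numerically from

$$\frac{\partial f}{\partial x_i} = \frac{f(\mathbf{x} + h_i\mathbf{e}_i) - f(\mathbf{x})}{h_i} + O(h_i) \quad (17)$$

where e_i is the unit vector along x_i.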
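Equation (17) translates directly into code. A sketch, assuming the same increment h_i = h for every variable (the function name and default h are hypothetical); a smaller h reduces the O(h) truncation error but increases round-off error.

```python
def forward_difference_gradient(f, x, h=1e-6):
    """Gradient estimate by (17): (f(x + h e_i) - f(x)) / h for each i; error O(h)."""
    fx = f(x)
    grad = []
    for i in range(len(x)):
        shifted = list(x)
        shifted[i] += h                 # x + h e_i
        grad.append((f(shifted) - fx) / h)
    return grad

g = forward_difference_gradient(lambda v: v[0] ** 2 + 3.0 * v[1], [1.0, 2.0])
print(g)   # close to the exact gradient (2, 3)
```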

Steepest Descent

From the gradient values the method determines the direction of steepest descent and takes a step in that direction. At the new point the line of steepest descent is again determined and another step taken. Eventually the function value will increase compared with the last trial point, in which case the step length is reduced and the procedure continued. If the minimum along each selected direction is found (Fig. 9), then the method can be made faster, but the progress is a zig-zag. Booth (1957) suggested that, instead of moving to the minimum in each direction, the zig-zag could be reduced by moving only 0.9 of this distance. However, scaling difficulties prevent these methods from having wide application.
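For a quadratic objective f(x) = ½xᵀQx − bᵀx with Q symmetric and positive definite, the minimum along the steepest-descent direction has the closed form α = gᵀg/(gᵀQg), so the method, including Booth's suggestion of moving only 0.9 of this distance, can be sketched compactly. The quadratic objective, the function name and the test matrix are assumptions for illustration.

```python
def steepest_descent_quadratic(Q, b, x0, relax=1.0, tol=1e-10, max_iter=10000):
    """Minimize f(x) = 0.5 x^T Q x - b^T x (Q symmetric positive definite).

    Each step moves along -grad f; the exact minimizing step along that line is
    alpha = (g^T g) / (g^T Q g).  relax = 0.9 moves only 0.9 of that distance.
    Returns the final point and the number of iterations used.
    """
    n = len(b)
    x = list(x0)
    for k in range(max_iter):
        g = [sum(Q[i][j] * x[j] for j in range(n)) - b[i] for i in range(n)]  # gradient
        gg = sum(gi * gi for gi in g)
        if gg < tol * tol:
            return x, k
        Qg = [sum(Q[i][j] * g[j] for j in range(n)) for i in range(n)]
        alpha = gg / sum(g[i] * Qg[i] for i in range(n))
        x = [x[i] - relax * alpha * g[i] for i in range(n)]
    return x, max_iter

Q = [[1.0, 0.0], [0.0, 10.0]]          # badly scaled quadratic with minimum at (1, 1)
b = [1.0, 10.0]
x_full, n_full = steepest_descent_quadratic(Q, b, [0.0, 0.0])
x_booth, n_booth = steepest_descent_quadratic(Q, b, [0.0, 0.0], relax=0.9)
print(x_full, n_full, x_booth, n_booth)
```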

Newton's Method

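The method uses a second-order Taylor expansion of the objective function about the minimum point. However, the minimum is not known, and so the current point must be used as an approximation. Thus, in matrix notation,

$$f(a_1 + h_1, \ldots, a_n + h_n) = f_0 + \mathbf{h}^T\mathbf{d} + \tfrac{1}{2}\mathbf{h}^T J\mathbf{h} \qquad (18)$$

where $f_0$, $\mathbf{d}$ and $J$ are evaluated at the current point $(a_1, a_2, \ldots, a_n)$ and are known: $f_0$ is the function value, $\mathbf{d}$ the column vector of first derivatives and $J$ the matrix of second derivatives. The extremum of this approximating quadratic will provide $\mathbf{h}$, and hence a new estimate of the position of the extremum of the true function.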

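Let the column vector $\mathbf{e}_i$ be defined by $\mathbf{e}_i^T = (0, 0, \ldots, 1, \ldots, 0)$ with 1 in the i-th place. Differentiating the approximating quadratic with respect to $h_i$ gives the extremum:

$$\frac{\partial f}{\partial h_i} = \mathbf{e}_i^T\mathbf{d} + \tfrac{1}{2}\mathbf{e}_i^T J\mathbf{h} + \tfrac{1}{2}\mathbf{h}^T J\mathbf{e}_i = 0$$

but since $\mathbf{h}^T J\mathbf{e}_i = \mathbf{e}_i^T J\mathbf{h}$ ($J$ being symmetric), this equation gives

$$0 = \mathbf{e}_i^T(\mathbf{d} + J\mathbf{h})$$

and since this is true for each i,

$$\mathbf{0} = \mathbf{d} + J\mathbf{h}$$

or the required column vector can be calculated as

$$\mathbf{h} = -J^{-1}\mathbf{d}$$

Thus the extremum of the function is at

$$\mathbf{x} = \mathbf{a} - J^{-1}\mathbf{d}$$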

[Fig. 9: The Method of Steepest Descent]


The Newton algorithm now takes the form:

1. At the current point $\mathbf{a}_k$ evaluate $f_k$, $\mathbf{d}_k$ and $J_k$.

2. Compute $\mathbf{a}_{k+1} = \mathbf{a}_k - J_k^{-1}\mathbf{d}_k$ and return to 1, unless sufficient accuracy has been obtained.

The algorithm has two very distinct advantages: first, it avoids the univariate search, and secondly, when it works, the convergence is very rapid indeed. If the function is an exact quadratic the extremum is achieved in a single iteration, but in general the method will only work well if the quadratic expansion is a good approximation to f. J must therefore be positive definite for a minimization, or negative definite for a maximization, which is a major restriction. In practice the method is very unreliable. Further, the matrix of second derivatives, J, must be computed, which often presents difficulties, and the subsequent inversion is also time consuming. Thus, whilst Newton's method is very efficient in the neighbourhood of the minimum, away from this point it has nothing to recommend it, and the method of steepest descent is preferable.
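The following is a minimal Python sketch of the two-step algorithm. It assumes that the first derivatives d and the second-derivative matrix J are supplied as functions; `grad`, `hess`, `solve` and `newton` are hypothetical names. Rather than forming J⁻¹ explicitly, the sketch solves J h = −d by Gaussian elimination, which gives the same h with less work. The function value f is not needed for the update itself. On an exact quadratic the extremum is reached in a single iteration, as stated above.

```python
def solve(A, r):
    """Solve A y = r by Gaussian elimination with partial pivoting."""
    n = len(r)
    M = [list(row) + [ri] for row, ri in zip(A, r)]       # augmented matrix [A | r]
    for col in range(n):
        piv = max(range(col, n), key=lambda i: abs(M[i][col]))
        M[col], M[piv] = M[piv], M[col]
        for i in range(col + 1, n):
            m = M[i][col] / M[col][col]
            for j in range(col, n + 1):
                M[i][j] -= m * M[col][j]
    y = [0.0] * n
    for i in reversed(range(n)):                          # back substitution
        y[i] = (M[i][n] - sum(M[i][j] * y[j] for j in range(i + 1, n))) / M[i][i]
    return y


def newton(grad, hess, a0, tol=1e-10, max_iter=50):
    """Newton iteration a_{k+1} = a_k - J_k^{-1} d_k; returns (point, iterations)."""
    a = list(a0)
    for k in range(max_iter):
        d = grad(a)                                       # step 1: first derivatives
        if max(abs(di) for di in d) < tol:                # "sufficient accuracy"
            return a, k
        J = hess(a)                                       # step 1: second derivatives
        h = solve(J, [-di for di in d])                   # step 2: J h = -d
        a = [ai + hi for ai, hi in zip(a, h)]
    return a, max_iter


# Hypothetical quadratic f = 0.5 x.A x - b.x, so d = A x - b and J = A
A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]


def grad(x):
    return [sum(aij * xj for aij, xj in zip(row, x)) - bi for row, bi in zip(A, b)]


x, iters = newton(grad, lambda x: A, [0.0, 0.0, 0.0])
print(iters)  # 1
```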

Davidon’s Method

Basically, the method uses the steepest descent technique initially, with a progressive changeover to Newton's method as the minimum is approached. A linear search using the function values and gradients at two points with cubic interpolation is recommended, rather than quadratic interpolation using only function values.
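The changeover from steepest descent to Newton's method can be seen in the variable-metric update usually attributed to Davidon, Fletcher and Powell (DFP). In this Python sketch the matrix H starts as the identity, so the first step is along −d (steepest descent), and the DFP update then drives H towards J⁻¹. The line search performs a single cubic interpolation through the function values and slopes at two points, using the interpolation formula commonly quoted for Davidon's method. A practical code would repeat and safeguard this step. The function names and the test quadratic are assumptions made for illustration.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cubic_step(f, grad, x, p, t=1.0):
    """Step length along p from one cubic interpolation through the values and
    slopes of phi(s) = f(x + s p) at s = 0 and s = t."""
    f0, g0 = f(x), dot(grad(x), p)          # g0 < 0 when p is a descent direction
    while True:                             # assumes f is bounded below along p
        xt = [xi + t * pi for xi, pi in zip(x, p)]
        ft, gt = f(xt), dot(grad(xt), p)
        if gt >= 0.0:                       # slope changed sign: minimum bracketed in [0, t]
            break
        t *= 2.0
    z = 3.0 * (f0 - ft) / t + g0 + gt
    w = math.sqrt(z * z - g0 * gt)          # g0 < 0 <= gt, so the argument is >= 0
    return t - t * (gt + w - z) / (gt - g0 + 2.0 * w)


def davidon(f, grad, x0, tol=1e-10, max_iter=200):
    """DFP variable-metric minimisation; returns (x, H, iterations)."""
    n = len(x0)
    H = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    x, g = list(x0), grad(x0)
    for k in range(max_iter):
        if math.sqrt(dot(g, g)) < tol:
            return x, H, k
        p = [-dot(row, g) for row in H]     # with H = I this is the steepest-descent direction
        t = cubic_step(f, grad, x, p)
        s = [t * pi for pi in p]
        x = [xi + si for xi, si in zip(x, s)]
        g_new = grad(x)
        y = [a - b for a, b in zip(g_new, g)]
        Hy = [dot(row, y) for row in H]
        sy, yHy = dot(s, y), dot(y, Hy)
        # DFP update: H tends towards the inverse of the second-derivative matrix,
        # so the search direction drifts from steepest descent towards Newton's.
        H = [[H[i][j] + s[i] * s[j] / sy - Hy[i] * Hy[j] / yHy for j in range(n)]
             for i in range(n)]
        g = g_new
    return x, H, max_iter


A = [[4.0, 1.0], [1.0, 3.0]]        # hypothetical test quadratic 0.5 x.A x - b.x
b = [1.0, 2.0]


def f(x):
    return 0.5 * dot(x, [dot(row, x) for row in A]) - dot(b, x)


def grad(x):
    return [dot(row, x) - bi for row, bi in zip(A, b)]


x, H, k = davidon(f, grad, [0.0, 0.0])
print(k, x)
```

For a quadratic in n variables with exact line searches, DFP reaches the minimum in n iterations and the final H equals J⁻¹. The cubic interpolation is exact on a quadratic, so for this two-variable test the loop stops after two iterations with H close to A⁻¹.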

Example:

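Find the minimum of the function

$$f = 10(x^2 - y)^2 + (1 - x)^2$$

starting at (−1, 1), using Newton's algorithm.

The first derivatives and the matrix of second derivatives are

$$\mathbf{d} = \begin{pmatrix} 40x(x^2 - y) - 2(1 - x) \\ -20(x^2 - y) \end{pmatrix}, \qquad J = \begin{pmatrix} 40(3x^2 - y) + 2 & -40x \\ -40x & 20 \end{pmatrix}$$

At the point (1, −3) reached after the first iteration,

$$J = \begin{pmatrix} 242 & -40 \\ -40 & 20 \end{pmatrix}$$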


Thus it so happens in this case that the minimum is achieved in two iterations, despite a poor value of the objective function at the end of the first iteration.
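The two iterations of this example can be reproduced with a short Python script. It applies step 2 of the algorithm using the explicit inverse of the symmetric 2 × 2 matrix J; the helper names are illustrative. The printed lines show the point and the objective value at the start and after each iteration.

```python
def f(x, y):
    return 10 * (x * x - y) ** 2 + (1 - x) ** 2


def grad(x, y):
    # first derivatives d = (df/dx, df/dy)
    return 40 * x * (x * x - y) - 2 * (1 - x), -20 * (x * x - y)


def hess(x, y):
    # second derivatives J = [[j11, j12], [j12, j22]]
    return 40 * (3 * x * x - y) + 2, -40 * x, 20.0


point = (-1.0, 1.0)
history = [point]
for _ in range(2):
    x, y = point
    gx, gy = grad(x, y)
    j11, j12, j22 = hess(x, y)
    det = j11 * j22 - j12 * j12
    # h = -J^{-1} d, using the explicit inverse of a symmetric 2 x 2 matrix
    hx = -(j22 * gx - j12 * gy) / det
    hy = -(-j12 * gx + j11 * gy) / det
    point = (x + hx, y + hy)
    history.append(point)

for px, py in history:
    print(px, py, f(px, py))
# -1.0 1.0 4.0
# 1.0 -3.0 160.0
# 1.0 1.0 0.0
```

The objective rises from 4 to 160 after the first iteration, and the second iteration lands exactly on the minimum at (1, 1).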

The general constrained optimization problem

The original objective function $f(\mathbf{x})$ remains unchanged, but constraints on the independent variables are introduced such that

$$g_j(\mathbf{x}) \le 0 \qquad (j = 1, \ldots, m)$$

where m is the number of such constraints. These constraints are normally represented by the region on which the inequality becomes an equality.

Penalty Function Solution

(a). Rosenbrock's Method

The constraints that may be handled by this method are of the type

$$L_i \le x_i \le U_i, \qquad i = 1, 2, \ldots, m \quad (m > n)$$

Boundary zones are introduced such that, if the unconstrained solution enters such a zone, the objective function is weighted so that no further progress is attempted in that direction. These zones must be relatively narrow in order that the variables can closely approximate the constraint values.

(b). Carroll's Created Response Surface Technique

The method constructs special surfaces within the feasible region and then proceeds to find the minimum on each surface. By reduction of the region of these surfaces the minimum is eventually approached. The mathematical description of the method is rather involved; for further information see Carroll (1961).
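Carroll's technique is commonly presented as an inverse-barrier function. With the constraints written as g_j(x) ≤ 0, the function P(x, r) = f(x) − r Σ_j 1/g_j(x) is minimized for a decreasing sequence of r > 0, and each value of r defines one of the surfaces. The Python sketch below assumes that form and uses a deliberately simple one-variable problem: minimize (x − 2)² subject to x − 1 ≤ 0. Each inner minimization is done by golden-section search. All names and the sequence of r values are illustrative.

```python
def golden_min(phi, a, b, tol=1e-12):
    """Golden-section search for the minimiser of a unimodal phi on [a, b]."""
    r = (5 ** 0.5 - 1) / 2
    c, d = b - r * (b - a), a + r * (b - a)
    fc, fd = phi(c), phi(d)
    while b - a > tol:
        if fc < fd:
            b, d, fd = d, c, fc
            c = b - r * (b - a)
            fc = phi(c)
        else:
            a, c, fc = c, d, fd
            d = a + r * (b - a)
            fd = phi(d)
    return (a + b) / 2


def f(x):
    return (x - 2.0) ** 2           # unconstrained minimum at x = 2


def g(x):
    return x - 1.0                  # constraint g(x) <= 0, i.e. x <= 1


def barrier(x, r):
    return f(x) - r / g(x)          # -r/g > 0 inside the feasible region, infinite on g = 0


xs = []
r = 1.0
for _ in range(7):                  # r = 1, 0.1, ..., 1e-6
    xs.append(golden_min(lambda x: barrier(x, r), -5.0, 1.0 - 1e-12))
    r /= 10.0
print(xs)
```

Each minimizer lies strictly inside the feasible region, and the sequence approaches the constrained minimum x = 1 from inside as r is reduced.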

(c). The Complex Method

The Simplex method for unconstrained minimization has been modified by

Box (1965) to handle inequality constraints of the form

$$l_i \le x_i \le u_i$$

Constraint Orientated Methods

(a). Riding the constraint

The method due to Roberts and Lyvers (1961) endeavours to ‘ride’ any

constraint which is encountered, such that the current point is not able to

leave such a constraint at any time in the subsequent search. It should be

noted that a convex nonlinear constraint will only be approximately

followed by this method even if small step lengths are used.

(b). Hemstitching

This method, also due to Roberts and Lyvers (1961), uses a step taken in the gradient direction and a test for constraint violation. If the constraints are all satisfied, another step and test are made. If, however, a constraint is violated, a step is taken orthogonal to the constraint back toward the


feasible region. Repetition of the above will eventually guarantee a

feasible solution but reduction of the step length is also needed in order to

move toward the minimum.

(c). Davidon’s Method with Linear Constraints

An extension of Davidon’s unconstrained technique was developed by

Goldfarb and Lapidus (1968) such that linear constraints could be included

by reducing the inverse of the matrix of second derivatives already used.

The resultant method is considered a useful tool for general non-linear

programming.

O Lord JESUS CHRIST my Blessed Saviour,

Help me not to major on minors.
