
bangladesh mask study: do not believe the hype

this is one of the worst studies i've ever seen in any field. it proves nothing apart from the credulity of many mask advocates.

el gato malo

Sep 2

people tend to call economics “the dismal science” because it’s so difficult to do controlled experiments. you cannot simply run the economy once with interest rates at 4%, then again at 6%, and compare. it’s inherently limiting to the discipline.

but this bangladesh study, originally published by “the national bureau of economic research,” is so bad that i fear this is way past that. (newer version HERE, but this is the same study with new data cuts added.) even those legends of non-replication and bias injection in sociology and psychology would not accept a study like this. i have no idea how NBER fell for it.

(addendum: and they just published the new one)

this one would get you laughed out of a 7th grade science fair.

it violates pretty much every single tenet of setting up and running a randomized controlled experiment. its output is not even sound enough to be wrong. it’s complete gibberish.

truly, a dismal day for the dismal science and those pushing it into public health.

and yet the twitterati (and there are MANY) are treating this like some sort of gold standard study.

“This should basically end any scientific debate about whether masks can be effective in combating covid at the population level,” Jason Abaluck... who helped lead the study said... calling it “a nail in the coffin.”

despite wild claims by the authors, it’s not.

this setup and execution is so unsound as to make it hard to even know where to start picking it apart. but it’s easy to throw shade, and backing it up is the essence of debate and refutation, so let’s start with the basics of how to do a medical RCT.

1. establish the starting condition. if you do not know this, you have no idea what any later numbers or changes mean. it also makes step 2 impossible.

2. randomize cohorts into even groups in terms of start state and risk. this way, they are truly comparable. randomizing by picking names out of a hat is not good enough. you can wind up doing a trial on a heart med where 20% of the active arm and 40% of control have hypertension. you cannot retrospectively risk-adjust in a prospective trial. this needs to be done with enrollment and study arm balancing. fail this, and you can never recover.

3. isolate the variable you seek to measure. to know if X affects Y, you need to hold all other variables constant. only univariate analysis is relevant to a unitary intervention. if you give each patient 4 drugs, you cannot tell how much of the effect came from drug 1 alone. you've created a multivariate system. get this wrong, and you have no idea what you measured.

4. collect outcomes data in a defined, measurable, and equal fashion. uniformity is paramount. if you measure unevenly and haphazardly, you have no idea what manner of cross-confounds and bias you have added. you will never be able to separate signal from measurement artifacts. this means your data is junk and cannot support conclusions.

5. set clear outcomes and measures of success. you need to decide these ahead of time and lay them out. data mining for them afterwards is called “p-hacking,” and it’s literal cheating. patterns emerge in any random data set. finding them proves nothing. this is how you perform a study that will not replicate. it’s a junk analysis.
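to make step 5 concrete, here is a minimal simulation sketch. it assumes normally distributed outcomes, 20 candidate outcomes, 200 people per arm, and a simple normal-approximation test; all of those numbers are illustrative choices, not taken from any study, and the function names are hypothetical. both arms are drawn from the same distribution, so every “significant” hit is pure noise. at alpha = 0.05 you should expect about one false hit per 20 outcomes tested, and the chance of at least one is 1 - 0.95^20, roughly 64%.

```python
import math
import random


def two_sample_z_pvalue(a, b):
    """Two-sided p-value for a difference in means (normal approximation)."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    var_a = sum((x - mean_a) ** 2 for x in a) / (len(a) - 1)
    var_b = sum((x - mean_b) ** 2 for x in b) / (len(b) - 1)
    se = math.sqrt(var_a / len(a) + var_b / len(b))
    z = (mean_a - mean_b) / se
    # 2 * (1 - Phi(|z|)) is the same as erfc(|z| / sqrt(2))
    return math.erfc(abs(z) / math.sqrt(2))


def familywise_error(n_outcomes, alpha=0.05):
    """Chance of at least one false positive across independent tests."""
    return 1 - (1 - alpha) ** n_outcomes


def count_false_positives(n_outcomes=20, n_per_arm=200, alpha=0.05, seed=1):
    """Test n_outcomes pure-noise outcomes; count how many look 'significant'."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_outcomes):
        # both arms come from the SAME distribution: there is no real effect
        arm_a = [rng.gauss(0, 1) for _ in range(n_per_arm)]
        arm_b = [rng.gauss(0, 1) for _ in range(n_per_arm)]
        if two_sample_z_pvalue(arm_a, arm_b) < alpha:
            hits += 1
    return hits


print(f"chance of >= 1 false hit in 20 tests: {familywise_error(20):.0%}")
print(f"false hits in one simulated batch: {count_false_positives()}")
```

the expected count is about n_outcomes × alpha; any single run can land above or below that, which is exactly why outcomes chosen after the fact prove nothing.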

OK. there’s our start point for an RCT.

get any one of these steps wrong, and your study is junk. get several wrong, and your study is gibberish. get them ALL wrong, and you're either using data to mislead or you really have no business running such studies.

now let's look at what was done in bangladesh:

establish starting condition and balance cohorts: this was a total fail and doomed them before they even began.

Figure 1: Intervention Effect on Symptomatic Seroprevalence
(a) overall: 9.3% relative reduction, p = 0.043
(b) by mask type: cloth mask villages vs surgical mask villages

this is their key claim in the finding. but notice what is jarringly absent: any clear idea of what prior disease exposure was in each of these villages and village cohorts at study commencement. small current seroprevalence probes cannot tell us this. there is no way to know if one had had a big wave and another had not. so, we literally have no idea what had happened here and on what sort of population. we have no idea if we are studying a naive population or not.

performing this study without a clear and broad-based baseline and sense of past exposure is absurd. this is a tiny signal (7 in 10,000), so we need very high precision in the start state. it’s absent. that’s the ballgame right there. we do not know and can never know what happened. even minuscule variance in prior exposure would swamp this.
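to put a rough number on “even minuscule variance in prior exposure would swamp this,” here is a back-of-envelope sketch. it rests on assumptions of mine, not statements from the paper: that anyone infected before the study can still test seropositive at the end, and that both arms report symptoms at the 7.9% rate and give blood at the 40.3% rate quoted later in this post. under those assumptions, a pre-existing gap in seroprevalence between arms passes straight into the outcome, scaled by the symptom rate (and by the consent rate, if the outcome only counts people who gave blood). the function names are hypothetical.

```python
def outcome_gap_from_prior_exposure(prior_sero_gap, symptom_rate, consent_rate=1.0):
    """Difference in measured 'symptomatic seroprevalence' between arms caused
    only by a baseline difference in prior infection. Hypothetical model: old
    antibodies still count, symptom and consent rates are equal in both arms."""
    return prior_sero_gap * symptom_rate * consent_rate


def prior_gap_needed(target_gap, symptom_rate, consent_rate=1.0):
    """Baseline seroprevalence gap that would, by itself, produce target_gap."""
    return target_gap / (symptom_rate * consent_rate)


SYMPTOM_RATE = 0.079  # share reporting covid-like symptoms (excerpt quoted in this post)
CONSENT_RATE = 0.403  # share of symptomatic people who gave blood (same excerpt)
TARGET_GAP = 0.0007   # the "7 in 10,000" signal

print(f"{prior_gap_needed(TARGET_GAP, SYMPTOM_RATE):.2%}")                # 0.89%
print(f"{prior_gap_needed(TARGET_GAP, SYMPTOM_RATE, CONSENT_RATE):.2%}")  # 2.20%
```

in words: under these assumptions, a baseline imbalance in prior infection of roughly one to two percentage points, invisible without baseline serology, would be enough on its own to produce a gap of this size.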

for cohorts, they paired by past covid cases (so thinly reported as to be near meaningless, and a possible injection of bias, as testing rates may vary by village) and tried to establish a “cases per person” pair metric to sort cohorts. this modality is invalid on its face without reference to testing levels. you have no idea if high cases mean high testing or high disease prevalence. you have no idea how much testing varied.

and testing levels are so low that the authors estimate a 0.55% case detection rate. so a modality that would be invalid even if the data were good has its error multiplied, because the data is terrible (and likely wildly variable by geography).

Bangladesh is a densely populated country with 165 million inhabitants. A serosurvey conducted in July 2020 found 45% of Dhaka residents had antibodies against SARS-CoV-2. This suggests a 0.55% case detection rate based on reported cases, implying that the true infection rate may be

nowhere here does the word “testing” or “sample rate” appear.
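the problem with pairing on reported cases can be shown with a toy example. the unions and numbers below are entirely hypothetical: three unions whose true infection rates and detection (testing) rates differ. ranking them by reported cases per person, which is what the pairing step sorts on, gives the exact reverse of ranking them by true infections, because detection varies more than disease does. the 0.55% figure is the only number taken from the quoted excerpt; at that rate each reported case stands for roughly 180 infections.

```python
from dataclasses import dataclass


@dataclass
class UnionStats:
    """Hypothetical union: true burden plus how much of it testing catches."""
    name: str
    true_infections_per_1000: float  # not observable in practice
    detection_rate: float            # share of infections that become reported cases

    @property
    def reported_per_1000(self) -> float:
        return self.true_infections_per_1000 * self.detection_rate


# illustrative numbers only; detection varies around the quoted 0.55% estimate
unions = [
    UnionStats("A", 60.0, 0.0040),
    UnionStats("B", 40.0, 0.0080),
    UnionStats("C", 50.0, 0.0055),
]

by_reported = [u.name for u in sorted(unions, key=lambda u: u.reported_per_1000)]
by_true = [u.name for u in sorted(unions, key=lambda u: u.true_infections_per_1000)]
print("ranked by reported cases:", by_reported)  # ['A', 'C', 'B']
print("ranked by true infections:", by_true)     # ['B', 'C', 'A']
print(f"infections per reported case at 0.55% detection: {1 / 0.0055:.0f}")  # 182
```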

B. Pairwise Randomization Procedure

Villages were assigned to strata as follows:

1. We began with 1,000 villages in 1,000 separate unions to ensure sufficient geographic distance to prevent spillovers (Bangladesh is divided into 4,562 unions).

2. We collected these unions into “Units”, defined as the intersection of upazila × (above/below) median population × case trajectory, where above/below median population was a 0-1 indicator for whether the union had above-median population for that upazila and case trajectory takes the values -1, 0, 1 depending on whether the cases per 1,000 are decreasing, flat or increasing. We assessed cases per person using data provided to us from the Bangladeshi government for the periods June 27th-July 10th and July 11th-July 24th, 2020.

3. If a unit contained an odd number of unions, we randomly dropped one union.

4. We then sort unions by “cases per person” based on the July 11th-July 24th data, and create pairs of unions. We randomly kept 300 such pairs.

5. We randomly assigned one union in each pair to be the intervention union.

6. We then tested for balance with respect to cases, cases per population, and density.

7. Finally, we repeated this entire procedure 50 times, selecting the seed that minimized the maximum of the absolute value of the t-stat of the balance tests with respect to case trajectory and cases per person.

so this study ended before it even began.

this was not useful randomization. this was garbage in, garbage out, especially when you later seek to use “symptomatic seroprevalence” as the primary outcome. that’s false equivalence. if you're going to use seroprevalence as an outcome, you need to measure it as a start state and balance the cohorts using it. period. failure to do so invalidates everything. you cannot run a “balance test” on current IgG and presume you know what happened last year, certainly not to the kind of precision needed to make a 0.0007 signal meaningful.

this was an unknown start state in terms of highly relevant variables, and the cohorts were not normalized (or even measured) for it.
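for readers who want to see the quoted pairing procedure in motion, here is a heavily simplified sketch of steps 3 to 7 for a single unit. it skips the upazila/population/trajectory strata, the 300-pair subsample, and every balance variable except cases per person; the data are random placeholders and every name is hypothetical. the thing to notice is that the seed search only optimizes balance on what is listed in `balance_keys`. a baseline variable like prior exposure that was never measured is not part of the criterion, so its balance is left entirely to chance.

```python
import random
import statistics


def welch_t(a, b):
    """Welch t-statistic for a difference in means between two groups."""
    se = (statistics.variance(a) / len(a) + statistics.variance(b) / len(b)) ** 0.5
    return (statistics.mean(a) - statistics.mean(b)) / se


def pair_and_assign(unions, rng):
    """Steps 3-5 (simplified): drop one union if the count is odd, sort by
    cases per person, pair neighbours, and flip a coin within each pair."""
    pool = list(unions)
    if len(pool) % 2:
        pool.pop(rng.randrange(len(pool)))
    pool.sort(key=lambda u: u["cases_pp"])
    treat, control = [], []
    for i in range(0, len(pool), 2):
        pair = [pool[i], pool[i + 1]]
        rng.shuffle(pair)
        treat.append(pair[0])
        control.append(pair[1])
    return treat, control


def rerandomize(unions, balance_keys, n_seeds=50):
    """Step 7 (simplified): try n_seeds seeds and keep the assignment whose
    worst |t| across balance_keys is smallest."""
    best = None
    for seed in range(n_seeds):
        treat, control = pair_and_assign(unions, random.Random(seed))
        worst = max(
            abs(welch_t([u[k] for u in treat], [u[k] for u in control]))
            for k in balance_keys
        )
        if best is None or worst < best[0]:
            best = (worst, seed, treat, control)
    return best


# placeholder data: prior_exposure exists in the "world" but is never measured
data_rng = random.Random(2020)
unions = [
    {"id": i, "cases_pp": data_rng.random() / 1000, "prior_exposure": data_rng.uniform(0.1, 0.5)}
    for i in range(41)
]
worst_t, seed, treat, control = rerandomize(unions, balance_keys=["cases_pp"])
prior_t = welch_t([u["prior_exposure"] for u in treat], [u["prior_exposure"] for u in control])
print(f"seed {seed}: max |t| on cases_pp = {worst_t:.2f}")
print(f"|t| on unmeasured prior_exposure = {abs(prior_t):.2f}")
```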

they also failed to measure masked vs unmasked seroprevalence in any given village. that would have been useful control data. it seems like they really just missed all the relevant info here.

it’s pure statistical legerdemain.

strike 1 and 2.

but it gets worse. much worse.

isolate the variable you seek to measure.

to claim that masks caused any given variance in outcome, you need to isolate masks as a variable. they didn’t. this was a whole panoply of interventions: signage, hectoring, nudges, payments, and psychological games. it had hundreds of known effects and who knows how many unknown ones.

we have zero idea what's being measured, and even some of the variables that were measured showed high correlation and thus pose confounds. when you're upending village life, claiming that one aspect made the difference becomes statistically impossible. the system becomes hopelessly multivariate and cross-confounded.

the authors admit it themselves (and oddly do not seem to grasp that this invalidates their own mask claims):

Physical distancing, measured as the fraction of individuals at least one arm’s length apart, also increased by 5.2 percentage points (95% CI: 4.2%-6.3%). Beyond the core intervention

who knows what the effects of the “intervention package” were outside of masking? maybe it also led to more hand washing or taking of vitamins. it seemed to affect distancing (though i doubt that mattered).
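the identification problem can be shown with a deliberately stripped-down, hypothetical model of the randomized comparison: every treated village got the whole package (mask promotion plus whatever came with it, e.g. the distancing push), and every control village got none of it. since both components were switched on by the same coin flip, an “all masks,” an “all distancing,” and a fifty-fifty explanation predict exactly the same outcomes. the outcome scale and coefficients below are made up for illustration.

```python
def predict(intercept, b_mask, b_dist, rows):
    """Linear outcome model: baseline + mask effect + distancing effect."""
    return [intercept + b_mask * mask + b_dist * dist for mask, dist in rows]


# (mask_promotion, distancing_push) per village; both follow the same coin flip
rows = [(1, 1)] * 3 + [(0, 0)] * 3

all_masks = predict(10.0, -1.0, 0.0, rows)       # credit everything to masks
all_distancing = predict(10.0, 0.0, -1.0, rows)  # credit everything to distancing
half_and_half = predict(10.0, -0.5, -0.5, rows)  # split the credit

print(all_masks == all_distancing == half_and_half)  # True
```

real data are not this perfectly locked together person by person, but only the package was randomized, not its parts; splitting the credit among them takes assumptions the randomization itself does not supply.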

but the biggest hole here is that whatever affects one attitude can affect another. if you've been co-opted to “lead by example” and put up signage, etc., or are being paid to mask, you may change your attitude about reporting symptoms.

people want to please researchers and paymasters, and this is a classic violation of a double-blind system. the subjects should not know whether they are in the control or the active arm, but in the presence of widespread positive mask messaging, they do.

so maybe they think “i do not want to tell them i’m sick,” especially if “being sick” has been vilified.

or maybe they fail to focus on minor symptoms because they are masked and feel safe, or were having more trouble breathing anyhow.

this makes a complete mess and injects all manner of unpredictable bias into the “results,” because the results are based on self-reporting, a notoriously inaccurate modality.

look at the wide variance in self reporting on masks and reality cited in this very study.

attempts by police and NGOs to confront those who were seen in public without masks. When we surveyed respondents at the end of April 2020, over 80% self-reported wearing a mask and 97% self-reported owning a mask. Anecdotal reports from surveyors suggested that mask-use was pervasive. The Bangladeshi government formally mandated mask use in late May 2020 and threatened to fine those who did not comply, although enforcement was weak to non-existent, especially in rural areas. Anecdotally, mask-wearing was substantially lower than indicated by our self-reported surveys. To investigate, we conducted surveillance studies throughout public areas in Bangladesh in two waves. The first wave of surveillance took place between May 21 and May 25, 2020 in 1,441 places in 52 districts. About 51% out of more than 152,000 individuals we observed were wearing masks. The second wave of surveillance was conducted between June 19 and June 22, 2020 in the same 1,441 locations, and we found that mask wearing dropped to 26%, with 20% wearing masks that covered their mouth and nose. These observations, coupled with the increasing case load, motivated the interventions we implemented to increase mask use.

yet we're to accept self-reporting of symptoms in the face of widespread and persistent moral suasion in one arm and not the other, and assume that the same interventions that had a large effect on mask wear affected no other attitudes?

no way. this non-blinding issue, combined with self-reporting, adds one-tailed error bars to this system so large that they swamp any signal.

as is so often the case, gatopal @Emily_Burns_V has a great take here:

Emily Burns #SmilesMatter DM's OK

@Emily_Burns_V

2/ What the study ACTUALLY measures is the impact of mask promotion on symptom reporting. Only if a person reports symptoms, are they asked to participate in a serology study—and only 40% of those with symptoms chose to have their blood taken.

baseline. We were able to collect follow-up symptom data (whether symptomatic or not) from 335,382 (98%). Of these, 27,166 (7.9%) reported COVID-like symptoms during the 8-week intervention in their village. We attempted to collect blood samples from all symptomatic individuals. Of these, 10,952 (40.3%) consented to have blood collected, including 40.8% in the treatment group and 39.9% in the control group (the difference in consent rates is not statistically significant, p = 0.24). We show in Table A2 that consent rates are about 40% across all demographic groups in both treatment and control villages.

September 1st 2021

Emily Burns #SmilesMatter DM's OK

@Emily_Burns_V

3/ Is it possible that the highly moralistic framing and monetary incentives given to village elders for compliance might dissuade a person from reporting symptoms representing individual and collective moral failure—one that could cost the village money? Maybe?

We announced that the monetary reward or the certificate would be awarded if village-level mask-wearing among adults exceeded 75% 8 weeks after the intervention started.

September 1st 2021

this is exactly why good studies are blinded. if they are not, the subjects seek to please the researchers, and it wrecks the data. adding self-reporting is a multiplier on this problem. this whole methodology is junk, and so is the data it produced.
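the “seek to please” mechanism can be made explicit with a toy model. all numbers are hypothetical, including the reporting probabilities; only the roughly 40% consent figure comes from the excerpt above. both arms have exactly the same true infection rate, and the only difference is that infected people in the promoted arm are assumed to be 10% less likely to report symptoms. because the outcome only counts people who report symptoms, that reporting gap alone shows up as an apparent 10% reduction, and equal consent rates do not help, since they cancel out of the ratio.

```python
def measured_symptomatic_seroprevalence(true_infection_rate, p_report, consent_rate):
    """Share of an arm counted as 'symptomatic and seropositive' under a toy
    model: an infected person is counted only if they report symptoms AND
    consent to a blood draw (the antibody test is assumed perfect)."""
    return true_infection_rate * p_report * consent_rate


TRUE_RATE = 0.02  # hypothetical: identical true infection rate in both arms
CONSENT = 0.40    # roughly the ~40% consent quoted above, equal in both arms

control = measured_symptomatic_seroprevalence(TRUE_RATE, p_report=0.50, consent_rate=CONSENT)
treated = measured_symptomatic_seroprevalence(TRUE_RATE, p_report=0.45, consent_rate=CONSENT)

relative_reduction = 1 - treated / control
print(f"apparent relative reduction: {relative_reduction:.1%}")  # 10.0%
```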

for proof of this, one need only look at the age stratification:

Figure 3: Effect on Symptomatic Seroprevalence by Age Groups, Surgical Masks Only
(a) above 60 years old: decrease of 34.7%
(b) 50-60 years old: decrease of 23.0%
(c) 40-50 years old: no statistically significant decrease
(d) younger than 40 years old: no statistically significant decrease

first off, this proves conclusively that “your mask does not protect me” (though we already knew that). if it did, it would protect everyone, not just old people. but it didn’t.

and the idea that it stopped old people from getting sick but not young people is similarly implausible.

the odds-on bet here is that old people were more inclined than young people to please the researchers, and that they failed to report symptoms as a result.

i establish this as the null hypothesis.

can anyone demonstrate that this data makes a more compelling case for “masks worked on old people but not young people and thus decreased overall disease”?

because i very much doubt it.

and unless you can, you must set this study aside: at most a possibly interesting piece of sociology, with zero validated epidemiological relevance.

so, that’s strikes 3 and 4.

i’m honestly a bit unsure about whether we can go on to call it a perfect 5. they did pre-register the study and describe end states, but they never established start states, so we have no idea what the actual change was.

so, they called their shot on end results beforehand (as they should), but they left us with no way to measure change even if the measurement was good, and as we have seen, the measurement was terrible.

so i’m going to sort of punt on scoring this one and assign it an N/A. establishing an outcome and then providing no meaningful way to measure it is not p-hacking per se, but it is also not in any way useful.

so, all in all, it’s just impossible to take this study seriously, especially as it flies in the face of about 100 other studies that WERE well designed.

read many HERE, including RCTs showing not only a failure as source control but also higher rates of post-op infections when surgeons wore masks in operating theaters vs when they did not.

the WHO said so in 2019.


and the DANMASK study in denmark was a gold standard study for variable isolation and showed no efficacy.

perhaps most hilariously, the very kansas counties data the CDC tried to cherry-pick to claim masks worked went on to utterly refute them when the covid surge came.


the evidence that masks fail to stop covid spread is strong, deep, and wide, and includes a lot of high-quality studies (many more here from the swiss).

to refute them would take very high-quality data from well-performed studies, and, counter to the current breathless histrionics of masqueraders desperate for a study to wave around to confirm their priors, this is not that.

to claim “masks worked” with ~40% compliance in light of total fails with 80-95% compliance is so implausible as to require profound and solid evidence that is nowhere provided here.

this is a junk output from a junk methodology imposed upon an invalid randomization without reference to a meaningful start state for data.

this is closer to apples to orangutans than even apples and oranges.

thus, this take (and many more like it) is absurd and impossible to draw from this study:

Lyman Stone

@lymanstoneky

This is arguably the most important single piece of epidemiological research of the entire pandemic.

A MASSIVE randomized trial launched a pro-mask campaign in some Bangladeshi villages, but not others.

The result: masking villages got less COVID. poverty-action.org/sites/default/...

6:51 AM - Sep 1, 2021 - TweetDeck

it does not show efficacy. it does not show ANYTHING except how an object lesson in poor study design and data collection can be weaponized into a political talking point.

this study is an outright embarrassment and a huge black eye for the NBER et al.

this is not even wrong.

it’s just an epic concatenation of bad techniques and worse data handling used to provide pretext for an idea the researchers clearly favored. there is no way to separate bias from fact or data from artifact.

calling this proof of anything is simply proof of either incompetence or malfeasance.

which one makes you want to listen to the folks pushing it?

You might also like

- Expansion: Conformal Geometric AlgebraDocument1 pageExpansion: Conformal Geometric AlgebraKrutarth PatelNo ratings yet

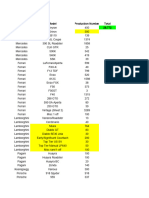

- Make Model Production Number Total 29,772Document9 pagesMake Model Production Number Total 29,772Krutarth PatelNo ratings yet

- Year Make Model VINDocument8 pagesYear Make Model VINKrutarth PatelNo ratings yet

- Covid-19: Researcher Blows The Whistle On Data Integrity Issues in Pfizer's Vaccine TrialDocument3 pagesCovid-19: Researcher Blows The Whistle On Data Integrity Issues in Pfizer's Vaccine TrialKrutarth PatelNo ratings yet

- 10 PrinciplesDocument1 page10 PrinciplesKrutarth PatelNo ratings yet

- Post-Quantum' CryptographyDocument1 pagePost-Quantum' CryptographyKrutarth PatelNo ratings yet

- Association of Social Distancing and Face Mask Use With Risk of COVID-19Document10 pagesAssociation of Social Distancing and Face Mask Use With Risk of COVID-19Krutarth PatelNo ratings yet

- Sociology ProblemsDocument11 pagesSociology ProblemsKrutarth PatelNo ratings yet

- Association of Social Distancing and Face Mask Use With Risk of COVID-19Document10 pagesAssociation of Social Distancing and Face Mask Use With Risk of COVID-19Krutarth PatelNo ratings yet

- A New Method For Sampling and Detection of Exhaled Respiratory Virus AerosolsDocument3 pagesA New Method For Sampling and Detection of Exhaled Respiratory Virus AerosolsKrutarth PatelNo ratings yet

- (Tutorial) A Way To Competitive Programming - From 1900 To 2200Document6 pages(Tutorial) A Way To Competitive Programming - From 1900 To 2200Krutarth PatelNo ratings yet

- Problems in Geometry - ModenovDocument407 pagesProblems in Geometry - ModenovKrutarth PatelNo ratings yet

- (Tutorial) Math Note - Möbius Inversion - CodeforcesDocument6 pages(Tutorial) Math Note - Möbius Inversion - CodeforcesKrutarth PatelNo ratings yet

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)