Deep Transfer Learning For Aortic Root Dilation Identification in 3D Ultrasound Images

Conference Paper · July 2018

ResearchGate: https://www.researchgate.net/publication/326479358

Current Directions in Biomedical Engineering 2018;4(1):1–4

Jannis Hagenah*, Mattias Heinrich, and Floris Ernst


https://doi.org/10.1515/cdbme-2018-XXXX

*Corresponding author: Jannis Hagenah, Floris Ernst, Institute for Robotics and Cognitive Systems, University of Lübeck, Lübeck, Germany, e-mail: {hagenah,ernst}@rob.uni-luebeck.de
Mattias Heinrich, Institute of Medical Informatics, University of Lübeck, Lübeck, Germany

Abstract: Pre-operative planning of valve-sparing aortic root reconstruction relies on the automatic discrimination of healthy and pathologically dilated aortic roots. This classification is based on features extracted from 3D ultrasound images. In previously published approaches, handcrafted features showed a limited classification accuracy. However, conventional feature learning is hampered by the small data sets available for this specific problem. In this work, we propose transfer learning to use deep learning on these small data sets. For this purpose, we used the convolutional layers of the pretrained deep neural network VGG16 as a feature extractor. To simplify the problem, we only took two prominent horizontal slices through the aortic root into account, the coaptation plane and the commissure plane, by stitching the features of both images together and training a Random Forest classifier on the resulting feature vectors. We evaluated this method on a data set of 48 images (24 healthy, 24 dilated) using 10-fold cross validation. Using the deep learned features, we reached a classification accuracy of 84 %, which clearly outperformed the handcrafted features (71 % accuracy). Even though the VGG16 network was trained on RGB photos and for different classification tasks, the learned features are still relevant for ultrasound image analysis in aortic root pathology identification. Hence, transfer learning makes deep learning possible even on very small ultrasound data sets.

Keywords: transfer learning, deep learning, ultrasound, aortic valve, computer assisted surgery, aortic root dilation

1 Introduction

Valve-sparing aortic root reconstruction surgery presents an alternative to classical valve replacement, especially for younger patients. In this procedure, the pathologically dilated aortic root tissue is replaced by a graft prosthesis that remodels the original, healthy state of the aortic root [1]. However, choosing the right prosthesis size for the individual patient is a critical task during surgery. To assist the surgeon in this decision, an approach for pre-operative planning of the surgery has been published [2]. The aim of this approach is to predict the individually optimal prosthesis size based on ultrasound images of the dilated aortic root. One step of this method relies on the automatic discrimination of healthy and pathologically dilated aortic roots. For this purpose, geometric features were extracted manually from the ultrasound images and a classifier was trained on these handcrafted features. A first study revealed limited accuracy of this classification based on the handcrafted features [2].

Automatic feature learning presents an alternative to handcrafted features. Recently, numerous studies showed the effectiveness of deep learning with convolutional neural networks for feature learning in various medical applications [3]. However, training deep convolutional neural networks requires huge data sets that are rarely available for specific problems in image guided medical interventions like the planning of valve-sparing aortic root reconstruction. One possible way to use deep learning on small data sets is transfer learning [4]. The basic idea of this approach is that image features are similar for different analytic tasks. Hence, convolutional neural networks that are trained on huge data sets can serve as feature extractors for other image analysis applications. In this work, we propose transfer learning as an alternative to handcrafted features for the identification of pathological dilations of the aortic root in 3D ultrasound images.

2 Methods

The aim is to replace the handcrafted features by deep learned features using transfer learning. For this purpose, we extract prominent 2D slices from the ultrasound volume, use a pretrained deep neural network as a feature extractor and train a robust classifier on the small data set. The single steps of our workflow are visualised in Fig. 1 and described in detail in the following paragraphs.

Fig. 1: Workflow of the classification based on handcrafted features (left branch) and the proposed transfer learning method (right branch). In the previous approach, the features are identified manually in the ultrasound images based on specific landmarks. In the transfer learning approach, two slices (coaptation plane and commissure plane) are selected from the 3D volume image. These slices are fed through the convolutional layers of the deep neural network (VGG16). The resulting feature vectors are stitched together and serve as an input for the random forest classifier, which outputs the resulting classification {healthy, dilated}.

The data set consists of 48 3D ultrasound images of ex-vivo porcine aortic roots, of which 24 are healthy and 24 are dilated. The images were acquired using the setup and procedure described in [5]. During this procedure, the aortic roots are cut out of the heart and integrated into an imaging setup. Hence, no tissue around the aortic root is visible in the ultrasound images. All roots are aligned vertically along the z-axis. However, the rotation of the roots around the central vertical axis varies between samples. In these images, the three commissure points, i.e. the highest points where the leaflets are attached to the root wall, and the coaptation point, i.e. the point where all three leaflets meet each other, were identified manually. Based on these landmarks, geometric features were calculated, for example the distance between the commissure points or the height difference between the commissure points and the coaptation point. A more detailed description of the handcrafted features can be found in [2].

We used the pretrained network VGG16 [6]. The architecture consists of 16 weight layers: 13 convolutional layers with very small convolutional filter masks of size (3 × 3), followed by three fully-connected layers. It was trained on the ILSVRC-2012 data set [7] to classify approximately 1.4 million photos into 1,000 different classes. The network showed good generalisation performance in different image classification tasks and thus is a popular network for transfer learning [8]. To use the network as a feature extractor, we cut away the fully-connected layers and used the activations of the last convolutional layer as the features of the input image. The implementation was done in Python using Keras with TensorFlow backend [9].

One problem is that VGG16 was pretrained on 2D colour (RGB) images, whereas our data are 3D grayscale volumes. Hence, we manually extracted two prominent horizontal slices of the aortic root from the 3D image: the coaptation plane and the commissure plane. In the coaptation plane, the coaptation lines of all three leaflets are visible, i.e. the areas where two neighbouring leaflets meet each other. The commissure plane is a slice through the aortic root at the height of the three commissure points, the highest points where the leaflets are attached to the root wall. Fig. 2 shows examples of these image slices. The resulting 2D images were resized to fit the input image size of VGG16. Thus, the image size was reduced from (506 × 693) to (224 × 224). Additionally, the image was replicated in each RGB channel to use VGG16 on the grayscale ultrasound images. By feeding these 2D images through the convolutional part of VGG16, we derived the image feature representation with a dimensionality of 25,088.

One 2D slice only carries a limited amount of the information given in the 3D image. Hence, we additionally stitched together the feature vectors from both slices of each root, the coaptation and the commissure plane. This results in a representation of one aortic root sample by 2 · 25,088 = 50,176 features.

Due to the small number of samples in combination with the high-dimensional feature description, we used a Random Forest with 400 trees as a robust classifier [10]. The implementation was done in Python using the scikit-learn package [11]. The evaluation was performed on the full data set of 48 samples using 10-fold cross validation. The classification accuracy was calculated as the mean fraction of correctly classified images in the test set over all folds.

In this way, we evaluated five different feature descriptors: (i) the handcrafted features as described in [2], (ii) the features derived using VGG16 from the coaptation slice only, (iii) the features derived using VGG16 from the commissure slice only, (iv) the features of both slices stitched together, and (v) the combination of the handcrafted features with the transferred features from both slices. By evaluating the combination of handcrafted and transferred features, it is possible to analyse whether the handcrafted features provide additional information that is not contained in the transferred ones.
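The input preparation and the feature dimensionality quoted in the Methods can be retraced with a short sketch. The helper names (`resize_nearest`, `to_rgb`, `vgg16_feature_length`) are hypothetical, nearest-neighbour resampling is an assumption (the paper does not state the interpolation scheme), and the random array only stands in for a real ultrasound slice. The arithmetic relies on the standard VGG16 layout, in which five pooling stages reduce a 224 × 224 input to 7 × 7 with 512 channels.

```python
import numpy as np

VGG16_INPUT_SIZE = 224      # edge length of the VGG16 input image
VGG16_POOL_STAGES = 5       # each 2x2 max-pooling stage halves the spatial size
VGG16_LAST_CHANNELS = 512   # channels in the final convolutional block


def resize_nearest(slice_2d: np.ndarray, size: int = VGG16_INPUT_SIZE) -> np.ndarray:
    """Resize a 2D image to (size, size) by nearest-neighbour sampling.

    Assumption: the paper does not say which interpolation was used.
    """
    rows = np.arange(size) * slice_2d.shape[0] // size
    cols = np.arange(size) * slice_2d.shape[1] // size
    return slice_2d[np.ix_(rows, cols)]


def to_rgb(gray: np.ndarray) -> np.ndarray:
    """Replicate a grayscale image into three identical colour channels."""
    return np.repeat(gray[..., np.newaxis], 3, axis=-1)


def vgg16_feature_length(size: int = VGG16_INPUT_SIZE) -> int:
    """Length of the flattened output of the VGG16 convolutional part."""
    spatial = size // 2 ** VGG16_POOL_STAGES          # 224 -> 7
    return spatial * spatial * VGG16_LAST_CHANNELS    # 7 * 7 * 512


# Placeholder slice with the original image size quoted in the text.
dummy_slice = np.random.default_rng(0).random((506, 693))
network_input = to_rgb(resize_nearest(dummy_slice))

print(network_input.shape)           # (224, 224, 3)
print(vgg16_feature_length())        # 25088
print(2 * vgg16_feature_length())    # 50176 after stitching both slices
```

In Keras, the convolutional part of the network is commonly obtained with `VGG16(weights="imagenet", include_top=False)` from `tensorflow.keras.applications`; whether the authors used exactly this call is not stated in the paper.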

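The stitching and evaluation steps of the Methods could look roughly like the following scikit-learn sketch. It is not the authors' code: the feature matrices are synthetic placeholders rather than VGG16 activations, the per-slice dimension is reduced from 25,088 to 256 to keep the demo fast, the function names are hypothetical, and the use of stratified, shuffled folds is an assumption (the paper only states 10-fold cross validation).

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score


def stitch(coaptation: np.ndarray, commissure: np.ndarray) -> np.ndarray:
    """Concatenate the per-slice feature vectors of each sample."""
    return np.concatenate([coaptation, commissure], axis=1)


def fold_accuracies(features: np.ndarray, labels: np.ndarray,
                    n_trees: int = 400, n_folds: int = 10,
                    seed: int = 0) -> np.ndarray:
    """Test accuracy of a random forest in each fold of a k-fold CV."""
    forest = RandomForestClassifier(n_estimators=n_trees, random_state=seed)
    # Assumption: stratified and shuffled folds; the paper does not specify.
    folds = StratifiedKFold(n_splits=n_folds, shuffle=True, random_state=seed)
    return cross_val_score(forest, features, labels, cv=folds, scoring="accuracy")


# Synthetic placeholder data: 48 samples, 24 per class (0 = healthy, 1 = dilated).
rng = np.random.default_rng(0)
labels = np.repeat([0, 1], 24)
n_per_slice = 256  # reduced stand-in for the 25,088 features per slice
coaptation = rng.normal(size=(48, n_per_slice)) + 0.2 * labels[:, None]
commissure = rng.normal(size=(48, n_per_slice)) + 0.2 * labels[:, None]

stitched = stitch(coaptation, commissure)
fold_scores = fold_accuracies(stitched, labels)
accuracy = float(fold_scores.mean())  # mean fraction of correct test predictions

print(stitched.shape, len(fold_scores), round(accuracy, 2))
```

Passing only `coaptation` or only `commissure` to `fold_accuracies` gives the single-slice variants, and concatenating a further matrix of handcrafted features gives the combined descriptor.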

Tab. 1: Classification accuracy for different feature descriptions. All results were calculated with a random forest classifier using 10-fold cross-validation.

Feature set                                     Accuracy
Handcrafted Features                            71 %
Transfer Learning: Coaptation only              76 %
Transfer Learning: Commissure only              82 %
Transfer Learning: Coaptation and Commissure    84 %
Handcrafted Features and Transfer Learning      83 %
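To make the comparison in Tab. 1 explicit, the gain of each variant over the handcrafted baseline can be computed in percentage points. The values below are copied from the table; the variable names are illustrative only.

```python
# Accuracies from Tab. 1, in percent.
accuracy = {
    "Handcrafted Features": 71,
    "Transfer Learning: Coaptation only": 76,
    "Transfer Learning: Commissure only": 82,
    "Transfer Learning: Coaptation and Commissure": 84,
    "Handcrafted Features and Transfer Learning": 83,
}

baseline_name = "Handcrafted Features"
baseline = accuracy[baseline_name]

# Gain over the handcrafted baseline, in percentage points.
gains = {
    name: value - baseline
    for name, value in accuracy.items()
    if name != baseline_name
}
best = max(accuracy, key=accuracy.get)

for name, gain in gains.items():
    print(f"{name}: +{gain} percentage points")
print("best:", best)
```

The best variant (both slices stitched together) is 13 percentage points above the handcrafted features, while adding the handcrafted features to the transferred ones ends 1 percentage point below it.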

Fig. 2: Example slices through the aortic root. First row: healthy coaptation (a) and commissure (b) plane. Second row: dilated coaptation (c) and commissure (d) plane.

3 Results and Discussion

The results of the evaluation are listed in Table 1. All variants of the transfer learning approach reach a higher classification accuracy than the handcrafted features. The commissure plane seems to carry more information about the dilation of the aortic root than the coaptation plane. However, the combination of both slices reached the highest accuracy, which lies 13 percentage points above the accuracy of the handcrafted features. The combination of handcrafted and transferred features does not lead to a better performance. Hence, the handcrafted features seem to carry no information that is not given in the transferred ones. This indicates that transfer learning presents a promising alternative to the manual identification of relevant geometric features.

One interesting observation is that including a second slice only slightly increases the accuracy compared to taking only the commissure plane into account, indicating that the commissure slice carries most of the information about the dilation of the aortic root. The effect might also be related to the curse of dimensionality [12], because the feature dimension is doubled while the sample size remains small. A further study of the accuracy gain achieved by including more slices could lead to further insights into the transfer of 2D image features to 3D image analysis problems. The optimal choice of 2D slices remains an interesting research question as well. The data used in this initial study is from an ex-vivo study. Hence, only the aortic root is visible in the images. A study of the influence of surrounding tissue on the classification accuracy is needed to evaluate the workflow in a more realistic setting. Additionally, the performance analysis of the surgery planning with the integrated transfer learning workflow is part of future work.

Our results show that transfer learning enables deep learning on very small medical image data sets. Even though the VGG16 network was trained on RGB photos and different classification tasks, the learned features are still relevant for ultrasound image analysis in the case of aortic root pathology identification. We presented a very simple workflow without many manual interaction steps. While the manual identification of image features is time consuming and prone to individual and systematic errors, our proposed transfer learning approach works out-of-the-box using the built-in functions provided by Keras and scikit-learn. We believe that transfer learning might perform well in similar problems as well. Especially in the area of image guided interventions, in which often only small data sets are available, transfer learning makes applied deep learning possible in a very easy way.

Acknowledgment: The authors would like to thank Dr. Michael Scharfschwerdt, Department for Cardiac Surgery, University Hospital Schleswig-Holstein, Lübeck, for his help collecting the data set.

Author Statement
Research funding: Supported by the Ministry of Economic Affairs, Employment, Transport and Technology of Schleswig-Holstein. Conflict of interest: Authors state no conflict of interest. Informed consent: Informed consent has been obtained from all individuals included in this study. Ethical approval: The image data has been acquired in previous studies. The aortic roots are from pig hearts bought at the slaughterhouse. Hence, no ethical conflicts are present. The research related to human use has complied with all the relevant national regulations and institutional policies, is in accordance with the tenets of the Helsinki Declaration, and has been approved by the authors' institutional review board or equivalent committee.


References

[1] David T. Aortic valve-sparing operations. Seminars in thoracic and cardiovascular surgery 2011; 23(2):146-148.

[2] Hagenah J, Scharfschwerdt M, Schweikard A, Metzner C. Combining deformation modeling and machine learning for personalized prosthesis size prediction in valve-sparing aortic root reconstruction. International Conference on Functional Imaging and Modeling of the Heart 2017; 2017:461-470.

[3] Litjens G, Kooi T, Bejnordi B, Setio A, Ciompi F, Ghafoorian M, van der Laak J, van Ginneken B, Sánchez C. A survey on deep learning in medical image analysis. Medical image analysis 2017; 42:60-88.

[4] Pan S, Yang Q. A survey on transfer learning. IEEE Transactions on knowledge and data engineering 2010; 22:1345-1359.

[5] Hagenah J, Scharfschwerdt M, Stender B, Ott S, Friedl R, Sievers HH, Schlaefer A. A setup for ultrasound based assessment of the aortic root geometry. Biomedical Engineering/Biomedizinische Technik 2013; 58(Suppl. 1, O). Available online: https://doi.org/10.1515/bmt-2013-4379

[6] Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 2014.

[7] Deng J, Dong W, Socher R, Li L, Li K, Fei-Fei L. Imagenet: A large-scale hierarchical image database. IEEE Conference on Computer Vision and Pattern Recognition 2009; 2009:248-255.

[8] Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation. IEEE Conference on Computer Vision and Pattern Recognition 2015; 2015:3431-3440.

[9] Chollet F, et al. Keras. GitHub: https://github.com/keras-team/keras; 2015.

[10] Breiman L, Friedman J, Olshen R, Stone C. Classification and regression trees. Chapman and Hall/CRC; 1984.

[11] Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay E. Scikit-learn: Machine learning in Python. Journal of Machine Learning Research 2011; 12:2825-2830.

[12] Keogh E, Mueen A. Curse of dimensionality. Encyclopedia of Machine Learning and Data Mining 2017; 2017:314-315.

View publication stats

You might also like

- 978-1-5090-1172-8/17/$31.00 ©2017 Ieee 667Document4 pages978-1-5090-1172-8/17/$31.00 ©2017 Ieee 6671. b3No ratings yet

- Automated Segmentation of Head CT Scans for Computerassisted Craniomaxillofacial Surgery Applying a Hierarchical Patchbased Stack of Convolutional Neural NetworksInternational Journal of Computer Assisted RadiologyDocument9 pagesAutomated Segmentation of Head CT Scans for Computerassisted Craniomaxillofacial Surgery Applying a Hierarchical Patchbased Stack of Convolutional Neural NetworksInternational Journal of Computer Assisted Radiologyhajar.outaybiNo ratings yet

- Comparing Different Deep Learning Architectures For Classification of Chest RadiographsDocument16 pagesComparing Different Deep Learning Architectures For Classification of Chest RadiographsGovardhan JainNo ratings yet

- MLMIDocument9 pagesMLMIAFFAFFNo ratings yet

- Paper BRAIN TUMOR IMAGE CLASSIFICATION MODEL WITH EFFICIENTNETB3 (1909)Document5 pagesPaper BRAIN TUMOR IMAGE CLASSIFICATION MODEL WITH EFFICIENTNETB3 (1909)Sathay AdventureNo ratings yet

- Impact of Local Histogram Equalization On Deep Learning Architectures For Diagnosis Ofcovid-19 On Chest X-RaysDocument5 pagesImpact of Local Histogram Equalization On Deep Learning Architectures For Diagnosis Ofcovid-19 On Chest X-RaysSerhanNarliNo ratings yet

- 1 s2.0 S2666522022000065 MainDocument13 pages1 s2.0 S2666522022000065 Mainamrusankar4No ratings yet

- Liver Segmentation in CT Images Using Three Dimensional To Two Dimensional Fully Convolutional NetworkDocument5 pagesLiver Segmentation in CT Images Using Three Dimensional To Two Dimensional Fully Convolutional Networkmanju.dsatmNo ratings yet

- Medical Image Imputation From Image CollectionsDocument14 pagesMedical Image Imputation From Image CollectionsAnonymous HUY0yRexYfNo ratings yet

- Locating Cephalometric Landmarks With Multi Phase Deep LearningDocument14 pagesLocating Cephalometric Landmarks With Multi Phase Deep LearningAthenaeum Scientific PublishersNo ratings yet

- 5 VNet PDFDocument11 pages5 VNet PDFSUMOD SUNDARNo ratings yet

- Vander Laak 2021Document10 pagesVander Laak 2021typp3t5r3gzNo ratings yet

- Transfer Learning For Ultrasound ImagesDocument10 pagesTransfer Learning For Ultrasound ImagesKoundinya DesirajuNo ratings yet

- Comparison of Algorithms For Segmentation of Blood Vessels in Fundus ImagesDocument5 pagesComparison of Algorithms For Segmentation of Blood Vessels in Fundus Imagesyt HehkkeNo ratings yet

- MRI - Diffusion IQTDocument16 pagesMRI - Diffusion IQTRaiyanNo ratings yet

- Ijcrt2105065 1Document4 pagesIjcrt2105065 1Sheba ParimalaNo ratings yet

- Efficacy of Algorithms in Deep Learning On Brain Tumor Cancer Detection (Topic Area Deep Learning)Document8 pagesEfficacy of Algorithms in Deep Learning On Brain Tumor Cancer Detection (Topic Area Deep Learning)International Journal of Innovative Science and Research TechnologyNo ratings yet

- Diffuse Optical Tomography ThesisDocument6 pagesDiffuse Optical Tomography Thesisbk1hxs86100% (2)

- Retina Blood Vessel SegmentationDocument28 pagesRetina Blood Vessel SegmentationRevati WableNo ratings yet

- RACE-Net: A Recurrent Neural Network For Biomedical Image SegmentationDocument12 pagesRACE-Net: A Recurrent Neural Network For Biomedical Image SegmentationSnehaNo ratings yet

- NIVE Final - Comments IncorporatedDocument17 pagesNIVE Final - Comments IncorporatedSaad AzharNo ratings yet

- Detecting Anatomical Landmarks From Limited Medical Imaging Data Using Two-Stage Task-Oriented Deep Neural NetworksDocument30 pagesDetecting Anatomical Landmarks From Limited Medical Imaging Data Using Two-Stage Task-Oriented Deep Neural Networksqiaowen wangNo ratings yet

- Attention U-Net Learning Where To Look For The PancreasDocument10 pagesAttention U-Net Learning Where To Look For The PancreasMatiur Rahman MinarNo ratings yet

- Elasticity Detection of IMT of Common Carotid ArteryDocument4 pagesElasticity Detection of IMT of Common Carotid Arterypurushothaman sinivasanNo ratings yet

- Plant Disease DetectionDocument20 pagesPlant Disease DetectionYashvanthi SanisettyNo ratings yet

- Recent Advances in Echocardiography: SciencedirectDocument3 pagesRecent Advances in Echocardiography: SciencedirecttommyakasiaNo ratings yet

- Machine Learning in UltrasouDocument10 pagesMachine Learning in UltrasouBudi Utami FahnunNo ratings yet

- MethodDocument19 pagesMethodLê ThùyNo ratings yet

- Convolutional Neural Networks: An Overview and Application in RadiologyDocument20 pagesConvolutional Neural Networks: An Overview and Application in RadiologyAnnNo ratings yet

- Deep Learning Algorithms For Convolutional Neural Networks (CNNS) Using An Appropriate Cell-Segmentation MethodDocument14 pagesDeep Learning Algorithms For Convolutional Neural Networks (CNNS) Using An Appropriate Cell-Segmentation MethodTJPRC PublicationsNo ratings yet

- Eyeing The Human Brain'S Segmentation Methods: Lilian Chiru Kawala, Xuewen Ding & Guojun DongDocument10 pagesEyeing The Human Brain'S Segmentation Methods: Lilian Chiru Kawala, Xuewen Ding & Guojun DongTJPRC PublicationsNo ratings yet

- Swin-Unet: Unet-Like Pure Transformer For Medical Image SegmentationDocument14 pagesSwin-Unet: Unet-Like Pure Transformer For Medical Image SegmentationSAIDULU TNo ratings yet

- V2i3 Pices0015Document4 pagesV2i3 Pices0015KeraNo ratings yet

- Multimodal Transfer Learning-Based Approaches For Retinal Vascular SegmentationDocument8 pagesMultimodal Transfer Learning-Based Approaches For Retinal Vascular SegmentationOscar Julian Perdomo CharryNo ratings yet

- A Fully Convolutional Neural Network For Cardiac Segmentation in Short-Axis MRIDocument21 pagesA Fully Convolutional Neural Network For Cardiac Segmentation in Short-Axis MRIandikurniawannugroho76No ratings yet

- Lung Cancer DetectionDocument8 pagesLung Cancer DetectionAyush SinghNo ratings yet

- A Comparative Study of Tuberculosis DetectionDocument5 pagesA Comparative Study of Tuberculosis DetectionMehera Binte MizanNo ratings yet

- Brain Tumor Segmentation ThesisDocument7 pagesBrain Tumor Segmentation Thesisafksooisybobce100% (2)

- GRUUNetDocument12 pagesGRUUNetAshish SinghNo ratings yet

- (Journal Q4 2023) Ensemble of Deep Learning Models For Classification of Heart Beats Arrhythmias DetectionDocument12 pages(Journal Q4 2023) Ensemble of Deep Learning Models For Classification of Heart Beats Arrhythmias DetectionVideo GratisNo ratings yet

- Article Semi Supervised Data AugmentationDocument17 pagesArticle Semi Supervised Data AugmentationChristian Huapaya ContrerasNo ratings yet

- DatasheetDocument11 pagesDatasheetAGNo ratings yet

- Ultimate RPDocument3 pagesUltimate RPsoniamtech2014No ratings yet

- Robust Motion Estimation Using Trajectory Spectrum Learning: Application To Aortic and Mitral Valve Modeling From 4D TEEDocument9 pagesRobust Motion Estimation Using Trajectory Spectrum Learning: Application To Aortic and Mitral Valve Modeling From 4D TEEherusyahputraNo ratings yet

- An Improved Target Detection Algorithm Based On EfficientnetDocument10 pagesAn Improved Target Detection Algorithm Based On EfficientnetNeha BhatiNo ratings yet

- Human Brain Mapping - 2021 - Doyen - Connectivity Based Parcellation of Normal and Anatomically Distorted Human CerebralDocument12 pagesHuman Brain Mapping - 2021 - Doyen - Connectivity Based Parcellation of Normal and Anatomically Distorted Human Cerebralnainasingh2205No ratings yet

- Sai Paper Format PDFDocument7 pagesSai Paper Format PDFwalpolpoNo ratings yet

- Cplex Imrt PDFDocument12 pagesCplex Imrt PDFDan MatthewsNo ratings yet

- Biomedical Research PaperDocument7 pagesBiomedical Research PaperSathvik ShenoyNo ratings yet

- Peer Reviewed JournalsDocument7 pagesPeer Reviewed JournalsSai ReddyNo ratings yet

- Research Papers On Medical Image SegmentationDocument6 pagesResearch Papers On Medical Image Segmentationwonopwwgf100% (1)

- Diagnostics 11 01589 v2Document19 pagesDiagnostics 11 01589 v2sekharraoNo ratings yet

- Tran-Pham2020 Chapter DesigningAndBuildingTheVeinFinDocument6 pagesTran-Pham2020 Chapter DesigningAndBuildingTheVeinFinhanrong912No ratings yet

- Febrianto 2020 IOP Conf. Ser. Mater. Sci. Eng. 771 012031Document7 pagesFebrianto 2020 IOP Conf. Ser. Mater. Sci. Eng. 771 012031Asma ChikhaouiNo ratings yet

- Comput Methods Programs Biomed 2021 206 106130Document11 pagesComput Methods Programs Biomed 2021 206 106130Fernando SousaNo ratings yet

- Develop An Extended Model of CNN Algorithm in Deep Learning For Bone Tumor Detection and Its ApplicationDocument8 pagesDevelop An Extended Model of CNN Algorithm in Deep Learning For Bone Tumor Detection and Its ApplicationInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Intracranial Stereotactic Radiotherapy With A JawlDocument8 pagesIntracranial Stereotactic Radiotherapy With A JawlG WNo ratings yet

- Segmentation of Brain Structures in Presence of A Space-Occupying LesionDocument7 pagesSegmentation of Brain Structures in Presence of A Space-Occupying LesionzuhirdaNo ratings yet

- Temporal Bone Imaging: Comparison of Flat Panel Volume CT and Multisection CTDocument6 pagesTemporal Bone Imaging: Comparison of Flat Panel Volume CT and Multisection CTNovi DwiyantiNo ratings yet

- Fluorescence Microscopy: Super-Resolution and other Novel TechniquesFrom EverandFluorescence Microscopy: Super-Resolution and other Novel TechniquesAnda CorneaNo ratings yet

- Prob-J - Sum Mod Pair of ADocument2 pagesProb-J - Sum Mod Pair of Ablackwidow23No ratings yet

- Prob-B - Wooden FenceDocument2 pagesProb-B - Wooden Fenceblackwidow23No ratings yet

- Prob-A - Suspicious EventDocument2 pagesProb-A - Suspicious Eventblackwidow23No ratings yet

- Exercises Pert 11-12Document2 pagesExercises Pert 11-12blackwidow23No ratings yet

- Qualitative Evaluation of RFID Implementation On Warehouse Management SystemDocument7 pagesQualitative Evaluation of RFID Implementation On Warehouse Management Systemblackwidow23No ratings yet

- W7-Assignment Software Testing StrategiesDocument1 pageW7-Assignment Software Testing Strategiesblackwidow23No ratings yet

- Quiz 1 Compilation Technique 2021 PPTIDocument1 pageQuiz 1 Compilation Technique 2021 PPTIblackwidow23No ratings yet

- Exercises Pert 9 Dan 10Document1 pageExercises Pert 9 Dan 10blackwidow23No ratings yet

- Tutor CTDocument41 pagesTutor CTblackwidow23No ratings yet

- Business Model CanvasDocument1 pageBusiness Model Canvasblackwidow23No ratings yet

- Top - Down Parsing Exercise 1: - Given A Grammar As FollowDocument2 pagesTop - Down Parsing Exercise 1: - Given A Grammar As Followblackwidow23No ratings yet

- Ipv6 Address Notation Exercise Answers: 2001:0Db8:0000:0000:B450:0000:0000:00B4Document1 pageIpv6 Address Notation Exercise Answers: 2001:0Db8:0000:0000:B450:0000:0000:00B4blackwidow23No ratings yet

- Modified Schedule For Class Test - II Session 2020-21 ODD SemesterDocument3 pagesModified Schedule For Class Test - II Session 2020-21 ODD SemesterGobhiNo ratings yet

- Repair Estimate2724Document5 pagesRepair Estimate2724Parbhat SindhuNo ratings yet

- Admissions Open 2012: Manipal UniversityDocument1 pageAdmissions Open 2012: Manipal UniversityTFearRush0No ratings yet

- Mantis 190/240 Flyaway Antenna System: DatasheetDocument2 pagesMantis 190/240 Flyaway Antenna System: DatasheetDanny NjomanNo ratings yet

- Pendahuluan: Rekayasa Trafik Sukiswo Sukiswo@elektro - Ft.undip - Ac.idDocument9 pagesPendahuluan: Rekayasa Trafik Sukiswo Sukiswo@elektro - Ft.undip - Ac.idBetson Ferdinal SaputraNo ratings yet

- SDK FullDocument72 pagesSDK FullRafael NuñezNo ratings yet

- Product Information X-Ray Suitcase Leonardo DR Mini II - Vet - ENDocument6 pagesProduct Information X-Ray Suitcase Leonardo DR Mini II - Vet - ENVengatNo ratings yet

- Certona TechDesignDocument8 pagesCertona TechDesignVivek AnandNo ratings yet

- Ch04 OBE-MultiplexingDocument28 pagesCh04 OBE-MultiplexingNooraFukuzawa NorNo ratings yet

- 5-Axis Milling MachineDocument1 page5-Axis Milling MachineSero GhazarianNo ratings yet

- Characteristics of A ProductDocument8 pagesCharacteristics of A ProductCamilo OrtegonNo ratings yet

- 8 Project ReviewDocument28 pages8 Project Reviewmurad_ceNo ratings yet

- LKPD X 3 Procedure - 092809Document4 pagesLKPD X 3 Procedure - 092809dheajeng ChintyaNo ratings yet

- StreamDocument8 pagesStreamtanajm60No ratings yet

- An Introduction To RoboticsDocument43 pagesAn Introduction To Roboticsaman kumarNo ratings yet

- Manual Crossover Focal Utopia Be CrossblockDocument31 pagesManual Crossover Focal Utopia Be CrossblockJason B.L. ReyesNo ratings yet

- NRC Document On GCBDocument38 pagesNRC Document On GCBrobinknit2009No ratings yet

- Data Stucture LAB Manual 03Document12 pagesData Stucture LAB Manual 03Sheikh Abdul MoqeetNo ratings yet

- MPBRMSQRC Chapter1-3 RevisedDocument44 pagesMPBRMSQRC Chapter1-3 RevisedCheed LlamadoNo ratings yet

- Network Communication Protocol (UDP/TCP) : Part One: GPRS Protocol Data StructureDocument10 pagesNetwork Communication Protocol (UDP/TCP) : Part One: GPRS Protocol Data StructureCENTRAL UNITRACKERNo ratings yet

- Vstavek PKP 1Document248 pagesVstavek PKP 1Goran JurosevicNo ratings yet

- Assignment 1 - EER ModelingDocument2 pagesAssignment 1 - EER ModelingHaniya Elahi0% (1)

- x3530 m4 Installation PDFDocument402 pagesx3530 m4 Installation PDFMatheus Gomes VieiraNo ratings yet

- Dry Run 10Document4 pagesDry Run 10anshiNo ratings yet

- Product Overview UK BDDocument28 pagesProduct Overview UK BDNaser Jahangiri100% (1)

- Harmonic Filter Design PDFDocument14 pagesHarmonic Filter Design PDFserban_elNo ratings yet

- Supplier Quality ManualDocument19 pagesSupplier Quality ManualDurai Murugan100% (2)

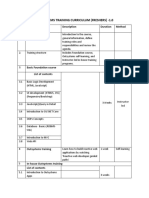

- Outsystems Training Curriculum (Freshers)Document3 pagesOutsystems Training Curriculum (Freshers)Chethan BkNo ratings yet

- Hospital Management QuestionnaireDocument8 pagesHospital Management QuestionnaireDibna Hari80% (5)

- Network Location Awareness (NLA) and How It Relates To Windows Firewall Prof PDFDocument11 pagesNetwork Location Awareness (NLA) and How It Relates To Windows Firewall Prof PDFPeterVUSANo ratings yet