Machine Learning 2

Uploaded by Viswanadh Jvs

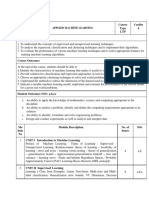

IV Year – II Semester

L  T  P  C
4  0  0  3

MACHINE LEARNING

OBJECTIVES:

• Familiarity with a set of well-known supervised, unsupervised and semi-supervised learning algorithms.

• The ability to implement some basic machine learning algorithms.

• Understanding of how machine learning algorithms are evaluated.

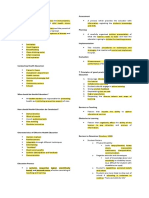

UNIT-I: The ingredients of machine learning, Tasks: the problems that can be solved with machine learning, Models: the output of machine learning, Features: the workhorses of machine learning. Binary classification and related tasks: Classification, Scoring and ranking, Class probability estimation.

UNIT-II: Beyond binary classification: Handling more than two classes, Regression, Unsupervised and descriptive learning. Concept learning: The hypothesis space, Paths through the hypothesis space, Beyond conjunctive concepts.

UNIT-III: Tree models: Decision trees, Ranking and probability estimation trees, Tree learning as variance reduction. Rule models: Learning ordered rule lists, Learning unordered rule sets, Descriptive rule learning, First-order rule learning.

UNIT-IV: Linear models: The least-squares method, The perceptron: a heuristic learning algorithm for linear classifiers, Support vector machines, Obtaining probabilities from linear classifiers, Going beyond linearity with kernel methods. Distance Based Models: Introduction, Neighbours and exemplars, Nearest Neighbours classification, Distance Based Clustering, Hierarchical Clustering.

UNIT-V: Probabilistic models: The normal distribution and its geometric interpretations, Probabilistic models for categorical data, Discriminative learning by optimising conditional likelihood, Probabilistic models with hidden variables. Features: Kinds of feature, Feature transformations, Feature construction and selection. Model ensembles: Bagging and random forests, Boosting.

UNIT-VI: Dimensionality Reduction: Principal Component Analysis (PCA), Implementation and demonstration. Artificial Neural Networks: Introduction, Neural network representation, Appropriate problems for neural network learning, Multilayer networks and the backpropagation algorithm.

OUTCOMES:

• Recognize the characteristics of machine learning that make it useful to real-world problems.

• Characterize machine learning algorithms as supervised, semi-supervised, and unsupervised.

• Have heard of a few machine learning toolboxes.

• Be able to use support vector machines.

• Be able to use regularized regression algorithms.

• Understand the concept behind neural networks for learning non-linear functions.

TEXT BOOKS:

1. Machine Learning: The Art and Science of Algorithms that Make Sense of Data, Peter Flach, Cambridge.

2. Machine Learning, Tom M. Mitchell, MGH.

REFERENCE BOOKS:

1. Understanding Machine Learning: From Theory to Algorithms, Shai Shalev-Shwartz, Shai Ben-David, Cambridge.

2. Machine Learning in Action, Peter Harrington, 2012, Cengage.
