Lecture 3-Features, Neural Networks

Uploaded by Sai Lakshmi

LECTURE 3-FEATURES,NEURAL NETWORKS

Score: The score is the dot product between the weight vector w and the feature vector φ(x). The score drives the prediction: in linear regression we output the score itself as a number, whereas in binary classification we output the sign of the score.
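
As a minimal sketch of the score and the two kinds of prediction, assuming plain Python lists for w and φ(x); the function names and the choice to map a score of exactly 0 to +1 are illustrative assumptions, not part of the lecture:

```python
def dot(w, phi):
    """Dot product of two equal-length lists of numbers."""
    return sum(wi * pi for wi, pi in zip(w, phi))

def predict_regression(w, phi):
    """Linear regression: the prediction is the score itself."""
    return dot(w, phi)

def predict_binary(w, phi):
    """Binary classification: the prediction is the sign of the score.
    Treating a score of exactly 0 as +1 is an assumption made here."""
    return 1 if dot(w, phi) >= 0 else -1

w = [2.0, -1.0]       # example weight vector
phi_x = [1.0, 3.0]    # example feature vector phi(x)
score = dot(w, phi_x)  # 2*1 + (-1)*3 = -1.0
```

With these values the regression prediction is -1.0 and the binary prediction is -1.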

We have already learnt how to select w via optimization; now our interest is in φ.

In real life, feature extraction (specifying φ(x) based on our knowledge of the problem) plays a key role. A feature extractor takes an input and outputs a set of features that are useful for our prediction. We need a principle for deciding how many features to select and how to select them.

If a group of features are all computed in the same way, the group is known as a “feature template”. Describing multiple features in a unified way helps clarity, especially when we are working with complex data, for example an image plus some metadata. A single feature template can generate many features. The most efficient way to represent all of them is as a vector. If only a few features have non-zero values, we can instead use a map (a dictionary in Python), a representation that is common in NLP.
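
The sketch below illustrates one feature template and the dictionary representation. The template itself ("word ends with ___") and the names `extract_suffix_features` and `sparse_dot` are hypothetical choices made for this example; the notes do not prescribe them.

```python
def extract_suffix_features(word, max_len=3):
    """Hypothetical feature template 'word ends with ___'.
    Every suffix of length 1..max_len yields one feature with value 1."""
    features = {}
    for n in range(1, min(max_len, len(word)) + 1):
        features["endsWith=" + word[-n:]] = 1.0
    return features

def sparse_dot(w, phi):
    """Score w . phi(x) when both are dictionaries.
    Features missing from w are treated as having weight 0."""
    return sum(w.get(name, 0.0) * value for name, value in phi.items())

phi = extract_suffix_features("learning")
# phi holds only the three non-zero features:
# {'endsWith=g': 1.0, 'endsWith=ng': 1.0, 'endsWith=ing': 1.0}

w = {"endsWith=ing": 0.5, "endsWith=ed": -0.2}  # made-up weights
score = sparse_dot(w, phi)  # only 'endsWith=ing' matches, so 0.5
```

The dictionary stores three entries even though the template could generate a feature for every possible suffix, which is why this form suits sparse data.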

A “Hypothesis class” is the set of all predictors we can get by varying the weight vector w. If we take the identity feature map φ(x)=x, then by varying w we get a family of functions, each of which is a straight line passing through the origin with a different slope. All these lines together form the hypothesis class.
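
To make this concrete, here is a small sketch that builds a few members of the hypothesis class for φ(x)=x with a scalar w. The helper name `make_predictor` and the sample slopes are assumptions chosen for illustration.

```python
def make_predictor(w):
    """Return the predictor f_w(x) = w * phi(x) with phi(x) = x."""
    def f(x):
        return w * x
    return f

# Three members of the hypothesis class, one per choice of w.
hypothesis_sample = [make_predictor(w) for w in (-2.0, 0.5, 3.0)]

# Every member passes through the origin ...
values_at_zero = [f(0.0) for f in hypothesis_sample]
# ... and its value at x = 1 equals its slope w.
values_at_one = [f(1.0) for f in hypothesis_sample]
```

No choice of w produces a line with a non-zero intercept, so such a function lies outside this hypothesis class unless φ is changed.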

The picture above shows the full pipeline of machine learning. From all possible functions we first select a set of functions (the hypothesis class, fixed by our choice of features), and then use the available data to select one function from that set. Two things can go wrong here: either we do not have enough features, so the set contains no good predictor, or we fail to optimize well within the set. Either way, the resulting predictor will not be up to the mark.

NEURAL NETWORKS:

When we face complex situations, we move from linear classifiers to neural networks. Put simply, a neural network is a bunch of linear classifiers stitched together with some non-linearity.

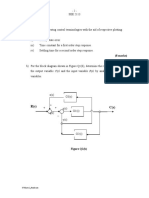

The picture above shows that a neural network tries to break the problem down into a set of subproblems (each one is the output of a linear classifier), then applies another linear classifier to those outputs to get the final score. The sigmoid function σ is our choice of activation here, but any non-linear function can be used (e.g., the logistic function or ReLU).
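
A minimal sketch of this forward computation, assuming one hidden layer, the logistic function as σ, and plain Python lists. The layout (each row of V is the weight vector of one subproblem classifier) and the numbers are assumptions for illustration.

```python
import math

def sigmoid(z):
    """Logistic function, used here as the non-linearity."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(V, w, phi):
    """Two-step prediction: hidden units h_j = sigmoid(V_j . phi),
    then a linear classifier on h gives score = w . h."""
    h = [sigmoid(sum(vj * pj for vj, pj in zip(row, phi))) for row in V]
    score = sum(wi * hi for wi, hi in zip(w, h))
    return h, score

V = [[1.0, -1.0],   # subproblem 1
     [0.0, 0.0]]    # subproblem 2
w = [2.0, 4.0]      # weights of the final linear classifier
phi = [3.0, 3.0]    # feature vector phi(x)

h, score = forward(V, w, phi)
# Both hidden pre-activations are 0, so h = [0.5, 0.5]
# and score = 2*0.5 + 4*0.5 = 3.0
```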

Now the training loss depends on both V and w: TrainLoss(V,w) = (1/|Dtrain|) ∑ Loss(x,y,w,V), where the sum runs over the examples (x,y) in Dtrain. We minimise TrainLoss by following its gradient, as we did for linear classifiers. In neural networks we have 5 basic building blocks: +, -, *, max and σ. By combining these 5 blocks over a number of layers we can design a wide variety of functions.
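
As an illustrative sketch, each building block can be written as a function that returns its value together with its partial derivatives with respect to its inputs. The function names and the convention of returning tuples are assumptions of this example; for max, the derivative at a tie (a == b) is set to 0 for both inputs here.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Each block returns (value, d value / d a, d value / d b).
def add(a, b):
    return a + b, 1.0, 1.0

def sub(a, b):
    return a - b, 1.0, -1.0

def mul(a, b):
    return a * b, b, a

def maximum(a, b):
    # The gradient flows only to the larger input.
    return max(a, b), float(a > b), float(b > a)

def sig(a):
    # Single-input block: returns (value, d value / d a).
    s = sigmoid(a)
    return s, s * (1.0 - s)

# Central-difference check of the sigmoid derivative at a = 0.3.
eps = 1e-6
numeric = (sigmoid(0.3 + eps) - sigmoid(0.3 - eps)) / (2 * eps)
analytic = sig(0.3)[1]
```

Because each block has a simple derivative, the gradient of a function built from them follows from the chain rule.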

Backpropagation is an algorithm that allows us to compute gradients for any computation graph. For each node it computes two values: a forward value (the node's output) and a backward value (the gradient of the final output with respect to that node). Nowadays PyTorch and TensorFlow calculate gradients automatically, sparing us a task that becomes tedious by hand when we have many layers.
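
The following sketch works through the forward and backward passes by hand on a very small graph. It assumes scalar v and w, a single example, and the squared loss (score − y)²; the notes do not fix a particular loss, so that choice and all names are assumptions. The result is compared against a finite-difference estimate.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss_and_grads(v, w, x, y):
    """Loss = (w * sigmoid(v * x) - y)^2 with gradients w.r.t. v and w."""
    # Forward pass: compute and keep every intermediate value.
    z = v * x
    h = sigmoid(z)
    score = w * h
    residual = score - y
    loss = residual ** 2
    # Backward pass: gradient of the loss w.r.t. each node, output to input.
    g_residual = 2.0 * residual
    g_score = g_residual            # residual = score - y
    g_w = g_score * h               # score = w * h
    g_h = g_score * w
    g_z = g_h * h * (1.0 - h)       # h = sigmoid(z)
    g_v = g_z * x                   # z = v * x
    return loss, g_v, g_w

def numeric_grad(f, t, eps=1e-6):
    """Central-difference estimate of df/dt, used only as a check."""
    return (f(t + eps) - f(t - eps)) / (2 * eps)

v, w, x, y = 0.5, -1.5, 2.0, 1.0
loss, g_v, g_w = loss_and_grads(v, w, x, y)
check_v = numeric_grad(lambda t: loss_and_grads(t, w, x, y)[0], v)
check_w = numeric_grad(lambda t: loss_and_grads(v, t, x, y)[0], w)
```

The backward pass reuses the values stored in the forward pass, which is what keeps the gradient computation about as cheap as evaluating the function itself.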
