Professional Documents

Culture Documents

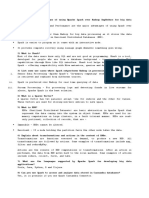

PySpark SQL Basics Cheat Sheet: Python For Data Science

Uploaded by Gary Joel Pimentel Rosario

> PySpark & Spark SQL

Spark SQL is Apache Spark's module for working with structured data.

Learn PySpark SQL online at www.DataCamp.com

> Initializing SparkSession

A SparkSession can be used to create DataFrames, register DataFrames as tables, execute SQL over tables, cache tables, and read Parquet files.

>>> from pyspark.sql import SparkSession
>>> spark = SparkSession \
      .builder \
      .appName("Python Spark SQL basic example") \
      .config("spark.some.config.option", "some-value") \
      .getOrCreate()

> Creating DataFrames

From RDDs

>>> from pyspark.sql import Row
>>> from pyspark.sql.types import *

Infer Schema

>>> sc = spark.sparkContext
>>> lines = sc.textFile("people.txt")
>>> parts = lines.map(lambda l: l.split(","))
>>> people = parts.map(lambda p: Row(name=p[0],age=int(p[1])))
>>> peopledf = spark.createDataFrame(people)

Specify Schema

>>> people = parts.map(lambda p: Row(name=p[0],
                                     age=p[1].strip())) #age stays a string so it matches the StringType schema below
>>> schemaString = "name age"
>>> fields = [StructField(field_name, StringType(), True) for
              field_name in schemaString.split()]
>>> schema = StructType(fields)
>>> spark.createDataFrame(people, schema).show()

From Spark Data Sources

JSON

>>> df = spark.read.json("customer.json")
>>> df.show()
>>> df2 = spark.read.load("people.json", format="json")

Parquet files

>>> df3 = spark.read.load("users.parquet")

TXT files

>>> df4 = spark.read.text("people.txt")

> Inspect Data

>>> df.dtypes #Return df column names and data types
>>> df.show() #Display the content of df
>>> df.head() #Return the first row (df.head(n) returns the first n rows)
>>> df.first() #Return first row
>>> df.take(2) #Return the first n rows
>>> df.schema #Return the schema of df
>>> df.describe().show() #Compute summary statistics
>>> df.columns #Return the columns of df
>>> df.count() #Count the number of rows in df
>>> df.distinct().count() #Count the number of distinct rows in df
>>> df.printSchema() #Print the schema of df
>>> df.explain() #Print the (logical and physical) plans

> Duplicate Values

>>> df = df.dropDuplicates()

> Queries

>>> from pyspark.sql import functions as F

Select

>>> df.select("firstName").show() #Show all entries in firstName column
>>> df.select("firstName","lastName") \
      .show()
>>> df.select("firstName", #Show all entries in firstName, age and type
              "age",
              F.explode("phoneNumber").alias("contactInfo")) \
      .select("contactInfo.type",
              "firstName",
              "age") \
      .show()
>>> df.select(df["firstName"],df["age"]+ 1) \
      .show() #Show all entries in firstName and age, add 1 to the entries of age
>>> df.select(df['age'] > 24).show() #Show TRUE or FALSE per row depending on age >24

When

>>> df.select("firstName", #Show firstName and 0 or 1 depending on age >30
              F.when(df.age > 30, 1) \
               .otherwise(0)) \
      .show()
>>> df[df.firstName.isin("Jane","Boris")] \
      .collect() #Show firstName if in the given options

Like

>>> df.select("firstName", #Show firstName, and lastName is TRUE if lastName is like Smith
              df.lastName.like("Smith")) \
      .show()

Startswith - Endswith

>>> df.select("firstName", #Show firstName, and TRUE if lastName starts with Sm
              df.lastName \
                .startswith("Sm")) \
      .show()
>>> df.select(df.lastName.endswith("th")) \
      .show() #Show TRUE or FALSE per row depending on whether lastName ends in th

Substring

>>> df.select(df.firstName.substr(1, 3) \
                .alias("name")) \
      .collect() #Return substrings of firstName

Between

>>> df.select(df.age.between(22, 24)) \
      .show() #Show age: values are TRUE if between 22 and 24

> Add, Update & Remove Columns

Adding Columns

>>> df = df.withColumn('city',df.address.city) \
           .withColumn('postalCode',df.address.postalCode) \
           .withColumn('state',df.address.state) \
           .withColumn('streetAddress',df.address.streetAddress) \
           .withColumn('telePhoneNumber', F.explode(df.phoneNumber.number)) \
           .withColumn('telePhoneType', F.explode(df.phoneNumber.type))

Updating Columns

>>> df = df.withColumnRenamed('telePhoneNumber', 'phoneNumber')

Removing Columns

>>> df = df.drop("address", "phoneNumber")
>>> df = df.drop(df.address).drop(df.phoneNumber)

> Missing & Replacing Values

>>> df.na.fill(50).show() #Replace null values
>>> df.na.drop().show() #Return new df omitting rows with null values
>>> df.na \
      .replace(10, 20) \
      .show() #Return new df replacing one value with another

> Filter

#Filter entries of age, only keep those records of which the values are >24
>>> df.filter(df["age"]>24).show()

> GroupBy

>>> df.groupBy("age") \
      .count() \
      .show() #Group by age, count the members in the groups

> Sort

>>> peopledf.sort(peopledf.age.desc()).collect()
>>> df.sort("age", ascending=False).collect()
>>> df.orderBy(["age","city"],ascending=[0,1]) \
      .collect()

> Repartitioning

>>> df.repartition(10) \
      .rdd \
      .getNumPartitions() #df with 10 partitions
>>> df.coalesce(1).rdd.getNumPartitions() #df with 1 partition

> Running Queries Programmatically

Registering DataFrames as Views

>>> peopledf.createGlobalTempView("people")
>>> df.createTempView("customer")
>>> df.createOrReplaceTempView("customer")

Query Views

>>> df5 = spark.sql("SELECT * FROM customer")
>>> df5.show()
>>> peopledf2 = spark.sql("SELECT * FROM global_temp.people")
>>> peopledf2.show()

> Output

Data Structures

>>> rdd1 = df.rdd #Convert df into an RDD
>>> df.toJSON().first() #Convert df into a RDD of string
>>> df.toPandas() #Return the contents of df as Pandas DataFrame

Write & Save to Files

>>> df.select("firstName", "city") \
      .write \
      .save("nameAndCity.parquet")
>>> df.select("firstName", "age") \
      .write \
      .save("namesAndAges.json",format="json")

> Stopping SparkSession

>>> spark.stop()
