Professional Documents

Culture Documents

C12 IR M2021 TermWeighting

Uploaded by

Naveen Kumar Kalagarla0 ratings0% found this document useful (0 votes)

9 views19 pagesThe document discusses Monsoon 2021 and covers the following topics:

1. It recaps various information retrieval techniques like phrase queries, proximity search, spell correction, index construction, and vector space models.

2. It then discusses different term weighting approaches, specifically covering five different term weighting approaches.

3. It provides an illustration of term weighting and previews that more topics will be covered in upcoming sessions.

Original Description:

Original Title

C12-IR-M2021-TermWeighting

Copyright

© © All Rights Reserved

Available Formats

PDF, TXT or read online from Scribd

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentThe document discusses Monsoon 2021 and covers the following topics:

1. It recaps various information retrieval techniques like phrase queries, proximity search, spell correction, index construction, and vector space models.

2. It then discusses different term weighting approaches, specifically covering five different term weighting approaches.

3. It provides an illustration of term weighting and previews that more topics will be covered in upcoming sessions.

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

0 ratings0% found this document useful (0 votes)

9 views19 pagesC12 IR M2021 TermWeighting

Uploaded by

Naveen Kumar KalagarlaThe document discusses Monsoon 2021 and covers the following topics:

1. It recaps various information retrieval techniques like phrase queries, proximity search, spell correction, index construction, and vector space models.

2. It then discusses different term weighting approaches, specifically covering five different term weighting approaches.

3. It provides an illustration of term weighting and previews that more topics will be covered in upcoming sessions.

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

You are on page 1of 19

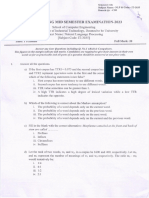

Monsoon 2021

Term Weighting

- Vector Space, 5 Different Term Weighting approaches

Dr. Rajendra Prasath

Indian Institute of Information Technology Sri City, Chittoor

3rd September 2021 (rajendra.2power3.com)

Overview

Topics to be covered:

➤ Recap:

  ➤ Phrase Queries / Proximity Search

  ➤ Spell Correction / Noisy Channel Modelling

  ➤ Index Construction

    ➤ BSBI / SPIMI

    ➤ Distributed Indexing

  ➤ Vector Space Models

➤ Term Weighting Approaches

  ➤ 5 Different Approaches

➤ An Illustration

➤ More topics to come up … Stay tuned …!!

IR - M2021 @ IIIT Sri City 05/10/2021 2

Recap: Information Retrieval

• Information Retrieval (IR) is finding material (usually documents) of an unstructured nature (usually text) that satisfies an information need from within large collections (usually stored on computers).

• These days we frequently think first of web search, but there are many other cases:

  • E-mail search

  • Searching your laptop

  • Corporate knowledge bases

  • Legal information retrieval

  • and so on . . .


Bag of words model

• We do not consider the order of words in a document.

• “John is quicker than Mary” and “Mary is quicker than John” are represented in the same way.

• This is called a bag of words model.

• In a sense, this is a step back: the positional index was able to distinguish these two documents.

• We will look at “recovering” positional information later in this course.

• For now: bag of words model
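As a minimal sketch of the idea, the snippet below builds a bag of words for each of the two example sentences and checks that they coincide. The helper name `bag_of_words` and the whitespace tokenization with lowercasing are assumptions made for illustration; they are not prescribed by the slides.

```python
from collections import Counter


def bag_of_words(text):
    """Return term counts for a text, discarding word order.

    Tokenization here is plain lowercasing plus whitespace splitting,
    which is an illustrative assumption, not a recommended tokenizer.
    """
    return Counter(text.lower().split())


d1 = bag_of_words("John is quicker than Mary")
d2 = bag_of_words("Mary is quicker than John")

# Both sentences contain the same words with the same counts,
# so their bag-of-words representations are identical.
same_bag = d1 == d2
print(same_bag)  # True
```

A positional index would keep the word positions and could tell the two sentences apart; the counter above cannot.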


Term frequency (tf)

• The term frequency $\mathrm{tf}_{t,d}$ of term t in document d is defined as the number of times that t occurs in d.

• Use tf to compute query-document match scores.

• Raw term frequency is not what we want:

  • A document with tf = 10 occurrences of the term is more relevant than a document with tf = 1 occurrence of the term.

  • But not 10 times more relevant.

• Relevance does not increase proportionally with term frequency.


Log frequency weighting

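• The log frequency weight of term t in d is defined as follows:

$$w_{t,d} = \begin{cases} 1 + \log_{10} \mathrm{tf}_{t,d} & \text{if } \mathrm{tf}_{t,d} > 0 \\ 0 & \text{otherwise} \end{cases}$$

• $\mathrm{tf}_{t,d} \to w_{t,d}$: 0 → 0, 1 → 1, 2 → 1.3, 10 → 2, 1000 → 4, etc.

• Score for a document-query pair: sum over terms t in both q and d:

$$\text{tf-matching-score}(q, d) = \sum_{t \in q \cap d} \left(1 + \log_{10} \mathrm{tf}_{t,d}\right)$$

• The score is 0 if none of the query terms is present in the document.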
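The weight and the matching score can be sketched in a few lines. The base-10 logarithm is inferred from the mapping on this slide (10 → 2, 1000 → 4); the function names `log_tf_weight` and `tf_matching_score`, and the representation of queries and documents as lists of tokens, are illustrative assumptions.

```python
import math
from collections import Counter


def log_tf_weight(tf):
    """Log frequency weight: 1 + log10(tf) for tf > 0, else 0."""
    return 1 + math.log10(tf) if tf > 0 else 0.0


def tf_matching_score(query_terms, doc_terms):
    """Sum the log frequency weights over terms present in both q and d."""
    counts = Counter(doc_terms)
    return sum(
        log_tf_weight(counts[t]) for t in set(query_terms) if counts[t] > 0
    )


# Reproduce the mapping given on the slide.
for tf in (0, 1, 2, 10, 1000):
    print(tf, round(log_tf_weight(tf), 1))
```

A document that shares no term with the query gets a score of 0, because the sum runs over an empty set.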


Term Weighting

• The importance of a term increases with the number of occurrences of the term in a text.

• So we can estimate the term weight using some monotonically increasing function of the number of occurrences of a term in a text.

• Term Frequency:

  • The number of occurrences of a term in a text is called its term frequency.


Term Frequency Factor

• What is the Term Frequency Factor?

  • The function of the term frequency used to compute a term’s importance

• Some commonly used factors are:

  • Raw TF factor

  • Logarithmic TF factor

  • Binary TF factor

  • Augmented TF factor

  • Okapi’s TF factor


The Raw TF factor

• This is the simplest factor.

• It simply counts the number of occurrences of a term in a text.

• Count how many times the term occurs in each document.

• The higher the count, the higher the document ranks.


The Logarithmic TF factor

• This factor is computed as

$$1 + \ln(\mathrm{tf})$$

where tf is the term frequency of a term.

• Proposed by Buckley (1993-94)

• Motivation:

  • If a document has one query term with a very high term frequency, then the document is (often) not better than another document that has two query terms with somewhat lower term frequencies.

  • Many occurrences of one matching term should not out-contribute fewer occurrences of several matching terms.


Example – log TF factor

• Consider the query: “recycling of tires”

• Two documents:

  D1: with 10 occurrences of the word “recycling”

  D2: with “recycling” and “tires” 3 times each

• Everything else being equal, if we use raw tf, D1 (10) gets a higher similarity score than D2 (3 + 3 = 6).

• But D2 addresses the needs of the query better.

• Log TF reflects the usefulness of D2 in the similarities:

  D1: 1 + ln(10) ≈ 3.3

  D2: 2(1 + ln(3)) ≈ 4.2
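The comparison can be checked numerically. This is a small sketch that represents each document only by the term frequencies of its matching query terms; the helper names `raw_score` and `log_score` are hypothetical, and the natural logarithm follows the 1 + ln(tf) definition of the logarithmic TF factor.

```python
import math


def raw_score(tfs):
    """Raw TF: add up the occurrence counts of the matching terms."""
    return sum(tfs)


def log_score(tfs):
    """Log TF: add up 1 + ln(tf) over the matching terms."""
    return sum(1 + math.log(tf) for tf in tfs if tf > 0)


d1 = [10]    # "recycling" occurs 10 times
d2 = [3, 3]  # "recycling" and "tires" occur 3 times each

print(raw_score(d1), raw_score(d2))                      # 10 6
print(round(log_score(d1), 1), round(log_score(d2), 1))  # 3.3 4.2
```

Under raw TF, D1 outranks D2; under log TF the order flips, because each additional matching term contributes a full unit before any logarithmic growth. The unrounded D2 score is about 4.197, which rounds to 4.2.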


The Binary TF factor

• This TF factor completely disregards the number of occurrences of a term.

• It is either one or zero, depending on the presence (one) or the absence (zero) of the term in a text.

• This factor gives a nice baseline for measuring the usefulness of the other term frequency factors in document ranking.
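A sketch of the binary factor, applied to the “recycling of tires” example used earlier in the deck. The function names `binary_tf` and `binary_score` are illustrative assumptions.

```python
def binary_tf(tf):
    """Binary TF: 1 if the term is present, 0 if it is absent."""
    return 1 if tf > 0 else 0


def binary_score(tfs):
    """Count how many distinct query terms occur in the document."""
    return sum(binary_tf(tf) for tf in tfs)


d1_score = binary_score([10, 0])  # "recycling" x10, "tires" absent
d2_score = binary_score([3, 3])   # "recycling" x3, "tires" x3
print(d1_score, d2_score)  # 1 2
```

With this factor, the score depends only on how many query terms match, which is what makes it a useful baseline against frequency-sensitive factors.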


The Augmented TF factor

• This TF factor reduces the range of a term's contribution that comes from its frequency.

• How: compress the range of possible TF factor values (say, between 0.5 and 1.0).

• The augmented TF factor is used with the belief that the mere presence of a term in a text should have some default weight (say 0.5).

• Additional occurrences of the term can then increase its weight up to some maximum value (usually 1.0).


Augmented TF factor - Scoring

• This TF factor is computed as follows:

$$0.5 + 0.5 \times \frac{\mathrm{tf}}{\max \mathrm{tf}}$$

where max tf is the highest term frequency of any term in the same text.

• The augmented TF factor emphasizes that more matches are more important than fewer matches (like the log TF factor).

• A single match contributes at least 0.5, and high TFs can contribute at most another 0.5.

• This was motivated by document length considerations and does not work as well as the log TF factor in practice.
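A minimal sketch of the augmented factor, assuming the form commonly given in the literature, 0.5 + 0.5 × tf / max tf, where max tf is the largest term frequency in the same document. The function name `augmented_tf` and the `base` parameter are hypothetical names introduced here.

```python
def augmented_tf(tf, max_tf, base=0.5):
    """Augmented TF: base weight for presence, rest scaled by tf / max_tf.

    Assumes the commonly cited form 0.5 + 0.5 * tf / max_tf; `base`
    is an illustrative parameter, not something the slides define.
    """
    if tf <= 0:
        return 0.0
    return base + (1 - base) * tf / max_tf


# A term occurring once in a document whose most frequent term occurs 10 times
print(augmented_tf(1, 10))
# The most frequent term itself reaches the maximum value
print(augmented_tf(10, 10))
```

Every present term lands in the interval (0.5, 1.0], so two matching terms always outscore a single one, however frequent that single term is.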

Okapi's TF factor

• Robertson et al. (1994) developed the Okapi information retrieval system and proposed another TF factor.

• This TF factor is based on approximations to the 2-Poisson model.

• This factor is quite close to the log TF factor in its behavior.

• In practice, the log TF factor is effective for good document ranking.
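The formula for this factor is not reproduced in the text above. As a hedged illustration only, the sketch below uses tf / (2 + tf), a simplified form often attributed to Okapi in the term weighting literature; treat both the formula and the function name `okapi_tf` as assumptions rather than as the definition given in the lecture.

```python
import math


def okapi_tf(tf, k=2.0):
    """Saturating TF factor tf / (k + tf).

    The form and the constant k = 2 are assumptions for illustration.
    """
    return tf / (k + tf) if tf > 0 else 0.0


def log_tf(tf):
    """Logarithmic TF factor 1 + ln(tf), for comparison."""
    return 1 + math.log(tf) if tf > 0 else 0.0


# Both factors grow quickly for small tf and slowly for large tf.
for tf in (1, 2, 5, 10, 100):
    print(tf, round(okapi_tf(tf), 3), round(log_tf(tf), 3))
```

Both functions are monotonically increasing with diminishing returns, which is the sense in which their behavior is similar. Unlike the log factor, this form is bounded above by 1.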


Exercise – Try Yourself

• Consider a collection of n documents.

  • Let n be sufficiently large (at least 100 docs).

  • You can take our dataset:

    • Sports News Dataset (ths-181-dataset.zip)

• Find two lists:

  • The most frequent words and

  • The least frequent words

• Form k (= 10) queries, each with exactly 3 words taken from the above lists (at least one from each).

• Compute the similarity between each query and the documents.
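One possible starting point for the exercise is sketched below on a three-document toy collection, since the course dataset is not reproduced here. The documents, the whitespace tokenization, the single hand-built query, and the use of the log TF matching score as the similarity are all assumptions for illustration; the exercise itself leaves these choices open.

```python
import math
from collections import Counter

# Toy stand-in for the real collection (hypothetical documents).
docs = {
    "d1": "india won the cricket match and the series",
    "d2": "the football match ended in a draw",
    "d3": "the cricket team won the toss",
}
tokenized = {name: text.split() for name, text in docs.items()}

# Collection-wide word counts, ordered from most to least frequent.
collection_counts = Counter(w for words in tokenized.values() for w in words)
ranked_words = [w for w, _ in collection_counts.most_common()]
most_frequent = ranked_words[:2]
least_frequent = ranked_words[-2:]


def log_tf_score(query, words):
    """Sum 1 + ln(tf) over the query terms that occur in the document."""
    counts = Counter(words)
    return sum(1 + math.log(counts[t]) for t in set(query) if counts[t] > 0)


# One 3-word query with at least one word from each list.
query = ["the", "won", "toss"]
scores = {name: log_tf_score(query, words) for name, words in tokenized.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)  # ['d3', 'd1', 'd2']
```

For the full exercise, the same loop would run over k = 10 queries, and the scoring function could be swapped for any of the five TF factors to compare the resulting rankings.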


Summary

In this class, we focused on:

(a) Recap: Positional Indexes

i. Wild card Queries

ii. Spelling Correction

iii. Noisy Channel modelling for Spell Correction

(b) Various Indexing Approaches

i. Block Sort based Indexing Approach

ii. Single Pass In Memory Indexing Approach

iii. Distributed Indexing using Map Reduce

iv. Examples


Acknowledgements

Thanks to ALL RESEARCHERS:

1. Introduction to Information Retrieval, Manning, Raghavan and Schütze, Cambridge University Press, 2008.

2. Search Engines: Information Retrieval in Practice, W. Bruce Croft, D. Metzler, T. Strohman, Pearson, 2009.

3. Information Retrieval: Implementing and Evaluating Search Engines, Stefan Büttcher, Charles L. A. Clarke and Gordon V. Cormack, MIT Press, 2010.

4. Modern Information Retrieval, Baeza-Yates and Ribeiro-Neto, Addison Wesley, 1999.

5. Many authors who contributed to SIGIR / WWW / KDD / ECIR / CIKM / WSDM and other top-tier conferences

6. Prof. Mandar Mitra, Indian Statistical Institute, Kolkata (https://www.isical.ac.in/~mandar/)


Questions

It’s Your Time

